6.829 Computer Networks, Lecture 12: Data Center Network Architectures

- Slides: 31

6.829: Computer Networks
Lecture 12: Data Center Network Architectures
Mohammad Alizadeh, Fall 2016

Slides adapted from presentations by Albert Greenberg and Changhoon Kim (Microsoft)

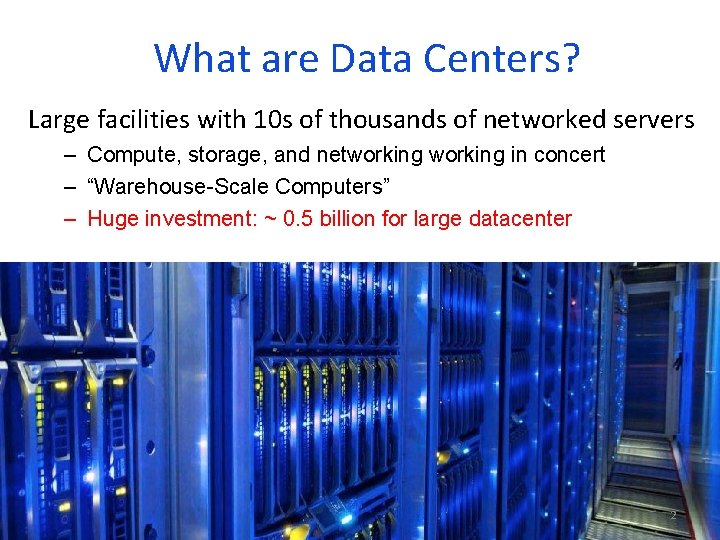

What are Data Centers?

Large facilities with 10s of thousands of networked servers

- Compute, storage, and networking in concert
- “Warehouse-Scale Computers”
- Huge investment: ~$0.5 billion for a large datacenter

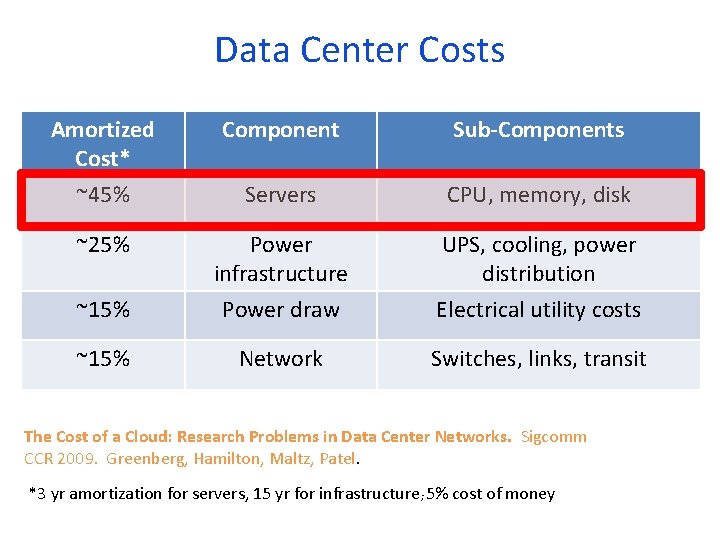

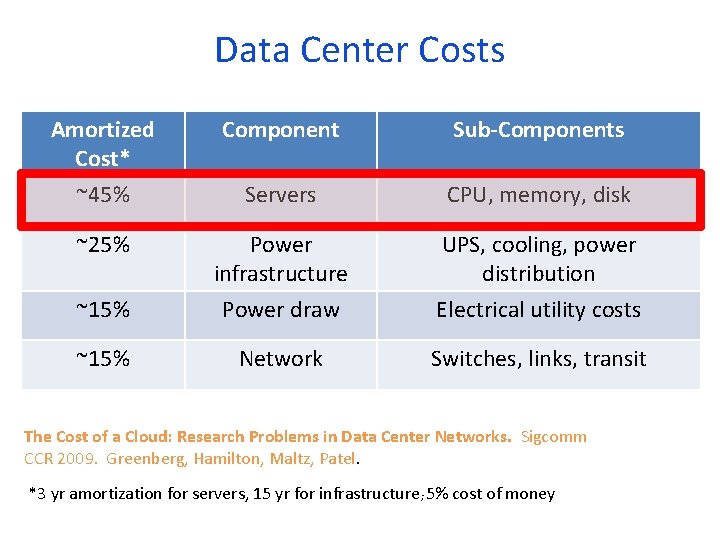

Data Center Costs

| Amortized Cost* | Component | Sub-Components |
|---|---|---|
| ~45% | Servers | CPU, memory, disk |
| ~25% | Power infrastructure | UPS, cooling, power distribution |
| ~15% | Power draw | Electrical utility costs |
| ~15% | Network | Switches, links, transit |

*3-year amortization for servers, 15-year for infrastructure; 5% cost of money

Source: Greenberg, Hamilton, Maltz, Patel. The Cost of a Cloud: Research Problems in Data Center Networks. SIGCOMM CCR 2009.
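To make the amortization footnote concrete, here is a minimal sketch of how an up-front purchase becomes a monthly cost. It assumes the standard fixed-payment annuity formula with monthly compounding, which the slide does not spell out, and the purchase prices in the usage lines are hypothetical.

```python
def monthly_cost(price: float, annual_rate: float, years: int) -> float:
    """Fixed monthly payment that repays `price` over `years` at `annual_rate`.

    Assumption: standard annuity formula with monthly compounding.
    """
    months = years * 12
    r = annual_rate / 12
    if r == 0:
        return price / months  # no cost of money: plain straight-line split
    return price * r / (1 - (1 + r) ** -months)


# Hypothetical prices, chosen only for illustration.
server = monthly_cost(3_000, 0.05, 3)                  # 3-year amortization
infrastructure = monthly_cost(200_000_000, 0.05, 15)   # 15-year amortization
print(f"server: ${server:,.2f}/month")
print(f"infrastructure: ${infrastructure:,.0f}/month")
```

The longer amortization period is why infrastructure, despite a large up-front price, can end up a smaller share of monthly cost than servers.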

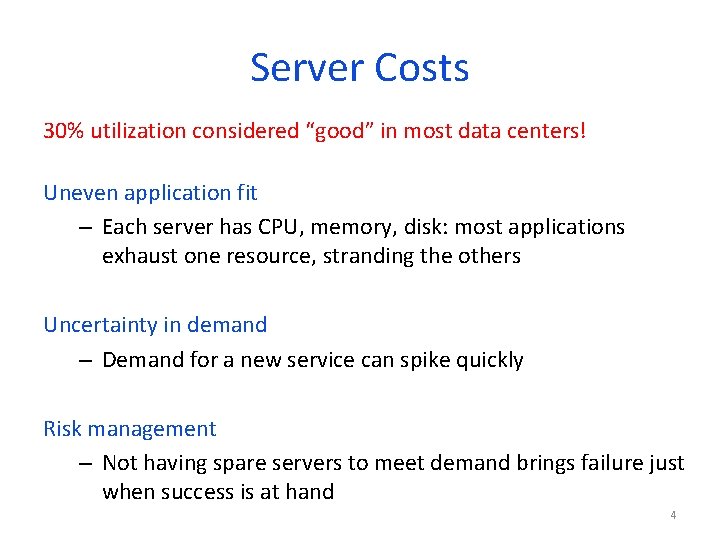

Server Costs

30% utilization considered “good” in most data centers!

- Uneven application fit: each server has CPU, memory, disk; most applications exhaust one resource, stranding the others
- Uncertainty in demand: demand for a new service can spike quickly
- Risk management: not having spare servers to meet demand brings failure just when success is at hand

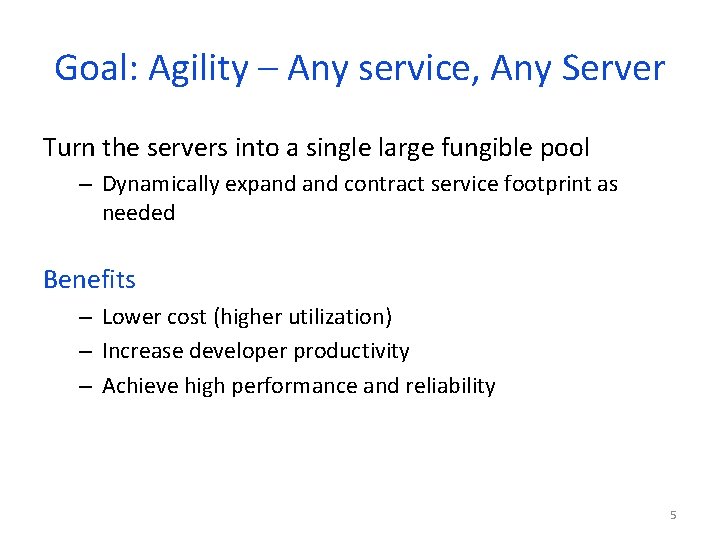

Goal: Agility – Any service, Any Server

Turn the servers into a single large fungible pool

- Dynamically expand and contract each service's footprint as needed

Benefits

- Lower cost (higher utilization)
- Increase developer productivity
- Achieve high performance and reliability

Achieving Agility

- Workload management
  - Means for rapidly installing a service’s code on a server
  - Virtual machines, disk images, containers
- Storage management
  - Means for a server to access persistent data
  - Distributed filesystems (e.g., HDFS, blob stores)
- Network
  - Means for communicating with other servers, regardless of where they are in the data center

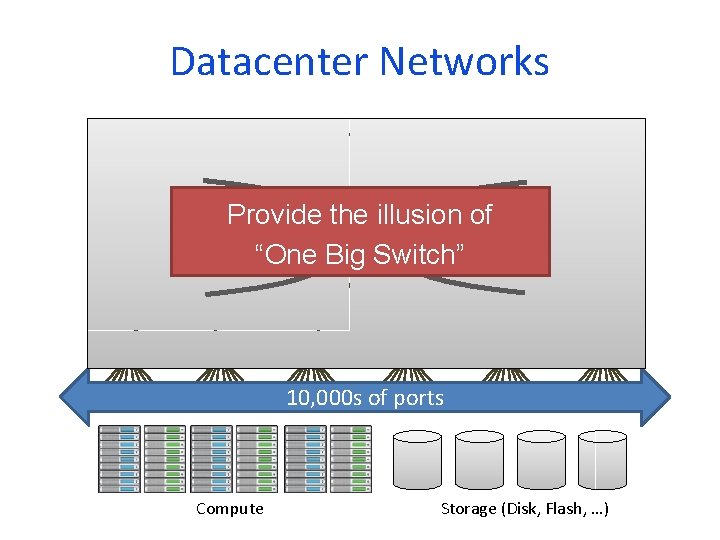

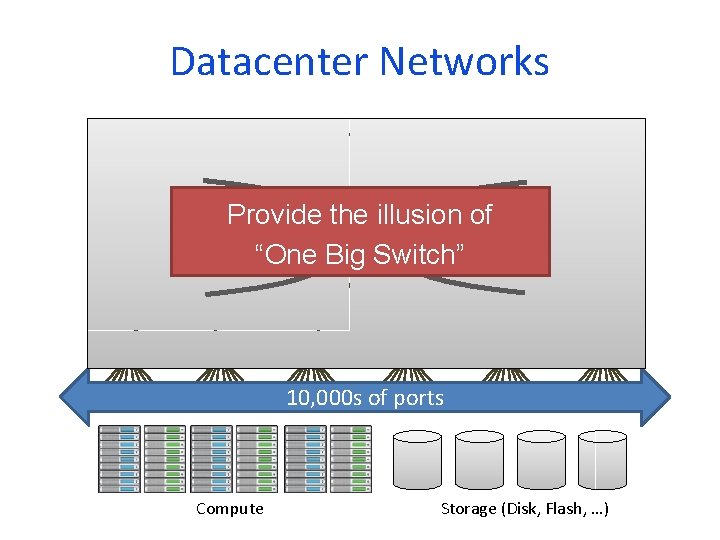

Datacenter Networks

Provide the illusion of “One Big Switch” with 10,000s of ports, connecting compute and storage (disk, flash, …)

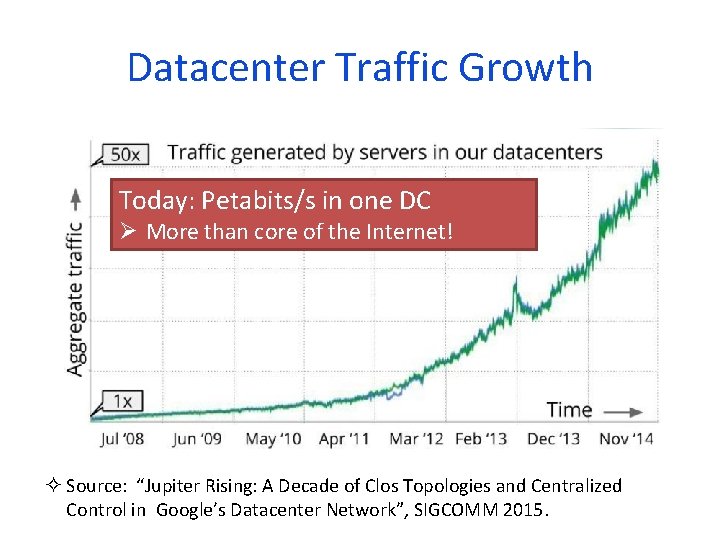

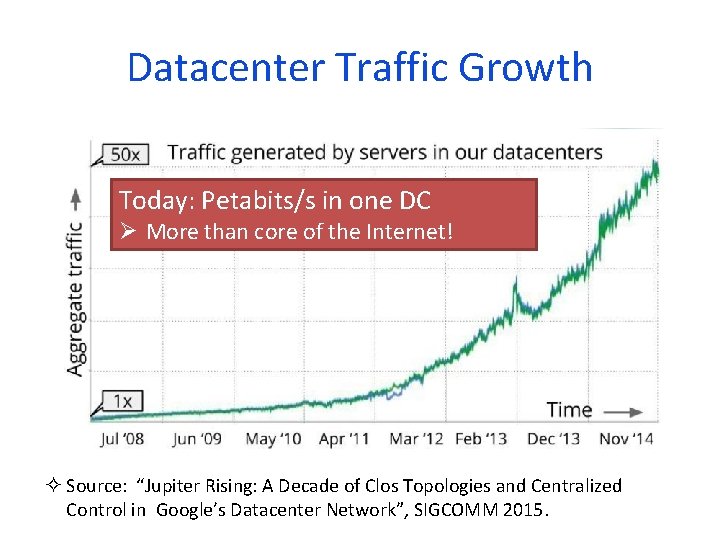

Datacenter Traffic Growth

Today: Petabits/s in one DC

- More than the core of the Internet!

Source: “Jupiter Rising: A Decade of Clos Topologies and Centralized Control in Google’s Datacenter Network”, SIGCOMM 2015.

Conventional DC Network Problems

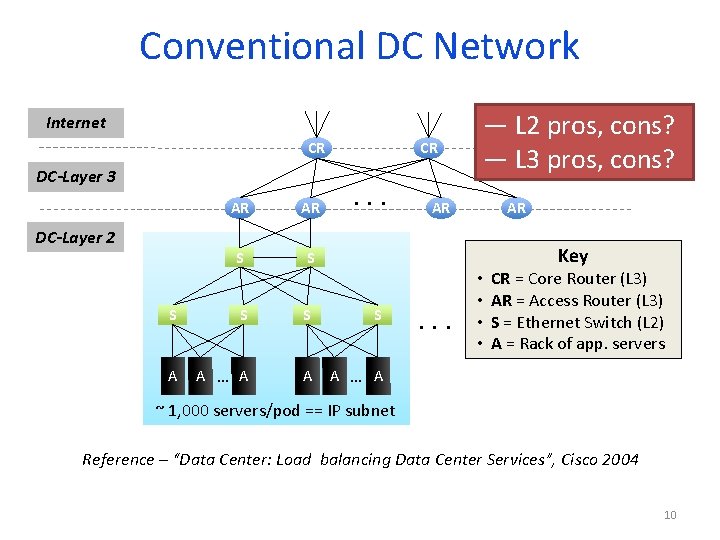

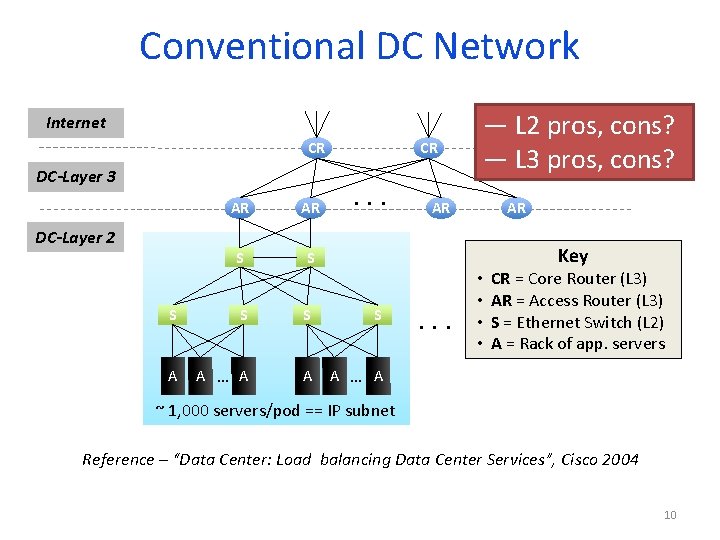

Conventional DC Network

[Figure: Internet at the top, then core routers (CR) and access routers (AR) forming DC-Layer 3, then Ethernet switches (S) and racks (A) forming DC-Layer 2.]

Key:

- CR = Core Router (L3)
- AR = Access Router (L3)
- S = Ethernet Switch (L2)
- A = Rack of app. servers

~1,000 servers/pod == IP subnet

- L2 pros, cons?
- L3 pros, cons?

Reference: “Data Center: Load balancing Data Center Services”, Cisco 2004

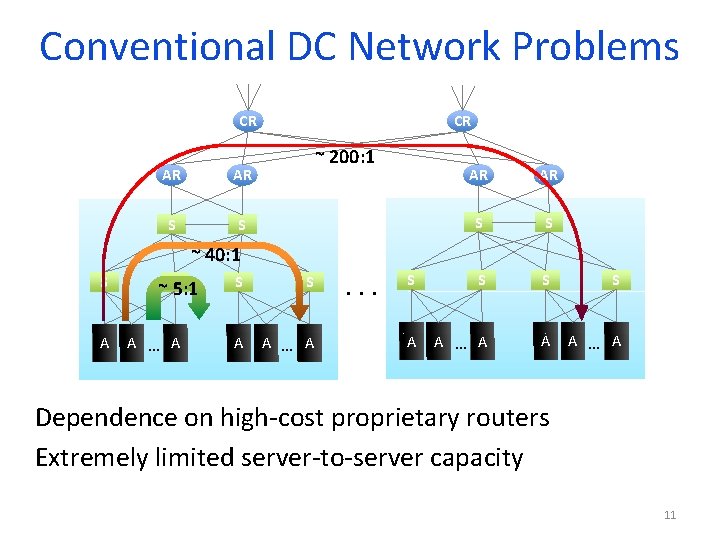

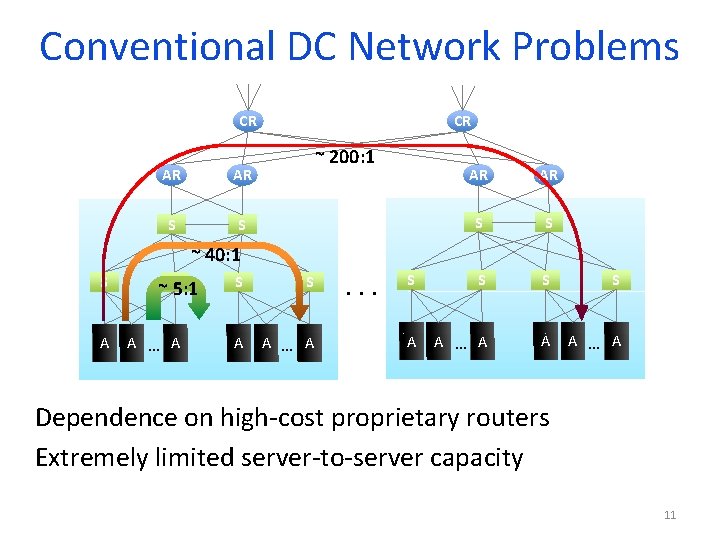

Conventional DC Network Problems

[Figure: the conventional tree annotated with oversubscription ratios that grow toward the core: ~5:1, ~40:1, ~200:1.]

- Dependence on high-cost proprietary routers
- Extremely limited server-to-server capacity
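The oversubscription ratios translate directly into worst-case per-server bandwidth. A minimal sketch, assuming 1 Gbps server NICs (an assumption; the slide gives only the ratios) and that every server below a layer sends across it at the same time:

```python
def worst_case_mbps(nic_gbps: float, oversubscription: float) -> float:
    """Per-server bandwidth in Mbps when every server sends across a layer
    that is oversubscribed by `oversubscription`:1."""
    return nic_gbps * 1000 / oversubscription


NIC_GBPS = 1.0  # assumed NIC speed, not taken from the slide
for ratio in (5, 40, 200):
    print(f"{ratio}:1 -> {worst_case_mbps(NIC_GBPS, ratio):.0f} Mbps per server")
```

Under these assumptions a server gets 200 Mbps across a 5:1 layer, 25 Mbps across 40:1, and only 5 Mbps across 200:1, which is why placement relative to the tree matters so much in this design.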

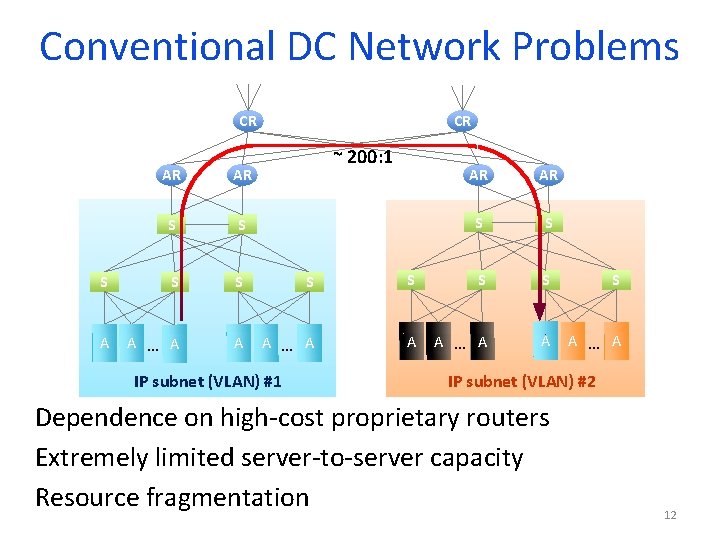

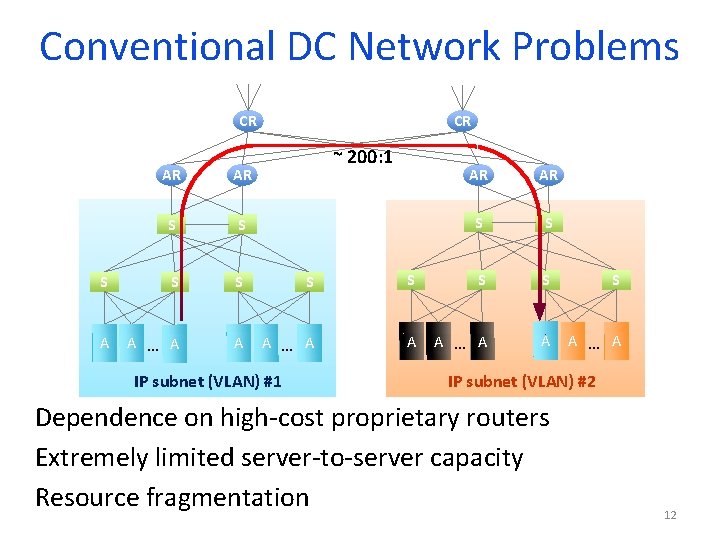

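Conventional DC Network Problems

[Figure: the conventional tree (~200:1 oversubscription at the core) with racks grouped into IP subnets (VLANs); the label shown is IP subnet (VLAN) #2.]

- Dependence on high-cost proprietary routers
- Extremely limited server-to-server capacity
- Resource fragmentation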

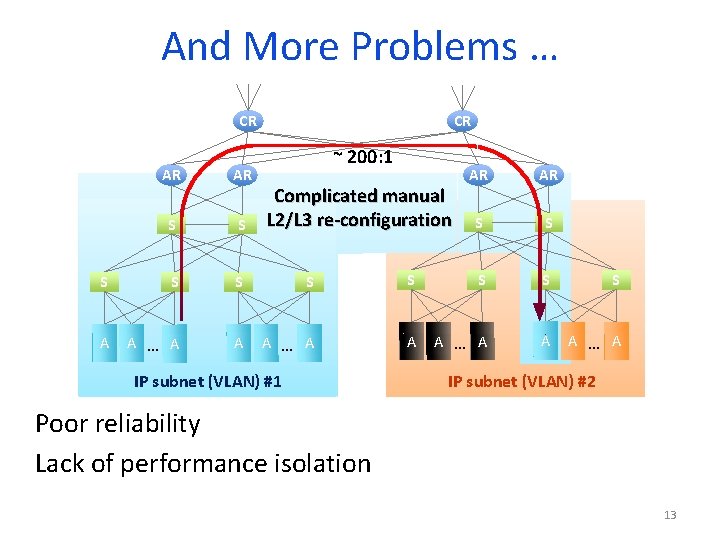

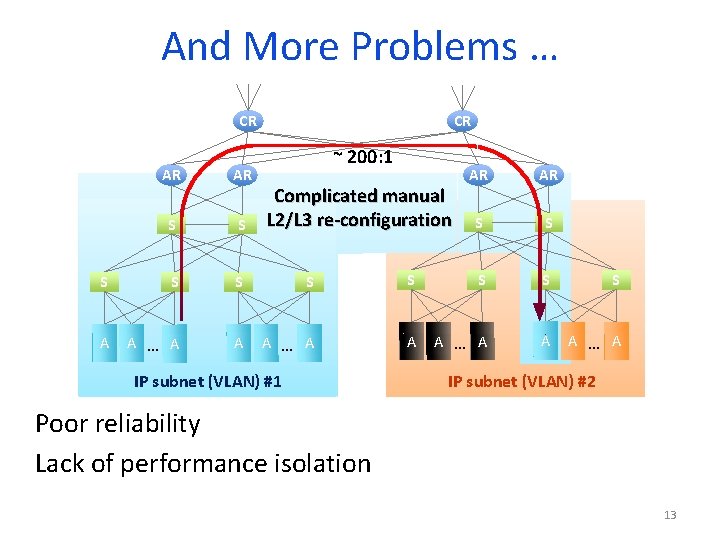

And More Problems …

[Figure: the conventional tree (~200:1 oversubscription at the core) with racks split between IP subnet (VLAN) #1 and IP subnet (VLAN) #2.]

- Complicated manual L2/L3 re-configuration
- Poor reliability
- Lack of performance isolation

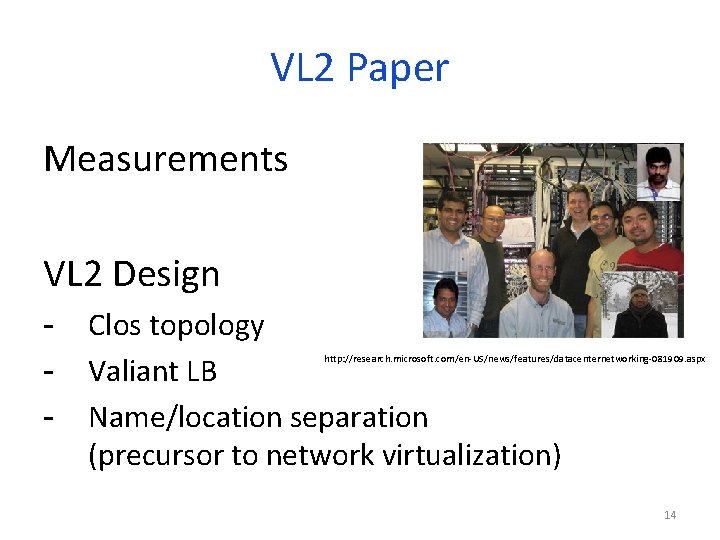

VL2 Paper

- Measurements
- VL2 Design
  - Clos topology
  - Valiant LB
  - Name/location separation (precursor to network virtualization)

http://research.microsoft.com/en-US/news/features/datacenternetworking-081909.aspx

Measurements

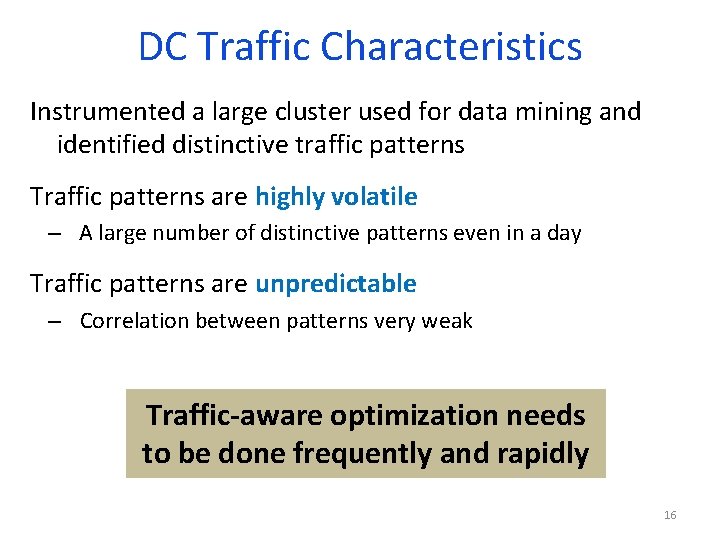

DC Traffic Characteristics

Instrumented a large cluster used for data mining and identified distinctive traffic patterns

- Traffic patterns are highly volatile
  - A large number of distinctive patterns even in a day
- Traffic patterns are unpredictable
  - Correlation between patterns very weak

Traffic-aware optimization needs to be done frequently and rapidly

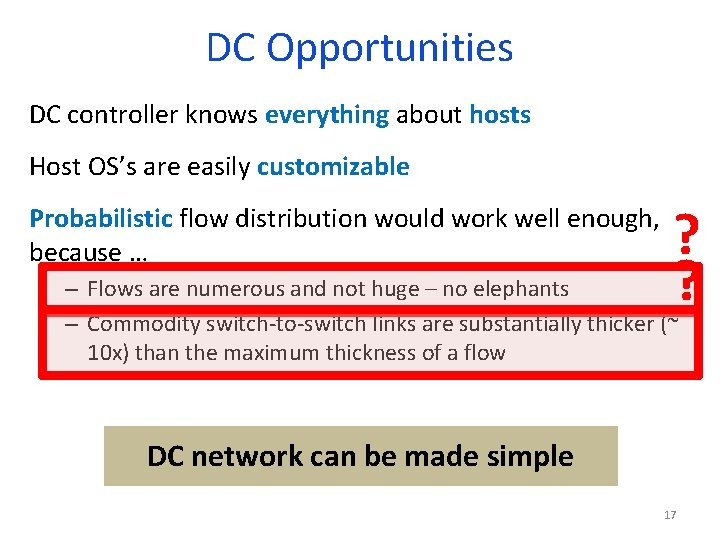

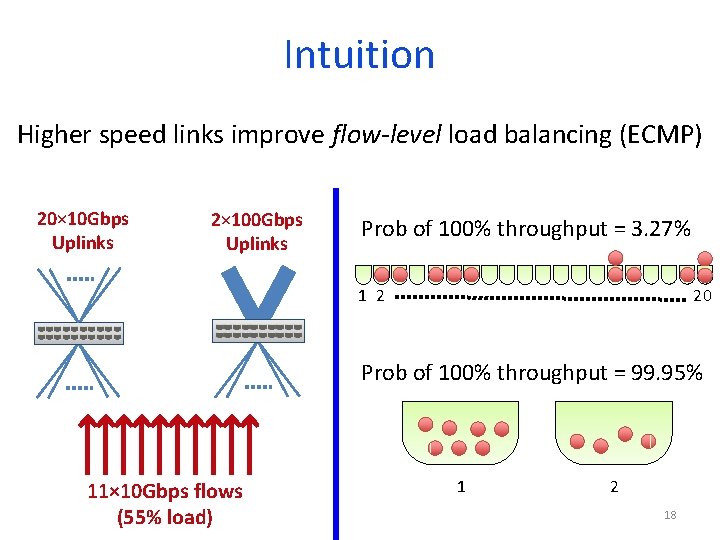

DC Opportunities

- DC controller knows everything about hosts
- Host OSes are easily customizable
- Probabilistic flow distribution would work well enough, because …?
  - Flows are numerous and not huge (no elephants)
  - Commodity switch-to-switch links are substantially thicker (~10x) than the maximum thickness of a flow

DC network can be made simple

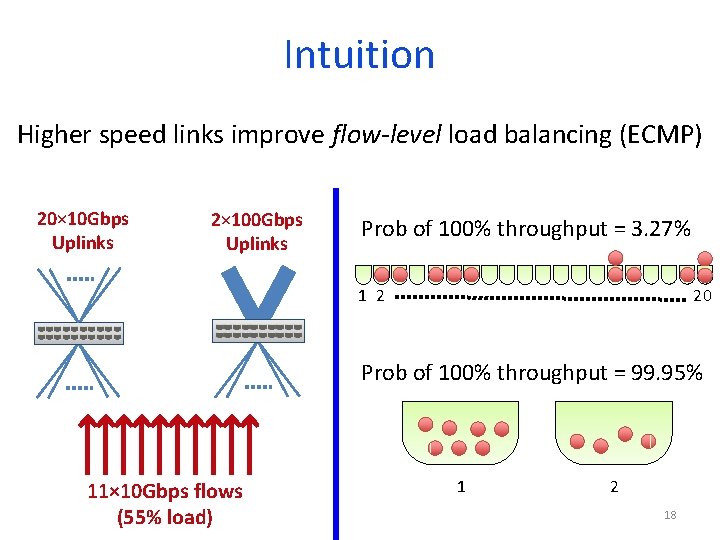

Intuition

Higher speed links improve flow-level load balancing (ECMP)

Example: 11× 10 Gbps flows (55% load)

- 20× 10 Gbps uplinks: Prob of 100% throughput = 3.27%
- 2× 100 Gbps uplinks: Prob of 100% throughput = 99.95%
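The two probabilities can be checked with a short calculation. This is a sketch under one assumption: ECMP is modeled as each flow independently picking an uplink uniformly at random, and "100% throughput" means no uplink receives more 10 Gbps flows than it can carry. The function name and parameters are illustrative.

```python
from math import comb


def prob_full_throughput(flows: int, links: int, flows_per_link: int) -> float:
    """Probability that no link receives more than `flows_per_link` flows when
    each flow independently picks one of `links` uniformly at random."""
    # ways[k] = number of ways to assign k of the (labeled) flows to the
    # links processed so far without overloading any of them.
    ways = [0] * (flows + 1)
    ways[0] = 1
    for _ in range(links):
        nxt = [0] * (flows + 1)
        for placed, count in enumerate(ways):
            if count == 0:
                continue
            remaining = flows - placed
            # Choose which j of the remaining flows land on this link.
            for j in range(min(flows_per_link, remaining) + 1):
                nxt[placed + j] += count * comb(remaining, j)
        ways = nxt
    return ways[flows] / links ** flows


print(f"20 x 10G uplinks: {prob_full_throughput(11, 20, 1):.2%}")
print(f"2 x 100G uplinks: {prob_full_throughput(11, 2, 10):.2%}")
```

Under this model the first case evaluates to about 3.27%, matching the slide. The second evaluates to 1 − 2^−10 ≈ 99.90%, slightly below the 99.95% quoted on the slide (which equals 1 − 2^−11); either way the qualitative point holds, since the fat links fail only when all 11 flows hash to the same uplink.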

Virtual Layer 2

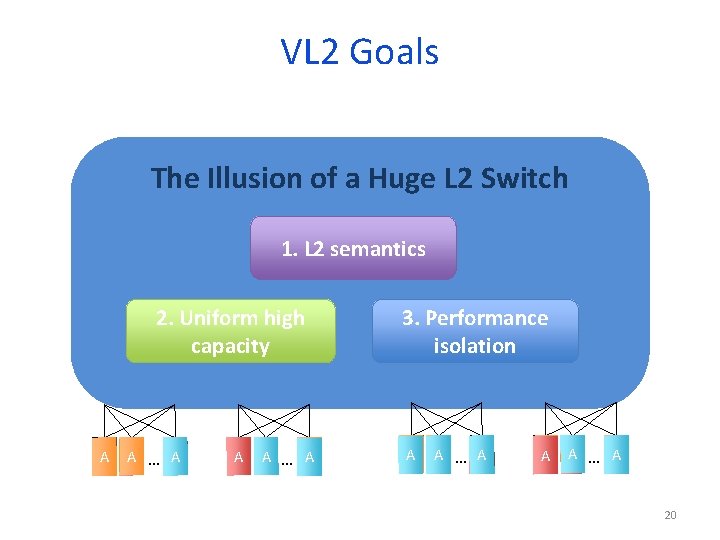

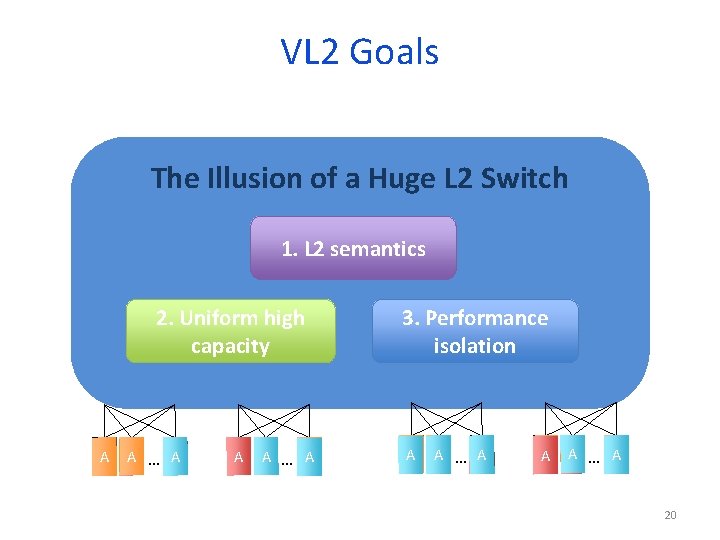

VL2 Goals

The Illusion of a Huge L2 Switch

1. L2 semantics
2. Uniform high capacity
3. Performance isolation

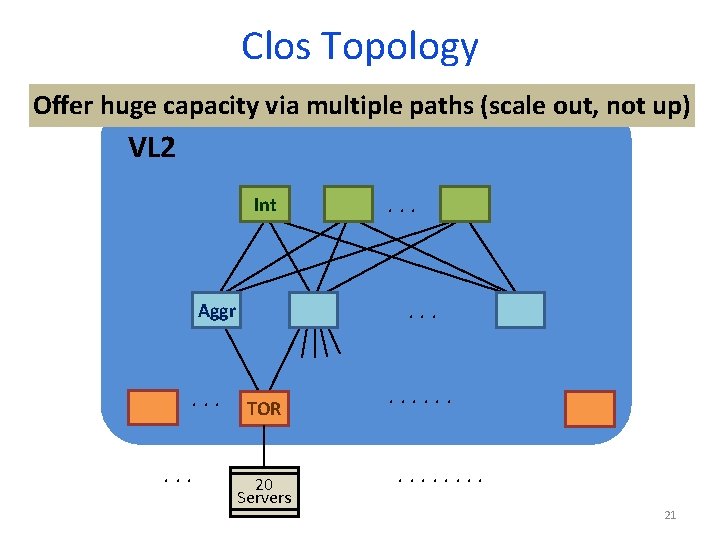

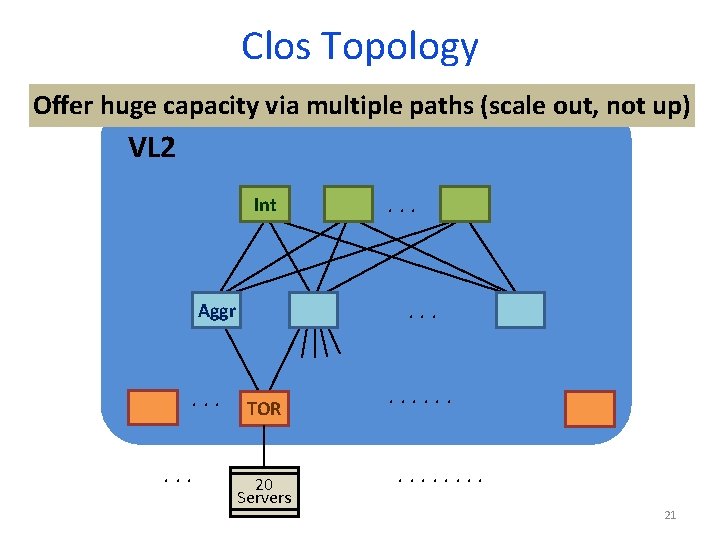

Clos Topology

Offer huge capacity via multiple paths (scale out, not up)

[Figure: VL2 Clos network with Intermediate (Int), Aggregation (Aggr), and ToR switch layers; 20 servers per ToR.]
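A rough sizing sketch shows what "scale out, not up" buys. The wiring assumptions below follow the port-count relations as recalled from the VL2 paper and are not stated on the slide: each aggregation switch splits its ports evenly between ToRs and intermediate switches, every intermediate switch connects to every aggregation switch, and each ToR has two uplinks. The function name is hypothetical.

```python
def vl2_clos_size(d_a: int, d_i: int, servers_per_tor: int = 20) -> dict:
    """Switch and server counts for a folded Clos under the assumptions above.

    d_a: ports per aggregation switch, d_i: ports per intermediate switch.
    """
    intermediates = d_a // 2            # one per aggregation uplink port
    aggregations = d_i                  # one per intermediate switch port
    tor_facing_ports = aggregations * (d_a // 2)
    tors = tor_facing_ports // 2        # each ToR consumes two of those ports
    return {
        "intermediate": intermediates,
        "aggregation": aggregations,
        "tor": tors,
        "servers": tors * servers_per_tor,
    }


print(vl2_clos_size(144, 144))
```

With 144-port switches at both layers this comes to 72 intermediate switches, 144 aggregation switches, 5,184 ToRs, and 103,680 servers. Capacity grows by adding more commodity switches rather than by buying a bigger core router.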

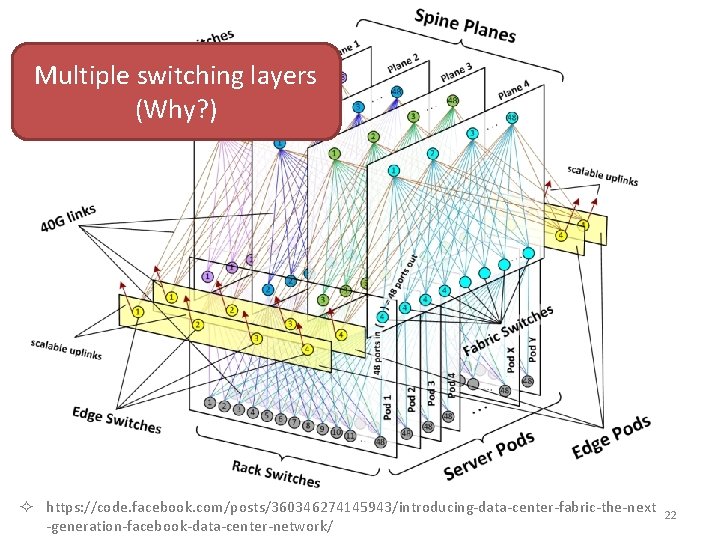

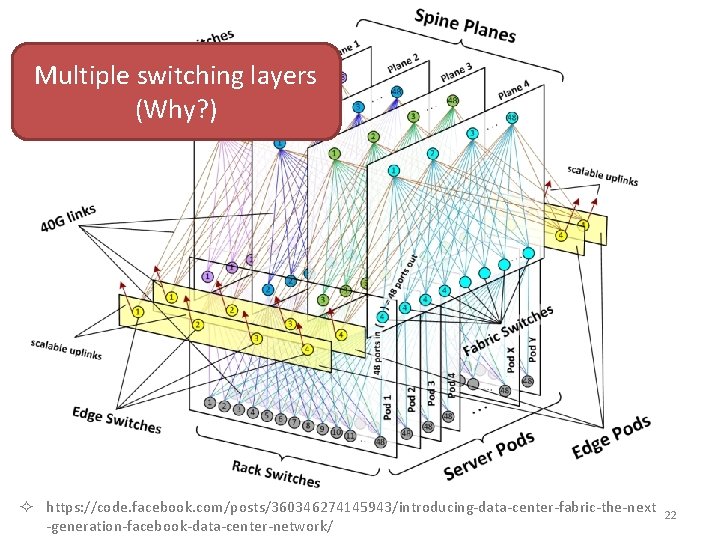

Multiple switching layers (Why?)

Source: https://code.facebook.com/posts/360346274145943/introducing-data-center-fabric-the-next-generation-facebook-data-center-network/

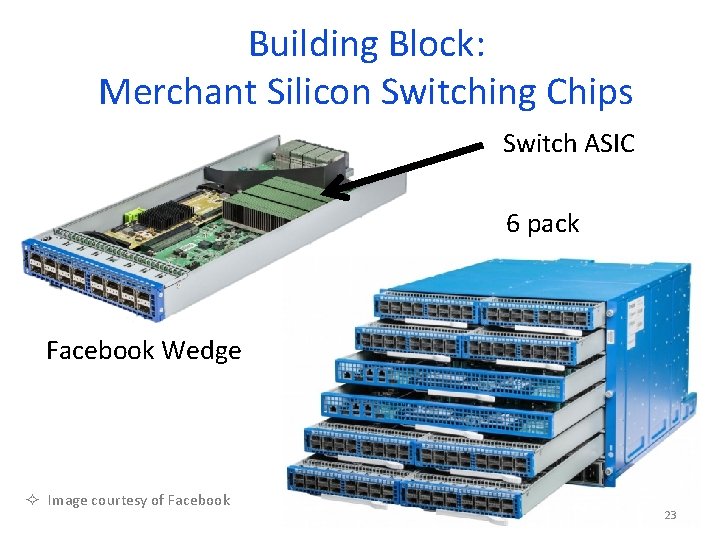

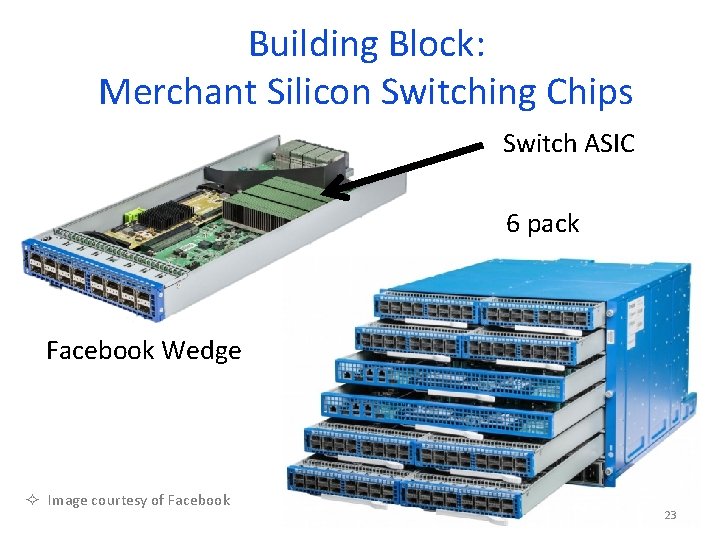

Building Block: Merchant Silicon Switching Chips

[Figure: switch ASIC, Facebook Wedge, 6-pack. Image courtesy of Facebook.]

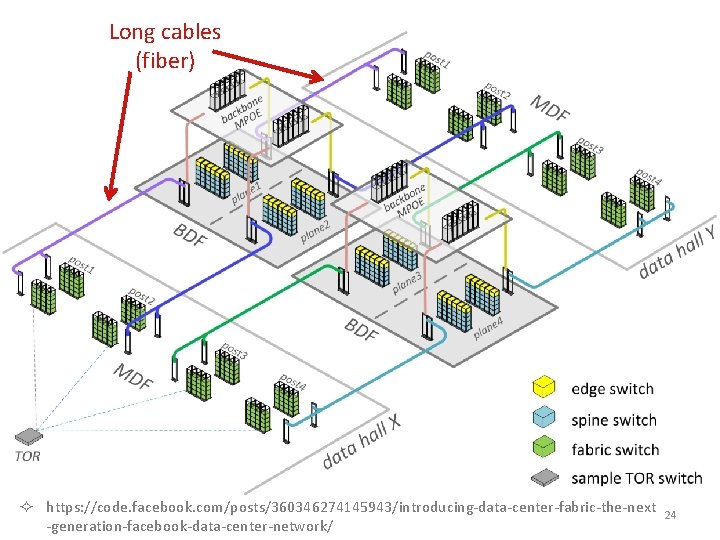

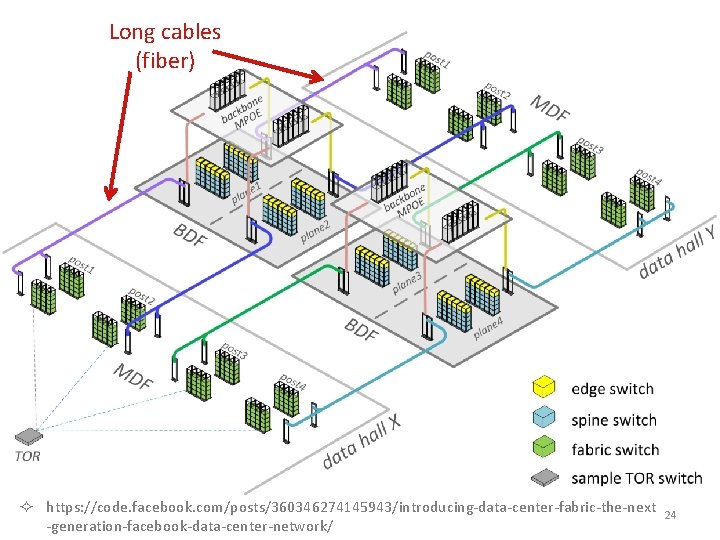

Long cables (fiber)

Source: https://code.facebook.com/posts/360346274145943/introducing-data-center-fabric-the-next-generation-facebook-data-center-network/

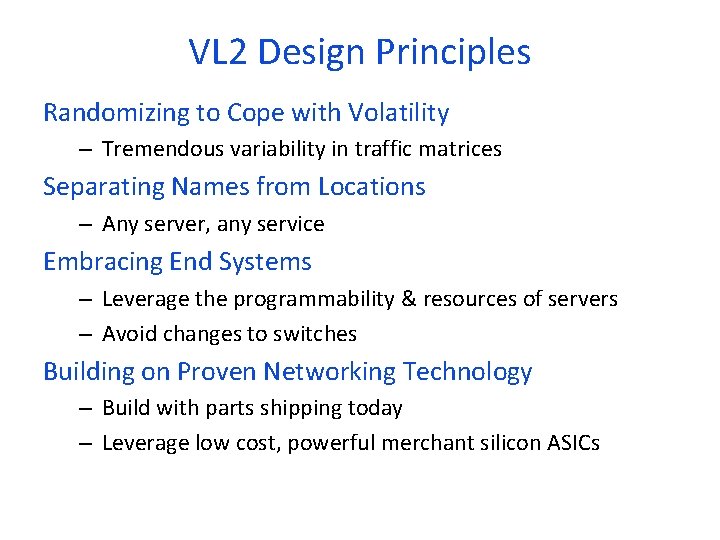

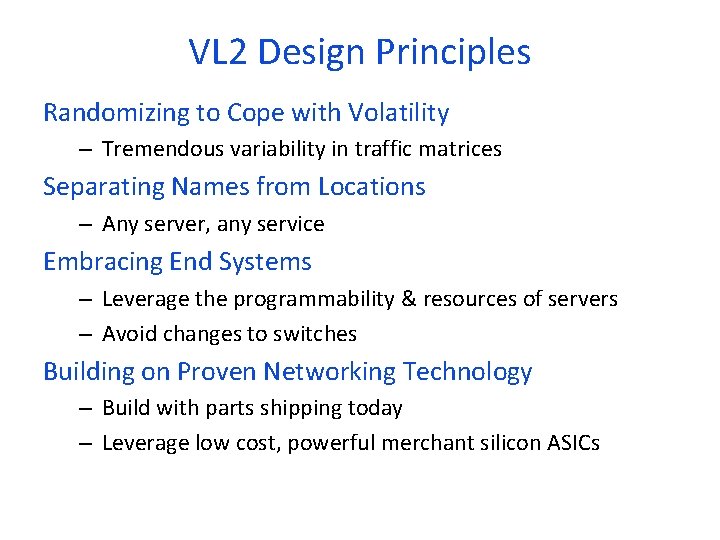

VL2 Design Principles

- Randomizing to Cope with Volatility
  - Tremendous variability in traffic matrices
- Separating Names from Locations
  - Any server, any service
- Embracing End Systems
  - Leverage the programmability & resources of servers
  - Avoid changes to switches
- Building on Proven Networking Technology
  - Build with parts shipping today
  - Leverage low cost, powerful merchant silicon ASICs

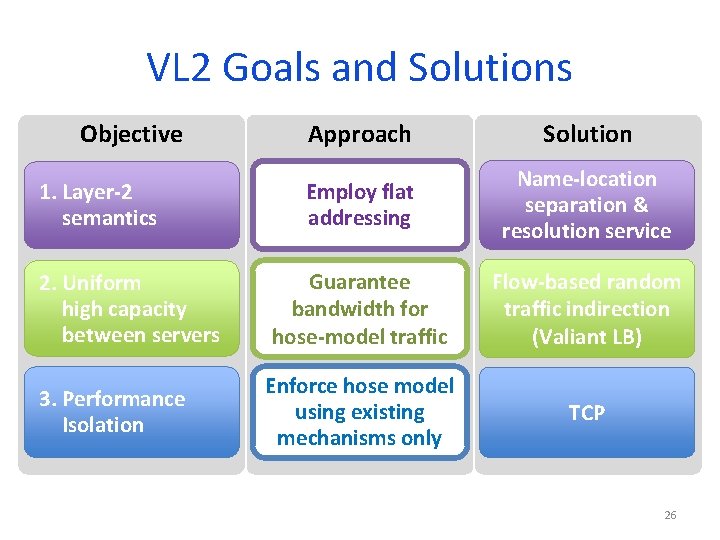

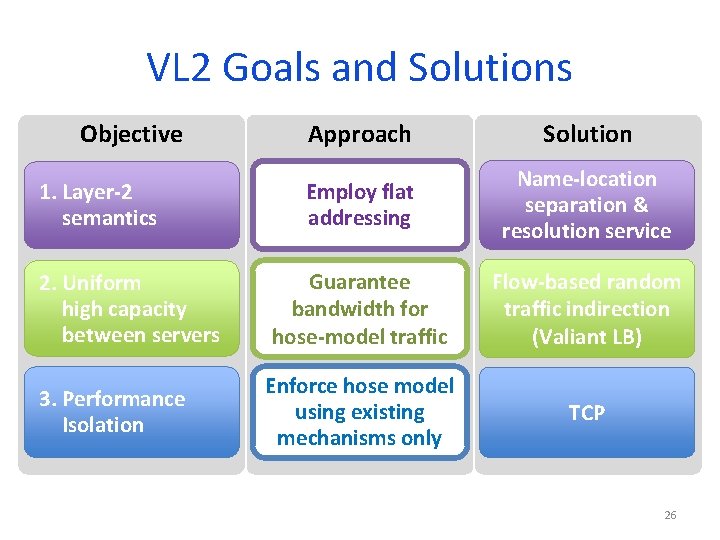

VL2 Goals and Solutions

| Objective | Approach | Solution |
|---|---|---|
| 1. Layer-2 semantics | Employ flat addressing | Name-location separation & resolution service |
| 2. Uniform high capacity between servers | Guarantee bandwidth for hose-model traffic | Flow-based random traffic indirection (Valiant LB) |
| 3. Performance isolation | Enforce hose model using existing mechanisms only | TCP |

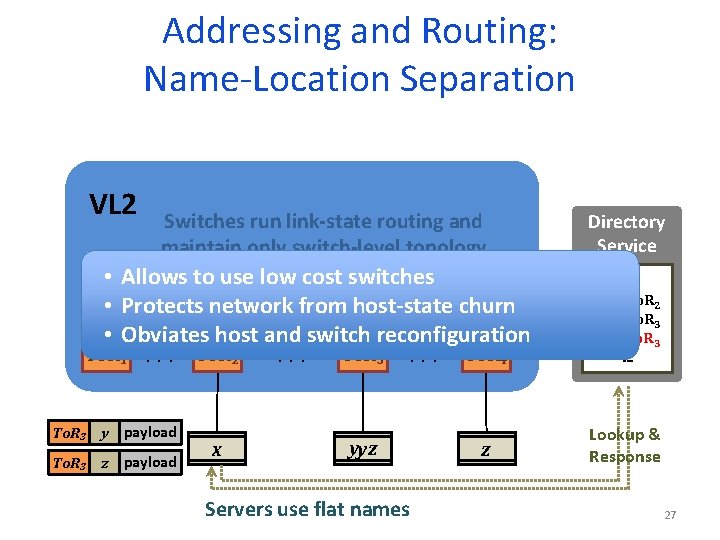

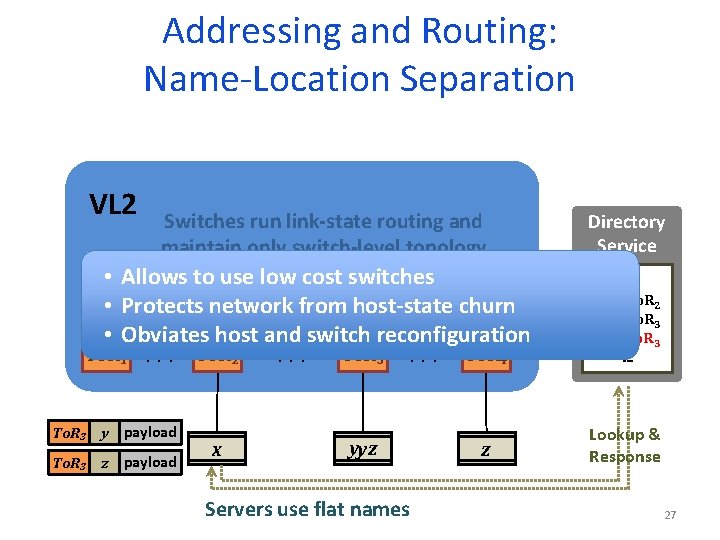

Addressing and Routing: Name-Location Separation

VL2 switches run link-state routing and maintain only switch-level topology

- Allows the use of low-cost switches
- Protects the network from host-state churn
- Obviates host and switch reconfiguration

Servers use flat names. The Directory Service maps each name to its current ToR (Lookup & Response):

| Name | ToR |
|---|---|
| x | ToR2 |
| y | ToR3 |
| z | ToR3 → ToR4 |

[Figure: ToR1 … ToR4 with servers x, y, z. Packets carry the destination's ToR in front of the name: "ToR3 | y | payload" and "ToR3 → ToR4 | z | payload".]
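A toy model of the lookup path may help. This is a minimal sketch with hypothetical class and function names, and it reads the figure as showing z moving from ToR3 to ToR4 (an assumption). The point it illustrates is that a move changes one directory entry and nothing on the switches or on the sender.

```python
from dataclasses import dataclass, field


@dataclass
class Directory:
    """Toy directory service: maps a server's flat name to the ToR it
    currently sits behind."""
    mapping: dict = field(default_factory=dict)

    def update(self, name: str, tor: str) -> None:
        self.mapping[name] = tor

    def lookup(self, name: str) -> str:
        return self.mapping[name]


def encapsulate(directory: Directory, dst_name: str, payload: str) -> tuple:
    """Return (ToR, destination name, payload), mirroring the
    'ToR3 | y | payload' packets in the figure."""
    return (directory.lookup(dst_name), dst_name, payload)


directory = Directory()
for name, tor in [("x", "ToR2"), ("y", "ToR3"), ("z", "ToR3")]:
    directory.update(name, tor)

print(encapsulate(directory, "z", "payload"))
directory.update("z", "ToR4")  # assumed migration of z; no switch state changes
print(encapsulate(directory, "z", "payload"))
```

Because switches route only on ToR locators, their tables stay as small as the switch-level topology no matter how many servers exist or how often they move.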

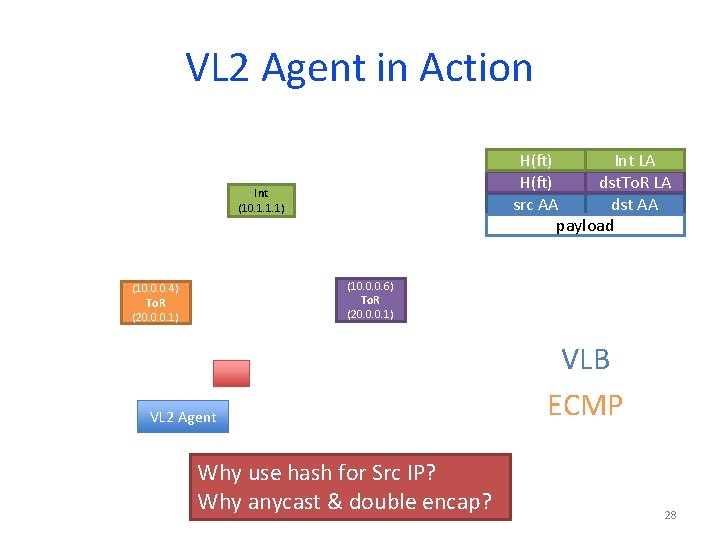

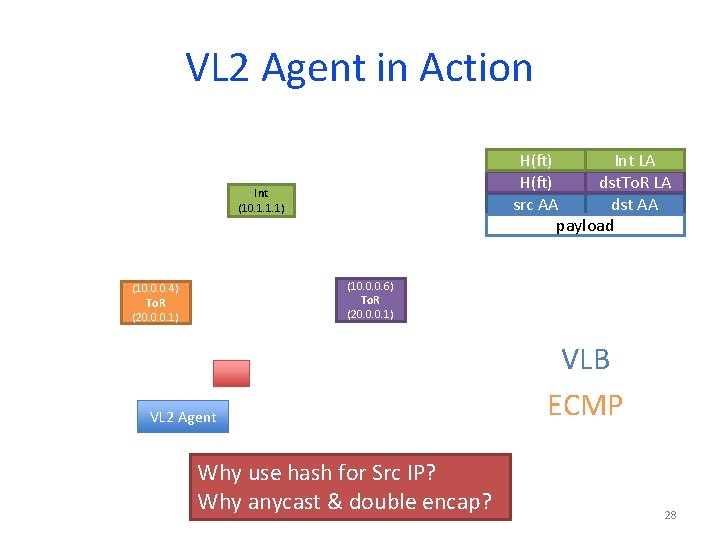

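VL2 Agent in Action

[Figure: the VL2 agent on the sending server double-encapsulates each packet. Outer header: IP src = H(ft), IP dst = Int LA. Middle header: IP src = H(ft), IP dst = dst ToR LA. Inner packet: src AA, dst AA, payload. VLB selects the intermediate switch and ECMP spreads flows over equal-cost paths. Address labels visible in the figure: 10.1.1.1 (Int), 10.0.0.4, 10.0.0.6, 20.0.0.1.]

- Why use hash for Src IP?
- Why anycast & double encap?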
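The header stack can be sketched in a few lines. This is an illustration rather than the VL2 agent itself: the function and type names are hypothetical, the hash is rendered as a short hex tag instead of a real IP address, and the two application addresses (AAs) in the usage are made up. The intermediate anycast address 10.1.1.1 and the ToR address 10.0.0.6 are taken from the figure labels.

```python
import hashlib
from typing import NamedTuple


class Header(NamedTuple):
    src: str
    dst: str


INT_ANYCAST_LA = "10.1.1.1"  # anycast LA shared by the intermediate switches


def flow_hash(five_tuple: tuple) -> str:
    """Stand-in for H(ft): a stable hash of the flow's 5-tuple."""
    return hashlib.sha256(repr(five_tuple).encode()).hexdigest()[:8]


def vl2_encapsulate(src_aa: str, dst_aa: str, dst_tor_la: str,
                    five_tuple: tuple, payload: bytes):
    """Build the outer, middle, and inner headers, outermost first."""
    h = flow_hash(five_tuple)
    headers = [
        Header(src=h, dst=INT_ANYCAST_LA),  # outer: bounce off an intermediate switch
        Header(src=h, dst=dst_tor_la),      # middle: then reach the destination ToR
        Header(src=src_aa, dst=dst_aa),     # inner: original packet between AAs
    ]
    return headers, payload


# Hypothetical AAs and ports, for illustration only.
ft = ("20.0.0.55", "20.0.0.56", 6, 40000, 80)
headers, _ = vl2_encapsulate("20.0.0.55", "20.0.0.56", "10.0.0.6", ft, b"data")
for hdr in headers:
    print(hdr)
```

One way to read the two questions on the slide: putting the flow hash in the source field gives switches per-flow entropy for ECMP while keeping all packets of a flow on one path, and the anycast address lets ECMP pick any live intermediate switch without the sender tracking which ones are up.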

Other details

- How does L2 broadcast work?
- How does Internet communication work?

VL2 Directory System

- Read-optimized Directory Servers for lookups
- Write-optimized Replicated State Machines for updates
- Stale mappings?
