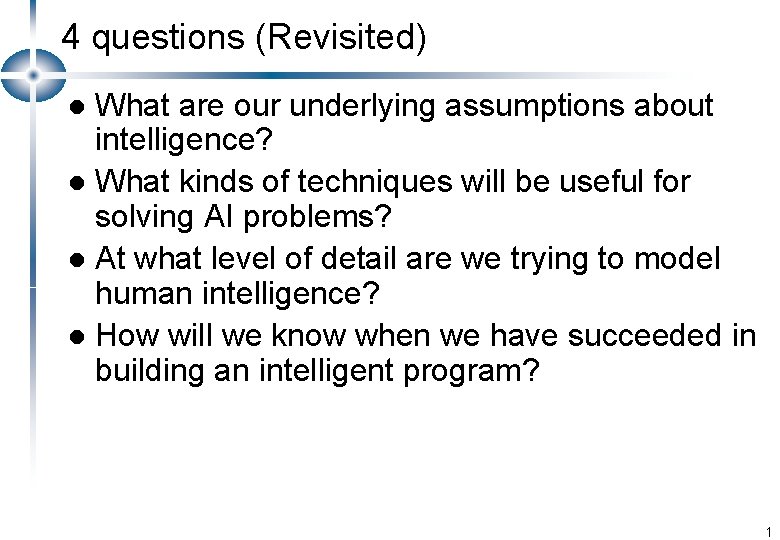

4 questions Revisited What are our underlying assumptions

- Slides: 22

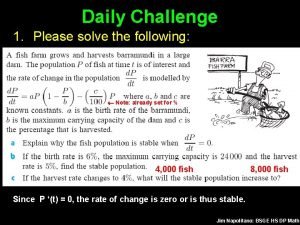

4 Questions (Revisited)

- What are our underlying assumptions about intelligence?
- What kinds of techniques will be useful for solving AI problems?
- At what level of detail are we trying to model human intelligence?
- How will we know when we have succeeded in building an intelligent program?

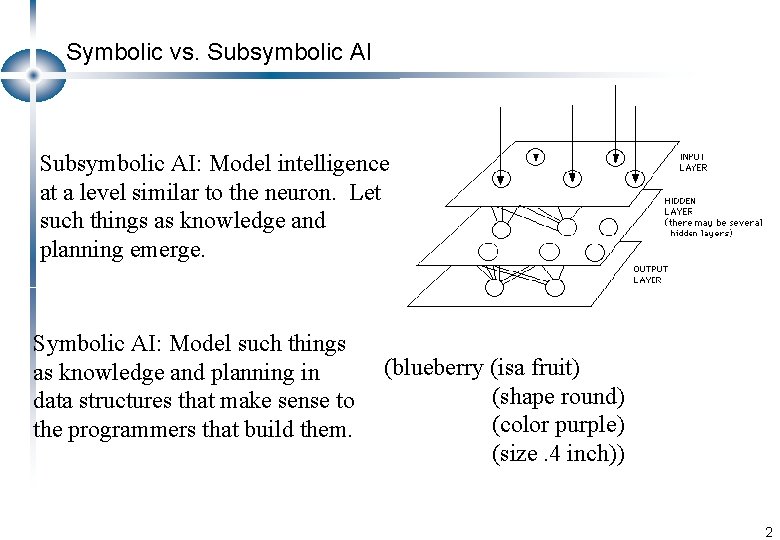

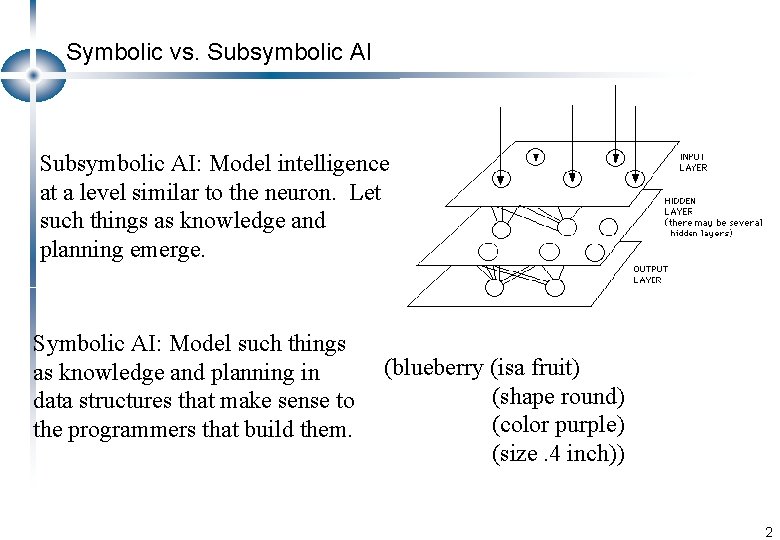

Symbolic vs. Subsymbolic AI

- Subsymbolic AI: Model intelligence at a level similar to the neuron. Let such things as knowledge and planning emerge.
- Symbolic AI: Model such things as knowledge and planning in data structures that make sense to the programmers who build them.

(blueberry (isa fruit) (shape round) (color purple) (size .4 inch))
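A symbolic frame like the blueberry example maps naturally onto an ordinary data structure. The sketch below is a minimal Python illustration, not the slide author's code: the dictionary layout, the `lookup` helper, and the extra `fruit` frame with its `edible` slot are assumptions added to show how a slot can be inherited through `isa`.

```python
# Hypothetical frame store; only the blueberry slots come from the slide.
frames = {
    "blueberry": {
        "isa": "fruit",
        "shape": "round",
        "color": "purple",
        "size": (0.4, "inch"),
    },
    # Assumed parent frame, added only to demonstrate inheritance.
    "fruit": {"isa": "food", "edible": True},
}


def lookup(frames, name, slot):
    """Return a slot value, following isa links when a frame lacks the slot."""
    while name in frames:
        frame = frames[name]
        if slot in frame:
            return frame[slot]
        name = frame.get("isa")  # climb to the parent frame, if any
    return None


print(lookup(frames, "blueberry", "color"))   # slot stored on the frame itself
print(lookup(frames, "blueberry", "edible"))  # slot inherited from "fruit"
```

The point of the symbolic style is visible here: every slot is readable by the programmer, which is exactly what a subsymbolic model does not offer.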

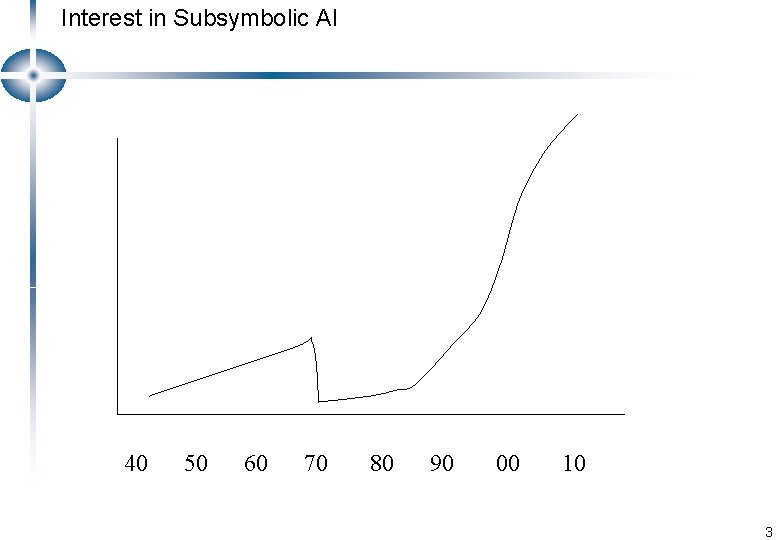

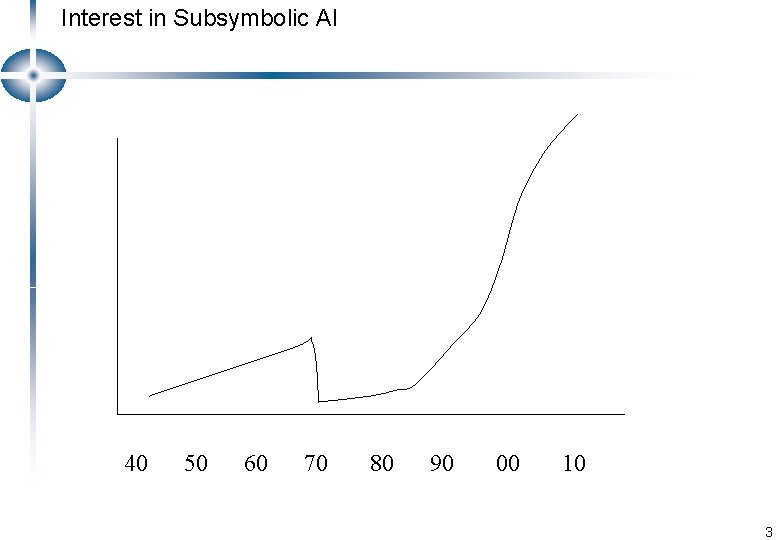

Interest in Subsymbolic AI (chart; time axis by decade: 40, 50, 60, 70, 80, 90, 00, 10)

Low-level (Sensory and Motor) Processing and the Resurgence of Subsymbolic Systems

- Computer vision
- Motor control
- Subsymbolic systems perform cognitive tasks
- Detect credit card fraud
- The backpropagation algorithm eliminated a formal weakness of earlier systems
- Neural networks learn.

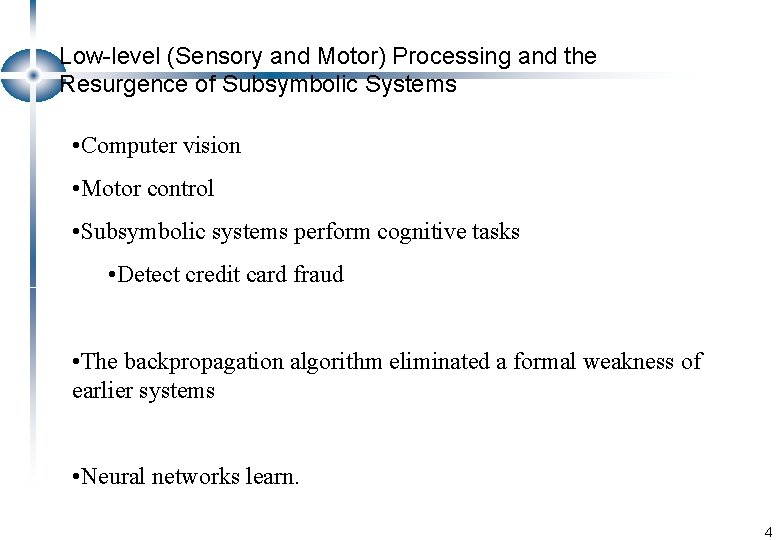

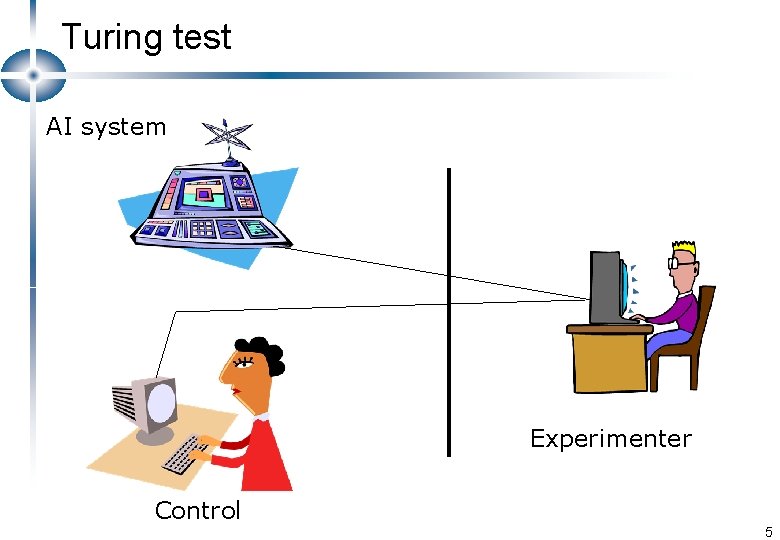

Turing test (diagram labels: AI system, Experimenter, Control)

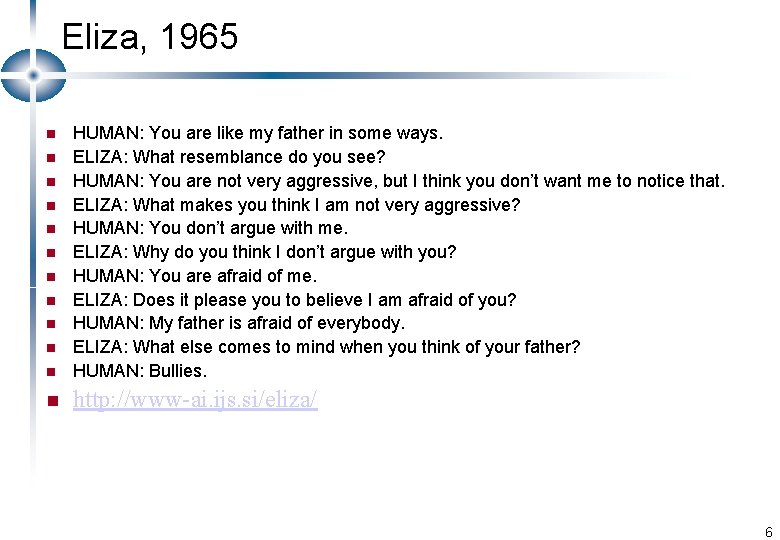

Eliza, 1965

- HUMAN: You are like my father in some ways.
- ELIZA: What resemblance do you see?
- HUMAN: You are not very aggressive, but I think you don’t want me to notice that.
- ELIZA: What makes you think I am not very aggressive?
- HUMAN: You don’t argue with me.
- ELIZA: Why do you think I don’t argue with you?
- HUMAN: You are afraid of me.
- ELIZA: Does it please you to believe I am afraid of you?
- HUMAN: My father is afraid of everybody.
- ELIZA: What else comes to mind when you think of your father?
- HUMAN: Bullies.

http://www-ai.ijs.si/eliza/

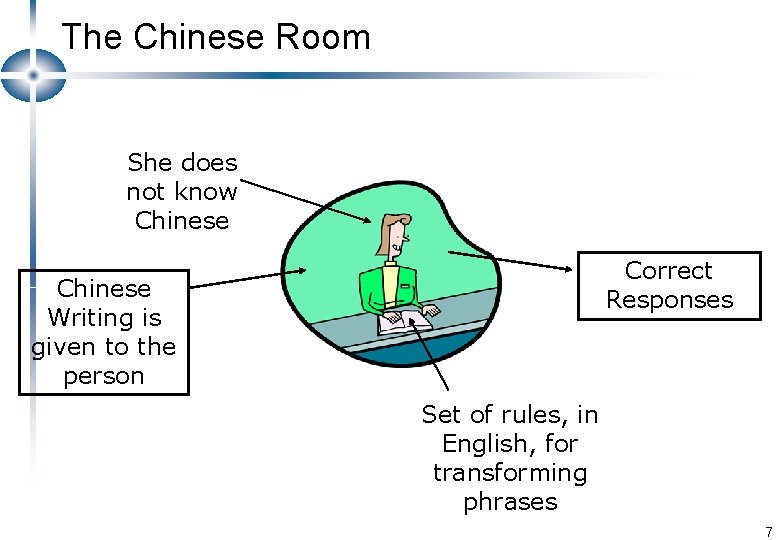

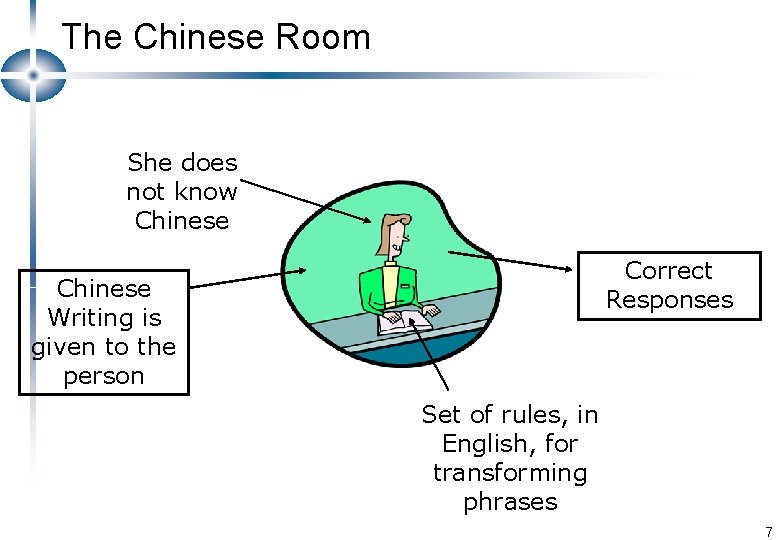

The Chinese Room (diagram labels: Chinese writing is given to the person; she does not know Chinese; set of rules, in English, for transforming phrases; correct responses)

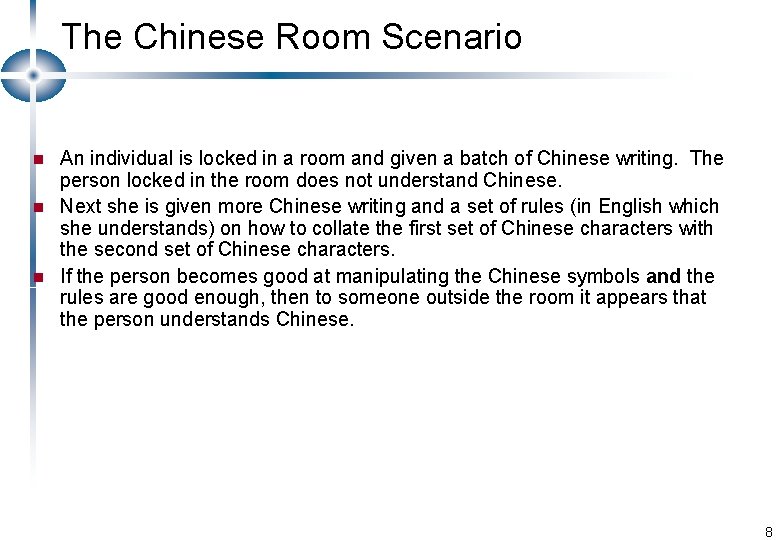

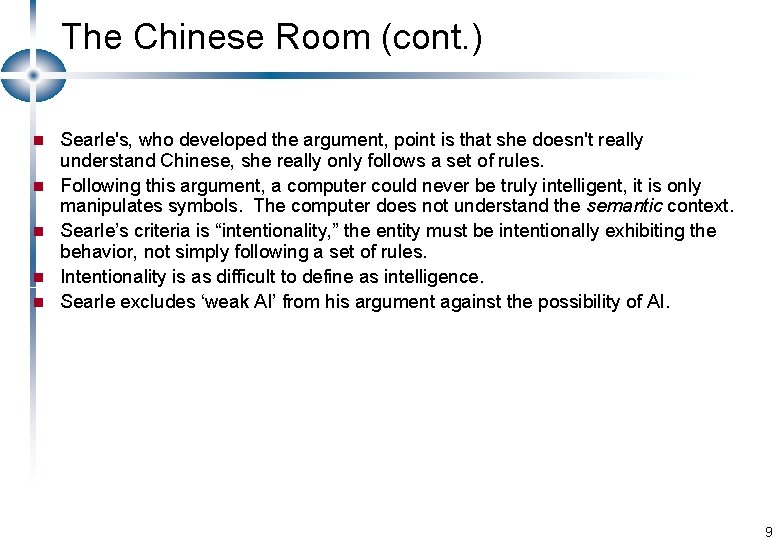

The Chinese Room Scenario

- An individual is locked in a room and given a batch of Chinese writing. The person locked in the room does not understand Chinese.
- Next she is given more Chinese writing and a set of rules (in English, which she understands) on how to collate the first set of Chinese characters with the second set of Chinese characters.
- If the person becomes good at manipulating the Chinese symbols and the rules are good enough, then to someone outside the room it appears that the person understands Chinese.

The Chinese Room (cont.)

- The point made by Searle, who developed the argument, is that she doesn't really understand Chinese; she only follows a set of rules. Following this argument, a computer could never be truly intelligent, because it only manipulates symbols. The computer does not understand the semantic context.
- Searle’s criterion is “intentionality”: the entity must be intentionally exhibiting the behavior, not simply following a set of rules. Intentionality is as difficult to define as intelligence.
- Searle excludes ‘weak AI’ from his argument against the possibility of AI.

Philosophical extremes in AI: Weak AI vs. Strong AI

- Weak AI holds that machine intelligence need only mimic the behavior of human intelligence.
- Strong AI demands that machine intelligence mimic the internal processes of human intelligence, not just the external behavior.

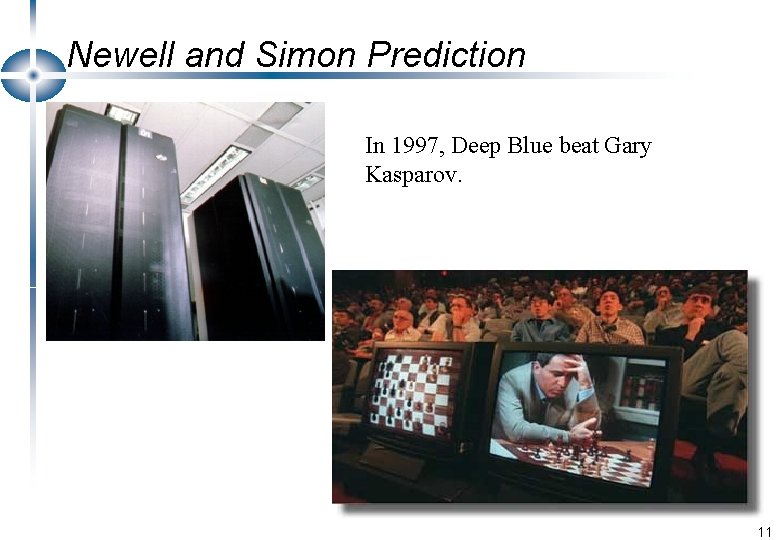

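Newell and Simon Prediction

In 1957, Newell and Simon predicted that a computer would be the world chess champion within ten years. It took until 1997 for Deep Blue to beat Garry Kasparov.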

Why Did They Get it Wrong?

They failed to understand at least three key things:

- The need for knowledge (lots of it)
- Scalability and the problem of complexity and exponential growth
- The need to perceive the world

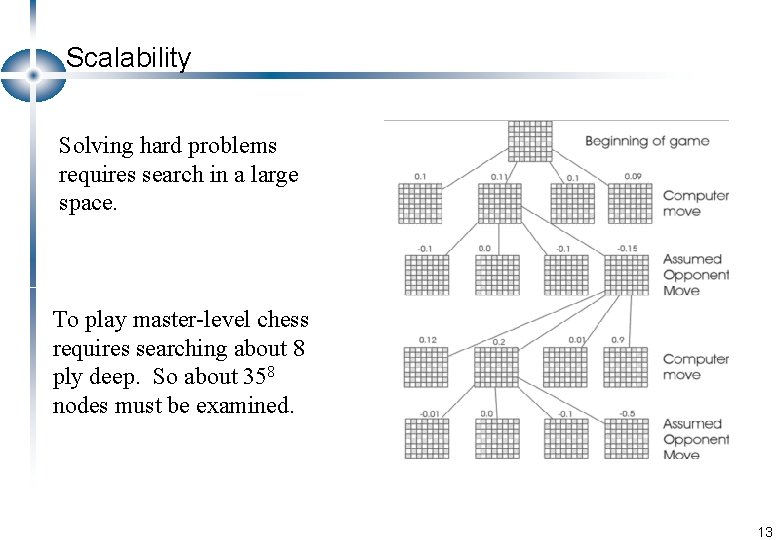

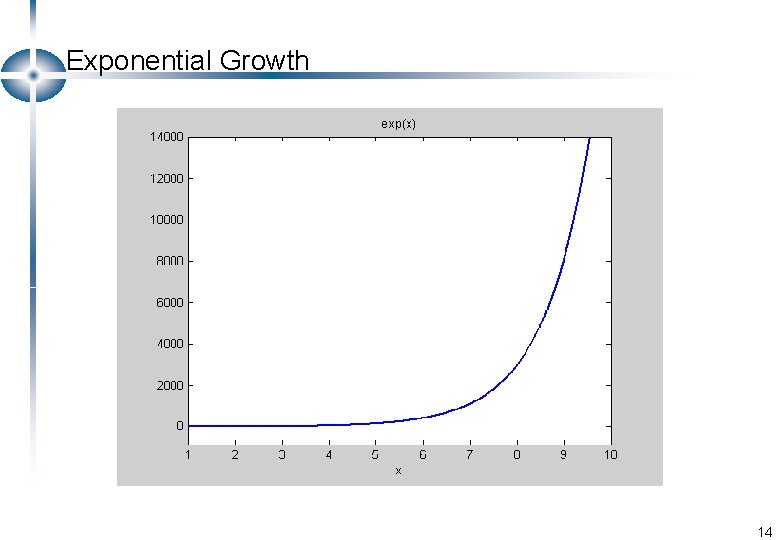

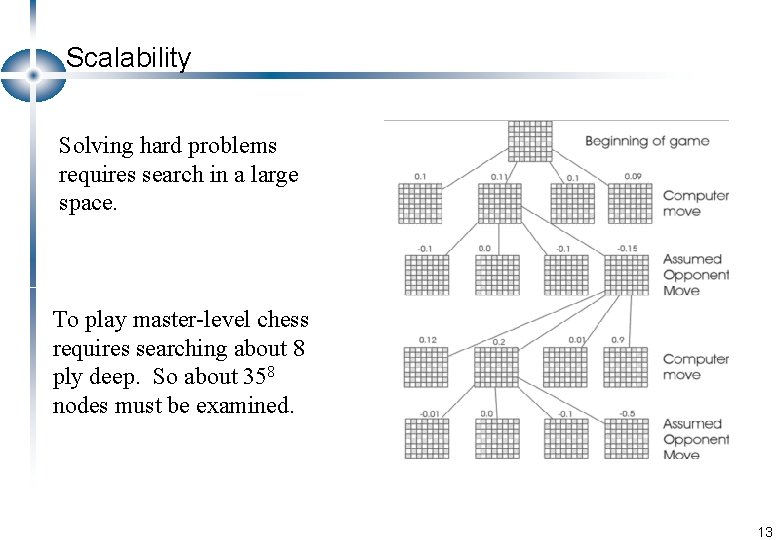

Scalability

Solving hard problems requires search in a large space. Playing master-level chess requires searching about 8 ply deep, so with a branching factor of 35, about 35^8 (roughly 2.25 × 10^12) nodes must be examined.
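The size of that search space can be checked with a few lines of arithmetic. This is a minimal sketch: the branching factor of 35 and the depth of 8 ply are the figures quoted in these slides, and the function names are illustrative.

```python
BRANCHING_FACTOR = 35  # average legal moves per chess position, per the slides
DEPTH_PLY = 8          # search depth quoted for master-level play


def leaf_nodes(branching, depth):
    """Number of positions at exactly `depth` ply in a uniform game tree."""
    return branching ** depth


def total_nodes(branching, depth):
    """All positions from the root down to `depth` ply, inclusive."""
    return sum(branching ** d for d in range(depth + 1))


print(f"{leaf_nodes(BRANCHING_FACTOR, DEPTH_PLY):,}")  # 2,251,875,390,625
for depth in range(1, DEPTH_PLY + 1):
    # Each extra ply multiplies the work by the branching factor.
    print(depth, f"{leaf_nodes(BRANCHING_FACTOR, depth):,}")
```

Each additional ply multiplies the count by 35, which is why deeper search quickly becomes impractical without pruning or knowledge.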

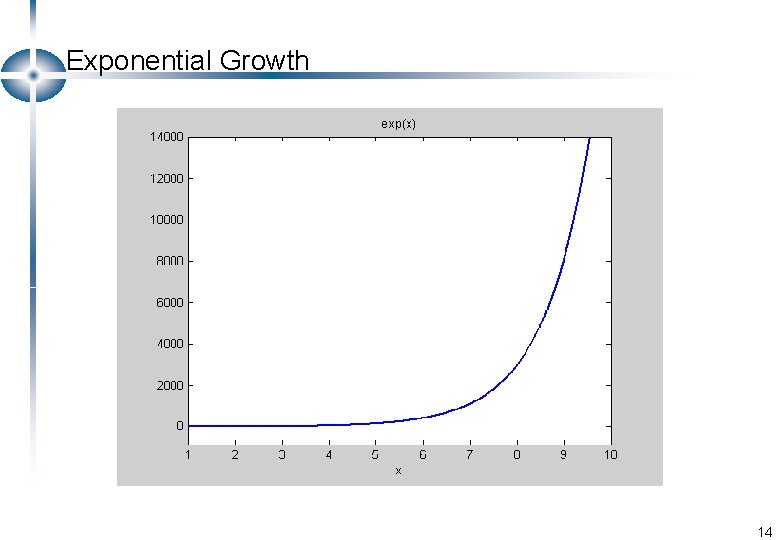

Exponential Growth

But Chess is Easy

- The rules are simple enough to fit on one page
- The branching factor is only 35.

A Harder One

John saw a boy and a girl with a red wagon with one blue and one white wheel dragging on the ground under a tree with huge branches.

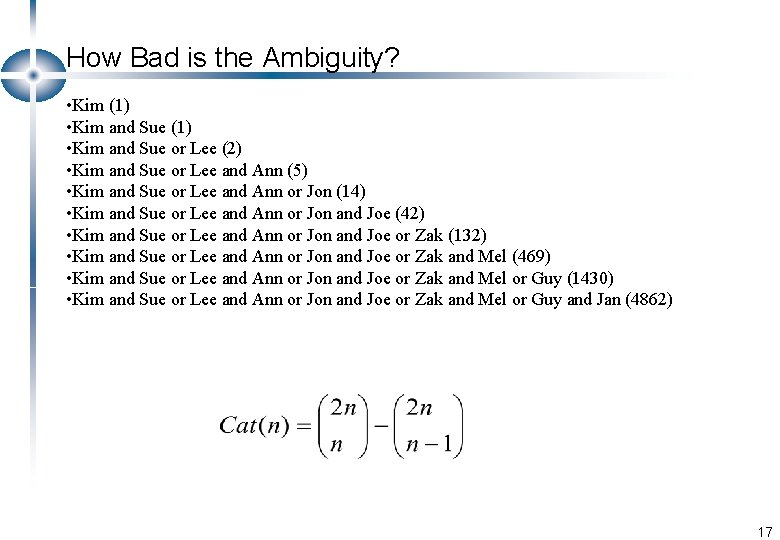

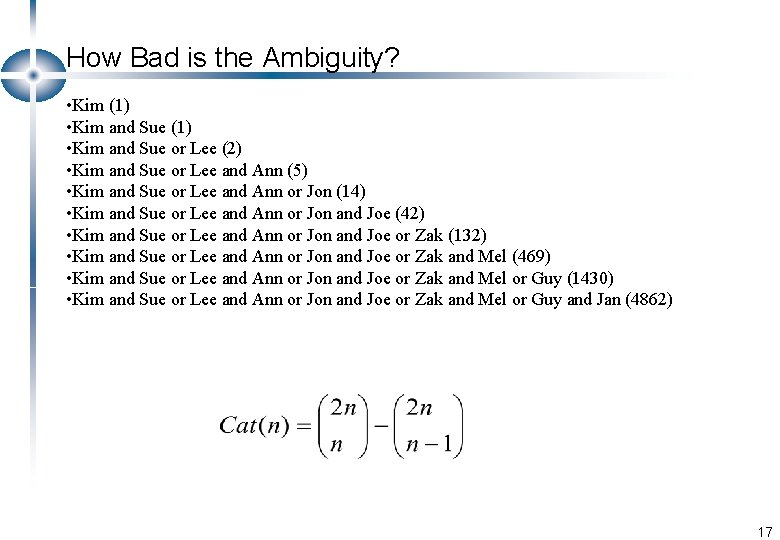

How Bad is the Ambiguity?

The number of possible groupings of each phrase is shown in parentheses:

- Kim (1)
- Kim and Sue or Lee (2)
- Kim and Sue or Lee and Ann (5)
- Kim and Sue or Lee and Ann or Jon (14)
- Kim and Sue or Lee and Ann or Jon and Joe (42)
- Kim and Sue or Lee and Ann or Jon and Joe or Zak (132)
- Kim and Sue or Lee and Ann or Jon and Joe or Zak and Mel (429)
- Kim and Sue or Lee and Ann or Jon and Joe or Zak and Mel or Guy (1430)
- Kim and Sue or Lee and Ann or Jon and Joe or Zak and Mel or Guy and Jan (4862)
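These counts follow the Catalan numbers: a phrase joined by n conjunctions ("and"/"or") can be bracketed as a binary tree in C(n) = (2n choose n) / (n + 1) ways. The sketch below recomputes them; the `catalan` and `count_groupings` helpers are illustrative names, not part of the original slides.

```python
from math import comb


def catalan(n):
    """n-th Catalan number: binary bracketings of n + 1 items."""
    return comb(2 * n, n) // (n + 1)


def count_groupings(phrase):
    """Count bracketings of a phrase whose names are joined by 'and'/'or'."""
    connectives = sum(word in ("and", "or") for word in phrase.split())
    return catalan(connectives)


phrase = "Kim and Sue or Lee and Ann or Jon and Joe or Zak and Mel or Guy and Jan"
words = phrase.split()
# Print each prefix that ends on a name (odd word counts) with its count.
for end in range(1, len(words) + 1, 2):
    prefix = " ".join(words[:end])
    print(f"{prefix} ({count_groupings(prefix)})")
```

For seven conjunctions (eight names, ending in "Mel") the formula gives 429, and the growth is exponential in the number of conjunctions.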

The Differences Between Us and Them

- Emotions
- Understanding
- Consciousness

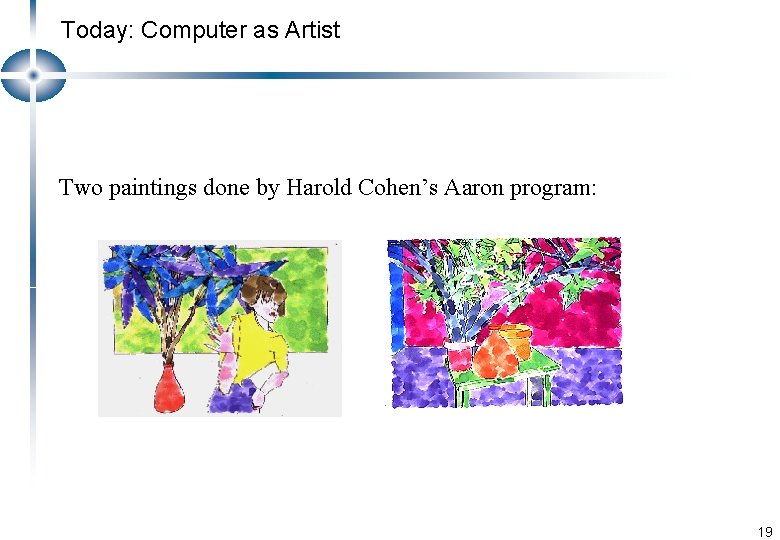

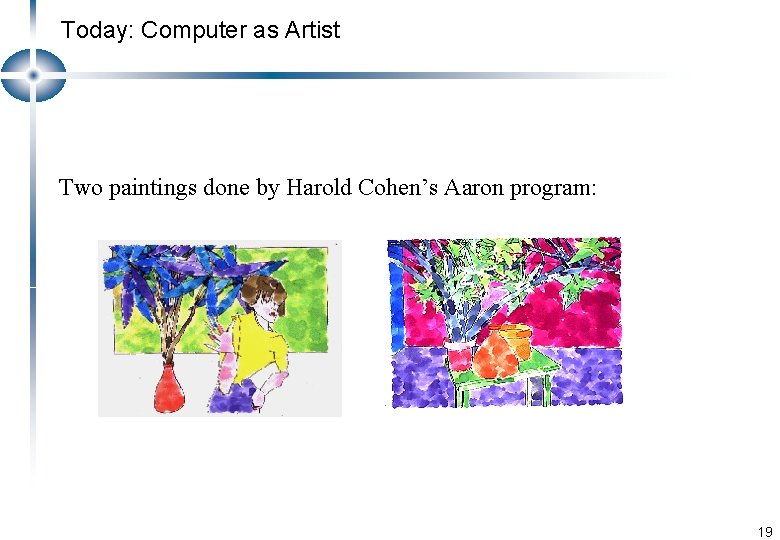

Today: Computer as Artist

Two paintings done by Harold Cohen’s Aaron program:

Solving AI Problems

- Define and analyse the problem: What knowledge is necessary?
- Choose a problem-solving technique
- e.g. Chess: What information do we need to represent in a chess-playing program?

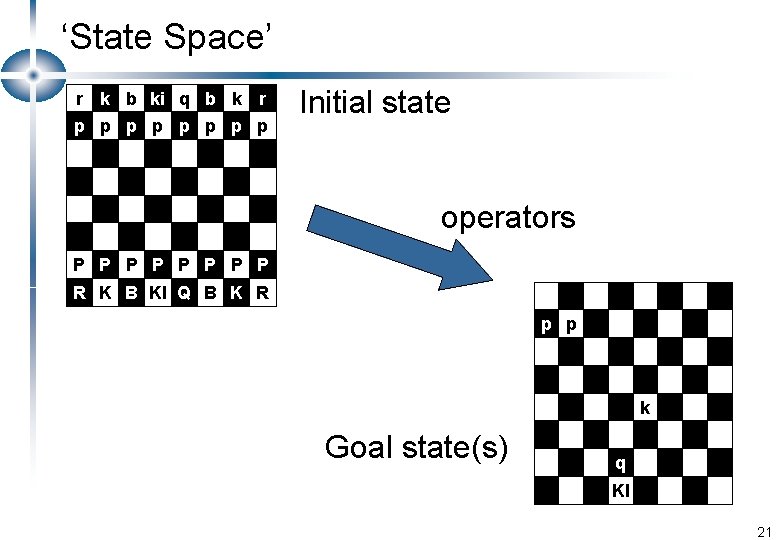

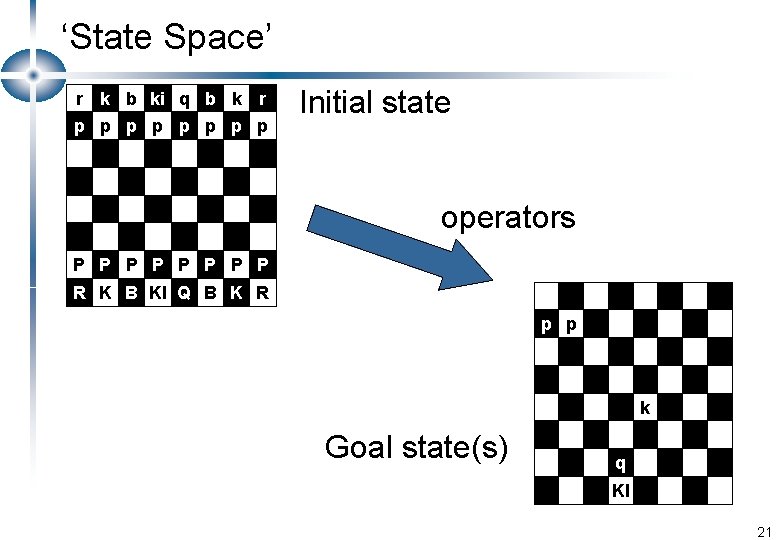

‘State Space’ (diagram: a chess board in its initial state, operators leading from it, and goal state(s))

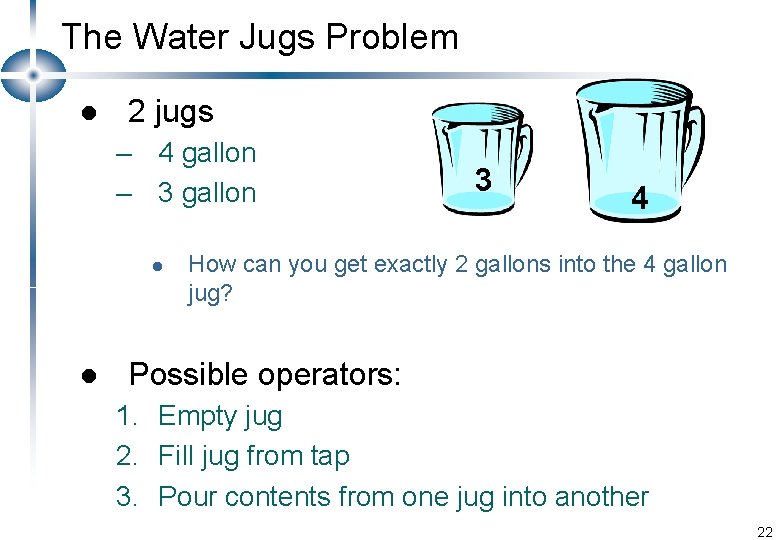

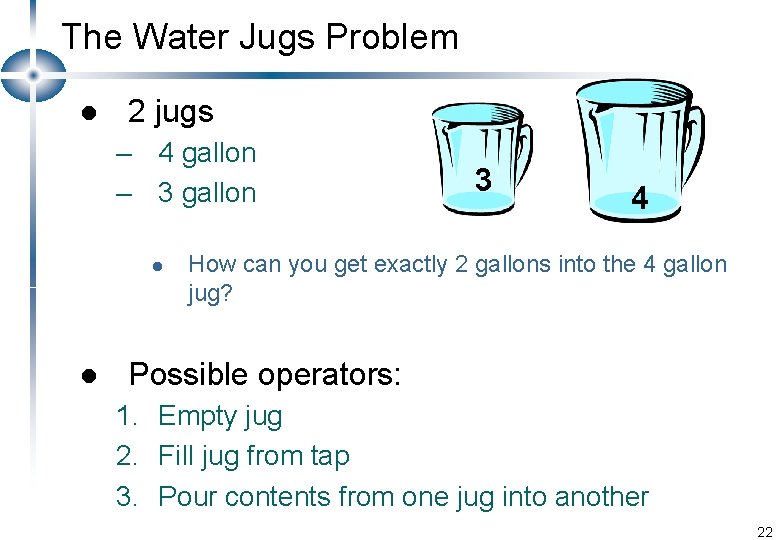

The Water Jugs Problem

- 2 jugs: one 4-gallon, one 3-gallon
- How can you get exactly 2 gallons into the 4-gallon jug?
- Possible operators:
  1. Empty jug
  2. Fill jug from tap
  3. Pour contents from one jug into another
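The water jugs problem fits the state-space view directly: a state is the pair of amounts in the two jugs, and the three operators generate successor states. The sketch below is one possible way to solve it, using breadth-first search; the choice of search method, the `(four, three)` state encoding, and the function names are assumptions, since the slides only list the operators.

```python
from collections import deque

CAPACITY = (4, 3)  # (4-gallon jug, 3-gallon jug)


def successors(state):
    """Apply every operator (empty, fill, pour) to a (four, three) state."""
    four, three = state
    cap4, cap3 = CAPACITY
    pour_to_three = min(four, cap3 - three)  # amount moved from 4-gal to 3-gal
    pour_to_four = min(three, cap4 - four)   # amount moved from 3-gal to 4-gal
    return {
        (0, three), (four, 0),                           # 1. empty a jug
        (cap4, three), (four, cap3),                     # 2. fill a jug from the tap
        (four - pour_to_three, three + pour_to_three),   # 3. pour 4-gal into 3-gal
        (four + pour_to_four, three - pour_to_four),     # 3. pour 3-gal into 4-gal
    }


def solve(start=(0, 0), target=2):
    """Breadth-first search for a shortest path to `target` gallons in the 4-gal jug."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state[0] == target:
            path = []
            while state is not None:  # walk parent links back to the start
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None


for four, three in solve():
    print(f"4-gallon jug: {four}, 3-gallon jug: {three}")
```

Because breadth-first search explores states in order of distance from the start, the path it returns uses the fewest operator applications; here that is six moves from two empty jugs.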

Insidan region jh

Insidan region jh Math lesson

Math lesson Zhuoyue zhao

Zhuoyue zhao Underlying assumptions of ai

Underlying assumptions of ai Our personal filters assumptions

Our personal filters assumptions Opinion vs argument

Opinion vs argument Common underlying proficiency

Common underlying proficiency Underlying theory in research

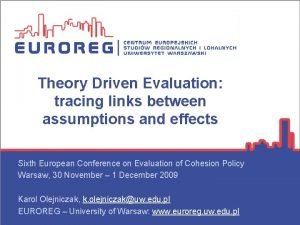

Underlying theory in research Imperiaalism

Imperiaalism Ter death certificate

Ter death certificate Underlying magic pitch deck

Underlying magic pitch deck What are the underlying assets in derivatives

What are the underlying assets in derivatives The underlying theme of the conceptual framework is

The underlying theme of the conceptual framework is Underlying causes of ww1

Underlying causes of ww1 Powder keg wwi

Powder keg wwi Ww1 causes

Ww1 causes Basic mechanisms underlying seizures and epilepsy

Basic mechanisms underlying seizures and epilepsy Underlying technology

Underlying technology Underlying undirected graph

Underlying undirected graph Underlying technology

Underlying technology Module 19 visual organization and interpretation

Module 19 visual organization and interpretation Underlying network

Underlying network Pitch deck template by guy kawasaki

Pitch deck template by guy kawasaki