
- Slides: 20

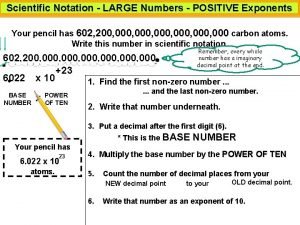

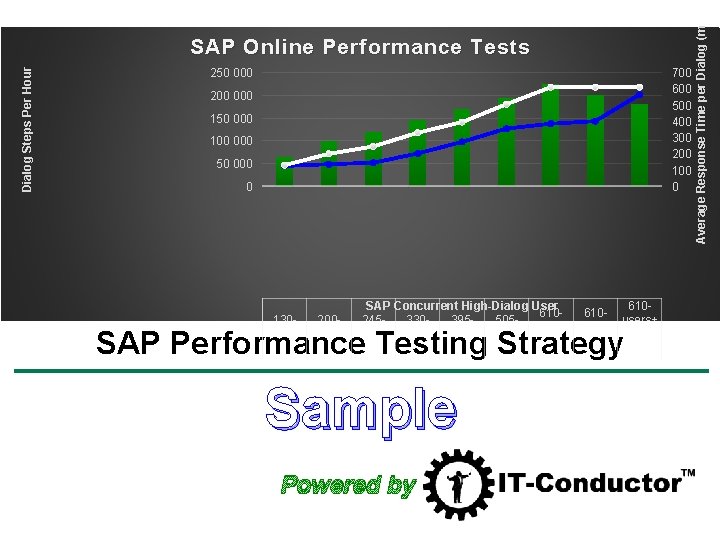

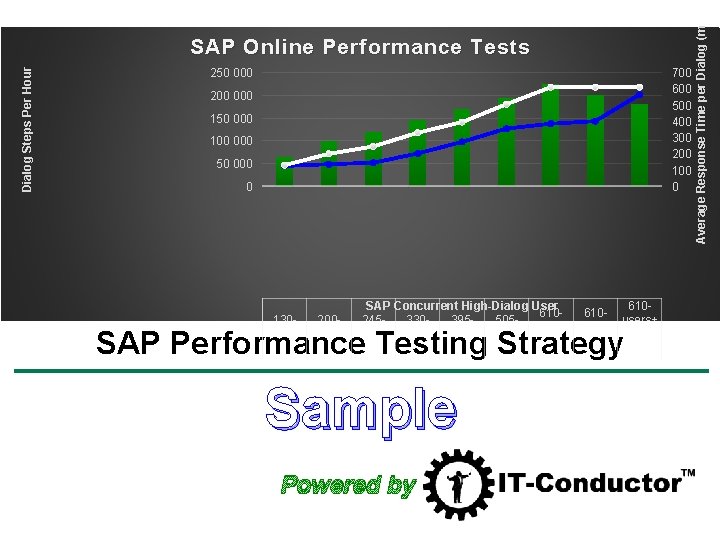

SAP Performance Testing Strategy Sample

Figure: SAP Online Performance Tests. Dialog Steps Per Hour and Average Response Time per Dialog (ms) for SAP concurrent high-dialog users (130 to 610 users, plus 610 users + WAN and 610 users + WAN + 8 LUN).

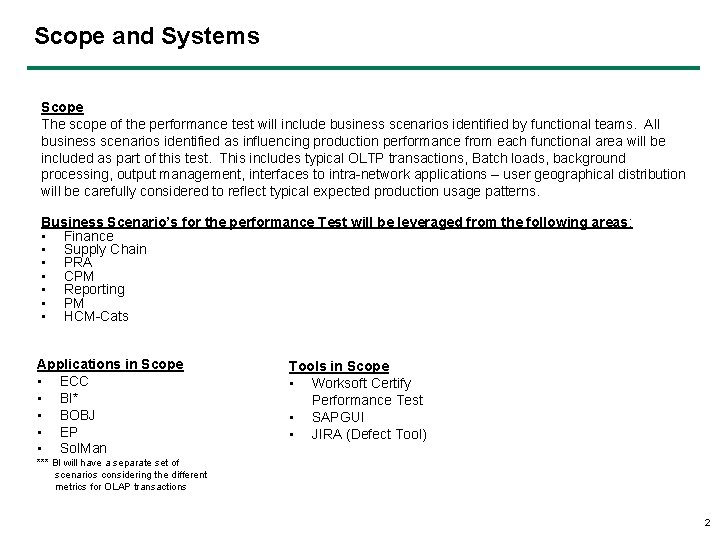

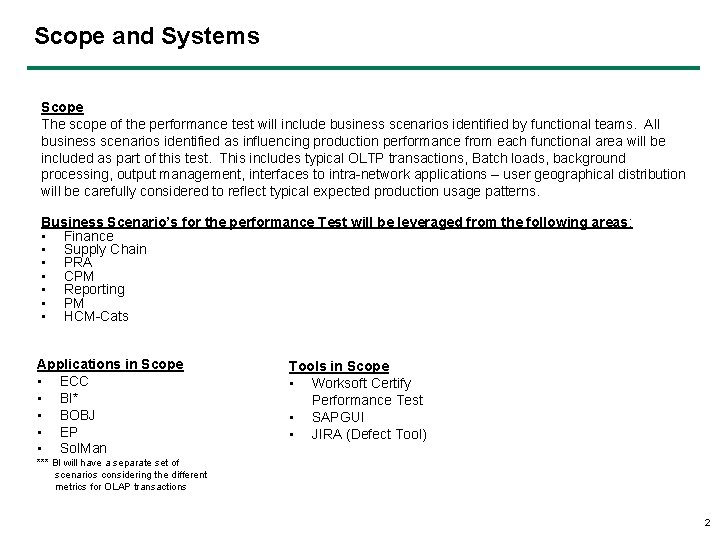

Scope and Systems

Scope: The performance test will cover the business scenarios identified by the functional teams. Every business scenario from each functional area that is identified as influencing production performance will be included in this test. This includes typical OLTP transactions, batch loads, background processing, output management, and interfaces to intra-network applications. User geographical distribution will be carefully considered so that the test reflects expected production usage patterns.

Business scenarios for the performance test will be drawn from the following areas:
- Finance
- Supply Chain
- PRA
- CPM
- Reporting
- PM
- HCM-CATS

Applications in scope:
- ECC
- BI (*)
- BOBJ
- EP
- Sol. Man

Tools in scope:
- Worksoft Certify Performance Test
- SAPGUI
- JIRA (defect tool)

(*) BI will have a separate set of scenarios, given the different metrics for OLAP transactions.

Performance Testing Objectives

- Verify a load of 60 K dialog steps per hour with an average response time of less than 1 second.
- Measure the average response time at 90 K, 120 K, and 150 K dialog steps per hour.
- Measure GUI time with network delay simulated for locations XXX and YYY.
- Stress test the application servers.
- Test HA failover.
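The first objective combines a throughput floor with a response-time ceiling. A minimal Python sketch of that check, assuming each run is summarized by its dialog-step count, its summed response time, and its duration. The `RunSummary` type and its field names are hypothetical, and the one-hour duration in the example is an assumption (the sample results do not state the run length):

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    """Hypothetical summary of one load-test run."""
    dialog_steps: int          # number of dialog steps executed
    total_response_s: float    # sum of all dialog-step response times, in seconds
    duration_h: float          # wall-clock length of the run, in hours


def dialog_steps_per_hour(run: RunSummary) -> float:
    return run.dialog_steps / run.duration_h


def avg_response_ms(run: RunSummary) -> float:
    return run.total_response_s / run.dialog_steps * 1000


def meets_objective(run: RunSummary,
                    target_steps_per_h: int = 60_000,
                    max_avg_ms: float = 1000.0) -> bool:
    """Objective 1: at least 60 K dialog steps/hour with < 1 s average response time."""
    return (dialog_steps_per_hour(run) >= target_steps_per_h
            and avg_response_ms(run) < max_avg_ms)


# The 130-user sample run, ASSUMING it lasted one hour.
run_130 = RunSummary(dialog_steps=65_829, total_response_s=8_229, duration_h=1.0)
print(round(avg_response_ms(run_130)))  # 125
print(meets_objective(run_130))         # True
```

The same helper can be reused for the 90 K, 120 K, and 150 K measurements by passing a different `target_steps_per_h`.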

Assumptions

- There will be no changes to requirements during or after the Performance Test.
- The Performance Test environment has all the infrastructure, software, and licenses required to simulate production-like conditions.
- The Performance Test architecture reflects the production architecture.
- The Performance Test team (Basis team) will communicate test timeframes to all appropriate teams.
- The available latency data, which will be incorporated into the Performance Test to simulate the geographical distribution of users, is relevant and up to date.
- Product testing of all major business scenarios that affect production performance will be completed before Performance Test execution begins.
- The test will be managed from XXX; we will simulate load from YYY and ZZZ.
- The data conversion team will monitor and address the performance of data conversion procedures during their scheduled conversion tests.
- Application Product Test (APT) 1 will be completed, before Performance Test execution, for all business scenarios identified for Performance Test usage. This ensures each scenario is functionally valid and avoids inaccurate Performance Test results.
- Performance Test activities will be staggered to match the completion of APT 1 and APT 2. Once a scenario has been completed in APT 1/2, Performance Test preparation and execution activities will begin for that scenario.
- The Performance Test will be executed before moving into User Test.
- JIRA will be used for all defects.
- Data will come from Mock 1.
- Reporting will be the same as in the other test phases.

Performance Requirements

Performance requirements for the Performance Test, as defined in the Platform_Services_for_SAP_ADW_(final) document by Microsoft:

- Dialog response time: 1000 ms or less
- HTTP response time: 2000 ms or less
- Database response time: 40% of dialog response time
- Batch response time: current duration or better
- I/O read service time: 10–15 ms
- I/O write service time: 1–5 ms
- Buffer cache hit ratio: 99%
- Page life expectancy: 350 seconds or higher

Monitoring: system resources will be monitored by the Basis and infrastructure teams.

Signoff: the result of each scenario will be evaluated by the project owner and signed off before the next scenario starts.
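To make the pass/fail evaluation for signoff repeatable, the thresholds can be encoded once and applied to each scenario's measurements. A minimal sketch; the metric key names are hypothetical, and two readings are assumptions rather than statements from the requirements document: "40% of dialog response time" and the I/O service-time ranges are treated as upper limits, and the 99% buffer cache hit ratio as a minimum:

```python
from __future__ import annotations


def check_requirements(m: dict[str, float]) -> dict[str, bool]:
    """Evaluate one set of measurements against the performance requirements.

    The metric keys are hypothetical names chosen for this sketch.
    """
    dialog = m["dialog_ms"]
    return {
        "dialog_response": dialog <= 1000,               # 1000 ms or less
        "http_response": m["http_ms"] <= 2000,           # 2000 ms or less
        # Assumption: "40% of dialog response time" is read as an upper bound.
        "db_share": m["db_ms"] <= 0.40 * dialog,
        "batch": m["batch_s"] <= m["baseline_batch_s"],  # current duration or better
        # Assumption: the I/O ranges are read as upper limits (faster is acceptable).
        "io_read": m["io_read_ms"] <= 15,
        "io_write": m["io_write_ms"] <= 5,
        "buffer_cache_hit": m["buffer_hit_pct"] >= 99.0,
        "page_life_expectancy": m["ple_s"] >= 350,
    }


sample = {  # hypothetical measurements, for illustration only
    "dialog_ms": 450, "http_ms": 1200, "db_ms": 150, "batch_s": 3300,
    "baseline_batch_s": 3600, "io_read_ms": 12, "io_write_ms": 3,
    "buffer_hit_pct": 99.4, "ple_s": 900,
}
failed = [name for name, ok in check_requirements(sample).items() if not ok]
print(failed)  # []
```

Returning a per-metric dictionary rather than a single boolean keeps the failed checks visible in the signoff record.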

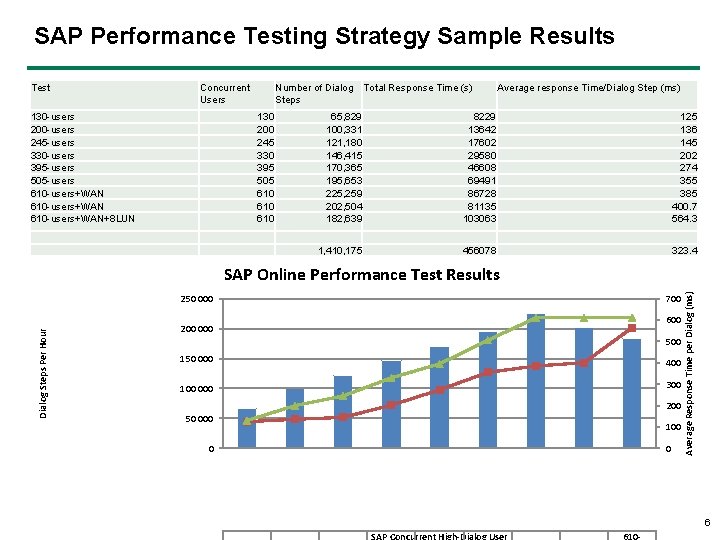

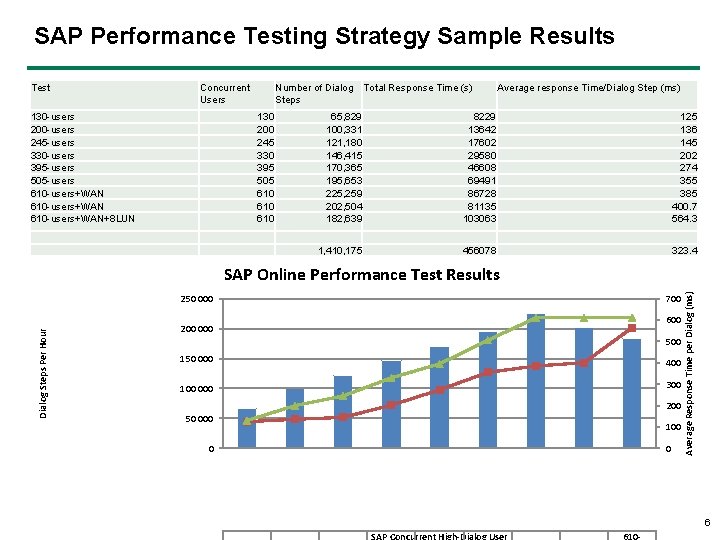

SAP Performance Testing Strategy Sample Results

SAP Online Performance Test Results (SAP concurrent high-dialog users):

| Test (concurrent users) | Number of Dialog Steps | Total Response Time (s) | Average Response Time/Dialog Step (ms) |
|---|---|---|---|
| 130 users | 65,829 | 8,229 | 125 |
| 200 users | 100,331 | 13,642 | 136 |
| 245 users | 121,180 | 17,602 | 145 |
| 330 users | 146,415 | 29,580 | 202 |
| 395 users | 170,365 | 46,608 | 274 |
| 505 users | 195,653 | 69,491 | 355 |
| 610 users | 225,259 | 86,728 | 385 |
| 610 users + WAN | 202,504 | 81,135 | 400.7 |
| 610 users + WAN + 8 LUN | 182,639 | 103,063 | 564.3 |
| Total | 1,410,175 | 456,078 | 323.4 (overall) |

Figure: Dialog Steps Per Hour and Average Response Time per Dialog (ms) for each test.
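The average response time column follows from the other two: average (ms) = total response time (s) / number of dialog steps × 1000, and the 323.4 ms overall figure is the step-weighted average across all runs. A short Python check that reproduces those numbers from the sample results (the variable and function names are ours):

```python
# (test, number of dialog steps, total response time in seconds),
# transcribed from the sample results slide above.
RESULTS = [
    ("130 users", 65_829, 8_229),
    ("200 users", 100_331, 13_642),
    ("245 users", 121_180, 17_602),
    ("330 users", 146_415, 29_580),
    ("395 users", 170_365, 46_608),
    ("505 users", 195_653, 69_491),
    ("610 users", 225_259, 86_728),
    ("610 users + WAN", 202_504, 81_135),
    ("610 users + WAN + 8 LUN", 182_639, 103_063),
]


def avg_ms(steps: int, total_s: float) -> float:
    """Average response time per dialog step, in milliseconds."""
    return total_s / steps * 1000


for label, steps, total_s in RESULTS:
    print(f"{label:<24} {avg_ms(steps, total_s):7.1f} ms")

# The overall average is weighted by dialog steps (total time / total steps),
# not the plain mean of the per-test averages.
total_steps = sum(steps for _, steps, _ in RESULTS)
total_time = sum(total_s for _, _, total_s in RESULTS)
print(total_steps, total_time, round(avg_ms(total_steps, total_time), 1))  # 1410175 456078 323.4
```

A plain mean of the nine per-test averages would give roughly 287 ms instead; only the step-weighted figure matches the total row.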

User Requirements

The current production system has a peak user base of 675 and an average daily user base of 500. Adding a 20% contingency, we will test with a peak usage of 810 users. For each scenario, we will test with an estimated average user base, to be determined by the testing team.
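The peak test load is the current peak plus the contingency margin: 675 × (1 + 0.20) = 810. As a tiny helper (the function name is ours) that rounds up, so a fractional result never under-sizes the test:

```python
def with_contingency(users: int, contingency_pct: int) -> int:
    """Scale a user count by a whole-number percentage, rounding up to whole users."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-users * (100 + contingency_pct) // 100)


print(with_contingency(675, 20))  # 810 (peak test load)
```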

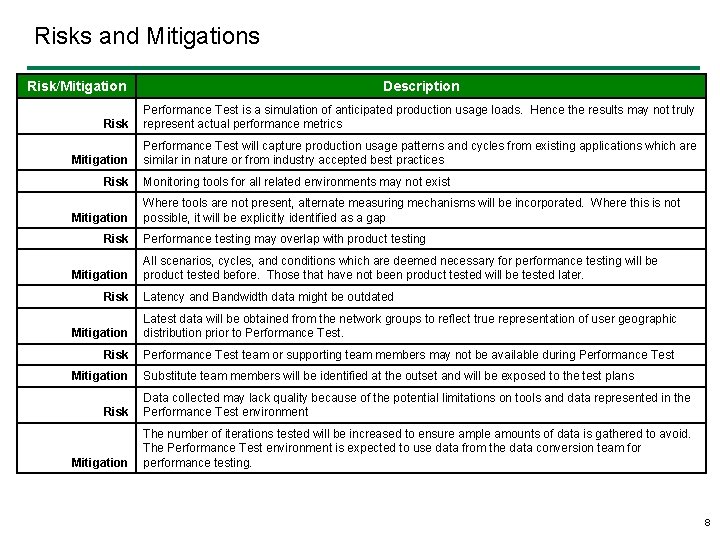

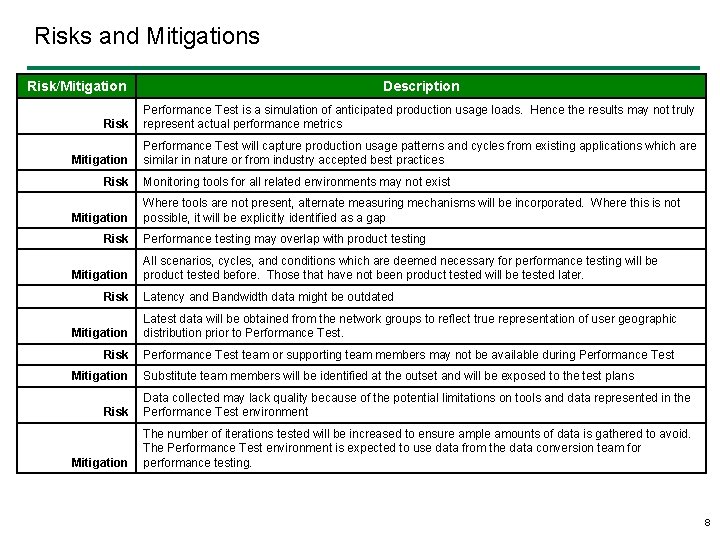

Risks and Mitigations

| Risk | Mitigation |
|---|---|
| The Performance Test is a simulation of anticipated production usage loads, so the results may not truly represent actual performance metrics. | The Performance Test will capture production usage patterns and cycles from existing applications that are similar in nature, or from industry-accepted best practices. |
| Monitoring tools may not exist for all related environments. | Where tools are not present, alternate measuring mechanisms will be incorporated. Where this is not possible, the gap will be explicitly identified. |
| Performance testing may overlap with product testing. | All scenarios, cycles, and conditions deemed necessary for performance testing will be product tested beforehand. Those that have not been product tested will be tested later. |
| Latency and bandwidth data might be outdated. | The latest data will be obtained from the network groups before the Performance Test, so that it truly represents the geographic distribution of users. |
| Performance Test team or supporting team members may not be available during the Performance Test. | Substitute team members will be identified at the outset and familiarized with the test plans. |
| Collected data may lack quality because of potential limitations of the tools and of the data represented in the Performance Test environment. | The number of test iterations will be increased to ensure that ample data is gathered. The Performance Test environment is expected to use data from the data conversion team. |

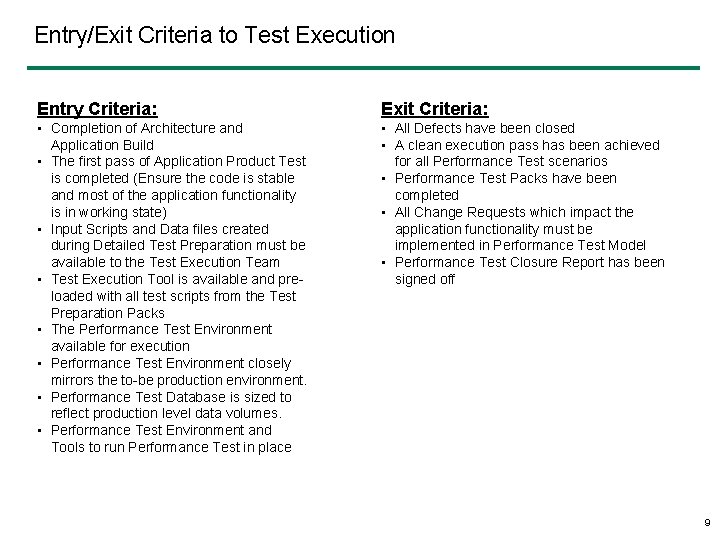

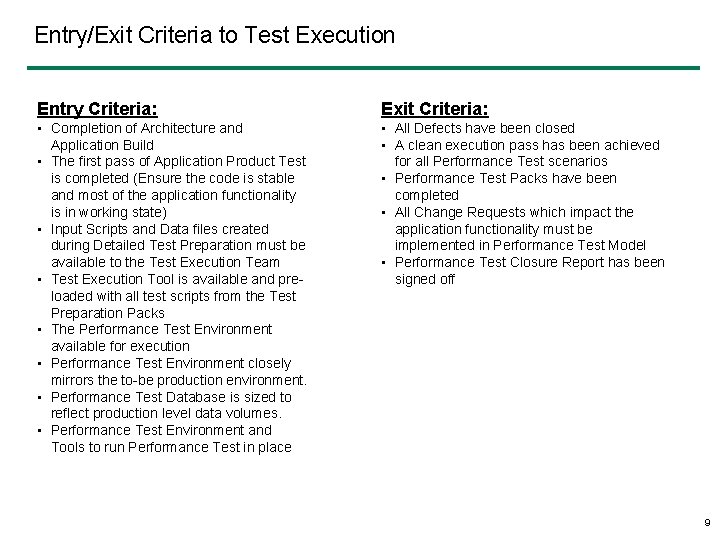

Entry/Exit Criteria to Test Execution

Entry criteria:
- Architecture and application build are complete.
- The first pass of Application Product Test is completed (the code is stable and most of the application functionality is in a working state).
- Input scripts and data files created during Detailed Test Preparation are available to the Test Execution Team.
- The test execution tool is available and preloaded with all test scripts from the Test Preparation Packs.
- The Performance Test environment is available for execution.
- The Performance Test environment closely mirrors the to-be production environment.
- The Performance Test database is sized to reflect production-level data volumes.
- The Performance Test environment and the tools to run the Performance Test are in place.

Exit criteria:
- All defects have been closed.
- A clean execution pass has been achieved for all Performance Test scenarios.
- Performance Test Packs have been completed.
- All change requests that impact application functionality have been implemented in the Performance Test model.
- The Performance Test Closure Report has been signed off.

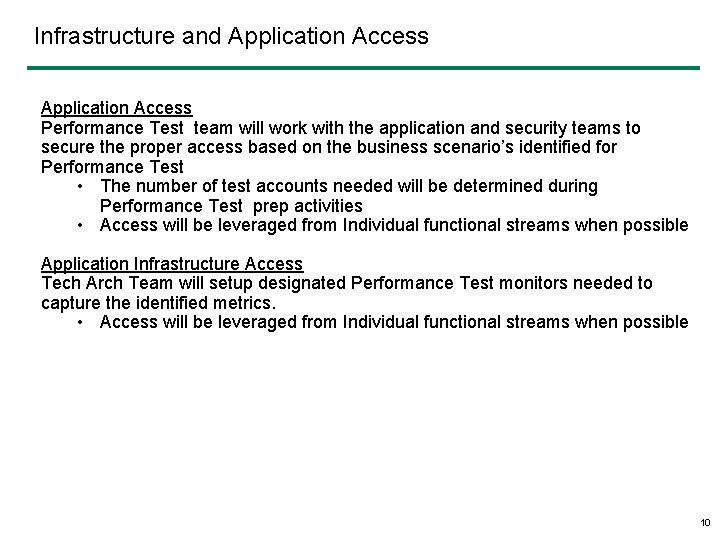

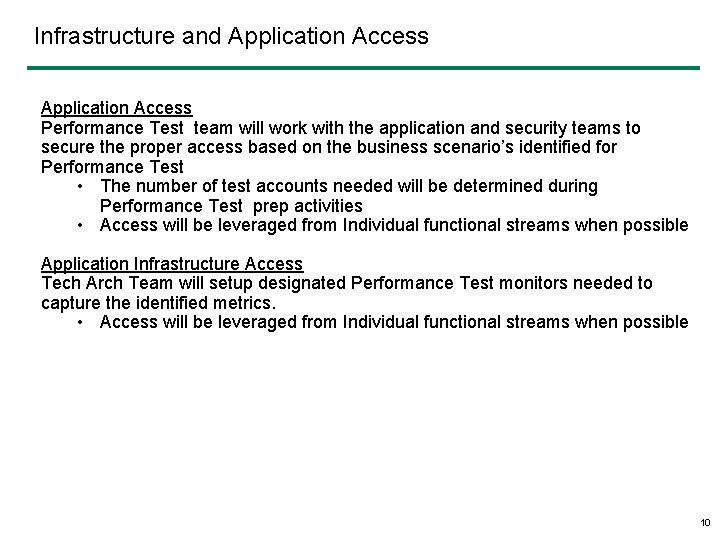

Infrastructure and Application Access

Application access: The Performance Test team will work with the application and security teams to secure the proper access based on the business scenarios identified for the Performance Test.
- The number of test accounts needed will be determined during Performance Test preparation activities.
- Access will be leveraged from individual functional streams when possible.

Infrastructure access: The Tech Arch team will set up the designated Performance Test monitors needed to capture the identified metrics.
- Access will be leveraged from individual functional streams when possible.

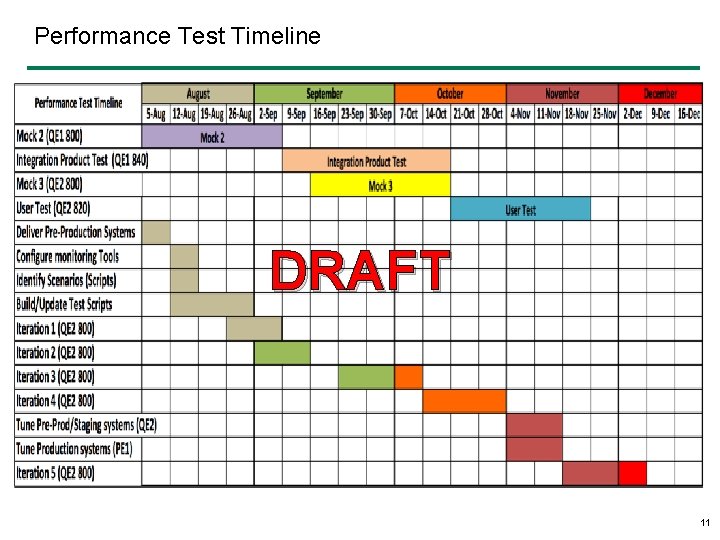

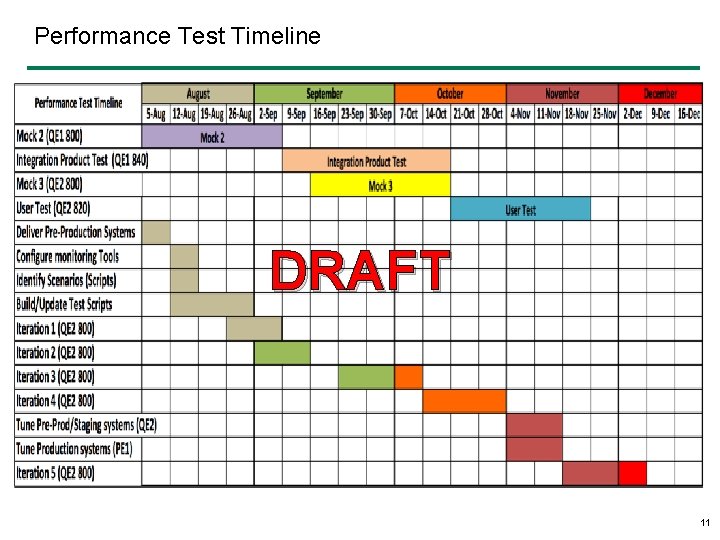

Performance Test Timeline (DRAFT)

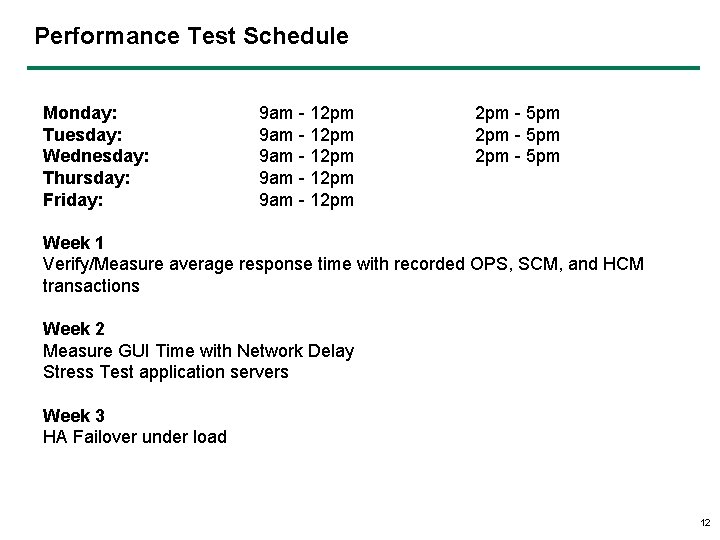

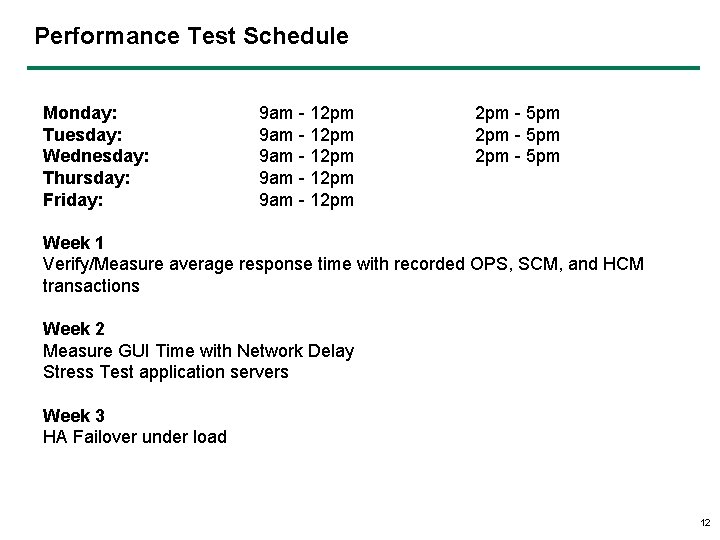

Performance Test Schedule

Test days: Monday to Friday, 9 am - 12 pm and 12 pm - 5 pm.

- Week 1: Verify and measure average response time with recorded OPS, SCM, and HCM transactions.
- Week 2: Measure GUI time with network delay; stress test the application servers.
- Week 3: HA failover under load.

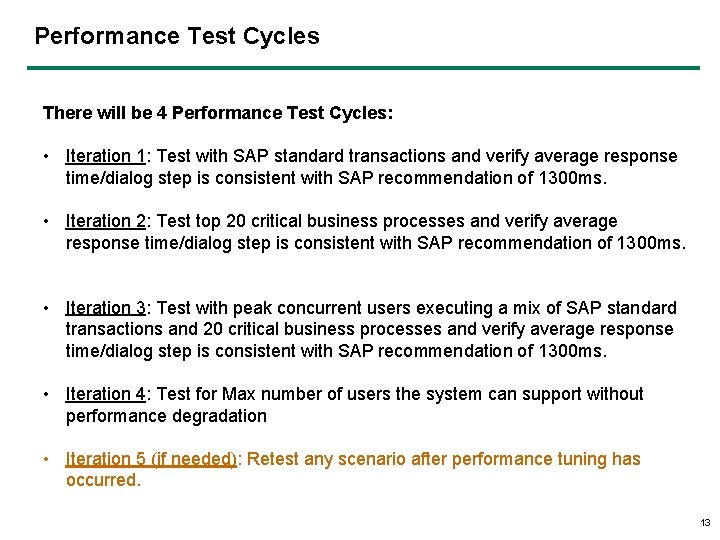

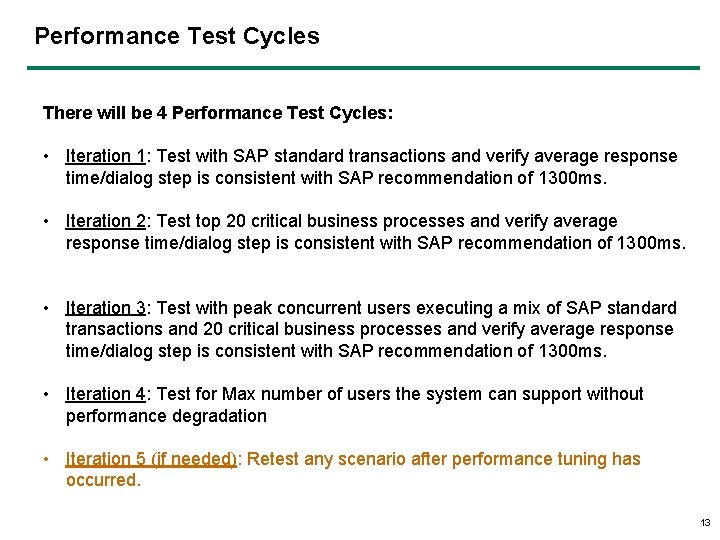

Performance Test Cycles

There will be four Performance Test cycles, plus an optional fifth:
- Iteration 1: Test with SAP standard transactions and verify that the average response time per dialog step is consistent with the SAP recommendation of 1300 ms.
- Iteration 2: Test the top 20 critical business processes and verify that the average response time per dialog step is consistent with the SAP recommendation of 1300 ms.
- Iteration 3: Test with peak concurrent users executing a mix of SAP standard transactions and the 20 critical business processes, and verify that the average response time per dialog step is consistent with the SAP recommendation of 1300 ms.
- Iteration 4: Test for the maximum number of users the system can support without performance degradation.
- Iteration 5 (if needed): Retest any scenario after performance tuning has occurred.

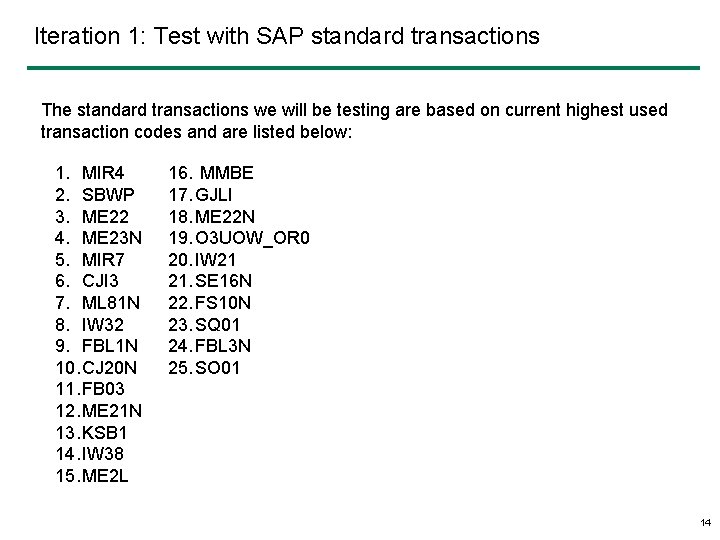

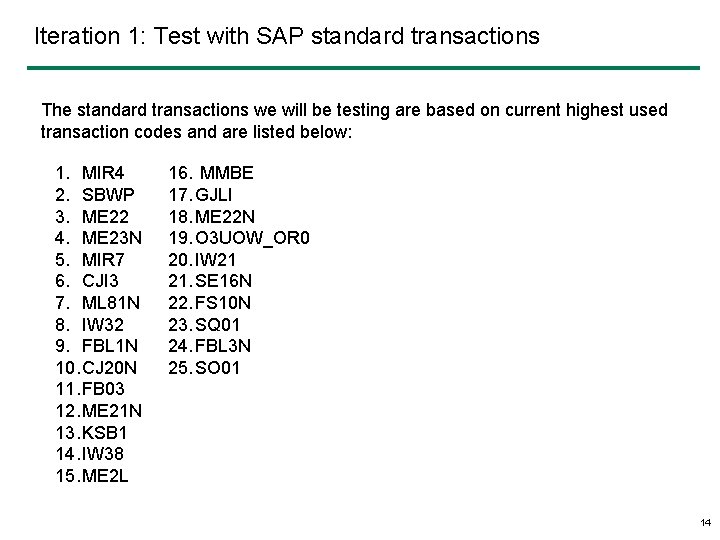

Iteration 1: Test with SAP Standard Transactions

The standard transactions we will test are based on the transaction codes currently used most, listed below:

1. MIR4
2. SBWP
3. ME22
4. ME23N
5. MIR7
6. CJI3
7. ML81N
8. IW32
9. FBL1N
10. CJ20N
11. FB03
12. ME21N
13. KSB1
14. IW38
15. ME2L
16. MMBE
17. GJLI
18. ME22N
19. O3UOW_OR0
20. IW21
21. SE16N
22. FS10N
23. SQ01
24. FBL3N
25. SO01

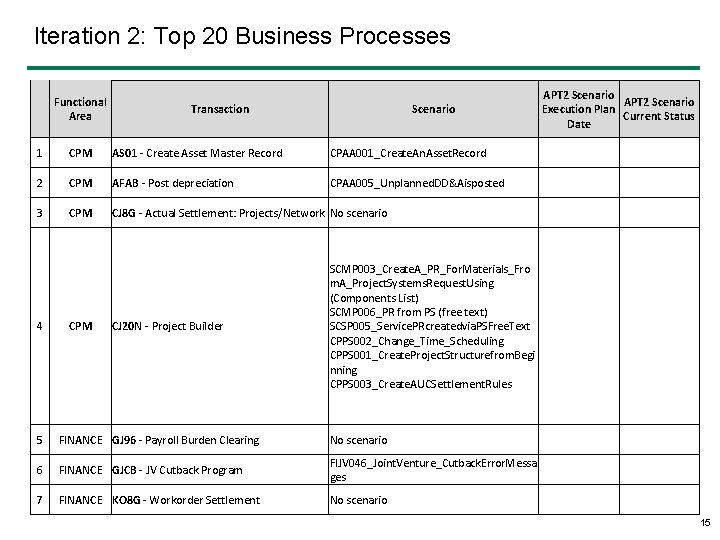

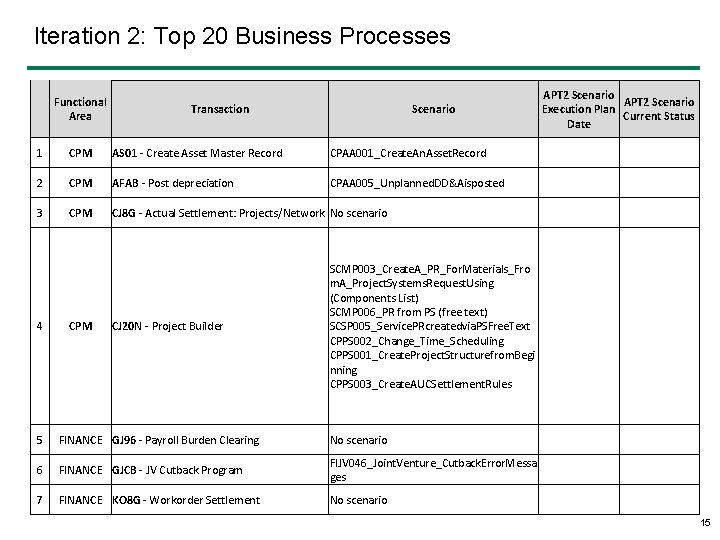

Iteration 2: Top 20 Business Processes

| # | Functional Area | Transaction | Scenario |
|---|---|---|---|
| 1 | CPM | AS01 - Create Asset Master Record | CPAA001_Create.An.Asset.Record |
| 2 | CPM | AFAB - Post depreciation | CPAA005_Unplanned.DD&Aisposted |
| 3 | CPM | CJ8G - Actual Settlement: Projects/Network | No scenario |
| 4 | CPM | CJ20N - Project Builder | SCMP003_Create.A_PR_For.Materials_From.A_Project.Systems.Request.Using (Components List); SCMP006_PR from PS (free text); SCSP005_Service.PRcreatedvia.PSFree.Text; CPPS002_Change_Time_Scheduling; CPPS001_Create.Project.Structurefrom.Beginning; CPPS003_Create.AUCSettlement.Rules |
| 5 | FINANCE | GJ96 - Payroll Burden Clearing | No scenario |
| 6 | FINANCE | GJCB - JV Cutback Program | FIJV046_Joint.Venture_Cutback.Error.Messages |
| 7 | FINANCE | KO8G - Workorder Settlement | No scenario |

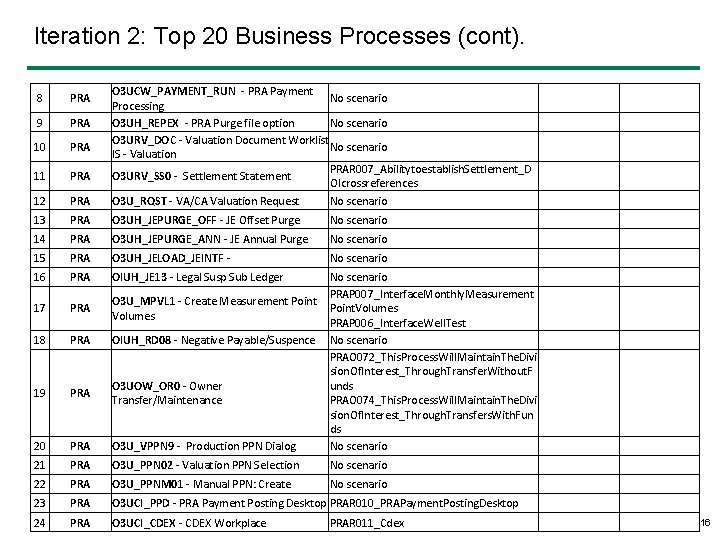

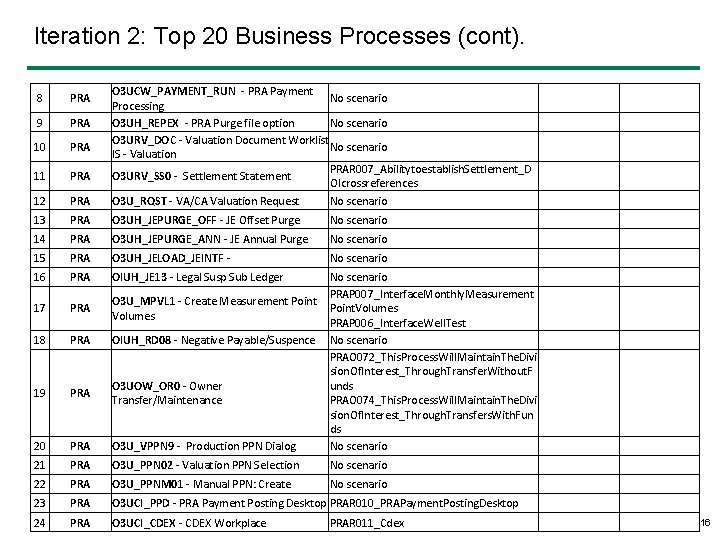

Iteration 2: Top 20 Business Processes (cont.)

| # | Functional Area | Transaction | Scenario |
|---|---|---|---|
| 8 | PRA | O3UCW_PAYMENT_RUN - PRA Payment Processing | No scenario |
| 9 | PRA | O3UH_REPEX - PRA Purge file option | No scenario |
| 10 | PRA | O3URV_DOC - Valuation Document Worklist IS - Valuation | No scenario |
| 11 | PRA | O3URV_SS0 - Settlement Statement | PRAR007_Abilitytoestablish.Settlement_DOIcrossreferences |
| 12 | PRA | O3U_RQST - VA/CA Valuation Request | No scenario |
| 13 | PRA | O3UH_JEPURGE_OFF - JE Offset Purge | No scenario |
| 14 | PRA | O3UH_JEPURGE_ANN - JE Annual Purge | No scenario |
| 15 | PRA | O3UH_JELOAD_JEINTF - | No scenario |
| 16 | PRA | OIUH_JE13 - Legal Susp Sub Ledger | No scenario |
| 17 | PRA | O3U_MPVL1 - Create Measurement Point Volumes | PRAP007_Interface.Monthly.Measurement Point.Volumes; PRAP006_Interface.Well.Test |
| 18 | PRA | OIUH_RD08 - Negative Payable/Suspense | No scenario |
| 19 | PRA | O3UOW_OR0 - Owner Transfer/Maintenance | PRAO072_This.Process.Will.Maintain.The.Division.Of.Interest_Through.Transfer.Without.Funds; PRAO074_This.Process.Will.Maintain.The.Division.Of.Interest_Through.Transfers.With.Funds |
| 20 | PRA | O3U_VPPN9 - Production PPN Dialog | No scenario |
| 21 | PRA | O3U_PPN02 - Valuation PPN Selection | No scenario |
| 22 | PRA | O3U_PPNM01 - Manual PPN: Create | No scenario |
| 23 | PRA | O3UCI_PPD - PRA Payment Posting Desktop | PRAR010_PRAPayment.Posting.Desktop |
| 24 | PRA | O3UCI_CDEX - CDEX Workplace | PRAR011_Cdex |

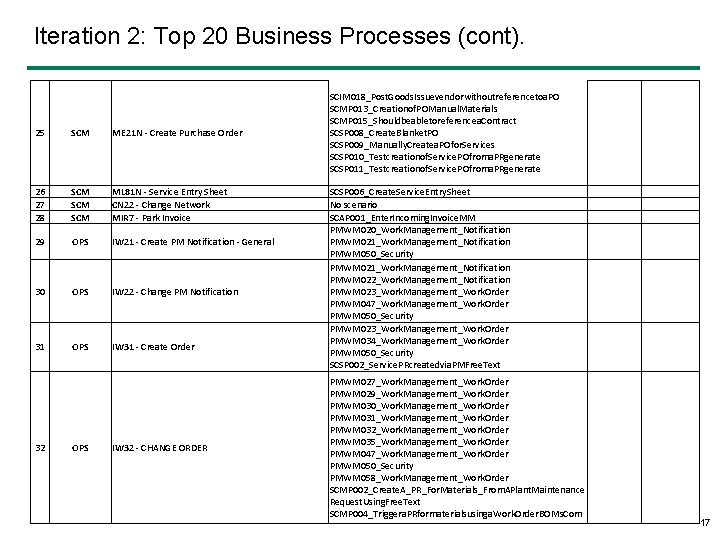

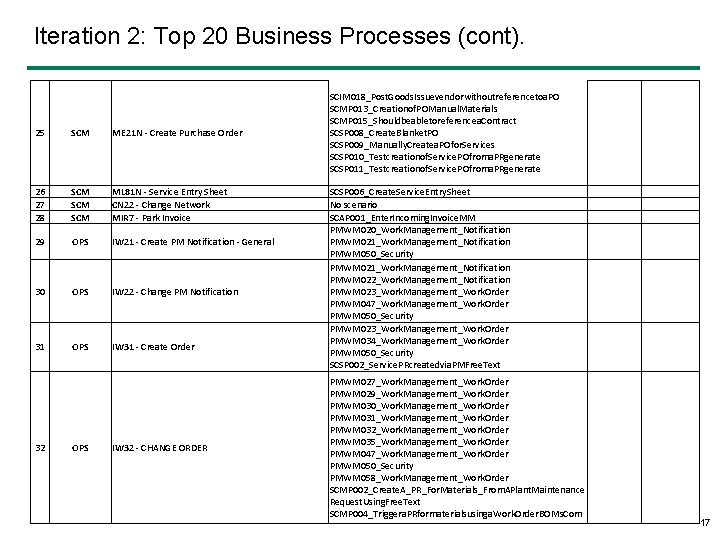

Iteration 2: Top 20 Business Processes (cont.)

| # | Functional Area | Transaction | Scenario |
|---|---|---|---|
| 25 | SCM | ME21N - Create Purchase Order | SCIM018_Post.Goods.Issuevendorwithoutreferencetoa.PO; SCMP013_Creationof.POManual.Materials; SCMP015_Shouldbeabletoreferencea.Contract; SCSP008_Create.Blanket.PO; SCSP009_Manually.Createa.POfor.Services; SCSP010_Testcreationof.Service.POfroma.PRgenerate; SCSP011_Testcreationof.Service.POfroma.PRgenerate |
| 26 | SCM | ML81N - Service Entry Sheet | SCSP006_Create.Service.Entry.Sheet |
| 27 | SCM | CN22 - Change Network | No scenario |
| 28 | SCM | MIR7 - Park Invoice | SCAP001_Enter.Incoming.Invoice.MM |
| 29 | OPS | IW21 - Create PM Notification - General | PMWM020_Work.Management_Notification; PMWM021_Work.Management_Notification; PMWM050_Security |
| 30 | OPS | IW22 - Change PM Notification | PMWM021_Work.Management_Notification; PMWM022_Work.Management_Notification; PMWM023_Work.Management_Work.Order; PMWM047_Work.Management_Work.Order; PMWM050_Security |
| 31 | OPS | IW31 - Create Order | PMWM023_Work.Management_Work.Order; PMWM034_Work.Management_Work.Order; PMWM050_Security; SCSP002_Service.PRcreatedvia.PMFree.Text |
| 32 | OPS | IW32 - Change Order | PMWM027_Work.Management_Work.Order; PMWM029_Work.Management_Work.Order; PMWM030_Work.Management_Work.Order; PMWM031_Work.Management_Work.Order; PMWM032_Work.Management_Work.Order; PMWM035_Work.Management_Work.Order; PMWM047_Work.Management_Work.Order; PMWM050_Security; PMWM058_Work.Management_Work.Order; SCMP002_Create.A_PR_For.Materials_From.APlant.Maintenance Request.Using.Free.Text; SCMP004_Triggera.PRformaterialsusinga.Work.Order.BOMs.Com |

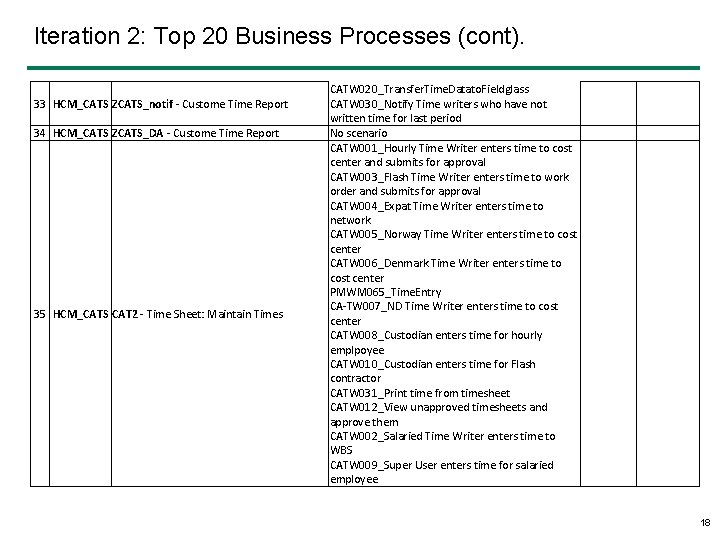

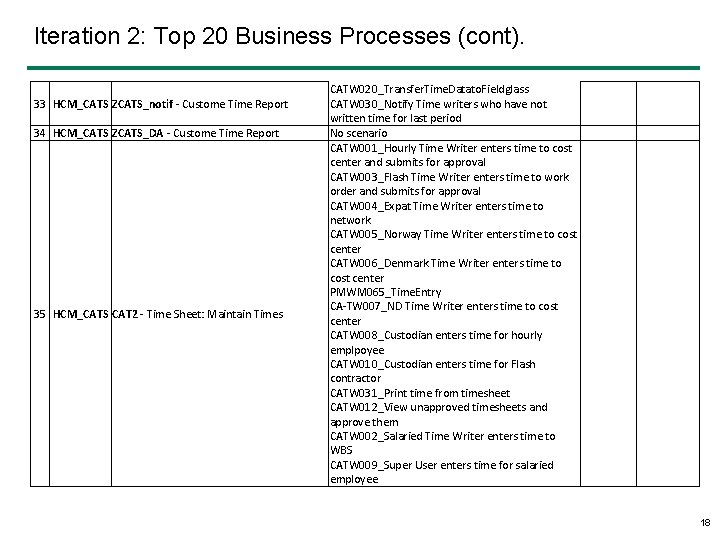

Iteration 2: Top 20 Business Processes (cont.)

| # | Functional Area | Transaction | Scenario |
|---|---|---|---|
| 33 | HCM_CATS | ZCATS_notif - Custom Time Report | CATW020_Transfer.Time.Datato.Fieldglass; CATW030_Notify Time writers who have not written time for last period |
| 34 | HCM_CATS | ZCATS_DA - Custom Time Report | No scenario |
| 35 | HCM_CATS | CAT2 - Time Sheet: Maintain Times | CATW001_Hourly Time Writer enters time to cost center and submits for approval; CATW003_Flash Time Writer enters time to work order and submits for approval; CATW004_Expat Time Writer enters time to network; CATW005_Norway Time Writer enters time to cost center; CATW006_Denmark Time Writer enters time to cost center; PMWM065_Time.Entry; CATW007_ND Time Writer enters time to cost center; CATW008_Custodian enters time for hourly employee; CATW010_Custodian enters time for Flash contractor; CATW031_Print time from timesheet; CATW012_View unapproved timesheets and approve them; CATW002_Salaried Time Writer enters time to WBS; CATW009_Super User enters time for salaried employee |

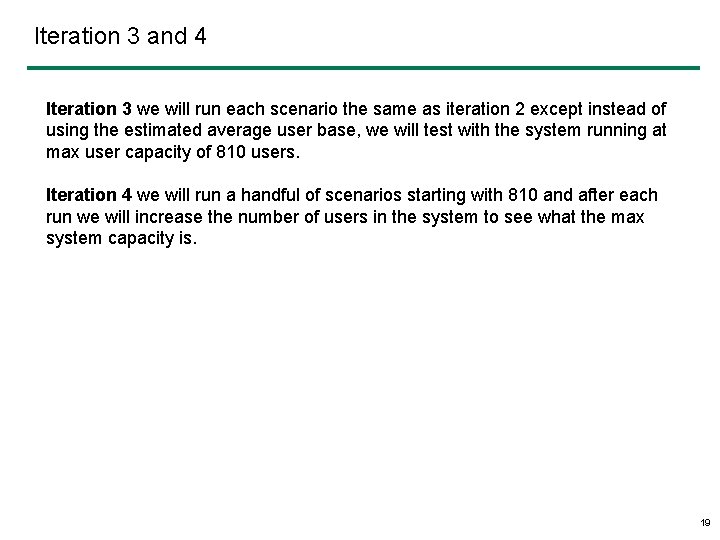

Iterations 3 and 4

In Iteration 3, we will run each scenario the same way as in Iteration 2, except that instead of the estimated average user base, we will test with the system running at the maximum user capacity of 810 users.

In Iteration 4, we will run a handful of scenarios starting with 810 users and, after each run, increase the number of users in the system to find the maximum system capacity.
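Iteration 4 is a step-load ramp. A minimal sketch of the ramp logic, assuming "performance degradation" means the average response time per dialog step rises above a threshold: 1000 ms by default, taken from the dialog response-time requirement (the 1300 ms SAP recommendation could be passed instead). `run_test`, the 50-user step, and the 5000-user ceiling are hypothetical placeholders, not values from this plan:

```python
from typing import Callable, Optional


def find_max_users(
    run_test: Callable[[int], float],  # hypothetical: runs one load test, returns avg response time (ms)
    start: int = 810,
    step: int = 50,
    max_avg_ms: float = 1000.0,
    limit: int = 5000,
) -> Optional[int]:
    """Raise the user count step by step until the average response time exceeds max_avg_ms.

    Returns the highest user count that stayed within the threshold, or None if
    even the starting load exceeds it.
    """
    best: Optional[int] = None
    users = start
    while users <= limit:
        if run_test(users) > max_avg_ms:
            break  # degradation threshold crossed; stop ramping
        best = users
        users += step
    return best


# Stand-in for a real test run: a made-up linear model, for illustration only.
def fake_run(users: int) -> float:
    return 0.5 * users


print(find_max_users(fake_run))  # 1960
```

The true capacity lies somewhere between the returned value and one step above it; a second pass with a smaller step over that interval narrows it down without rerunning the whole ramp.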

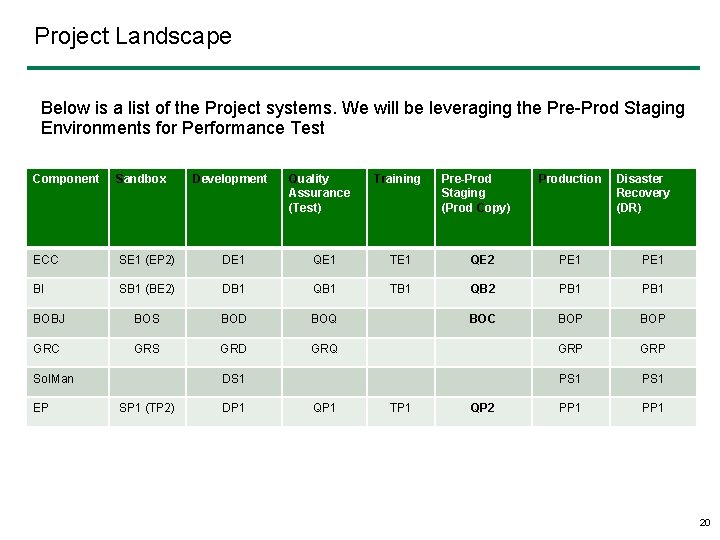

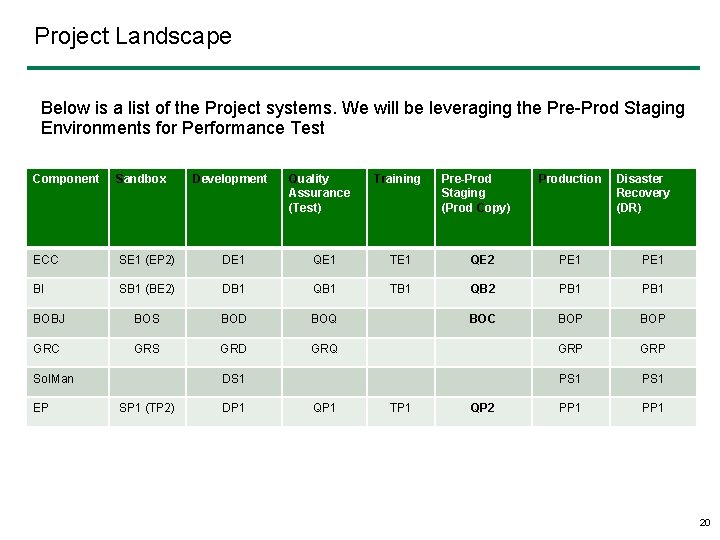

Project Landscape

Below is a list of the project systems. We will leverage the Pre-Prod Staging environments for the Performance Test.

| Component | Sandbox | Development | Quality Assurance (Test) | Training | Pre-Prod Staging (Prod Copy) | Production | Disaster Recovery (DR) |
|---|---|---|---|---|---|---|---|
| ECC | SE1 (EP2) | DE1 | QE1 | TE1 | QE2 | PE1 | |
| BI | SB1 (BE2) | DB1 | QB1 | TB1 | QB2 | PB1 | |
| BOBJ | BOS | BOD | BOQ | | BOC | BOP | |
| GRC | GRS | GRD | GRQ | | | GRP | |
| Sol. Man | | DS1 | | | | PS1 | |
| EP | SP1 (TP2) | DP1 | QP1 | TP1 | QP2 | PP1 | |

What is 97 000 in scientific notation

What is 97 000 in scientific notation 100 200 300 400 500 600 700 800 900

100 200 300 400 500 600 700 800 900 Express 602200 in scientific notation.

Express 602200 in scientific notation. 71 000 in scientific notation

71 000 in scientific notation 100 200 300 400 500 600

100 200 300 400 500 600 600+800+800

600+800+800 100 200 300 400 500

100 200 300 400 500 300 + 200 + 200

300 + 200 + 200 100 + 100 200

100 + 100 200 200+200+300+300

200+200+300+300 100+200+200

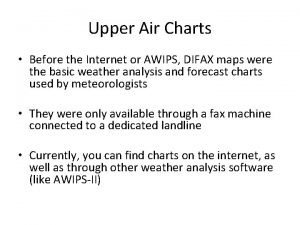

100+200+200 How to read upper air maps

How to read upper air maps Upper air 850, 700, 500 & 300 mb charts

Upper air 850, 700, 500 & 300 mb charts 300+300+400

300+300+400 300+300+400

300+300+400 300 square root

300 square root 300+300+400

300+300+400 400 + 300 + 300

400 + 300 + 300 300+300+400

300+300+400 300 300 400

300 300 400 600+400+500

600+400+500