20th Century Esfinge (Sphinx) solving the riddles


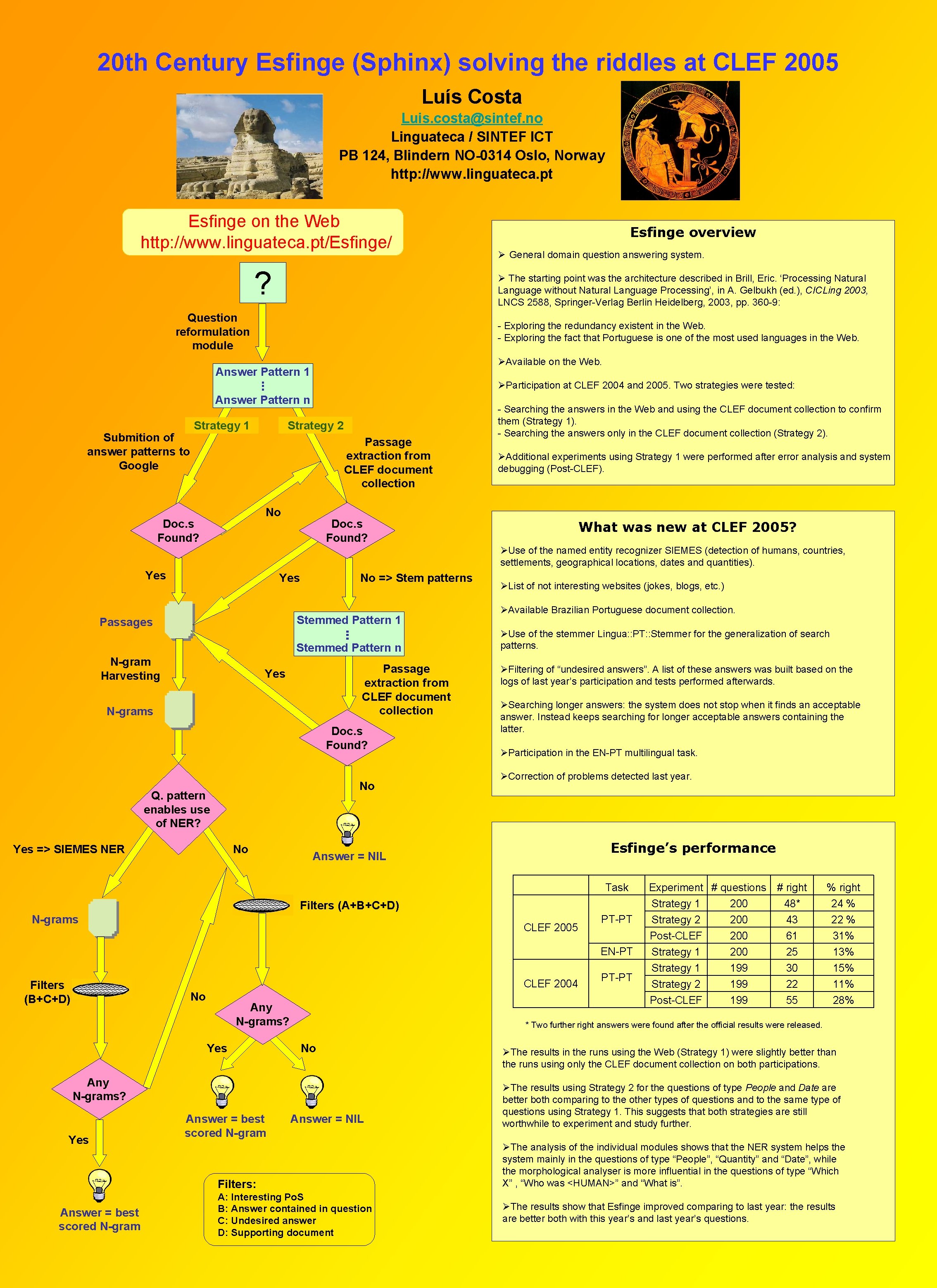

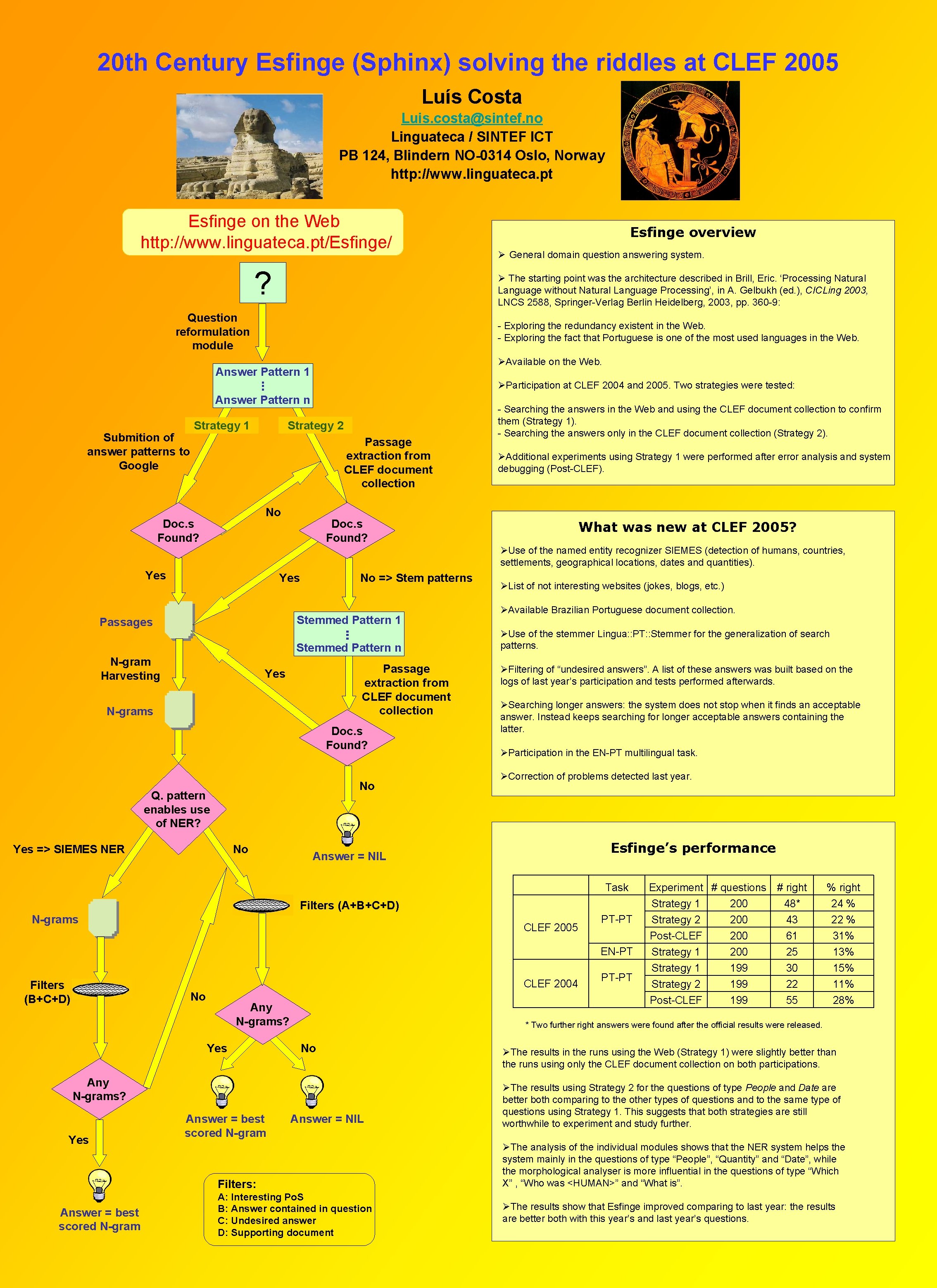

**20th Century Esfinge (Sphinx) solving the riddles at CLEF 2005**

Luís Costa (luis.costa@sintef.no), Linguateca / SINTEF ICT, PB 124, Blindern, NO-0314 Oslo, Norway, http://www.linguateca.pt

Esfinge on the Web: http://www.linguateca.pt/Esfinge/

**Esfinge overview**

- General domain question answering system.
- The starting point was the architecture described in Brill, Eric. 'Processing Natural Language without Natural Language Processing', in A. Gelbukh (ed.), CICLing 2003, LNCS 2588, Springer-Verlag Berlin Heidelberg, 2003, pp. 360-9:
  - Exploring the redundancy existing in the Web.
  - Exploring the fact that Portuguese is one of the most used languages in the Web.
- Available on the Web.
- Participation at CLEF 2004 and 2005. Two strategies were tested:
  - Searching the answers in the Web and using the CLEF document collection to confirm them (Strategy 1).
  - Searching the answers only in the CLEF document collection (Strategy 2).
- Additional experiments using Strategy 1 were performed after error analysis and system debugging (Post-CLEF).

*Figure: Esfinge architecture (flowchart) with these components: question reformulation module; answer patterns 1..n; submission of answer patterns to Google (Strategy 1); passage extraction from the CLEF document collection; stemmed patterns 1..n when no documents are found; n-gram harvesting; SIEMES NER when the question pattern enables its use; filters (A+B+C+D or B+C+D); answer = best-scored n-gram, or NIL when no n-grams are left.*

Filters:
- A: Interesting PoS
- B: Answer contained in question
- C: Undesired answer
- D: Supporting document

**What was new at CLEF 2005?**

- Use of the named entity recognizer SIEMES (detection of humans, countries, settlements, geographical locations, dates and quantities).
- List of uninteresting websites (jokes, blogs, etc.).
- Available Brazilian Portuguese document collection.
- Use of the stemmer Lingua::PT::Stemmer for the generalization of search patterns.
- Filtering of "undesired answers". A list of these answers was built from the logs of last year's participation and from tests performed afterwards.
- Searching longer answers: the system does not stop when it finds an acceptable answer. Instead, it keeps searching for longer acceptable answers that contain it.
- Participation in the EN-PT multilingual task.
- Correction of problems detected last year.

**Esfinge's performance**

| Task | Experiment | # questions | # right | % right |
|---|---|---|---|---|
| CLEF 2005 PT-PT | Strategy 1 | 200 | 48* | 24% |
| CLEF 2005 PT-PT | Strategy 2 | 200 | 43 | 22% |
| CLEF 2005 PT-PT | Post-CLEF | 200 | 61 | 31% |
| CLEF 2005 EN-PT | Strategy 1 | 200 | 25 | 13% |
| CLEF 2004 PT-PT | Strategy 1 | 199 | 30 | 15% |
| CLEF 2004 PT-PT | Strategy 2 | 199 | 22 | 11% |
| CLEF 2004 PT-PT | Post-CLEF | 199 | 55 | 28% |

\* Two further right answers were found after the official results were released.

- In both participations, the runs using the Web (Strategy 1) scored slightly better than the runs using only the CLEF document collection (Strategy 2).
- For questions of type "People" and "Date", Strategy 2 gave better results than it did for the other question types. It also did better than Strategy 1 on those same question types. This suggests that both strategies are still worth experimenting with and studying further.
- The analysis of the individual modules shows that the NER system helps mainly with questions of type "People", "Quantity" and "Date". The morphological analyser has more influence on questions of type "Which X", "Who was `<HUMAN>`" and "What is".
- The results show that Esfinge improved compared to last year: the results are better with both this year's and last year's questions.
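The n-gram harvesting step (answer = best-scored n-gram, or NIL when no n-gram survives) can be illustrated with a small Python sketch. This is not Esfinge's code, and the names `tokenize`, `harvest_ngrams` and `best_ngram` are hypothetical. Scoring each n-gram by its raw frequency across the retrieved passages is an assumption in the spirit of Brill's redundancy-based approach; Esfinge's actual scoring may differ. The `keep` predicate stands in for whatever filters are applied. In the usage line it approximates filter B by rejecting n-grams that share a word with the question.

```python
import re
from collections import Counter
from typing import Callable


def tokenize(text: str) -> list[str]:
    # Lowercased word tokens; \w is Unicode-aware, so accented Portuguese letters survive.
    return re.findall(r"\w+", text.lower())


def harvest_ngrams(passages: list[str], max_n: int = 3) -> Counter:
    """Count every 1..max_n-gram occurring in the retrieved passages."""
    counts: Counter = Counter()
    for passage in passages:
        tokens = tokenize(passage)
        for n in range(1, max_n + 1):
            for i in range(len(tokens) - n + 1):
                counts[" ".join(tokens[i:i + n])] += 1
    return counts


def best_ngram(passages: list[str], keep: Callable[[str], bool], max_n: int = 3) -> str:
    """Return the highest-scoring n-gram accepted by `keep`, or "NIL" if none survives."""
    # Assumed scoring: raw frequency across passages (redundancy as the signal).
    scored = {g: c for g, c in harvest_ngrams(passages, max_n).items() if keep(g)}
    if not scored:
        return "NIL"
    # max() keeps the first maximum it sees, so ties go to the alphabetically first n-gram.
    return max(sorted(scored), key=lambda g: scored[g])


question = "Qual é a capital de Portugal?"
q_tokens = set(tokenize(question))
passages = [
    "Lisboa é a capital de Portugal",
    "A capital de Portugal é Lisboa",
    "Lisboa fica em Portugal",
]
# Keep only n-grams that share no word with the question.
print(best_ngram(passages, keep=lambda g: not set(g.split()) & q_tokens))  # lisboa
```

Because "lisboa" appears in all three passages, redundancy alone picks it out, even though no single passage is parsed.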
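The four answer filters (A: interesting PoS, B: answer contained in question, C: undesired answer, D: supporting document) can be read as a chain of predicates. The sketch below shows one way to compose them in Python, under clearly labelled assumptions. `FUNCTION_WORDS` is a hypothetical stand-in for a real part-of-speech tagger; it is neither SIEMES nor Esfinge's morphological analyser. Filter D uses a plain substring match against a toy document list, and all function names are illustrative.

```python
import re
from typing import Callable, Iterable

Filter = Callable[[str], bool]  # returns True to keep the candidate answer

# Hypothetical stand-in for a PoS tagger: function words an answer should not start or end with.
FUNCTION_WORDS = {"a", "o", "as", "os", "de", "do", "da", "dos", "das", "em", "e", "é", "que", "por"}


def words(text: str) -> list[str]:
    return re.findall(r"\w+", text.lower())


def interesting_pos(candidate: str) -> bool:
    """Filter A (approximation): reject candidates bounded by function words."""
    w = words(candidate)
    return bool(w) and w[0] not in FUNCTION_WORDS and w[-1] not in FUNCTION_WORDS


def not_in_question(question: str) -> Filter:
    """Filter B: reject candidates whose words all appear in the question."""
    q = set(words(question))
    return lambda c: not set(words(c)) <= q


def not_undesired(undesired: Iterable[str]) -> Filter:
    """Filter C: reject candidates found on a list of undesired answers."""
    banned = {u.lower() for u in undesired}
    return lambda c: c.lower() not in banned


def supported_by(documents: Iterable[str]) -> Filter:
    """Filter D: keep candidates occurring in at least one document (substring match)."""
    docs = [d.lower() for d in documents]
    return lambda c: any(c.lower() in d for d in docs)


def apply_filters(candidates: Iterable[str], filters: list[Filter]) -> list[str]:
    return [c for c in candidates if all(f(c) for f in filters)]


question = "Quem escreveu Os Lusíadas?"
docs = ["Os Lusíadas foram escritos por Luís de Camões."]
candidates = ["Luís de Camões", "Os Lusíadas", "Lusíadas", "de Camões", "poeta", "Camões"]
b, c, d = not_in_question(question), not_undesired(["poeta"]), supported_by(docs)

print(apply_filters(candidates, [interesting_pos, b, c, d]))  # ['Luís de Camões', 'Camões']
print(apply_filters(candidates, [b, c, d]))  # ['Luís de Camões', 'de Camões', 'Camões']
```

Writing each filter as an independent predicate makes it easy to run the full set (A+B+C+D) or the reduced set (B+C+D). The two calls show that dropping filter A lets "de Camões" through.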
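The "searching longer answers" behaviour (not stopping at the first acceptable answer, but looking for longer acceptable answers that contain it) might be sketched as a single pass over a ranked candidate list. The function names and the `acceptable` predicate are hypothetical, and "contains" is taken here to mean a contiguous run of tokens. This is one plausible reading of the poster, not Esfinge's documented procedure.

```python
from typing import Callable, Optional


def contains(longer: list[str], shorter: list[str]) -> bool:
    """True if `shorter` occurs as a contiguous token run inside `longer`."""
    n = len(shorter)
    return any(longer[i:i + n] == shorter for i in range(len(longer) - n + 1))


def pick_longest_acceptable(ranked: list[str], acceptable: Callable[[str], bool]) -> str:
    """Walk the ranked candidates; after the first acceptable answer, keep replacing it
    with any later acceptable candidate that is longer and contains it."""
    current: Optional[str] = None
    for cand in ranked:
        if not acceptable(cand):
            continue
        if current is None:
            current = cand
        elif len(cand.split()) > len(current.split()) and contains(cand.split(), current.split()):
            current = cand
    return current if current is not None else "NIL"


ranked = ["Camões", "poeta", "Luís de Camões", "Luís Vaz de Camões", "Vasco da Gama"]
print(pick_longest_acceptable(ranked, acceptable=lambda c: c != "poeta"))  # Luís de Camões
```

The replacement chains: once "Luís de Camões" becomes the current answer, only candidates containing that whole phrase can replace it. That is why "Luís Vaz de Camões" is not chosen. A single pass also only considers longer candidates ranked below the first acceptable one.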