2nd ACM Symposium on Cloud Computing Oct

- Slides: 19

2nd ACM Symposium on Cloud Computing, Oct 27, 2011
Small-World Datacenters
Ji-Yong Shin*, Bernard Wong+, and Emin Gün Sirer*
*Cornell University, +University of Waterloo

Motivation
• Conventional networks are hierarchical
  – Higher layers become bottlenecks
• Non-traditional datacenter networks are emerging
  – Fat Tree, VL2, DCell and BCube
    • Highly structured or sophisticated regular connections
    • Redesign of network protocols
  – CamCube (3D Torus)
    • High bandwidth and APIs exposing network architecture
    • Large number of network hops

Small-World Datacenters
• Regular + random connections
  – Based on a simple underlying grid
  – Achieves low network diameter
  – Enables content routing
• Characteristics
  – High bandwidth
  – Fault tolerant
  – Scalable

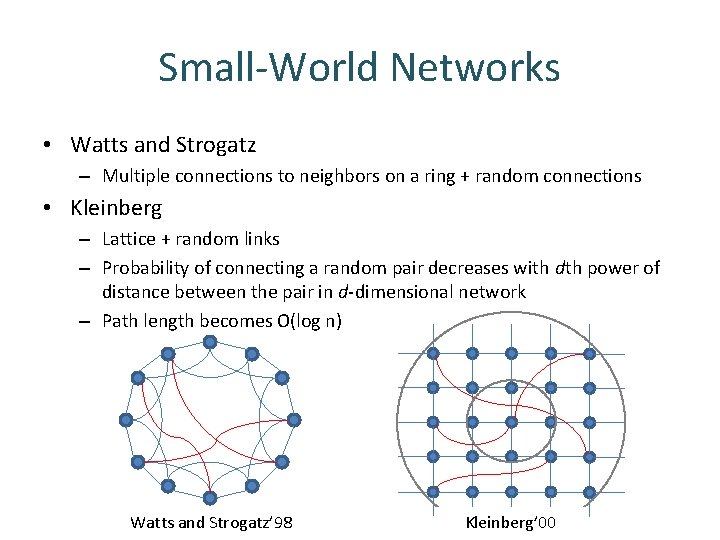

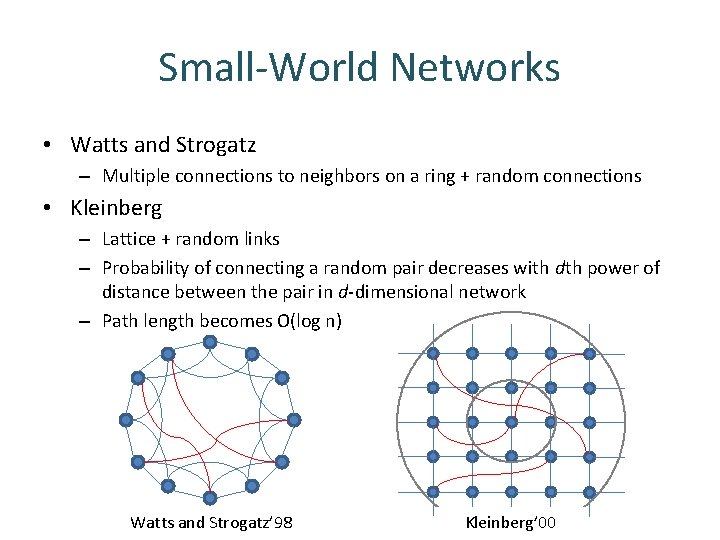

Small-World Networks
• Watts and Strogatz
  – Multiple connections to neighbors on a ring + random connections
• Kleinberg
  – Lattice + random links
  – Probability of connecting a random pair decreases with the dth power of the distance between the pair in a d-dimensional network
  – Path length becomes O(log n)
[Figure labels: Watts and Strogatz '98; Kleinberg '00]
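The Kleinberg rule above can be illustrated with a minimal sketch. It assumes a 2D torus lattice measured with Manhattan (hop) distance; the function names `torus_distance` and `sample_random_link` are hypothetical and are not taken from the talk or the paper.

```python
import random


def torus_distance(a, b, side):
    """Manhattan distance between grid points a and b on a side x side torus."""
    return sum(min(abs(x - y), side - abs(x - y)) for x, y in zip(a, b))


def sample_random_link(src, side, rng, dims=2):
    """Pick one long-range neighbor for src.

    The probability of choosing a node is proportional to distance ** -dims,
    i.e. it decays with the d-th power of lattice distance (d = dims).
    Assumption: a 2D torus, so candidate nodes are (x, y) pairs.
    """
    nodes = [(x, y) for x in range(side) for y in range(side) if (x, y) != src]
    weights = [torus_distance(src, n, side) ** -dims for n in nodes]
    return rng.choices(nodes, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    print(sample_random_link((0, 0), 8, rng))
```

Nearby nodes are chosen far more often than distant ones, but distant ones still appear occasionally, and those rare long links are what shorten paths.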

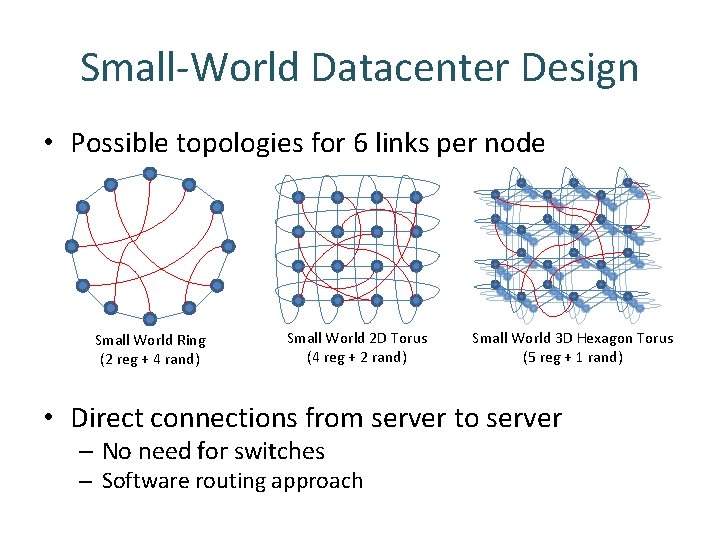

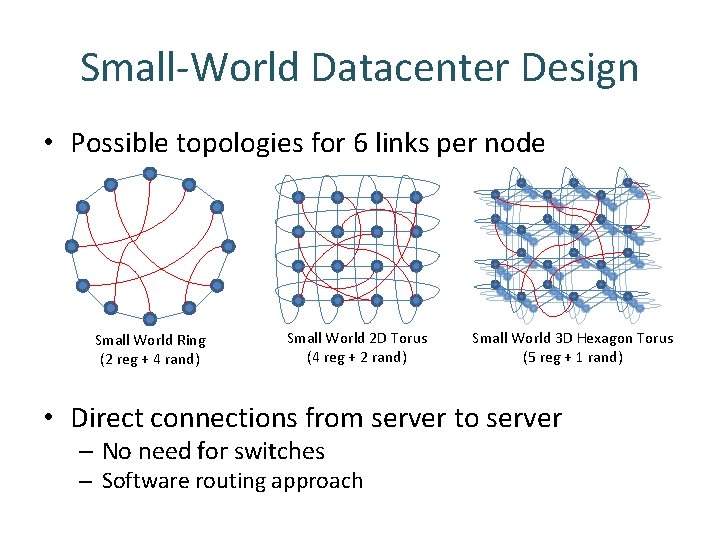

Small-World Datacenter Design
• Possible topologies for 6 links per node
  – Small World Ring (2 reg + 4 rand)
  – Small World 2D Torus (4 reg + 2 rand)
  – Small World 3D Hexagon Torus (5 reg + 1 rand)
• Direct connections from server to server
  – No need for switches
  – Software routing approach

Routing in Small-World Datacenters
• Shortest path
  – Link state protocol (OSPF)
  – Expensive due to TCAM cost
• Greedy geographical
  – Find min distance neighbor
  – Coordinates in lattice used as ID
  – Maintain info of 3 hop neighbors
  – Comparable to shortest path for 3D Hex Torus
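Greedy geographical routing can be sketched as follows. This is a simplified illustration, not the implementation from the talk: it assumes a 2D torus, draws random links uniformly, does not enforce the 6-port degree limit, and looks only at direct (1-hop) neighbors instead of the 3-hop neighborhood mentioned above. The names `build_sw_torus` and `greedy_route` are hypothetical.

```python
import random


def torus_distance(a, b, side):
    """Manhattan distance between grid points a and b on a side x side torus."""
    return sum(min(abs(x - y), side - abs(x - y)) for x, y in zip(a, b))


def build_sw_torus(side, rng):
    """Return an adjacency dict: 4 regular torus links per node plus random links.

    Assumption: each node adds one uniformly chosen random link, and links
    are bidirectional, so some nodes end up with more than one random link.
    """
    nodes = [(x, y) for x in range(side) for y in range(side)]
    adj = {n: set() for n in nodes}
    for x, y in nodes:
        for dx, dy in ((1, 0), (0, 1)):
            m = ((x + dx) % side, (y + dy) % side)
            adj[(x, y)].add(m)
            adj[m].add((x, y))
    for n in nodes:
        m = rng.choice([c for c in nodes if c != n])
        adj[n].add(m)
        adj[m].add(n)
    return adj


def greedy_route(adj, src, dst, side):
    """Forward to the neighbor whose lattice coordinates are closest to dst.

    A regular neighbor always reduces the distance by one, so the loop
    terminates; a random link is taken whenever it gets closer than that.
    """
    path = [src]
    while path[-1] != dst:
        nxt = min(adj[path[-1]], key=lambda n: torus_distance(n, dst, side))
        path.append(nxt)
    return path


if __name__ == "__main__":
    side = 6
    adj = build_sw_torus(side, random.Random(1))
    print(greedy_route(adj, (0, 0), (3, 3), side))
```

Because the node ID is its lattice coordinate, each hop needs only the neighbor list and the destination ID, with no global routing table.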

Content Routing in Small-Worlds
• Content routing
  – Logical coordinate space and network to map data
  – Logical and physical network do not necessarily match
• Geographical identity + simple regular network in SWDC
  – Logical topology can be mapped physically
  – Random links only accelerate routing
• SWDC can support DHT and key-value stores directly
  – Similar to CamCube
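One way to picture content routing on a lattice is to hash each key to a coordinate and store the value on the server at that coordinate. The sketch below is illustrative only: the use of SHA-256, the 2D coordinate space, and the function name `key_to_coordinate` are assumptions, not details from the talk.

```python
import hashlib


def key_to_coordinate(key, side, dims=2):
    """Map a string key to a lattice coordinate on a side^dims grid.

    Assumption: 4 bytes of a SHA-256 digest per dimension, reduced modulo
    the grid side. Any stable hash would serve the same purpose.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return tuple(
        int.from_bytes(digest[4 * i:4 * i + 4], "big") % side
        for i in range(dims)
    )


if __name__ == "__main__":
    print(key_to_coordinate("user:42", side=32))
```

The resulting coordinate can be used directly as the destination ID for greedy geographical routing, since servers are identified by their lattice coordinates.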

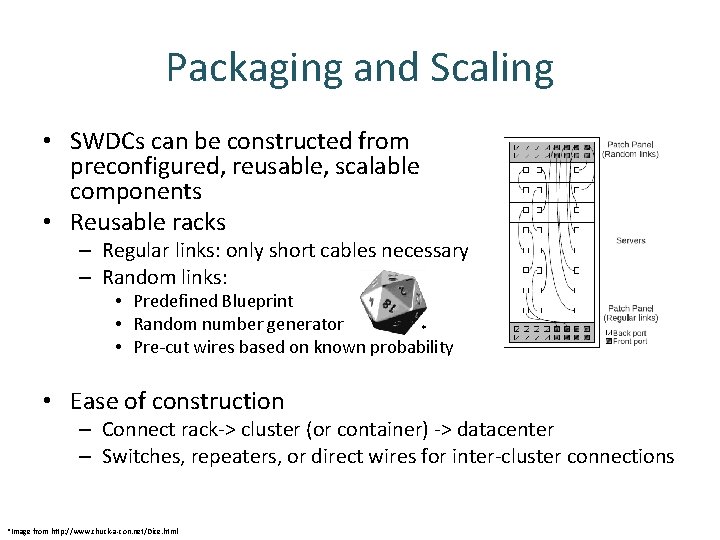

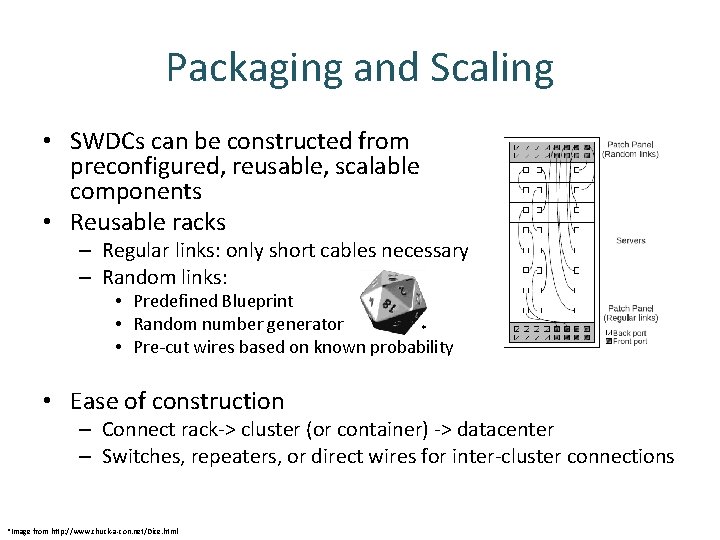

Packaging and Scaling
• SWDCs can be constructed from preconfigured, reusable, scalable components
• Reusable racks
  – Regular links: only short cables necessary
  – Random links:
    • Predefined blueprint
    • Random number generator*
    • Pre-cut wires based on known probability
• Ease of construction
  – Connect rack -> cluster (or container) -> datacenter
  – Switches, repeaters, or direct wires for inter-cluster connections
*Image from http://www.chuck-a-con.net/Dice.html

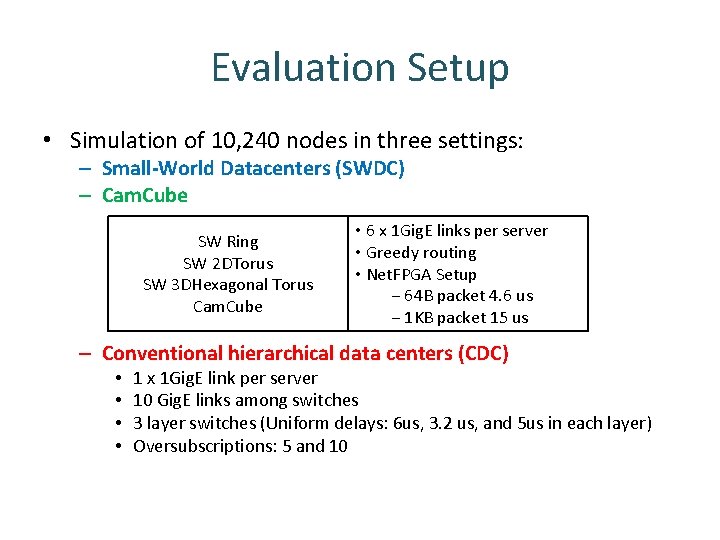

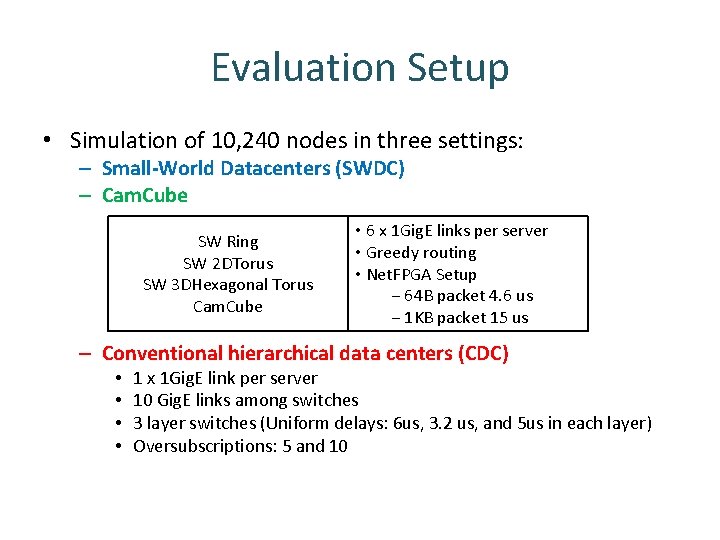

Evaluation Setup
• Simulation of 10,240 nodes in three settings:
  – Small-World Datacenters (SWDC)
  – CamCube
    • 6 x 1 GigE links per server
    • Greedy routing
    • NetFPGA setup
      − 64 B packet: 4.6 us
      − 1 KB packet: 15 us
  – Conventional hierarchical datacenters (CDC)
    • 1 x 1 GigE link per server
    • 10 GigE links among switches
    • 3 layers of switches (uniform delays: 6 us, 3.2 us, and 5 us in each layer)
    • Oversubscriptions: 5 and 10
[Figure labels: SW Ring, SW 2D Torus, SW 3D Hexagonal Torus, CamCube]

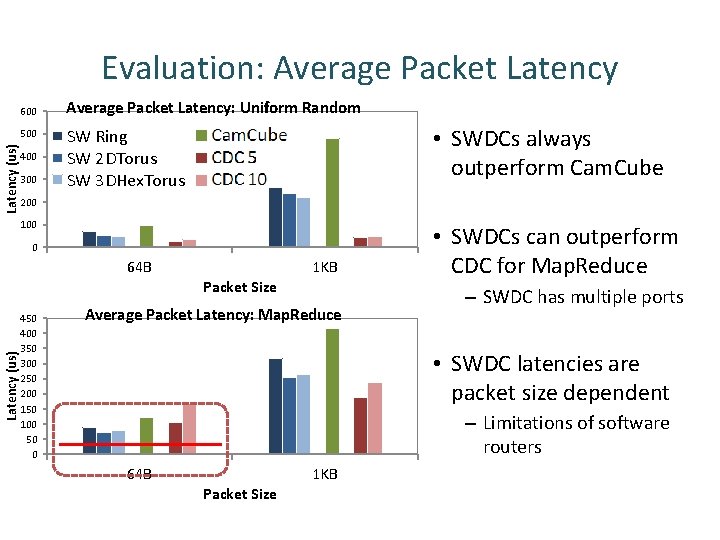

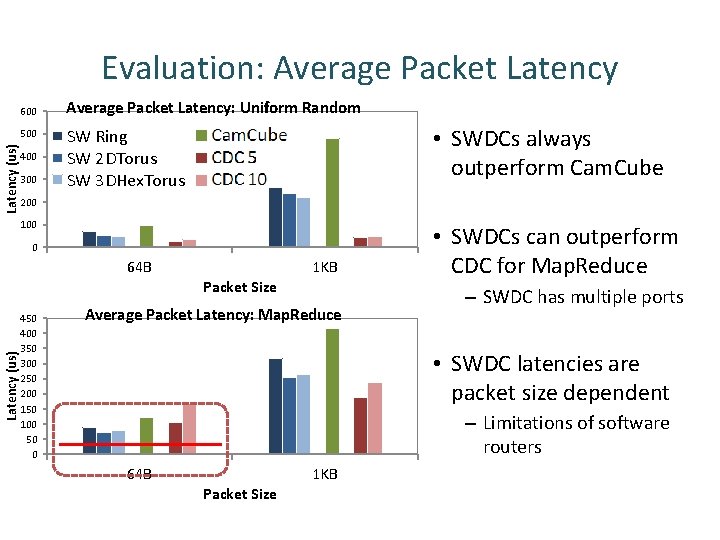

Evaluation: Average Packet Latency
[Figure: Average Packet Latency: Uniform Random. y-axis: Latency (us); x-axis: Packet Size (64 B, 1 KB); series: SW Ring, SW 2D Torus, SW 3D Hex Torus]
• SWDCs always outperform CamCube
[Figure: Average Packet Latency: MapReduce. y-axis: Latency (us); x-axis: Packet Size (64 B, 1 KB)]
• SWDCs can outperform CDC for MapReduce
  – SWDC has multiple ports
• SWDC latencies are packet size dependent
  – Limitations of software routers

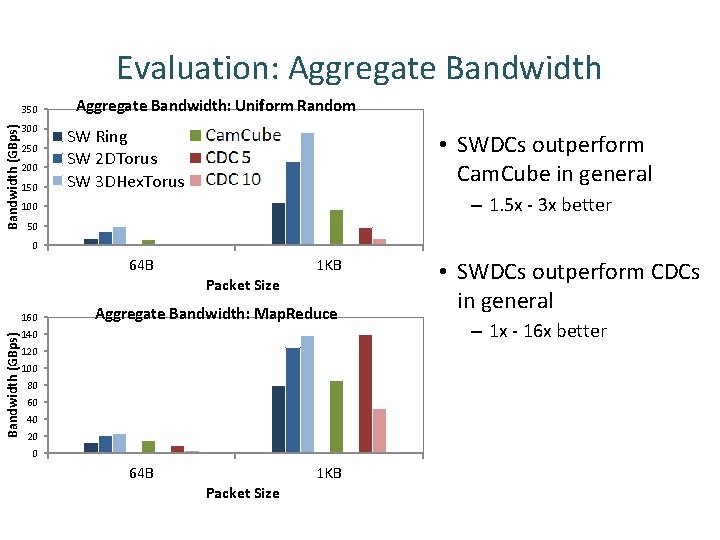

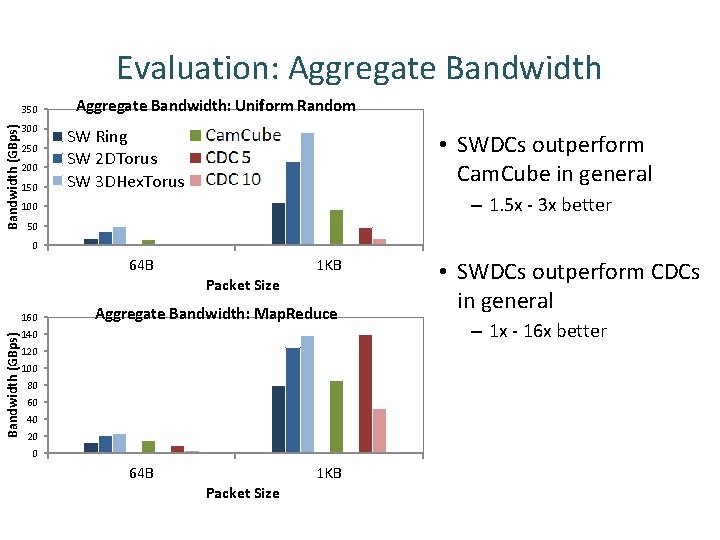

Evaluation: Aggregate Bandwidth
[Figure: Aggregate Bandwidth: Uniform Random. y-axis: Bandwidth (GBps); x-axis: Packet Size (64 B, 1 KB); series: SW Ring, SW 2D Torus, SW 3D Hex Torus]
• SWDCs outperform CamCube in general
  – 1.5x - 3x better
[Figure: Aggregate Bandwidth: MapReduce. y-axis: Bandwidth (GBps); x-axis: Packet Size (64 B, 1 KB)]
• SWDCs outperform CDCs in general
  – 1x - 16x better

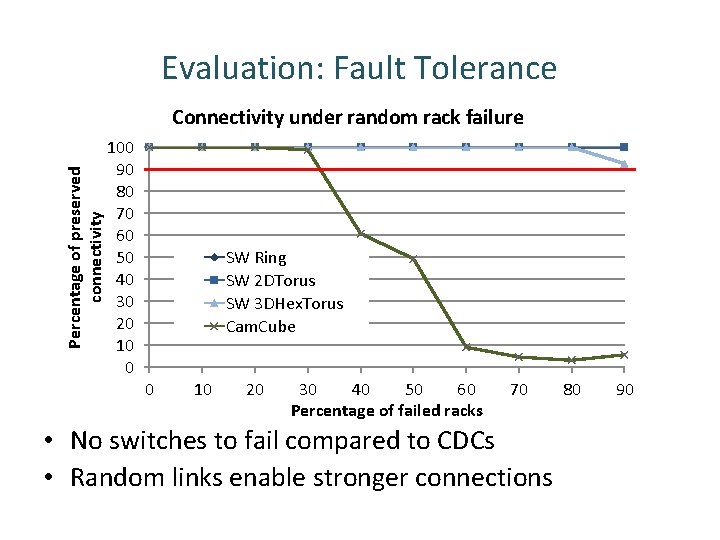

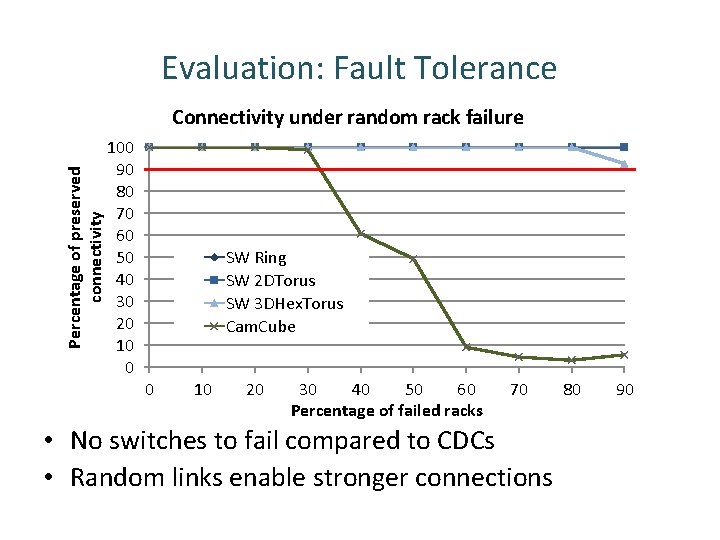

Evaluation: Fault Tolerance
[Figure: Connectivity under random rack failure. y-axis: Percentage of preserved connectivity; x-axis: Percentage of failed racks; series: SW Ring, SW 2D Torus, SW 3D Hex Torus, CamCube]
• No switches to fail compared to CDCs
• Random links enable stronger connections
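A connectivity measurement of this kind can be sketched as below. The metric definition is an assumption made for illustration (the fraction of live node pairs that still have a path between them), and the function name `preserved_connectivity` is hypothetical; the talk does not spell out how its percentage was computed.

```python
from collections import deque


def preserved_connectivity(adj, failed):
    """Fraction of live node pairs that remain connected after failures.

    adj maps each node to a set of neighbors; failed is a set of dead nodes.
    Assumption: connectivity is measured among live nodes only.
    """
    live = [n for n in adj if n not in failed]
    if len(live) < 2:
        return 1.0
    seen = set()
    connected_pairs = 0
    for start in live:
        if start in seen:
            continue
        # Breadth-first search over live nodes to size this component.
        seen.add(start)
        queue = deque([start])
        size = 0
        while queue:
            node = queue.popleft()
            size += 1
            for nb in adj[node]:
                if nb not in failed and nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        connected_pairs += size * (size - 1) // 2
    total_pairs = len(live) * (len(live) - 1) // 2
    return connected_pairs / total_pairs


if __name__ == "__main__":
    ring = {i: {(i - 1) % 6, (i + 1) % 6} for i in range(6)}
    print(preserved_connectivity(ring, {0, 3}))
    # Add one extra "random" link between nodes 1 and 4.
    ring[1].add(4)
    ring[4].add(1)
    print(preserved_connectivity(ring, {0, 3}))
```

In this toy 6-node ring, failing two opposite nodes splits the survivors into two halves, while a single added cross link keeps them all connected.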

Related Concurrent Work
• Scafida and Jellyfish
  – Rely on random connections
  – Achieve high bandwidth
• Comparison to SWDC
  – SWDCs have more regular links
  – Routing can be simpler

Summary
• An unorthodox topology comprising a mix of regular and random links can yield:
  – High performance
  – Fault tolerance
  – Ease of construction and scalability
• Issues of cost at scale, routing around failures, multipath routing, etc. are discussed in the paper

Extra: Path Length Comparison
[Figure: Average Path Length (10,240 nodes, error bar = stddev). y-axis: Path Length; series: Shortest, Greedy; groups: SW Ring, SW 2D Torus, SW 3D Hex Torus, CamCube]

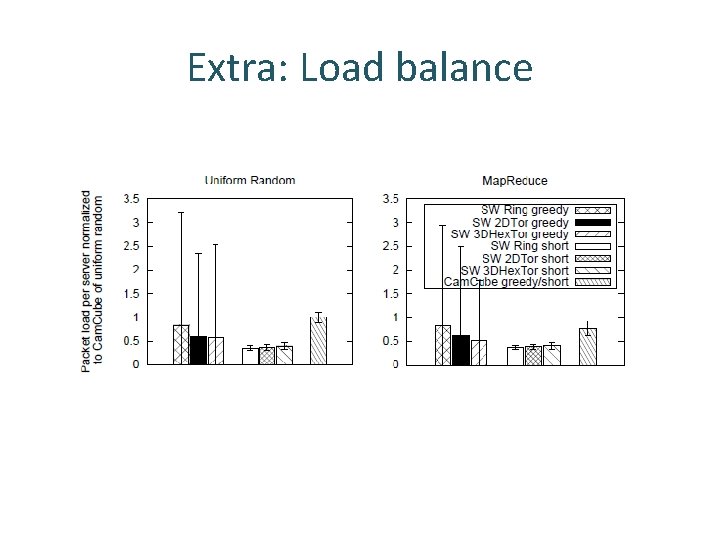

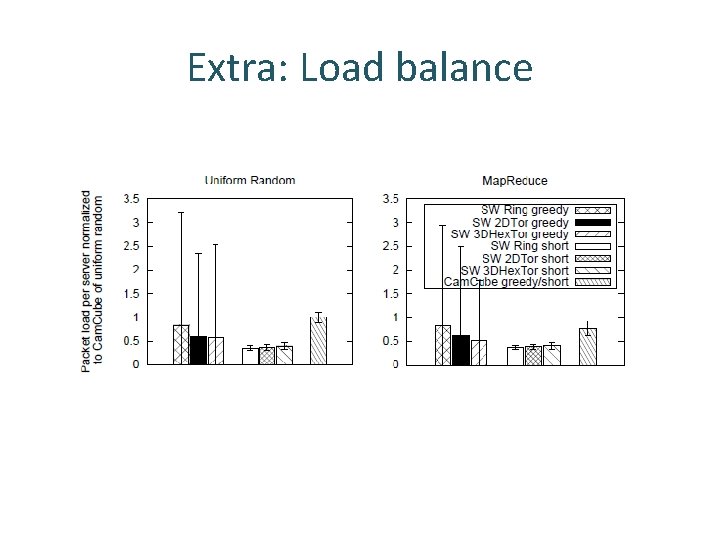

Extra: Load Balance

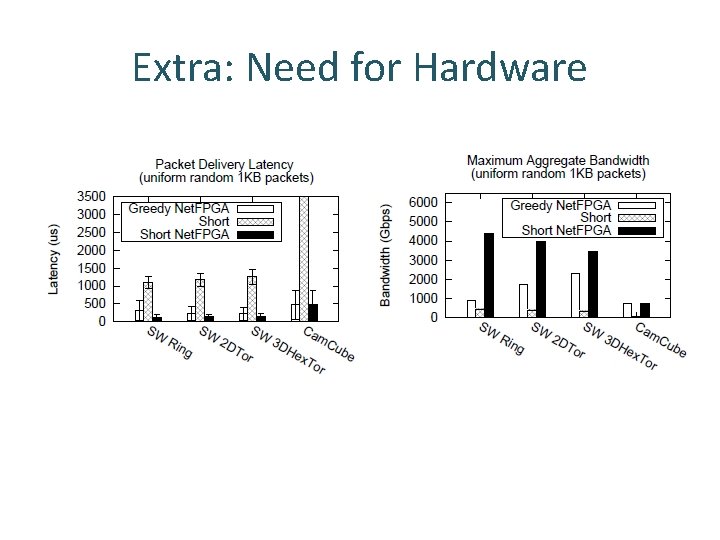

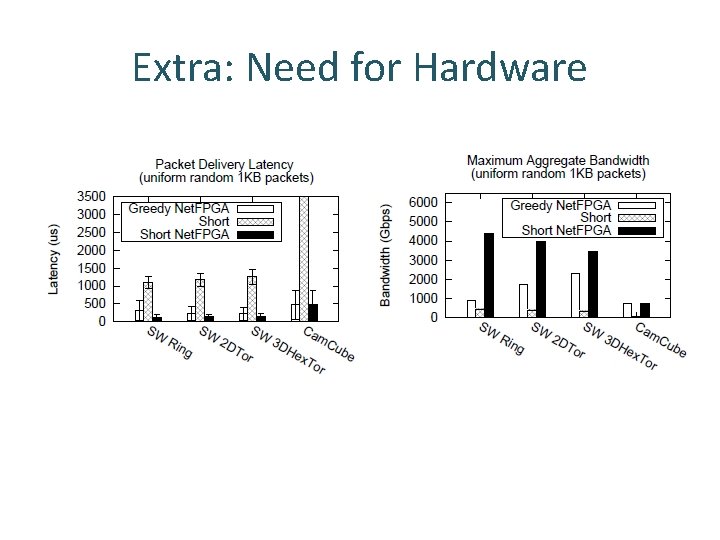

Extra: Need for Hardware

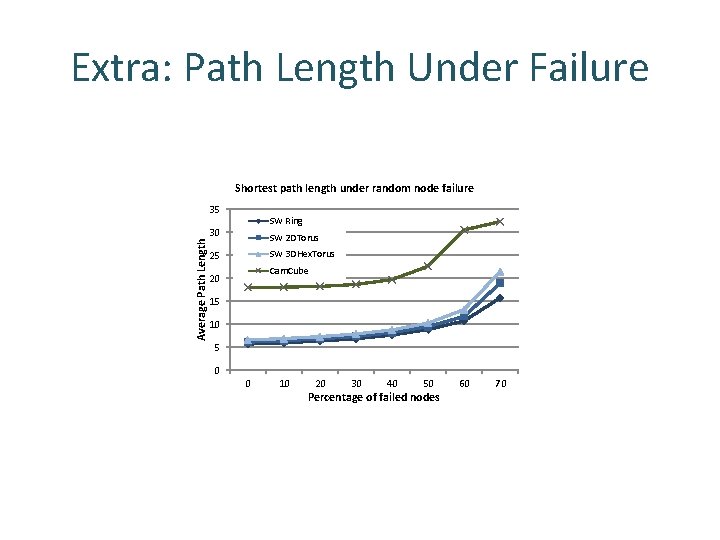

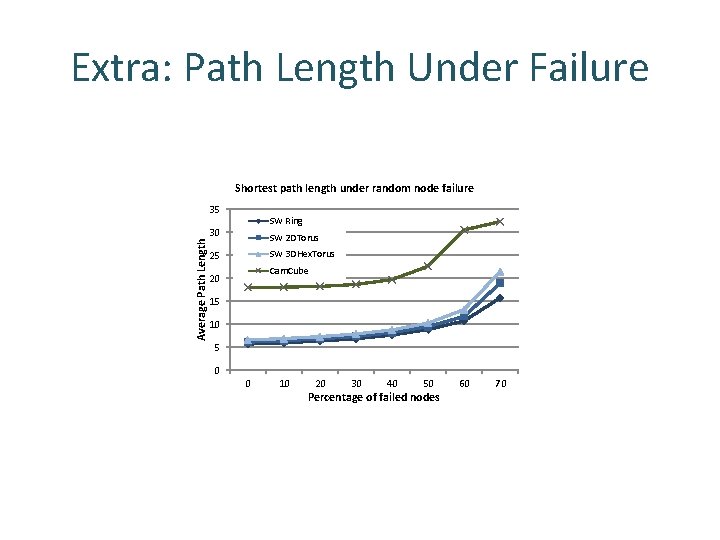

Extra: Path Length Under Failure
[Figure: Shortest path length under random node failure. y-axis: Average Path Length; x-axis: Percentage of failed nodes; series: SW Ring, SW 2D Torus, SW 3D Hex Torus, CamCube]

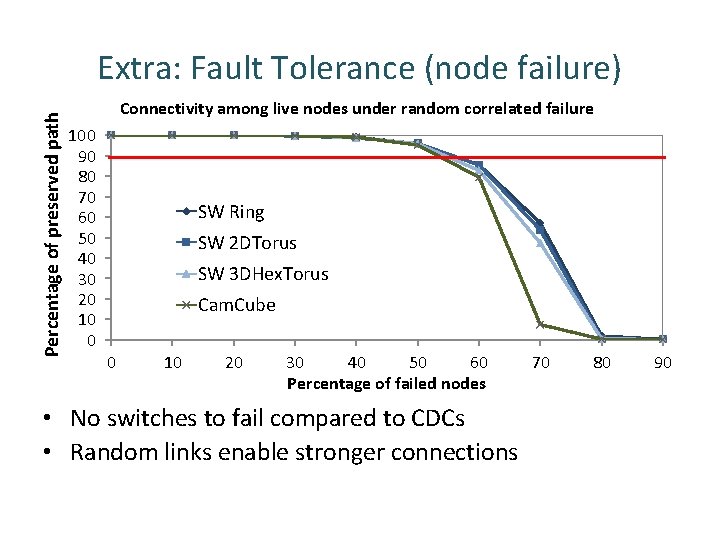

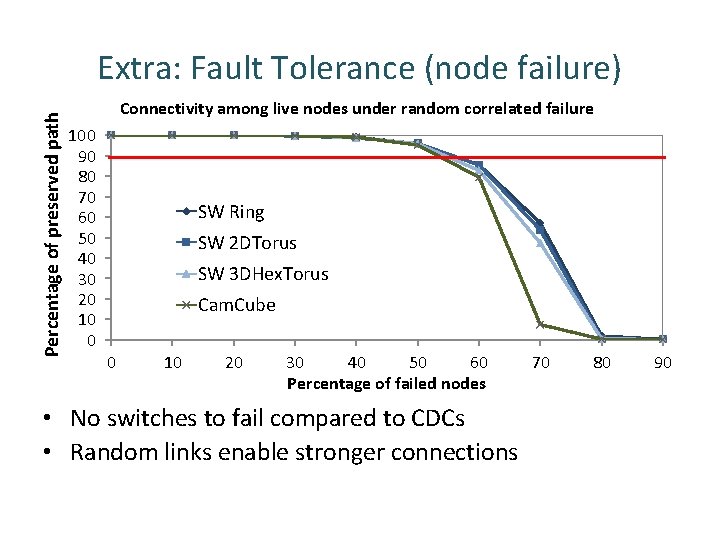

Extra: Fault Tolerance (node failure)
[Figure: Connectivity among live nodes under random correlated failure. y-axis: Percentage of preserved path; x-axis: Percentage of failed nodes; series: SW Ring, SW 2D Torus, SW 3D Hex Torus, CamCube]
• No switches to fail compared to CDCs
• Random links enable stronger connections