
- Slides: 86

2 Information Retrieval

Motivation

- Information Retrieval has been a field of activity for many years
- It was long seen as an area of narrow interest
- The advent of the Web changed this perception:
  - universal repository of knowledge
  - free (low-cost) universal access
  - no central editorial board
  - many problems, though: IR is seen as key to finding the solutions!

Prof. Dr. Knut Hinkelmann, Information Retrieval and Knowledge Organisation - 2 Information Retrieval

Motivation

- Information Retrieval: representation, storage, organization of, and access to information items
- Emphasis is on the retrieval of information (not data)
- Focus is on the user's information need
  - Example: find all documents containing information about car accidents that happened in Vienna and in which people were injured
- The information need is expressed as a query

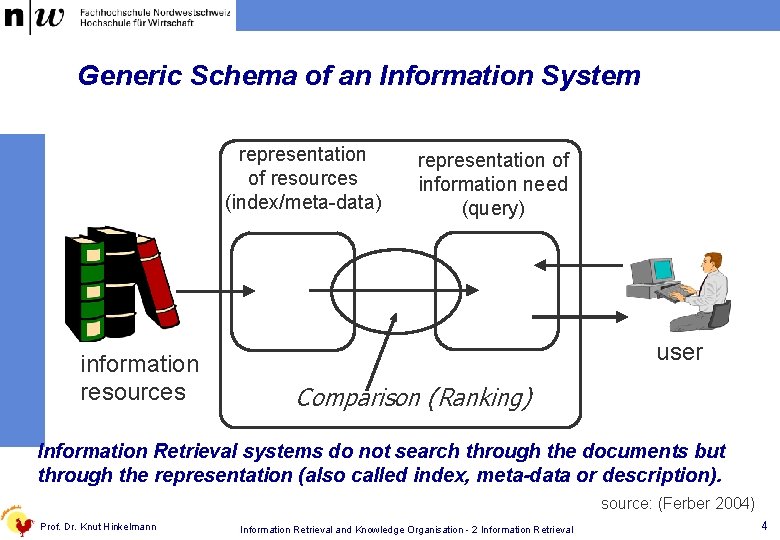

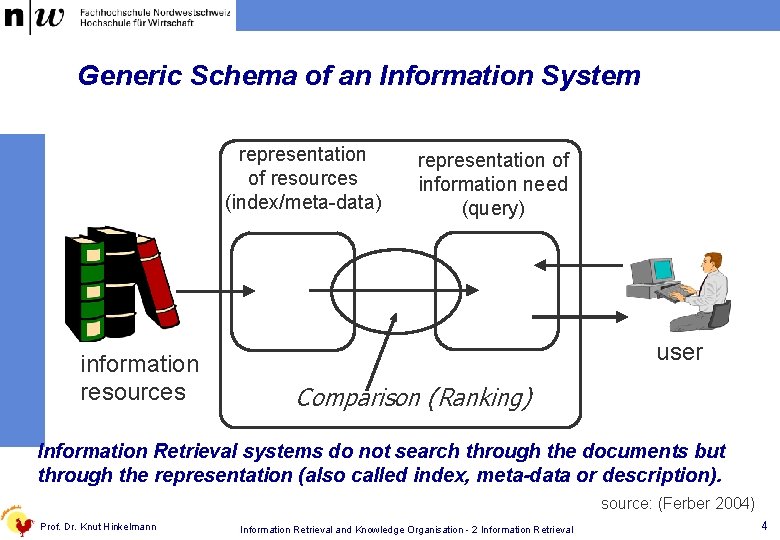

Generic Schema of an Information System

Figure (components): information resources, representation of resources (index/meta-data), user, representation of information need (query), comparison (ranking).

Information Retrieval systems do not search through the documents but through the representation (also called index, meta-data or description).

Source: (Ferber 2004)

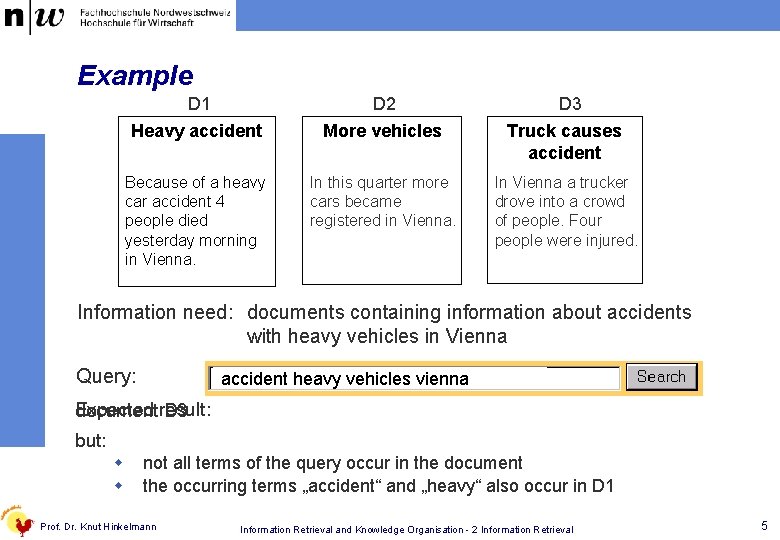

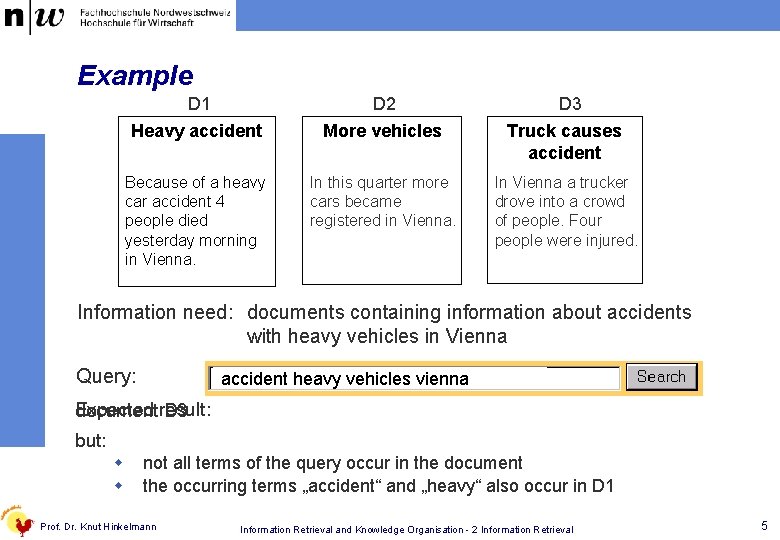

Example

| Document | Title | Text |
|---|---|---|
| D1 | Heavy accident | Because of a heavy car accident 4 people died yesterday morning in Vienna. |
| D2 | More vehicles | In this quarter more cars became registered in Vienna. |
| D3 | Truck causes accident | In Vienna a trucker drove into a crowd of people. Four people were injured. |

- Information need: documents containing information about accidents with heavy vehicles in Vienna
- Query: accident heavy vehicles vienna
- Expected document result: D3, but:
  - not all terms of the query occur in the document
  - the occurring terms "accident" and "heavy" also occur in D1

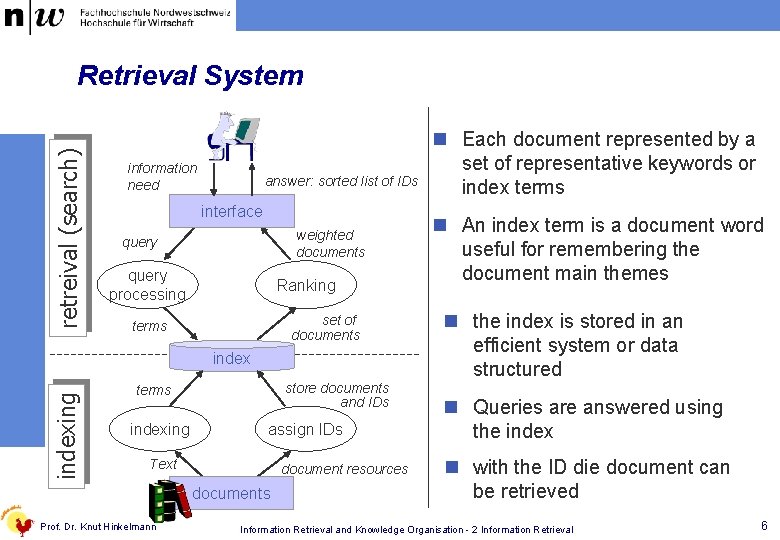

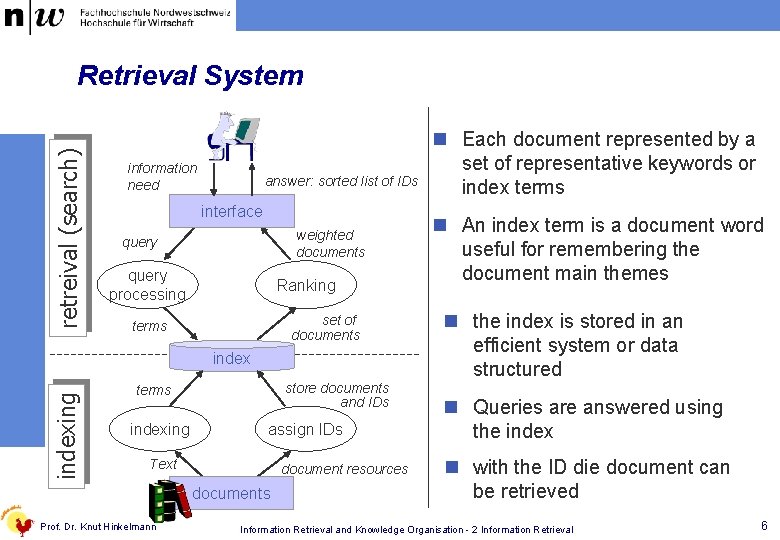

Retrieval System

Figure (components): information need, interface, query processing, terms, retrieval (search), index, set of documents, ranking, weighted documents, answer (sorted list of IDs); document resources, text documents, assign IDs, store documents and IDs, indexing, terms.

- Each document is represented by a set of representative keywords or index terms
- An index term is a document word useful for remembering the document's main themes
- The index is stored in an efficient system or data structure
- Queries are answered using the index
- With the ID, the document can be retrieved

Indexing

- Manual indexing (key words)
  - The user specifies key words that he/she assumes to be useful
  - Usually, key words are nouns, because nouns have meaning by themselves
  - There are two possibilities:
    1. the user can assign any terms
    2. the user can select from a predefined set of terms (controlled vocabulary)
- Automatic indexing (full text search)
  - Search engines assume that all words are index terms (full text representation)
  - The system generates index terms from the words occurring in the text

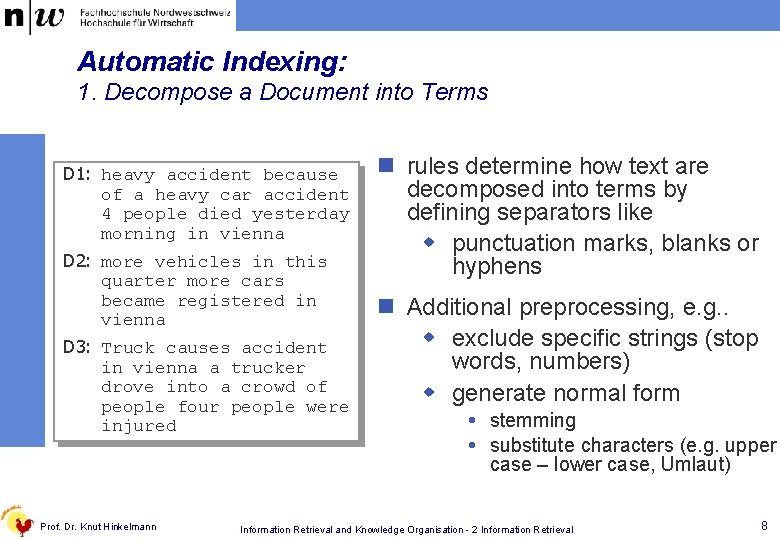

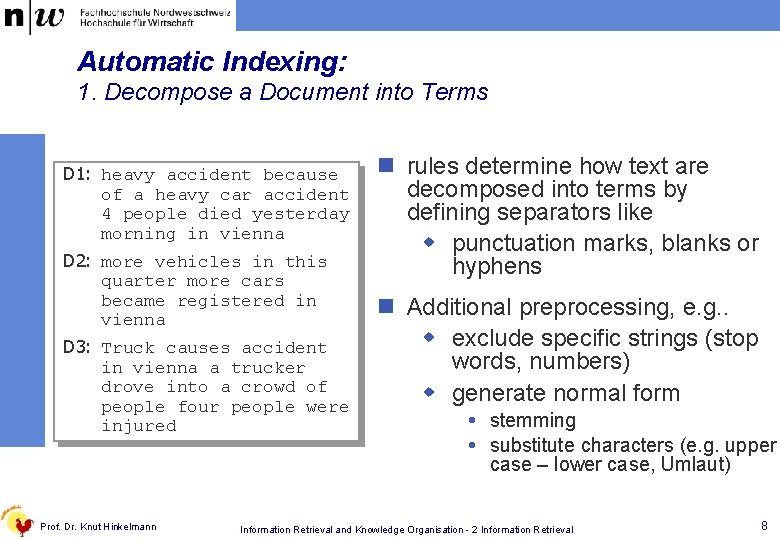

Automatic Indexing: 1. Decompose a Document into Terms

Documents after decomposition:

- D1: heavy accident because of a heavy car accident 4 people died yesterday morning in vienna
- D2: more vehicles in this quarter more cars became registered in vienna
- D3: truck causes accident in vienna a trucker drove into a crowd of people four people were injured

Decomposition:

- Rules determine how texts are decomposed into terms by defining separators such as punctuation marks, blanks or hyphens
- Additional preprocessing, e.g.
  - exclude specific strings (stop words, numbers)
  - generate a normal form
    - stemming
    - substitute characters (e.g. upper case to lower case, umlauts)
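The decomposition step can be sketched in a few lines of Python. This is only an illustration: the function name `tokenize` and the small stop-word list are assumptions made for the example and are not part of the slides.

```python
import re

# Hypothetical stop-word list, chosen only for this illustration.
STOP_WORDS = {"a", "of", "in", "this", "into", "were", "because"}

def tokenize(text, stop_words=STOP_WORDS):
    """Lowercase the text, split it at separators, drop stop words and numbers."""
    # Every run of characters that is not a letter (blanks, punctuation,
    # hyphens, digits) is treated as a separator.
    raw_terms = re.split(r"[^a-z]+", text.lower())
    return [term for term in raw_terms if term and term not in stop_words]

d1 = "Because of a heavy car accident 4 people died yesterday morning in Vienna."
print(tokenize(d1))
```

Stemming is deliberately left out here; the sketch only covers separators, case folding and the exclusion of stop words and numbers.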

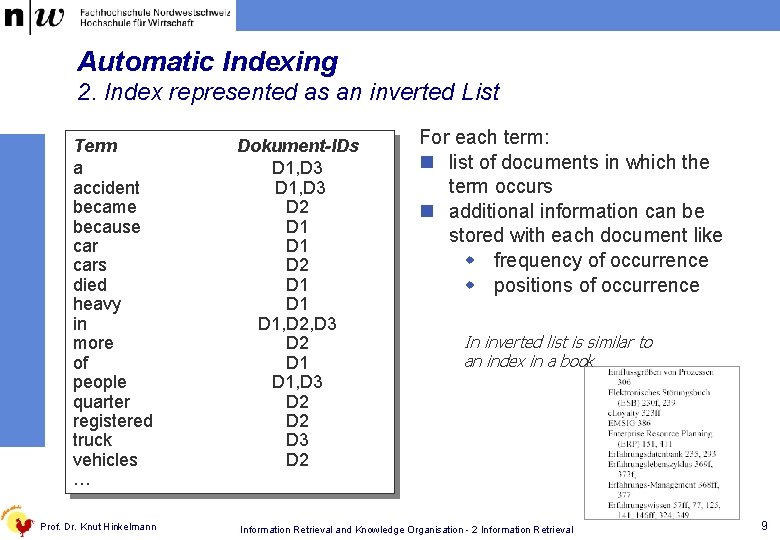

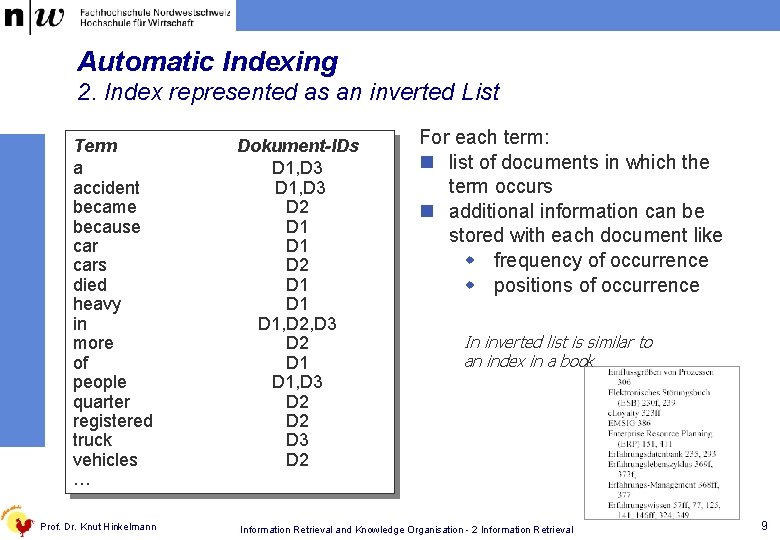

Automatic Indexing: 2. Index Represented as an Inverted List

| Term | Document IDs |
|---|---|
| a | D1, D3 |
| accident | D1, D3 |
| became | D2 |
| because | D1 |
| cars | D2 |
| died | D1 |
| heavy | D1 |
| in | D1, D2, D3 |
| more | D2 |
| of | D1, D3 |
| people | D1, D3 |
| quarter | D2 |
| registered | D2 |
| truck | D3 |
| vehicles | D2 |
| ... | ... |

For each term:

- list of documents in which the term occurs
- additional information can be stored with each document, like
  - frequency of occurrence
  - positions of occurrence

An inverted list is similar to an index in a book.

Index as Inverted List with Frequency

In this example the inverted list contains the document identifier and the frequency of the term in the document.

| Term | (Document; frequency) |
|---|---|
| a | (D1, 1) (D3, 2) |
| accident | (D1, 2) (D3, 1) |
| became | (D2, 1) |
| because | (D1, 1) |
| cars | (D2, 1) |
| died | (D1, 1) |
| heavy | (D1, 2) |
| in | (D1, 1) (D2, 2) (D3, 1) |
| more | (D2, 2) |
| of | (D1, 1) (D3, 1) |
| people | (D1, 1) (D3, 2) |
| quarter | (D2, 1) |
| registered | (D2, 1) |
| truck | (D3, 1) |
| vehicles | (D2, 1) |
| ... | ... |
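A minimal sketch of how such an inverted list with frequencies could be built from the three decomposed example documents. The function name `build_inverted_index` and the use of a dictionary of dictionaries are assumptions for illustration, not the structure used by any particular system.

```python
from collections import Counter, defaultdict

def build_inverted_index(docs):
    """Map each term to {document ID: frequency of the term in that document}."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        # Terms are taken as the blank-separated words of the decomposed text.
        for term, freq in Counter(text.split()).items():
            index[term][doc_id] = freq
    return index

docs = {
    "D1": "heavy accident because of a heavy car accident 4 people died yesterday morning in vienna",
    "D2": "more vehicles in this quarter more cars became registered in vienna",
    "D3": "truck causes accident in vienna a trucker drove into a crowd of people four people were injured",
}

index = build_inverted_index(docs)
print(index["accident"])  # postings of the term "accident"
```

A query term is then answered by a single dictionary lookup instead of a scan over all documents, which is the point of searching the representation rather than the documents.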

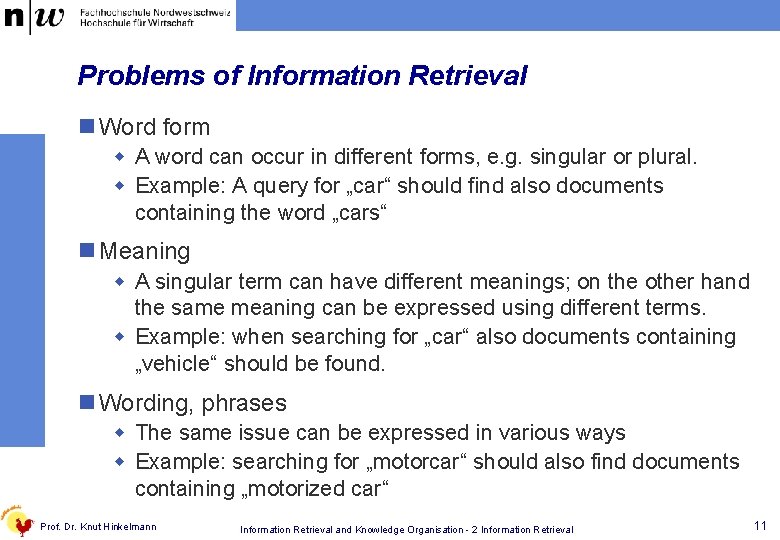

Problems of Information Retrieval

- Word form
  - A word can occur in different forms, e.g. singular or plural
  - Example: a query for "car" should also find documents containing the word "cars"
- Meaning
  - A single term can have different meanings; on the other hand, the same meaning can be expressed using different terms
  - Example: when searching for "car", documents containing "vehicle" should also be found
- Wording, phrases
  - The same issue can be expressed in various ways
  - Example: searching for "motorcar" should also find documents containing "motorized car"

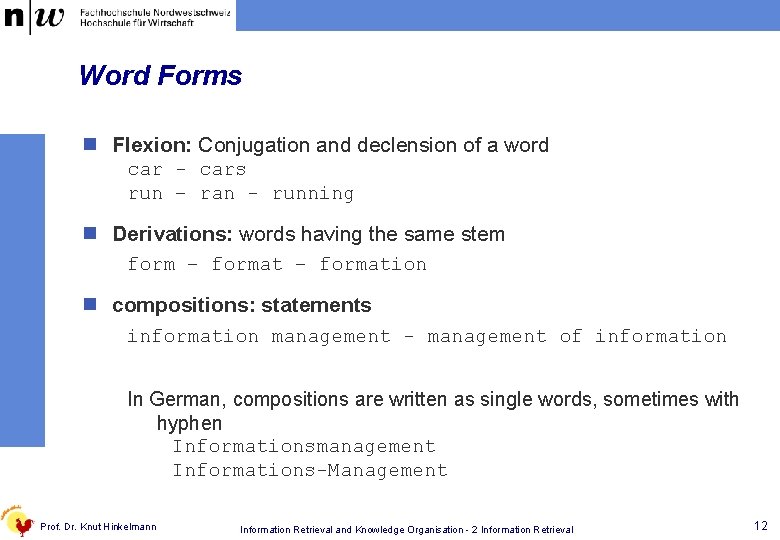

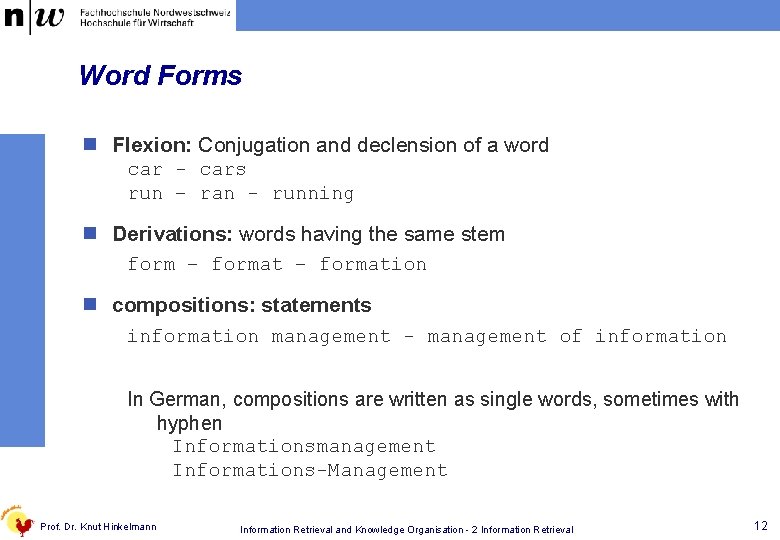

Word Forms

- Inflection: conjugation and declension of a word
  - car - cars
  - run - ran - running
- Derivations: words having the same stem
  - form - formation
- Compositions (compound expressions)
  - information management - management of information
  - In German, compositions are written as single words, sometimes with a hyphen: Informationsmanagement, Informations-Management

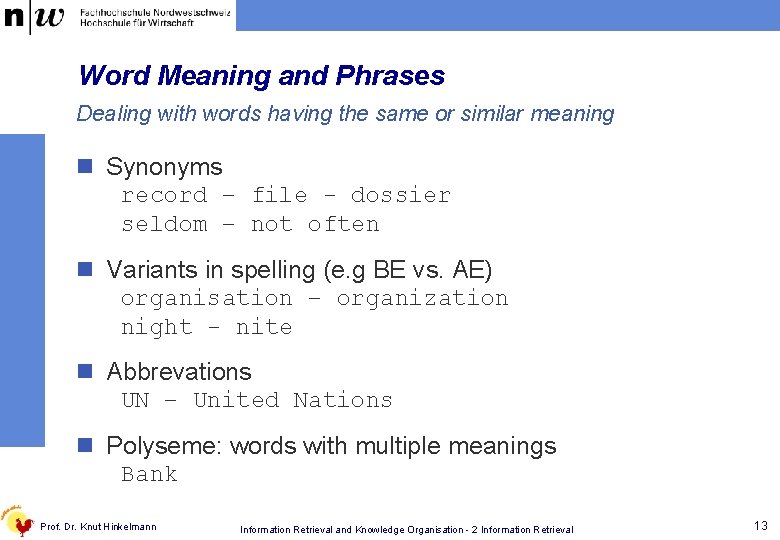

Word Meaning and Phrases

Dealing with words having the same or similar meaning:

- Synonyms: record - file - dossier; seldom - not often
- Variants in spelling (e.g. BE vs. AE): organisation - organization; night - nite
- Abbreviations: UN - United Nations
- Polysemes (words with multiple meanings): Bank

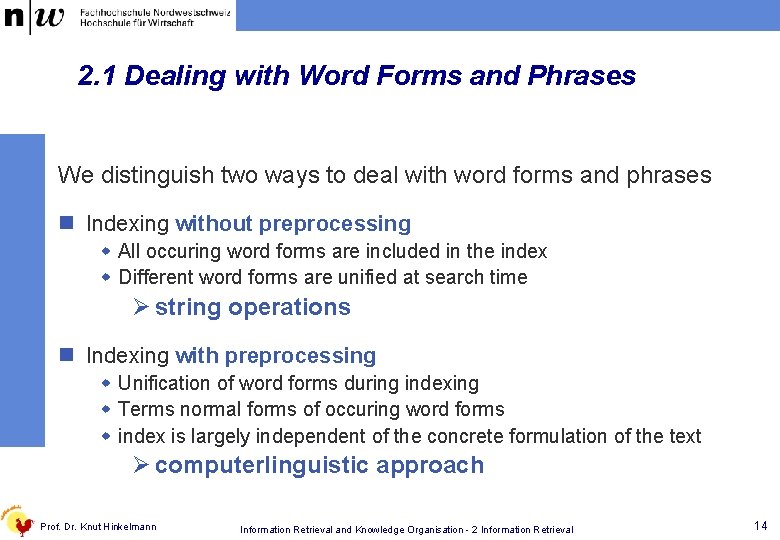

2.1 Dealing with Word Forms and Phrases

We distinguish two ways to deal with word forms and phrases:

- Indexing without preprocessing
  - All occurring word forms are included in the index
  - Different word forms are unified at search time
  - Approach: string operations
- Indexing with preprocessing
  - Unification of word forms during indexing
  - Terms are normal forms of the occurring word forms
  - The index is largely independent of the concrete formulation of the text
  - Approach: computational linguistics

2.1.1 Indexing Without Preprocessing

- Index: contains all the word forms occurring in the document
- Query:
  - Searching for specific word forms is possible (e.g. searching for "cars" but not for "car")
  - To search for different word forms, string operations can be applied
    - Operators for truncation and masking, e.g.
      - `?` covers exactly one character
      - `*` covers an arbitrary number of characters
    - Context operators, e.g.
      - `[n]` exact distance between terms
      - `<n>` maximal distance between terms

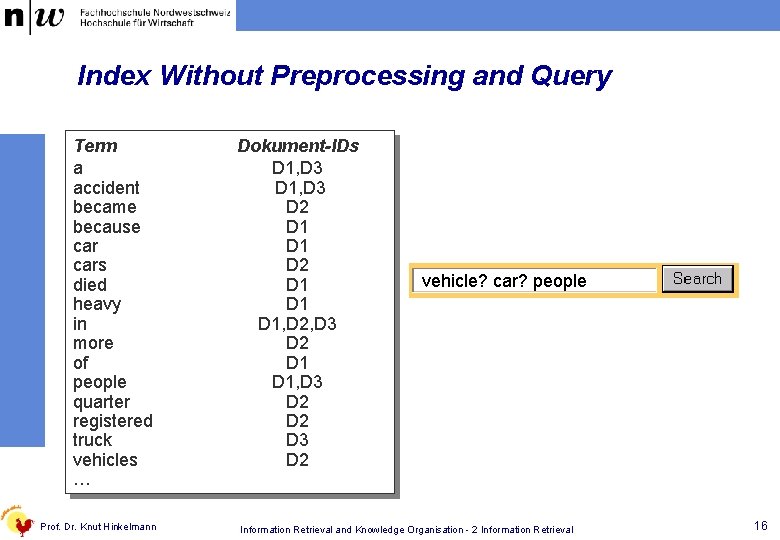

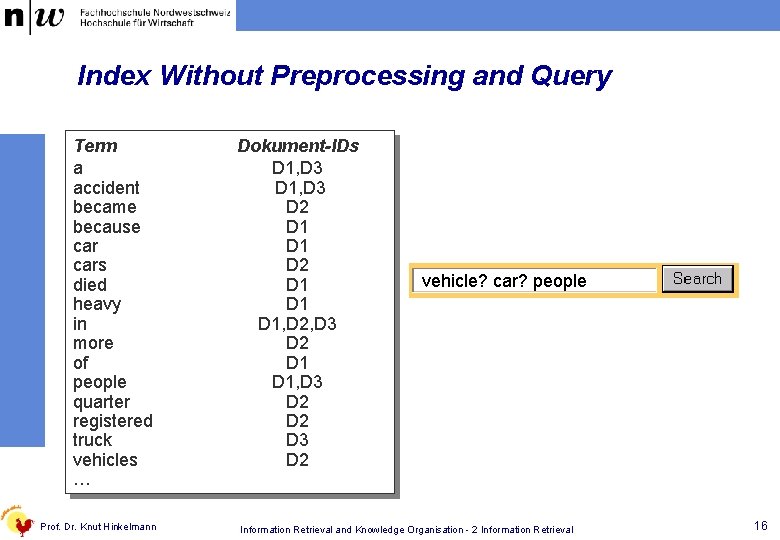

Index Without Preprocessing and Query

Example queries against the inverted list of the index without preprocessing (terms: a, accident, became, because, cars, died, heavy, in, more, of, people, quarter, registered, truck, vehicles, ...):

- `vehicle?`
- `car?`
- `people`

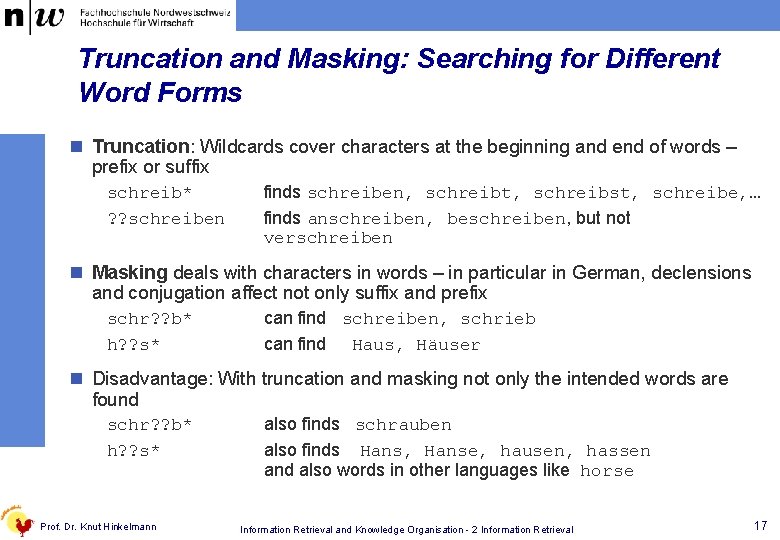

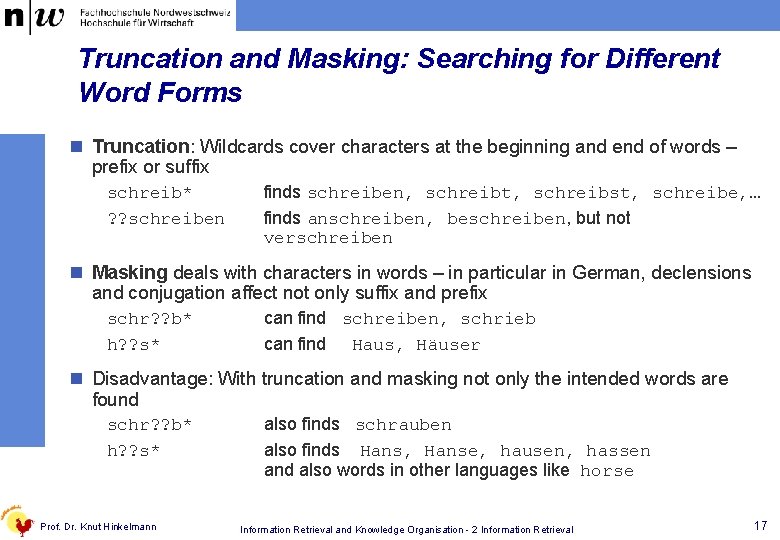

Truncation and Masking: Searching for Different Word Forms

- Truncation: wildcards cover characters at the beginning and end of words (prefix or suffix)
  - `schreib*` finds schreiben, schreibt, schreibst, schreibe, ...
  - `??schreiben` finds anschreiben, beschreiben, but not verschreiben
- Masking deals with characters inside words; in German in particular, declension and conjugation affect not only suffix and prefix
  - `schr??b*` can find schreiben, schrieb
  - `h??s*` can find Haus, Häuser
- Disadvantage: with truncation and masking, not only the intended words are found
  - `schr??b*` also finds schrauben
  - `h??s*` also finds Hans, Hanse, hausen, hassen, and also words in other languages like horse
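One possible way to evaluate such patterns is to translate them into regular expressions and match them against the terms of the index. The helper names `wildcard_to_regex` and `expand` and the small vocabulary are assumptions for this sketch.

```python
import re

def wildcard_to_regex(pattern):
    """Translate '?' (exactly one character) and '*' (any number of characters)."""
    parts = []
    for ch in pattern:
        if ch == "?":
            parts.append(".")
        elif ch == "*":
            parts.append(".*")
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts), re.IGNORECASE)

def expand(pattern, vocabulary):
    """Return all index terms that match the whole wildcard pattern."""
    regex = wildcard_to_regex(pattern)
    return [term for term in vocabulary if regex.fullmatch(term)]

# Hypothetical index vocabulary for illustration.
vocabulary = ["schreiben", "schrieb", "schrauben", "haus", "hans", "horse", "hund"]

print(expand("schr??b*", vocabulary))
print(expand("h??s*", vocabulary))
```

The second call illustrates the disadvantage named above: the pattern meant for "Haus" also accepts unrelated terms such as "hans" and "horse".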

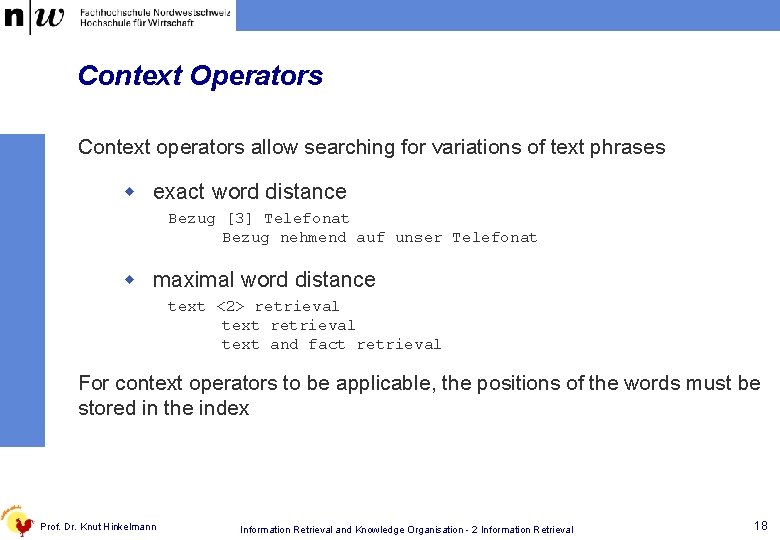

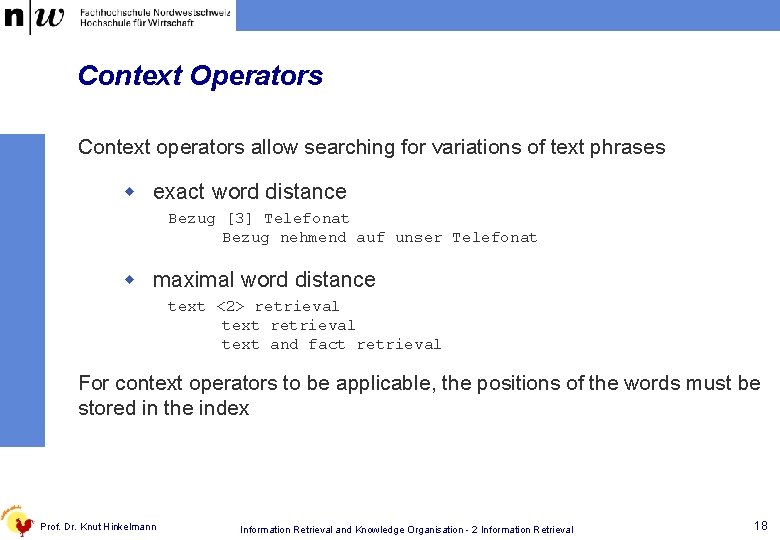

Context Operators

Context operators allow searching for variations of text phrases:

- exact word distance: `Bezug [3] Telefonat` matches "Bezug nehmend auf unser Telefonat"
- maximal word distance: `text <2> retrieval` matches "text and fact retrieval"

For context operators to be applicable, the positions of the words must be stored in the index.
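The following sketch shows how word positions make context operators checkable. It assumes, based on the two examples, that the distance n counts the words standing between the two terms; the function names are hypothetical.

```python
def positions(tokens, term):
    """Positions at which a term occurs; this is what the index has to store."""
    return [i for i, token in enumerate(tokens) if token == term]

def within(tokens, left, right, n, exact=False):
    """Check whether `right` follows `left` with exactly n (exact=True)
    or at most n (exact=False) words in between."""
    for p in positions(tokens, left):
        for q in positions(tokens, right):
            gap = q - p - 1  # number of words between the two terms
            if gap < 0:
                continue
            if (gap == n) if exact else (gap <= n):
                return True
    return False

phrase_1 = "bezug nehmend auf unser telefonat".split()
phrase_2 = "text and fact retrieval".split()

print(within(phrase_1, "bezug", "telefonat", 3, exact=True))  # Bezug [3] Telefonat
print(within(phrase_2, "text", "retrieval", 2))               # text <2> retrieval
```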

Indexing Without Preprocessing

- Efficiency
  - Efficient indexing
  - Overhead at retrieval time to apply string operators
- Word forms
  - The user has to codify all possible word forms and phrases in the query using truncation and masking operators
  - No support is given by the search engine
  - The retrieval engine is language independent
- Phrases
  - Variants in text phrases can be coded using context operators

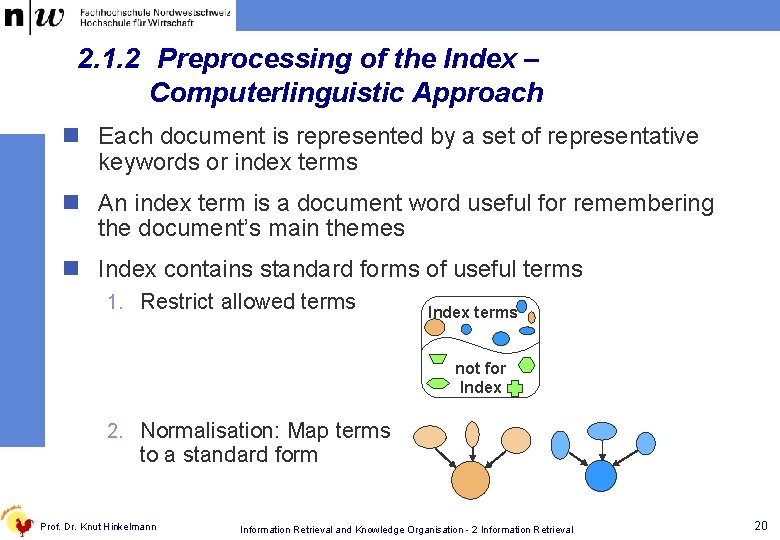

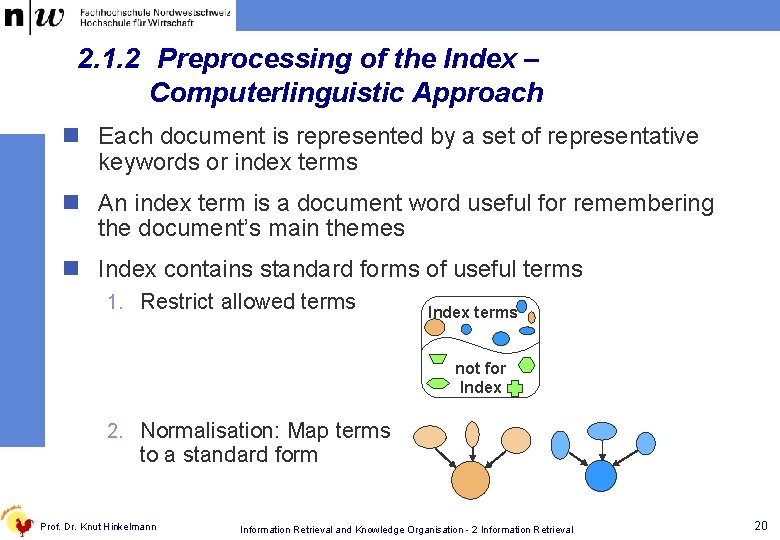

2.1.2 Preprocessing of the Index: Computational-Linguistic Approach

- Each document is represented by a set of representative keywords or index terms
- An index term is a document word useful for remembering the document's main themes
- The index contains standard forms of useful terms
  1. Restrict allowed terms (index terms vs. terms not for the index)
  2. Normalisation: map terms to a standard form

Restricting Allowed Index Terms

- Objective: increase efficiency and effectiveness by neglecting terms that do not contribute to the assessment of a document's relevance
- There are two possibilities to restrict allowed index terms:
  1. Explicitly specify allowed index terms (controlled vocabulary)
  2. Specify terms that are not allowed as index terms (stop words)

Stop Words

- Stop words are terms that are not stored in the index
- Candidates for stop words are
  - words that occur very frequently
    - A term occurring in every document is useless as an index term, because it tells nothing about which documents the user might be interested in
    - A word that occurs in only 0.001% of the documents is quite useful, because it narrows down the space of documents that might be of interest to the user
  - words with no or little meaning
  - terms that are not words (e.g. numbers)
- Examples:
  - General: articles, conjunctions, prepositions and auxiliary verbs (to be, to have) occur very often and in general have no meaning as a search criterion
  - Application-specific stop words are also possible

Normalisation of Terms

- There are various possibilities to compute standard forms:
  - N-grams
  - stemming: removing suffixes or prefixes

N-Grams

- Index: sequences of characters of length N
  - Example: "persons"
  - 3-grams (N=3): per, ers, rso, son, ons
  - 4-grams (N=4): pers, erso, rson, sons
- N-grams can also cross word boundaries
  - Example: "persons from switzerland"
  - 3-grams (N=3): per, ers, rso, son, ons, ns_, s_f, _fr, fro, rom, om_, m_s, _sw, swi, wit, itz, tze, zer, erl, rla, lan, and
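Character N-grams are straightforward to generate with a sliding window. The sketch below follows the slide's convention of writing a blank as an underscore; the function name `char_ngrams` is an assumption.

```python
def char_ngrams(text, n):
    """Return all character sequences of length n, sliding one character at a time."""
    s = text.replace(" ", "_")  # blanks are shown as '_' so that word boundaries stay visible
    return [s[i:i + n] for i in range(len(s) - n + 1)]

print(char_ngrams("persons", 3))
print(char_ngrams("persons", 4))
print(char_ngrams("persons from switzerland", 3))
```

Because "persons" and "person" share most of their 3-grams, an index built on N-grams tolerates different word forms without any language-specific rules.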

Stemming

- Stemming: remove suffixes and prefixes to find a common stem, e.g.
  - remove -ing and -ed for verbs
  - remove plural -s for nouns
- There are a number of exceptions, e.g.
  - -ing and -ed may belong to a stem, as in "red" or "ring"
  - irregular verbs like go - went - gone, run - ran - run
- Approaches for stemming:
  - rule-based approach
  - lexicon-based approach

Rules for Stemming in English

Kuhlen (1977) derived a rule set (rules 1 to 13) for stemming most English words:

- Endings: ies, XYs, ies', Xs', X's, X', XYing, ied, XYed
- Replacements: y, XY, XY, y, X, X, XY, XYe, y, XY, XYe
- Conditions:
  - XY = Co, ch, ss, zz or Xx
  - XY = XC, Xe, Vy, Vo, oa or ea
  - XYing: XY = CC, XV, Xx (replacement XY); XY = VC (replacement XYe)
  - XYed: XY = CC, XV, Xx (replacement XY); XY = VC (replacement XYe)
- Notation: X and Y are any letters, C stands for a consonant, V stands for any vowel

Source: (Ferber 2003)

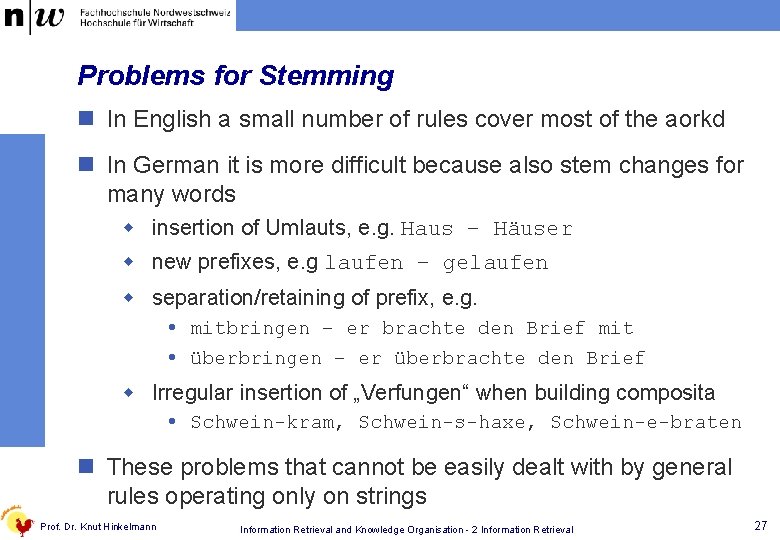

Problems for Stemming

- In English, a small number of rules covers most of the words
- In German it is more difficult, because the stem also changes for many words
  - insertion of umlauts, e.g. Haus - Häuser
  - new prefixes, e.g. laufen - gelaufen
  - separation/retaining of prefixes, e.g.
    - mitbringen - er brachte den Brief mit
    - überbringen - er überbrachte den Brief
  - irregular insertion of linking elements ("Fugen") when building compounds: Schwein-kram, Schwein-s-haxe, Schwein-e-braten
- These problems cannot easily be dealt with by general rules operating only on strings

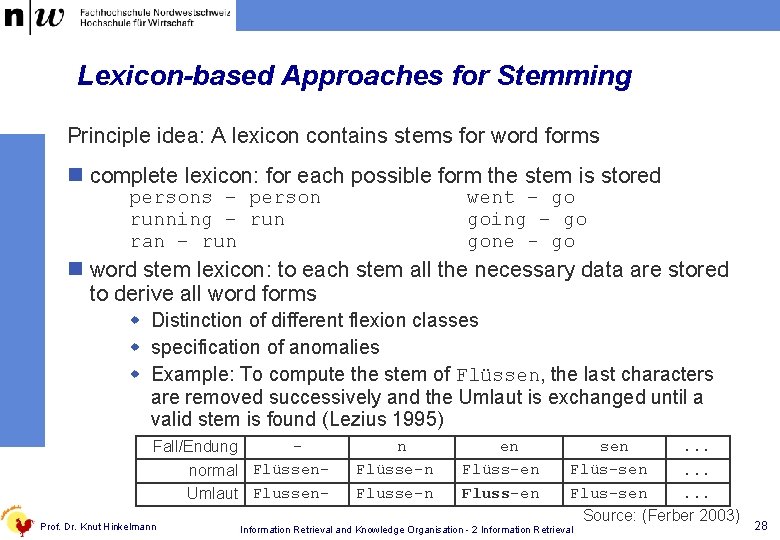

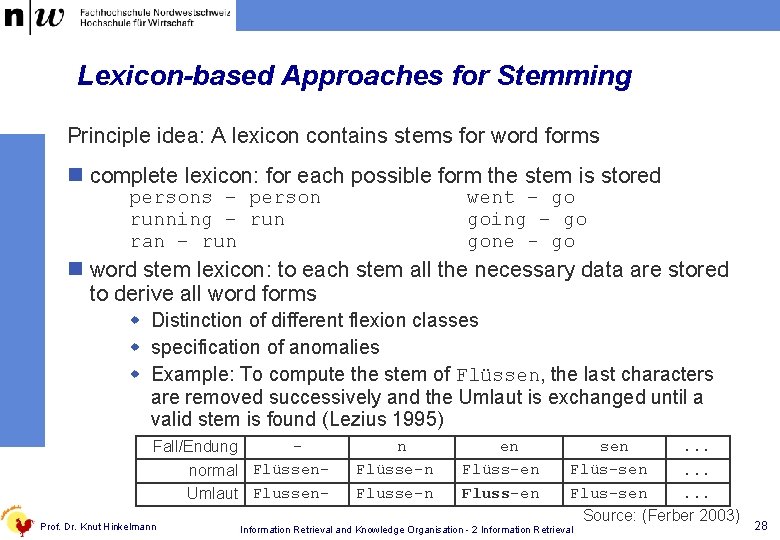

Lexicon-based Approaches for Stemming

Principal idea: a lexicon contains stems for word forms

- Complete lexicon: for each possible word form the stem is stored
  - persons - person
  - running - run, ran - run
  - went - go, going - go, gone - go
- Word stem lexicon: for each stem, all data necessary to derive all word forms are stored
  - distinction of different inflection classes
  - specification of anomalies
  - Example: to compute the stem of "Flüssen", the last characters are removed successively and the umlaut is exchanged until a valid stem is found (Lezius 1995)

| Case/ending | normal | umlaut |
|---|---|---|
| (none) | Flüssen | Flussen |
| n | Flüsse-n | Flusse-n |
| en | Flüss-en | Fluss-en |
| sen | Flüs-sen | Flus-sen |
| ... | ... | ... |

Source: (Ferber 2003)
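The search for a valid stem described in the "Flüssen" example can be sketched as follows. This is a simplified illustration, not the procedure of Lezius (1995): the tiny lexicon, the limit of three removed characters and the function name `find_stem` are assumptions.

```python
# Maps the umlauts ä, ö, ü to a, o, u (written as escapes to be encoding-safe).
UMLAUT_MAP = str.maketrans({"\u00e4": "a", "\u00f6": "o", "\u00fc": "u"})

def find_stem(word, lexicon, max_suffix=3):
    """Remove 0..max_suffix trailing characters; for each candidate try the
    form as written and the form with umlauts exchanged, until one is in the lexicon."""
    w = word.lower()
    for cut in range(max_suffix + 1):
        candidate = w[:len(w) - cut]
        for variant in (candidate, candidate.translate(UMLAUT_MAP)):
            if variant in lexicon:
                return variant
    return None  # no valid stem found

# Hypothetical stem lexicon for illustration.
lexicon = {"fluss", "haus"}

print(find_stem("Fl\u00fcssen", lexicon))  # Flüssen
print(find_stem("H\u00e4user", lexicon))   # Häuser
```

A real word stem lexicon would additionally store inflection classes and anomalies, so that only endings that are valid for the stem are accepted.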

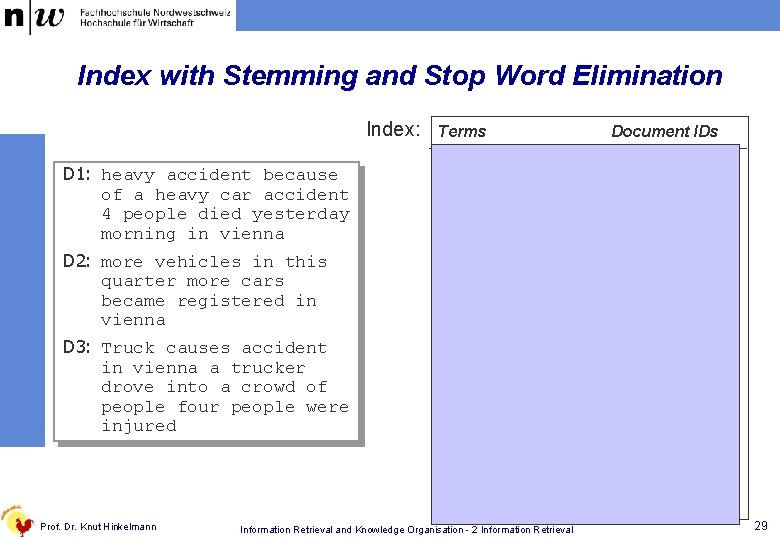

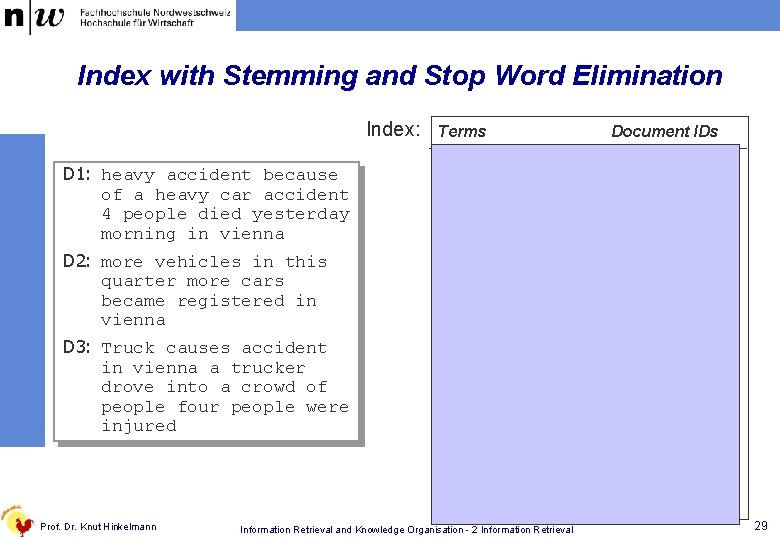

Index with Stemming and Stop Word Elimination

Documents:

- D1: heavy accident because of a heavy car accident 4 people died yesterday morning in vienna
- D2: more vehicles in this quarter more cars became registered in vienna
- D3: truck causes accident in vienna a trucker drove into a crowd of people four people were injured

Index:

| Term | Document IDs |
|---|---|
| accident | D1, D3 |
| car | D1, D2 |
| cause | D3 |
| crowd | D3 |
| die | D1 |
| drive | D3 |
| four | D3 |
| heavy | D1 |
| injur | D3 |
| more | D2 |
| morning | D1 |
| people | D1, D3 |
| quarter | D2 |
| register | D2 |
| trucker | D3 |
| vehicle | D2 |
| vienna | D1, D2, D3 |
| yesterday | D1 |

2.2 Classical Information Retrieval Models

- Classical models
  - Boolean model
  - Vector space model
  - Probabilistic model
- Alternative models
  - User preferences
  - Associative search
  - Social filtering

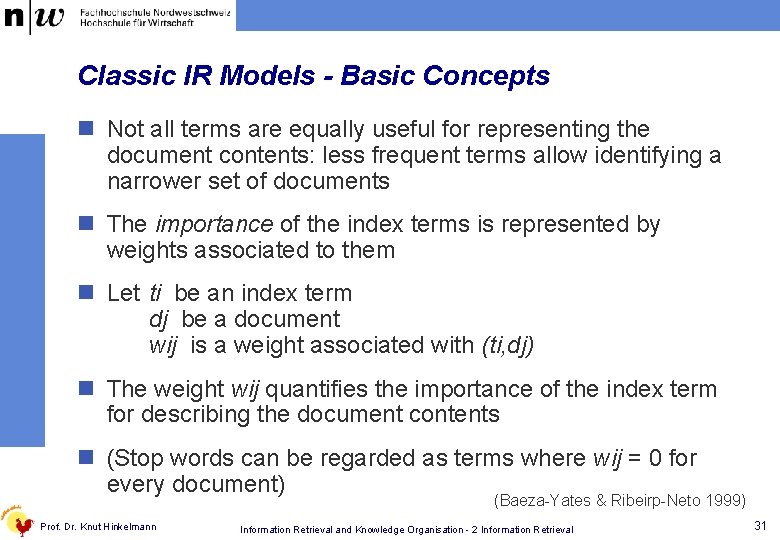

Classic IR Models - Basic Concepts

- Not all terms are equally useful for representing the document contents: less frequent terms allow identifying a narrower set of documents
- The importance of the index terms is represented by weights associated with them
- Let
  - $t_i$ be an index term
  - $d_j$ be a document
  - $w_{ij}$ be a weight associated with $(t_i, d_j)$
- The weight $w_{ij}$ quantifies the importance of the index term for describing the document contents
- (Stop words can be regarded as terms where $w_{ij} = 0$ for every document)

(Baeza-Yates & Ribeiro-Neto 1999)

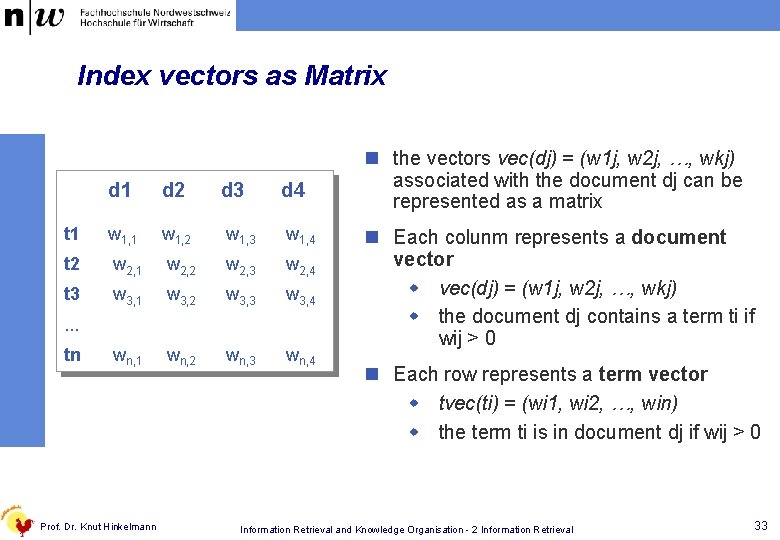

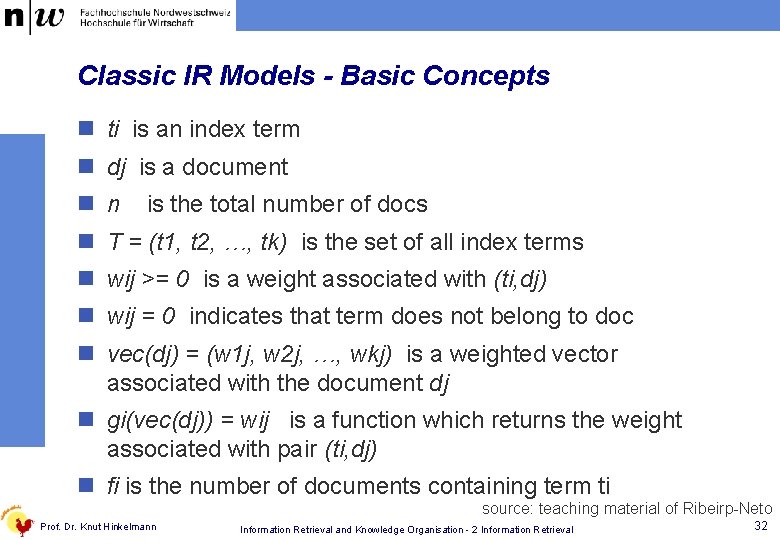

Classic IR Models - Basic Concepts

- $t_i$ is an index term
- $d_j$ is a document
- $n$ is the total number of docs
- $T = (t_1, t_2, \ldots, t_k)$ is the set of all index terms
- $w_{ij} \geq 0$ is a weight associated with $(t_i, d_j)$
- $w_{ij} = 0$ indicates that the term does not belong to the doc
- $vec(d_j) = (w_{1j}, w_{2j}, \ldots, w_{kj})$ is a weighted vector associated with the document $d_j$
- $g_i(vec(d_j)) = w_{ij}$ is a function which returns the weight associated with the pair $(t_i, d_j)$
- $f_i$ is the number of documents containing term $t_i$

Source: teaching material of Ribeiro-Neto

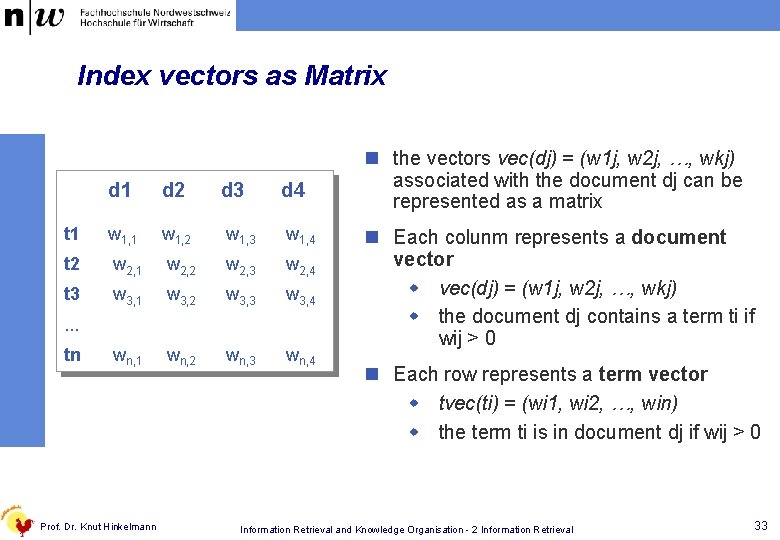

Index Vectors as Matrix

|  | $d_1$ | $d_2$ | $d_3$ | $d_4$ |
|---|---|---|---|---|
| $t_1$ | $w_{1,1}$ | $w_{1,2}$ | $w_{1,3}$ | $w_{1,4}$ |
| $t_2$ | $w_{2,1}$ | $w_{2,2}$ | $w_{2,3}$ | $w_{2,4}$ |
| $t_3$ | $w_{3,1}$ | $w_{3,2}$ | $w_{3,3}$ | $w_{3,4}$ |
| ... | ... | ... | ... | ... |
| $t_k$ | $w_{k,1}$ | $w_{k,2}$ | $w_{k,3}$ | $w_{k,4}$ |

- The vectors $vec(d_j) = (w_{1j}, w_{2j}, \ldots, w_{kj})$ associated with the documents $d_j$ can be represented as a matrix
- Each column represents a document vector
  - $vec(d_j) = (w_{1j}, w_{2j}, \ldots, w_{kj})$
  - the document $d_j$ contains a term $t_i$ if $w_{ij} > 0$
- Each row represents a term vector
  - $tvec(t_i) = (w_{i1}, w_{i2}, \ldots, w_{in})$
  - the term $t_i$ is in document $d_j$ if $w_{ij} > 0$

Boolean Document Vectors

- d1: heavy accident because of a heavy car accident 4 people died yesterday morning in vienna
- d2: more vehicles in this quarter more cars became registered in vienna
- d3: truck causes accident in vienna a trucker drove into a crowd of people four people were injured

| Term | d1 | d2 | d3 |
|---|---|---|---|
| accident | 1 | 0 | 1 |
| car | 1 | 1 | 0 |
| cause | 0 | 0 | 1 |
| crowd | 0 | 0 | 1 |
| die | 1 | 0 | 0 |
| drive | 0 | 0 | 1 |
| four | 0 | 0 | 1 |
| heavy | 1 | 0 | 0 |
| injur | 0 | 0 | 1 |
| more | 0 | 1 | 0 |
| morning | 1 | 0 | 0 |
| people | 1 | 0 | 1 |
| quarter | 0 | 1 | 0 |
| register | 0 | 1 | 0 |
| trucker | 0 | 0 | 1 |
| vehicle | 0 | 1 | 0 |
| vienna | 1 | 1 | 1 |
| yesterday | 1 | 0 | 0 |

2.2.1 The Boolean Model

- Simple model based on set theory
  - precise semantics
  - neat formalism
- Binary index: terms are either present or absent. Thus, $w_{ij} \in \{0, 1\}$
- Queries are specified as Boolean expressions using the operators AND ($\wedge$), OR ($\vee$), and NOT ($\neg$)
  - $q = t_a \wedge (t_b \vee \neg t_c)$
  - Example: vehicle OR car AND accident

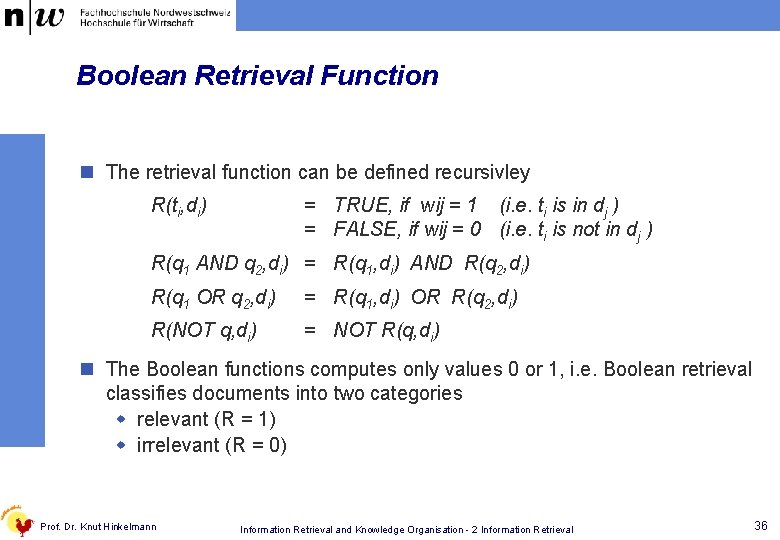

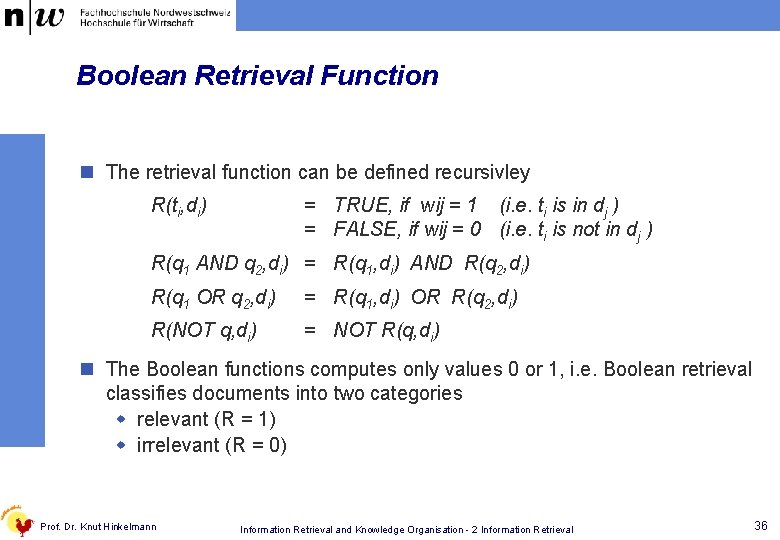

Boolean Retrieval Function

- The retrieval function can be defined recursively:
  - $R(t_i, d_j) = \text{TRUE}$ if $w_{ij} = 1$ (i.e. $t_i$ is in $d_j$)
  - $R(t_i, d_j) = \text{FALSE}$ if $w_{ij} = 0$ (i.e. $t_i$ is not in $d_j$)
  - $R(q_1 \text{ AND } q_2, d_j) = R(q_1, d_j) \text{ AND } R(q_2, d_j)$
  - $R(q_1 \text{ OR } q_2, d_j) = R(q_1, d_j) \text{ OR } R(q_2, d_j)$
  - $R(\text{NOT } q, d_j) = \text{NOT } R(q, d_j)$
- The Boolean function computes only the values 0 or 1, i.e. Boolean retrieval classifies documents into two categories:
  - relevant (R = 1)
  - irrelevant (R = 0)
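The recursive definition translates almost literally into code. In this sketch a query is either a term (a string) or a nested tuple such as `("AND", q1, q2)`; this query representation and the function name `retrieve` are assumptions for illustration. The document term sets are the stemmed index terms of the running example.

```python
def retrieve(query, doc_terms):
    """Recursive Boolean retrieval function R(q, d); returns True or False."""
    if isinstance(query, str):  # base case: a single index term
        return query in doc_terms
    operator = query[0]
    if operator == "AND":
        return retrieve(query[1], doc_terms) and retrieve(query[2], doc_terms)
    if operator == "OR":
        return retrieve(query[1], doc_terms) or retrieve(query[2], doc_terms)
    if operator == "NOT":
        return not retrieve(query[1], doc_terms)
    raise ValueError(f"unknown operator: {operator}")

docs = {
    "d1": {"accident", "car", "die", "heavy", "morning", "people", "vienna", "yesterday"},
    "d2": {"car", "more", "quarter", "register", "vehicle", "vienna"},
    "d3": {"accident", "cause", "crowd", "drive", "four", "injur", "people", "trucker", "vienna"},
}

query = ("AND", ("OR", "vehicle", "car"), "accident")  # (vehicle OR car) AND accident
relevant = [doc_id for doc_id, terms in docs.items() if retrieve(query, terms)]
print(relevant)
```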

Example for Boolean Retrieval

The Boolean document vectors of d1, d2 and d3 over the index terms accident, car, cause, crowd, die, drive, four, heavy, injur, more, morning, people, quarter, register, trucker, vehicle, vienna and yesterday are used.

- Query: (vehicle OR car) AND accident
  - R((vehicle OR car) AND accident, d1) = 1
  - R((vehicle OR car) AND accident, d2) = 0
  - R((vehicle OR car) AND accident, d3) = 0
- Query: (vehicle AND car) OR accident
  - R(vehicle AND car, d) is 0, 1, 0 for d1, d2, d3; "accident" occurs in d1 and d3
  - R((vehicle AND car) OR accident, d1) = 1
  - R((vehicle AND car) OR accident, d2) = 1
  - R((vehicle AND car) OR accident, d3) = 1

Drawbacks of the Boolean Model

- Retrieval is based on binary decision criteria
  - no notion of partial matching
  - no ranking of the documents is provided (absence of a grading scale): the query $q = t_1 \text{ OR } t_2 \text{ OR } t_3$ is satisfied by documents containing one, two or three of the terms $t_1, t_2, t_3$
- No weighting of terms, $w_{ij} \in \{0, 1\}$
- The information need has to be translated into a Boolean expression, which most users find awkward
- The Boolean queries formulated by the users are most often too simplistic
- As a consequence, the Boolean model frequently returns either too few or too many documents in response to a user query

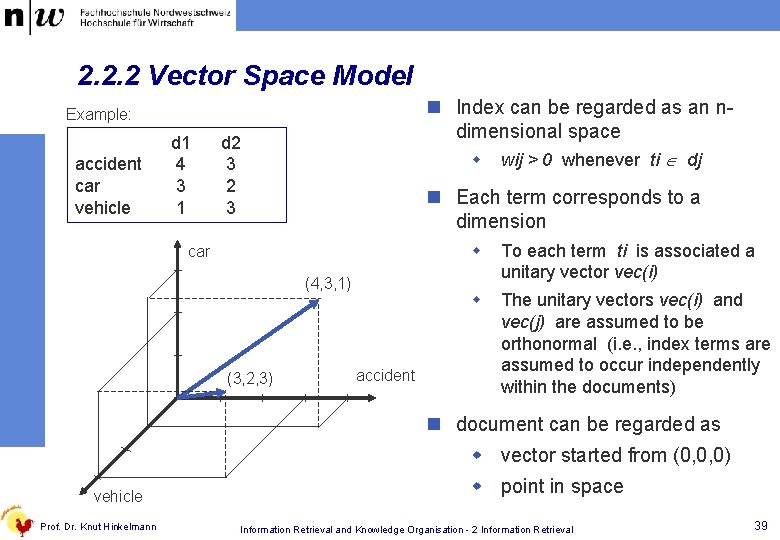

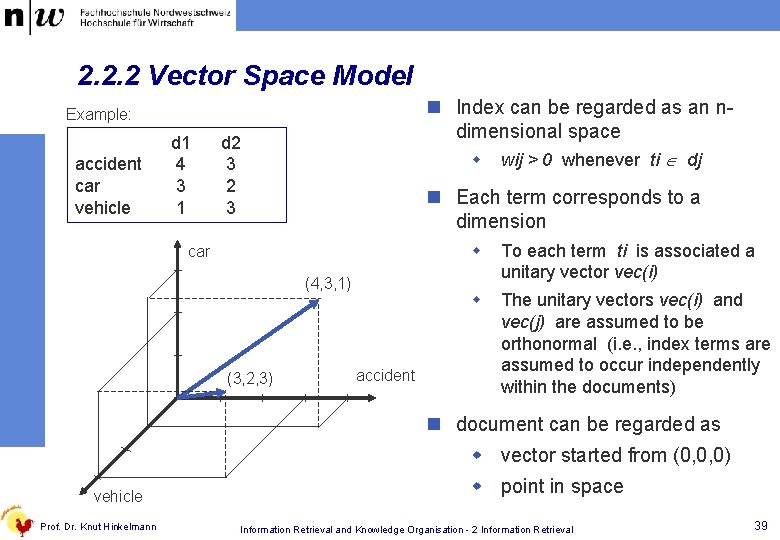

2.2.2 Vector Space Model

- The index can be regarded as an n-dimensional space
  - Each term corresponds to a dimension
  - $w_{ij} > 0$ whenever $t_i \in d_j$
  - To each term $t_i$ a unit vector $vec(i)$ is associated
  - The unit vectors $vec(i)$ and $vec(j)$ are assumed to be orthonormal (i.e., index terms are assumed to occur independently within the documents)
- A document can be regarded as
  - a vector starting from (0, 0, 0)
  - a point in space

Example (axes: accident, car, vehicle):

|  | accident | car | vehicle |
|---|---|---|---|
| d1 | 4 | 3 | 1 |
| d2 | 3 | 2 | 3 |

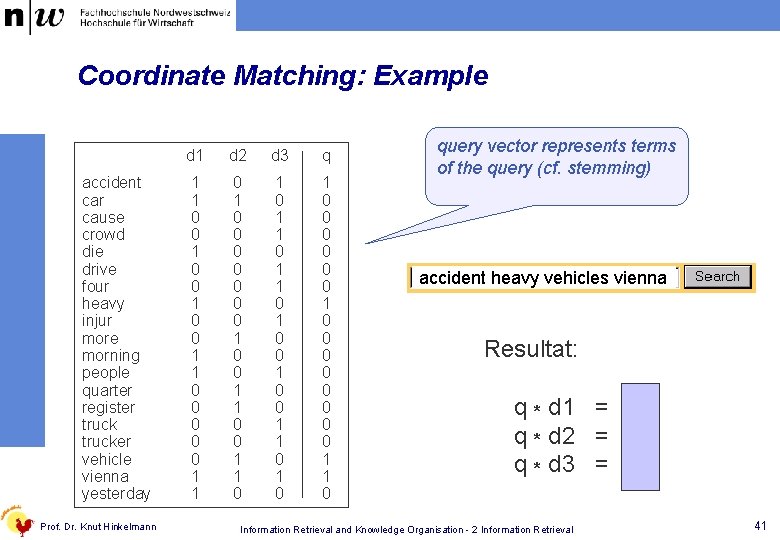

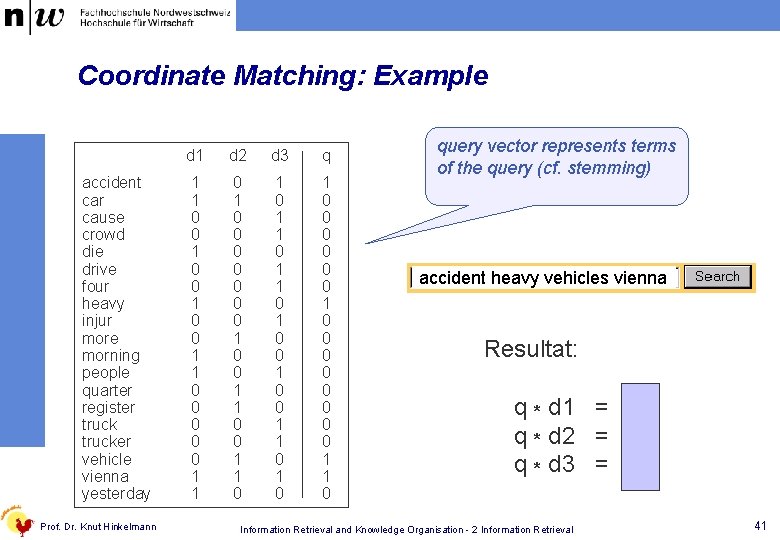

2.2.2.1 Coordinate Matching

- Documents and query are represented as
  - document vectors $vec(d_j) = (w_{1j}, w_{2j}, \ldots, w_{kj})$
  - query vector $vec(q) = (w_{1q}, \ldots, w_{kq})$
- Vectors have binary values
  - $w_{ij} = 1$ if term $t_i$ occurs in document $d_j$
  - $w_{ij} = 0$ otherwise
- Ranking:
  - Return the documents containing at least one query term
  - Rank by the number of occurring query terms
- Ranking function: scalar product (multiply the components and sum up)

$$R(q, d) = q \cdot d = \sum_{i=1}^{k} q_i \cdot d_i$$

Coordinate Matching: Example

Query: accident heavy vehicles vienna

The query vector represents the terms of the query (cf. stemming): it has the value 1 for accident, heavy, vehicle and vienna and 0 for all other index terms. The document vectors d1, d2 and d3 are the Boolean document vectors.

Result:

- q * d1 = 3
- q * d2 = 2
- q * d3 = 2
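The example can be recomputed with binary vectors and a scalar product. The helper names `to_vector` and `dot` are assumptions; the term sets are the stemmed index terms of d1, d2 and d3.

```python
docs = {
    "d1": {"accident", "car", "die", "heavy", "morning", "people", "vienna", "yesterday"},
    "d2": {"car", "more", "quarter", "register", "vehicle", "vienna"},
    "d3": {"accident", "cause", "crowd", "drive", "four", "injur", "people", "trucker", "vienna"},
}
query_terms = {"accident", "heavy", "vehicle", "vienna"}  # query after stemming

# One dimension per index term, in a fixed order.
vocabulary = sorted(set().union(*docs.values()))

def to_vector(terms):
    """Binary vector: 1 if the index term occurs, 0 otherwise."""
    return [1 if term in terms else 0 for term in vocabulary]

def dot(u, v):
    """Scalar product: multiply the components and sum up."""
    return sum(a * b for a, b in zip(u, v))

q = to_vector(query_terms)
scores = {doc_id: dot(q, to_vector(terms)) for doc_id, terms in docs.items()}

# Keep documents with at least one query term, best score first.
ranking = sorted((d for d in scores if scores[d] > 0), key=lambda d: -scores[d])
print(scores, ranking)
```

Note that d1 is ranked first although D3 was the expected result, which illustrates why binary weights alone are too coarse.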

Assessment of Coordinate Matching

- Advantage compared to the Boolean model: ranking
- Three main drawbacks:
  - the frequency of terms in documents is not considered
  - no weighting of terms
  - privilege for larger documents

2.2.2.2 Term Weighting

- Use of binary weights is too limiting
  - Non-binary weights provide consideration for partial matches
  - These term weights are used to compute a degree of similarity between a query and each document
- How to compute the weights $w_{ij}$ and $w_{iq}$?
- A good weight must take into account two effects:
  - quantification of intra-document contents (similarity): tf factor, the term frequency within a document
  - quantification of inter-document separation (dissimilarity): idf factor, the inverse document frequency
  - $w_{ij} = tf(i, j) \cdot idf(i)$

(Baeza-Yates & Ribeiro-Neto 1999)

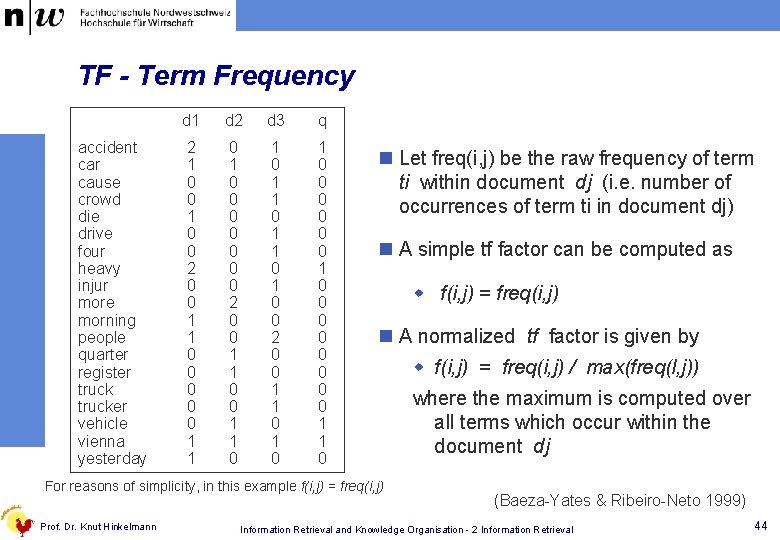

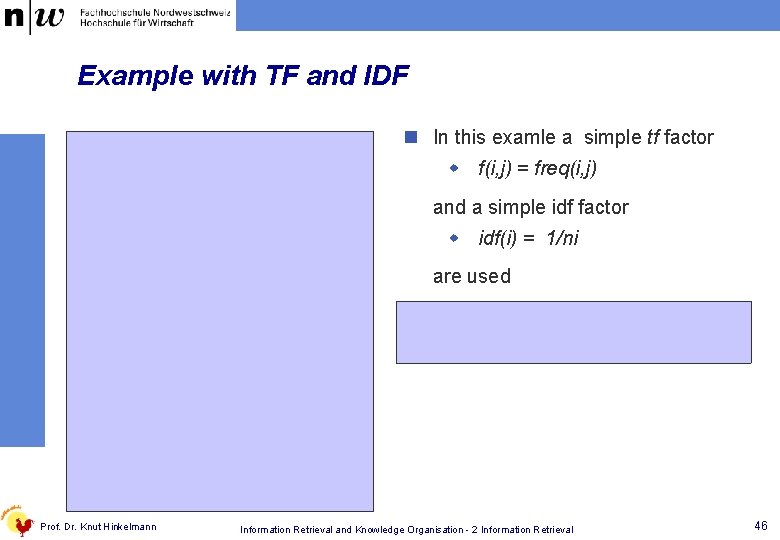

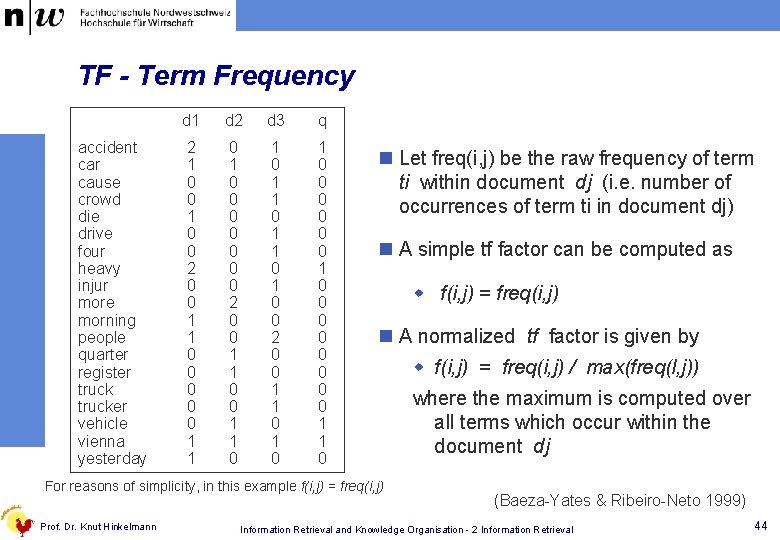

TF - Term Frequency

Table: raw term frequencies of the index terms (accident, car, cause, crowd, die, drive, four, heavy, injur, more, morning, people, quarter, register, trucker, vehicle, vienna, yesterday) in d1, d2, d3 and in the query q.

- Let $freq(i, j)$ be the raw frequency of term $t_i$ within document $d_j$ (i.e. the number of occurrences of term $t_i$ in document $d_j$)
- A simple tf factor can be computed as
  - $f(i, j) = freq(i, j)$
- A normalized tf factor is given by
  - $f(i, j) = freq(i, j) / \max_l freq(l, j)$
  - where the maximum is computed over all terms which occur within the document $d_j$

For reasons of simplicity, in this example $f(i, j) = freq(i, j)$.

(Baeza-Yates & Ribeiro-Neto 1999)

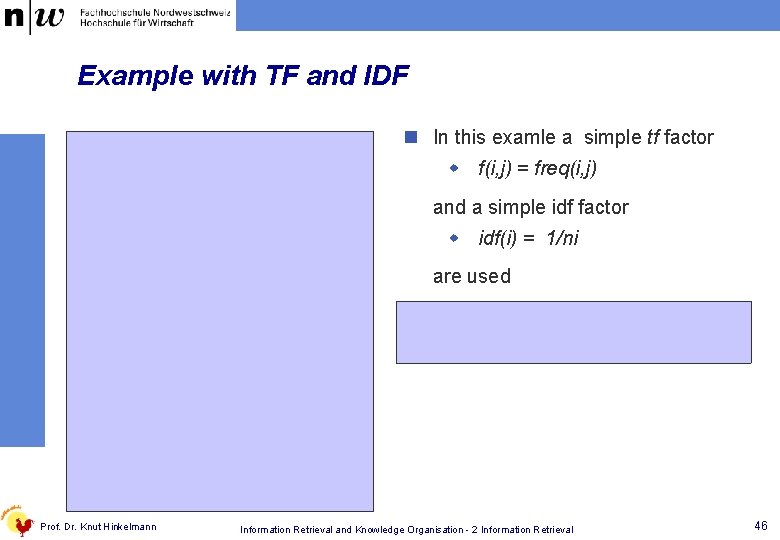

IDF - Inverse Document Frequency

- IDF can also be interpreted as the amount of information associated with the term $t_i$. A term occurring in few documents is more useful as an index term than a term occurring in nearly every document
- Let
  - $n_i$ be the number of documents containing term $t_i$
  - $N$ be the total number of documents
- A simple idf factor can be computed as
  - $idf(i) = 1/n_i$
- A normalized idf factor is given by
  - $idf(i) = \log(N/n_i)$
  - the log is used to make the values of tf and idf comparable
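A minimal sketch of the weighting $w_{ij} = f(i, j) \cdot \log(N/n_i)$ with the normalized tf factor. The function name `tf_idf_weights` is an assumption, and the term lists are a hand-stemmed version of the three example documents, so the exact frequencies are illustrative.

```python
import math
from collections import Counter

def tf_idf_weights(docs):
    """Return {doc_id: {term: weight}} with weight = f(i, j) * log(N / n_i)."""
    N = len(docs)
    counts = {doc_id: Counter(terms) for doc_id, terms in docs.items()}
    # n_i: number of documents that contain term t_i
    doc_freq = Counter(term for c in counts.values() for term in c)
    weights = {}
    for doc_id, c in counts.items():
        max_freq = max(c.values())  # frequency of the most frequent term in d_j
        weights[doc_id] = {
            term: (freq / max_freq) * math.log(N / doc_freq[term])
            for term, freq in c.items()
        }
    return weights

# Hand-stemmed term lists (assumption for this illustration).
docs = {
    "d1": "accident accident heavy heavy car people die yesterday morning vienna".split(),
    "d2": "more more vehicle quarter car register vienna".split(),
    "d3": "trucker cause accident vienna drive crowd people people four injur".split(),
}

weights = tf_idf_weights(docs)
print(weights["d1"]["heavy"], weights["d1"]["vienna"])
```

The term "vienna" occurs in every document, so its idf factor $\log(N/n_i) = \log 1$ is zero and it does not contribute to any document's weight vector.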

Example with TF and IDF

| Term | IDF |
|---|---|
| accident | 0.5 |
| car | 0.5 |
| cause | 1 |
| crowd | 1 |
| die | 1 |
| drive | 1 |
| four | 1 |
| heavy | 1 |
| injur | 1 |
| more | 1 |
| morning | 1 |
| people | 0.5 |
| quarter | 1 |
| register | 1 |
| trucker | 1 |
| vehicle | 1 |
| vienna | 0.33 |
| yesterday | 1 |

The tf values of d1, d2 and d3 are the raw term frequencies of the terms in the documents.

- In this example a simple tf factor $f(i, j) = freq(i, j)$ and a simple idf factor $idf(i) = 1/n_i$ are used
- It is advantageous to store IDF and TF separately

Indexing a New Document

- Changes of the indexes when adding a new document d:
  - a new document vector with tf factors for d is created
  - idf factors for terms occurring in d are adapted
- All other document vectors remain unchanged

Ranking

Figure: query vector q and document vector d in the plane spanned by the terms t1 and t2.

- Scalar product: computes co-occurrences of terms in document and query
  - Drawback: the scalar product privileges large documents over small ones
- Euclidean distance between the endpoints of the vectors
  - Drawback: the Euclidean distance privileges small documents over large ones
- Angle between the vectors
  - The smaller the angle between query and document vector, the more similar they are
  - The angle is independent of the size of the document
  - The cosine is a good measure of the angle

Cosine Ranking Formula

Figure: query vector q and document vector $d_j$ in the plane spanned by the terms t1 and t2, enclosing the angle $\theta$.

$$\cos(q, d_j) = \frac{q \cdot d_j}{|q| \cdot |d_j|}$$

- The more the directions of query q and document $d_j$ coincide, the more relevant is $d_j$
- The cosine formula takes into account the ratio of the terms, not their concrete number
- Let $\theta$ be the angle between q and $d_j$
- Because all values $w_{ij} \geq 0$, the angle $\theta$ is between 0° and 90°
  - the larger $\theta$, the smaller $\cos \theta$
  - the smaller $\theta$, the larger $\cos \theta$
  - $\cos 0° = 1$
  - $\cos 90° = 0$
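The cosine formula can be checked with the document vectors d1 = (4, 3, 1) and d2 = (3, 2, 3) over the dimensions accident, car, vehicle from the vector space example. The query vector and the function name `cosine` are assumptions for illustration.

```python
import math

def cosine(q, d):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(q, d))
    norm_q = math.sqrt(sum(a * a for a in q))
    norm_d = math.sqrt(sum(b * b for b in d))
    if norm_q == 0 or norm_d == 0:
        return 0.0  # the angle is undefined for a zero vector; treat as not similar
    return dot / (norm_q * norm_d)

# Dimensions: accident, car, vehicle
d1 = [4, 3, 1]
d2 = [3, 2, 3]
q = [1, 0, 1]  # hypothetical query: accident vehicle

print(cosine(q, d1), cosine(q, d2))
# Doubling every term frequency of d1 leaves the angle, and so the score, unchanged.
print(cosine(q, [8, 6, 2]))
```

Here d2 scores higher than d1, because its ratio of "accident" to "vehicle" is closer to that of the query, even though d1 contains "accident" more often.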

The Vector Model

- The best term-weighting schemes use weights which are given by
  - $w_{ij} = f(i, j) \cdot \log(N/n_i)$
  - this strategy is called a tf-idf weighting scheme
- For the query term weights, a suggestion is
  - $w_{iq} = \left(0.5 + \frac{0.5 \cdot freq(i, q)}{\max_l freq(l, q)}\right) \cdot \log(N/n_i)$

(Baeza-Yates & Ribeiro-Neto 1999)

The Vector Model

- The vector model with tf-idf weights is a good ranking strategy for general collections
- The vector model is usually as good as the known ranking alternatives. It is also simple and fast to compute.
- Advantages:
  - term weighting improves the quality of the answer set
  - partial matching allows retrieval of docs that approximate the query conditions
  - the cosine ranking formula sorts documents according to their degree of similarity to the query
- Disadvantages:
  - assumes independence of index terms (??); it is not clear that this is bad, though

(Baeza-Yates & Ribeiro-Neto 1999)

2.2.3 Extensions of the Classical Models

- Combination of
  - Boolean model
  - vector model
  - indexing with and without preprocessing
- Extended index with additional information like
  - document format (.doc, .pdf, ...)
  - language
- Using information about links in hypertext
  - link structure
  - anchor text

Boolean Operators in the Vector Model

Table: term frequencies of the index terms (accident, car, cause, crowd, die, drive, four, heavy, injur, more, morning, people, quarter, register, trucker, vehicle, vienna, yesterday) in d1, d2, d3 and in the query q.

- Many search engines allow queries with Boolean operators, e.g. (vehicle OR car) AND accident
- Retrieval:
  - Boolean operators are used to select relevant documents: in the example, only documents containing "accident" and either "vehicle" or "car" are considered relevant
  - The ranking of the relevant documents is based on the vector model
    - tf-idf weighting
    - cosine ranking formula

Queries with Wildcards in the Vector Model

Table: term frequencies of the word forms (accident, cars, causes, crowd, died, drove, four, heavy, injured, more, morning, people, quarter, registered, trucker, vehicles, vienna, yesterday) in d1, d2, d3 and in the query q.

- The vector model is based on an index without preprocessing
- The index contains all word forms occurring in the documents
- Queries allow wildcards (masking and truncation), e.g. `accident heavy vehicle* vienna`
- Principle of query answering:
  - First, wildcards are expanded to all matching terms (here `vehicle*` matches "vehicles")
  - Then the documents are ranked according to the vector model

Using Link Information in Hypertext

- Ranking: the link structure is used to calculate a quality ranking for each web page
  - PageRank®
  - HITS - Hypertext Induced Topic Selection (Authority and Hub)
  - Hilltop
- Indexing: the text of a link (anchor text) is associated both
  - with the page the link is on and
  - with the page the link points to

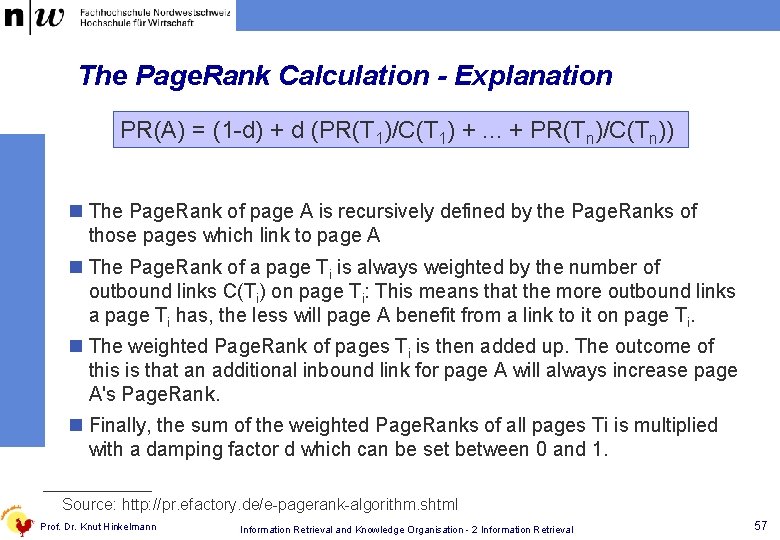

The PageRank Calculation

- PageRank was developed by Sergey Brin and Lawrence Page at Stanford University and published in 1998 (1)
- PageRank uses the link structure of web pages
- Original version of the PageRank calculation:

$$PR(A) = (1 - d) + d \left( \frac{PR(T_1)}{C(T_1)} + \ldots + \frac{PR(T_n)}{C(T_n)} \right)$$

- with
  - $PR(A)$ being the PageRank of page A,
  - $PR(T_i)$ being the PageRank of the pages $T_i$ that contain a link to page A,
  - $C(T_i)$ being the number of links going out of page $T_i$,
  - $d$ being a damping factor with $0 \leq d \leq 1$

(1) S. Brin and L. Page: The Anatomy of a Large-Scale Hypertextual Web Search Engine. In: Computer Networks and ISDN Systems. Vol. 30, 1998, pp. 107-117. http://www-db.stanford.edu/~backrub/google.html or http://infolab.stanford.edu/pub/papers/google.pdf

The PageRank Calculation - Explanation

$$PR(A) = (1-d) + d \left( \frac{PR(T_1)}{C(T_1)} + \dots + \frac{PR(T_n)}{C(T_n)} \right)$$

- The PageRank of page A is recursively defined by the PageRanks of those pages which link to page A.
- The PageRank of a page Ti is always weighted by the number of outbound links C(Ti) on page Ti: the more outbound links a page Ti has, the less page A benefits from a link to it on page Ti.
- The weighted PageRanks of the pages Ti are then added up. As a result, an additional inbound link for page A always increases page A's PageRank.
- Finally, the sum of the weighted PageRanks of all pages Ti is multiplied by a damping factor d, which can be set between 0 and 1.

Source: http://pr.efactory.de/e-pagerank-algorithm.shtml

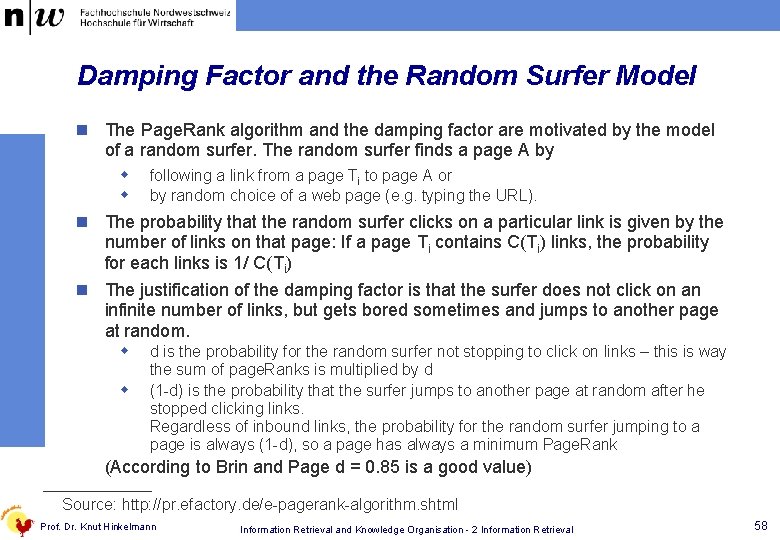

Damping Factor and the Random Surfer Model

- The PageRank algorithm and the damping factor are motivated by the model of a random surfer. The random surfer finds a page A by
  - following a link from a page Ti to page A, or
  - random choice of a web page (e.g. typing the URL).
- The probability that the random surfer clicks on a particular link is determined by the number of links on that page: if a page Ti contains C(Ti) links, the probability for each link is 1/C(Ti).
- The justification of the damping factor is that the surfer does not click on an infinite number of links, but gets bored sometimes and jumps to another page at random.
  - d is the probability that the random surfer keeps clicking on links; this is why the sum of PageRanks is multiplied by d.
  - (1 - d) is the probability that the surfer jumps to another page at random after he has stopped clicking links. Regardless of inbound links, the probability of the random surfer jumping to a page is always (1 - d), so a page always has a minimum PageRank.
  - According to Brin and Page, d = 0.85 is a good value.

Source: http://pr.efactory.de/e-pagerank-algorithm.shtml

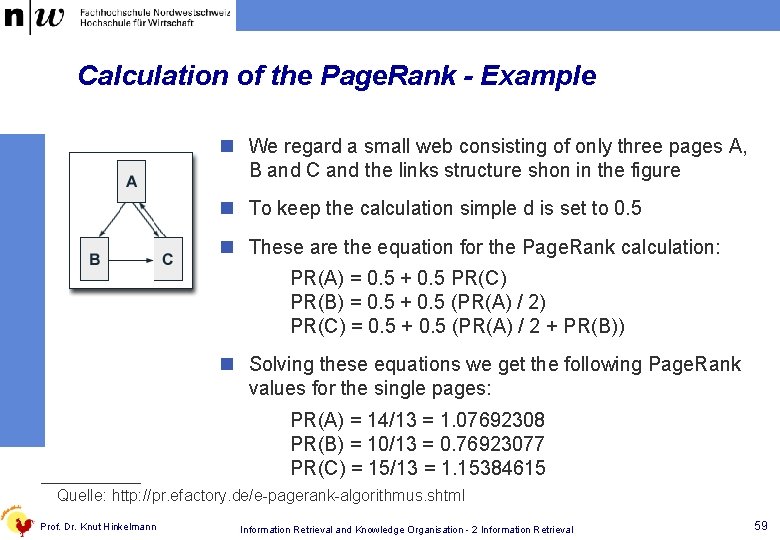

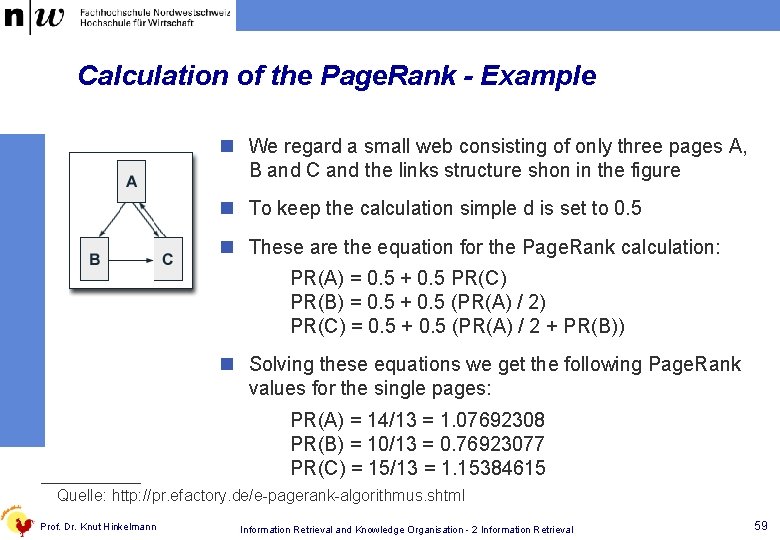

Calculation of the PageRank - Example

- We regard a small web consisting of only three pages A, B and C and the link structure shown in the figure
- To keep the calculation simple, d is set to 0.5
- These are the equations for the PageRank calculation:

  $$
  \begin{aligned}
  PR(A) &= 0.5 + 0.5\,PR(C) \\
  PR(B) &= 0.5 + 0.5\,(PR(A)/2) \\
  PR(C) &= 0.5 + 0.5\,(PR(A)/2 + PR(B))
  \end{aligned}
  $$

- Solving these equations, we get the following PageRank values for the individual pages:
  - PR(A) = 14/13 = 1.07692308
  - PR(B) = 10/13 = 0.76923077
  - PR(C) = 15/13 = 1.15384615

Source: http://pr.efactory.de/e-pagerank-algorithmus.shtml
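The stated solution can be checked by substitution. The following derivation is a worked sketch added for illustration; it is not taken from the slides, but uses only the three equations above.

$$
\begin{aligned}
PR(B) &= \tfrac{1}{2} + \tfrac{1}{4}\,PR(A) \\
PR(C) &= \tfrac{1}{2} + \tfrac{1}{4}\,PR(A) + \tfrac{1}{2}\,PR(B) = \tfrac{3}{4} + \tfrac{3}{8}\,PR(A) \\
PR(A) &= \tfrac{1}{2} + \tfrac{1}{2}\,PR(C) = \tfrac{7}{8} + \tfrac{3}{16}\,PR(A)
\;\Rightarrow\; \tfrac{13}{16}\,PR(A) = \tfrac{7}{8}
\;\Rightarrow\; PR(A) = \tfrac{14}{13} \\
PR(B) &= \tfrac{1}{2} + \tfrac{1}{4}\cdot\tfrac{14}{13} = \tfrac{10}{13}, \qquad
PR(C) = \tfrac{3}{4} + \tfrac{3}{8}\cdot\tfrac{14}{13} = \tfrac{15}{13}
\end{aligned}
$$

In this example the three values add up to 39/13 = 3, the number of pages.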

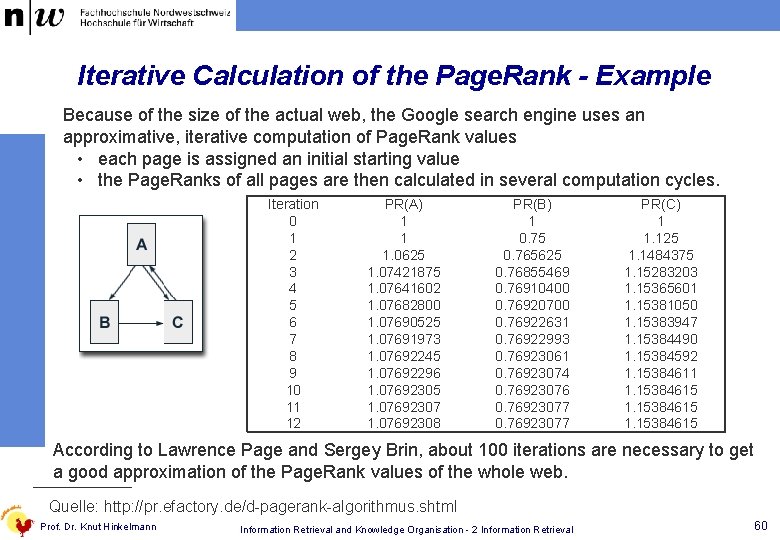

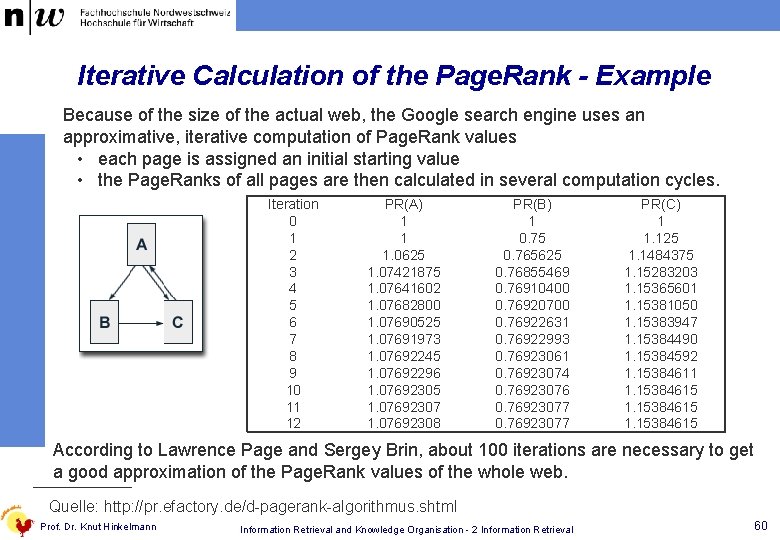

Iterative Calculation of the PageRank - Example

Because of the size of the actual web, the Google search engine uses an approximate, iterative computation of PageRank values:

- each page is assigned an initial starting value
- the PageRanks of all pages are then calculated in several computation cycles.

| Iteration | PR(A) | PR(B) | PR(C) |
|---|---|---|---|
| 0 | 1 | 1 | 1 |
| 1 | 1 | 0.75 | 1.125 |
| 2 | 1.0625 | 0.765625 | 1.1484375 |
| 3 | 1.07421875 | 0.76855469 | 1.15283203 |
| 4 | 1.07641602 | 0.76910400 | 1.15365601 |
| 5 | 1.07682800 | 0.76920700 | 1.15381050 |
| 6 | 1.07690525 | 0.76922631 | 1.15383947 |
| 7 | 1.07691973 | 0.76922993 | 1.15384490 |
| 8 | 1.07692245 | 0.76923061 | 1.15384592 |
| 9 | 1.07692296 | 0.76923074 | 1.15384611 |
| 10 | 1.07692305 | 0.76923076 | 1.15384615 |
| 11 | 1.07692307 | 0.76923077 | |
| 12 | 1.07692308 | | |

According to Lawrence Page and Sergey Brin, about 100 iterations are necessary to get a good approximation of the PageRank values of the whole web.

Source: http://pr.efactory.de/d-pagerank-algorithmus.shtml
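The iteration can be sketched in a few lines of Python. This is an illustrative sketch, not the search engine's implementation. It assumes the link structure implied by the example equations (A links to B and C, B links to C, C links to A) and updates the pages in place in the order A, B, C, which is what the first rows of the example values suggest. The names `LINKS` and `pagerank` are hypothetical.

```python
# Link structure read off from the example equations (assumption):
# page -> list of pages it links to.
LINKS = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}


def pagerank(links, d=0.5, iterations=12):
    """Iterate PR(A) = (1 - d) + d * sum(PR(Ti) / C(Ti)) for every page."""
    pr = {page: 1.0 for page in links}  # initial starting value for each page
    history = [dict(pr)]
    for _ in range(iterations):
        for page in links:
            # Pages Ti that contain a link to the current page.
            inbound = [t for t in links if page in links[t]]
            # In-place update: values computed earlier in this cycle are reused.
            pr[page] = (1 - d) + d * sum(pr[t] / len(links[t]) for t in inbound)
        history.append(dict(pr))
    return history


history = pagerank(LINKS)
for i, row in enumerate(history):
    print(i, f"{row['A']:.8f} {row['B']:.8f} {row['C']:.8f}")
```

After twelve cycles the values agree with the exact solution 14/13, 10/13 and 15/13 to about eight decimal places.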

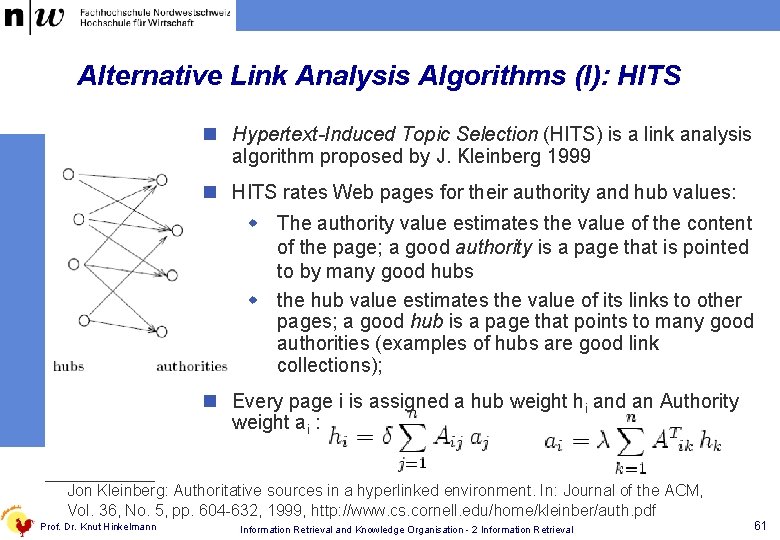

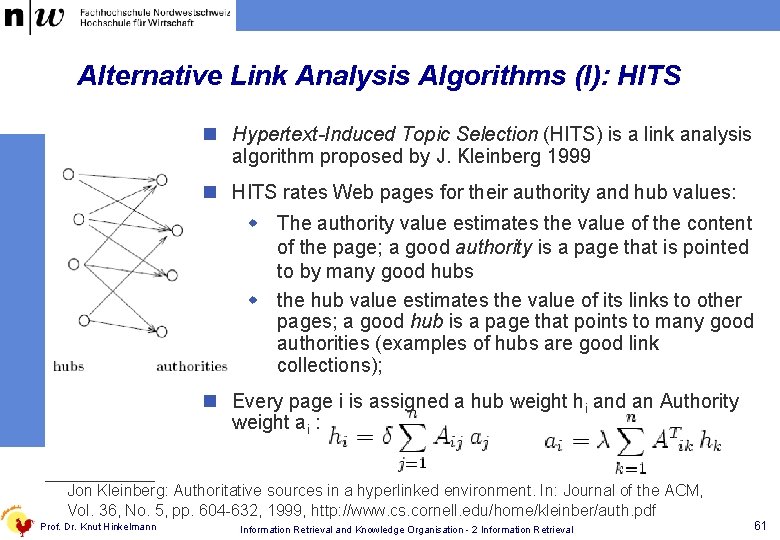

Alternative Link Analysis Algorithms (I): HITS

- Hypertext-Induced Topic Selection (HITS) is a link analysis algorithm proposed by J. Kleinberg in 1999
- HITS rates web pages for their authority and hub values:
  - The authority value estimates the value of the content of the page; a good authority is a page that is pointed to by many good hubs
  - The hub value estimates the value of its links to other pages; a good hub is a page that points to many good authorities (examples of hubs are good link collections)
- Every page i is assigned a hub weight hi and an authority weight ai:

Jon Kleinberg: Authoritative sources in a hyperlinked environment. In: Journal of the ACM, Vol. 46, No. 5, pp. 604-632, 1999, http://www.cs.cornell.edu/home/kleinber/auth.pdf
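The formula for the two weights is not legible in this transcript. As a hedged reconstruction, the update rules usually given for HITS (following Kleinberg's paper) are shown below; the notation $j \to i$ for "page j links to page i" is an assumption of this sketch.

$$
a_i = \sum_{j \,:\, j \to i} h_j, \qquad
h_i = \sum_{j \,:\, i \to j} a_j
$$

Both updates are applied repeatedly, and the weights are normalised after each round so that they do not grow without bound.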

Alternative Link Analysis Algorithms (II): Hilltop

- The Hilltop algorithm 1) rates documents based on their incoming links from so-called expert pages
  - Expert pages are defined as pages that are about a topic and have links to many non-affiliated pages on that topic.
  - Pages are defined as non-affiliated if they are from authors of non-affiliated organisations.
  - Websites which have backlinks from many of the best expert pages are authorities and are ranked high.
- A good directory page is an example of an expert page (cf. hubs).
- Determining the expert pages is a central point of the Hilltop algorithm.

1) The Hilltop algorithm was developed by Bharat and Mihaila and published in 1999: Krishna Bharat, George A. Mihaila: Hilltop: A Search Engine based on Expert Documents. In 2003 Google bought the patent of the algorithm (see also http://pagerank.suchmaschinen-doktor.de/hilltop.html)

Anchor Text

Example: The polar bear Knut was born in the zoo of Berlin

- The Google search engine uses the text of links twice
  - First, the text of a link is associated with the page that the link is on
  - In addition, it is associated with the page the link points to
- Advantages:
  - Anchors provide an additional description of a web page, from a user's point of view
  - Documents without text can be indexed, such as images, programs, and databases
- Disadvantage:
  - Search results can be manipulated (cf. Google bombing 1))

1) A Google bomb influences the ranking of the search engine. It is created when a large number of sites link to a page with anchor text that often contains humorous, political or defamatory statements. In the meantime, Google has defused Google bombs.

Natural Language Queries

The query

  i need information about accidents with cars and other vehicles

is equivalent to

  information accident car vehicle

- Natural language queries are treated like any other query
  - Stop word elimination
  - Stemming
  - but no interpretation of the meaning of the query
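The two preprocessing steps can be sketched as follows. This is a minimal illustration: the stop word list is a hypothetical, deliberately tiny one chosen for this example, and `naive_stem` only strips a plural "s" as a stand-in for a real stemming algorithm.

```python
# Hypothetical stop word list, just large enough for the example query.
STOP_WORDS = {"i", "need", "about", "with", "and", "other"}


def naive_stem(word):
    """Very rough plural stripping; a placeholder for a real stemmer."""
    if word.endswith("s") and len(word) > 3:
        return word[:-1]
    return word


def preprocess(query):
    """Apply stop word elimination, then stemming, to a query string."""
    terms = [w for w in query.lower().split() if w not in STOP_WORDS]
    return [naive_stem(w) for w in terms]


query = "i need information about accidents with cars and other vehicles"
print(" ".join(preprocess(query)))
```

The meaning of the sentence plays no role here: the query is reduced to a bag of index terms.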

Searching Similar Documents

- It is often difficult to express the information need as a query
- An alternative search method is to search for documents that are similar to a given document d

Finding Similar Documents: Principle and Example

Find the most similar documents to d1.

- Principle: use a given document d as a query
- Compare all documents di with d
- Example (scalar product):
  - IDF * d1 * d2 = 0.83
  - IDF * d1 * d3 = 2.33
- The approach is the same as for a query:
  - same index
  - same ranking function

Index terms: accident, car, cause, crowd, die, drive, four, heavy, injur, more, morning, people, quarter, register, trucker, vehicle, vienna, yesterday

Table values (columns IDF, d1, d2, d3), as given: 0.5 1 1 1 1 1 0.5 1 1 1 0.33 1 2 1 0 0 2 0 0 1 1 0 0 0 0 2 0 0 1 1 0 1 0 0 2 0 0 1 1 0
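The two scalar products can be reproduced with a short sketch. The term vectors below are reconstructed from the texts of the example documents D1 to D3 and are an assumption, since the table on the slide is only partly legible; IDF is taken as 1 divided by the number of documents containing the term. The function name `similarity` is hypothetical.

```python
# IDF weights for terms occurring in more than one document (assumption:
# IDF = 1 / document frequency); all other terms have IDF 1.
IDF = {"accident": 0.5, "car": 0.5, "people": 0.5, "vienna": 1 / 3}

# Sparse term-frequency vectors, reconstructed from the example documents.
d1 = {"accident": 2, "car": 1, "die": 1, "heavy": 2, "morning": 1,
      "people": 1, "vienna": 1, "yesterday": 1}
d2 = {"car": 1, "more": 2, "quarter": 1, "register": 1, "vehicle": 1,
      "vienna": 1}
d3 = {"accident": 1, "cause": 1, "crowd": 1, "drive": 1, "four": 1,
      "injur": 1, "people": 2, "trucker": 1, "vienna": 1}


def similarity(a, b, idf, default_idf=1.0):
    """IDF-weighted scalar product over the terms shared by a and b."""
    shared = a.keys() & b.keys()
    return sum(idf.get(t, default_idf) * a[t] * b[t] for t in shared)


print(round(similarity(d1, d2, IDF), 2))
print(round(similarity(d1, d3, IDF), 2))
```

Only the shared terms contribute: car and vienna for d2, and accident, people and vienna for d3.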

The Vector Space Model

- The vector space model
  - is relatively simple and clear,
  - is efficient,
  - ranks documents,
  - can be applied to any collection of documents
- The model has many heuristic components and parameters, e.g.
  - determination of index terms
  - calculation of tf and idf
  - ranking function
- The best parameter setting depends on the document collection

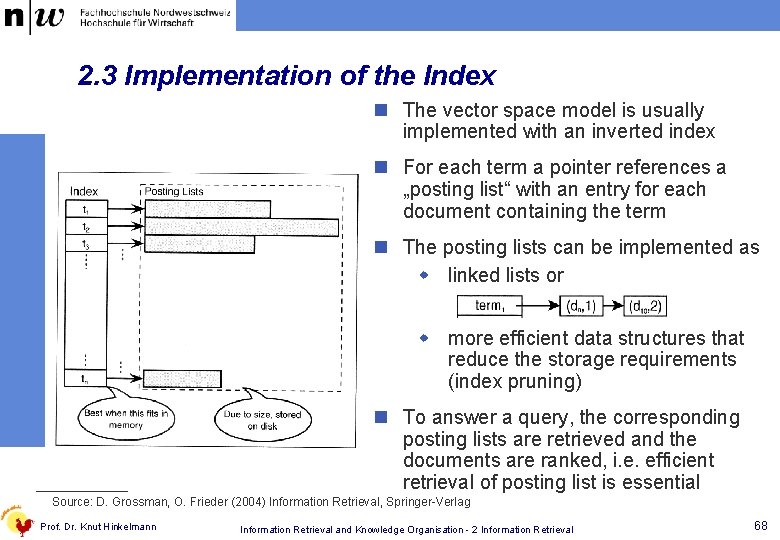

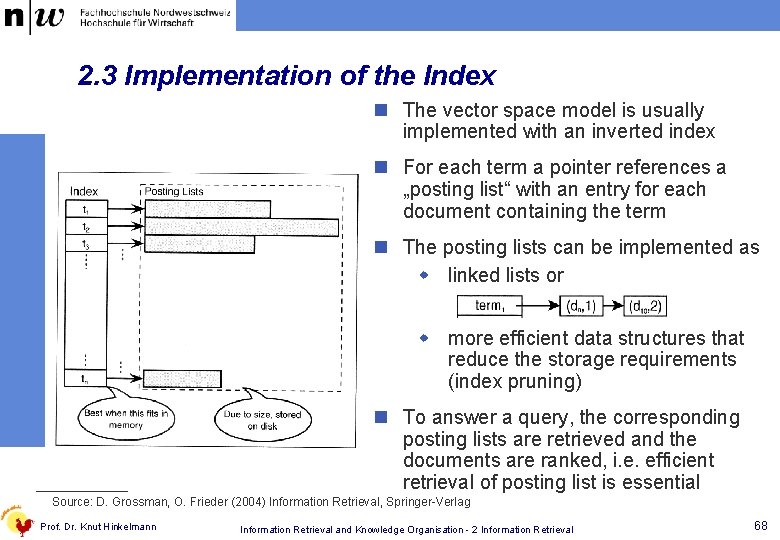

2.3 Implementation of the Index

- The vector space model is usually implemented with an inverted index
- For each term, a pointer references a "posting list" with an entry for each document containing the term
- The posting lists can be implemented as
  - linked lists or
  - more efficient data structures that reduce the storage requirements (index pruning)
- To answer a query, the corresponding posting lists are retrieved and the documents are ranked, i.e. efficient retrieval of posting lists is essential

Source: D. Grossman, O. Frieder (2004) Information Retrieval, Springer-Verlag
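A minimal sketch of an inverted index with posting lists follows. It assumes documents that are already reduced to term lists; the sample data, the function names and the simple term-frequency score are hypothetical and only illustrate how a query touches nothing but the posting lists of its own terms.

```python
from collections import Counter, defaultdict

# Hypothetical, already preprocessed documents (lists of index terms).
DOCS = {
    "d1": ["heavy", "accident", "car", "accident", "vienna"],
    "d2": ["more", "vehicle", "car", "vienna"],
    "d3": ["truck", "accident", "people", "vienna"],
}


def build_inverted_index(docs):
    """Map each term to a posting list of (doc_id, term_frequency) entries."""
    index = defaultdict(list)
    for doc_id, terms in docs.items():
        for term, tf in Counter(terms).items():
            index[term].append((doc_id, tf))
    return index


def rank(query_terms, index):
    """Score documents by summed term frequency (a stand-in for tf-idf)."""
    scores = defaultdict(int)
    for term in query_terms:
        for doc_id, tf in index.get(term, []):
            scores[doc_id] += tf
    # Highest score first; ties are ordered by document id.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


INDEX = build_inverted_index(DOCS)
print(INDEX["accident"])
print(rank(["accident", "car"], INDEX))
```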

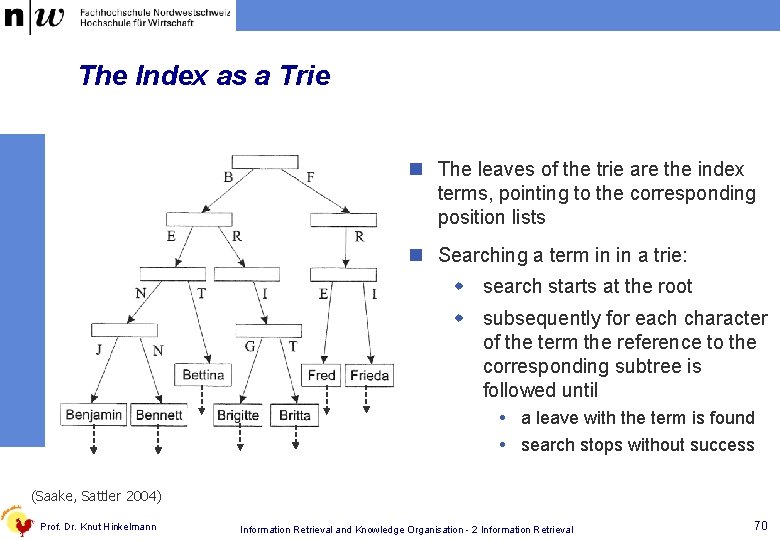

Implementing the Term Structure as a Trie

- Sequentially scanning the index for query terms/posting lists is inefficient
- A trie is a tree structure
  - each node is an array with one element for each character
  - each element contains a link to another node

Example: structure of a node in a trie *)

*) The characters and their order are identical for each node. Therefore they do not need to be stored explicitly.

Source: G. Saake, K.-U. Sattler: Algorithmen und Datenstrukturen – Eine Einführung mit Java. dpunkt Verlag 2004

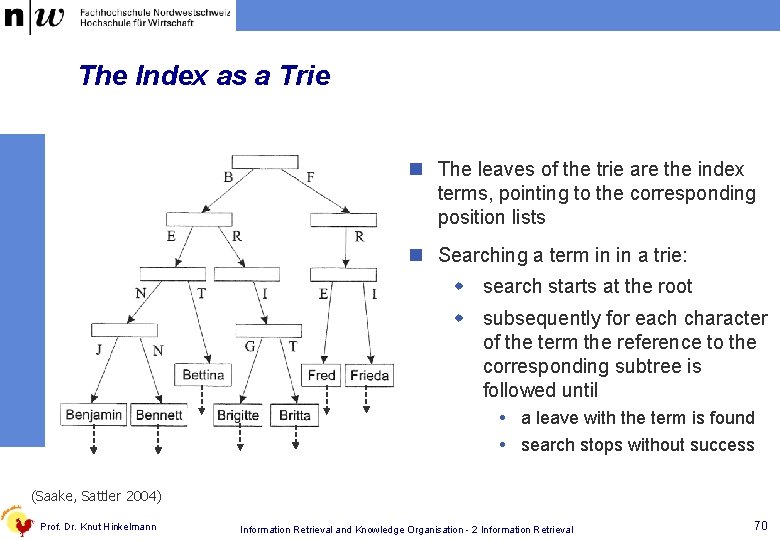

The Index as a Trie

- The leaves of the trie are the index terms, pointing to the corresponding position lists
- Searching for a term in a trie:
  - the search starts at the root
  - then, for each character of the term, the reference to the corresponding subtree is followed until
    - a leaf with the term is found, or
    - the search stops without success

(Saake, Sattler 2004)
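The search procedure can be sketched as below. This is a simplified illustration, assuming lower-case terms over the letters a to z. Unlike the figure, the sketch stores the posting list on the node where a term ends rather than in a separate leaf; the names `TrieNode`, `insert` and `search` are hypothetical.

```python
ALPHABET = "abcdefghijklmnopqrstuvwxyz"


class TrieNode:
    def __init__(self):
        # One slot per character; the character itself is implied by the
        # slot position and therefore not stored.
        self.children = [None] * len(ALPHABET)
        self.postings = None  # set only on nodes where an index term ends


def insert(root, term, postings):
    """Add a term (letters a-z only) with its posting list to the trie."""
    node = root
    for ch in term:
        i = ALPHABET.index(ch)
        if node.children[i] is None:
            node.children[i] = TrieNode()
        node = node.children[i]
    node.postings = postings


def search(root, term):
    """Follow one link per character; return the postings or None."""
    node = root
    for ch in term:
        i = ALPHABET.find(ch)
        if i < 0:
            return None  # character outside the alphabet
        node = node.children[i]
        if node is None:
            return None  # no subtree for this character: search fails
    return node.postings


root = TrieNode()
insert(root, "car", ["d1", "d2"])
insert(root, "crowd", ["d3"])
print(search(root, "car"), search(root, "cat"))
```

The cost of a lookup depends on the length of the term, not on the number of terms in the index.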

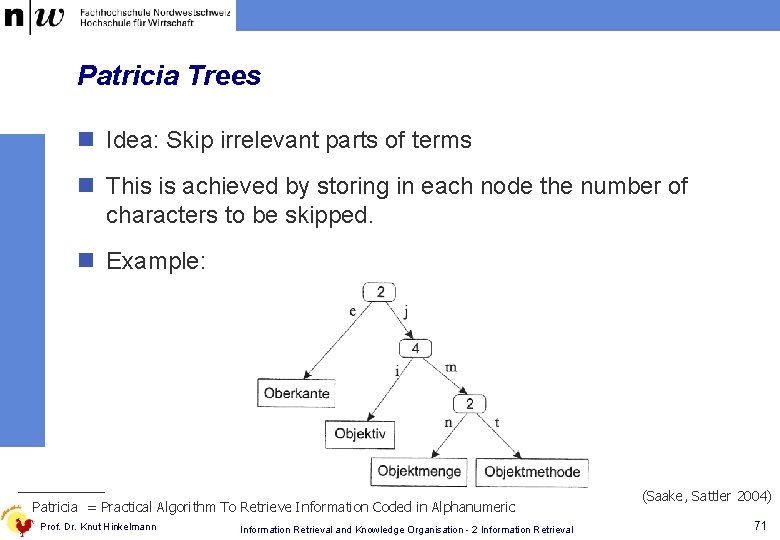

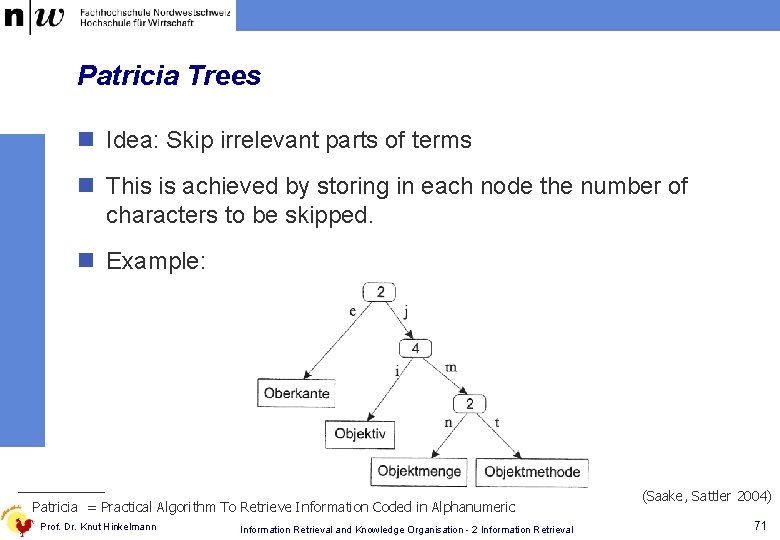

Patricia Trees

- Idea: skip irrelevant parts of terms
- This is achieved by storing in each node the number of characters to be skipped.
- Example: see figure

Patricia = Practical Algorithm To Retrieve Information Coded in Alphanumeric

(Saake, Sattler 2004)

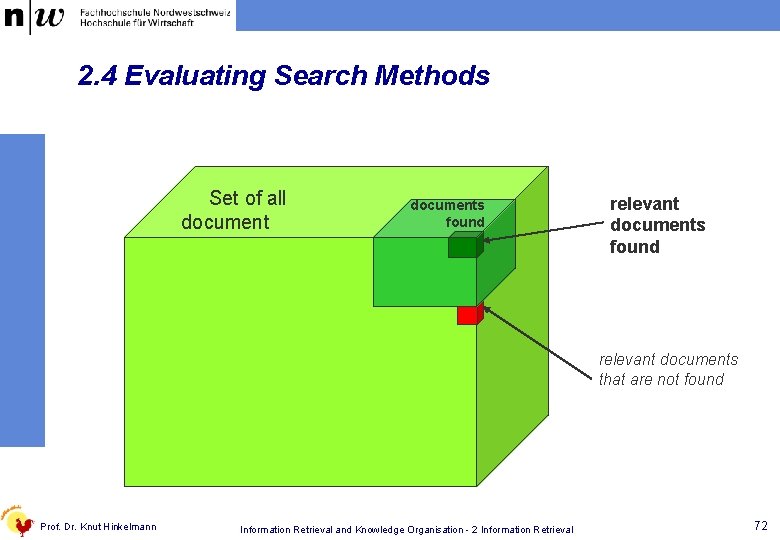

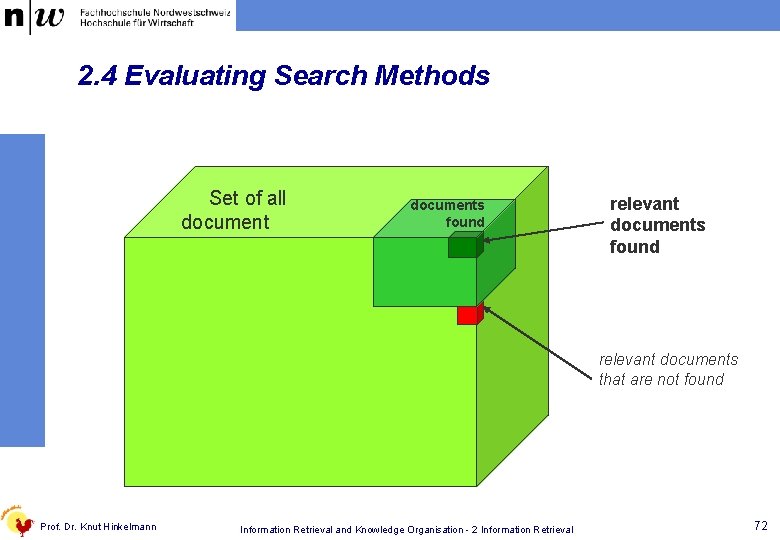

2.4 Evaluating Search Methods

Figure labels: Set of all documents; found; relevant documents that are not found

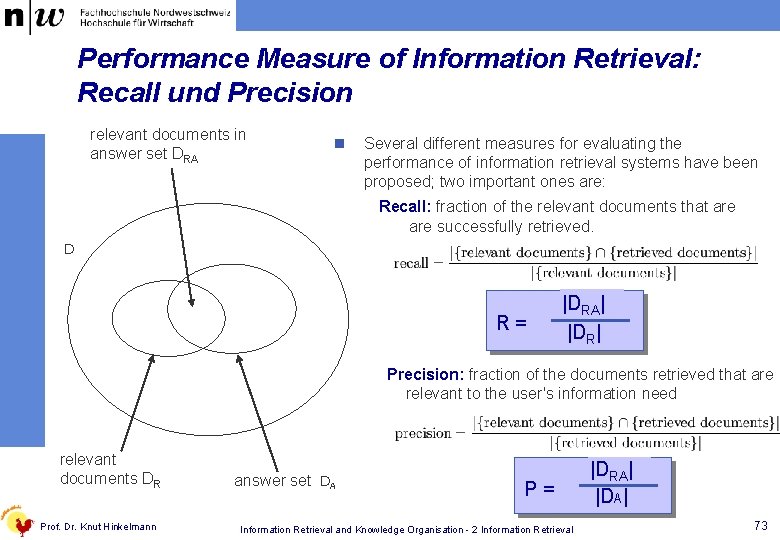

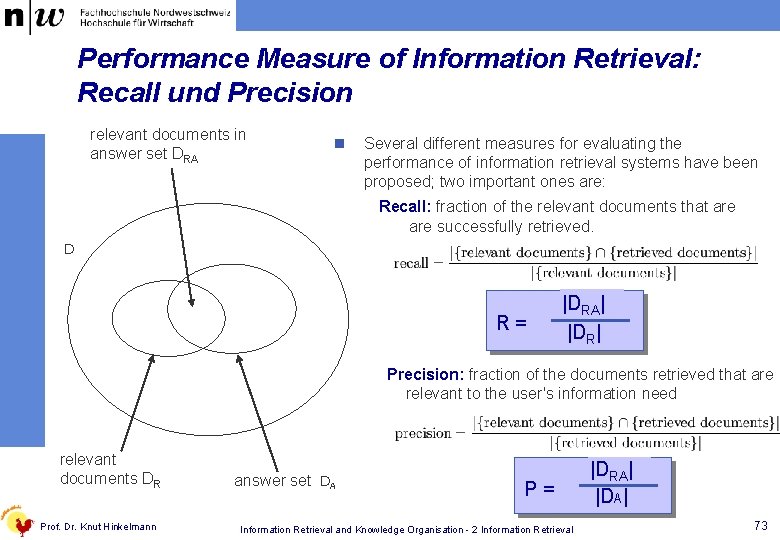

Performance Measures of Information Retrieval: Recall and Precision

- Several different measures for evaluating the performance of information retrieval systems have been proposed; two important ones are:
  - Recall: fraction of the relevant documents that are successfully retrieved

    $$R = \frac{|D_{RA}|}{|D_R|}$$

  - Precision: fraction of the retrieved documents that are relevant to the user's information need

    $$P = \frac{|D_{RA}|}{|D_A|}$$

Here $D_R$ is the set of relevant documents, $D_A$ is the answer set, and $D_{RA}$ is the set of relevant documents in the answer set.

F-Measure

- The F-measure is a mean of precision and recall
- In this version, precision and recall are equally weighted.
- The more general version allows giving preference to recall or precision
  - F2 weights recall twice as much as precision
  - F0.5 weights precision twice as much as recall
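The formulas themselves are not legible in this transcript. The definitions commonly used for the F-measure are given below as a hedged reconstruction, with P for precision, R for recall and a weighting parameter $\beta$ (the symbol $\beta$ is an assumption of this sketch).

$$
F = \frac{2 \cdot P \cdot R}{P + R}, \qquad
F_\beta = \frac{(1 + \beta^2) \cdot P \cdot R}{\beta^2 \cdot P + R}
$$

The equally weighted version is the harmonic mean of precision and recall and corresponds to $\beta = 1$; $\beta = 2$ and $\beta = 0.5$ give the F2 and F0.5 variants mentioned above.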

Computing Recall and Precision

- Evaluation: perform a predefined set of queries
  - The search engine delivers a ranked set of documents
  - Use the first X documents of the result list as the answer set
  - Compute recall and precision for the first X documents of the ranked result list
- How do you know which documents are relevant?
  1. A general reference set of documents can be used. For example, TREC (Text REtrieval Conference) is an annual event where large test collections in different domains are used to measure and compare the performance of information retrieval systems.
  2. For companies it is more important to evaluate information retrieval systems using their own documents:
     1. Collect a representative set of documents
     2. Specify queries and the associated relevant documents
     3. Evaluate search engines by computing recall and precision for the query results
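Computing both measures for the first X documents of a ranked result list can be sketched as follows. The document identifiers and the function name `recall_precision_at` are hypothetical; the set of relevant documents is assumed to come from a reference collection as described above.

```python
def recall_precision_at(ranked_results, relevant, x):
    """Recall and precision for the first x documents of a ranked list."""
    answer_set = set(ranked_results[:x])   # D_A: the answer set
    hits = answer_set & set(relevant)      # D_RA: relevant documents in it
    recall = len(hits) / len(relevant) if relevant else 0.0
    precision = len(hits) / len(answer_set) if answer_set else 0.0
    return recall, precision


# Hypothetical ranked result list and relevance judgements.
ranked = ["d3", "d1", "d5", "d2", "d4"]
relevant = {"d1", "d2", "d6"}

for x in (3, 5):
    print(x, recall_precision_at(ranked, relevant, x))
```

Increasing X typically raises recall while precision tends to drop, so both values should be reported together.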

2.5 User Adaptation

Take into account information about a user to filter documents that are particularly relevant to this user.

- Relevance Feedback
  - Retrieval in multiple passes; in each pass the user refines the query based on the results of previous queries
- Explicit User Profiles
  - subscription
  - user-specific weights of terms
- Social Filtering
  - Similar users get similar documents

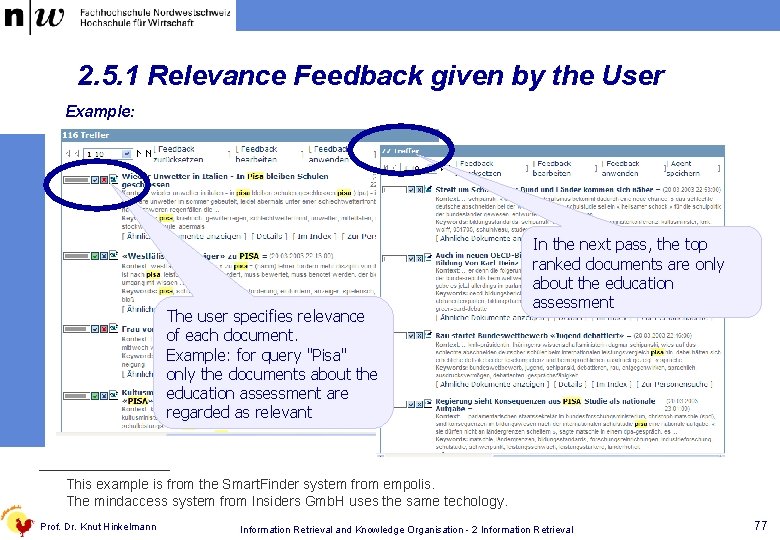

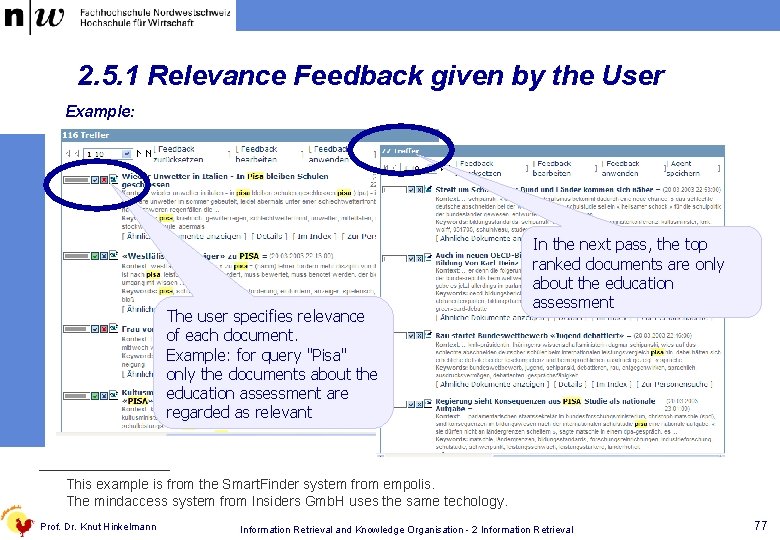

2.5.1 Relevance Feedback given by the User

- The user specifies the relevance of each document.
- Example: for the query "Pisa", only the documents about the education assessment are regarded as relevant
- In the next pass, the top-ranked documents are only about the education assessment

This example is from the SmartFinder system from empolis. The mindaccess system from Insiders GmbH uses the same technology.

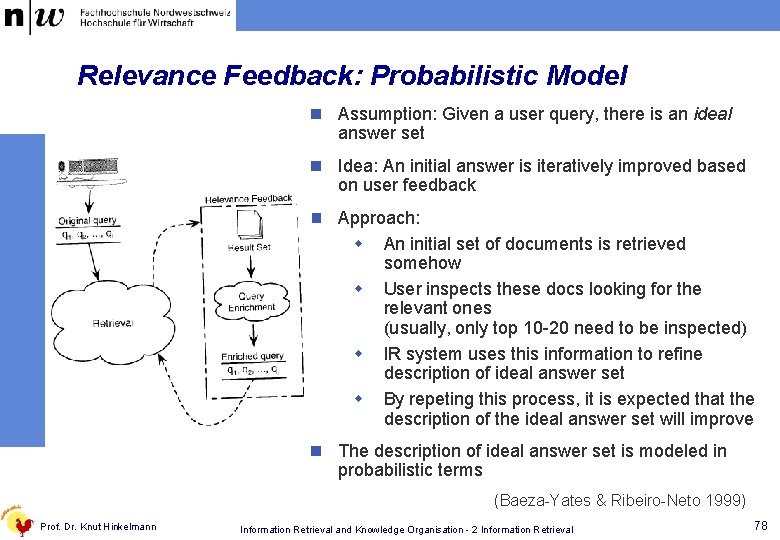

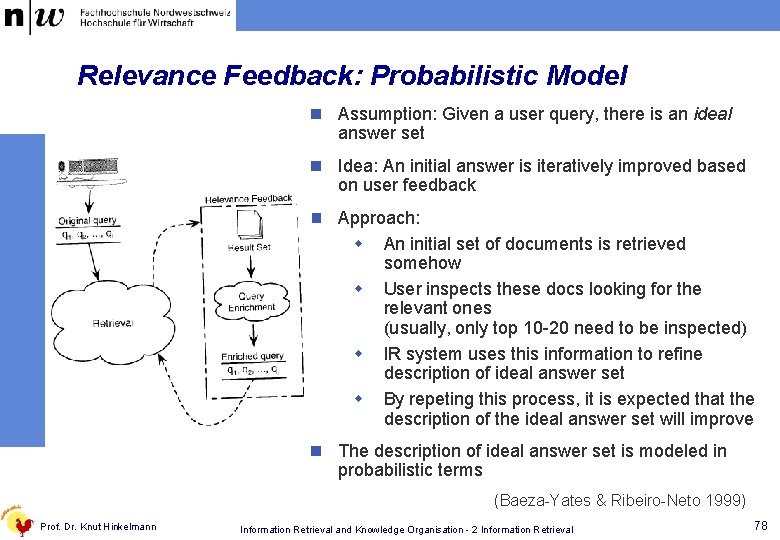

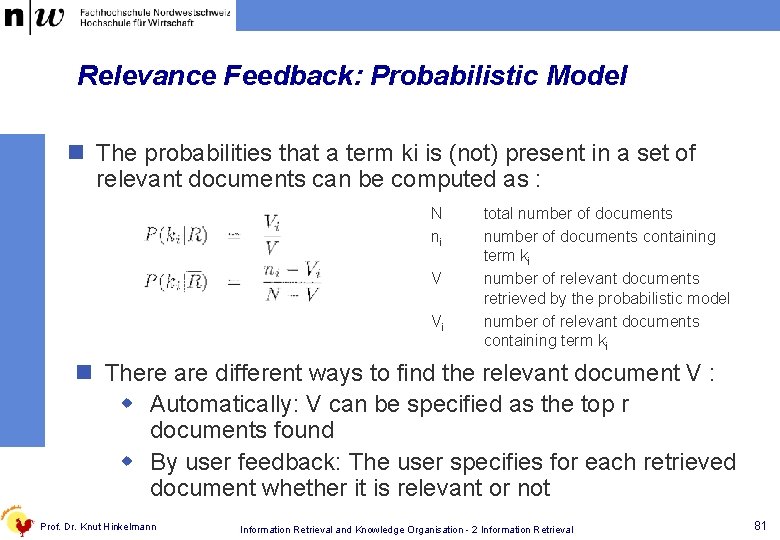

Relevance Feedback: Probabilistic Model

- Assumption: given a user query, there is an ideal answer set
- Idea: an initial answer is iteratively improved based on user feedback
- Approach:
  - An initial set of documents is retrieved somehow
  - The user inspects these documents looking for the relevant ones (usually, only the top 10-20 need to be inspected)
  - The IR system uses this information to refine the description of the ideal answer set
  - By repeating this process, it is expected that the description of the ideal answer set will improve
- The description of the ideal answer set is modeled in probabilistic terms

(Baeza-Yates & Ribeiro-Neto 1999)

Probabilistic Ranking

- Given a user query q and a document dj, the probabilistic model tries to estimate the probability that the user will find the document dj interesting (i.e., relevant).
- The model assumes that this probability of relevance depends on the query and the document representations only.
- The probabilistic ranking is defined using:
  - binary weights, $w_{ij} \in \{0, 1\}$
  - the similarity of document dj to the query q
  - the probability that document dj is relevant
  - the probability that document dj is not relevant
  - the document vector of dj

(Baeza-Yates & Ribeiro-Neto 1999)

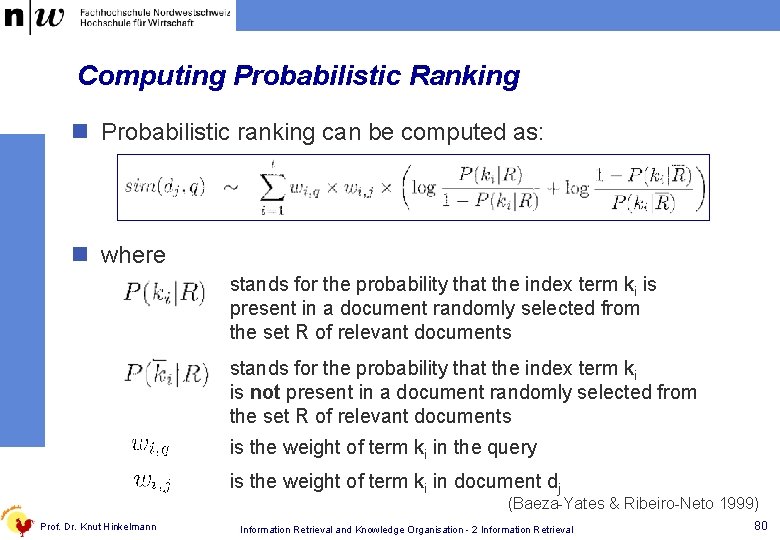

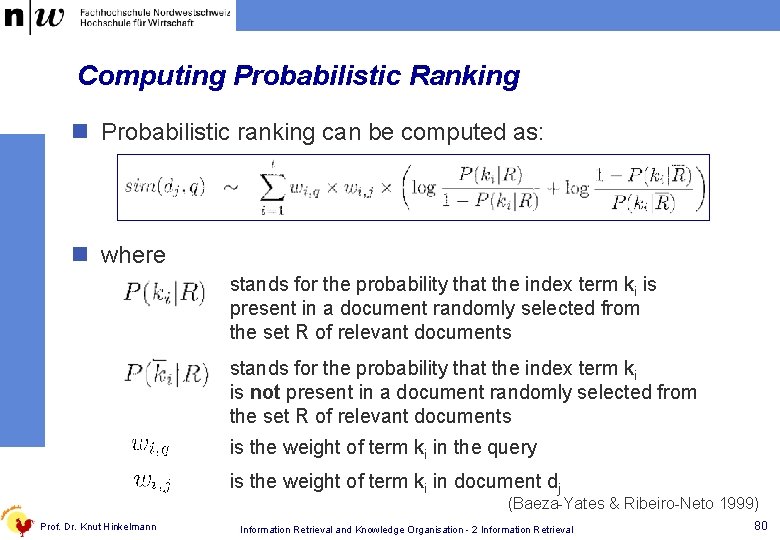

Computing Probabilistic Ranking

- The probabilistic ranking can be computed from:
  - the probability that the index term ki is present in a document randomly selected from the set R of relevant documents
  - the probability that the index term ki is not present in a document randomly selected from the set R of relevant documents
  - the weight of term ki in the query
  - the weight of term ki in document dj

(Baeza-Yates & Ribeiro-Neto 1999)
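The ranking formulas are not legible in this transcript. As a hedged reconstruction, the usual formulation of this model (the binary independence model as presented by Baeza-Yates & Ribeiro-Neto) is given below. The symbols $R$ for the set of relevant documents, $\bar{R}$ for its complement, $\vec{d_j}$ for the document vector and $t$ for the number of index terms are assumptions of this sketch.

$$
sim(d_j, q) = \frac{P(R \mid \vec{d_j})}{P(\bar{R} \mid \vec{d_j})}
$$

$$
sim(d_j, q) \sim \sum_{i=1}^{t} w_{iq} \, w_{ij} \left( \log \frac{P(k_i \mid R)}{1 - P(k_i \mid R)} + \log \frac{1 - P(k_i \mid \bar{R})}{P(k_i \mid \bar{R})} \right)
$$

Here $1 - P(k_i \mid R)$ is the probability that the term $k_i$ is not present in a randomly selected relevant document.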

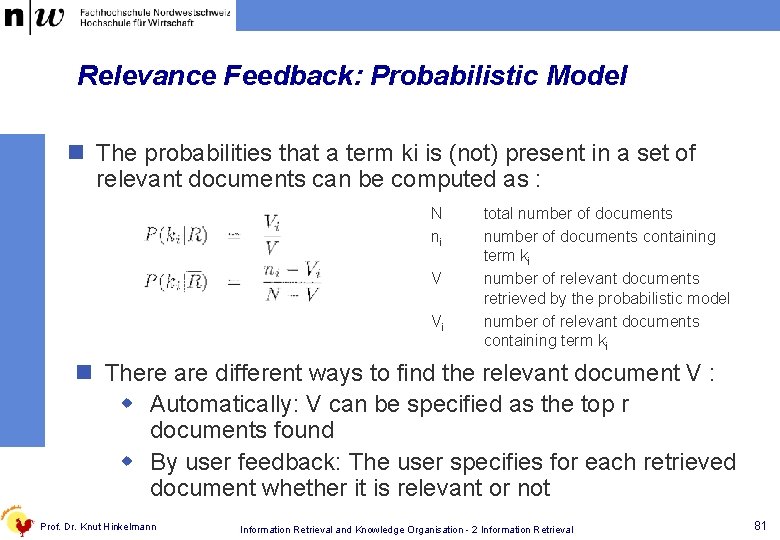

Relevance Feedback: Probabilistic Model

- The probabilities that a term ki is (not) present in a set of relevant documents can be computed from the following counts:
  - N: total number of documents
  - ni: number of documents containing term ki
  - V: number of relevant documents retrieved by the probabilistic model
  - Vi: number of relevant documents containing term ki
- There are different ways to find the relevant documents V:
  - Automatically: V can be specified as the top r documents found
  - By user feedback: the user specifies for each retrieved document whether it is relevant or not
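The estimation formulas are not legible in this transcript. The sketch below uses the estimates commonly given for this model, Vi / V for relevant documents and (ni - Vi) / (N - V) for the remaining documents; treat them as an assumption rather than as the formulas on the slide. The function name and the sample counts are hypothetical.

```python
def estimate_term_probabilities(N, n_i, V, V_i):
    """Estimate P(k_i | R) and P(k_i | not R) from document counts.

    N:   total number of documents
    n_i: number of documents containing term k_i
    V:   number of documents currently regarded as relevant
    V_i: number of those documents that contain term k_i
    """
    p_relevant = V_i / V                    # share of relevant docs with k_i
    p_non_relevant = (n_i - V_i) / (N - V)  # share of the other docs with k_i
    return p_relevant, p_non_relevant


# Hypothetical counts: 100 documents, 20 contain the term, 10 are regarded
# as relevant and 6 of those contain the term.
print(estimate_term_probabilities(N=100, n_i=20, V=10, V_i=6))
```

For very small V these raw ratios can become 0 or 1, which is why smoothed variants of the estimates are often used in practice.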

2.5.2 Explicit User Profiles

Idea: use knowledge about the user to provide information that is particularly relevant for him/her

- users specify topics of interest as a set of terms
- these terms represent the user profile
- documents containing the terms of the user profile are preferred

Figure labels: information need/preferences; document representation; profile acquisition; user profile; index; ranking function

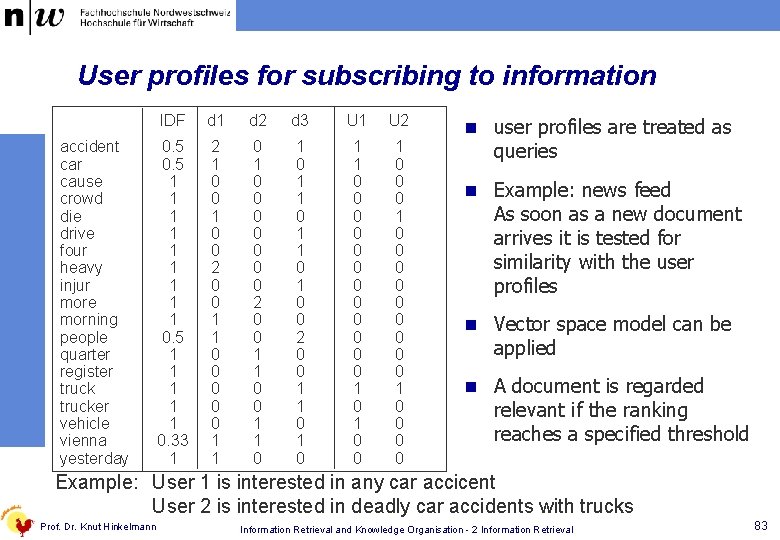

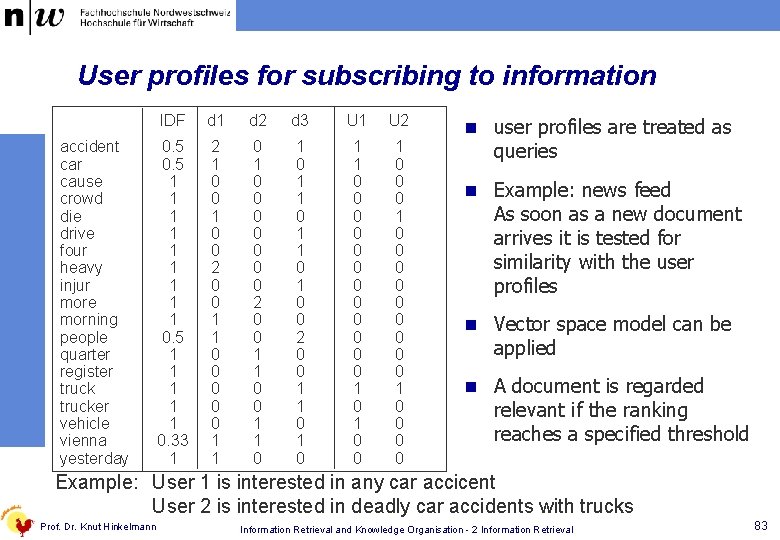

User Profiles for Subscribing to Information

- User profiles are treated as queries
- Example: news feed. As soon as a new document arrives, it is tested for similarity with the user profiles
- The vector space model can be applied
- A document is regarded as relevant if the ranking reaches a specified threshold

Example:
- User 1 is interested in any car accident
- User 2 is interested in deadly car accidents with trucks

Index terms: accident, car, cause, crowd, die, drive, four, heavy, injur, more, morning, people, quarter, register, trucker, vehicle, vienna, yesterday

Table values (columns IDF, d1, d2, d3, U1, U2), as given: 0.5 1 1 1 1 1 0.5 1 1 1 0.33 1 2 1 0 0 2 0 0 1 1 0 0 0 0 2 0 0 1 1 0 1 0 0 2 0 0 1 1 0 0 0 1 0 0 0 0 0 0 1 0 0
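Matching an incoming document against stored profiles can be sketched as follows. The profile vectors are an interpretation of the two textual descriptions (any car accident; deadly car accidents with trucks), and the threshold of 1.0 is a hypothetical value; neither is taken from the table on the slide.

```python
# IDF weights for terms occurring in several documents (assumption:
# IDF = 1 / document frequency); all other terms have IDF 1.
IDF = {"accident": 0.5, "car": 0.5, "people": 0.5, "vienna": 1 / 3}

# Binary user profiles, interpreted from the textual descriptions.
PROFILES = {
    "U1": {"accident": 1, "car": 1},
    "U2": {"accident": 1, "car": 1, "die": 1, "trucker": 1},
}

THRESHOLD = 1.0  # hypothetical relevance threshold


def score(doc, profile):
    """IDF-weighted scalar product of a document and a profile."""
    return sum(IDF.get(t, 1.0) * tf * profile[t]
               for t, tf in doc.items() if t in profile)


def subscribers(doc):
    """Users whose profile score for the document reaches the threshold."""
    return [user for user, profile in PROFILES.items()
            if score(doc, profile) >= THRESHOLD]


# Term vectors reconstructed from the example documents D1 and D2.
d1 = {"accident": 2, "car": 1, "die": 1, "heavy": 2, "morning": 1,
      "people": 1, "vienna": 1, "yesterday": 1}
d2 = {"car": 1, "more": 2, "quarter": 1, "register": 1, "vehicle": 1,
      "vienna": 1}

print(subscribers(d1), subscribers(d2))
```

The profile is used exactly like a query; only the direction is reversed, because the documents arrive and the profiles are stored.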

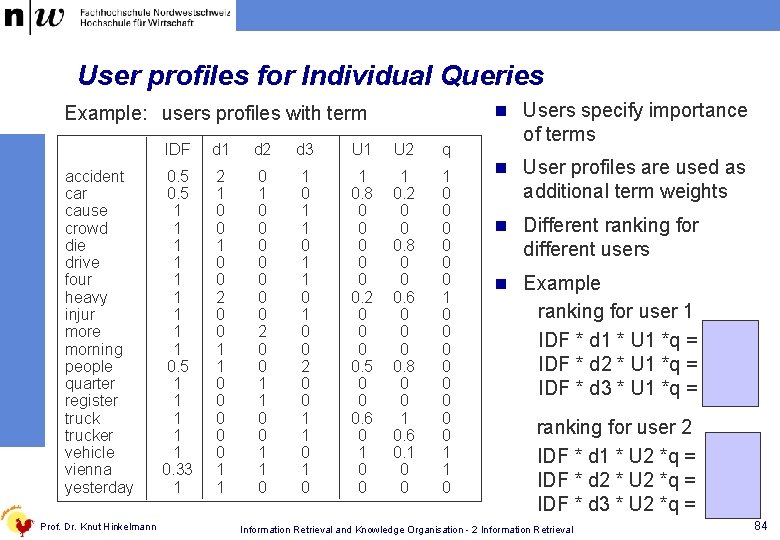

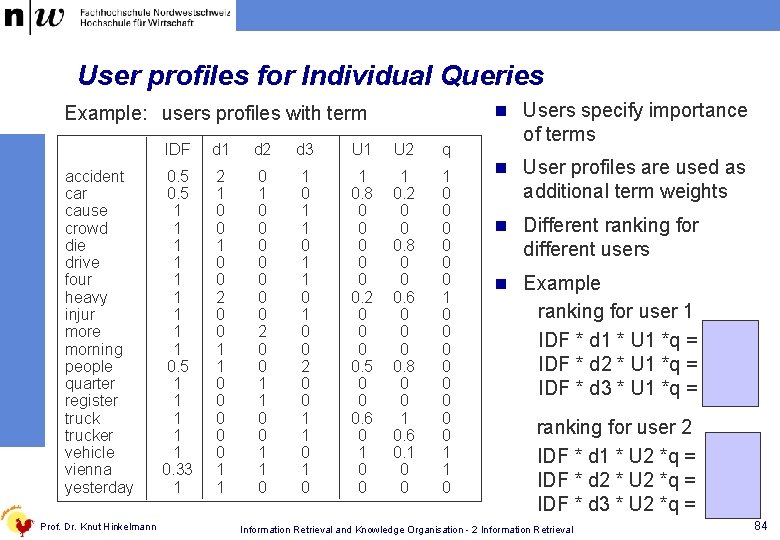

User Profiles for Individual Queries

- Users specify the importance of terms
- User profiles are used as additional term weights
- Different ranking for different users
- Example: user profiles with term weights
  - Ranking for user 1
    - IDF * d1 * U1 * q = 1.4
    - IDF * d2 * U1 * q = 1
    - IDF * d3 * U1 * q = 0.5
  - Ranking for user 2
    - IDF * d1 * U2 * q = 2.2
    - IDF * d2 * U2 * q = 0.1
    - IDF * d3 * U2 * q = 0.5

Index terms: accident, car, cause, crowd, die, drive, four, heavy, injur, more, morning, people, quarter, register, trucker, vehicle, vienna, yesterday

Table values (columns IDF, d1, d2, d3, U1, U2, q), as given: 0.5 1 1 1 1 1 0.5 1 1 1 0.33 1 2 1 0 0 2 0 0 1 1 0 0 0 0 2 0 0 1 1 0 1 0 0 2 0 0 1 1 0 1 0.8 0 0 0.2 0 0.5 0 0 0.6 0 1 0.2 0 0 0.8 0 0 0.6 0 0.8 0 0 1 0.6 0.1 0 0 0 0 1 1 0

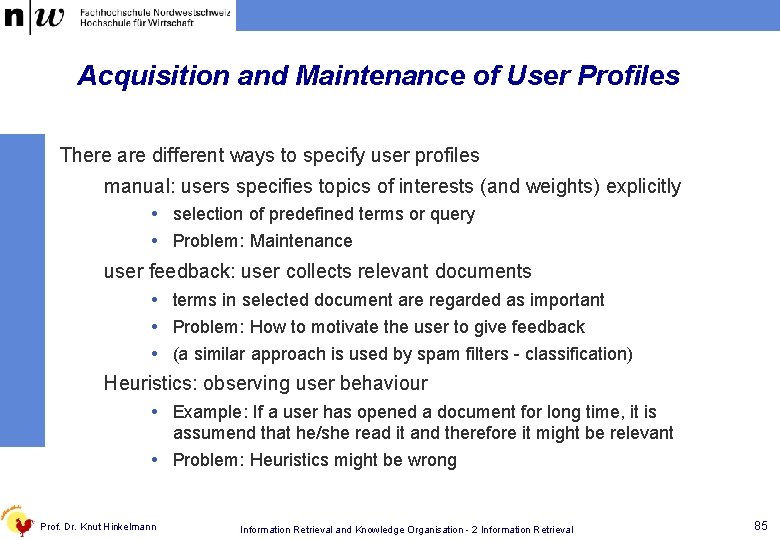

Acquisition and Maintenance of User Profiles

There are different ways to specify user profiles:

- Manual: the user specifies topics of interest (and weights) explicitly
  - selection of predefined terms or query
  - Problem: maintenance
- User feedback: the user collects relevant documents
  - terms in the selected documents are regarded as important
  - Problem: how to motivate the user to give feedback (a similar approach is used by spam filters: classification)
- Heuristics: observing user behaviour
  - Example: if a user has had a document open for a long time, it is assumed that he/she read it and that it might therefore be relevant
  - Problem: heuristics might be wrong

Social Filtering

- Idea: information is relevant if other users who showed similar behaviour regarded the information as relevant
  - Relevance is specified by the users
  - User profiles are compared
- Example: a simple variant can be found at Amazon
  - purchases of books and CDs are stored
  - "people who bought this book also bought …"
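A very simple "also bought" variant can be sketched by counting co-purchases. This is an illustrative sketch with hypothetical purchase data; it is not a description of how any real shop computes its recommendations.

```python
from collections import Counter

# Hypothetical purchase histories: user -> set of purchased items.
PURCHASES = {
    "alice": {"book_a", "book_b", "cd_x"},
    "bob": {"book_a", "book_b"},
    "carol": {"book_a", "cd_y"},
}


def also_bought(item, purchases, top=3):
    """Items most often bought by users who also bought the given item."""
    counts = Counter()
    for basket in purchases.values():
        if item in basket:
            # Count every other item in the same user's purchases.
            counts.update(basket - {item})
    return [other for other, _ in counts.most_common(top)]


print(also_bought("book_a", PURCHASES))
```

Here the stored purchases play the role of the user profiles, and users count as similar when their purchases overlap.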