2D rendering, Szirmay-Kalos László



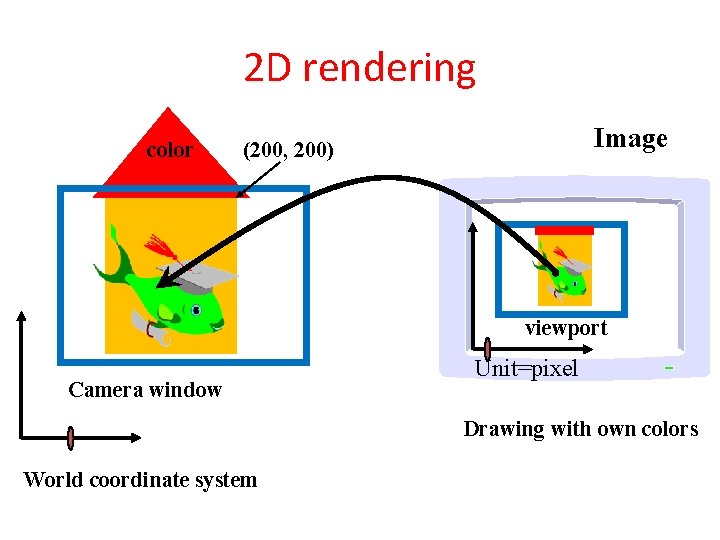

2D rendering. Figure: objects drawn with their own colors in the world coordinate system; the camera window is mapped to the viewport of the image (unit = pixel).

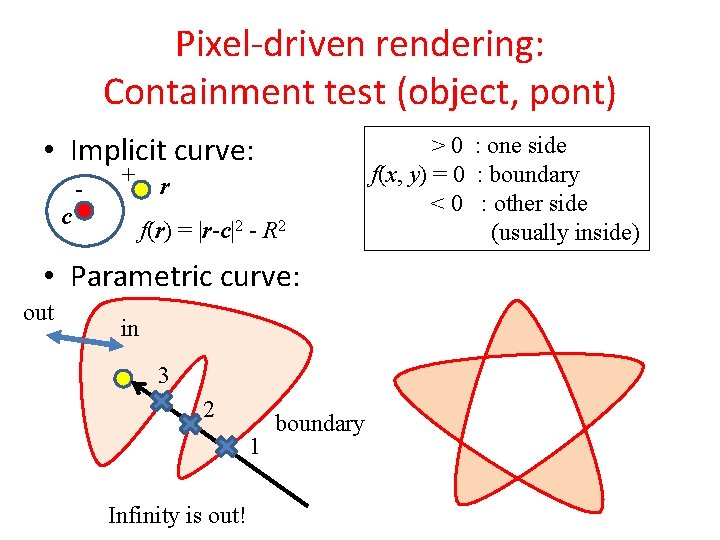

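Pixel-driven rendering: containment test (object, point)

- Implicit curve $f(x, y) = 0$: $f(x, y) > 0$ on one side, $f(x, y) = 0$ on the boundary, $f(x, y) < 0$ on the other side (usually inside). Example, a circle of center $\mathbf{c}$ and radius $R$: $f(\mathbf{r}) = |\mathbf{r}-\mathbf{c}|^2 - R^2$.
- Parametric curve: cast a ray from the point and count its intersections with the boundary. Infinity is out, so an odd count means inside and an even count means outside.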
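Once a parametric boundary has been vectorized into a closed polygon, the "infinity is out" rule can be sketched as a crossing-number (even-odd) test: a horizontal ray is cast from the point towards +x and every crossed edge toggles the result. This is a minimal sketch; the `Vec2` type and the `InPolygon` name are hypothetical stand-ins, not the course framework's API.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical minimal 2D vector type for this sketch.
struct Vec2 { double x, y; };

// Crossing-number containment test for a closed polygon.
// A horizontal ray is cast from r towards +infinity; each polygon edge it
// crosses toggles the result. Infinity is "out", so an odd count means inside.
bool InPolygon(const std::vector<Vec2>& poly, Vec2 r) {
    bool inside = false;
    std::size_t n = poly.size();
    for (std::size_t i = 0, j = n - 1; i < n; j = i++) {
        const Vec2& a = poly[i];
        const Vec2& b = poly[j];
        // Edge a-b straddles the line y = r.y (half-open rule, so a vertex
        // lying exactly on the ray is not counted twice).
        if ((a.y > r.y) != (b.y > r.y)) {
            // x coordinate where the edge meets the line y = r.y
            double x = a.x + (r.y - a.y) * (b.x - a.x) / (b.y - a.y);
            if (x > r.x) inside = !inside;  // crossing lies on the ray
        }
    }
    return inside;
}
```

The same function works for concave polygons, since only the parity of the crossings matters.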

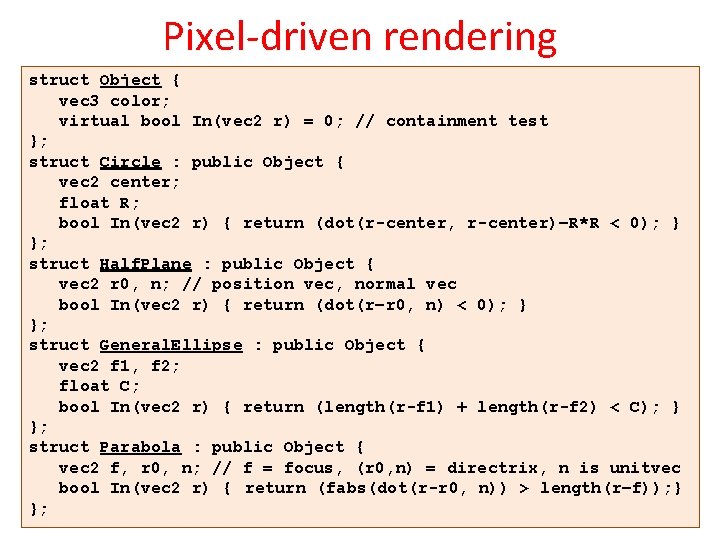

Pixel-driven rendering: objects with containment tests

```cpp
#include <cmath>
// vec2, vec3, dot() and length() are assumed to come from the course's vector math header.

struct Object {
    vec3 color;
    virtual bool In(vec2 r) = 0;  // containment test
    virtual ~Object() = default;
};

struct Circle : public Object {
    vec2 center;
    float R;
    bool In(vec2 r) override { return dot(r - center, r - center) - R * R < 0; }
};

struct HalfPlane : public Object {
    vec2 r0, n;  // position vector, normal vector
    bool In(vec2 r) override { return dot(r - r0, n) < 0; }
};

struct GeneralEllipse : public Object {
    vec2 f1, f2;  // foci
    float C;      // sum of the distances to the foci on the boundary
    bool In(vec2 r) override { return length(r - f1) + length(r - f2) < C; }
};

struct Parabola : public Object {
    vec2 f, r0, n;  // f = focus, (r0, n) = directrix, n is a unit vector
    bool In(vec2 r) override { return std::fabs(dot(r - r0, n)) > length(r - f); }
};
```

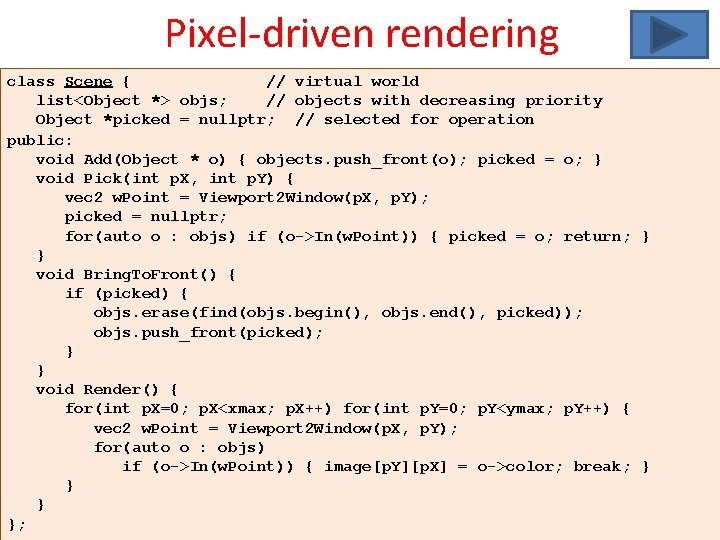

Pixel-driven rendering: the scene

```cpp
#include <algorithm>
#include <list>
// Viewport2Window(), xmax, ymax and image[][] are provided by the surrounding program.

class Scene {                    // virtual world
    std::list<Object*> objs;     // objects with decreasing priority
    Object* picked = nullptr;    // selected for operation
public:
    void Add(Object* o) { objs.push_front(o); picked = o; }
    void Pick(int pX, int pY) {
        vec2 wPoint = Viewport2Window(pX, pY);
        picked = nullptr;
        for (auto o : objs)
            if (o->In(wPoint)) { picked = o; return; }
    }
    void BringToFront() {
        if (picked) {
            objs.erase(std::find(objs.begin(), objs.end(), picked));
            objs.push_front(picked);
        }
    }
    void Render() {
        for (int pX = 0; pX < xmax; pX++)
            for (int pY = 0; pY < ymax; pY++) {
                vec2 wPoint = Viewport2Window(pX, pY);
                for (auto o : objs)  // the first containing object wins
                    if (o->In(wPoint)) { image[pY][pX] = o->color; break; }
            }
    }
};
```

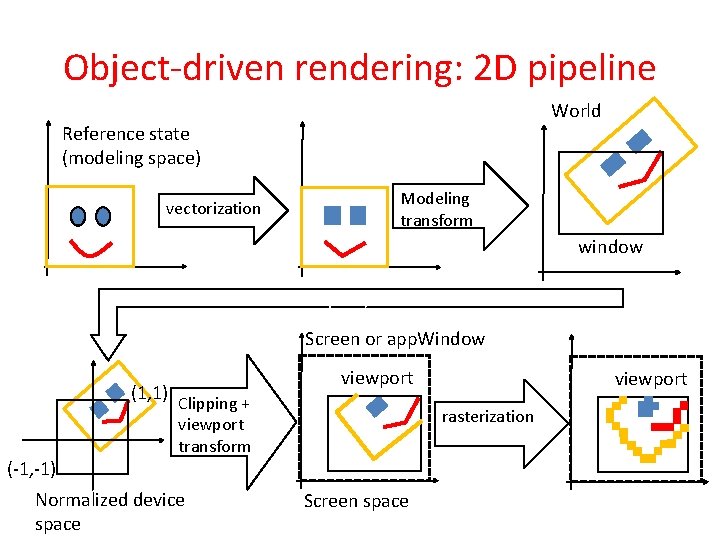

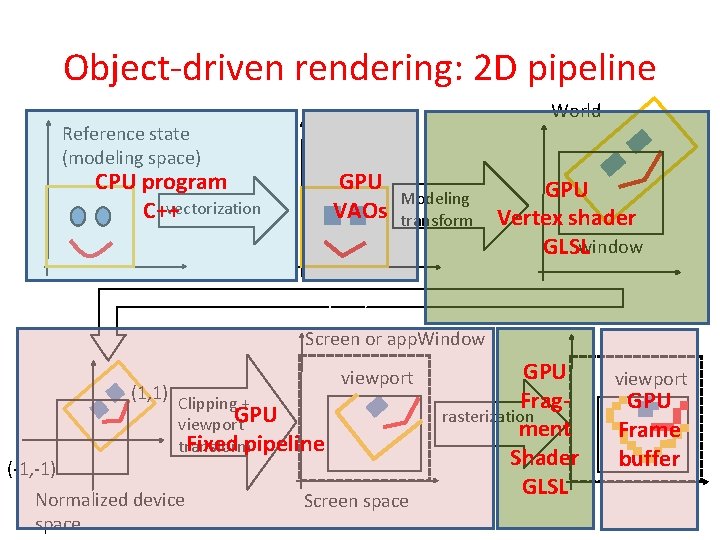

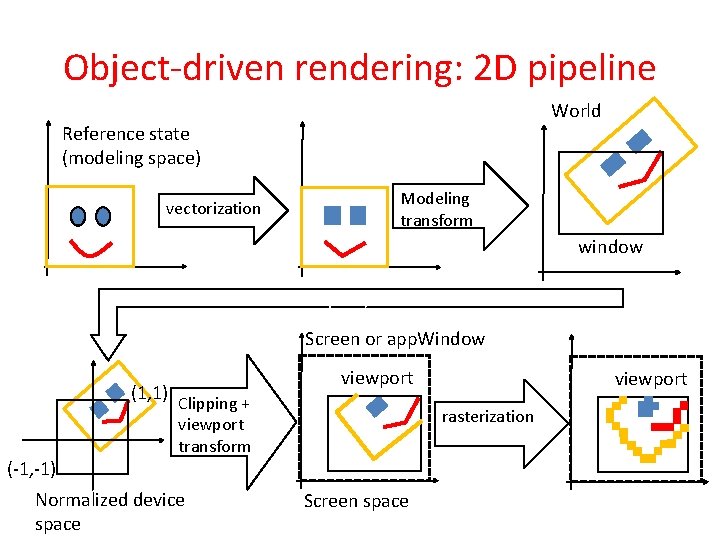

Object-driven rendering: the 2D pipeline

reference state (modeling space) → vectorization → modeling transform → world (camera window) → projection to the screen → normalized device space, the square from (-1, -1) to (1, 1) → clipping → viewport transform → screen space (viewport of the screen or application window) → rasterization

Object-driven rendering: the 2D pipeline on the CPU and the GPU

- CPU program (C++): reference state (modeling space), vectorization, upload into GPU VAOs.
- GPU vertex shader (GLSL): modeling transform to the world, projection of the camera window to the screen (normalized device space).
- GPU fixed pipeline: clipping to the square from (-1, -1) to (1, 1), viewport transform to screen space, rasterization.
- GPU fragment shader (GLSL): pixel color, written into the GPU frame buffer (viewport of the screen or application window).

![Vectorization of r(t), t in [0, 1]](https://slidetodoc.com/presentation_image/5ef7b139072c47beaddde777c950e47a/image-8.jpg)

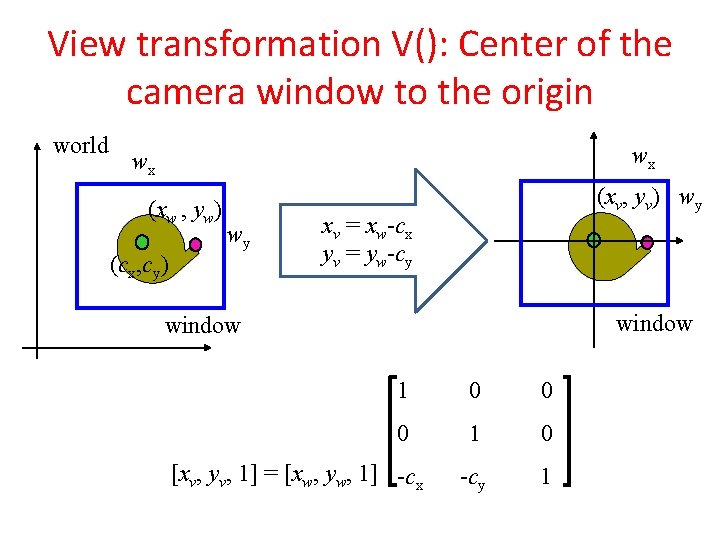

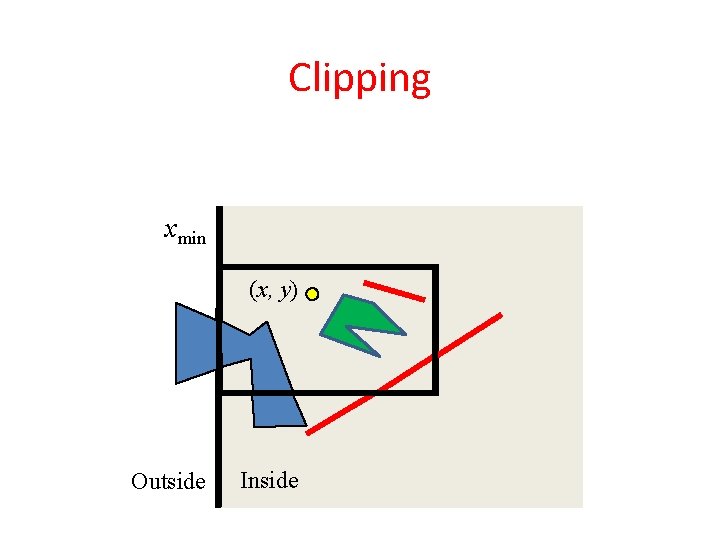

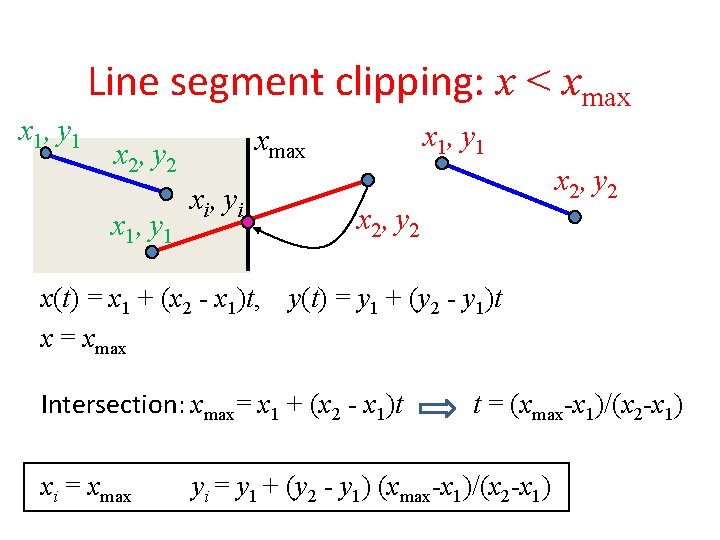

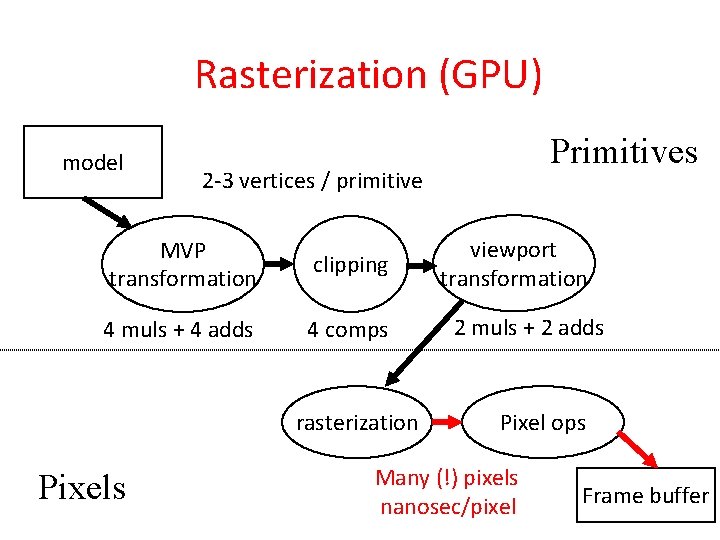

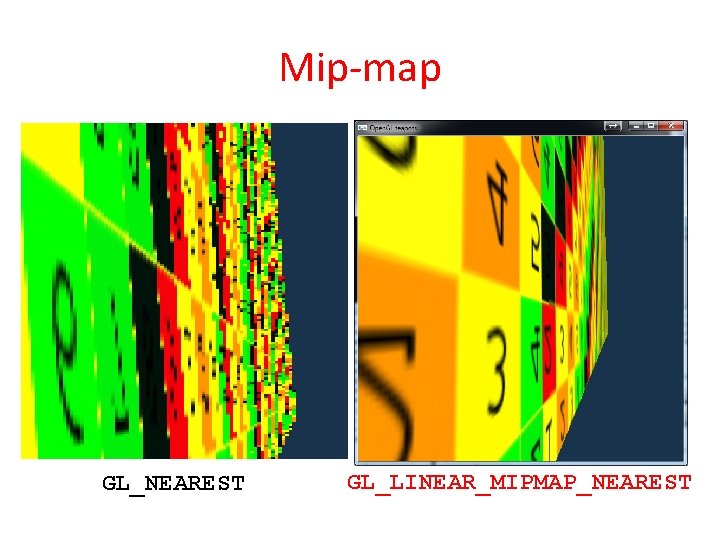

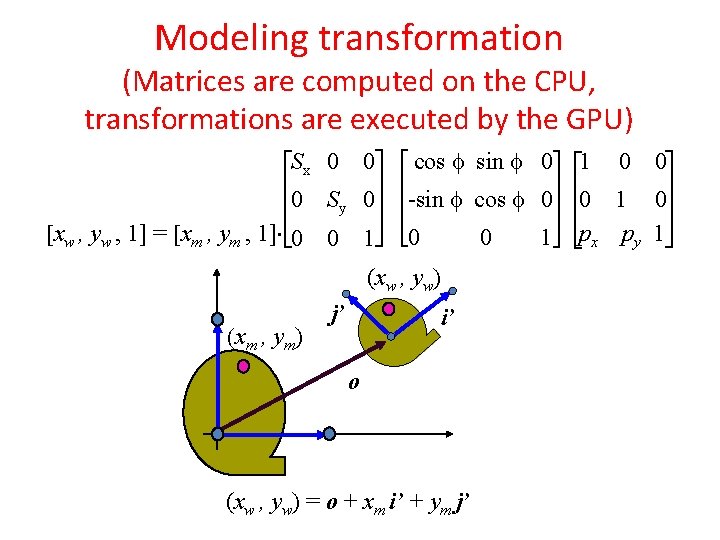

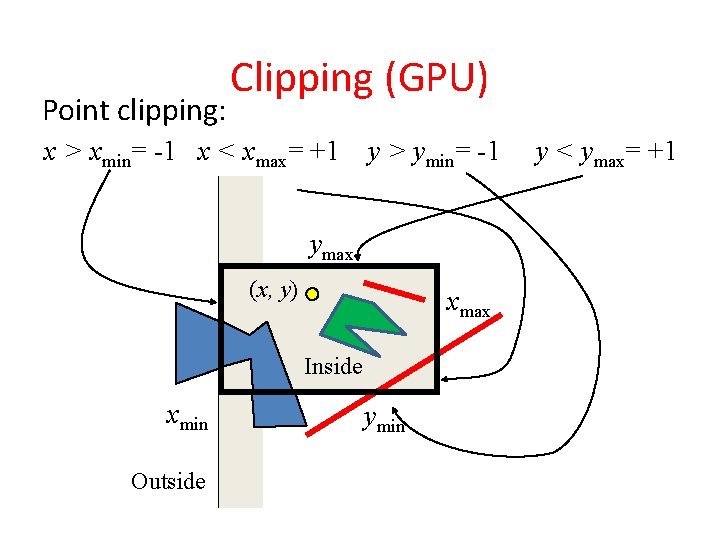

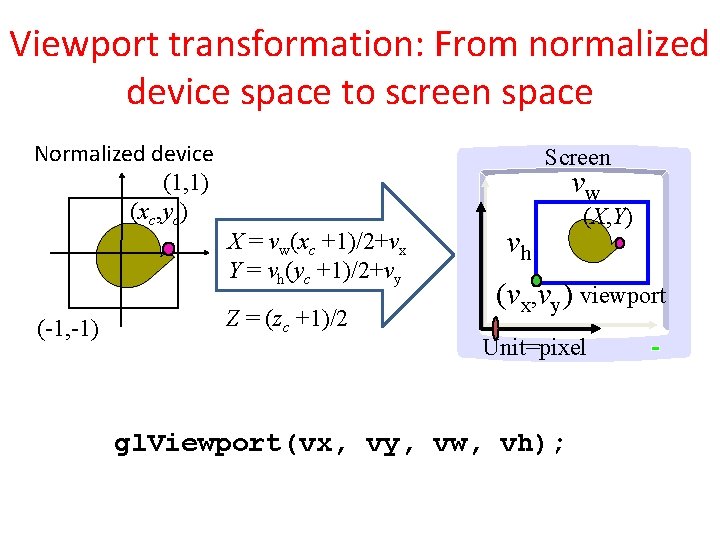

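Vectorization: the curve $\mathbf{r}(t)$, $t \in [0, 1]$, is sampled at $t_1 = 0$, $t_2 = 1/(n-1)$, …, $t_n = 1$, giving the points $\mathbf{r}_1 = \mathbf{r}(0)$, $\mathbf{r}_2 = \mathbf{r}(t_2)$, …, $\mathbf{r}_n = \mathbf{r}(1)$.

- Curve → line strip.
- Region boundary → line loop = polygon.
- For hardware support: line segments and triangles.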
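The sampling step can be sketched as follows, assuming a hypothetical `Vectorize` helper that receives the curve as a `std::function` and a stand-in `Vec2` type; the circle is just an example curve.

```cpp
#include <cmath>
#include <functional>
#include <vector>

// Hypothetical minimal 2D vector type for this sketch.
struct Vec2 { double x, y; };

// Samples r(t), t in [0, 1], at n uniformly spaced parameters
// t_i = (i - 1)/(n - 1), producing the line strip r_1 = r(0), ..., r_n = r(1).
std::vector<Vec2> Vectorize(const std::function<Vec2(double)>& r, int n) {
    std::vector<Vec2> pts;
    for (int k = 0; k < n; k++) {                 // k = i - 1
        double t = (n > 1) ? double(k) / (n - 1) : 0.0;
        pts.push_back(r(t));
    }
    return pts;
}

// Example curve: circle of center c and radius R, one full turn for t in [0, 1].
// As a region boundary r(1) == r(0), so the last point can be dropped and the
// rest drawn as a line loop (polygon).
Vec2 Circle(double t, Vec2 c, double R) {
    const double kPi = 3.14159265358979323846;
    return {c.x + R * std::cos(2 * kPi * t), c.y + R * std::sin(2 * kPi * t)};
}
```

For instance, `Vectorize([](double t) { return Circle(t, {0, 0}, 1); }, 5)` returns the points at angles 0, 90, 180, 270 and 360 degrees.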

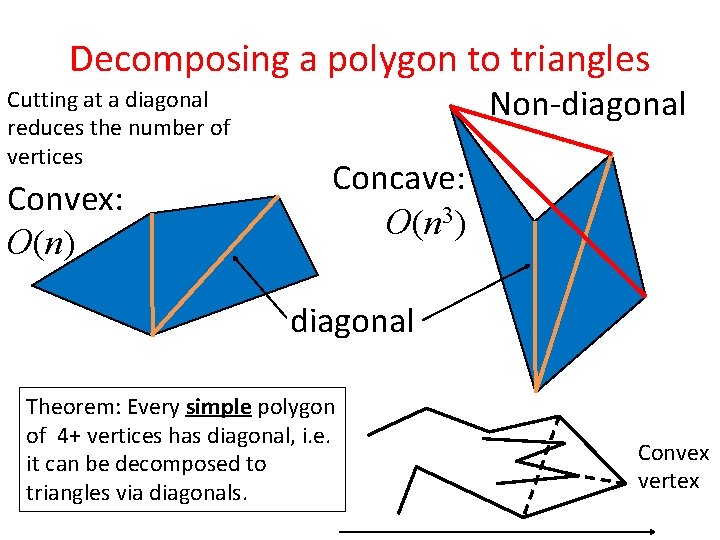

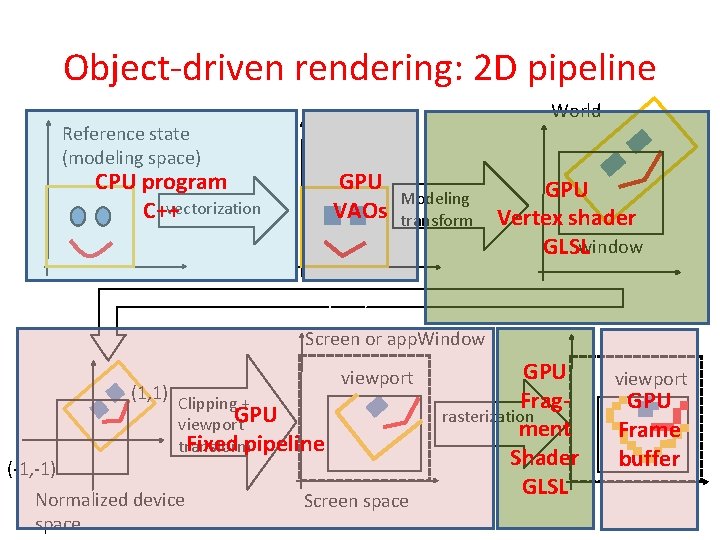

Decomposing a polygon into triangles: cutting along a diagonal reduces the number of vertices. Convex polygon: O(n); concave polygon: O(n³). (Figure: diagonal, non-diagonal, convex vertex.)

Theorem: every simple polygon of 4+ vertices has a diagonal, i.e., it can be decomposed into triangles via diagonals.
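In the convex case every segment between two vertices is a diagonal, so cutting a "fan" from vertex 0 gives the O(n) bound. A sketch with a hypothetical `FanTriangulate` helper that returns vertex index triples; it is not valid for concave polygons, where a fan diagonal may leave the polygon.

```cpp
#include <array>
#include <vector>

// Hypothetical helper: triangulates a convex polygon with vertices 0..n-1 by
// cutting along the diagonals from vertex 0. Each cut removes one vertex,
// so n vertices yield n - 2 triangles in O(n) time.
std::vector<std::array<int, 3>> FanTriangulate(int n) {
    std::vector<std::array<int, 3>> tris;
    for (int i = 1; i + 1 < n; i++) tris.push_back({0, i, i + 1});
    return tris;
}
```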

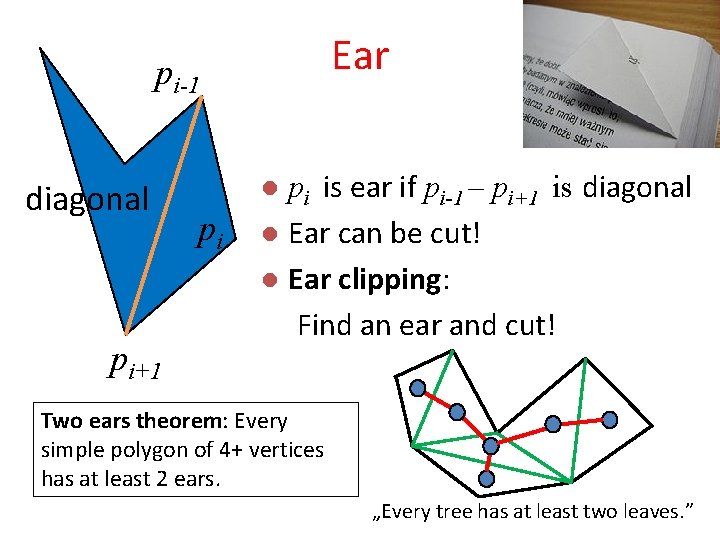

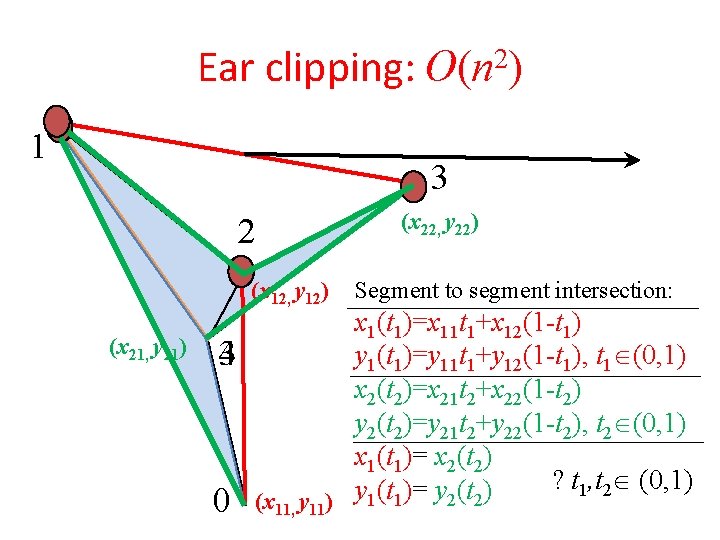

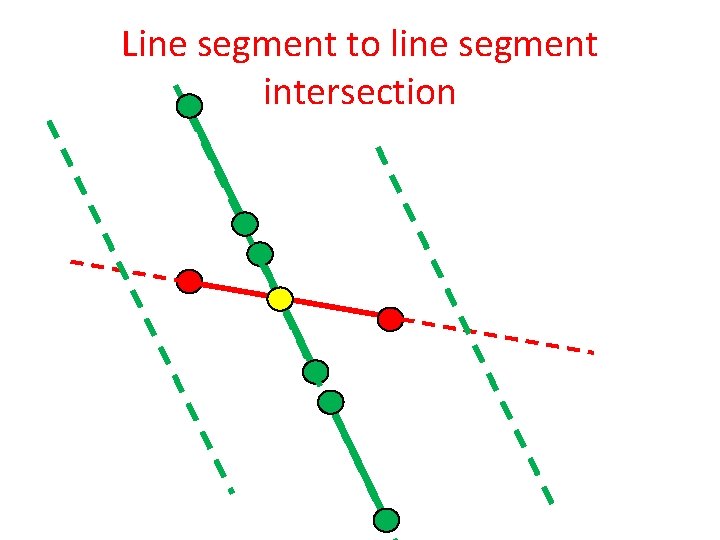

Ear: vertex $p_i$ is an ear if $p_{i-1}p_{i+1}$ is a diagonal.

- An ear can be cut!
- Ear clipping: find an ear and cut it!
- Two ears theorem: every simple polygon of 4+ vertices has at least 2 ears ("Every tree has at least two leaves.").

Ear clipping: O(n²). The diagonal test relies on segment-to-segment intersection:

$$x_1(t_1) = x_{11}t_1 + x_{12}(1-t_1),\quad y_1(t_1) = y_{11}t_1 + y_{12}(1-t_1),\quad t_1 \in (0, 1)$$

$$x_2(t_2) = x_{21}t_2 + x_{22}(1-t_2),\quad y_2(t_2) = y_{21}t_2 + y_{22}(1-t_2),\quad t_2 \in (0, 1)$$

$$x_1(t_1) = x_2(t_2),\quad y_1(t_1) = y_2(t_2) \quad\text{with}\quad t_1, t_2 \in (0, 1)\;?$$
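A naive ear-clipping sketch. It swaps in the common point-in-triangle ear test (a convex vertex whose triangle contains no other remaining vertex) instead of the segment-intersection diagonal test on the slide; for a simple polygon in general position both identify ears. It assumes counterclockwise vertex order, and `Vec2`/`EarClip` are hypothetical names. Because it rescans all vertices after each cut it is O(n³) in the worst case; the O(n²) bound requires re-testing only the two neighbors of a removed ear.

```cpp
#include <array>
#include <cstddef>
#include <vector>

// Hypothetical minimal 2D vector type for this sketch.
struct Vec2 { double x, y; };

// z component of (b - a) x (c - a): > 0 if a, b, c turn counterclockwise.
static double Cross(Vec2 a, Vec2 b, Vec2 c) {
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

// True if p is inside or on the boundary of the counterclockwise triangle abc.
static bool InTriangle(Vec2 p, Vec2 a, Vec2 b, Vec2 c) {
    return Cross(a, b, p) >= 0 && Cross(b, c, p) >= 0 && Cross(c, a, p) >= 0;
}

// Ear clipping for a simple, counterclockwise polygon.
// Returns the triangles as index triples into poly.
std::vector<std::array<std::size_t, 3>> EarClip(const std::vector<Vec2>& poly) {
    std::vector<std::size_t> idx(poly.size());          // remaining vertices
    for (std::size_t i = 0; i < idx.size(); i++) idx[i] = i;
    std::vector<std::array<std::size_t, 3>> tris;
    while (idx.size() > 3) {
        std::size_t n = idx.size();
        bool cut = false;
        for (std::size_t i = 0; i < n && !cut; i++) {
            std::size_t ip = idx[(i + n - 1) % n], ic = idx[i], in = idx[(i + 1) % n];
            Vec2 a = poly[ip], b = poly[ic], c = poly[in];
            if (Cross(a, b, c) <= 0) continue;          // reflex or degenerate vertex
            bool ear = true;
            for (std::size_t j = 0; j < n && ear; j++) {
                std::size_t k = idx[j];
                if (k == ip || k == ic || k == in) continue;
                if (InTriangle(poly[k], a, b, c)) ear = false;
            }
            if (ear) {                                  // cut the ear along ip-in
                tris.push_back({ip, ic, in});
                idx.erase(idx.begin() + static_cast<std::ptrdiff_t>(i));
                cut = true;
            }
        }
        if (!cut) break;                                // input was not simple/CCW
    }
    if (idx.size() == 3) tris.push_back({idx[0], idx[1], idx[2]});
    return tris;
}
```

For the L-shaped hexagon (0, 0), (4, 0), (4, 2), (2, 2), (2, 4), (0, 4) it produces 4 triangles whose areas add up to the polygon's area of 12.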

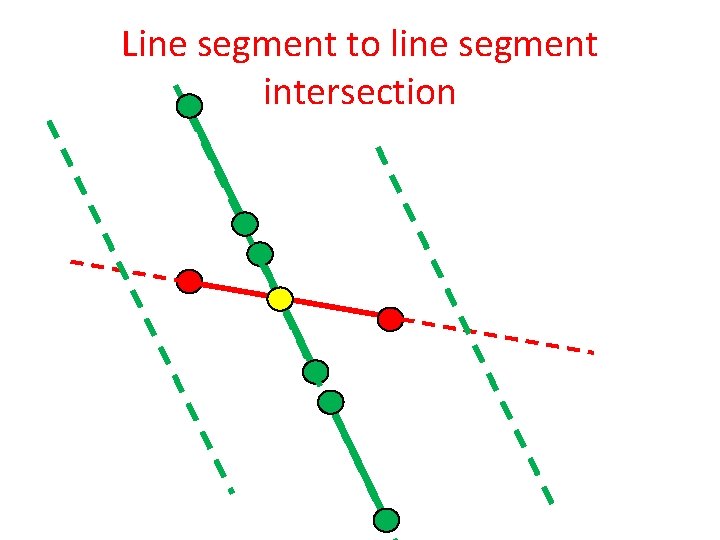

Line segment to line segment intersection
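Setting the two parametric segment equations of the ear-clipping slide equal gives a 2×2 linear system for $t_1$ and $t_2$. A sketch that solves it with Cramer's rule while keeping the slide's parameterization $\mathbf{r}_1(t_1) = \mathbf{p}_{11}t_1 + \mathbf{p}_{12}(1-t_1)$; `Vec2` and `SegmentIntersect` are hypothetical names.

```cpp
// Hypothetical minimal 2D vector type for this sketch.
struct Vec2 { double x, y; };

// 2D cross product (z component).
static double Cross(Vec2 a, Vec2 b) { return a.x * b.y - a.y * b.x; }

// Segment 1: r1(t1) = p11*t1 + p12*(1 - t1); segment 2: r2(t2) = p21*t2 + p22*(1 - t2).
// Solves r1(t1) = r2(t2) and returns true if the segments cross at t1, t2 in (0, 1).
bool SegmentIntersect(Vec2 p11, Vec2 p12, Vec2 p21, Vec2 p22, double& t1, double& t2) {
    Vec2 d1{p11.x - p12.x, p11.y - p12.y};   // r1(t1) = p12 + d1*t1
    Vec2 d2{p21.x - p22.x, p21.y - p22.y};   // r2(t2) = p22 + d2*t2
    Vec2 b{p22.x - p12.x, p22.y - p12.y};    // d1*t1 - d2*t2 = b
    double det = Cross(d1, d2);
    if (det == 0) return false;              // parallel or collinear segments
    t1 = Cross(b, d2) / det;
    t2 = Cross(b, d1) / det;
    return t1 > 0 && t1 < 1 && t2 > 0 && t2 < 1;
}
```

The two diagonals of the square (0, 0)-(2, 2) and (0, 2)-(2, 0) meet at $t_1 = t_2 = 0.5$, i.e. at (1, 1).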

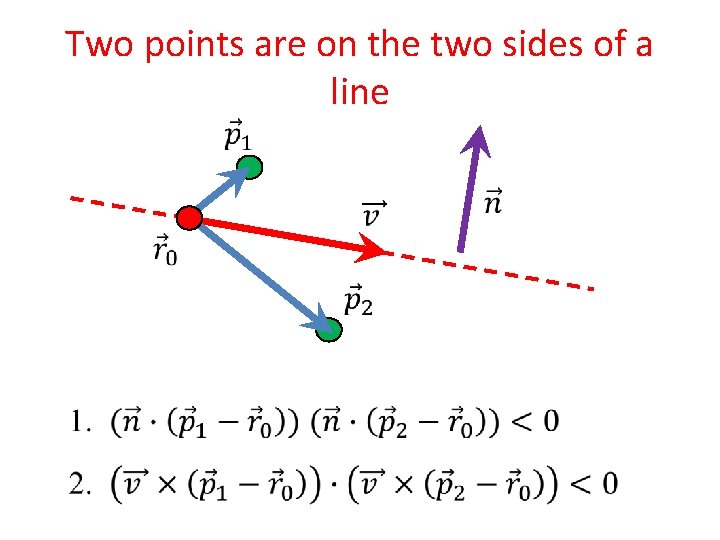

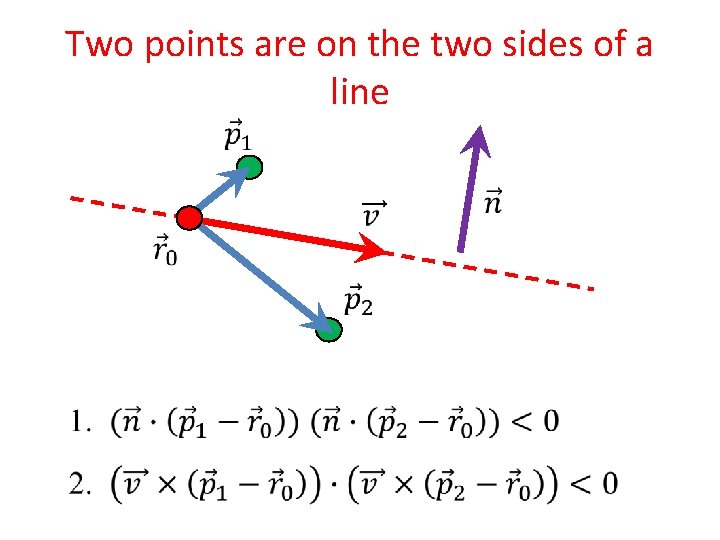

Two points are on the two sides of a line
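A division-free alternative: plug both points into the half-plane function $f(\mathbf{r}) = (\mathbf{r}-\mathbf{r}_0)\cdot\mathbf{n}$ used by `HalfPlane`; the points are on the two sides of the line iff the signs differ. Two segments cross iff this holds in both directions. A sketch; all helper names are hypothetical.

```cpp
// Hypothetical minimal 2D vector type for this sketch.
struct Vec2 { double x, y; };

// Signed side of r relative to the line through a and b, in half-plane form
// dot(r - a, n) with normal n = (-(b.y - a.y), b.x - a.x).
// > 0: one side, = 0: on the line, < 0: other side.
static double Side(Vec2 a, Vec2 b, Vec2 r) {
    Vec2 n{-(b.y - a.y), b.x - a.x};
    return (r.x - a.x) * n.x + (r.y - a.y) * n.y;
}

// p and q are strictly on the two sides of line ab if the signs differ.
bool OppositeSides(Vec2 a, Vec2 b, Vec2 p, Vec2 q) {
    return Side(a, b, p) * Side(a, b, q) < 0;
}

// Proper crossing (touching and collinear cases excluded): the endpoints of
// each segment lie on the two sides of the other segment's line.
bool SegmentsCross(Vec2 p1, Vec2 p2, Vec2 q1, Vec2 q2) {
    return OppositeSides(p1, p2, q1, q2) && OppositeSides(q1, q2, p1, p2);
}
```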

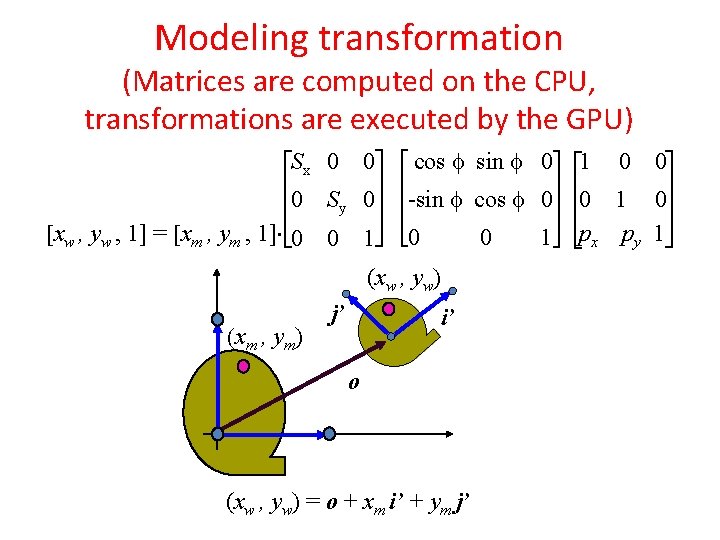

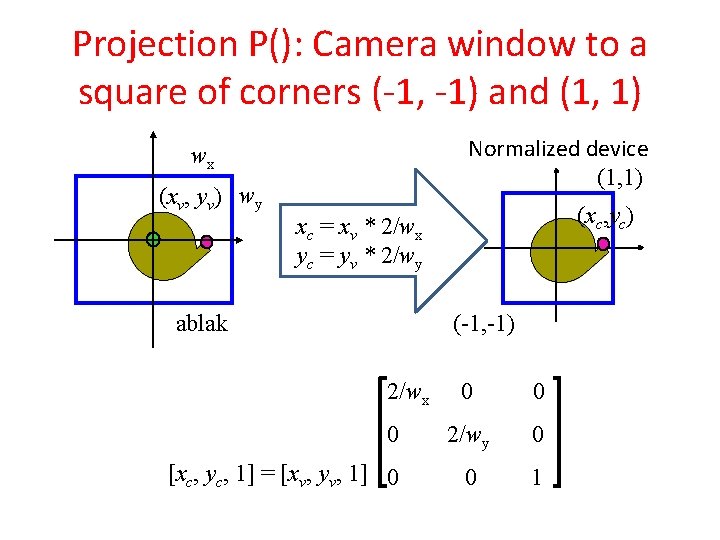

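Modeling transformation (matrices are computed on the CPU, transformations are executed by the GPU): scaling, rotation by $\varphi$, then translation by $(p_x, p_y)$:

$$[x_w, y_w, 1] = [x_m, y_m, 1]\begin{bmatrix} S_x & 0 & 0\\ 0 & S_y & 0\\ 0 & 0 & 1\end{bmatrix}\begin{bmatrix} \cos\varphi & \sin\varphi & 0\\ -\sin\varphi & \cos\varphi & 0\\ 0 & 0 & 1\end{bmatrix}\begin{bmatrix} 1 & 0 & 0\\ 0 & 1 & 0\\ p_x & p_y & 1\end{bmatrix}$$

Equivalently, $(x_w, y_w) = \mathbf{o} + x_m\mathbf{i}' + y_m\mathbf{j}'$, where $\mathbf{o}$, $\mathbf{i}'$ and $\mathbf{j}'$ are the origin and basis vectors of the modeling frame expressed in world coordinates.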
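A CPU-side sketch of composing these matrices in the row-vector convention $[x, y, 1]\,\mathbf{M}$; the `Mat3` type and helper names are hypothetical, whereas the course framework works with its own `mat4` type.

```cpp
#include <cmath>

// Hypothetical 3x3 matrix for the row-vector convention [x, y, 1] * M.
struct Mat3 { double m[3][3]; };

Mat3 Mul(const Mat3& a, const Mat3& b) {
    Mat3 r{};                                   // zero-initialized
    for (int i = 0; i < 3; i++)
        for (int j = 0; j < 3; j++)
            for (int k = 0; k < 3; k++) r.m[i][j] += a.m[i][k] * b.m[k][j];
    return r;
}

Mat3 Scale(double sx, double sy) { return {{{sx, 0, 0}, {0, sy, 0}, {0, 0, 1}}}; }
Mat3 Rotate(double phi) {
    double c = std::cos(phi), s = std::sin(phi);
    return {{{c, s, 0}, {-s, c, 0}, {0, 0, 1}}};
}
Mat3 Translate(double px, double py) { return {{{1, 0, 0}, {0, 1, 0}, {px, py, 1}}}; }

// Computes [x, y, 1] * M and returns the transformed (x, y).
void Apply(const Mat3& M, double x, double y, double& xo, double& yo) {
    xo = x * M.m[0][0] + y * M.m[1][0] + M.m[2][0];
    yo = x * M.m[0][1] + y * M.m[1][1] + M.m[2][1];
}
```

With the row-vector convention the matrices are multiplied in the order they are applied: `Mul(Mul(Scale(2, 2), Rotate(pi / 2)), Translate(1, 0))` maps (1, 0) to (2, 0), then (0, 2), then (1, 2).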

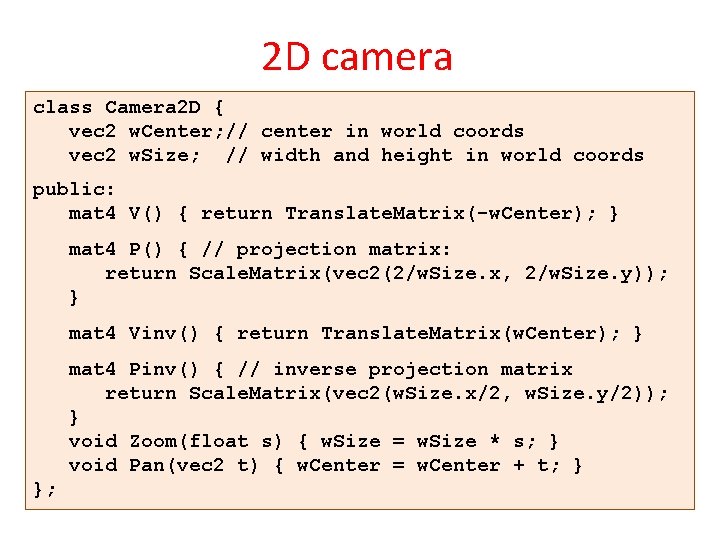

View transformation V(): moves the center $(c_x, c_y)$ of the camera window (of width $w_x$ and height $w_y$) to the origin, $x_v = x_w - c_x$, $y_v = y_w - c_y$:

$$[x_v, y_v, 1] = [x_w, y_w, 1]\begin{bmatrix} 1 & 0 & 0\\ 0 & 1 & 0\\ -c_x & -c_y & 1\end{bmatrix}$$

Projection P(): maps the camera window to the normalized device square with corners (-1, -1) and (1, 1), $x_c = x_v \cdot 2/w_x$, $y_c = y_v \cdot 2/w_y$:

$$[x_c, y_c, 1] = [x_v, y_v, 1]\begin{bmatrix} 2/w_x & 0 & 0\\ 0 & 2/w_y & 0\\ 0 & 0 & 1\end{bmatrix}$$

2D camera

```cpp
// mat4, vec2, TranslateMatrix() and ScaleMatrix() are assumed to come from the course framework.
class Camera2D {
    vec2 wCenter;  // center in world coords
    vec2 wSize;    // width and height in world coords
public:
    mat4 V() { return TranslateMatrix(-wCenter); }
    mat4 P() {     // projection matrix
        return ScaleMatrix(vec2(2 / wSize.x, 2 / wSize.y));
    }
    mat4 Vinv() { return TranslateMatrix(wCenter); }
    mat4 Pinv() {  // inverse projection matrix
        return ScaleMatrix(vec2(wSize.x / 2, wSize.y / 2));
    }
    void Zoom(float s) { wSize = wSize * s; }
    void Pan(vec2 t) { wCenter = wCenter + t; }
};
```
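A framework-free sketch of what `V()`, `P()` and their inverses compute for a single point, e.g. to map a picked position back to world coordinates. `Vec2`, `WorldToNDC` and `NDCToWorld` are hypothetical names; the class above returns `mat4` matrices instead.

```cpp
// Hypothetical minimal 2D vector type for this sketch.
struct Vec2 { double x, y; };

class Camera2D {
    Vec2 wCenter;  // center of the camera window in world coordinates
    Vec2 wSize;    // width and height of the camera window in world coordinates
public:
    Camera2D(Vec2 center, Vec2 size) : wCenter(center), wSize(size) {}
    // world -> normalized device space: P(V(w))
    Vec2 WorldToNDC(Vec2 w) const {
        return {(w.x - wCenter.x) * 2 / wSize.x, (w.y - wCenter.y) * 2 / wSize.y};
    }
    // normalized device space -> world: Vinv(Pinv(c))
    Vec2 NDCToWorld(Vec2 c) const {
        return {c.x * wSize.x / 2 + wCenter.x, c.y * wSize.y / 2 + wCenter.y};
    }
    void Zoom(double s) { wSize = {wSize.x * s, wSize.y * s}; }
    void Pan(Vec2 t) { wCenter = {wCenter.x + t.x, wCenter.y + t.y}; }
};
```

For a window centered at (10, 5) of size 20 × 10, the world point (20, 10) is the corner (1, 1); after `Zoom(2)` the same point maps to (0.5, 0.5), since the window has grown.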

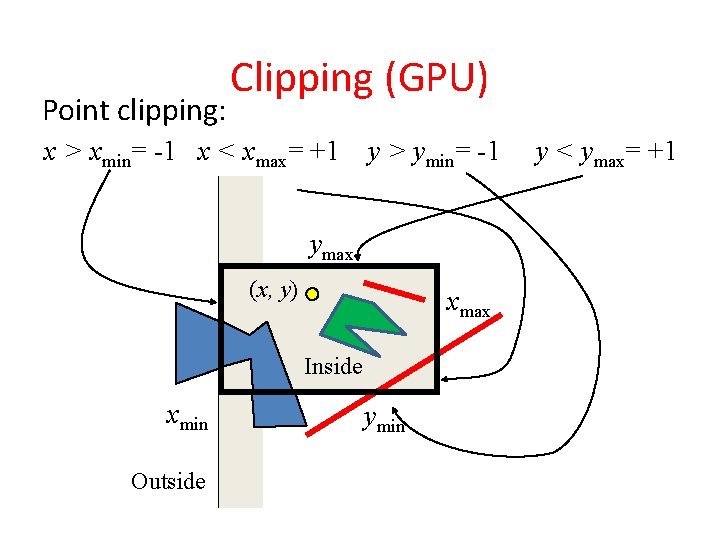

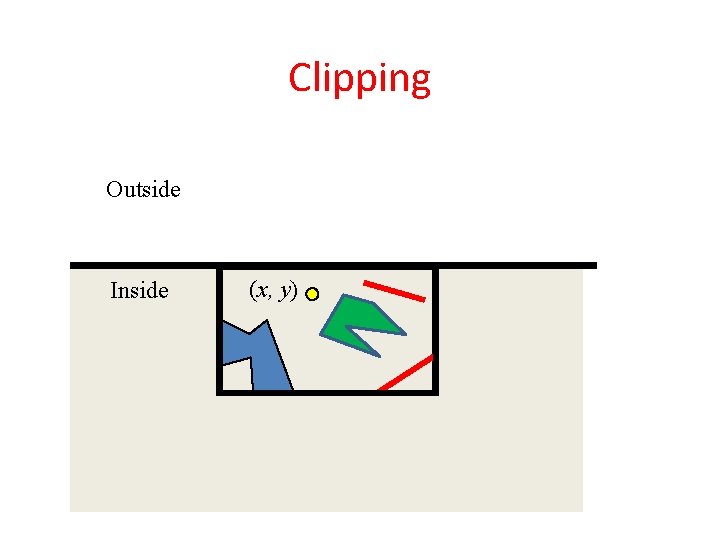

Clipping (GPU). Point clipping: the point $(x, y)$ is inside if $x > x_{\min} = -1$, $x < x_{\max} = +1$, $y > y_{\min} = -1$ and $y < y_{\max} = +1$; otherwise it is outside.

Clipping is performed against one boundary at a time: $x > x_{\min}$, $y > y_{\min}$, $x < x_{\max}$ and $y < y_{\max}$. Each boundary line splits the plane into an inside and an outside half-plane.

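Line segment clipping against $x < x_{\max}$: if the segment from $(x_1, y_1)$ to $(x_2, y_2)$ crosses the boundary $x = x_{\max}$, it is cut at the intersection $(x_i, y_i)$.

$$x(t) = x_1 + (x_2 - x_1)t,\quad y(t) = y_1 + (y_2 - y_1)t$$

$$x_{\max} = x_1 + (x_2 - x_1)t \;\Rightarrow\; t = \frac{x_{\max} - x_1}{x_2 - x_1}$$

$$x_i = x_{\max},\quad y_i = y_1 + (y_2 - y_1)\frac{x_{\max} - x_1}{x_2 - x_1}$$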
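A sketch of clipping one segment against the single boundary $x < x_{\max}$ with this intersection formula; `Vec2` and `ClipXMax` are hypothetical names, and the other three boundaries work the same way.

```cpp
// Hypothetical minimal 2D vector type for this sketch.
struct Vec2 { double x, y; };

// Clips segment (p1, p2) against the half-plane x < xmax.
// Returns false if the whole segment is outside; otherwise p1 and p2 are
// updated to the visible part.
bool ClipXMax(Vec2& p1, Vec2& p2, double xmax) {
    bool in1 = p1.x < xmax, in2 = p2.x < xmax;
    if (!in1 && !in2) return false;           // both endpoints outside
    if (in1 && in2) return true;              // both inside: nothing to cut
    double t = (xmax - p1.x) / (p2.x - p1.x); // x(t) = xmax
    Vec2 ip{xmax, p1.y + (p2.y - p1.y) * t};  // intersection (xi, yi)
    if (in1) p2 = ip; else p1 = ip;           // replace the outside endpoint
    return true;
}
```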

![Sutherland-Hodgman polygon clipping](https://slidetodoc.com/presentation_image/5ef7b139072c47beaddde777c950e47a/image-24.jpg)

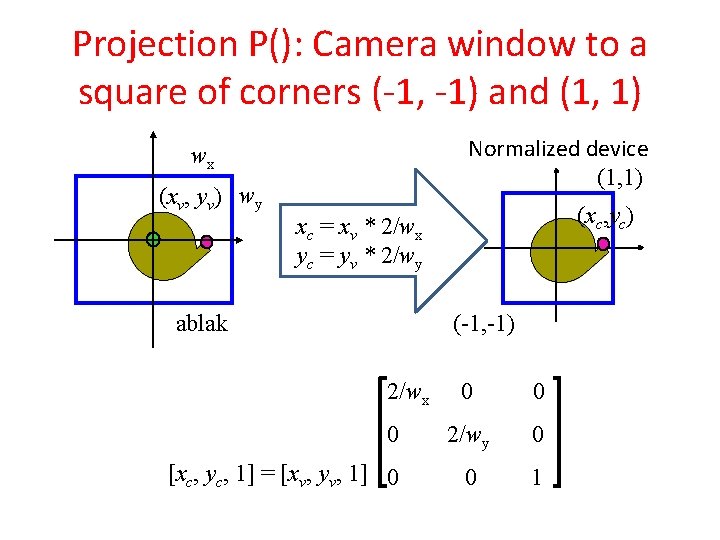

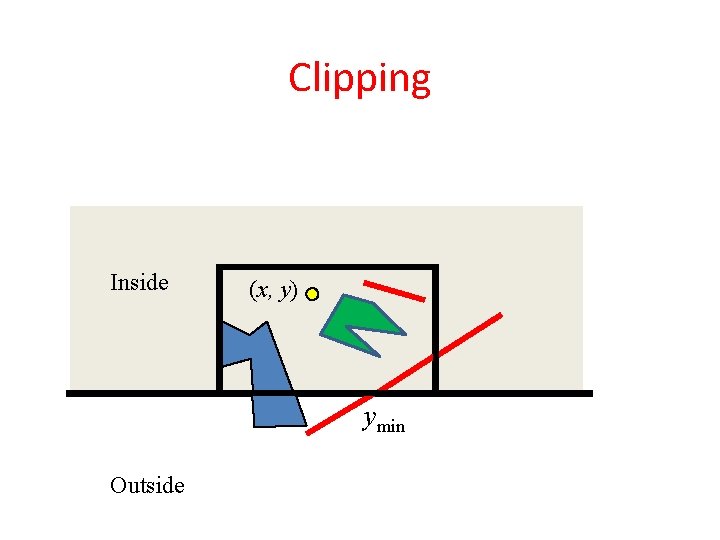

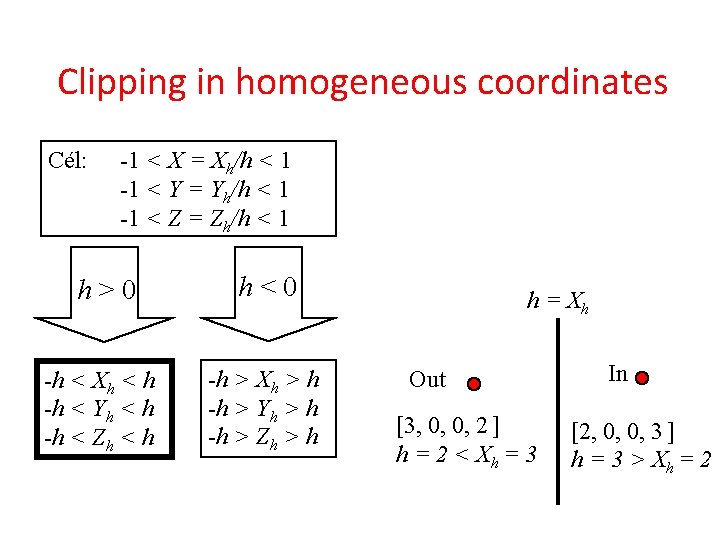

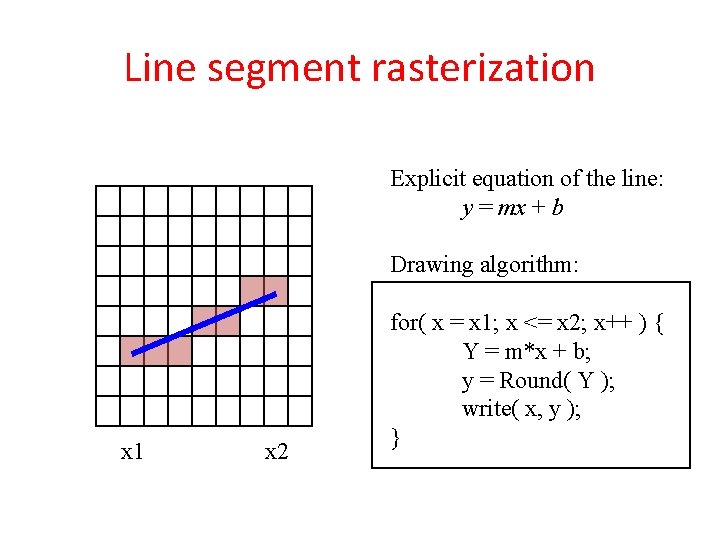

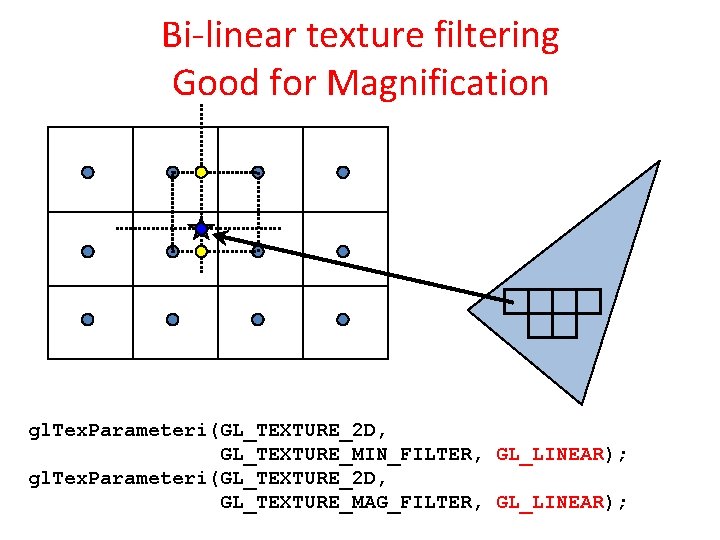

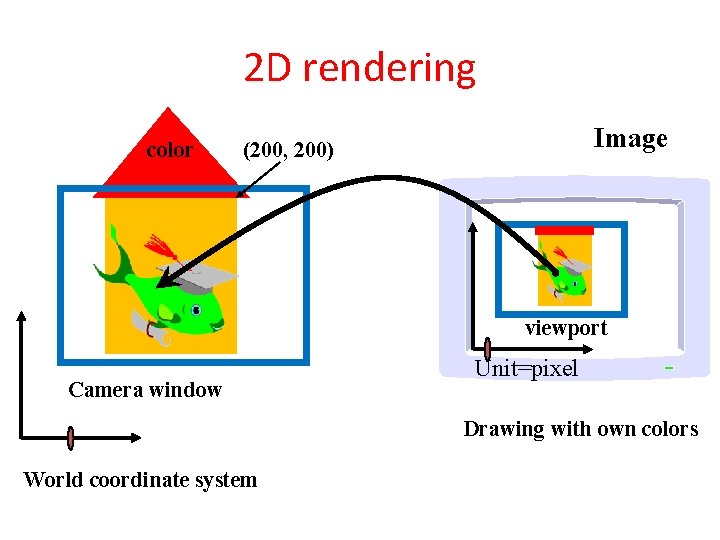

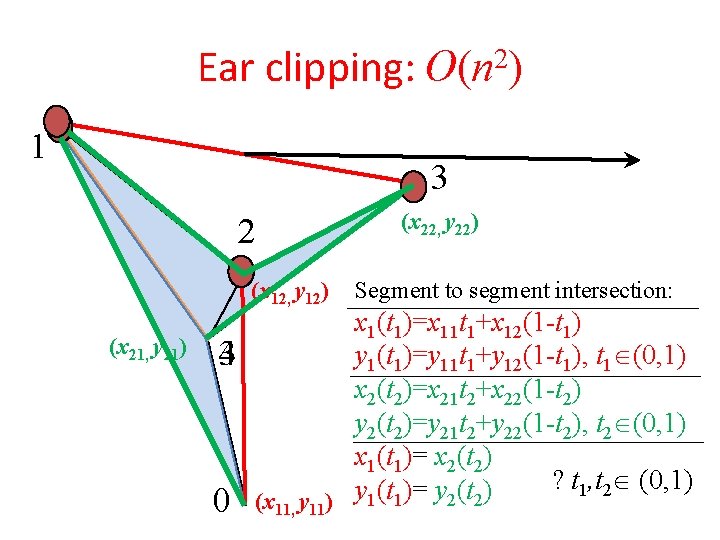

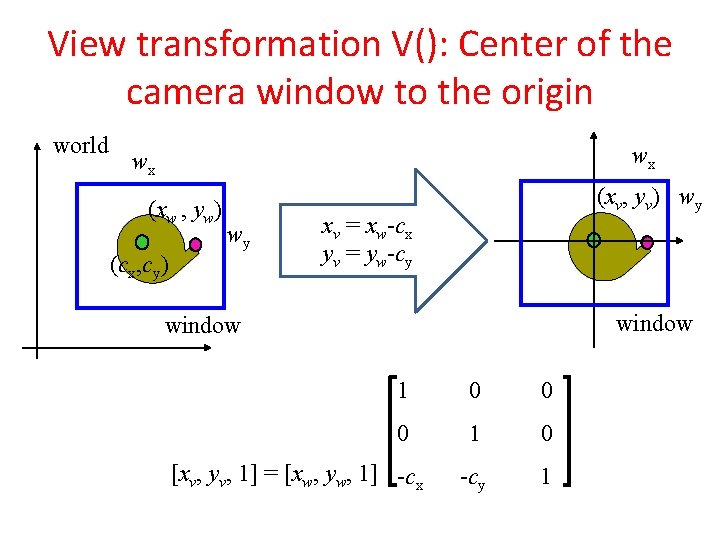

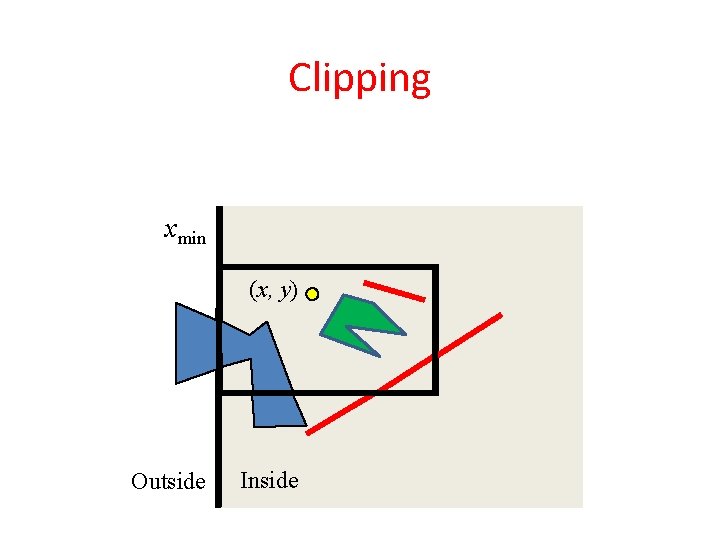

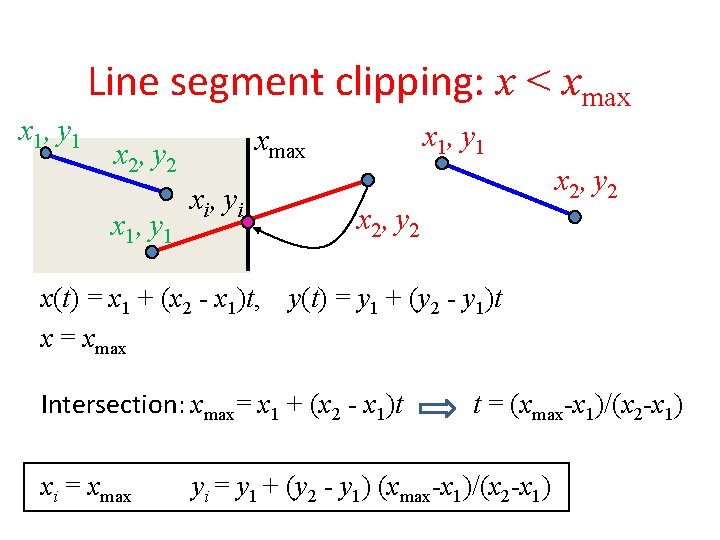

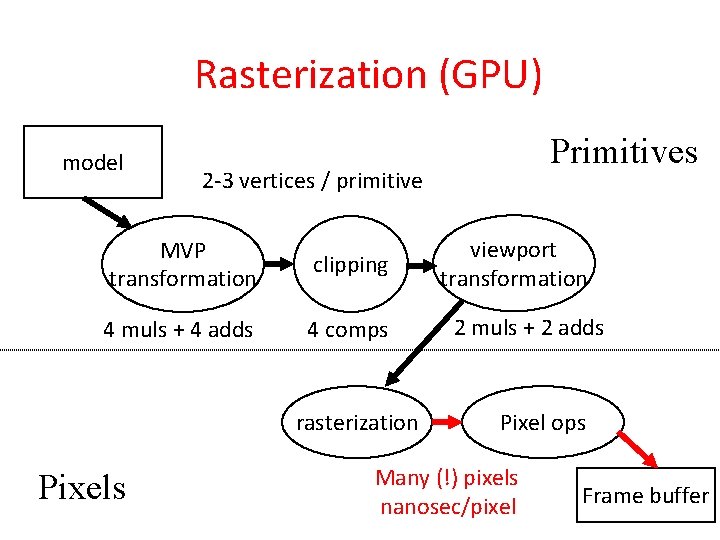

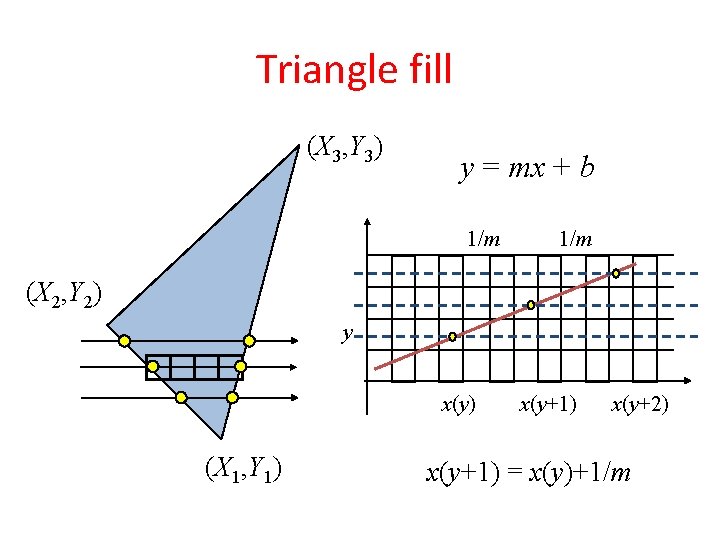

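(Ivan) Sutherland-Hodgman polygon clipping: one stage clips the polygon p[0..n-1] against a single boundary and produces the polygon q[0..m-1].

```cpp
#include <cstddef>
#include <vector>
// vec2, Boundary, inside() and Intersect() are assumed to be provided by the
// surrounding program; the last two depend on the boundary (e.g. x < xmax).
std::vector<vec2> PolygonClip(const std::vector<vec2>& p, const Boundary& boundary) {
    std::vector<vec2> q;
    const std::size_t n = p.size();
    for (std::size_t i = 0; i < n; i++) {
        vec2 cur = p[i];
        vec2 next = p[(i + 1) % n];  // the first point is used again at the end
        if (inside(cur, boundary)) {
            q.push_back(cur);
            if (!inside(next, boundary)) q.push_back(Intersect(cur, next, boundary));
        } else {
            if (inside(next, boundary)) q.push_back(Intersect(cur, next, boundary));
        }
    }
    return q;
}
```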
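A self-contained sketch that runs this stage against the four boundaries of the normalized device square in turn. The `Boundary` encoding (axis, sign, limit) and all helper names are assumptions made for this example.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical minimal 2D vector type for this sketch.
struct Vec2 { double x, y; };

// Hypothetical boundary encoding: inside if s * p[axis] < s * limit,
// e.g. {0, +1, 1} is x < 1 and {0, -1, -1} is x > -1.
struct Boundary { int axis; double s; double limit; };

static double Coord(Vec2 p, int axis) { return axis == 0 ? p.x : p.y; }
static bool Inside(Vec2 p, Boundary b) { return b.s * Coord(p, b.axis) < b.s * b.limit; }

// Intersection of segment (a, c) with the boundary line coordinate == limit.
static Vec2 Intersect(Vec2 a, Vec2 c, Boundary b) {
    double t = (b.limit - Coord(a, b.axis)) / (Coord(c, b.axis) - Coord(a, b.axis));
    return {a.x + (c.x - a.x) * t, a.y + (c.y - a.y) * t};
}

// One Sutherland-Hodgman stage against a single boundary.
static std::vector<Vec2> ClipStage(const std::vector<Vec2>& p, Boundary b) {
    std::vector<Vec2> q;
    for (std::size_t i = 0; i < p.size(); i++) {
        Vec2 cur = p[i], next = p[(i + 1) % p.size()];  // wrap-around closes the loop
        if (Inside(cur, b)) {
            q.push_back(cur);
            if (!Inside(next, b)) q.push_back(Intersect(cur, next, b));
        } else if (Inside(next, b)) {
            q.push_back(Intersect(cur, next, b));
        }
    }
    return q;
}

// Clips a polygon to [-1, 1] x [-1, 1]: x > -1, x < 1, y > -1, y < 1.
std::vector<Vec2> ClipToNDC(std::vector<Vec2> poly) {
    const Boundary bs[4] = {{0, -1, -1}, {0, 1, 1}, {1, -1, -1}, {1, 1, 1}};
    for (const Boundary& b : bs) poly = ClipStage(poly, b);
    return poly;
}
```

For the triangle (0, 0), (2, 0), (0, 2) the result is the quadrilateral (0, 0), (1, 0), (1, 1), (0, 1): each stage may add vertices, which is why the output of one stage is fed to the next.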

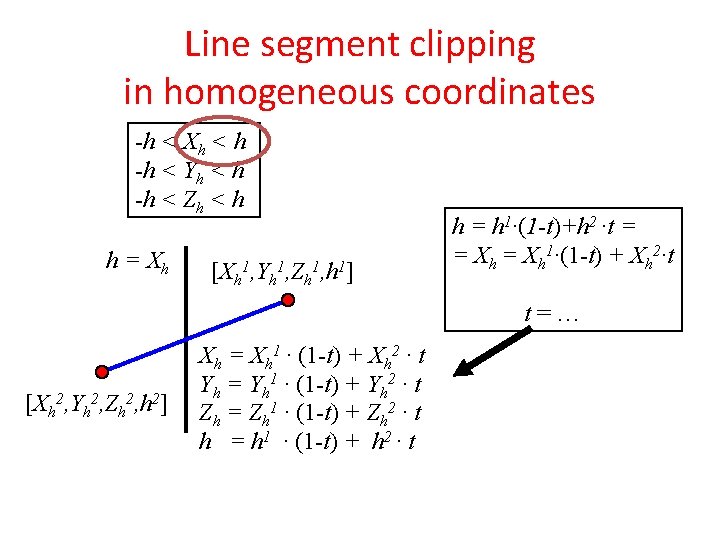

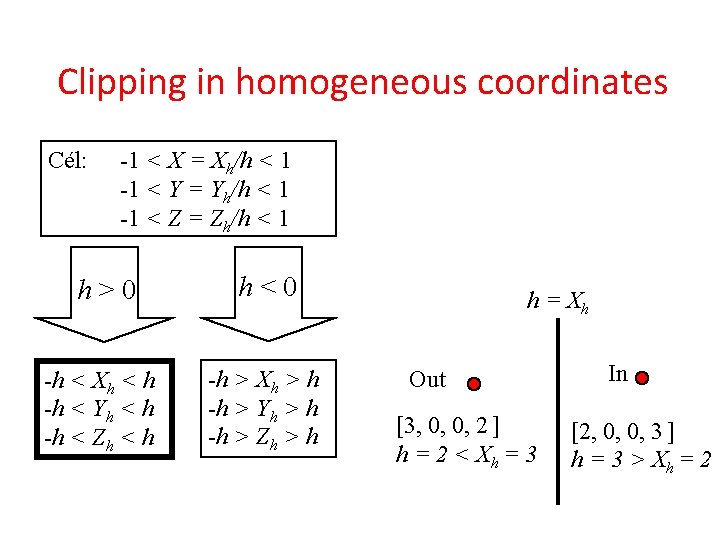

Clipping in homogeneous coordinates. Goal: $-1 < X = X_h/h < 1$, $-1 < Y = Y_h/h < 1$, $-1 < Z = Z_h/h < 1$.

- If $h > 0$: $-h < X_h < h$, $-h < Y_h < h$, $-h < Z_h < h$.
- If $h < 0$: $-h > X_h > h$, $-h > Y_h > h$, $-h > Z_h > h$.

Example for the boundary $h = X_h$: $[3, 0, 0, 2]$ is out, since $h = 2 < X_h = 3$; $[2, 0, 0, 3]$ is in, since $h = 3 > X_h = 2$.

Line segment clipping in homogeneous coordinates, against $-h < X_h < h$, $-h < Y_h < h$, $-h < Z_h < h$: the points of the segment from $[X_{h1}, Y_{h1}, Z_{h1}, h_1]$ to $[X_{h2}, Y_{h2}, Z_{h2}, h_2]$ are

$$X_h = X_{h1}(1-t) + X_{h2}t,\quad Y_h = Y_{h1}(1-t) + Y_{h2}t,\quad Z_h = Z_{h1}(1-t) + Z_{h2}t,\quad h = h_1(1-t) + h_2 t,$$

and the intersection with the boundary $h = X_h$ is at

$$h_1(1-t) + h_2 t = X_{h1}(1-t) + X_{h2}t \;\Rightarrow\; t = \ldots$$
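A sketch of both steps for $h > 0$: the inside test, and the intersection with the boundary $h = X_h$. Writing $d = h - X_h$, which is also linear in $t$, the boundary condition $d(t) = 0$ gives $t = d_1/(d_1 - d_2)$. `Vec4` and the function names are hypothetical.

```cpp
#include <array>

using Vec4 = std::array<double, 4>;  // hypothetical homogeneous point [Xh, Yh, Zh, h]

// Inside test for h > 0: -h < Xh < h, -h < Yh < h, -h < Zh < h.
bool InsideHomogeneous(const Vec4& p) {
    double h = p[3];
    for (int i = 0; i < 3; i++)
        if (!(-h < p[i] && p[i] < h)) return false;
    return true;
}

// Intersection of segment p1-p2 with the boundary Xh = h. With d = h - Xh
// interpolated linearly, d(t) = d1(1 - t) + d2 t = 0 gives t = d1 / (d1 - d2).
// Assumes the endpoints are on different sides of the boundary.
Vec4 IntersectXhEqualsH(const Vec4& p1, const Vec4& p2) {
    double d1 = p1[3] - p1[0], d2 = p2[3] - p2[0];
    double t = d1 / (d1 - d2);
    Vec4 r;
    for (int i = 0; i < 4; i++) r[i] = p1[i] * (1 - t) + p2[i] * t;
    return r;
}
```

Between the slide's example points $[2, 0, 0, 3]$ (in) and $[3, 0, 0, 2]$ (out) this gives $t = 0.5$ and the boundary point $[2.5, 0, 0, 2.5]$.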

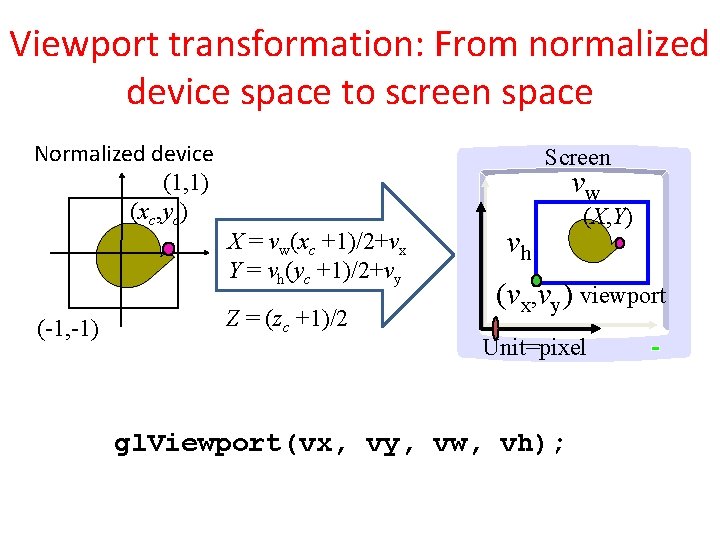

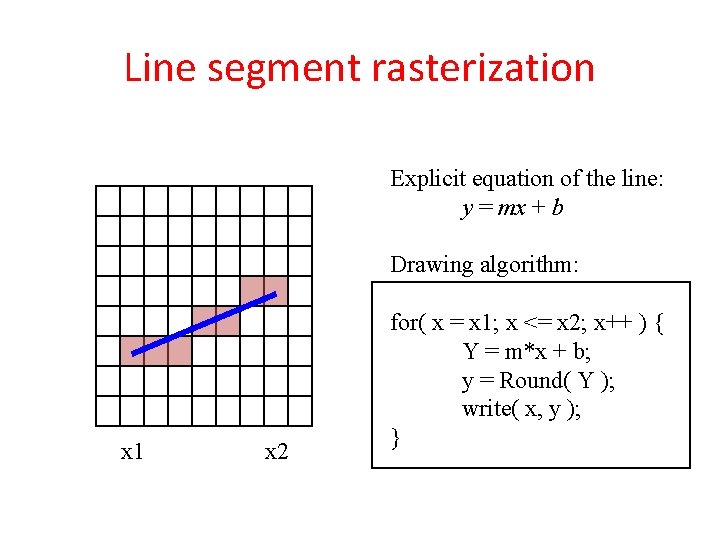

Viewport transformation: from normalized device space (the square from (-1, -1) to (1, 1)) to screen space (unit = pixel), for the viewport with lower-left corner $(v_x, v_y)$, width $v_w$ and height $v_h$ set by `glViewport(vx, vy, vw, vh);`:

$$X = v_w\frac{x_c + 1}{2} + v_x,\quad Y = v_h\frac{y_c + 1}{2} + v_y,\quad Z = \frac{z_c + 1}{2}$$
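A sketch of the viewport transform and its inverse; the inverse is what turning a mouse position into normalized device space needs. `Viewport`, `NDCToScreen` and `ScreenToNDC` are hypothetical names. Many windowing systems report the mouse y coordinate downwards from the top, in which case Y has to be flipped first.

```cpp
// Hypothetical struct holding the glViewport(vx, vy, vw, vh) parameters.
struct Viewport { double vx, vy, vw, vh; };

// Normalized device space -> screen space (pixels).
void NDCToScreen(const Viewport& v, double xc, double yc, double& X, double& Y) {
    X = v.vw * (xc + 1) / 2 + v.vx;
    Y = v.vh * (yc + 1) / 2 + v.vy;
}

// Screen space -> normalized device space (inverse of the mapping above).
void ScreenToNDC(const Viewport& v, double X, double Y, double& xc, double& yc) {
    xc = 2 * (X - v.vx) / v.vw - 1;
    yc = 2 * (Y - v.vy) / v.vh - 1;
}
```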

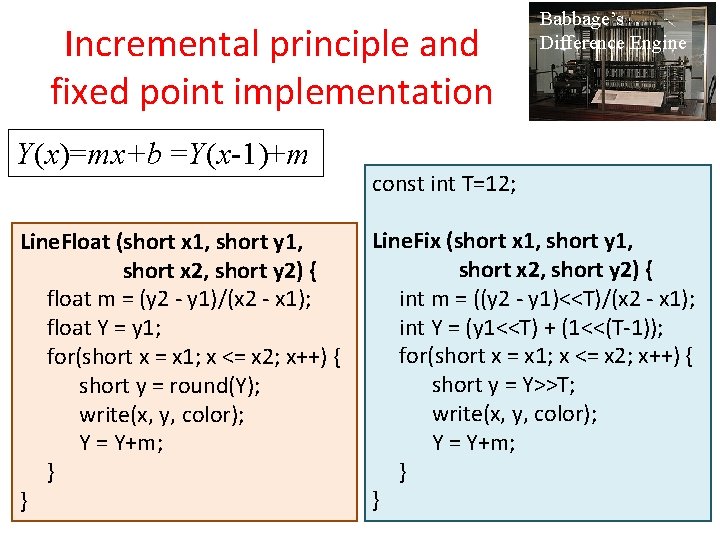

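Rasterization (GPU) model: a primitive has 2-3 vertices; each vertex goes through the MVP transformation (4 muls + 4 adds), clipping (4 comparisons) and the viewport transformation (2 muls + 2 adds). Rasterization turns the primitives into pixels, and the pixel operations must process many (!) pixels, at a budget of nanoseconds per pixel, before writing the frame buffer.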

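Line segment rasterization. Explicit equation of the line: $y = mx + b$. Drawing algorithm from $x_1$ to $x_2$ (`Round()` and `write()` stand for rounding and pixel writing):

```cpp
for (int x = x1; x <= x2; x++) {
    float Y = m * x + b;  // explicit equation of the line
    int y = Round(Y);     // nearest pixel row
    write(x, y);
}
```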

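Incremental principle and fixed-point implementation: $Y(x) = mx + b = Y(x-1) + m$, so each step needs a single addition (the principle of Babbage's Difference Engine).

```cpp
#include <cmath>
// write(x, y, color) and color are provided by the surrounding program.
// Both versions assume x1 < x2 and |slope| <= 1.

void LineFloat(short x1, short y1, short x2, short y2) {
    float m = float(y2 - y1) / (x2 - x1);  // float division: integer division would truncate the slope
    float Y = y1;
    for (short x = x1; x <= x2; x++) {
        short y = (short)std::round(Y);
        write(x, y, color);
        Y = Y + m;                         // Y(x) = Y(x-1) + m
    }
}

const int T = 12;                          // number of fractional bits
void LineFix(short x1, short y1, short x2, short y2) {
    int m = (y2 - y1) * (1 << T) / (x2 - x1);  // fixed-point slope (no shift of a negative value)
    int Y = y1 * (1 << T) + (1 << (T - 1));    // y1 + 0.5, so truncation rounds
    for (short x = x1; x <= x2; x++) {
        short y = Y >> T;                      // integer part
        write(x, y, color);
        Y = Y + m;
    }
}
```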
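A self-contained variant of `LineFix` that collects the pixels instead of calling `write()`, so its output can be checked; the name `LineFixPixels` is hypothetical. It keeps the assumptions x1 < x2, |slope| ≤ 1 and non-negative coordinates, and multiplies by `1 << T` instead of shifting a possibly negative value (a left shift of a negative `int` is undefined before C++20).

```cpp
#include <utility>
#include <vector>

// Hypothetical helper: fixed-point incremental (DDA) line rasterization.
// Y carries T fractional bits, starts at y1 + 0.5 so that truncation rounds,
// and is incremented by the fixed-point slope m at every x step.
std::vector<std::pair<int, int>> LineFixPixels(int x1, int y1, int x2, int y2) {
    const int T = 12;                                // fractional bits
    std::vector<std::pair<int, int>> pixels;
    int m = (y2 - y1) * (1 << T) / (x2 - x1);        // slope in fixed point
    int Y = y1 * (1 << T) + (1 << (T - 1));          // y1 + 0.5 in fixed point
    for (int x = x1; x <= x2; x++) {
        pixels.emplace_back(x, Y >> T);              // integer part = pixel row
        Y += m;                                      // one addition per pixel
    }
    return pixels;
}
```

For the segment from (0, 0) to (4, 2) this yields (0, 0), (1, 1), (2, 1), (3, 2), (4, 2), the same pixels as the floating-point version.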

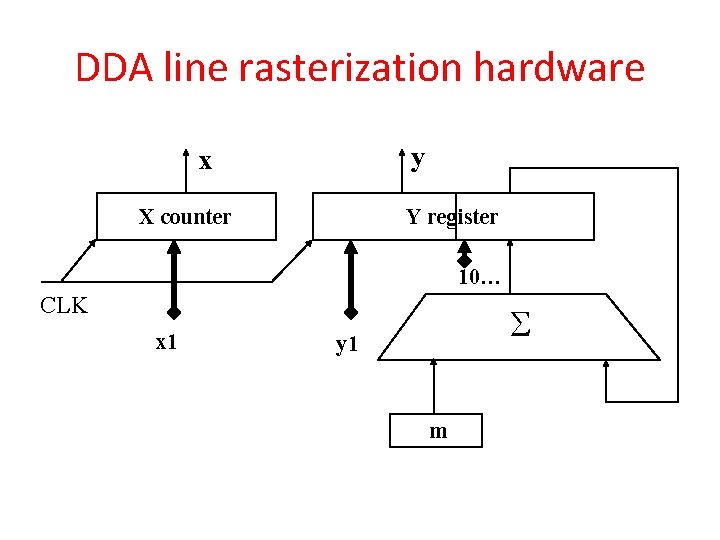

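DDA line rasterization hardware: an X counter, started from $x_1$, and a Y register, started from $y_1$, are stepped by the clock (CLK); in each step an adder (Σ) adds $m$ to the Y register, whose integer part gives the y coordinate of the pixel.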

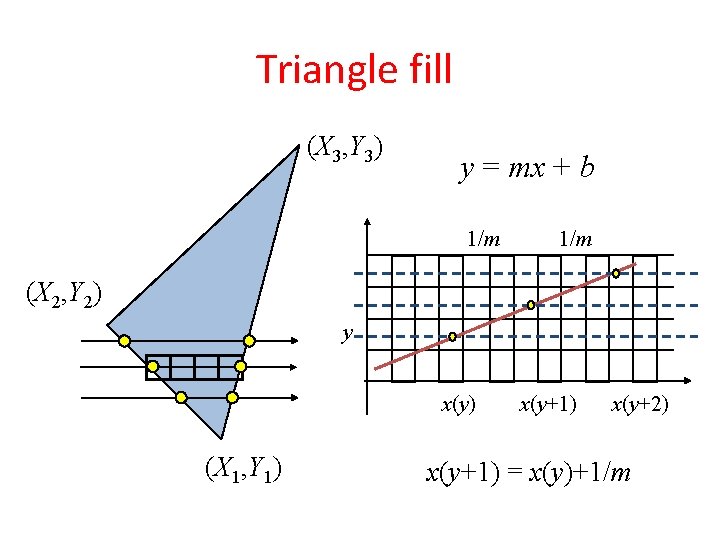

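Triangle fill: the triangle $(X_1, Y_1)$, $(X_2, Y_2)$, $(X_3, Y_3)$ is filled row by row. Along an edge $y = mx + b$ the x coordinate on the next row follows incrementally: $x(y+1) = x(y) + 1/m$.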
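A scanline fill sketch that applies the incremental rule to the long edge and to the two short edges of the triangle. `Vec2`, `FillTriangle` and the half-open coverage convention (sampling at integer coordinates, pixels with xLeft ≤ x < xRight) are assumptions of this example, not the GPU's exact rules.

```cpp
#include <algorithm>
#include <cmath>
#include <utility>
#include <vector>

// Hypothetical minimal 2D vector type for this sketch.
struct Vec2 { double x, y; };

// Scanline fill: on every integer row y, the pixels xLeft <= x < xRight are
// filled, where the edge x coordinates advance by 1/m per row.
std::vector<std::pair<int, int>> FillTriangle(Vec2 a, Vec2 b, Vec2 c) {
    if (b.y < a.y) std::swap(a, b);                  // sort so that a.y <= b.y <= c.y
    if (c.y < a.y) std::swap(a, c);
    if (c.y < b.y) std::swap(b, c);
    std::vector<std::pair<int, int>> pixels;
    if (c.y == a.y) return pixels;                   // zero-height triangle
    double invLong = (c.x - a.x) / (c.y - a.y);      // 1/m of the long edge a-c
    for (int half = 0; half < 2; half++) {
        Vec2 s = half == 0 ? a : b, e = half == 0 ? b : c;  // short edge s-e
        if (e.y == s.y) continue;                    // horizontal short edge
        double invShort = (e.x - s.x) / (e.y - s.y);
        int y0 = (int)std::ceil(s.y), y1 = (int)std::ceil(e.y);
        double xs = s.x + (y0 - s.y) * invShort;     // short edge x on row y0
        double xl = a.x + (y0 - a.y) * invLong;      // long edge x on row y0
        for (int y = y0; y < y1; y++) {
            double lo = std::min(xs, xl), hi = std::max(xs, xl);
            for (int x = (int)std::ceil(lo); x < (int)std::ceil(hi); x++)
                pixels.emplace_back(x, y);
            xs += invShort;                          // x(y+1) = x(y) + 1/m
            xl += invLong;
        }
    }
    return pixels;
}
```

The half-open spans mean that two triangles sharing an edge do not both write the pixels on it.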

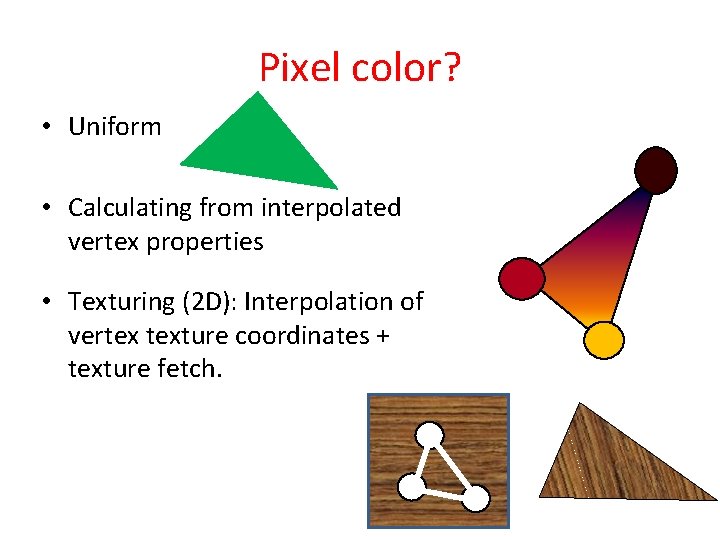

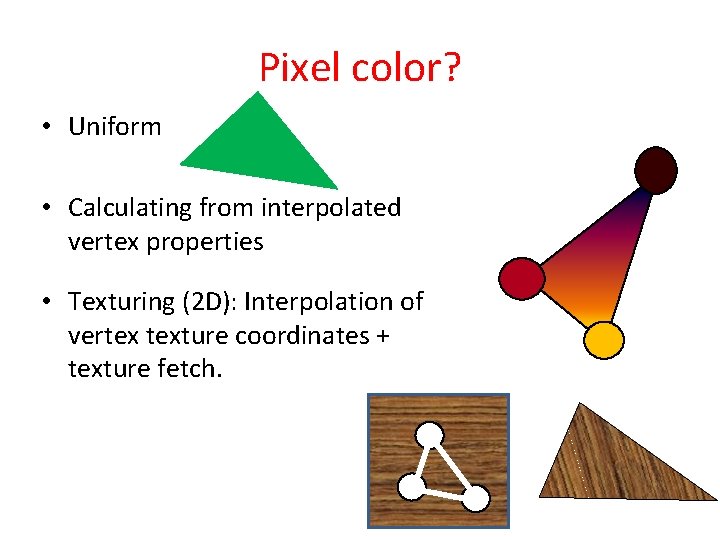

Pixel color?

- Uniform.
- Calculated from interpolated vertex properties.
- Texturing (2D): interpolation of the vertex texture coordinates + texture fetch.

![2D texturing](https://slidetodoc.com/presentation_image/5ef7b139072c47beaddde777c950e47a/image-34.jpg)

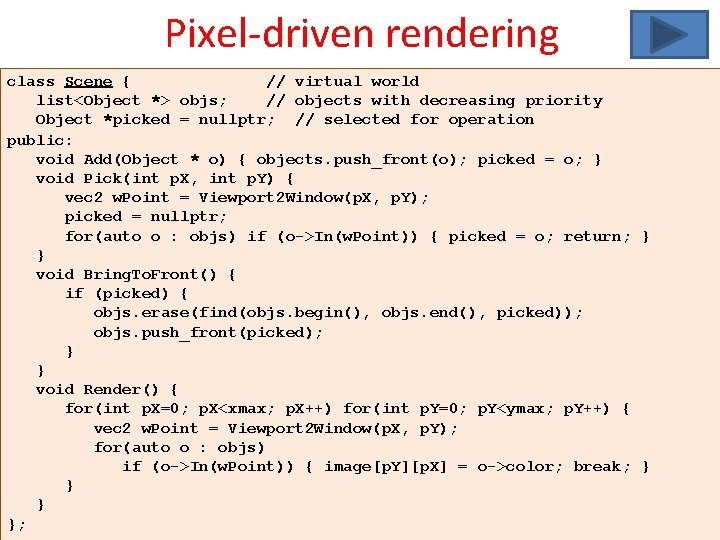

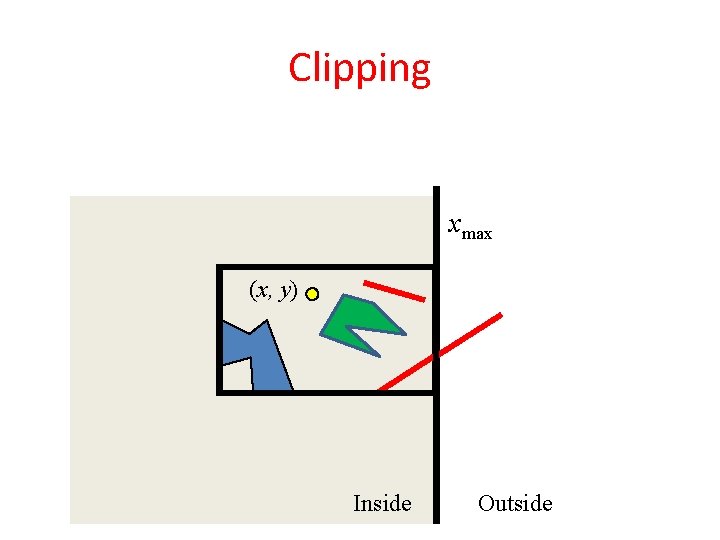

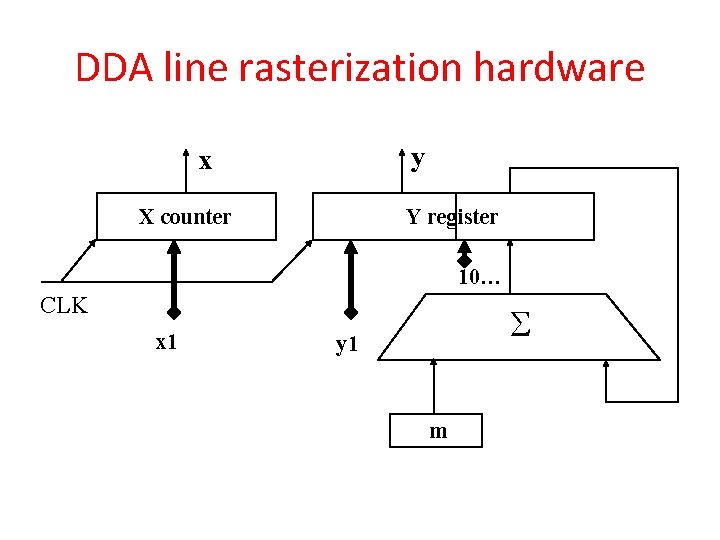

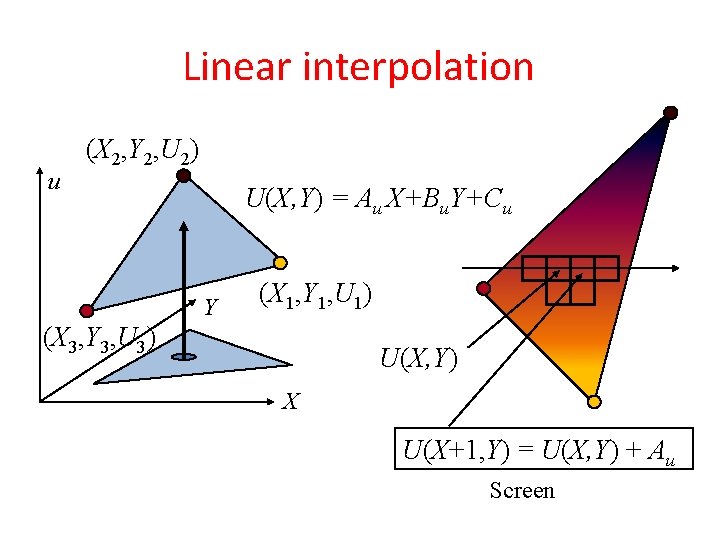

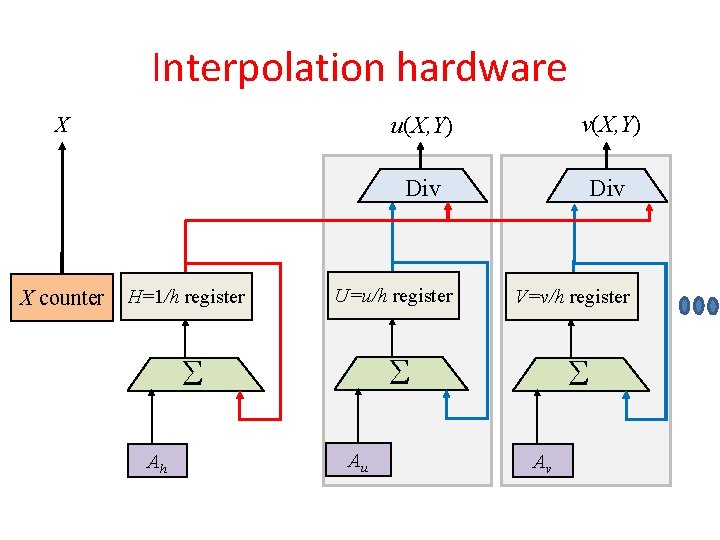

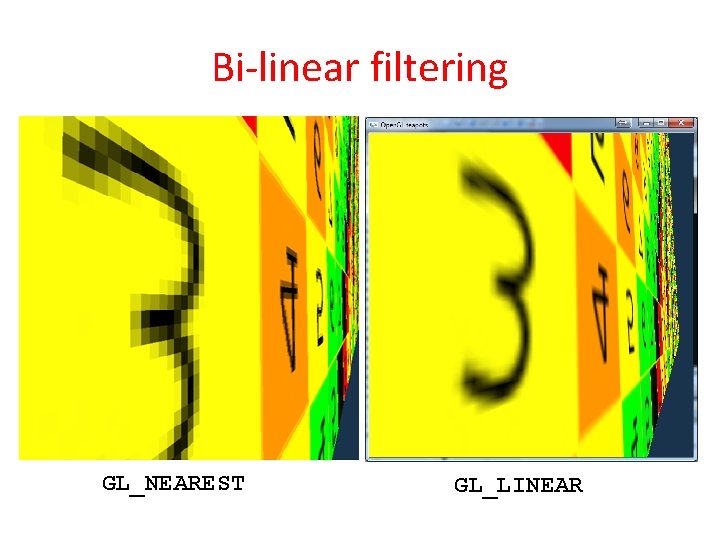

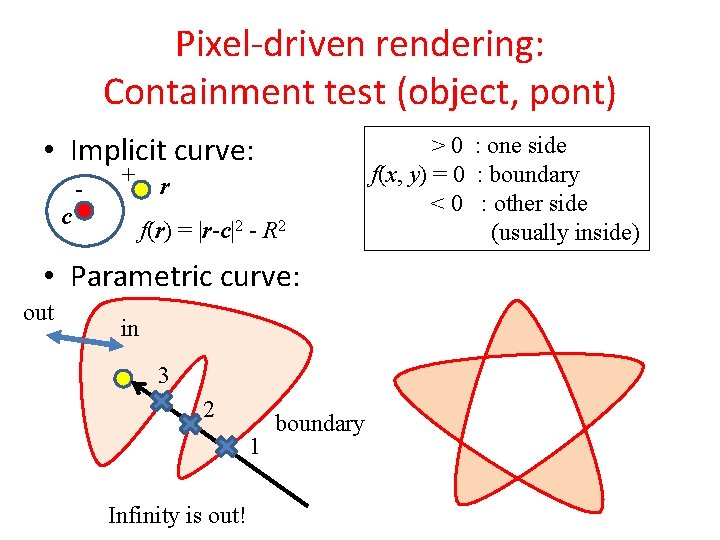

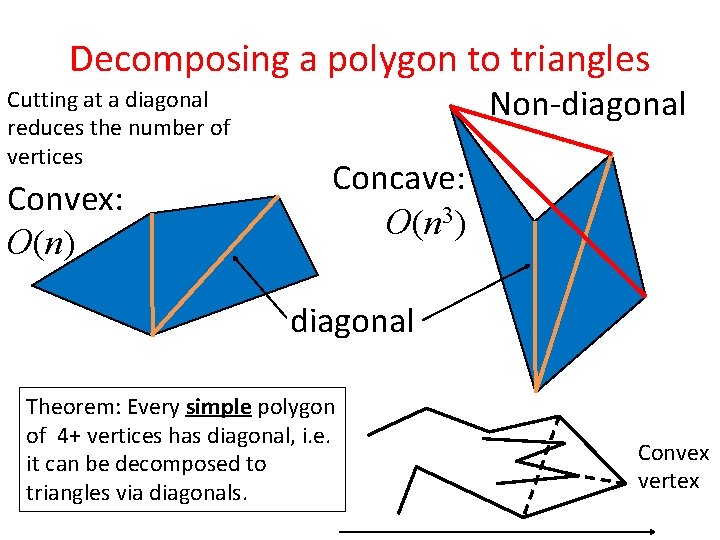

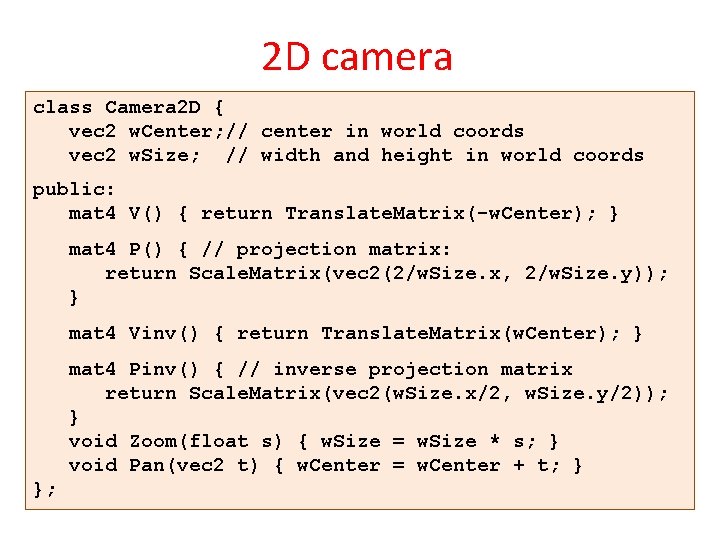

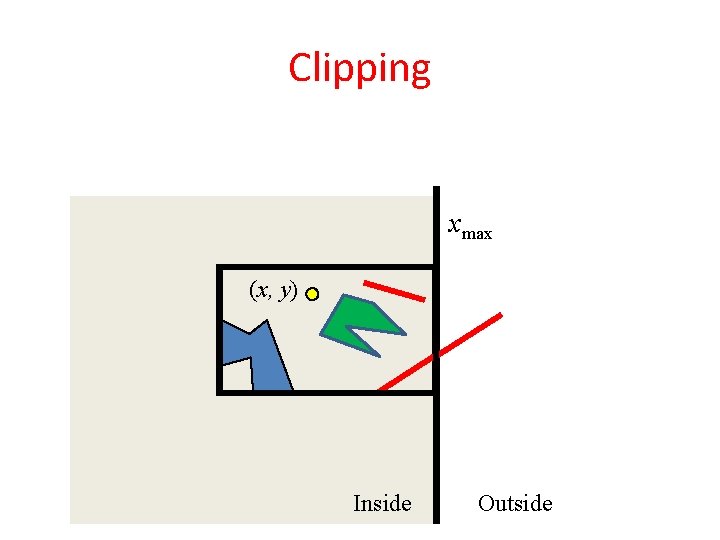

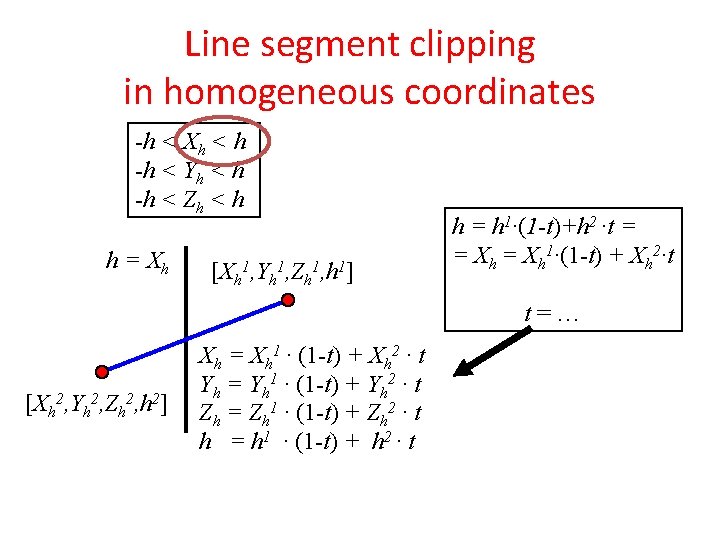

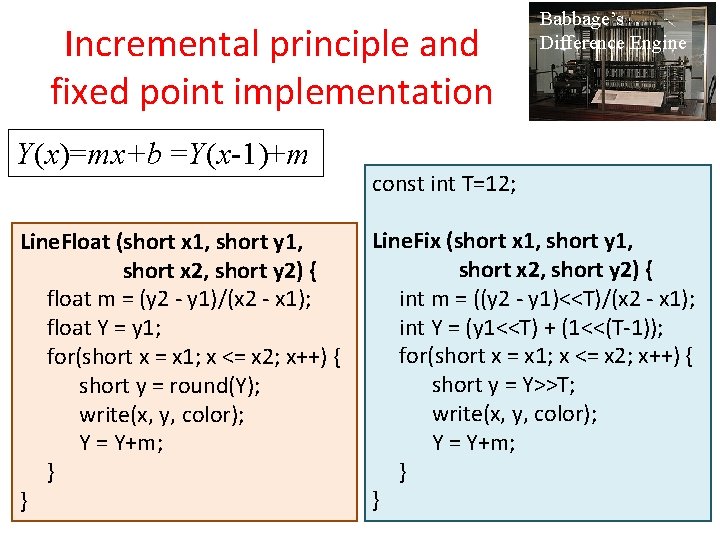

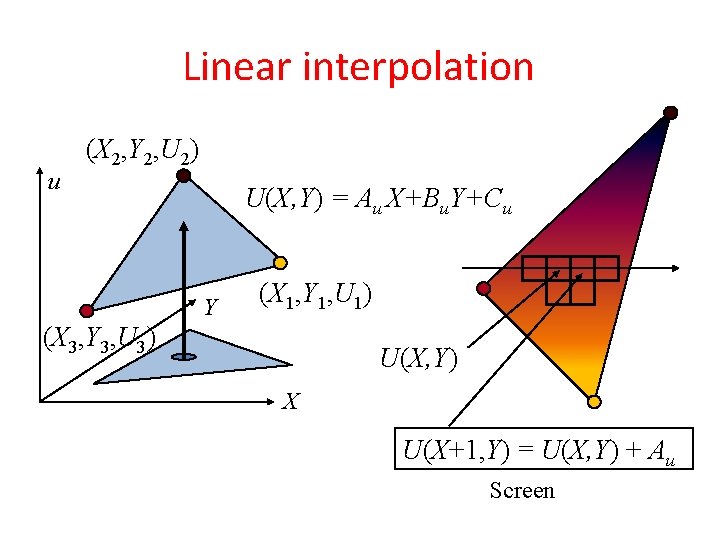

2D texturing

- Parameterization: texture space is the unit square; $x = a_x u + b_x v + c_x$, $y = a_y u + b_y v + c_y$ maps it onto the model, and the vertices $(x_i, y_i)$ receive texture coordinates $(u_i, v_i)$.
- Rendering: $[X_h, Y_h, h] = [x, y, 1]\,\mathbf{T}$, $(X, Y) = (X_h/h, Y_h/h)$; the image vertices are $(X_i, Y_i)$ with $h_i$.
- Combined, with $\mathbf{T}$ now denoting the matrix of parameterization and rendering together: $[X_h, Y_h, h] = [u, v, 1]\,\mathbf{T}$, so $[U, V, H] = [u/h, v/h, 1/h] = [X, Y, 1]\,\mathbf{T}^{-1}$, and $u = U/H$, $v = V/H$.

Linear interpolation in screen space: over the triangle with vertices $(X_1, Y_1, U_1)$, $(X_2, Y_2, U_2)$, $(X_3, Y_3, U_3)$, $U(X, Y) = A_U X + B_U Y + C_U$, so one step along a row needs a single addition: $U(X+1, Y) = U(X, Y) + A_U$.

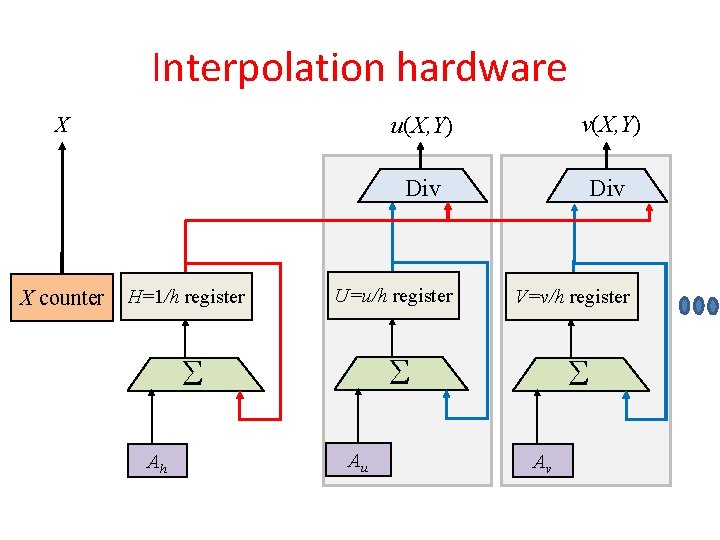

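Interpolation hardware: an X counter drives three registers, $H = 1/h$, $U = u/h$ and $V = v/h$; in every step adders (Σ) increment them by $A_h$, $A_u$ and $A_v$, and a divider (Div) outputs $u(X, Y) = U/H$ and $v(X, Y) = V/H$.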
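A sketch of one scanline of this datapath: $U = u/h$ and $H = 1/h$ are advanced by one addition per pixel and $u$ is recovered by a division. The `Linear` struct and `ScanlineU` are hypothetical names; $V = v/h$ would be handled exactly like $U$.

```cpp
#include <vector>

// Hypothetical: linear screen-space function F(X, Y) = A*X + B*Y + C.
struct Linear { double A, B, C; };

// Returns the perspective-correct u for pixels X0..X1 of row Y.
std::vector<double> ScanlineU(Linear Uf, Linear Hf, int X0, int X1, int Y) {
    std::vector<double> us;
    double U = Uf.A * X0 + Uf.B * Y + Uf.C;   // U(X0, Y) = u/h
    double H = Hf.A * X0 + Hf.B * Y + Hf.C;   // H(X0, Y) = 1/h
    for (int X = X0; X <= X1; X++) {
        us.push_back(U / H);                  // the divider: u = U / H
        U += Uf.A;                            // U(X+1, Y) = U(X, Y) + A_u
        H += Hf.A;                            // H(X+1, Y) = H(X, Y) + A_h
    }
    return us;
}
```

With constant H the result is plain linear interpolation. If instead u runs from 0 (h = 1) at X = 0 to 1 (h = 3) at X = 4, the pixel X = 2 gets u = 0.25, not the 0.5 that interpolating u directly would give.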

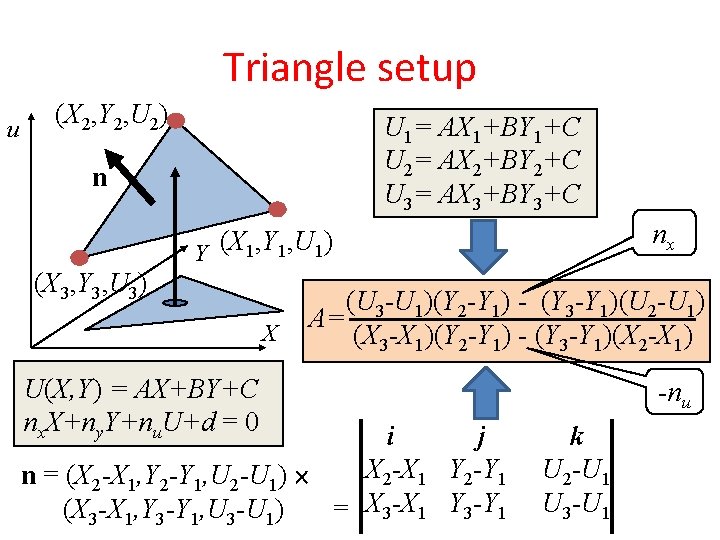

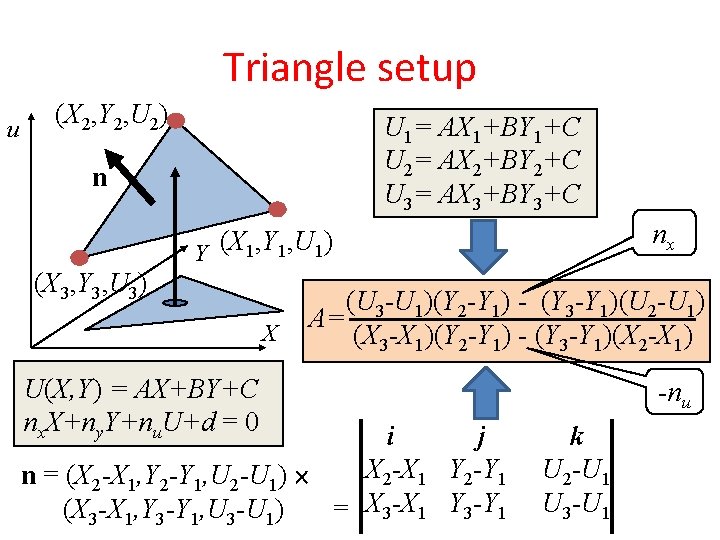

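Triangle setup: $A$, $B$, $C$ of $U(X, Y) = AX + BY + C$ follow from the three vertices,

$$U_1 = AX_1 + BY_1 + C,\quad U_2 = AX_2 + BY_2 + C,\quad U_3 = AX_3 + BY_3 + C.$$

Equivalently, the points $(X, Y, U)$ lie on the plane $n_x X + n_y Y + n_u U + d = 0$ with normal

$$\mathbf{n} = (X_2 - X_1, Y_2 - Y_1, U_2 - U_1) \times (X_3 - X_1, Y_3 - Y_1, U_3 - U_1) = \begin{vmatrix} \mathbf{i} & \mathbf{j} & \mathbf{k}\\ X_2 - X_1 & Y_2 - Y_1 & U_2 - U_1\\ X_3 - X_1 & Y_3 - Y_1 & U_3 - U_1 \end{vmatrix},$$

so that

$$A = -\frac{n_x}{n_u} = \frac{(U_3 - U_1)(Y_2 - Y_1) - (Y_3 - Y_1)(U_2 - U_1)}{(X_3 - X_1)(Y_2 - Y_1) - (Y_3 - Y_1)(X_2 - X_1)}$$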
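A sketch of the setup for one interpolated quantity, using the closed form for $A$ and the analogous one for $B = -n_y/n_u$, then $C = U_1 - AX_1 - BY_1$. `TriangleSetup` is a hypothetical name.

```cpp
// Hypothetical helper: finds A, B, C with U(X, Y) = A*X + B*Y + C taking the
// value U[i] at vertex (X[i], Y[i]), i = 0, 1, 2 (the plane through the three
// points). Returns false for a degenerate (zero-area) triangle.
bool TriangleSetup(const double X[3], const double Y[3], const double U[3],
                   double& A, double& B, double& C) {
    double dX2 = X[1] - X[0], dY2 = Y[1] - Y[0], dU2 = U[1] - U[0];
    double dX3 = X[2] - X[0], dY3 = Y[2] - Y[0], dU3 = U[2] - U[0];
    double den = dX3 * dY2 - dY3 * dX2;    // = -n_u
    if (den == 0) return false;
    A = (dU3 * dY2 - dY3 * dU2) / den;     // = -n_x / n_u
    B = (dX3 * dU2 - dU3 * dX2) / den;     // = -n_y / n_u
    C = U[0] - A * X[0] - B * Y[0];
    return true;
}
```

For example, the values 1, 3, 4 at (0, 0), (1, 0), (0, 1) give A = 2, B = 3, C = 1.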

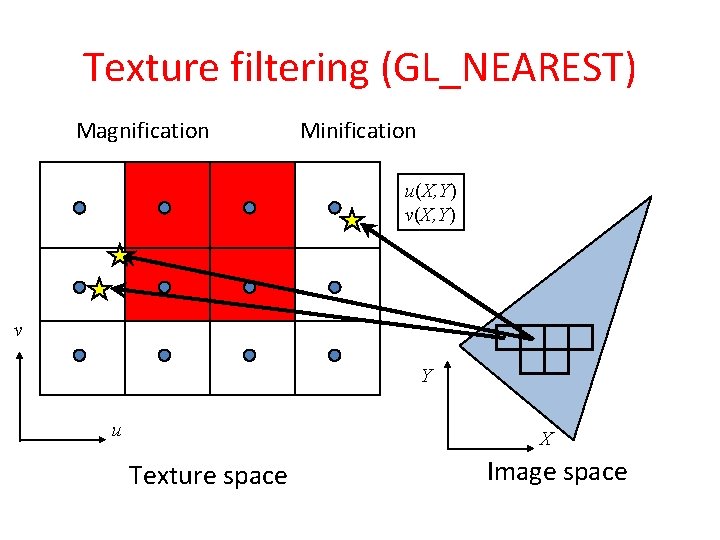

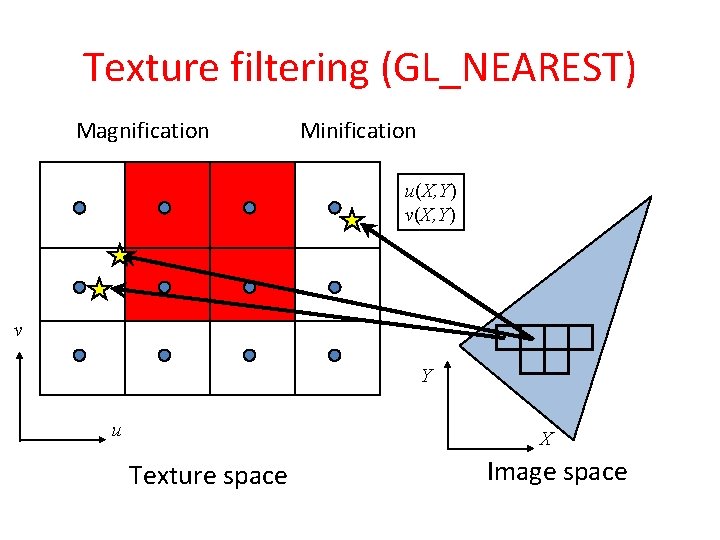

Texture filtering (GL_NEAREST): magnification and minification. Figure: the texture coordinates $u(X, Y)$ of a pixel in texture space $(u, v)$ versus image space $(X, Y)$.

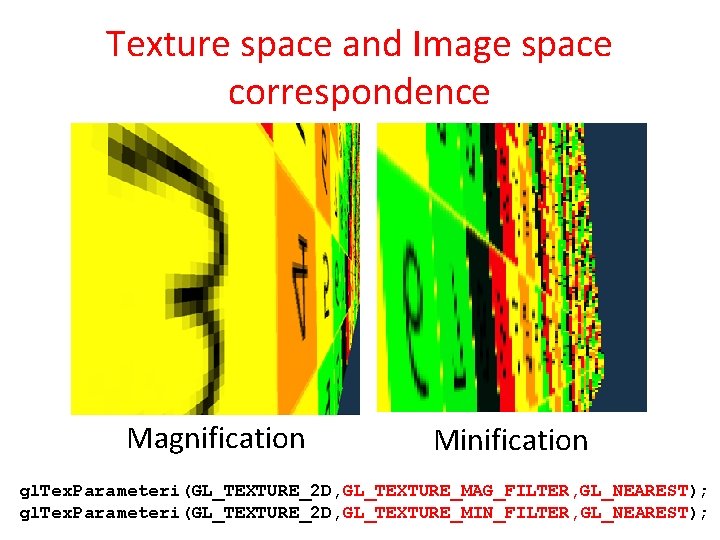

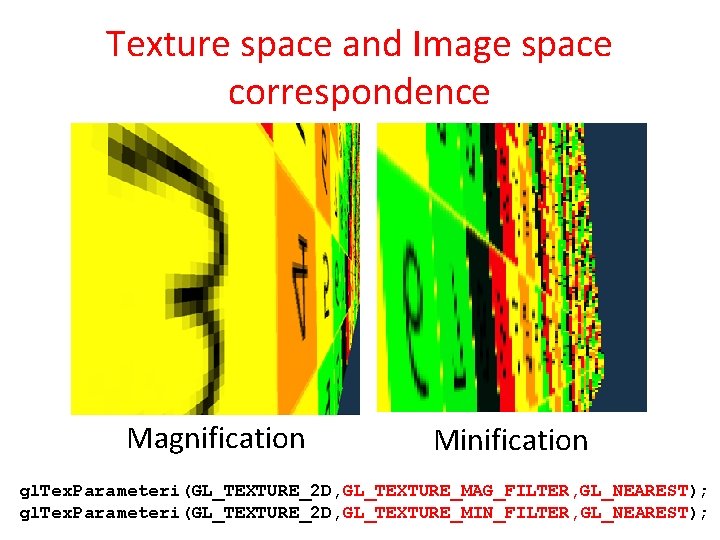

Texture space and image space correspondence: magnification and minification.

```cpp
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);
```

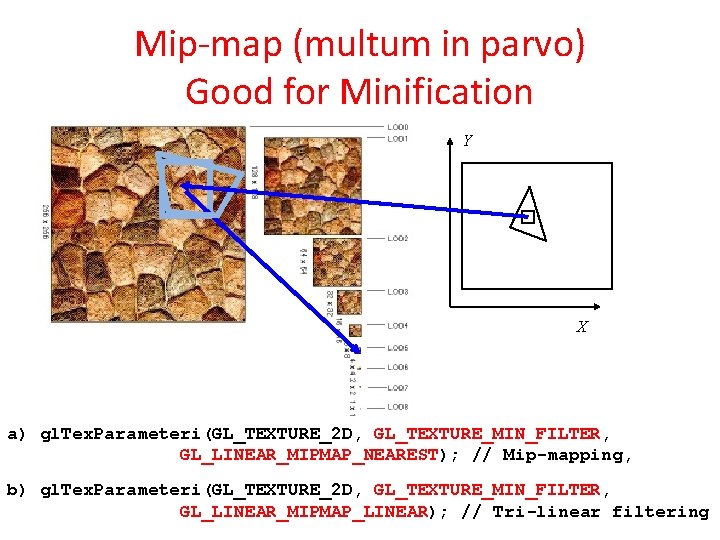

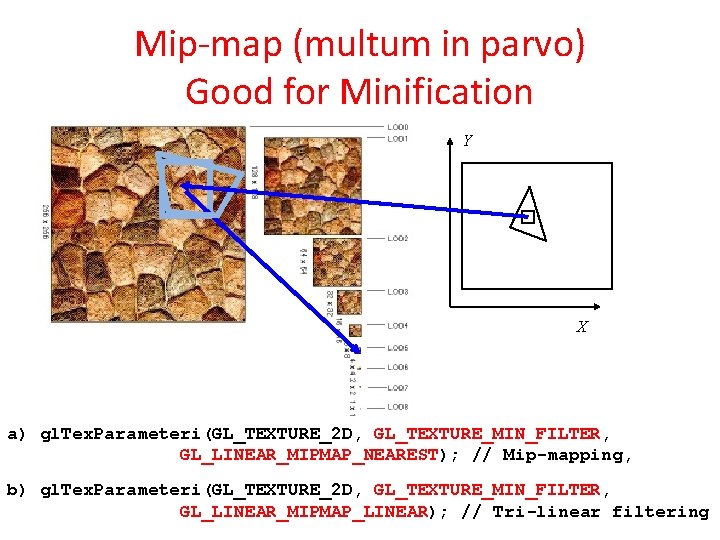

Mip-map (multum in parvo), good for minification:

```cpp
glGenerateMipmap(GL_TEXTURE_2D);  // the mip levels must exist for the mipmap filters (OpenGL 3.0+)
// a) mip-mapping
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_NEAREST);
// b) tri-linear filtering
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);
```

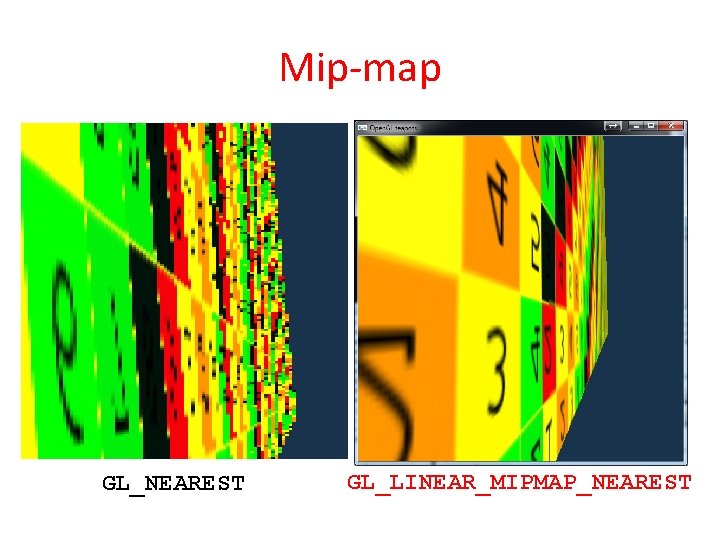

Mip-map comparison: GL_NEAREST vs. GL_LINEAR_MIPMAP_NEAREST.

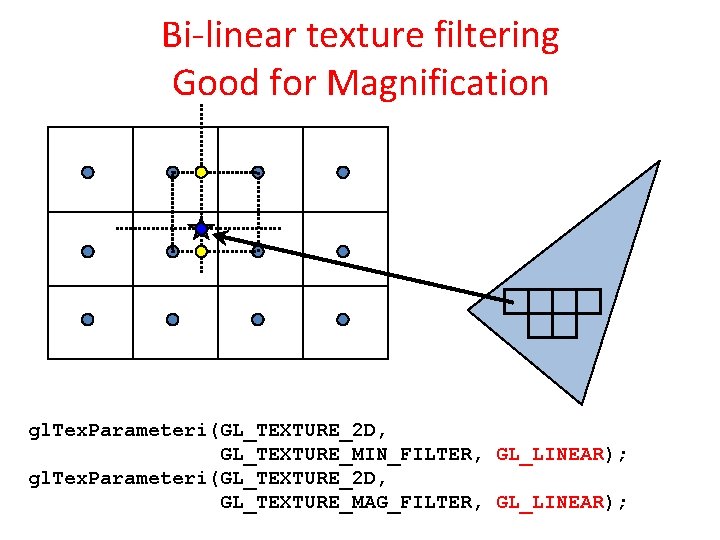

Bi-linear texture filtering, good for magnification:

```cpp
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
```
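A CPU-side sketch of what the `GL_NEAREST` and `GL_LINEAR` filters compute for a single-channel texture. The texel-center convention and the clamp-to-edge addressing are assumptions of this example (real OpenGL behavior also depends on the wrap mode); `Texture`, `SampleNearest` and `SampleBilinear` are hypothetical names.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical grayscale texture of w x h texels stored row by row; texel (i, j)
// covers [i, i+1) x [j, j+1) in texel units, its center is (i + 0.5, j + 0.5).
struct Texture {
    int w, h;
    std::vector<float> texels;
    float At(int i, int j) const {               // clamp-to-edge addressing
        i = std::max(0, std::min(i, w - 1));
        j = std::max(0, std::min(j, h - 1));
        return texels[j * w + i];
    }
};

// Nearest: the texel containing (u, v), with u, v in [0, 1].
float SampleNearest(const Texture& t, float u, float v) {
    return t.At((int)std::floor(u * t.w), (int)std::floor(v * t.h));
}

// Bilinear: weighted average of the 4 texels whose centers surround (u, v),
// the weights being the fractional offsets fx, fy.
float SampleBilinear(const Texture& t, float u, float v) {
    float x = u * t.w - 0.5f, y = v * t.h - 0.5f;  // relative to texel centers
    int i = (int)std::floor(x), j = (int)std::floor(y);
    float fx = x - i, fy = y - j;
    float top = t.At(i, j) * (1 - fx) + t.At(i + 1, j) * fx;
    float bottom = t.At(i, j + 1) * (1 - fx) + t.At(i + 1, j + 1) * fx;
    return top * (1 - fy) + bottom * fy;
}
```

On a 2 × 1 texture with texels 0 and 1, nearest filtering jumps from 0 to 1 at u = 0.5, while bilinear filtering returns 0.5 there and blends smoothly between the texel centers.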

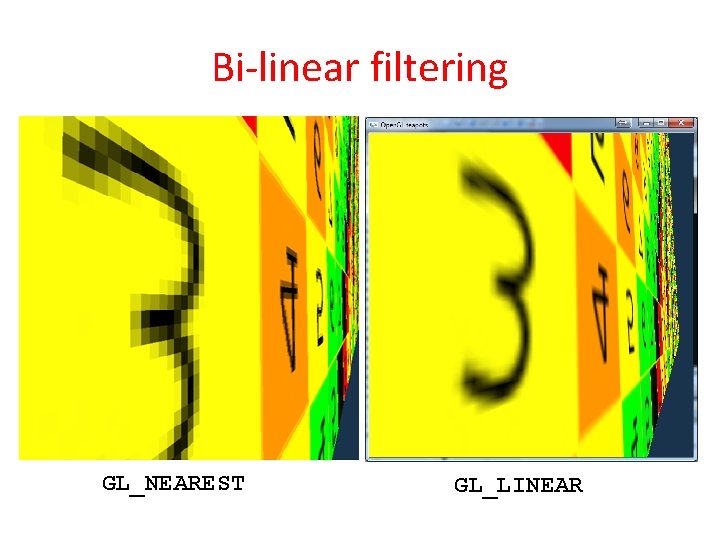

Bi-linear filtering comparison: GL_NEAREST vs. GL_LINEAR.

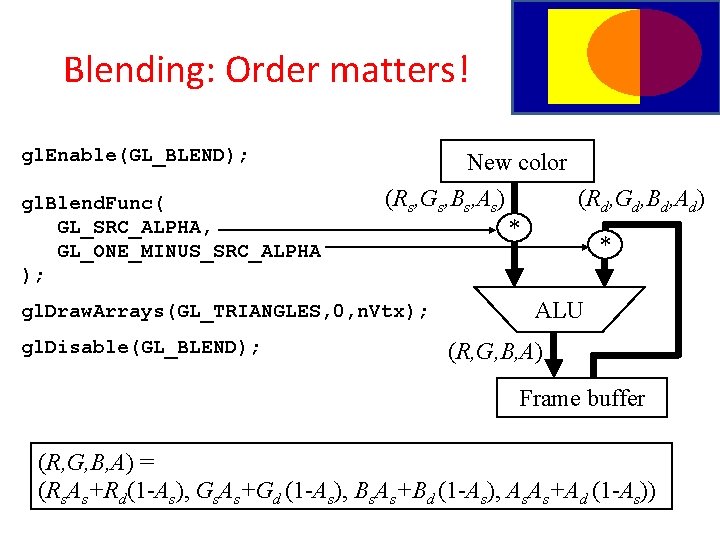

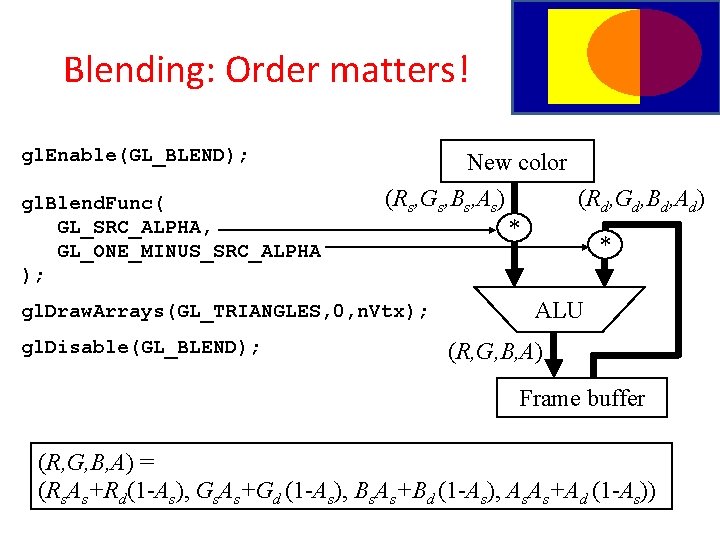

Blending: order matters!

```cpp
glEnable(GL_BLEND);
glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);
glDrawArrays(GL_TRIANGLES, 0, nVtx);
glDisable(GL_BLEND);
```

The ALU combines the new color $(R_s, G_s, B_s, A_s)$ with the frame buffer color $(R_d, G_d, B_d, A_d)$:

$$(R, G, B, A) = (R_s A_s + R_d(1 - A_s),\; G_s A_s + G_d(1 - A_s),\; B_s A_s + B_d(1 - A_s),\; A_s A_s + A_d(1 - A_s))$$
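A sketch of the per-channel arithmetic that `glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA)` configures, which also shows why the drawing order matters; `RGBA` and `BlendOver` are hypothetical names.

```cpp
// Hypothetical RGBA color with channels in [0, 1].
struct RGBA { float r, g, b, a; };

// Frame buffer update: every channel (alpha included) becomes
// src * As + dst * (1 - As).
RGBA BlendOver(RGBA src, RGBA dst) {
    float as = src.a;
    return {src.r * as + dst.r * (1 - as),
            src.g * as + dst.g * (1 - as),
            src.b * as + dst.b * (1 - as),
            src.a * as + dst.a * (1 - as)};
}
```

Over an opaque black background, drawing half-transparent red and then half-transparent blue gives red 0.25 and blue 0.5; the opposite order gives red 0.5 and blue 0.25.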

Exercises

- Prove that any simple polygon of at least 4 vertices has a diagonal!
- Prove the two ears theorem!
- Does it make sense to develop a circle rasterization algorithm?
- Write a polygon filling algorithm that can handle even non-simple polygons (the boundary can be built of multiple polylines and may intersect itself).
- Implement the clipping and rasterization algorithms learnt here!
- Write a program that decides whether an axis-aligned window may contain any primitive.
- Give the class diagram of a PowerPoint-like program.
- What is the rationale behind the introduction of normalized device space?