1999 Outline I Introduction to pattern recognition Definition

- Slides: 62

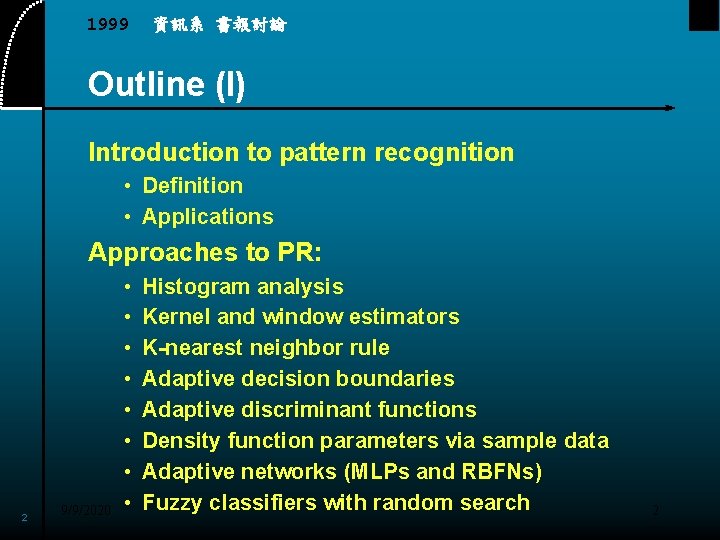

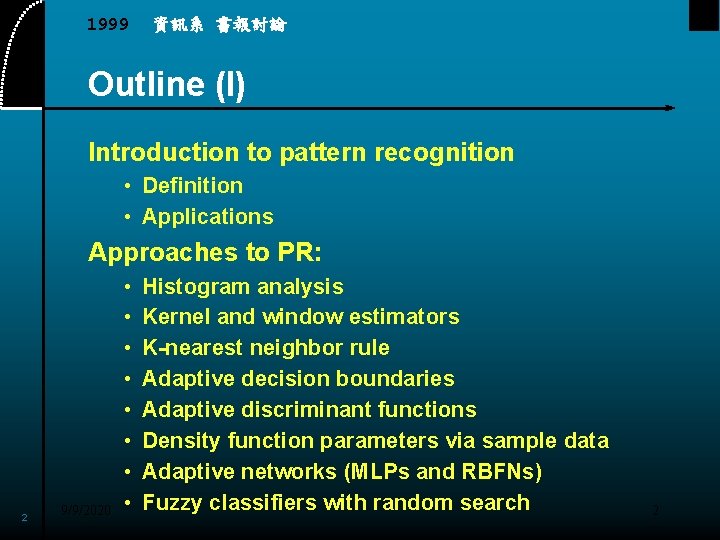

1999 Dept. of Computer Science Seminar, 9/9/2020

Outline (I)
• Introduction to pattern recognition
  - Definition
  - Applications
• Approaches to PR:
  - Histogram analysis
  - Kernel and window estimators
  - K-nearest neighbor rule
  - Adaptive decision boundaries
  - Adaptive discriminant functions
  - Density function parameters via sample data
  - Adaptive networks (MLPs and RBFNs)
  - Fuzzy classifiers with random search

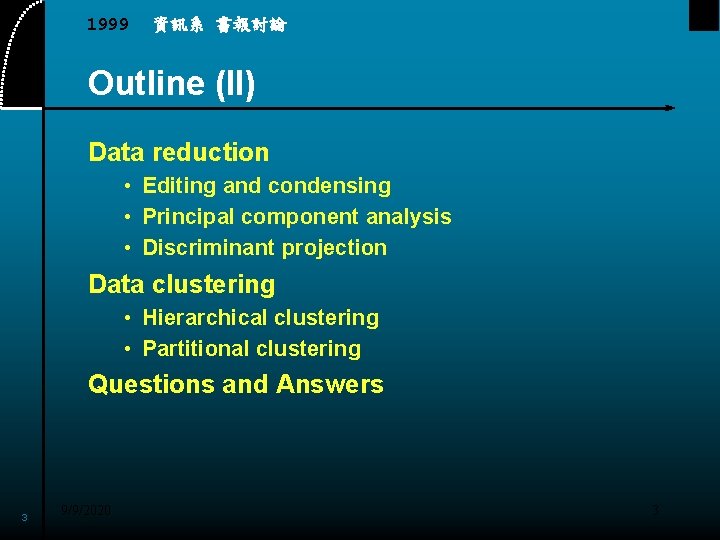

Outline (II)
• Data reduction
  - Editing and condensing
  - Principal component analysis
  - Discriminant projection
• Data clustering
  - Hierarchical clustering
  - Partitional clustering
• Questions and Answers

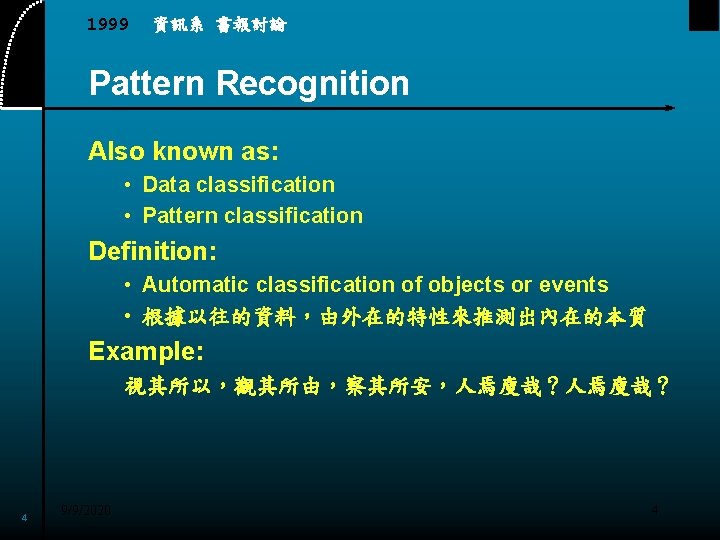

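Pattern Recognition
• Also known as:
  - Data classification
  - Pattern classification
• Definition:
  - Automatic classification of objects or events
  - Inferring the inner nature of something from its external characteristics, based on past data
• Example: "See what a man does, mark his motives, examine what he rests in: how can a man conceal his character?" (Confucius, Analects)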

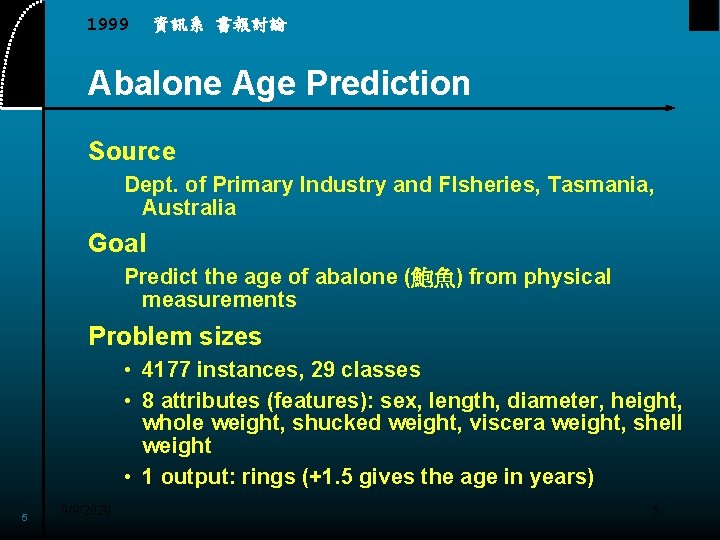

Abalone Age Prediction
• Source: Dept. of Primary Industry and Fisheries, Tasmania, Australia
• Goal: Predict the age of abalone from physical measurements
• Problem size:
  - 4177 instances, 29 classes
  - 8 attributes (features): sex, length, diameter, height, whole weight, shucked weight, viscera weight, shell weight
  - 1 output: rings (+1.5 gives the age in years)

Wine Recognition
• Source: Institute of Pharmaceutical and Food Analysis and Technologies, Via Brigata Salerno, 16147 Genoa, Italy
• Goal: Use 13 chemical constituents to determine the origin of wines
• Problem size: 178 instances, 3 classes, 13 attributes

Mushroom Classification
• Source: Mushroom records drawn from The Audubon Society Field Guide to North American Mushrooms (1981)
• Goal: Determine whether a mushroom is poisonous or edible
• Problem size: 8124 instances, 2 classes, 22 attributes

Liver Disorder Classification
• Source: BUPA Medical Research Ltd.
• Goal: Use variables from blood tests and alcohol consumption to determine whether a liver disorder exists
• Problem size: 345 instances, 2 classes, 6 attributes (the first five are blood test results; the last is alcohol consumption per day)

Credit Screening
• Source: Chiharu Sano, csano@bonnie.ICS.UCI.EDU
• Goal: Determine which people are granted credit
• Problem size: 125 instances, 2 classes, 15 attributes

House Price Prediction
• Source: CMU Stat. Library
• Goal: Predict house prices near Boston
• Problem size: 506 instances, 13 attributes

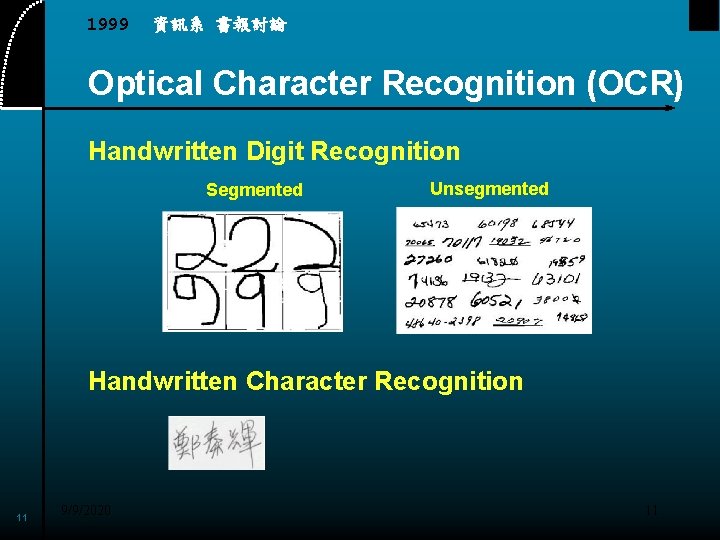

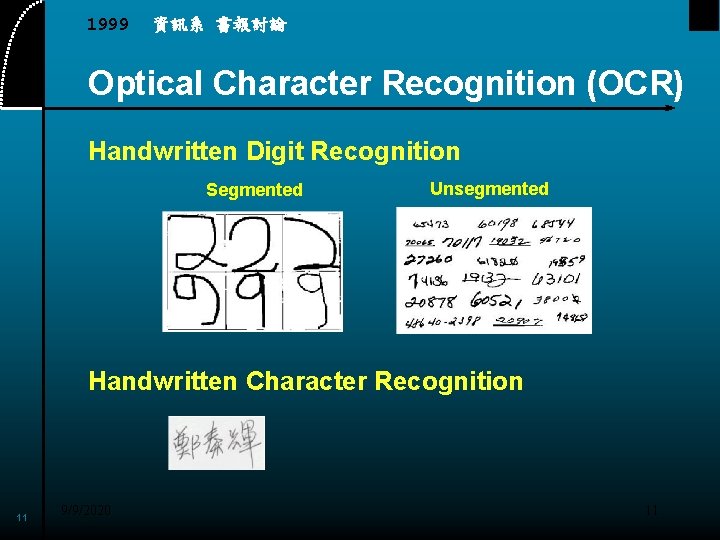

Optical Character Recognition (OCR)
• Handwritten digit recognition
  - Segmented
  - Unsegmented
• Handwritten character recognition

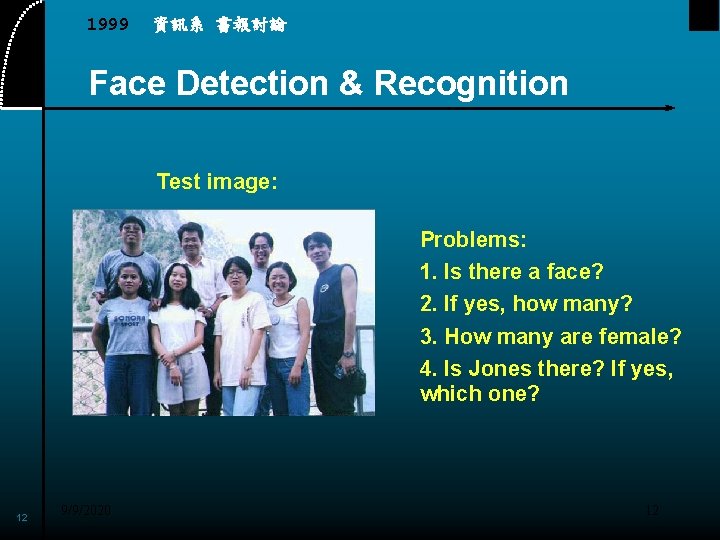

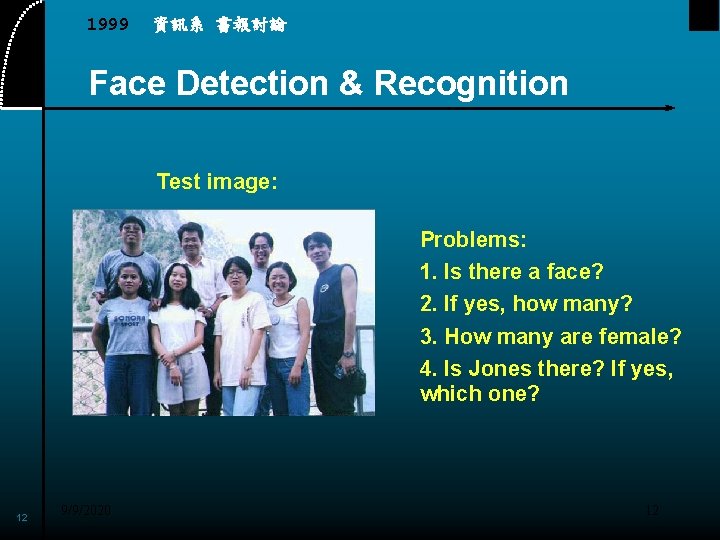

Face Detection & Recognition
Test image (figure). Problems:
1. Is there a face?
2. If yes, how many?
3. How many are female?
4. Is Jones there? If yes, which one?

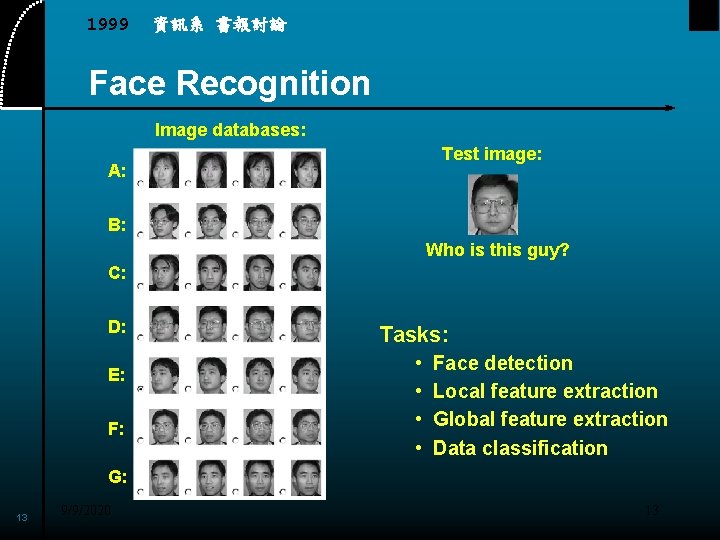

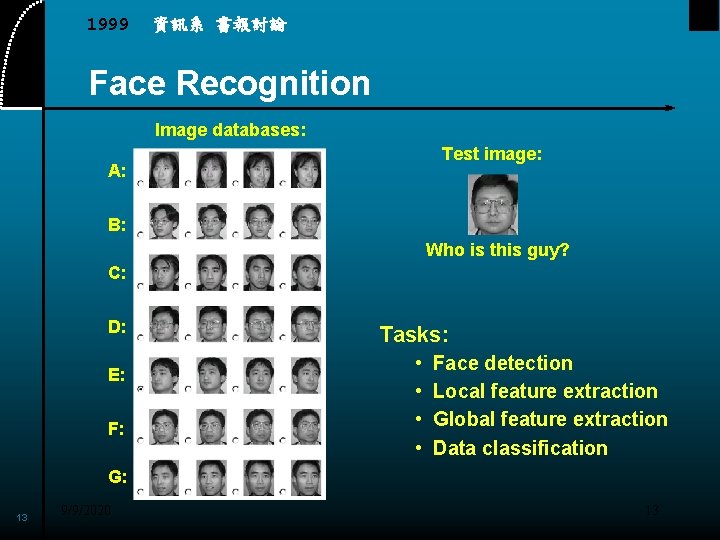

Face Recognition
• Image database: faces A to G (figure)
• Test image: Who is this guy?
• Tasks:
  - Face detection
  - Local feature extraction
  - Global feature extraction
  - Data classification

Pattern Recognition Applications
• Biometric ID: identification of people from physical characteristics
• Speech/speaker recognition
• Classification of seismic signals
• Medical waveform classification (EEG, ECG)
• Wave-based nuclear reactor diagnosis
• License plate recognition
• Decision making in stock trading
• User modeling in WWW environments
• Satellite picture analysis
• Medical image analysis (CAT scan, MRI)

Biometric
Identification of people from their physical characteristics, such as:
• Faces
• Voices
• Fingerprints
• Palm prints
• Hand vein distributions
• Hand shapes and sizes
• Retinal scans

Who's Doing Pattern Recognition?
• Computer science (machine learning, data mining and knowledge discovery)
  - Nearest neighbor rule
  - ID3, C4.5
  - Neural networks
  - Fuzzy logic
• Statistics/Mathematics (multivariate analysis)
  - Discriminant analysis
  - CART: Classification and Regression Trees
  - MARS
  - Jackknife procedure
  - Projection pursuit

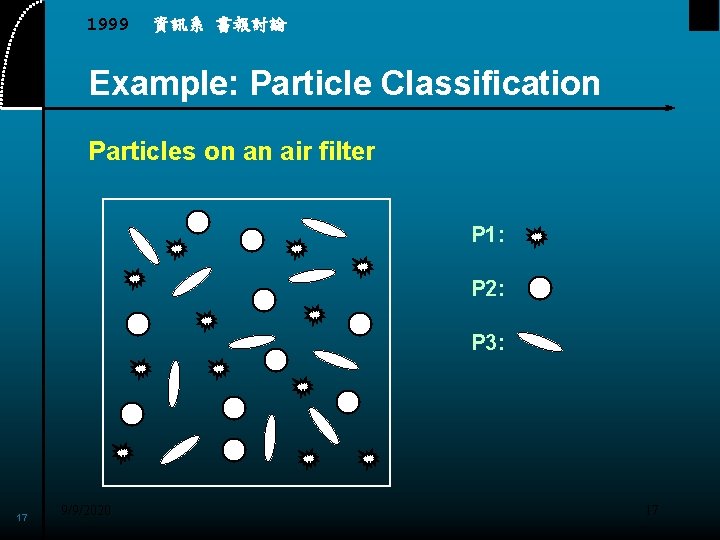

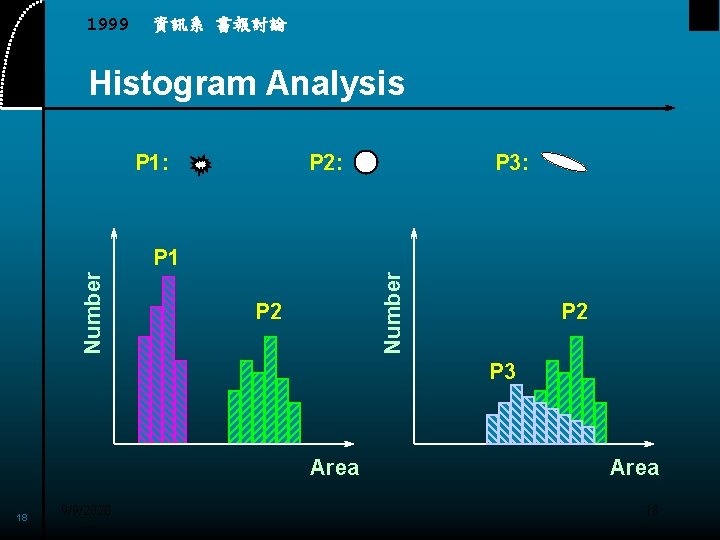

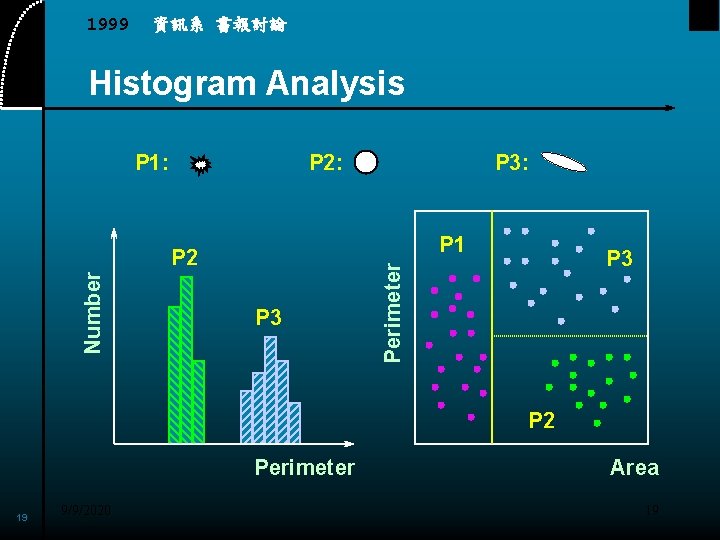

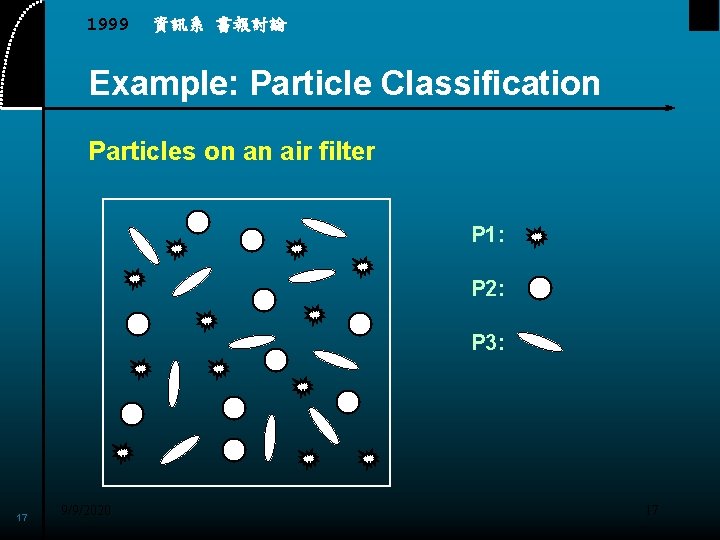

Example: Particle Classification
Particles on an air filter, in three classes P1, P2, P3 (figure)

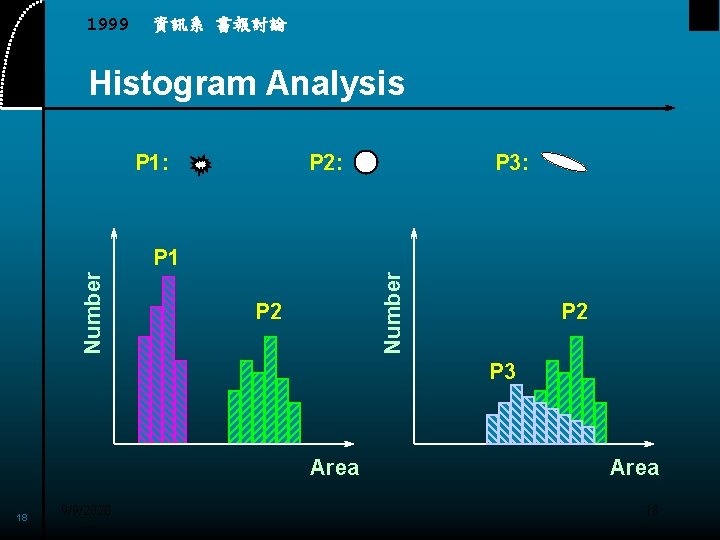

Histogram Analysis
(Figure: particle classes P1, P2, P3 and their histograms of number of particles vs. area)

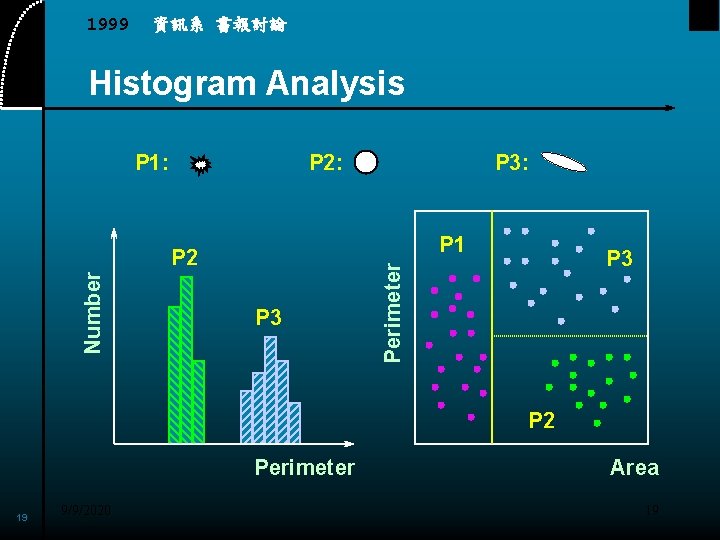

Histogram Analysis
(Figure: particle classes P1, P2, P3; histograms of number of particles vs. perimeter, and a perimeter vs. area plot)

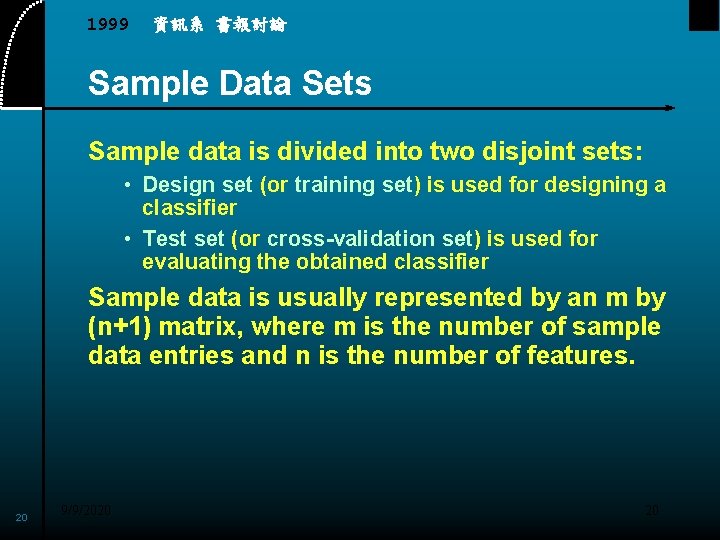

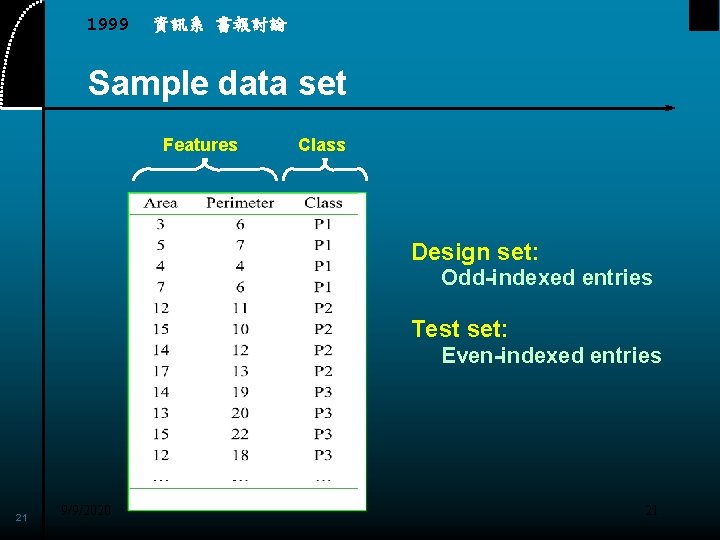

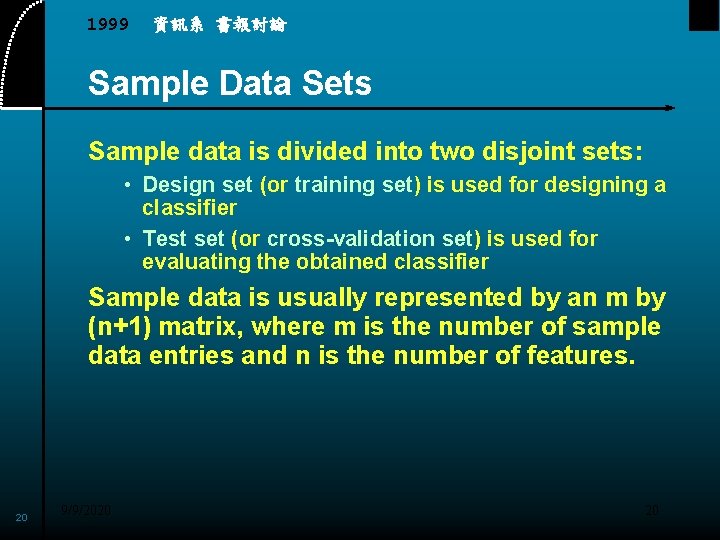

Sample Data Sets
• Sample data is divided into two disjoint sets:
  - Design set (or training set): used for designing a classifier
  - Test set (or cross-validation set): used for evaluating the obtained classifier
• Sample data is usually represented by an m × (n+1) matrix, where m is the number of sample data entries and n is the number of features; the extra column holds the class.

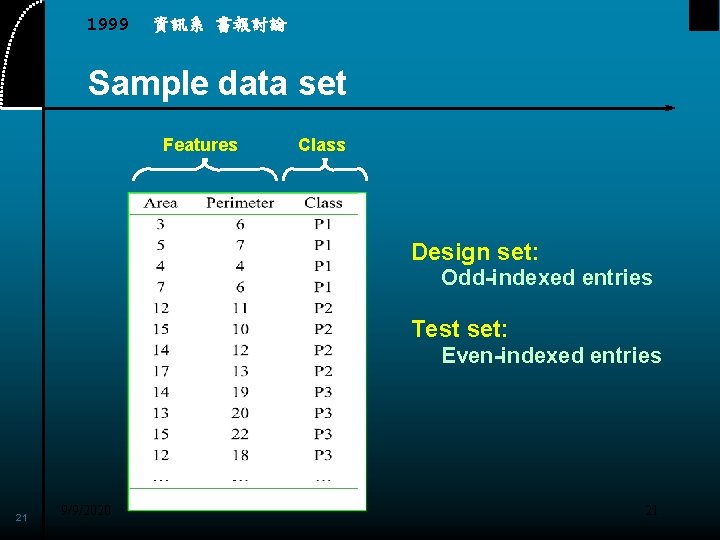

Sample Data Set
(Figure: table with feature columns and a class column)
• Design set: odd-indexed entries
• Test set: even-indexed entries
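
A minimal sketch of the odd/even split, assuming the sample matrix is a Python list of rows whose last element is the class label. Rows are counted from 1, as on the slide. The function name `split_odd_even` and the data values are hypothetical.

```python
def split_odd_even(samples):
    """Split the rows of an m x (n+1) sample matrix into design and test sets.

    Rows are counted from 1: rows 1, 3, 5, ... form the design set and
    rows 2, 4, 6, ... form the test set.
    """
    design = samples[0::2]  # 1st, 3rd, 5th, ... rows
    test = samples[1::2]    # 2nd, 4th, 6th, ... rows
    return design, test


# Hypothetical 5 x (2+1) matrix: two features, class label in the last column.
data = [
    [1.0, 2.0, "A"],
    [1.5, 1.8, "A"],
    [5.0, 8.0, "B"],
    [6.0, 9.0, "B"],
    [1.2, 0.8, "A"],
]
design_set, test_set = split_odd_even(data)
print(len(design_set), len(test_set))  # 3 2
```

Because the two slices never share a row, the design and test sets are disjoint, as the previous slide requires.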

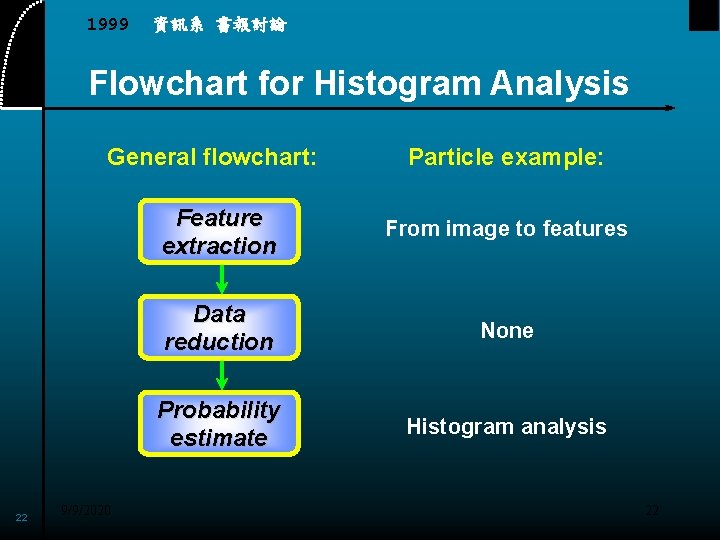

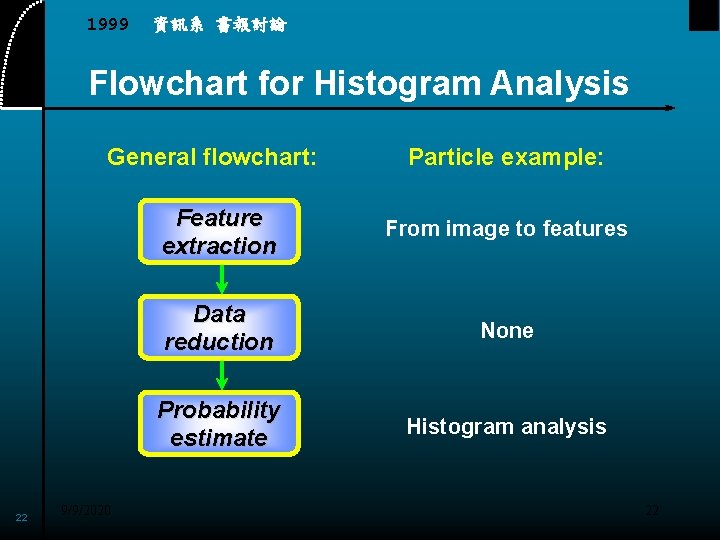

Flowchart for Histogram Analysis
General flowchart vs. particle example:
• Feature extraction: from image to features
• Data reduction: none
• Probability estimate: histogram analysis

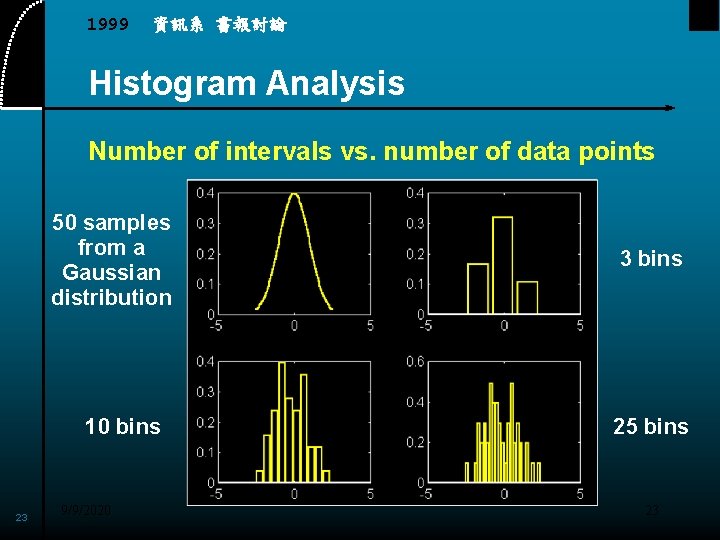

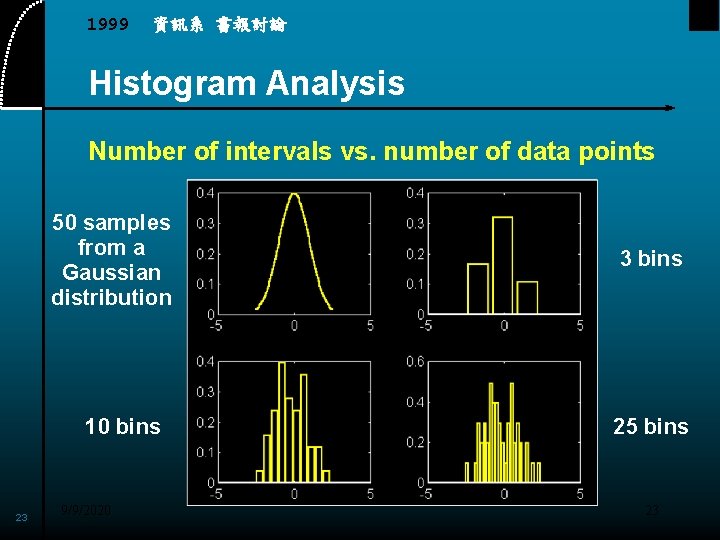

Histogram Analysis
Number of intervals vs. number of data points: 50 samples from a Gaussian distribution, shown with 3, 10, and 25 bins (figure)

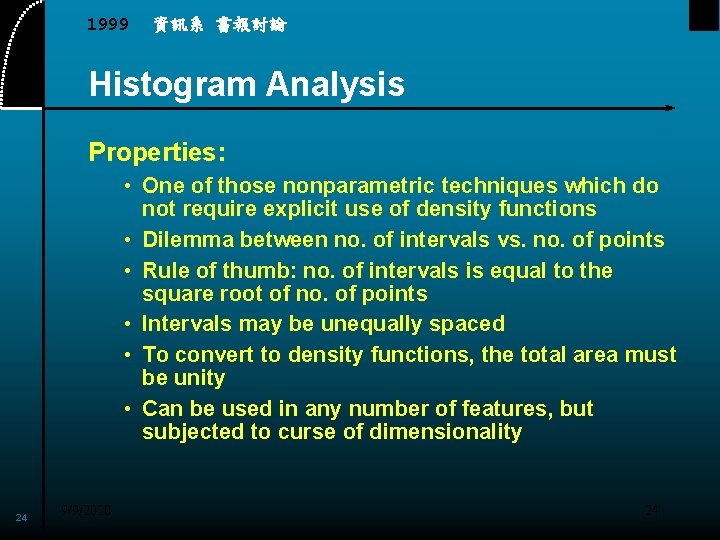

Histogram Analysis
Properties:
• One of the nonparametric techniques, which do not require explicit use of density functions
• Dilemma between the number of intervals and the number of points
• Rule of thumb: the number of intervals equals the square root of the number of points
• Intervals may be unequally spaced
• To convert a histogram into a density function, normalize it so that the total area is unity
• Can be used with any number of features, but is subject to the curse of dimensionality
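
A minimal sketch of a histogram density estimate that follows two of the properties above. It uses the square-root rule of thumb for the bin count, and it normalizes so the total area is 1. Equal-width bins are an assumption made for brevity (the slide allows unequal spacing). `histogram_density` is a hypothetical name.

```python
import math
import random


def histogram_density(samples, num_bins=None):
    """Equal-width histogram normalized into a density estimate.

    If num_bins is None, use the rule of thumb: number of intervals is about
    the square root of the number of points. Returns (edges, heights), where
    the total area sum(height * bin_width) is 1.
    """
    n = len(samples)
    if num_bins is None:
        num_bins = max(1, round(math.sqrt(n)))
    lo, hi = min(samples), max(samples)
    width = (hi - lo) / num_bins or 1.0  # guard against identical samples
    counts = [0] * num_bins
    for x in samples:
        # Clamp the index so the largest sample lands in the last bin.
        k = min(int((x - lo) / width), num_bins - 1)
        counts[k] += 1
    edges = [lo + i * width for i in range(num_bins + 1)]
    # Dividing counts by n * width makes the total area equal to 1.
    heights = [c / (n * width) for c in counts]
    return edges, heights


random.seed(0)
xs = [random.gauss(0.0, 1.0) for _ in range(50)]  # 50 Gaussian samples
edges, heights = histogram_density(xs)
bin_width = edges[1] - edges[0]
print(len(heights))  # 7, since sqrt(50) is about 7.07
print(round(sum(h * bin_width for h in heights), 6))  # 1.0
```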

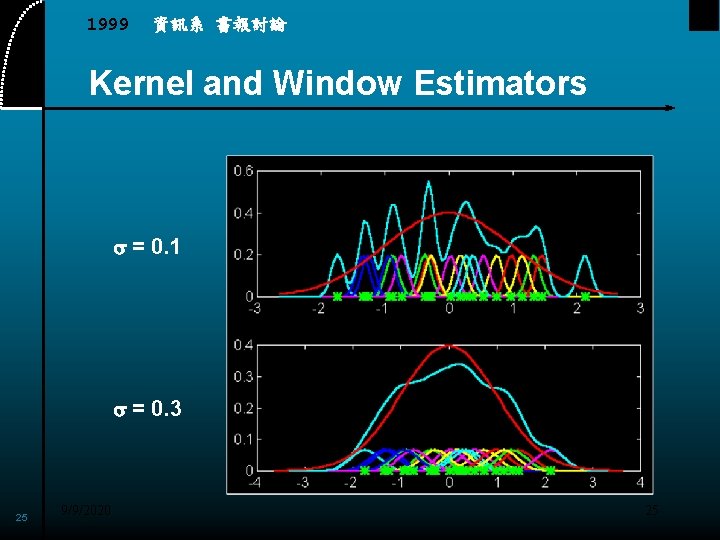

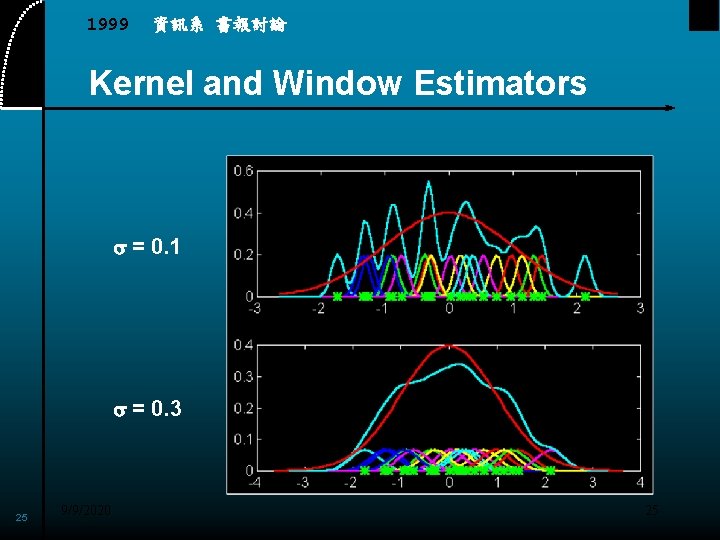

Kernel and Window Estimators
(Figure: kernel estimates with σ = 0.1 and σ = 0.3)

Kernel and Window Estimators
Properties:
• Also known as the Parzen estimator
• Its computation is similar to convolution
• Can be used for multi-feature estimation
• The kernel width is found by trial and error
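
A minimal sketch of a one-dimensional Parzen estimator. It assumes a Gaussian kernel whose width σ plays the role of the σ = 0.1 and σ = 0.3 shown earlier; the slides do not say which kernel they used. The estimate places one kernel bump on every sample and averages them, which is why the computation resembles a convolution. The sample values and `parzen_density` are hypothetical.

```python
import math


def parzen_density(x, samples, sigma):
    """Parzen (kernel) density estimate at x with a Gaussian kernel of width sigma.

    Averaging one kernel bump per sample is the same as convolving the
    sample "spikes" with the kernel.
    """
    norm = 1.0 / (math.sqrt(2.0 * math.pi) * sigma)
    return sum(norm * math.exp(-0.5 * ((x - xi) / sigma) ** 2) for xi in samples) / len(samples)


samples = [-1.0, 0.0, 0.5, 2.0]
for sigma in (0.1, 0.3):
    # A Riemann sum over a wide grid should give a total area close to 1.
    step = 0.001
    area = sum(parzen_density(-5.0 + i * step, samples, sigma) * step for i in range(12001))
    print(sigma, round(area, 3))
```

A small σ gives a spiky estimate and a large σ a smooth one. This is the trade-off behind "width is found by trial and error".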

Example: Gender Classification
• Goal: Determine a person's gender from his/her profile data
• Features collected:
  - Birthday
  - Blood type
  - Height and weight
  - Density
  - Three measures (bust, waist, hip)
  - Hair length
  - Voice pitch
  - …
  - Chromosome

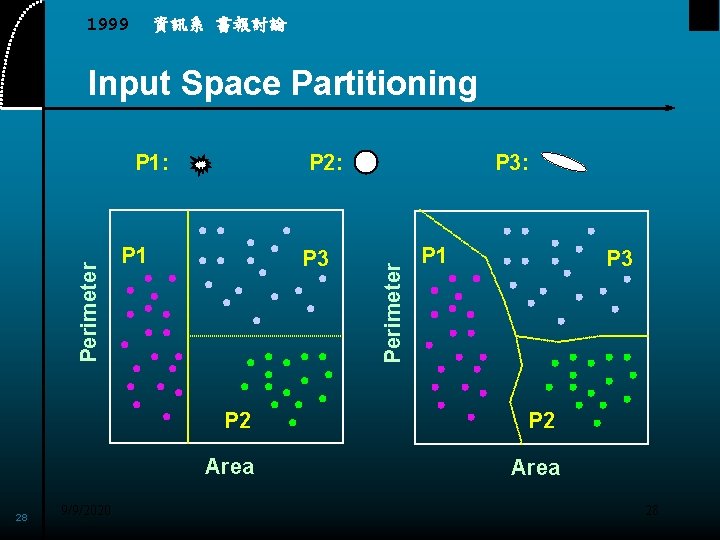

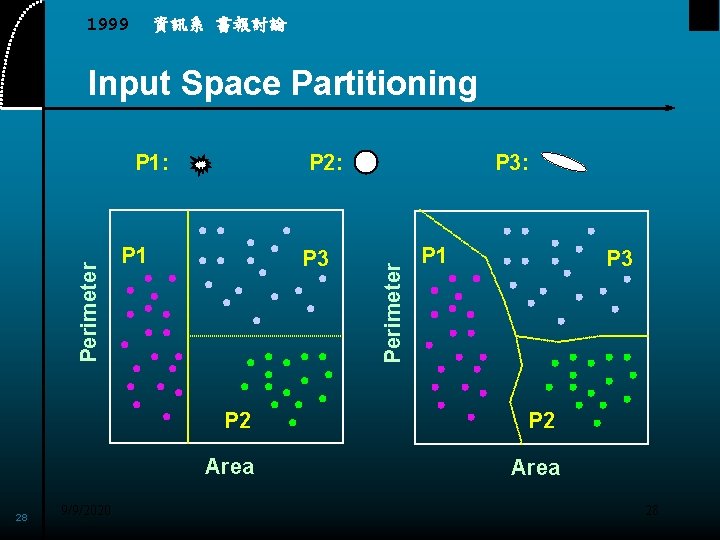

Input Space Partitioning
(Figure: particle classes P1, P2, P3 occupying separate regions of the perimeter vs. area plane)

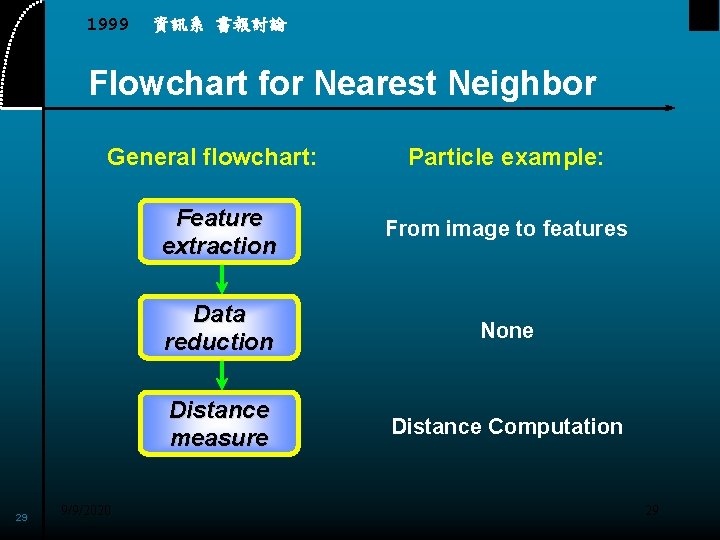

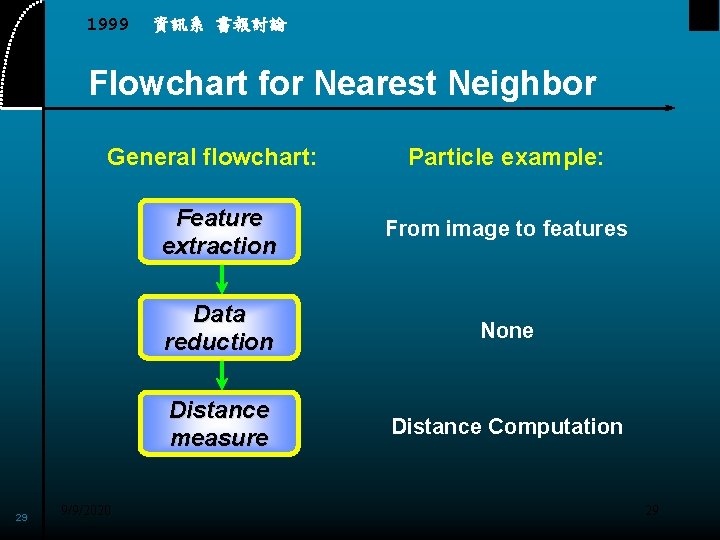

Flowchart for Nearest Neighbor
General flowchart vs. particle example:
• Feature extraction: from image to features
• Data reduction: none
• Distance measure: distance computation

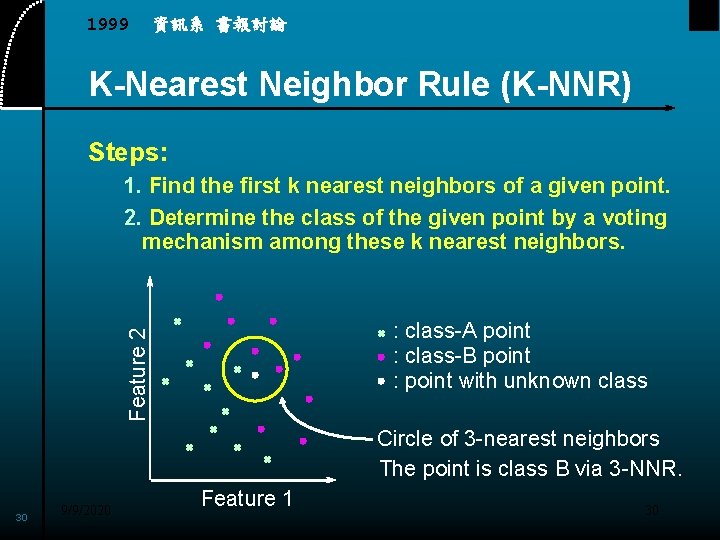

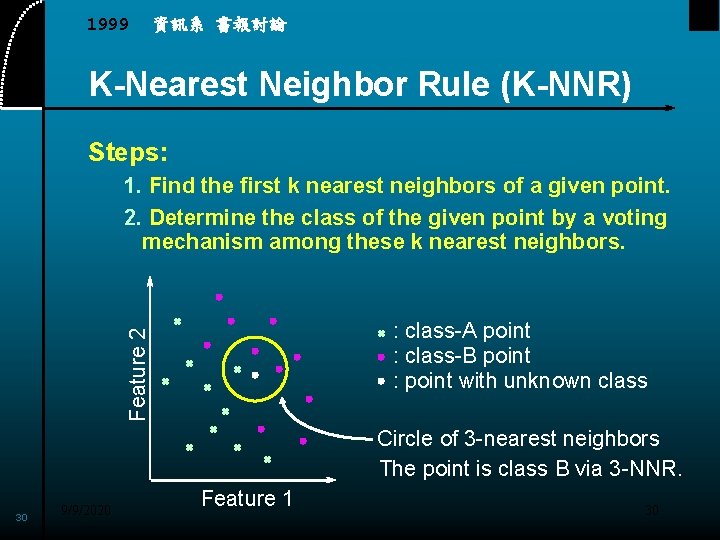

K-Nearest Neighbor Rule (K-NNR)
Steps:
1. Find the k nearest neighbors of a given point.
2. Determine the class of the given point by a vote among these k nearest neighbors.
(Figure: class-A points, class-B points, and a point of unknown class in the Feature 1 vs. Feature 2 plane. The circle encloses its 3 nearest neighbors, so the point is assigned to class B by the 3-NNR.)
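
A minimal sketch of the two K-NNR steps, assuming Euclidean distance and a plain majority vote. The training data is a list of `(feature_vector, label)` pairs. `knn_classify` and the points are hypothetical. Vote ties are resolved in favor of the label seen first among the nearest neighbors; the slide does not specify a tie rule.

```python
import math
from collections import Counter


def knn_classify(query, data, k=3):
    """K-nearest neighbor rule.

    data is a list of (feature_vector, label) pairs.
    Step 1: find the k samples nearest to the query (Euclidean distance).
    Step 2: return the label with the most votes among them.
    Ties in the vote go to the label met first among the nearest neighbors.
    """
    neighbors = sorted(data, key=lambda item: math.dist(query, item[0]))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


data = [((0, 0), "A"), ((0, 1), "A"), ((1, 0), "A"),
        ((3, 3), "B"), ((3, 2), "B"), ((2, 3), "B")]
print(knn_classify((2, 2), data, k=3))      # B
print(knn_classify((0.2, 0.2), data, k=3))  # A
```

With k = 1 this reduces to the 1-NNR, whose decision boundary is the piecewise linear Voronoi boundary.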

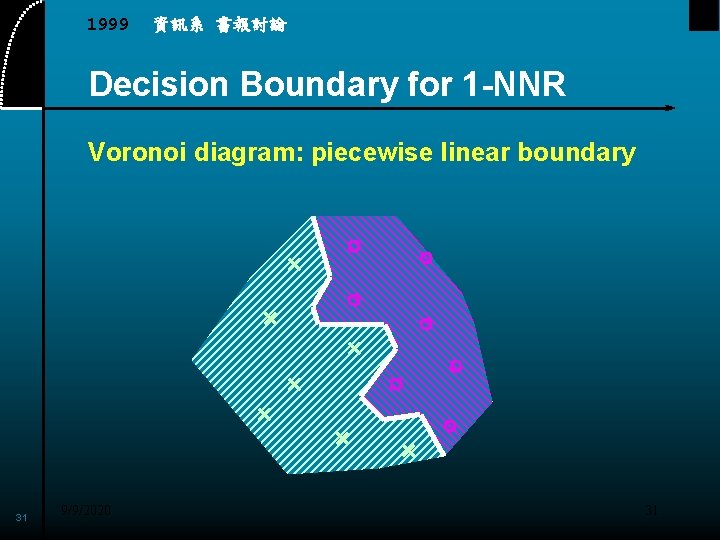

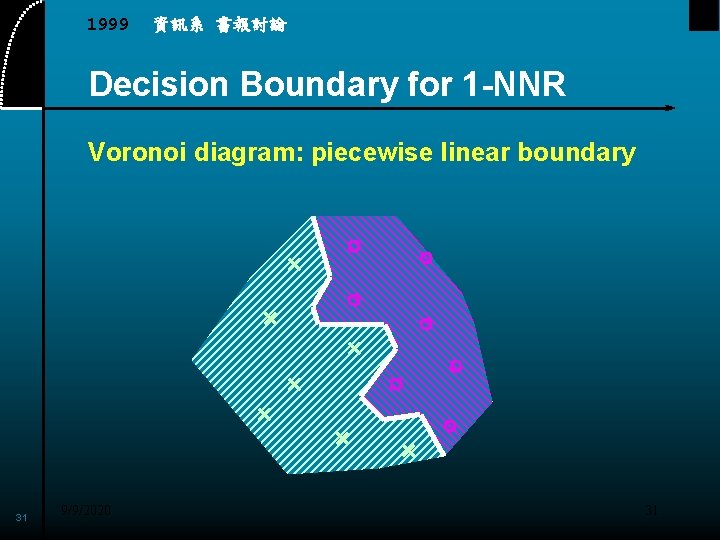

Decision Boundary for 1-NNR
Voronoi diagram: piecewise linear boundary (figure)

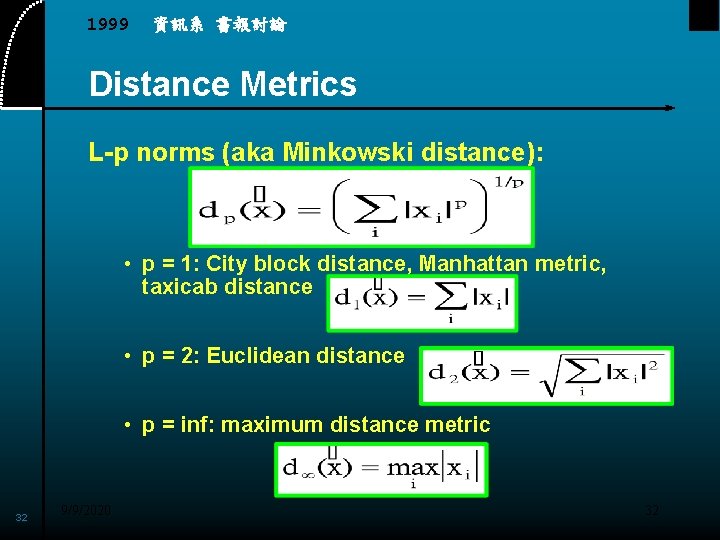

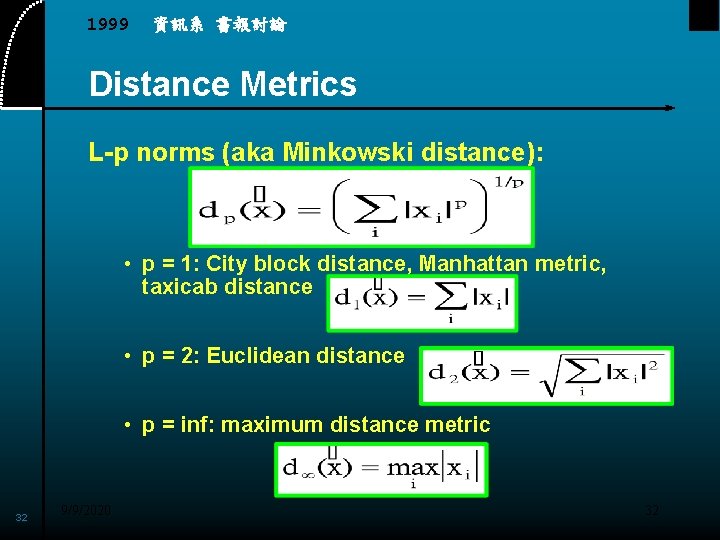

Distance Metrics
L-p norms (a.k.a. Minkowski distance):
• p = 1: city block distance, Manhattan metric, taxicab distance
• p = 2: Euclidean distance
• p = ∞: maximum distance metric
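
For n features, the L-p distance is $L_p(\mathbf{x}, \mathbf{y}) = \left(\sum_{i=1}^{n} |x_i - y_i|^p\right)^{1/p}$. As p → ∞ it becomes $\max_i |x_i - y_i|$. A minimal sketch, with `minkowski` as a hypothetical name:

```python
def minkowski(x, y, p):
    """L-p (Minkowski) distance; pass p = float('inf') for the maximum metric."""
    diffs = [abs(a - b) for a, b in zip(x, y)]
    if p == float("inf"):
        return max(diffs)
    return sum(d ** p for d in diffs) ** (1.0 / p)


x, y = (0.0, 0.0), (3.0, 4.0)
print(minkowski(x, y, 1))             # 7.0  (city block)
print(minkowski(x, y, 2))             # 5.0  (Euclidean)
print(minkowski(x, y, float("inf")))  # 4.0  (maximum)
```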

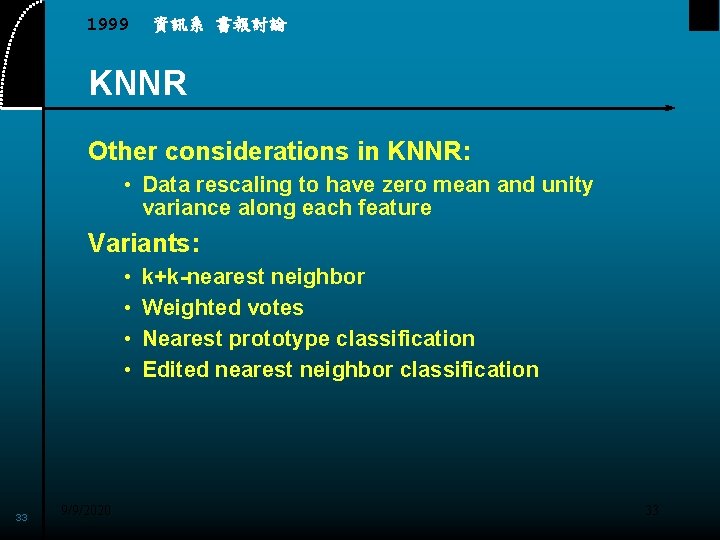

KNNR
Other considerations in KNNR:
• Data rescaling to zero mean and unit variance along each feature
Variants:
• k+k-nearest neighbor
• Weighted votes
• Nearest prototype classification
• Edited nearest neighbor classification
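
Rescaling matters because distance is computed across features. A feature measured in large units would otherwise dominate it, for example voice pitch in Hz next to hair length in cm. A minimal z-score sketch, assuming population standard deviation. It also assumes that a constant feature is only centered. `zscore_columns` and the rows are hypothetical.

```python
import statistics


def zscore_columns(rows):
    """Rescale every feature (column) to zero mean and unit variance.

    Uses the population standard deviation. A constant column cannot be
    scaled, so it is only centered (an assumption; other policies exist).
    """
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    stds = [statistics.pstdev(c) for c in cols]
    return [
        [(v - m) / s if s > 0 else v - m for v, m, s in zip(row, means, stds)]
        for row in rows
    ]


# Hypothetical profiles: hair length (cm) and voice pitch (Hz) differ in scale.
rows = [[5.0, 120.0], [30.0, 220.0], [10.0, 140.0], [40.0, 240.0]]
scaled = zscore_columns(rows)
print(scaled)
```

The means and standard deviations should come from the design set and then be reused unchanged on the test set.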

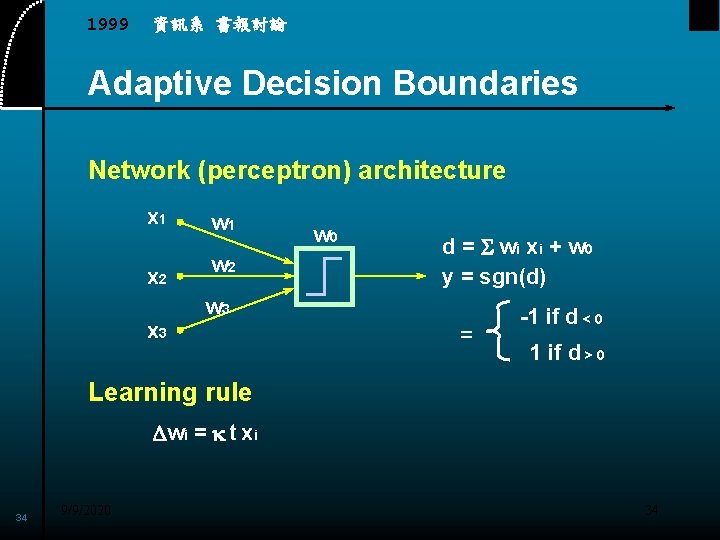

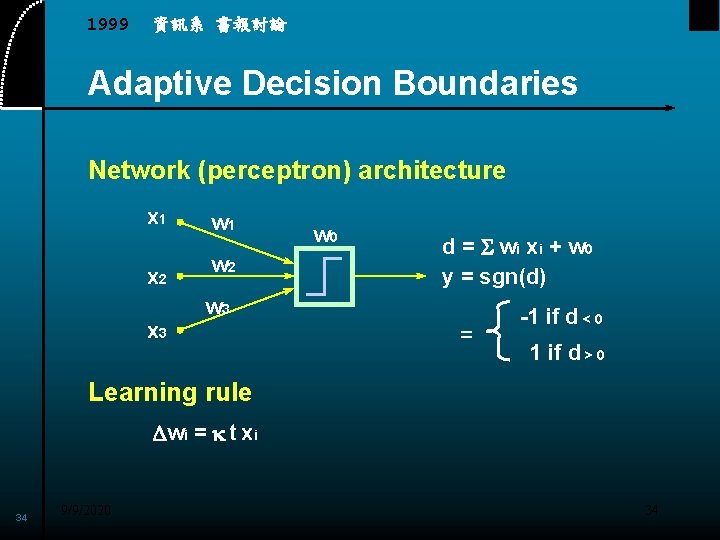

Adaptive Decision Boundaries
Network (perceptron) architecture: inputs x1, x2, x3 with weights w1, w2, w3 and bias w0
$d = \sum_{i=1}^{3} w_i x_i + w_0$
$y = \operatorname{sgn}(d) = \begin{cases} -1 & \text{if } d < 0 \\ 1 & \text{if } d > 0 \end{cases}$
Learning rule: $\Delta w_i = k\, t\, x_i$
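
A minimal perceptron sketch of the learning rule $\Delta w_i = k\, t\, x_i$. It rests on several assumptions, not necessarily the slide's exact procedure. First, k is a learning rate and t is the ±1 target of a sample on the wrong side of the boundary. Second, the bias w0 is treated as a weight on a constant input of 1. Third, d = 0 counts as an error during training and as −1 when predicting. `train_perceptron`, `predict`, and the data are hypothetical.

```python
def sgn(d):
    """Sign function; d = 0 is mapped to -1 here (an assumption)."""
    return 1 if d > 0 else -1


def train_perceptron(samples, targets, k=0.1, max_epochs=100):
    """Adaptive decision boundary (perceptron) training.

    samples: feature vectors x; targets: t = +1 or -1 for each sample.
    For a sample not strictly on the correct side (t * d <= 0), apply
    delta w_i = k * t * x_i, and delta w_0 = k * t for the bias.
    Training stops after the first epoch with zero errors.
    """
    w = [0.0] * len(samples[0])
    w0 = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, t in zip(samples, targets):
            d = sum(wi * xi for wi, xi in zip(w, x)) + w0
            if t * d <= 0:
                w = [wi + k * t * xi for wi, xi in zip(w, x)]
                w0 += k * t
                errors += 1
        if errors == 0:
            break
    return w, w0


def predict(w, w0, x):
    return sgn(sum(wi * xi for wi, xi in zip(w, x)) + w0)


# Hypothetical rescaled data: the +1 and -1 classes are linearly separable.
X = [(2.0, 1.0), (3.0, 2.0), (-1.0, -2.0), (-2.0, -1.0)]
T = [1, 1, -1, -1]
w, w0 = train_perceptron(X, T)
print([predict(w, w0, x) for x in X])  # [1, 1, -1, -1]
```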

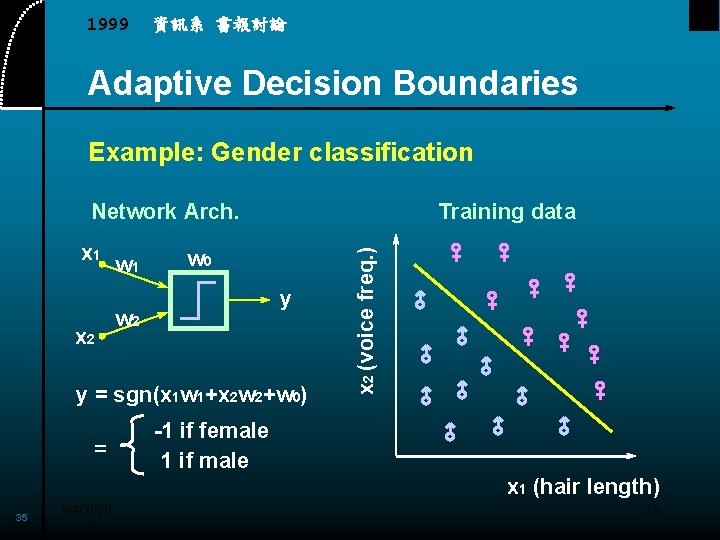

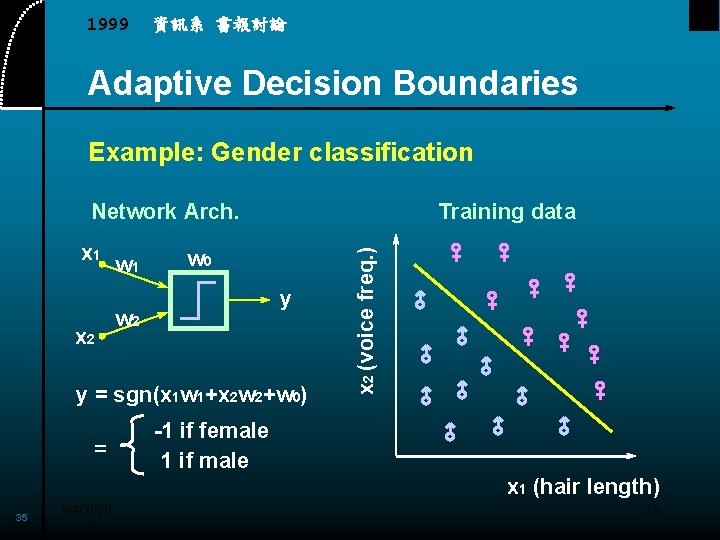

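Adaptive Decision Boundaries
Example: gender classification
• Network architecture: inputs x1 (hair length) and x2 (voice frequency), weights w1, w2, bias w0
• $y = \operatorname{sgn}(x_1 w_1 + x_2 w_2 + w_0) = \begin{cases} -1 & \text{if female} \\ 1 & \text{if male} \end{cases}$
• (Figure: training data plotted as x2 (voice freq.) vs. x1 (hair length))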

Adaptive Decision Boundaries
Properties:
• Guaranteed to converge to a set of weights that perfectly classifies all the data, if such a solution exists
• Data rescaling is necessary to speed up convergence of the algorithm
• Stops as soon as a solution with zero error rate is found
• Nonlinear decision boundaries can also be found by the adaptive technique
• A k-class problem needs k(k-1)/2 decision boundaries (one per pair of classes) for complete classification

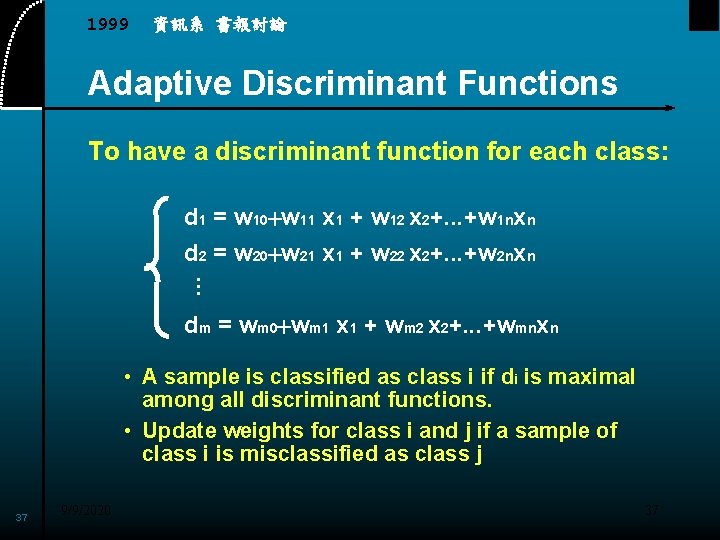

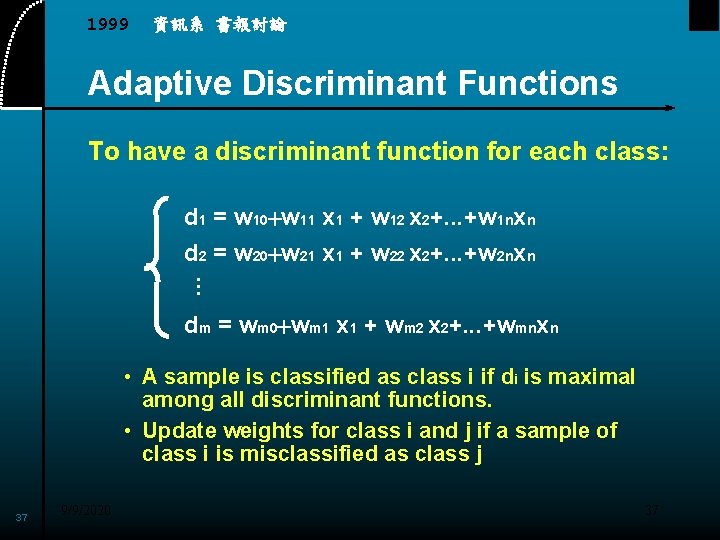

Adaptive Discriminant Functions
One discriminant function per class:
$d_1 = w_{10} + w_{11} x_1 + w_{12} x_2 + \dots + w_{1n} x_n$
$d_2 = w_{20} + w_{21} x_1 + w_{22} x_2 + \dots + w_{2n} x_n$
$\vdots$
$d_m = w_{m0} + w_{m1} x_1 + w_{m2} x_2 + \dots + w_{mn} x_n$
• A sample is classified as class i if d_i is the maximum among all discriminant functions.
• If a sample of class i is misclassified as class j, update the weights of classes i and j.
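
A minimal sketch of the update described above, assuming the increment is a fixed step c times the sample (with a leading 1 for the bias). It is assumed that class i's weights move toward the sample and class j's weights move away from it. This is the usual multi-class perceptron form; the slide does not give the exact update. `classify`, `train_discriminants`, and the data are hypothetical. Ties in the maximum go to the lowest class index.

```python
def classify(W, x):
    """Index of the class whose discriminant d_i(x) is largest."""
    xa = [1.0] + list(x)  # constant input 1 for the bias weight w_i0
    scores = [sum(w * v for w, v in zip(wi, xa)) for wi in W]
    return max(range(len(W)), key=lambda i: scores[i])


def train_discriminants(samples, labels, num_classes, c=0.1, max_epochs=100):
    """Adaptive discriminant functions d_i(x) = w_i0 + w_i1 x_1 + ... + w_in x_n.

    If a sample of class i is misclassified as class j, move class i's
    weights toward the sample and class j's weights away from it.
    """
    n = len(samples[0])
    W = [[0.0] * (n + 1) for _ in range(num_classes)]  # W[i] = [w_i0, ..., w_in]
    for _ in range(max_epochs):
        errors = 0
        for x, i in zip(samples, labels):
            j = classify(W, x)
            if j != i:
                xa = [1.0] + list(x)
                W[i] = [w + c * v for w, v in zip(W[i], xa)]
                W[j] = [w - c * v for w, v in zip(W[j], xa)]
                errors += 1
        if errors == 0:
            break
    return W


X = [(0, 4), (1, 5), (4, 0), (5, 1), (-4, -4), (-5, -3)]
y = [0, 0, 1, 1, 2, 2]
W = train_discriminants(X, y, num_classes=3)
print([classify(W, x) for x in X])  # [0, 0, 1, 1, 2, 2]
```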

Adaptive Discriminant Functions
Properties:
• Guaranteed to converge to a set of weights that perfectly classifies all the data, if such a solution exists
• Data rescaling is necessary to speed up convergence of the algorithm
• Stops as soon as a solution with zero error rate is found
• Nonlinear discriminant functions can also be used
• Unable to classify some data sets that the adaptive decision boundaries technique can classify perfectly

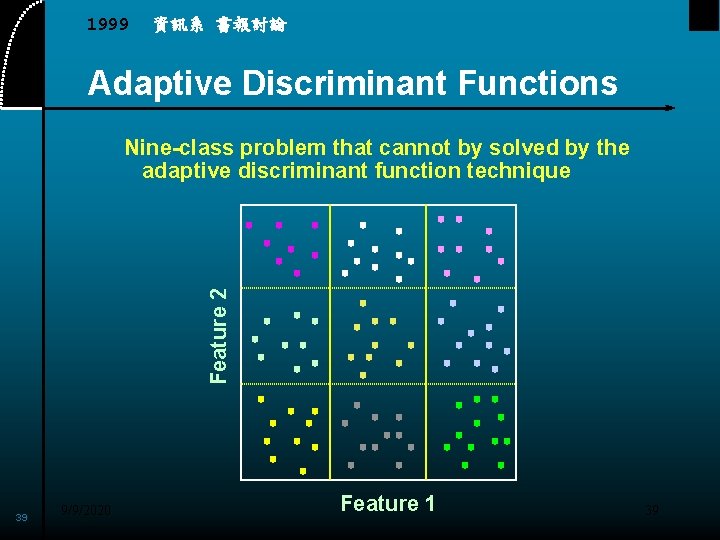

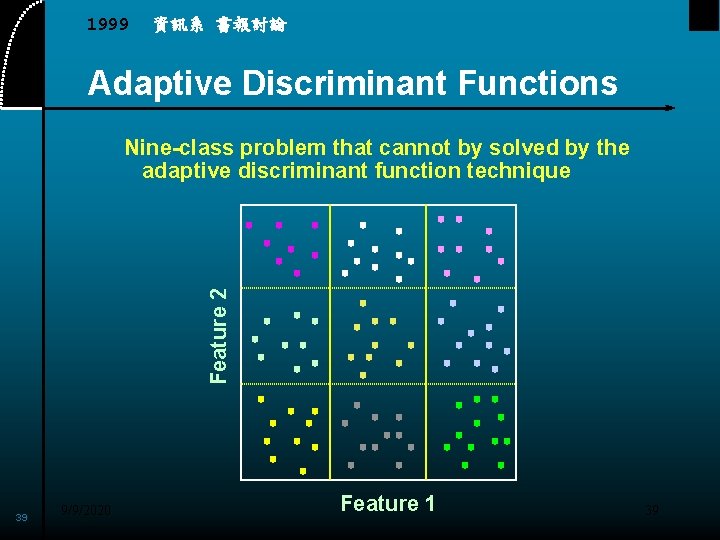

Adaptive Discriminant Functions
Nine-class problem that cannot be solved by the adaptive discriminant function technique (figure: Feature 1 vs. Feature 2)

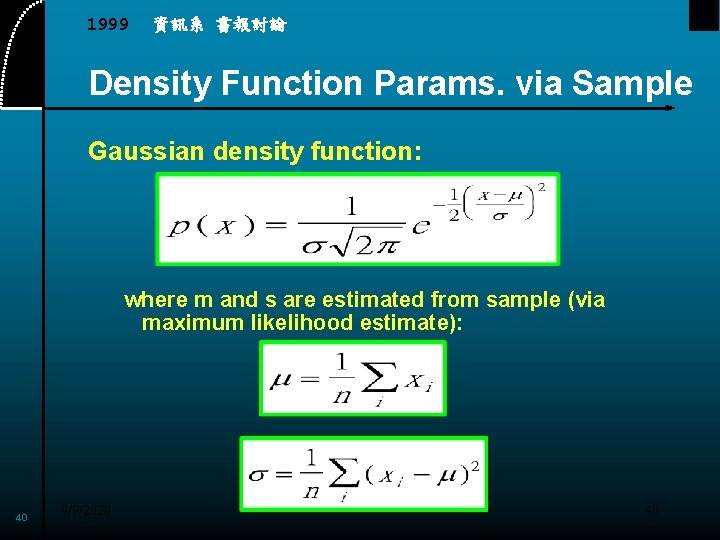

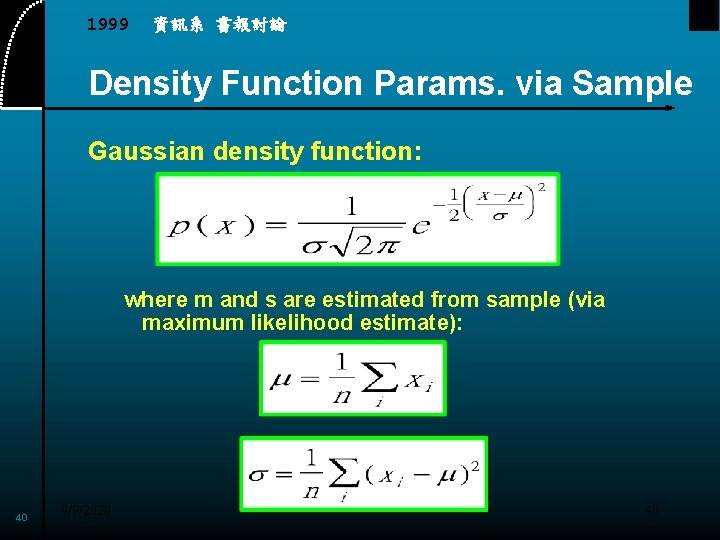

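Density Function Params. via Sample
Gaussian density function:
$p(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right)$
where μ and σ are estimated from the sample $x_1, \dots, x_n$ via maximum likelihood estimates:
$\hat{\mu} = \frac{1}{n} \sum_{i=1}^{n} x_i, \quad \hat{\sigma}^2 = \frac{1}{n} \sum_{i=1}^{n} (x_i - \hat{\mu})^2$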
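
A minimal sketch of the maximum likelihood estimates for a one-dimensional Gaussian. Note that the ML variance divides by n, not n − 1. `gaussian_mle`, `gaussian_pdf`, and the sample values are hypothetical.

```python
import math


def gaussian_mle(samples):
    """Maximum likelihood estimates of mu and sigma (variance divides by n)."""
    n = len(samples)
    mu = sum(samples) / n
    var = sum((x - mu) ** 2 for x in samples) / n
    return mu, math.sqrt(var)


def gaussian_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (math.sqrt(2.0 * math.pi) * sigma)


mu, sigma = gaussian_mle([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
print(mu, sigma)  # 5.0 2.0
```

Fitting one such density per class and picking the class with the largest density gives a simple parametric classifier.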

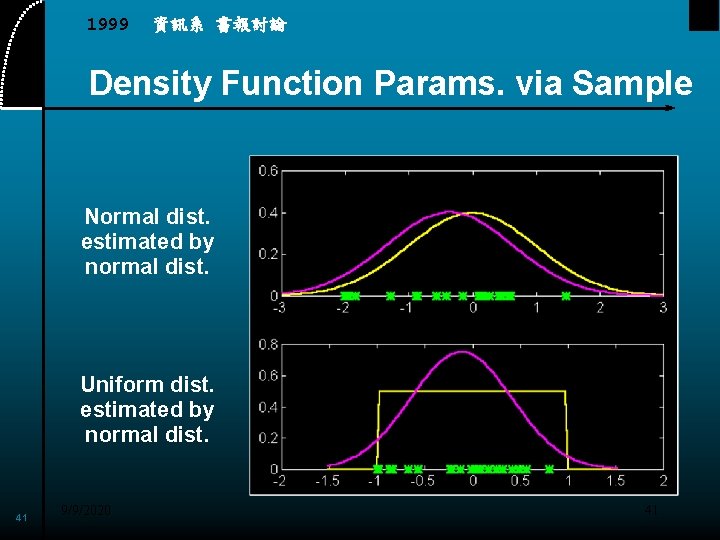

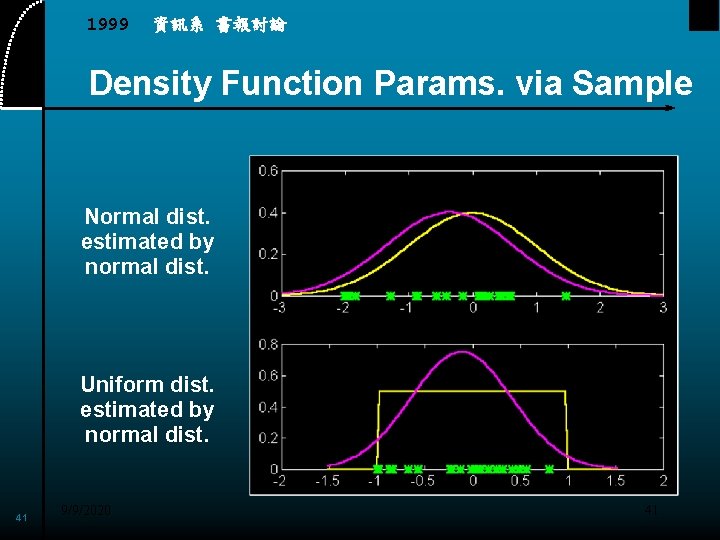

Density Function Params. via Sample
(Figures: a normal distribution estimated by a normal distribution; a uniform distribution estimated by a normal distribution)

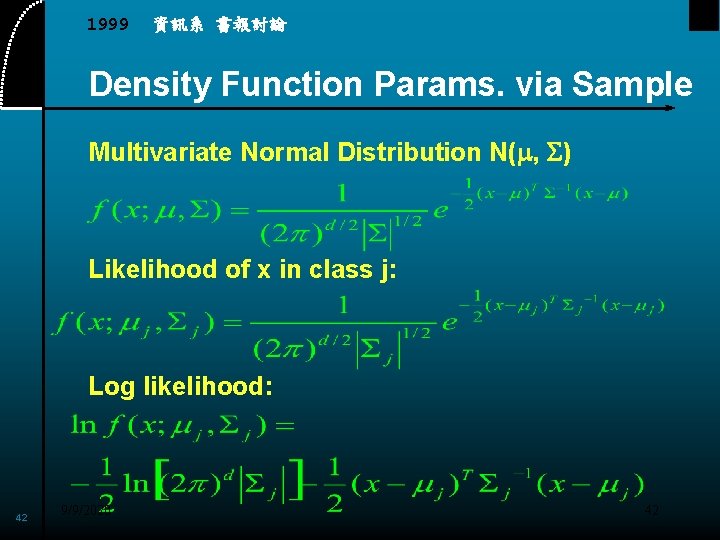

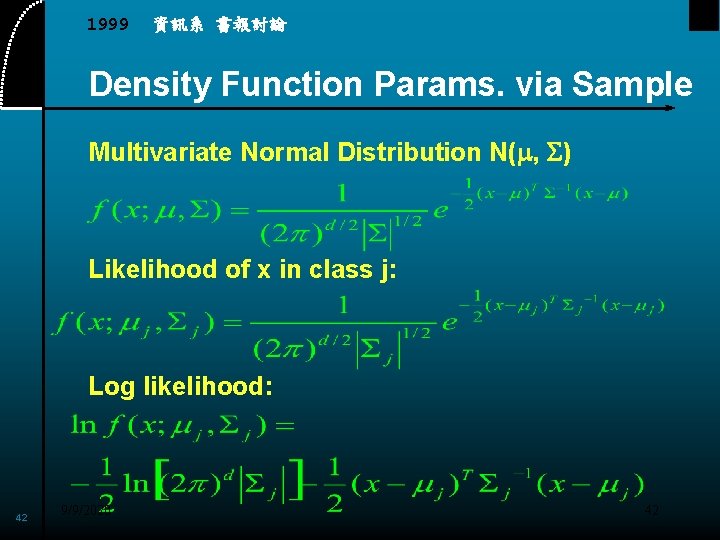

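Density Function Params. via Sample
Multivariate normal distribution $N(\boldsymbol{\mu}, \Sigma)$
Likelihood of $\mathbf{x}$ in class j:
$p(\mathbf{x} \mid j) = \frac{1}{(2\pi)^{d/2} |\Sigma_j|^{1/2}} \exp\left(-\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu}_j)^T \Sigma_j^{-1} (\mathbf{x} - \boldsymbol{\mu}_j)\right)$
Log likelihood:
$\ln p(\mathbf{x} \mid j) = -\frac{d}{2} \ln(2\pi) - \frac{1}{2} \ln|\Sigma_j| - \frac{1}{2} (\mathbf{x} - \boldsymbol{\mu}_j)^T \Sigma_j^{-1} (\mathbf{x} - \boldsymbol{\mu}_j)$
where d is the number of features, and $\boldsymbol{\mu}_j$ and $\Sigma_j$ are the mean vector and covariance matrix of class j.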
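
A minimal sketch of the multivariate normal log likelihood in pure Python. It uses a Cholesky factorization $\Sigma = L L^T$ so that $\ln|\Sigma| = 2 \sum_i \ln L_{ii}$. The quadratic form becomes $\|\mathbf{z}\|^2$, where $L\mathbf{z} = \mathbf{x} - \boldsymbol{\mu}$. The example uses the bivariate parameters μx = μy = 0, σx = 3, σy = 2, ρxy = 0.5, so the covariance term is 0.5 · 3 · 2 = 3. The function names are hypothetical.

```python
import math


def cholesky(S):
    """Lower-triangular L with L L^T = S, for a symmetric positive-definite S."""
    d = len(S)
    L = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = S[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def gaussian_log_likelihood(x, mu, S):
    """ln N(x; mu, S) for a d-dimensional normal distribution."""
    d = len(x)
    L = cholesky(S)
    diff = [xi - mi for xi, mi in zip(x, mu)]
    # Solve L z = (x - mu) by forward substitution; then the quadratic form
    # (x - mu)^T S^-1 (x - mu) equals z . z.
    z = []
    for i in range(d):
        z.append((diff[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    quad = sum(zi * zi for zi in z)
    log_det = 2.0 * sum(math.log(L[i][i]) for i in range(d))  # ln|S|
    return -0.5 * (d * math.log(2.0 * math.pi) + log_det + quad)


# sigma_x = 3, sigma_y = 2, rho_xy = 0.5  ->  Sigma = [[9, 3], [3, 4]]
mu = [0.0, 0.0]
S = [[9.0, 3.0], [3.0, 4.0]]
ll = gaussian_log_likelihood([1.0, 2.0], mu, S)
print(ll)
```

To classify, evaluate the log likelihood under each class's (μj, Σj) and pick the largest, optionally adding ln P(j) for class priors.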

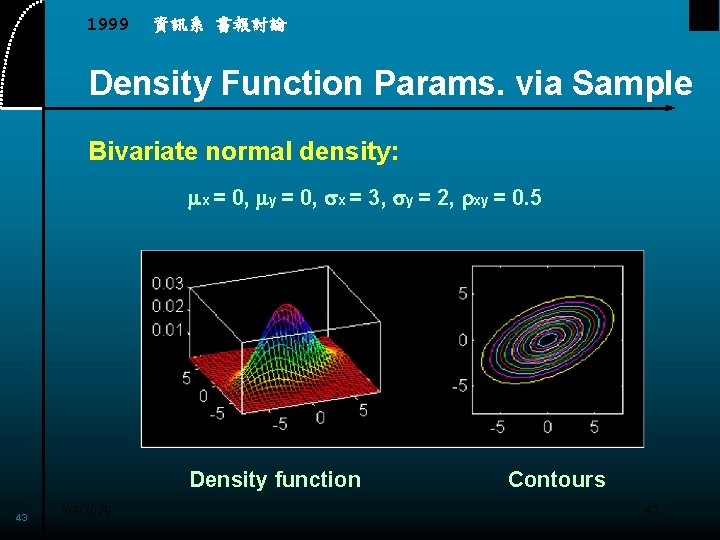

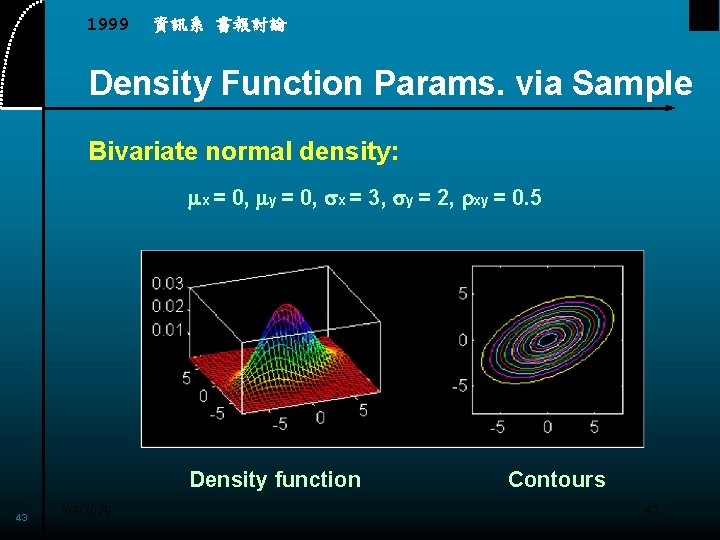

Density Function Params. via Sample
Bivariate normal density: μx = 0, μy = 0, σx = 3, σy = 2, ρxy = 0.5
(Figures: density function; contours)

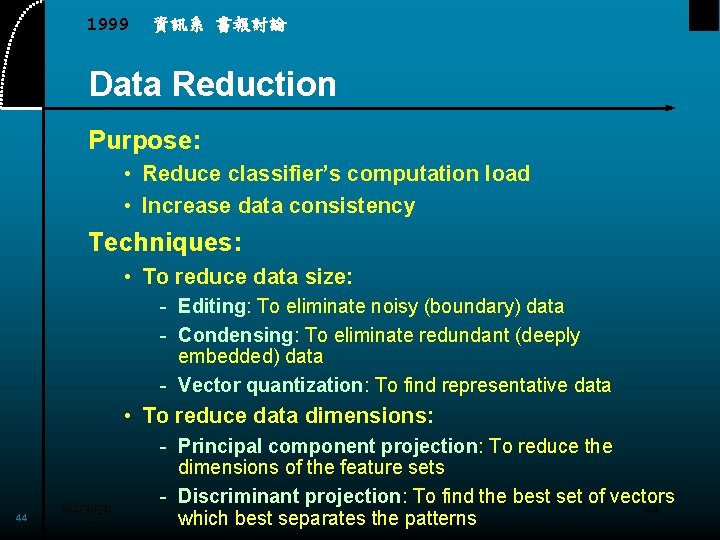

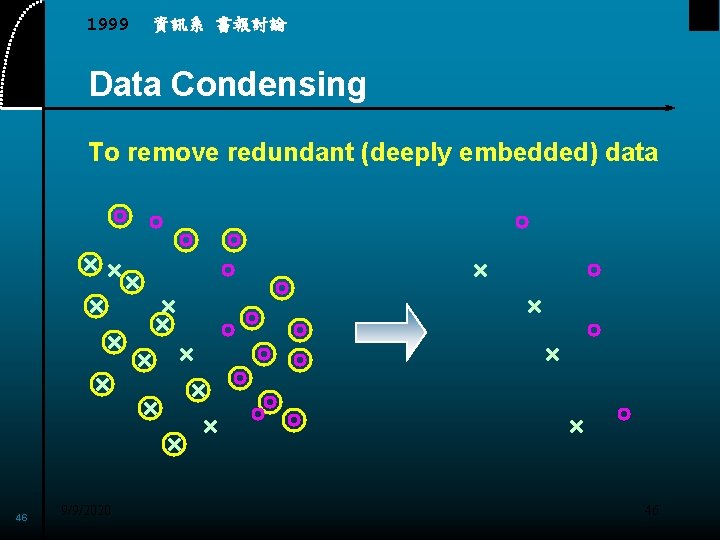

Data Reduction
Purpose:
• Reduce the classifier's computational load
• Increase data consistency
Techniques:
• To reduce data size:
  - Editing: eliminate noisy (boundary) data
  - Condensing: eliminate redundant (deeply embedded) data
  - Vector quantization: find representative data
• To reduce data dimensions:
  - Principal component projection: reduce the dimensions of the feature sets
  - Discriminant projection: find the set of vectors that best separates the patterns

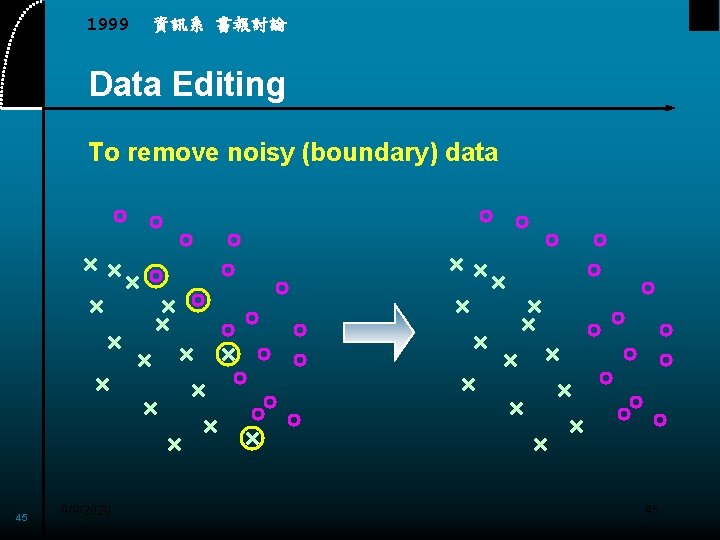

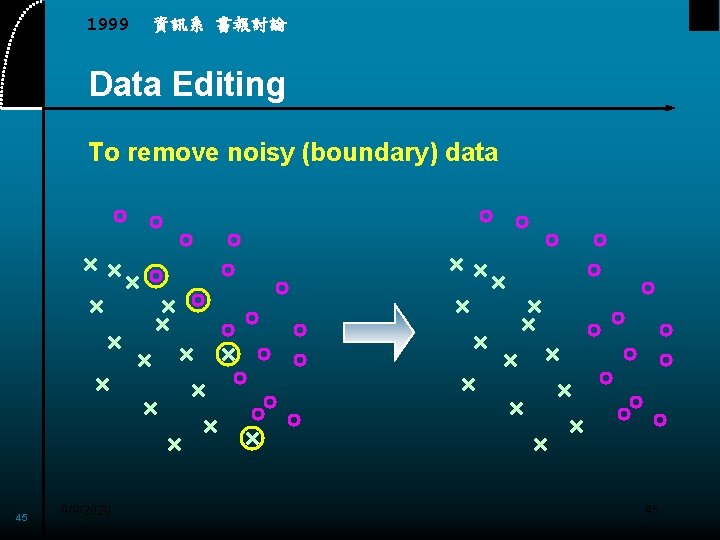

Data Editing
To remove noisy (boundary) data (figure)
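
The slides do not name a specific editing rule. As one common choice, here is a minimal sketch of Wilson's editing. A sample is dropped when the k-NN vote of all the other samples disagrees with its label. Such samples tend to be noisy or to sit on class boundaries. `knn_vote`, `wilson_edit`, and the data are hypothetical.

```python
import math
from collections import Counter


def knn_vote(query, data, k, exclude):
    """Majority label among the k nearest samples, leaving out index `exclude`."""
    ranked = sorted(
        (math.dist(query, x), label)
        for idx, (x, label) in enumerate(data)
        if idx != exclude
    )
    return Counter(label for _, label in ranked[:k]).most_common(1)[0][0]


def wilson_edit(data, k=3):
    """Wilson's editing: keep only samples whose label agrees with the
    leave-one-out k-NN vote of the remaining samples."""
    return [
        (x, label)
        for i, (x, label) in enumerate(data)
        if knn_vote(x, data, k, exclude=i) == label
    ]


data = [((0, 0), "A"), ((0, 1), "A"), ((1, 0), "A"), ((1, 1), "A"),
        ((5, 5), "B"), ((5, 6), "B"), ((6, 5), "B"), ((6, 6), "B"),
        ((0.5, 0.5), "B")]  # mislabeled point sitting inside class A
edited = wilson_edit(data, k=3)
print(len(edited))  # 8
```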

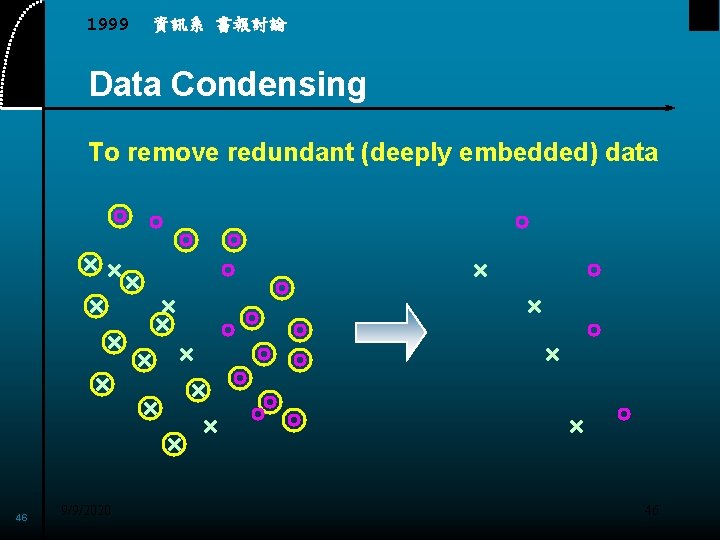

Data Condensing
To remove redundant (deeply embedded) data (figure)
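
No specific condensing rule is given on the slide. As one common choice, here is a minimal sketch of Hart's condensed nearest neighbor rule. It grows a "store" of samples until the 1-NNR on the store classifies every sample correctly. Deeply embedded samples tend never to be added, because same-class samples already in the store classify them correctly. `nearest_label`, `hart_condense`, and the data are hypothetical.

```python
import math


def nearest_label(query, store):
    """Label of the stored sample nearest to query (1-NN rule)."""
    return min(store, key=lambda item: math.dist(query, item[0]))[1]


def hart_condense(data):
    """Hart's condensed nearest neighbor: add a sample to the store only when
    the current store misclassifies it; repeat until nothing changes."""
    store = [data[0]]
    changed = True
    while changed:
        changed = False
        for item in data:
            if item not in store and nearest_label(item[0], store) != item[1]:
                store.append(item)
                changed = True
    return store


data = [((0, 0), "A"), ((0, 1), "A"), ((1, 0), "A"), ((1, 1), "A"),
        ((5, 5), "B"), ((5, 6), "B"), ((6, 5), "B"), ((6, 6), "B")]
store = hart_condense(data)
print(store)  # [((0, 0), 'A'), ((5, 5), 'B')]
```

The result depends on the order of the samples. Editing is often applied first so that noisy points do not end up in the store.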

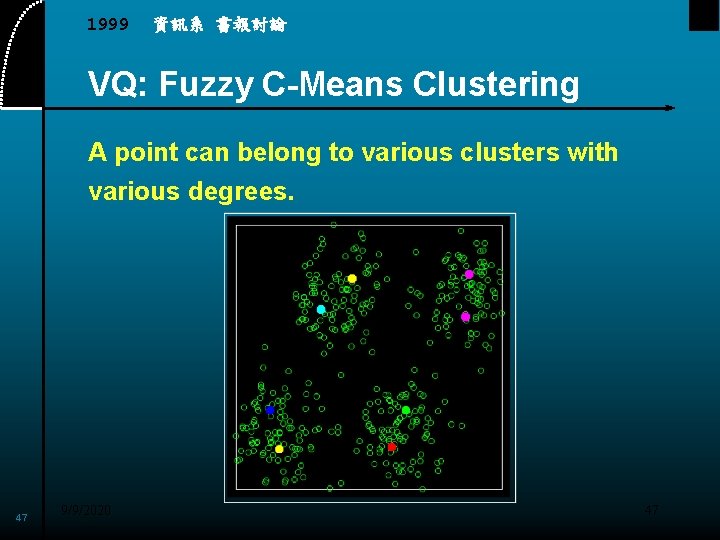

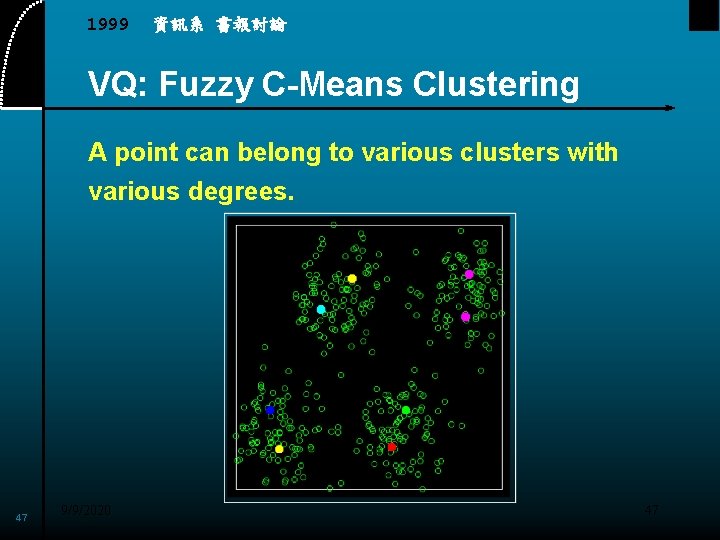

VQ: Fuzzy C-Means Clustering
A point can belong to various clusters with various degrees. (figure)

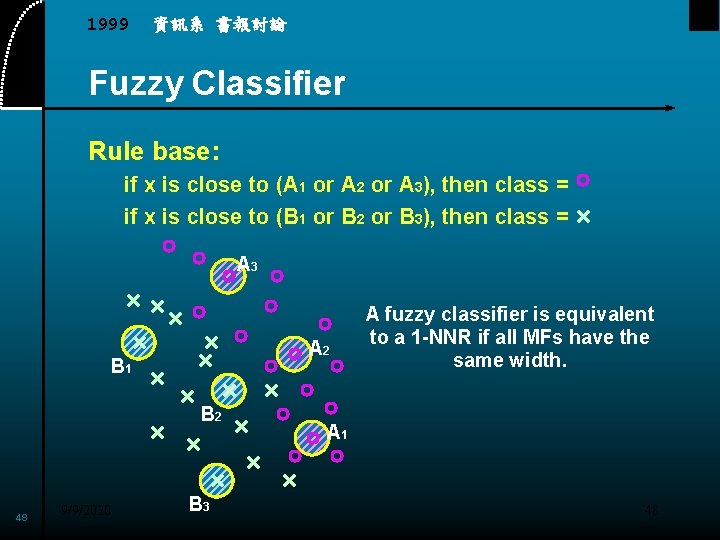

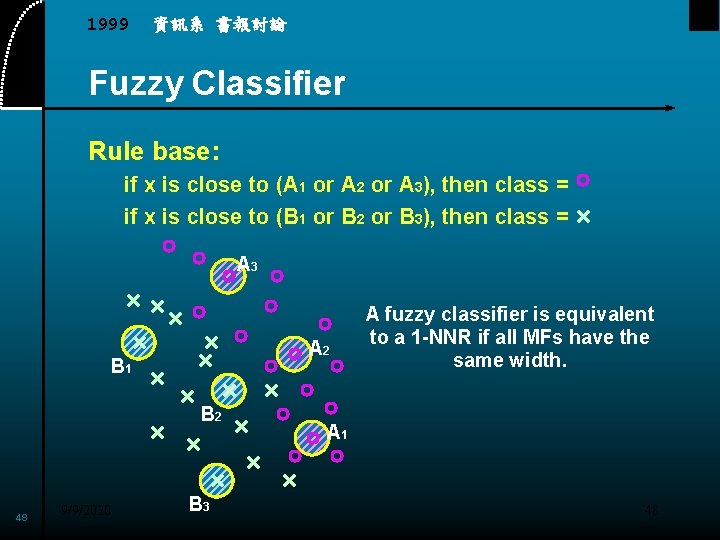

Fuzzy Classifier
Rule base:
• If x is close to (A1 or A2 or A3), then x belongs to the first class
• If x is close to (B1 or B2 or B3), then x belongs to the second class
A fuzzy classifier is equivalent to a 1-NNR if all membership functions (MFs) have the same width.
(Figure: prototypes A1, A2, A3 and B1, B2, B3 in the input space)

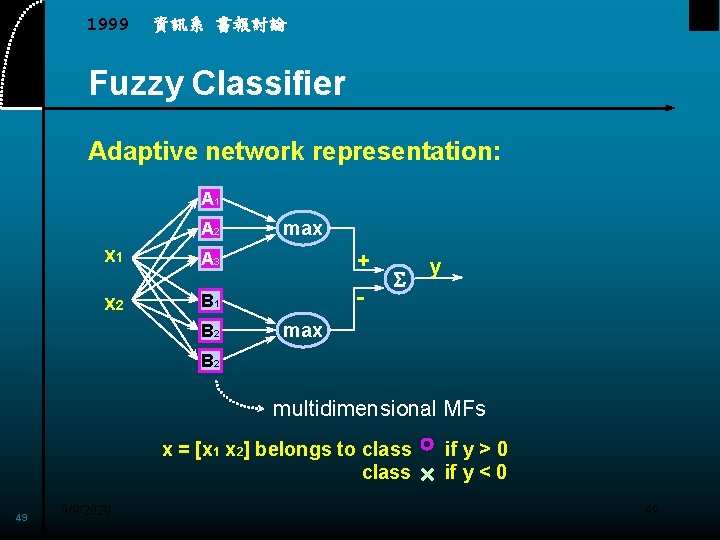

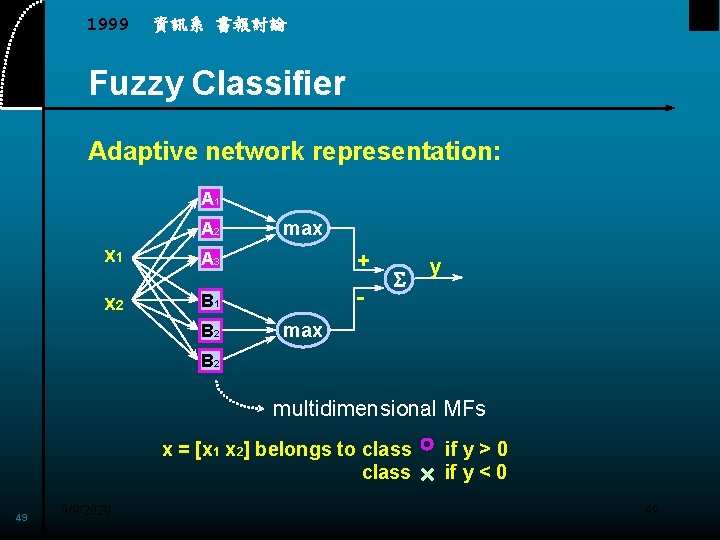

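Fuzzy Classifier
Adaptive network representation: inputs x1 and x2 feed the multidimensional MFs A1, A2, A3 and B1, B2, B3. A max node combines the A MFs (weight +) and another max node combines the B MFs (weight −). A summation node Σ then produces the output y.
x = [x1 x2] belongs to the first class if y > 0 and to the second class if y < 0.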
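
A minimal sketch of this network, assuming Gaussian multidimensional MFs centered on the prototypes. Each class's firing strength is the max of its MFs, and y is the difference of the two maxima. With equal widths, the largest MF belongs to the nearest prototype, which is why the classifier then behaves like a 1-NNR. Changing the widths moves the boundary, as in the random-search refinement. The prototypes, widths, class names "A"/"B", and the choice to map y = 0 to "B" are hypothetical.

```python
import math


def gaussian_mf(x, center, width):
    """Multidimensional Gaussian membership function (MF)."""
    sq = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-sq / (2.0 * width ** 2))


def fuzzy_classify(x, protos_a, protos_b, widths_a, widths_b):
    """y = max_k mu_Ak(x) - max_k mu_Bk(x); "A" if y > 0, otherwise "B"."""
    fire_a = max(gaussian_mf(x, c, w) for c, w in zip(protos_a, widths_a))
    fire_b = max(gaussian_mf(x, c, w) for c, w in zip(protos_b, widths_b))
    y = fire_a - fire_b
    return "A" if y > 0 else "B"


A = [(0, 0), (4, 0), (0, 4)]  # hypothetical prototypes of the first class
B = [(2, 2), (6, 6), (4, 4)]  # hypothetical prototypes of the second class
same = [1.0, 1.0, 1.0]
print(fuzzy_classify((1.2, 1.2), A, B, same, same))             # B: nearest prototype is (2, 2)
print(fuzzy_classify((1.2, 1.2), A, B, [3.0, 3.0, 3.0], same))  # A: wider A MFs win
```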

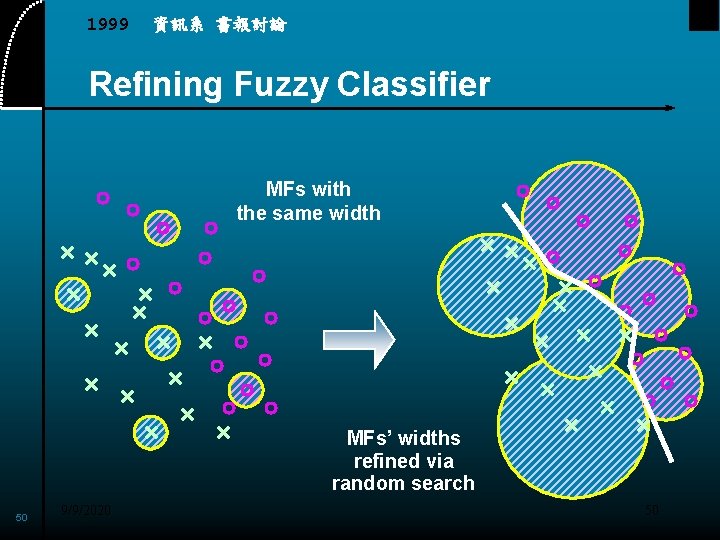

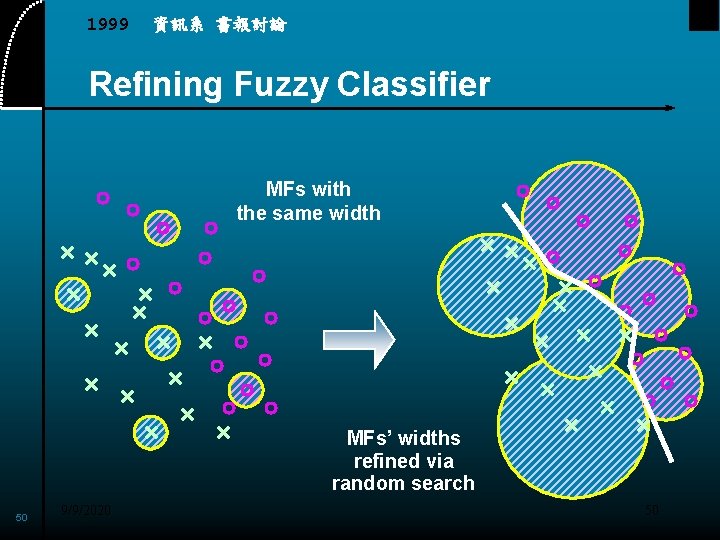

Refining Fuzzy Classifier
(Figures: MFs with the same width; MFs' widths refined via random search)

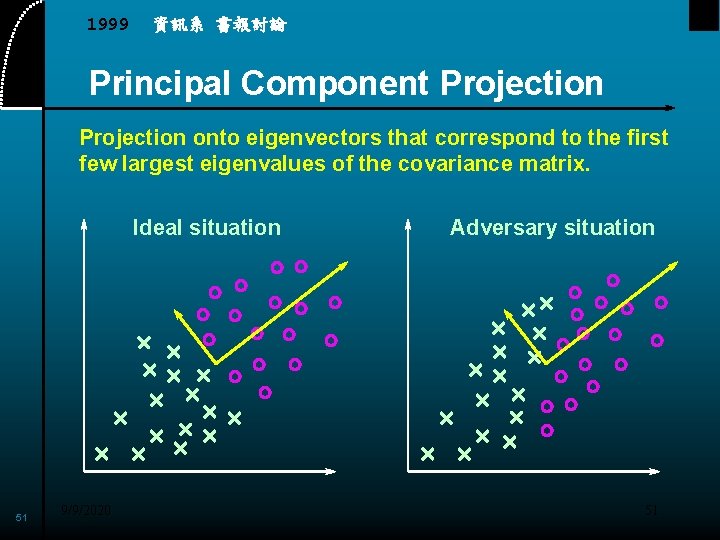

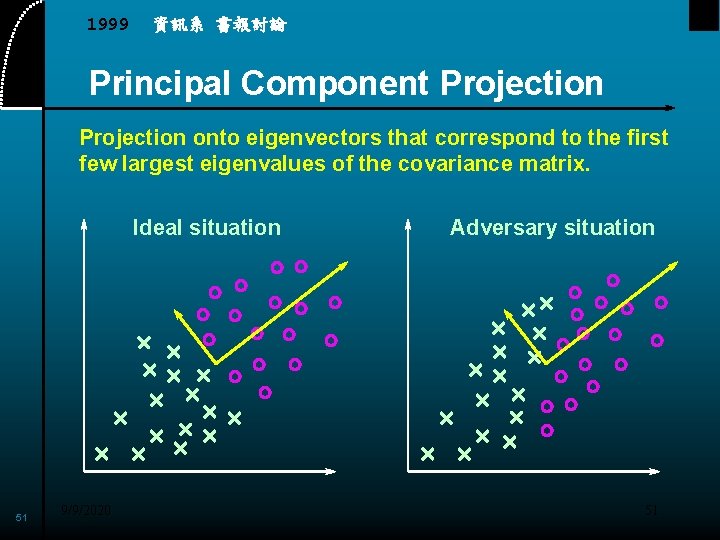

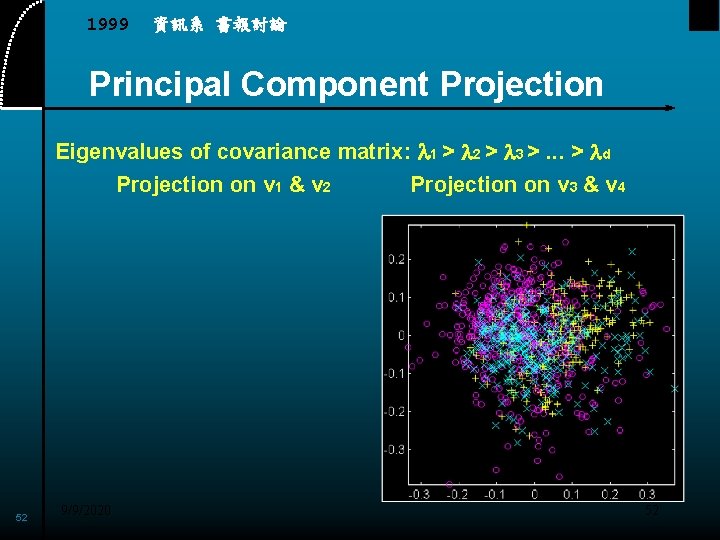

Principal Component Projection
Projection onto the eigenvectors that correspond to the first few largest eigenvalues of the covariance matrix.
(Figures: ideal situation; adversary situation)

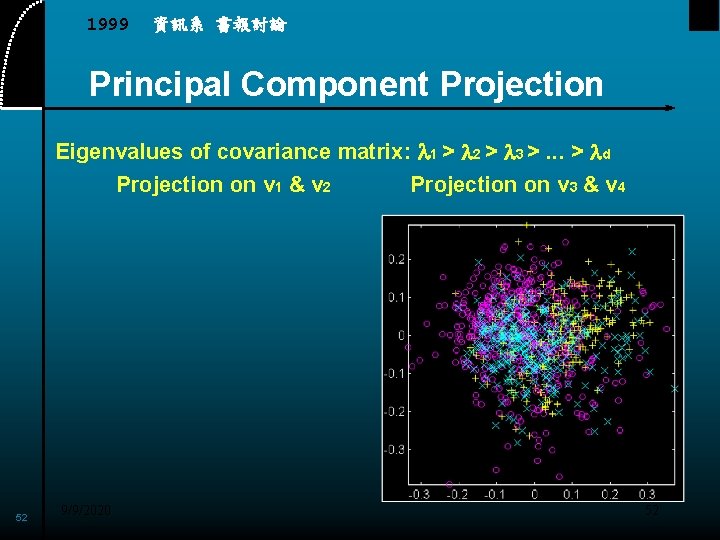

Principal Component Projection
Eigenvalues of the covariance matrix: λ1 > λ2 > λ3 > … > λd
(Figures: projection on v1 & v2; projection on v3 & v4)
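
A minimal pure-Python sketch of principal component projection. It computes the covariance matrix, finds its leading eigenvectors by power iteration with deflation, and projects the centered data onto them. Power iteration is a substitute chosen here to avoid external libraries. A production version would call a proper symmetric eigensolver. All names and the test data are hypothetical.

```python
import math
import random


def covariance(rows):
    """Mean vector and (population) covariance matrix of the data rows."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    C = [[0.0] * d for _ in range(d)]
    for r in rows:
        diff = [r[j] - mean[j] for j in range(d)]
        for a in range(d):
            for b in range(d):
                C[a][b] += diff[a] * diff[b] / n
    return mean, C


def top_eigenpairs(C, count, iters=500, seed=0):
    """Largest eigenvalues/eigenvectors of a symmetric matrix by power
    iteration with deflation (adequate for small, well-separated spectra)."""
    rng = random.Random(seed)
    d = len(C)
    A = [row[:] for row in C]
    vals, vecs = [], []
    for _ in range(count):
        v = [rng.uniform(-1.0, 1.0) for _ in range(d)]
        for _ in range(iters):
            w = [sum(A[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(A[i][j] * v[j] for j in range(d)) for i in range(d))
        vals.append(lam)
        vecs.append(v)
        # Deflation: remove the found component so the next one dominates.
        A = [[A[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return vals, vecs


def pca_project(rows, count):
    """Project centered rows onto the eigenvectors of the `count` largest eigenvalues."""
    mean, C = covariance(rows)
    vals, vecs = top_eigenpairs(C, count)
    projected = [[sum((r[j] - mean[j]) * v[j] for j in range(len(r))) for v in vecs]
                 for r in rows]
    return projected, vals, vecs


# Data stretched along (1, 2), with a small spread along the perpendicular (2, -1).
rows = [(1, 2), (2, 4), (-1, -2), (-2, -4), (0.4, -0.2), (-0.4, 0.2)]
projected, vals, vecs = pca_project(rows, 2)
print([round(v, 4) for v in vals])  # eigenvalues in decreasing order
```

The eigenvectors come back up to sign. Keeping only the first few columns of `projected` is the dimension reduction.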

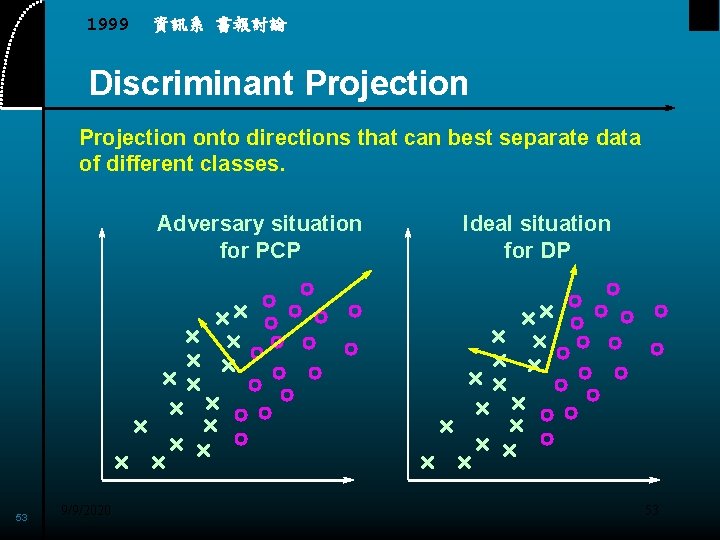

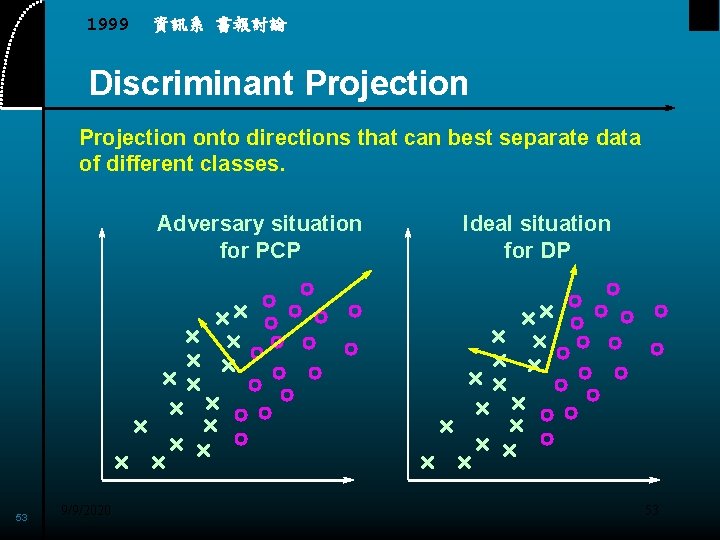

Discriminant Projection
Projection onto the directions that best separate data of different classes.
(Figures: adversary situation for PCP; ideal situation for DP)

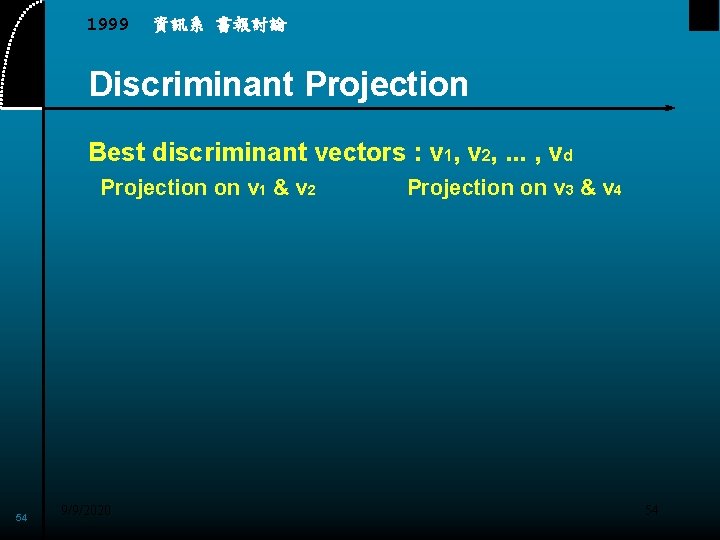

Discriminant Projection
Best discriminant vectors: v1, v2, …, vd
(Figures: projection on v1 & v2; projection on v3 & v4)
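
The slides do not spell out how the discriminant vectors are computed. As an illustration of the two-class case only, here is a minimal sketch of Fisher's linear discriminant. It computes a single best direction $\mathbf{w} \propto S_W^{-1}(\mathbf{m}_1 - \mathbf{m}_2)$, where $S_W$ is the within-class scatter matrix. The example is a PCP adversary case: the largest variance lies along x, but the classes are separated along y. All names and data are hypothetical.

```python
import math


def mean_vec(rows):
    return [sum(col) / len(rows) for col in zip(*rows)]


def scatter(rows, m):
    """Scatter matrix: sum over rows of (x - m)(x - m)^T."""
    d = len(m)
    S = [[0.0] * d for _ in range(d)]
    for r in rows:
        diff = [r[j] - m[j] for j in range(d)]
        for a in range(d):
            for b in range(d):
                S[a][b] += diff[a] * diff[b]
    return S


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def fisher_direction(class1, class2):
    """Unit vector proportional to Sw^-1 (m1 - m2)."""
    m1, m2 = mean_vec(class1), mean_vec(class2)
    S1, S2 = scatter(class1, m1), scatter(class2, m2)
    d = len(m1)
    Sw = [[S1[i][j] + S2[i][j] for j in range(d)] for i in range(d)]
    w = solve(Sw, [a - b for a, b in zip(m1, m2)])
    norm = math.sqrt(sum(v * v for v in w))
    return [v / norm for v in w]


c1 = [(-3, 1.2), (3, 1.2), (-3, 0.8), (3, 0.8)]
c2 = [(-3, -0.8), (3, -0.8), (-3, -1.2), (3, -1.2)]
w = fisher_direction(c1, c2)
print([round(v, 6) for v in w])  # points along the y axis
```

For more than two classes, the usual generalization takes the leading eigenvectors of $S_W^{-1} S_B$ as v1, v2, and so on.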

Data Clustering
• Hierarchical clustering
  - Single-linkage algorithm (minimum method)
  - Complete-linkage algorithm (maximum method)
  - Average-linkage algorithm
  - Minimum-variance method (Ward's method)
• Partitional clustering
  - K-means algorithm
  - Fuzzy c-means algorithm
  - Isodata algorithm

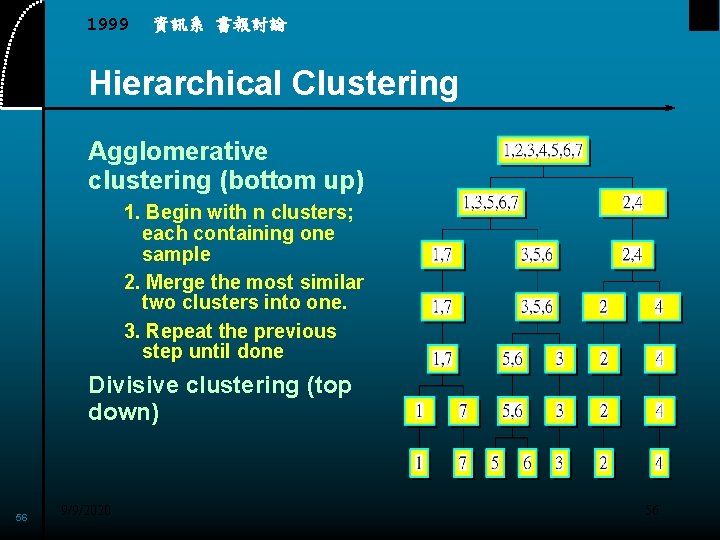

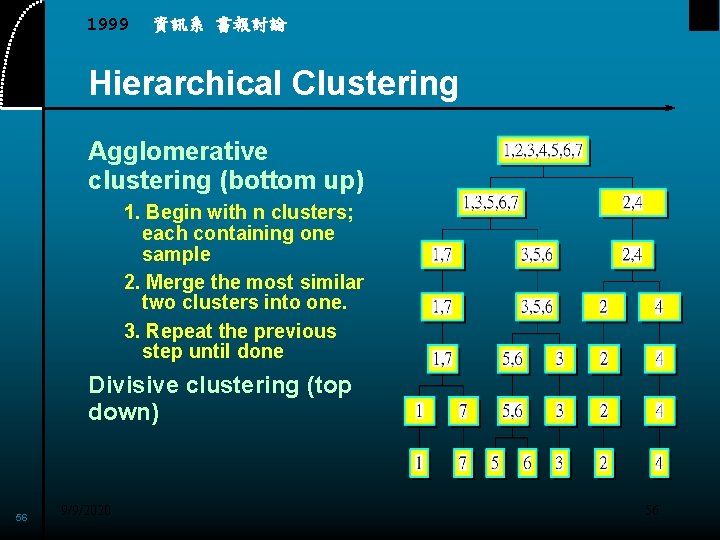

Hierarchical Clustering
Agglomerative clustering (bottom up):
1. Begin with n clusters, each containing one sample.
2. Merge the two most similar clusters into one.
3. Repeat the previous step until the desired number of clusters remains (or a single cluster is left).
Divisive clustering (top down)

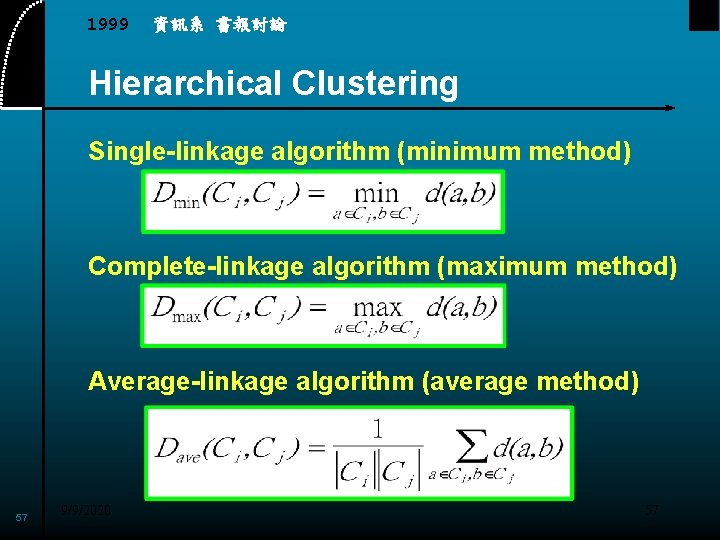

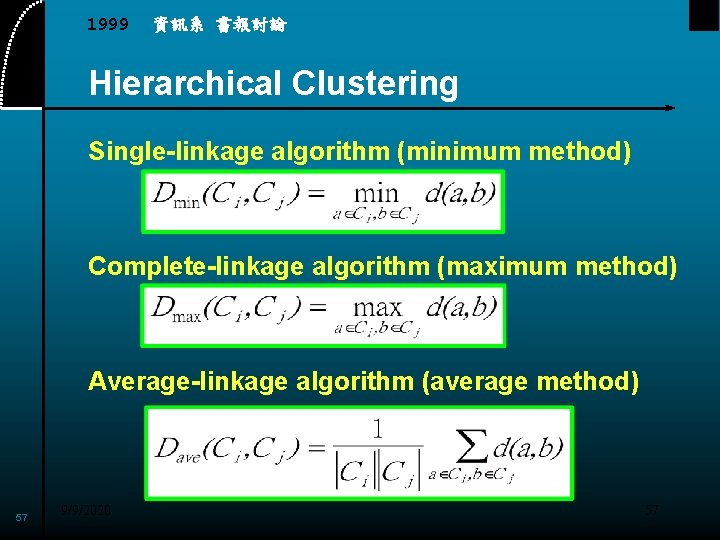

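Hierarchical Clustering
• Single-linkage algorithm (minimum method): $d(C_i, C_j) = \min_{\mathbf{a} \in C_i,\, \mathbf{b} \in C_j} \|\mathbf{a} - \mathbf{b}\|$
• Complete-linkage algorithm (maximum method): $d(C_i, C_j) = \max_{\mathbf{a} \in C_i,\, \mathbf{b} \in C_j} \|\mathbf{a} - \mathbf{b}\|$
• Average-linkage algorithm (average method): $d(C_i, C_j) = \frac{1}{|C_i|\,|C_j|} \sum_{\mathbf{a} \in C_i} \sum_{\mathbf{b} \in C_j} \|\mathbf{a} - \mathbf{b}\|$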
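
A minimal sketch of agglomerative clustering with the three linkage rules. It assumes Euclidean distance between samples and stops when a requested number of clusters remains. This naive version recomputes all cluster distances at every merge, which is fine for small data sets. `agglomerative` and the points are hypothetical.

```python
import math


def agglomerative(points, num_clusters, linkage="single"):
    """Bottom-up (agglomerative) clustering.

    Starts with one cluster per sample and repeatedly merges the two closest
    clusters until num_clusters remain. linkage chooses the cluster distance:
    "single" (minimum), "complete" (maximum) or "average" (mean) of all
    pairwise sample distances.
    """
    combine = {
        "single": min,
        "complete": max,
        "average": lambda ds: sum(ds) / len(ds),
    }[linkage]

    def cluster_dist(a, b):
        return combine([math.dist(p, q) for p in a for q in b])

    clusters = [[p] for p in points]
    while len(clusters) > num_clusters:
        pairs = [(i, j) for i in range(len(clusters)) for j in range(i + 1, len(clusters))]
        i, j = min(pairs, key=lambda ij: cluster_dist(clusters[ij[0]], clusters[ij[1]]))
        clusters[i].extend(clusters[j])  # merge cluster j into cluster i
        del clusters[j]                  # safe because j > i
    return clusters


pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
for method in ("single", "complete", "average"):
    print(method, agglomerative(pts, 2, method))
```

On well-separated groups the three rules agree. On elongated or chained data, single linkage tends to chain clusters together, while complete linkage favors compact clusters.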

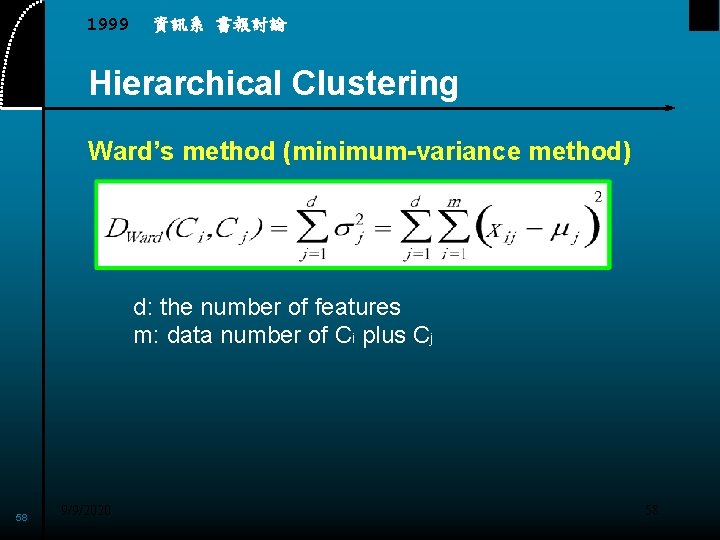

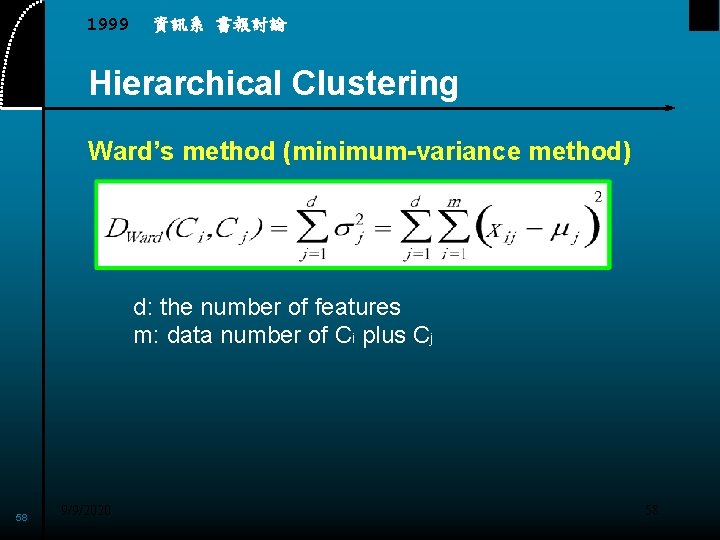

Hierarchical Clustering
Ward's method (minimum-variance method)
In the merging criterion (figure), d is the number of features and m is the total number of data points in C_i and C_j.
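
The exact formula on the slide was an image. One standard way to state Ward's criterion is the increase in within-cluster sum of squared deviations caused by merging C_i and C_j. It is summed over all d features and all m points of the merged cluster. This increase equals $\frac{n_i n_j}{n_i + n_j} \|\mathbf{c}_i - \mathbf{c}_j\|^2$, where $n_i$ and $\mathbf{c}_i$ are the size and centroid of $C_i$. At each step, Ward's method merges the pair with the smallest increase. A minimal sketch that computes the quantity both ways (function names hypothetical):

```python
def centroid(points):
    m, d = len(points), len(points[0])
    return [sum(p[k] for p in points) / m for k in range(d)]


def sse(points):
    """Sum over all points and all d features of squared deviations from the centroid."""
    c = centroid(points)
    return sum((p[k] - c[k]) ** 2 for p in points for k in range(len(c)))


def ward_cost(ci, cj):
    """Increase in within-cluster sum of squares if clusters ci and cj merge."""
    return sse(list(ci) + list(cj)) - sse(ci) - sse(cj)


def ward_cost_closed_form(ci, cj):
    ni, nj = len(ci), len(cj)
    a, b = centroid(ci), centroid(cj)
    return ni * nj / (ni + nj) * sum((x - y) ** 2 for x, y in zip(a, b))


ci = [(0.0, 0.0), (2.0, 0.0)]
cj = [(10.0, 0.0)]
print(ward_cost(ci, cj), ward_cost_closed_form(ci, cj))  # both about 54
```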

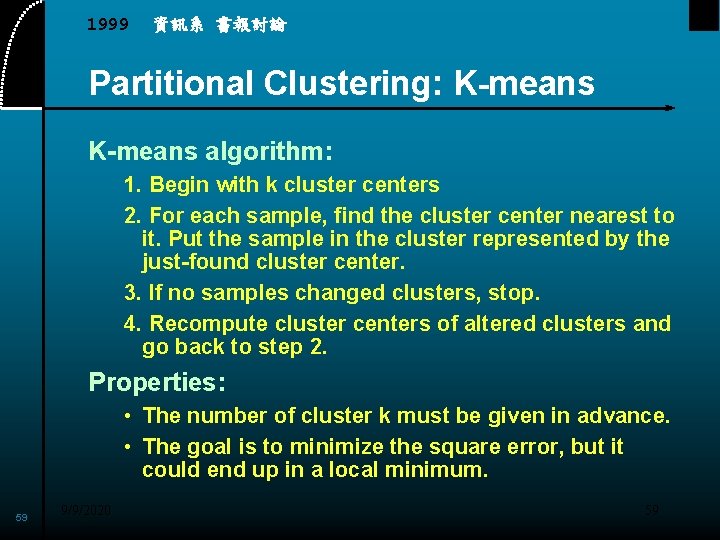

Partitional Clustering
K-means algorithm:
1. Begin with k cluster centers.
2. For each sample, find the nearest cluster center and put the sample in the cluster that center represents.
3. If no samples changed clusters, stop.
4. Recompute the centers of the altered clusters and go back to step 2.
Properties:
• The number of clusters k must be given in advance.
• The goal is to minimize the squared error, but the algorithm may end up in a local minimum.
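
A minimal sketch of the four k-means steps above, assuming Euclidean distance and caller-supplied initial centers. For example, these could be k samples picked at random. For simplicity it recomputes every center in step 4. Once centers are cluster means, a cluster whose membership did not change gets the same center back. An empty cluster keeps its old center, which is an assumption. `kmeans` and the points are hypothetical.

```python
import math


def kmeans(points, init_centers, max_iter=100):
    """Batch k-means. Returns the final centers and each sample's cluster index."""
    centers = [list(c) for c in init_centers]
    assign = None
    for _ in range(max_iter):
        # Step 2: put each sample in the cluster of its nearest center.
        new_assign = [min(range(len(centers)), key=lambda k: math.dist(p, centers[k]))
                      for p in points]
        # Step 3: stop when no sample changed cluster.
        if new_assign == assign:
            break
        assign = new_assign
        # Step 4: recompute centers as cluster means.
        for k in range(len(centers)):
            members = [p for p, a in zip(points, assign) if a == k]
            if members:  # an empty cluster keeps its previous center
                centers[k] = [sum(col) / len(members) for col in zip(*members)]
    return centers, assign


points = [(0, 0), (0, 1), (1, 0), (1, 1), (8, 8), (8, 9), (9, 8), (9, 9)]
centers, assign = kmeans(points, [(0, 0), (1, 1)])
print(centers, assign)  # [[0.5, 0.5], [8.5, 8.5]] [0, 0, 0, 0, 1, 1, 1, 1]
```

Even from the poor start used here (both initial centers inside one group), the centers move to the two groups. In general, though, different starts can give different local minima, so several restarts are common.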

Fuzzy C-means Clustering
Properties:
• Similar to k-means, but each sample can belong to various clusters with degrees from 0 to 1
• For a given sample, the degrees of membership in all clusters sum to 1
• Computationally more expensive than k-means, but usually reaches a better result
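
A minimal sketch of the standard fuzzy c-means iteration with fuzzifier m > 1 (m = 2 by default, an assumption). It alternates two updates. Memberships are $u_{ik} = 1 / \sum_j (d_{ik}/d_{jk})^{2/(m-1)}$, so each sample's memberships sum to 1. Centers are weighted means with weights $u_{ik}^m$. The caller supplies the initial centers. A tiny epsilon avoids division by zero when a sample coincides with a center. `fuzzy_c_means` and the points are hypothetical.

```python
import math


def fuzzy_c_means(points, init_centers, m=2.0, max_iter=100, tol=1e-9):
    """Fuzzy c-means. U[i][k] is the degree to which sample k belongs to
    cluster i; for every sample the degrees over all clusters sum to 1."""
    centers = [list(c) for c in init_centers]
    power = 2.0 / (m - 1.0)
    eps = 1e-12  # avoids division by zero when a sample sits on a center
    for _ in range(max_iter):
        # Membership update: u_ik = 1 / sum_j (d_ik / d_jk) ** (2 / (m - 1)).
        dists = [[max(math.dist(x, c), eps) for x in points] for c in centers]
        U = [[1.0 / sum((dists[i][k] / dists[j][k]) ** power for j in range(len(centers)))
              for k in range(len(points))]
             for i in range(len(centers))]
        # Center update: weighted mean of the samples with weights u_ik ** m.
        new_centers = []
        for i in range(len(centers)):
            weights = [u ** m for u in U[i]]
            total = sum(weights)
            new_centers.append([sum(w * x[dim] for w, x in zip(weights, points)) / total
                                for dim in range(len(points[0]))])
        shift = max(math.dist(a, b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift < tol:
            break
    return centers, U


points = [(0, 0), (0, 1), (1, 0), (1, 1), (8, 8), (8, 9), (9, 8), (9, 9)]
centers, U = fuzzy_c_means(points, [(0, 0), (9, 9)])
print([[round(v, 3) for v in c] for c in centers])
```

Unlike k-means, a sample midway between clusters gets membership near 0.5 in each. That soft assignment is the "degrees from 0 to 1" property above.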

Partitional Clustering: Isodata
Similar to k-means, with some enhancements:
• Clusters with too few elements are discarded.
• Clusters are merged if the number of clusters grows too large or if clusters are too close together.
• A cluster is split if the number of clusters is too small or if the cluster contains very dissimilar samples.
Properties:
• The number of clusters k does not need to be given exactly in advance.
• The algorithm may require extensive computation.

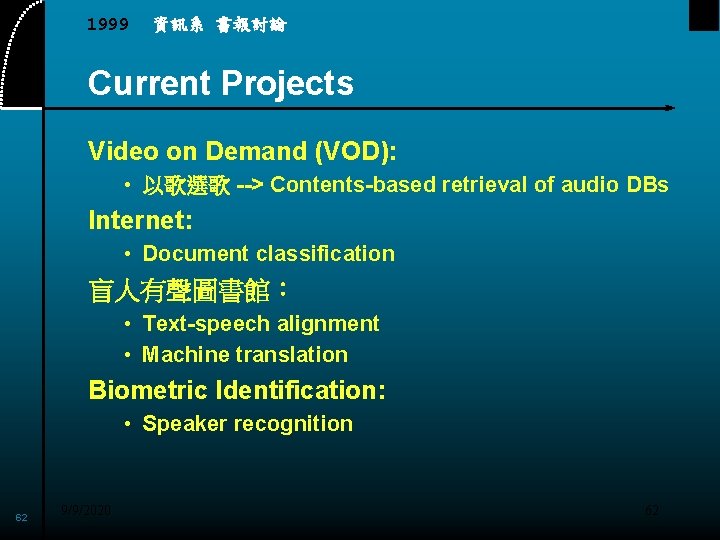

Current Projects
• Video on Demand (VOD):
  - Selecting songs by singing: content-based retrieval of audio DBs
• Internet:
  - Document classification
• Audio book library for the blind:
  - Text-speech alignment
  - Machine translation
• Biometric identification:
  - Speaker recognition

Questions and Answers
Now it's your turn!