18742 Parallel Computer Architecture Caching in Multicore Systems

- Slides: 30

18742 Parallel Computer Architecture: Caching in Multi-core Systems

Vivek Seshadri, Carnegie Mellon University, Fall 2012 – 10/03

Problems in Multi-core Caching

- Managing individual blocks
  - Demand-fetched blocks
  - Prefetched blocks
  - Dirty blocks
- Application awareness
  - High system performance
  - High fairness

Part 1: Managing Demand-fetched Blocks

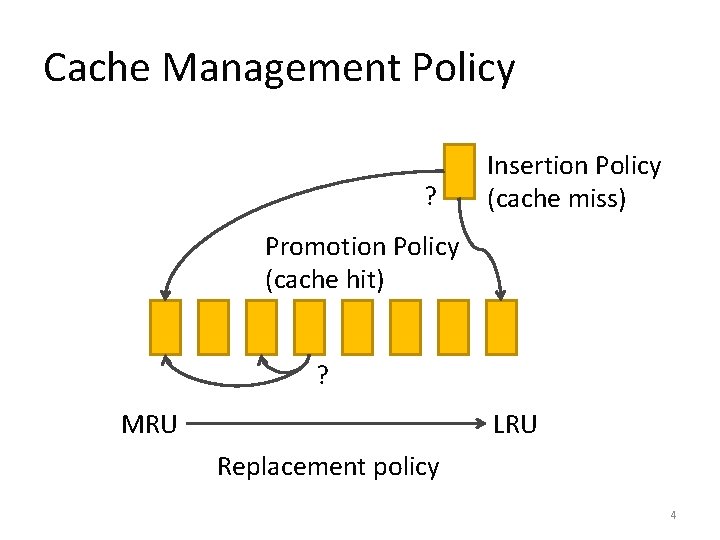

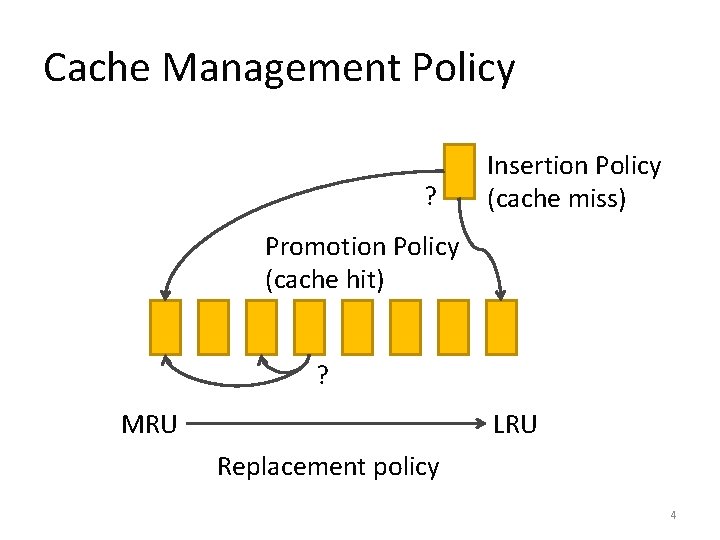

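Cache Management Policy

- Insertion policy (cache miss): where between the MRU and LRU positions should the missed block be inserted?
- Promotion policy (cache hit): where should the hit block be moved?
- Replacement policy: which block is evicted?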

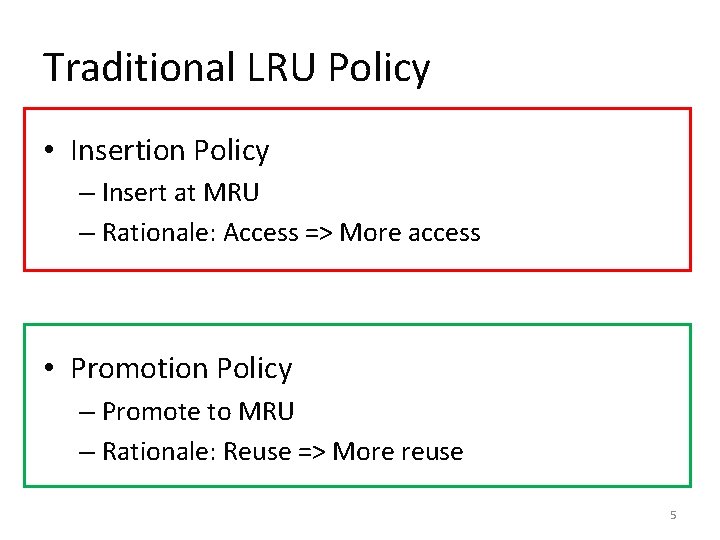

Traditional LRU Policy

- Insertion policy
  - Insert at MRU
  - Rationale: access => more access
- Promotion policy
  - Promote to MRU
  - Rationale: reuse => more reuse
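The three policies on this slide can be sketched for a single cache set. This is a minimal illustration, not code from the lecture: the class name `LRUSet`, the list-based recency stack, and the example trace are all assumptions made for the sketch.

```python
class LRUSet:
    """One cache set modeled as a recency stack: index 0 is MRU, the last index is LRU."""

    def __init__(self, ways):
        self.ways = ways
        self.stack = []  # block addresses, MRU first

    def access(self, addr):
        """Return True on a hit, False on a miss."""
        if addr in self.stack:
            # Promotion policy: move the hit block to the MRU position.
            self.stack.remove(addr)
            self.stack.insert(0, addr)
            return True
        if len(self.stack) == self.ways:
            # Replacement policy: evict the block at the LRU position.
            self.stack.pop()
        # Insertion policy: place the missed block at the MRU position.
        self.stack.insert(0, addr)
        return False


cache = LRUSet(ways=4)
hits = [cache.access(a) for a in ["A", "B", "C", "A", "D", "E", "B"]]
print(hits, cache.stack)
```

In this trace only the second access to A hits; B is evicted by E just before it is accessed again.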

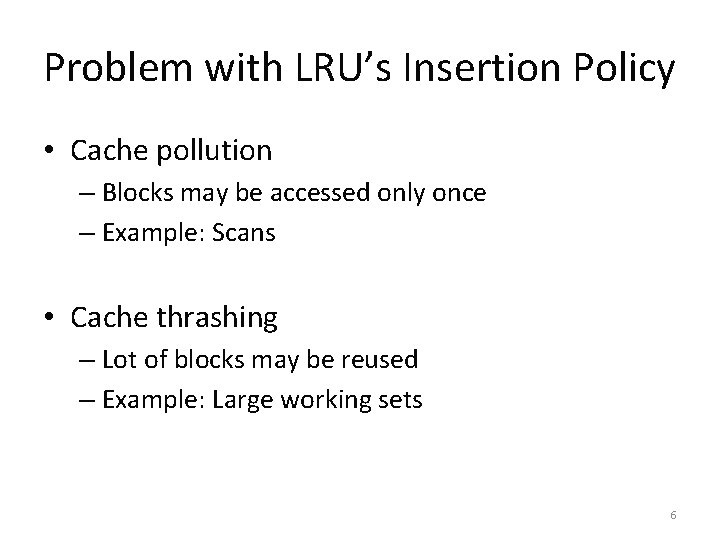

Problem with LRU’s Insertion Policy

- Cache pollution
  - Blocks may be accessed only once
  - Example: scans
- Cache thrashing
  - Many blocks may be reused, but they do not all fit in the cache
  - Example: large working sets
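Both problems can be reproduced with a few lines of simulation. The sketch below assumes a fully associative 4-block cache and made-up traces; `lru_hits` is a hypothetical helper, not something from the lecture.

```python
from collections import OrderedDict


def lru_hits(trace, ways):
    """Count hits for a fully associative LRU cache that inserts at MRU."""
    cache = OrderedDict()  # first key is LRU, last key is MRU
    hits = 0
    for addr in trace:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)        # promote to MRU
        else:
            if len(cache) == ways:
                cache.popitem(last=False)  # evict the LRU block
            cache[addr] = True             # insert at MRU
    return hits


# Thrashing: a cyclic working set of 5 blocks in a 4-block cache never hits.
thrash = list("ABCDE") * 10
# Pollution: a one-time scan of 4 blocks pushes out the reused blocks X and Y.
pollute = ["X", "Y", "X", "Y"] + list("1234") + ["X", "Y"]

print(lru_hits(thrash, 4), lru_hits(pollute, 4))
```

In the pollution trace, X and Y hit before the scan but miss after it, even though the scanned blocks are never accessed again.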

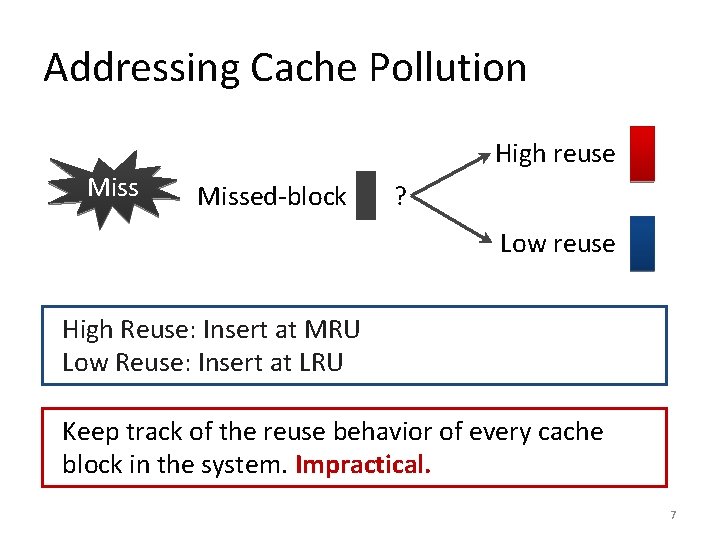

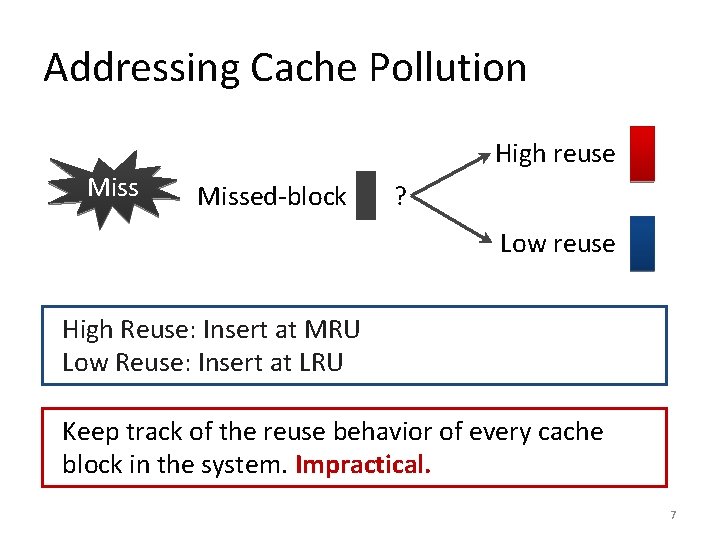

Addressing Cache Pollution

Does the missed block have high reuse or low reuse?

- High reuse: insert at MRU
- Low reuse: insert at LRU

Keeping track of the reuse behavior of every cache block in the system is impractical.

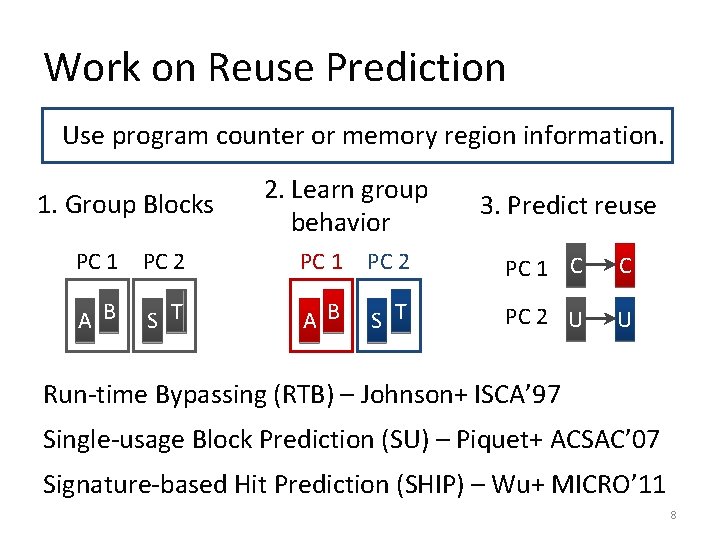

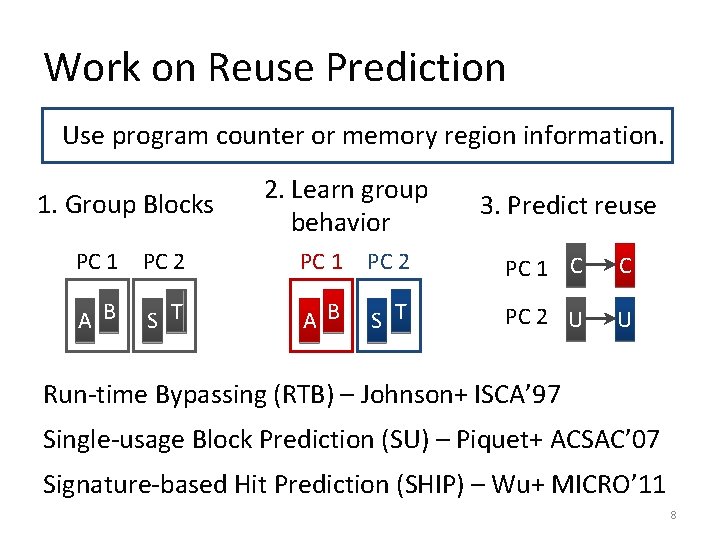

Work on Reuse Prediction

Use program counter or memory region information.

1. Group blocks
2. Learn group behavior
3. Predict reuse

[Figure: blocks grouped by the program counter (PC 1, PC 2) that accessed them]

- Run-time Bypassing (RTB): Johnson+ ISCA’97
- Single-usage Block Prediction (SU): Piquet+ ACSAC’07
- Signature-based Hit Prediction (SHIP): Wu+ MICRO’11
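The three steps (group, learn, predict) can be sketched with one saturating counter per program counter. This is a generic illustration and does not reproduce RTB, SU, or SHIP; the class name, counter width, initial value, and threshold are all assumptions.

```python
from collections import defaultdict


class PCReusePredictor:
    """Hypothetical predictor: one 2-bit saturating counter per PC (the group)."""

    MAX = 3        # assumed counter ceiling
    THRESHOLD = 2  # assumed: counter >= 2 predicts high reuse

    def __init__(self):
        # Unseen PCs start at the threshold, so they default to "high reuse".
        self.counters = defaultdict(lambda: self.THRESHOLD)

    def train(self, pc, was_reused):
        """Learn group behavior when a block inserted by `pc` is evicted."""
        if was_reused:
            self.counters[pc] = min(self.MAX, self.counters[pc] + 1)
        else:
            self.counters[pc] = max(0, self.counters[pc] - 1)

    def predict_high_reuse(self, pc):
        """Predict reuse for a new block brought in by `pc`."""
        return self.counters[pc] >= self.THRESHOLD


pred = PCReusePredictor()
pred.train(pc=0x400A10, was_reused=True)    # blocks from this PC were reused
pred.train(pc=0x400B20, was_reused=False)   # blocks from this PC were not
print(pred.predict_high_reuse(0x400A10), pred.predict_high_reuse(0x400B20))
```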

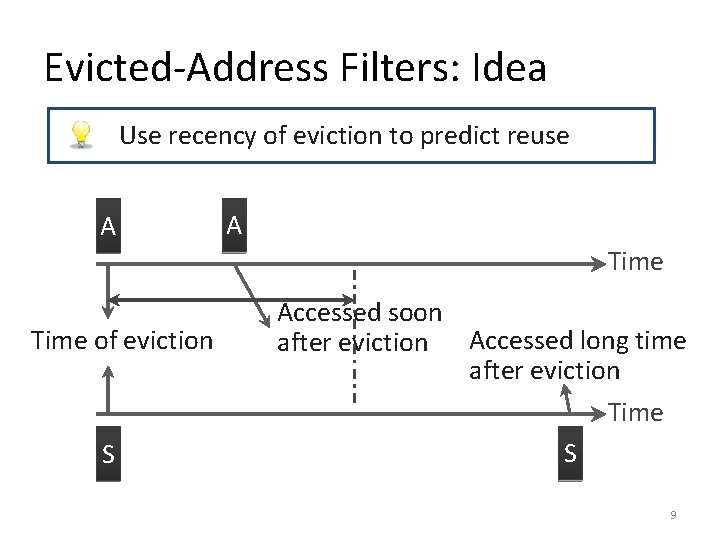

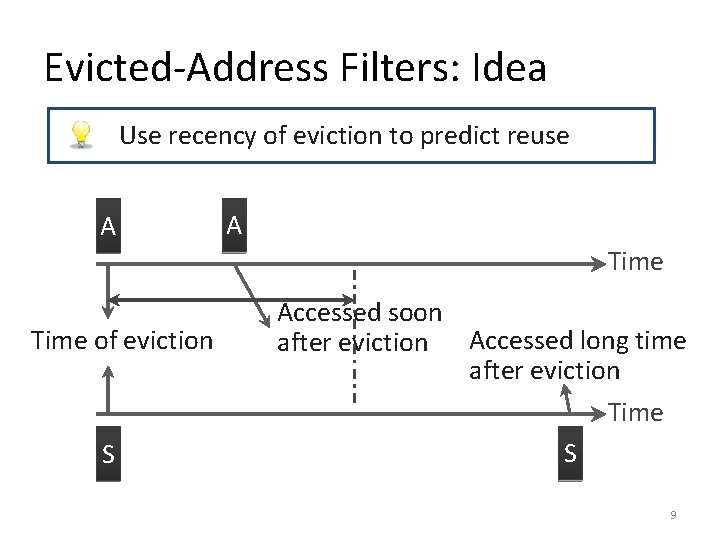

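Evicted-Address Filters: Idea

Use recency of eviction to predict reuse.

- A block accessed soon after its eviction (block A in the figure) likely has high reuse.
- A block accessed a long time after its eviction (block S in the figure) likely has low reuse.

[Figure: timeline marking the time of eviction and the later accesses to blocks A and S]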

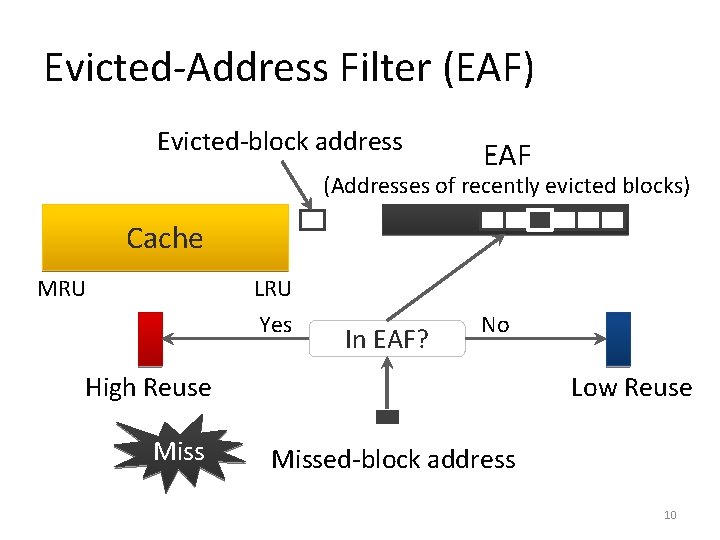

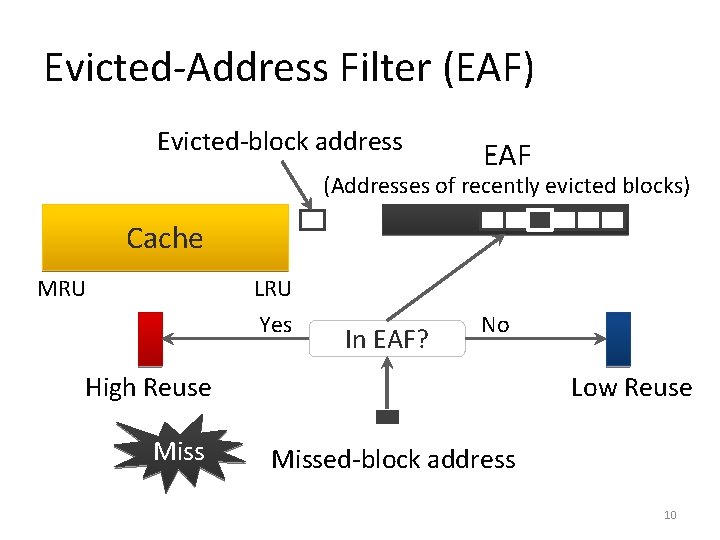

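Evicted-Address Filter (EAF)

- The EAF holds the addresses of recently evicted blocks. On an eviction, the evicted-block address is inserted into the EAF.
- On a cache miss, the missed-block address is tested against the EAF.
  - In the EAF: high reuse, insert at MRU
  - Not in the EAF: low reuse, insert at LRU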
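The flow on this slide can be sketched as a recency stack plus a FIFO of evicted addresses. This is a minimal sketch under stated assumptions: the class name `EAFCache`, the fully associative organization, and the sizes are illustrative, and removing an address from the EAF once it tests positive follows the "remove if present" operation named on the later Bloom-filter slide.

```python
from collections import deque


class EAFCache:
    """Fully associative cache with an evicted-address filter (EAF).

    `stack` is the recency stack (index 0 = MRU, last = LRU);
    `eaf` is a FIFO of recently evicted block addresses.
    """

    def __init__(self, ways, eaf_size):
        self.ways = ways
        self.stack = []
        self.eaf = deque(maxlen=eaf_size)  # oldest address drops out when full

    def access(self, addr):
        if addr in self.stack:
            self.stack.remove(addr)
            self.stack.insert(0, addr)          # promote to MRU on a hit
            return True
        high_reuse = addr in self.eaf           # test the missed-block address
        if high_reuse:
            self.eaf.remove(addr)               # remove if present
        if len(self.stack) == self.ways:
            self.eaf.append(self.stack.pop())   # evict LRU, remember its address
        if high_reuse:
            self.stack.insert(0, addr)          # high reuse: insert at MRU
        else:
            self.stack.append(addr)             # low reuse: insert at LRU
        return False


cache = EAFCache(ways=4, eaf_size=4)
trace = ["X", "Y", "X", "Y", "1", "2", "3", "4", "X", "Y"]
hits = sum(cache.access(a) for a in trace)
print(hits, cache.stack)
```

Here the scanned blocks 1 to 4 are inserted at the LRU position and displace only each other, so X and Y still hit after the scan.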

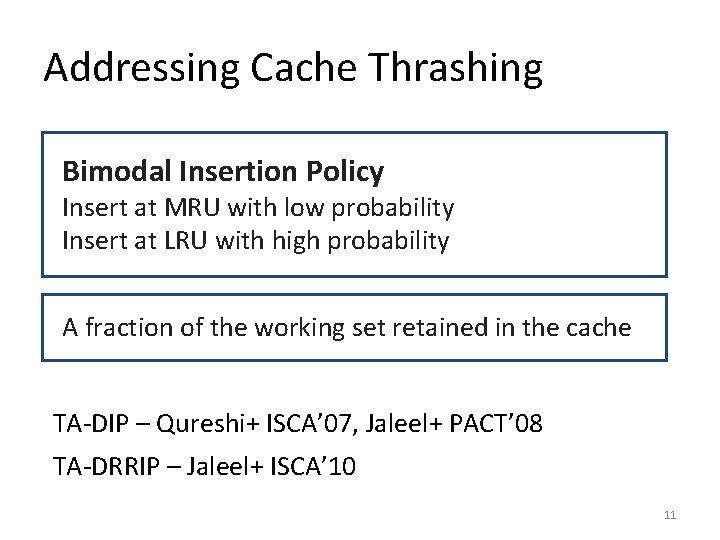

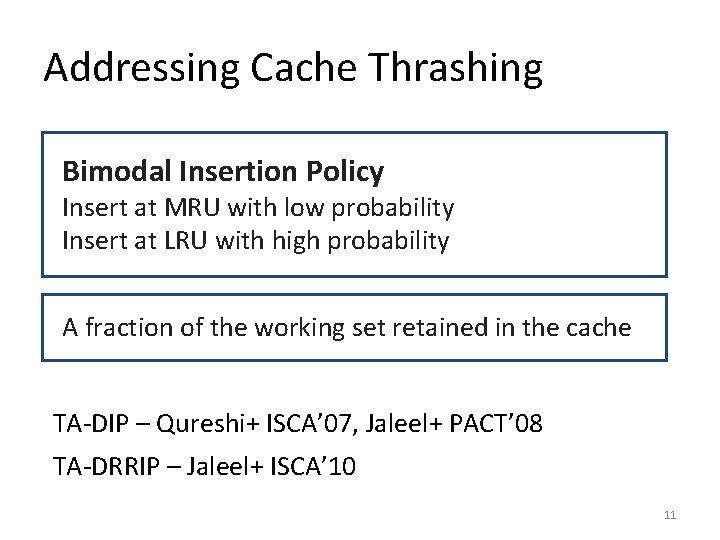

Addressing Cache Thrashing

Bimodal Insertion Policy

- Insert at MRU with low probability
- Insert at LRU with high probability
- A fraction of the working set is retained in the cache

Related work:

- TA-DIP: Qureshi+ ISCA’07, Jaleel+ PACT’08
- TA-DRRIP: Jaleel+ ISCA’10
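A minimal sketch of bimodal insertion follows. The probability `epsilon=1/32`, the function names, and the trace are assumptions for illustration; the slide only says "low" and "high" probability.

```python
import random


def bimodal_insert(stack, addr, ways, epsilon, rng):
    """Insert `addr` into a recency stack (index 0 = MRU, last = LRU)."""
    if len(stack) == ways:
        stack.pop()                 # evict the LRU block
    if rng.random() < epsilon:
        stack.insert(0, addr)       # low probability: insert at MRU
    else:
        stack.append(addr)          # high probability: insert at LRU


def run(trace, ways, epsilon, seed=0):
    """Count hits for a fully associative cache using bimodal insertion."""
    rng = random.Random(seed)
    stack, hits = [], 0
    for addr in trace:
        if addr in stack:
            hits += 1
            stack.remove(addr)
            stack.insert(0, addr)   # promote to MRU on a hit
        else:
            bimodal_insert(stack, addr, ways, epsilon, rng)
    return hits


trace = list("ABCDEFGH") * 10       # cyclic working set twice the cache size
print(run(trace, ways=4, epsilon=1.0))     # always MRU: plain LRU
print(run(trace, ways=4, epsilon=0.0))     # always LRU insertion
print(run(trace, ways=4, epsilon=1 / 32))  # bimodal
```

With `epsilon=1.0` the policy degenerates to LRU and the cyclic trace never hits. With `epsilon=0.0` three blocks stay resident. A small nonzero `epsilon` keeps most of that benefit while still letting the retained fraction change over time.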

Addressing Pollution and Thrashing

- Combine the two approaches?
- Problems?
- Ideas?
- EAF using a Bloom filter

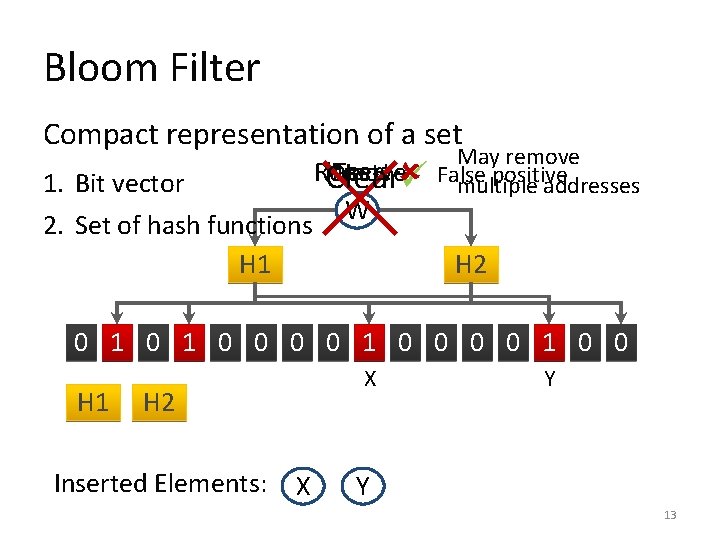

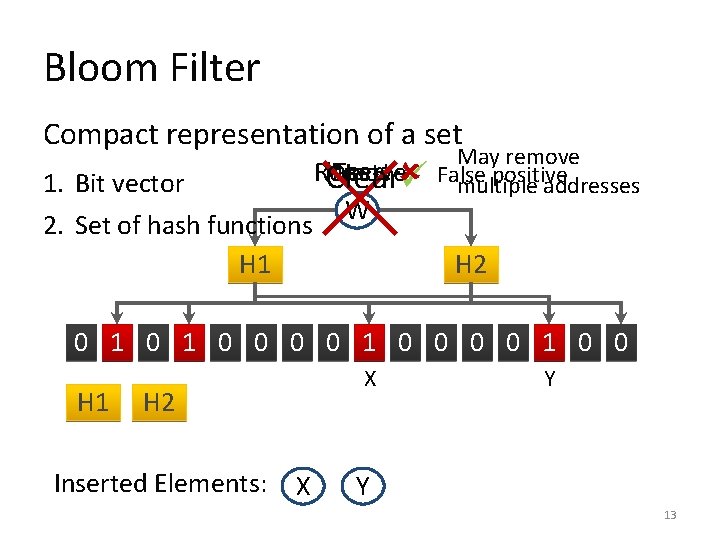

Bloom Filter

Compact representation of a set.

1. Bit vector
2. Set of hash functions (H1 and H2 in the figure)

Operations:

- Insert
- Test: may return a false positive
- Remove: may remove multiple addresses
- Clear

[Figure: elements X and Y inserted into a bit vector through hash functions H1 and H2; W and Z are other candidate elements]
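The structure on this slide can be sketched as follows. The bit-vector size, the number of hash functions, and the use of salted SHA-256 as the hash family are assumptions for this sketch; a hardware implementation would use much cheaper hash functions.

```python
import hashlib


class BloomFilter:
    """Bit vector plus a set of hash functions; supports insert, test, and clear."""

    def __init__(self, num_bits=64, num_hashes=2):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = [0] * num_bits

    def _positions(self, item):
        # One bit position per hash function, derived by salting SHA-256 with the index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def insert(self, item):
        for pos in self._positions(item):
            self.bits[pos] = 1

    def test(self, item):
        # True for every inserted item; may also be True for others (false positive).
        return all(self.bits[pos] for pos in self._positions(item))

    def clear(self):
        self.bits = [0] * self.num_bits


bf = BloomFilter()
bf.insert("X")
bf.insert("Y")
print(bf.test("X"), bf.test("Y"))
```

There is no `remove` method: clearing the bits of one element could also clear bits shared with other elements, which is why removal is unsafe for this structure.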

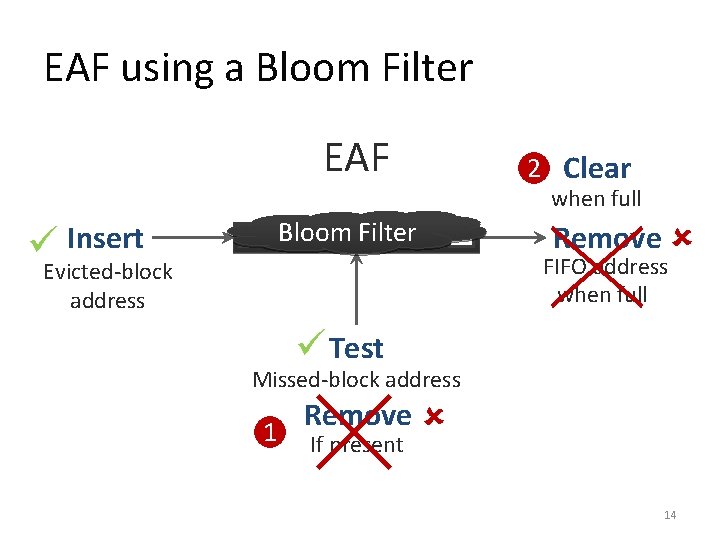

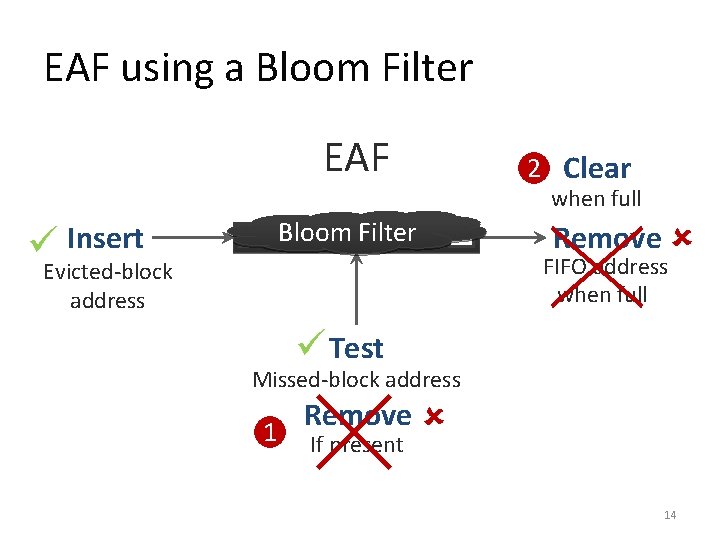

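EAF using a Bloom Filter

- Insert: evicted-block address
- Test: missed-block address

Two operations of the plain EAF change, because a Bloom filter cannot remove a single address:

1. "Remove if present" (after a missed-block address tests positive) is dropped.
2. "Remove the FIFO address when full" becomes "clear when full".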
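A sketch of the Bloom-filter EAF, assuming "full" is detected with a counter of insertions since the last clear. The class name, the capacity, the filter size, and the hash construction are illustrative assumptions.

```python
import hashlib


class BloomEAF:
    """EAF backed by a Bloom filter: insert on eviction, test on miss, clear when full."""

    def __init__(self, capacity, num_bits=256, num_hashes=2):
        self.capacity = capacity        # assumed: insertions allowed before a clear
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = [0] * num_bits
        self.count = 0                  # insertions since the last clear

    def _positions(self, addr):
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{addr}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def insert_evicted(self, addr):
        for pos in self._positions(addr):
            self.bits[pos] = 1
        self.count += 1
        if self.count >= self.capacity:  # replaces "remove the FIFO address when full"
            self.bits = [0] * self.num_bits
            self.count = 0

    def test_missed(self, addr):
        # No "remove if present": a positive test leaves the filter unchanged.
        return all(self.bits[pos] for pos in self._positions(addr))


eaf = BloomEAF(capacity=3)
eaf.insert_evicted(0x100)
first, second = eaf.test_missed(0x100), eaf.test_missed(0x100)
eaf.insert_evicted(0x140)
eaf.insert_evicted(0x180)               # third insertion triggers the clear
print(first, second, eaf.test_missed(0x100))
```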

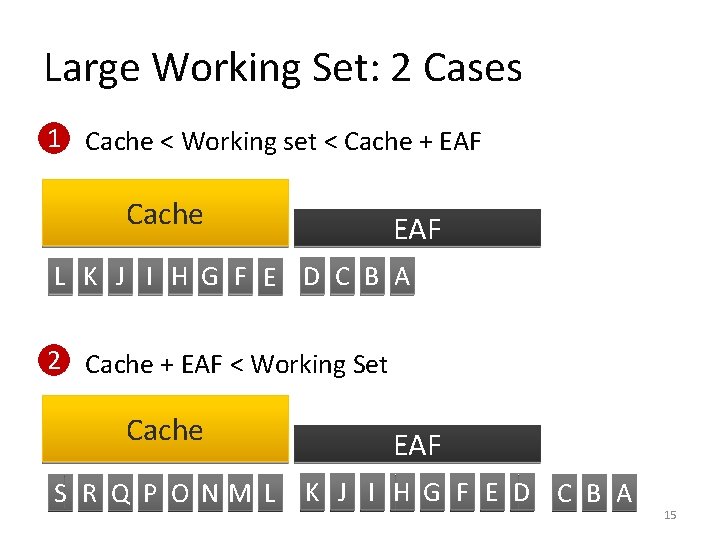

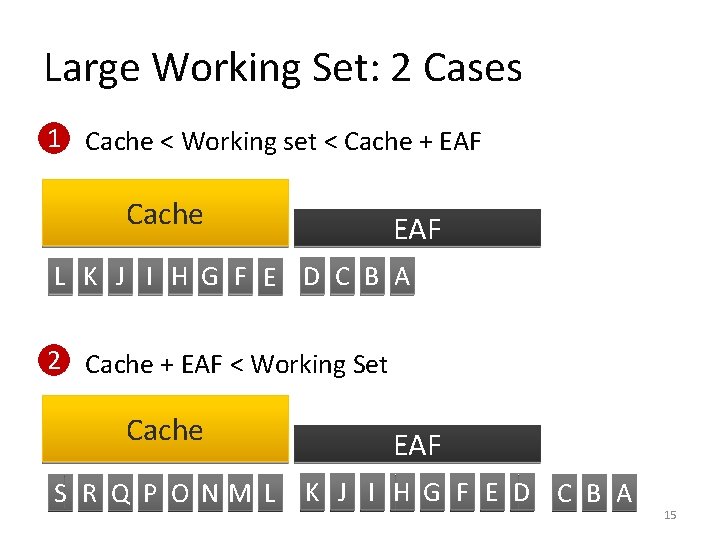

Large Working Set: 2 Cases

1. Cache < Working set < Cache + EAF
2. Cache + EAF < Working set

[Figure: in case 1, the working set (blocks A to L) fits in the cache and EAF combined; in case 2, the working set (blocks A to S) exceeds them]

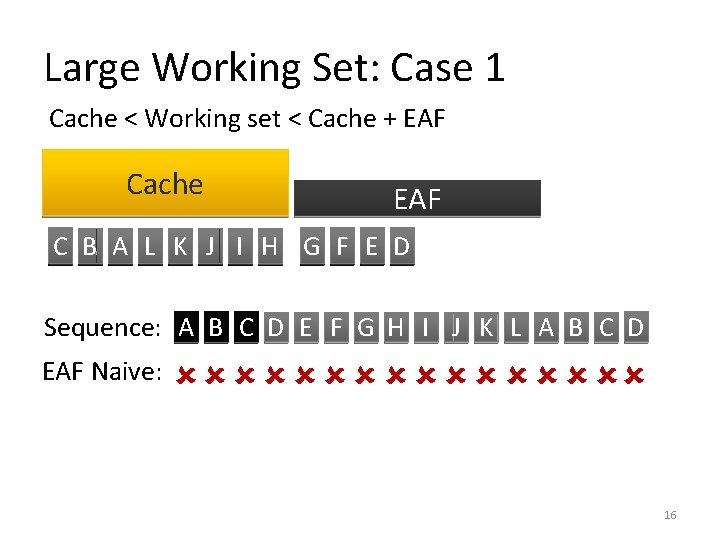

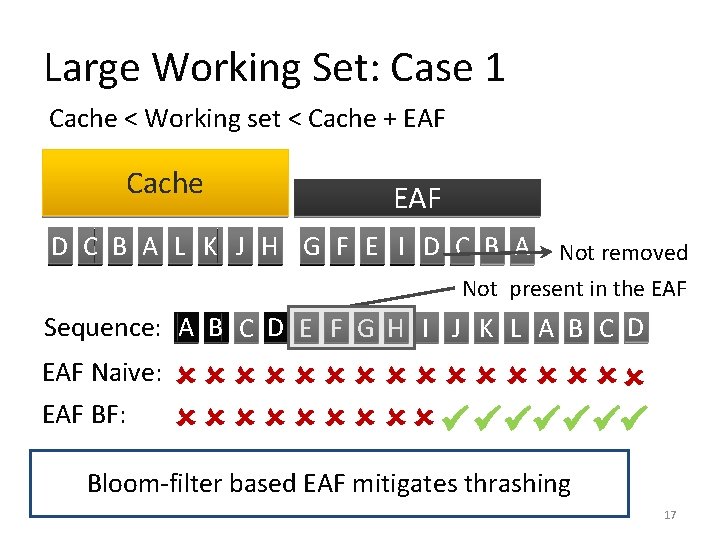

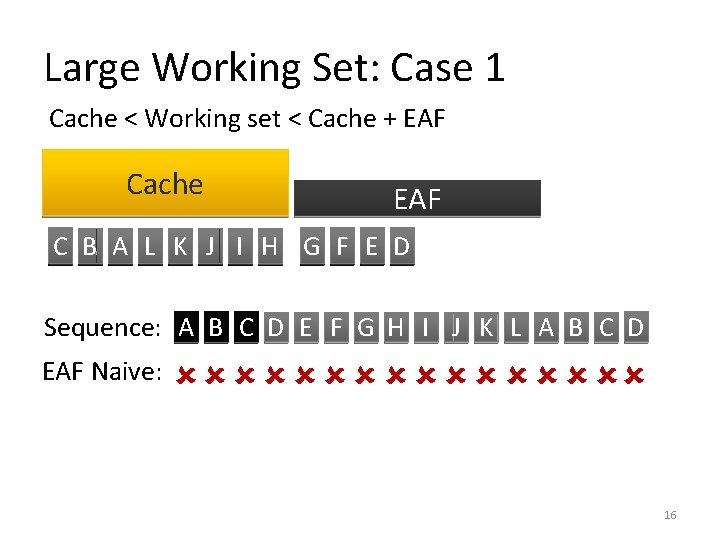

Large Working Set: Case 1

Cache < Working set < Cache + EAF

Sequence: A B C D E F G H I J K L A B C D

[Figure: animation of the cache and EAF contents under the naive EAF for this sequence]

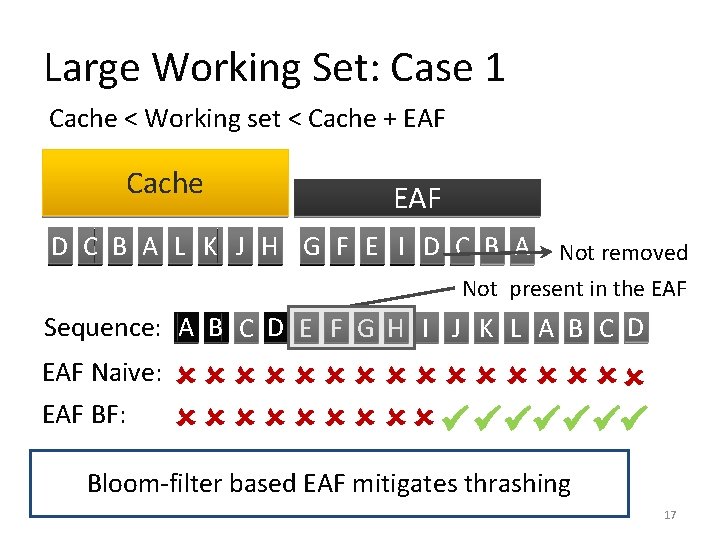

Large Working Set: Case 1

Cache < Working set < Cache + EAF

Sequence: A B C D E F G H I J K L A B C D

[Figure: animation comparing the cache and EAF contents under the naive EAF and the Bloom-filter EAF (EAF BF) for this sequence. Annotations: a tested address is not removed from the Bloom filter; after a clear, some missed blocks are not present in the EAF.]

The Bloom-filter based EAF mitigates thrashing.
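The difference between the two variants can be reproduced with a small simulation. It is a sketch under several assumptions: a fully associative 4-block cache, an EAF capacity of 8, a cyclic 10-block working set, and an exact Python set standing in for the Bloom filter (no false positives) so the outcome is deterministic. Only the two behavioral changes are modeled: no removal on a positive test, and clear when full.

```python
from collections import deque


def simulate(trace, ways, eaf_size, bloom_like):
    """Count hits with a naive FIFO EAF or a Bloom-like (test-only, clear-when-full) EAF."""
    stack = []               # recency stack: index 0 = MRU, last = LRU
    fifo = deque()           # naive EAF contents, oldest first
    seen, count = set(), 0   # Bloom-like EAF: membership plus insertion counter
    hits = 0
    for addr in trace:
        if addr in stack:
            hits += 1
            stack.remove(addr)
            stack.insert(0, addr)
            continue
        if bloom_like:
            high_reuse = addr in seen      # test only; nothing is removed
        else:
            high_reuse = addr in fifo
            if high_reuse:
                fifo.remove(addr)          # naive EAF: remove if present
        if len(stack) == ways:
            victim = stack.pop()
            if bloom_like:
                seen.add(victim)
                count += 1
                if count == eaf_size:      # clear when full
                    seen.clear()
                    count = 0
            else:
                if len(fifo) == eaf_size:
                    fifo.popleft()         # remove the FIFO address when full
                fifo.append(victim)
        if high_reuse:
            stack.insert(0, addr)          # predicted high reuse: MRU
        else:
            stack.append(addr)             # predicted low reuse: LRU
    return hits


trace = list("ABCDEFGHIJ") * 5             # cache (4) < working set (10) < cache + EAF (12)
naive_hits = simulate(trace, ways=4, eaf_size=8, bloom_like=False)
bloom_hits = simulate(trace, ways=4, eaf_size=8, bloom_like=True)
print(naive_hits, bloom_hits)
```

With the naive EAF, every missed block is eventually found in the EAF and inserted at MRU, so the cache behaves like LRU and thrashes. With the Bloom-like EAF, each clear causes a run of missed blocks to be inserted at the LRU position, which lets part of the working set stay resident.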

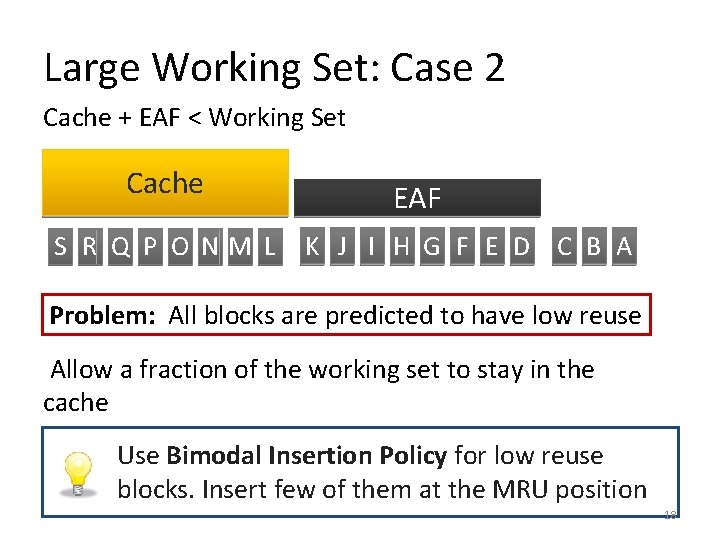

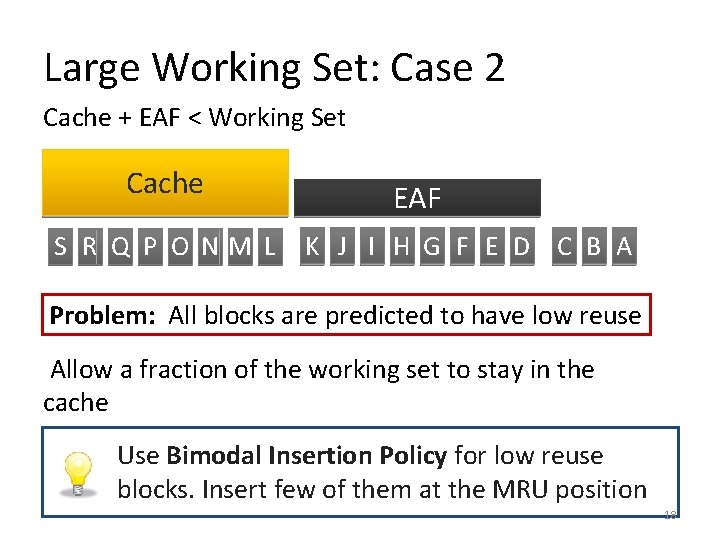

Large Working Set: Case 2

Cache + EAF < Working set

- Problem: all blocks are predicted to have low reuse.
- Goal: allow a fraction of the working set to stay in the cache.
- Solution: use the Bimodal Insertion Policy for low-reuse blocks, inserting a few of them at the MRU position.

Results – Summary

[Figure: bar chart of performance improvement over LRU (y-axis, 0% to 25%) for TA-DIP, TA-DRRIP, SHIP, RTB, MCT, EAF, and D-EAF on 1-core, 2-core, and 4-core systems]

Part 2: Managing Prefetched Blocks

Hopefully in a future course!

Part 3: Managing Dirty Blocks

Hopefully in a future course!

Part 4: Application Awareness

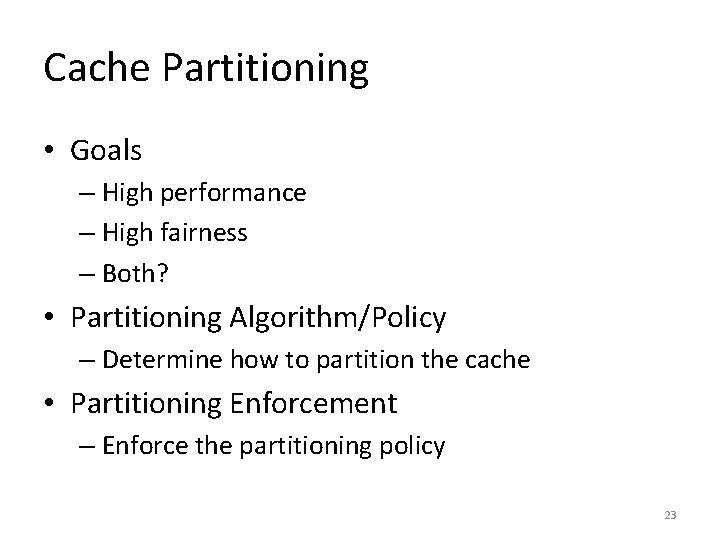

Cache Partitioning

- Goals
  - High performance
  - High fairness
  - Both?
- Partitioning algorithm/policy
  - Determine how to partition the cache
- Partitioning enforcement
  - Enforce the partitioning policy

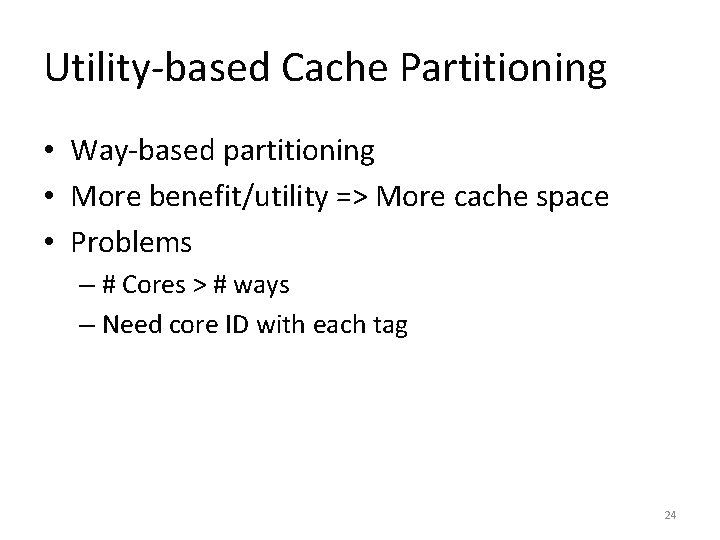

Utility-based Cache Partitioning

- Way-based partitioning
- More benefit/utility => more cache space
- Problems
  - # cores > # ways
  - Need core ID with each tag
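The "more utility => more cache space" rule can be sketched as a greedy allocation over per-application utility curves. This is a simplification for illustration and not necessarily the algorithm UCP itself uses; the function name, the one-way minimum per application, and the hit counts below are made-up assumptions.

```python
def partition_ways(utility, total_ways):
    """Greedy way allocation.

    utility[app][w] is the number of hits `app` would get with w ways
    (w = 0 .. total_ways). Each application is assumed to get at least one way.
    """
    alloc = {app: 1 for app in utility}
    for _ in range(total_ways - len(utility)):
        # Give the next way to the application with the largest marginal utility.
        best = max(utility, key=lambda a: utility[a][alloc[a] + 1] - utility[a][alloc[a]])
        alloc[best] += 1
    return alloc


# Hypothetical utility curves for two applications sharing an 8-way cache.
utility = {
    "A": [0, 10, 18, 24, 28, 30, 30, 30, 30],  # keeps benefiting up to 5 ways
    "B": [0, 5, 8, 9, 9, 9, 9, 9, 9],          # saturates after 3 ways
}
allocation = partition_ways(utility, total_ways=8)
print(allocation)
```

The sketch also makes the first problem on the slide visible: with more applications than ways, the one-way minimum cannot be satisfied.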

Promotion-Insertion Pseudo Partitioning

- Partitioning algorithm
  - Same as UCP
- Partitioning enforcement
  - Modify cache insertion policy
  - Probabilistic promotion

Promotion/Insertion Pseudo-Partitioning (PIPP): Xie+ ISCA’09
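The two enforcement mechanisms can be sketched for one cache set. The details here are assumptions for illustration, not taken from the slide: a block is inserted at a priority position equal to its core's allocation (counted from the lowest-priority end), and a hit promotes the block by a single position with probability `p_prom`.

```python
import random


def pipp_access(order, addr, alloc, ways, p_prom=0.75, rng=random):
    """Access one set. order[0] is the lowest-priority block (next victim)."""
    if addr in order:
        i = order.index(addr)
        # Probabilistic promotion: move up one position instead of jumping to the top.
        if i < len(order) - 1 and rng.random() < p_prom:
            order[i], order[i + 1] = order[i + 1], order[i]
        return True
    if len(order) == ways:
        order.pop(0)                                # evict the lowest-priority block
    # Modified insertion: a core with a larger allocation inserts at a higher position.
    order.insert(min(alloc - 1, len(order)), addr)
    return False


order = ["a", "b", "c", "d"]
miss = pipp_access(order, "x", alloc=3, ways=4)     # inserted third from the bottom
after_insert = list(order)
pipp_access(order, "b", alloc=3, ways=4, p_prom=1.0)  # always promote, for illustration
print(after_insert, order)
```

A core with a small allocation inserts near the bottom, so its blocks are evicted quickly unless they are reused, which approximates a partition without per-way ownership.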

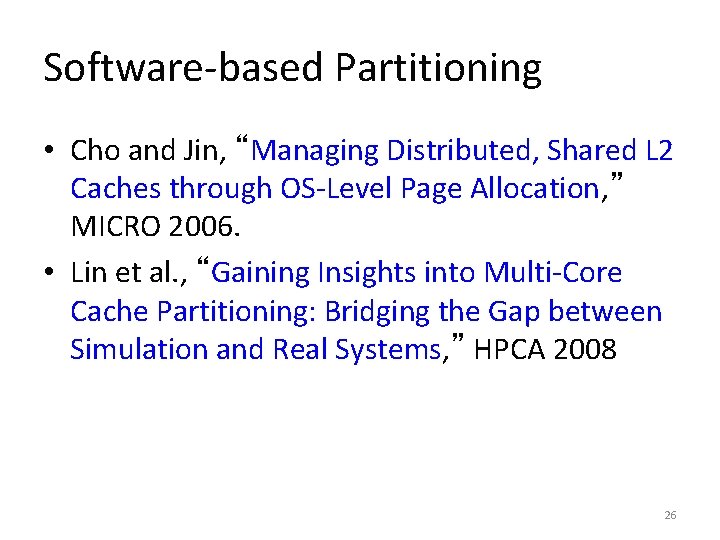

Software-based Partitioning

- Cho and Jin, “Managing Distributed, Shared L2 Caches through OS-Level Page Allocation,” MICRO 2006.
- Lin et al., “Gaining Insights into Multi-Core Cache Partitioning: Bridging the Gap between Simulation and Real Systems,” HPCA 2008.

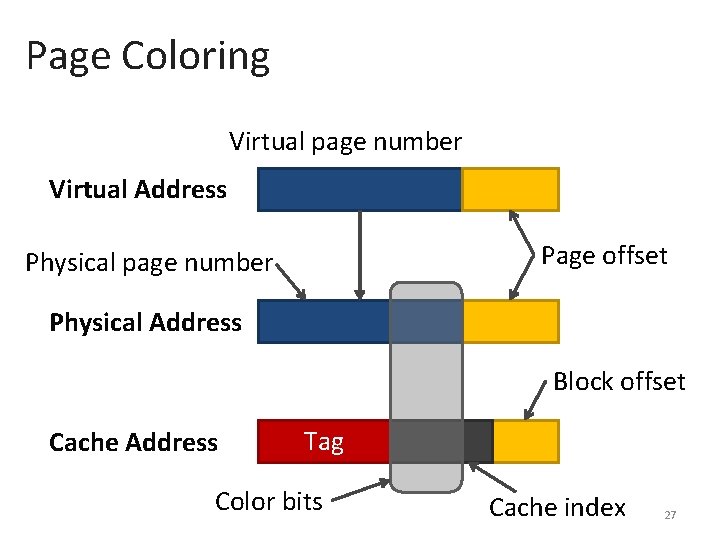

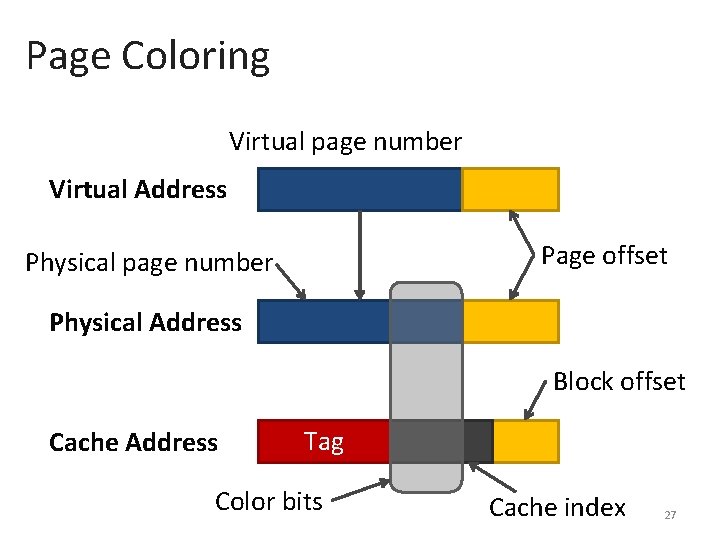

Page Coloring

- Virtual address: virtual page number | page offset
- Physical address: physical page number | page offset
- Cache address: tag | cache index | block offset
- Color bits: the cache-index bits that overlap the physical page number
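The address breakdown above can be turned into a small calculation. The cache geometry (2 MiB, 16-way, 64-byte blocks) and the 4 KiB page size are assumptions picked for the example, and `page_color` is a hypothetical helper.

```python
def page_color(phys_addr, cache_size, ways, block_size=64, page_size=4096):
    """Return (color, number_of_colors) for a physical address.

    The color is formed by the cache-index bits that lie above the page offset,
    which are the low bits of the physical page number.
    """
    num_sets = cache_size // (ways * block_size)
    sets_per_page = page_size // block_size        # sets covered by one page
    num_colors = max(1, num_sets // sets_per_page)
    color = (phys_addr // page_size) % num_colors
    return color, num_colors


# 2 MiB, 16-way cache: 2048 sets, 64 sets per 4 KiB page, so 32 colors.
color, num_colors = page_color(0x12345000, cache_size=2 * 1024 * 1024, ways=16)
print(color, num_colors)
```

Every address within the same physical page has the same color, which is why the OS can steer cache placement through page allocation.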

OS-based Partitioning

- Enforcing the partition
  - Colors partition the cache
  - Assign colors to each application
  - An application’s pages are allocated in its assigned colors
  - Number of colors => amount of cache space
- Partitioning algorithm
  - Use hardware counters
  - # cache misses
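Enforcement through page allocation can be sketched with one free list per color. The class name `ColorAllocator`, the round-robin choice among an application's colors, and the tiny page counts are assumptions made for this sketch, not an actual OS interface.

```python
from collections import defaultdict


class ColorAllocator:
    """Hypothetical physical-page allocator that keeps a free list per page color."""

    def __init__(self, num_pages, num_colors):
        self.free = defaultdict(list)
        for ppn in range(num_pages):
            self.free[ppn % num_colors].append(ppn)  # color = low bits of page number
        self.colors = {}                              # app -> assigned colors
        self.cursor = defaultdict(int)                # app -> round-robin position

    def assign(self, app, colors):
        """More colors means a larger share of the cache."""
        self.colors[app] = list(colors)

    def alloc_page(self, app):
        colors = self.colors[app]
        for _ in colors:                              # try each assigned color once
            color = colors[self.cursor[app] % len(colors)]
            self.cursor[app] += 1
            if self.free[color]:
                return self.free[color].pop(0)
        raise MemoryError(f"no free pages in the colors assigned to {app}")


allocator = ColorAllocator(num_pages=16, num_colors=4)
allocator.assign("A", [0, 1])   # half of the cache
allocator.assign("B", [2])      # a quarter of the cache
a_pages = [allocator.alloc_page("A") for _ in range(4)]
b_pages = [allocator.alloc_page("B") for _ in range(2)]
print(a_pages, b_pages)
```

Because the two applications never receive pages of the same color, their blocks map to disjoint groups of cache sets.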

Set Imbalance

- Problem
  - Some sets may have a lot of conflict misses
  - Others may be under-utilized
- Solution approaches
  - Randomize the index
    - Not good for cache coherence. Why?
  - Set balancing cache
    - Pair an under-utilized set with one that has frequent conflict misses

That’s it!