18-742 Parallel Computer Architecture, Lecture 11: Caching


18-742 Parallel Computer Architecture, Lecture 11: Caching in Multi-Core Systems

Prof. Onur Mutlu and Gennady Pekhimenko, Carnegie Mellon University, Fall 2012 (10/01/2012)

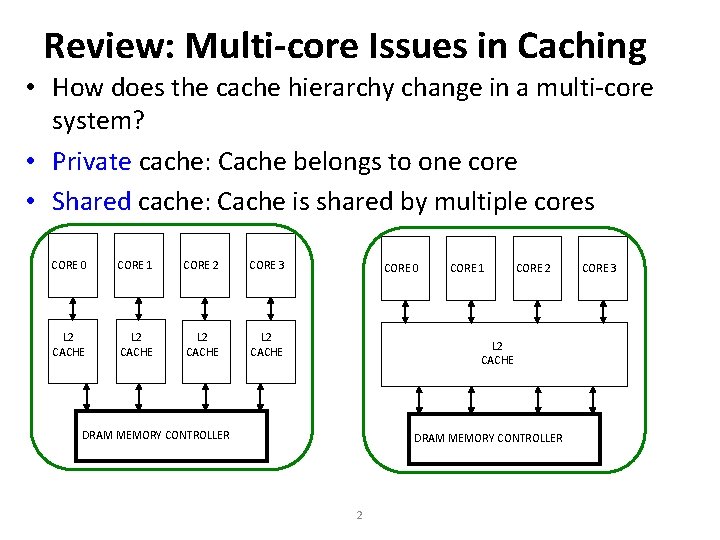

Review: Multi-core Issues in Caching

- How does the cache hierarchy change in a multi-core system?
- Private cache: Cache belongs to one core
- Shared cache: Cache is shared by multiple cores

[Figure: Cores 0-3, L2 cache, and DRAM memory controller, drawn for the private and shared organizations]

Outline

- Multi-cores and Caching: Review
- Utility-based partitioning
- Cache compression
  - Frequent value
  - Frequent pattern
  - Base-Delta-Immediate
- Main memory compression
  - IBM MXT
  - Linearly Compressed Pages (LCP)

Review: Shared Caches Between Cores

- Advantages:
  - Dynamic partitioning of available cache space
    - No fragmentation due to static partitioning
  - Easier to maintain coherence
  - Shared data and locks do not ping-pong between caches
- Disadvantages:
  - Cores incur conflict misses due to other cores' accesses
    - Misses due to inter-core interference
    - Some cores can destroy the hit rate of other cores
      - What kind of access patterns could cause this?
  - Guaranteeing a minimum level of service (or fairness) to each core is harder (how much space, how much bandwidth?)
  - High bandwidth is harder to obtain (N cores, N ports?)

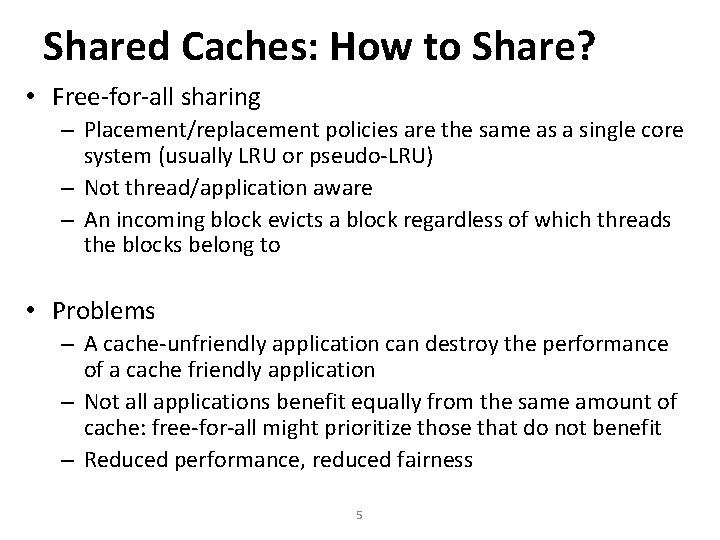

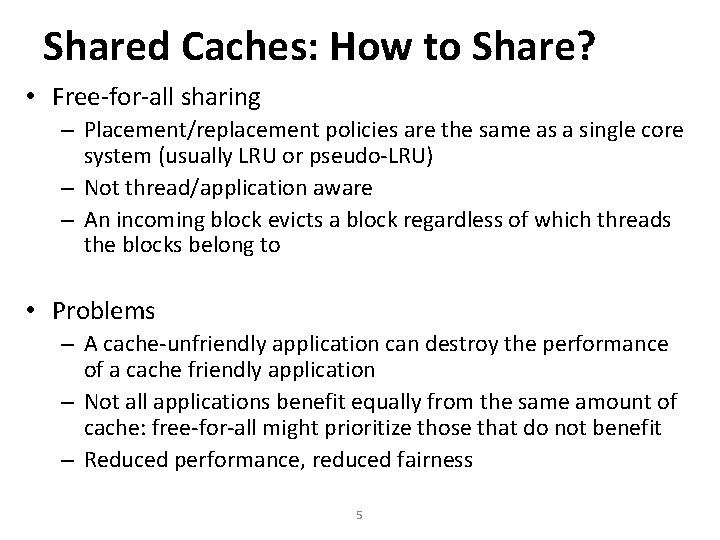

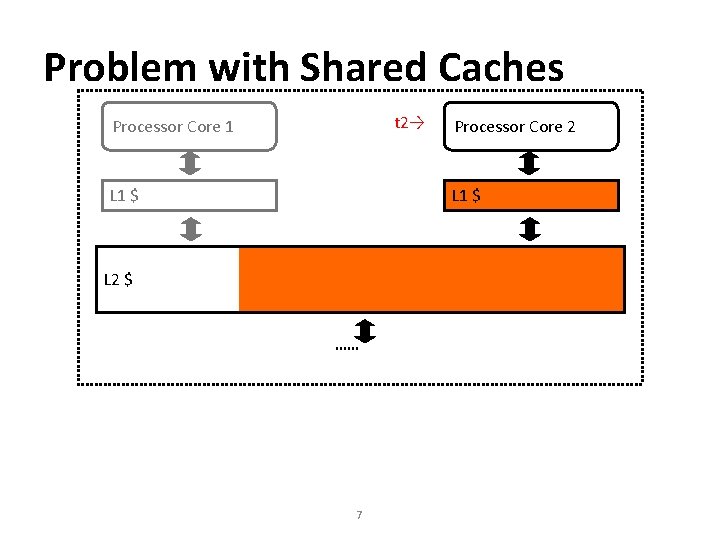

Shared Caches: How to Share?

- Free-for-all sharing
  - Placement/replacement policies are the same as in a single-core system (usually LRU or pseudo-LRU)
  - Not thread/application aware
  - An incoming block evicts a block regardless of which threads the blocks belong to
- Problems
  - A cache-unfriendly application can destroy the performance of a cache-friendly application
  - Not all applications benefit equally from the same amount of cache: free-for-all might prioritize those that do not benefit
  - Reduced performance, reduced fairness

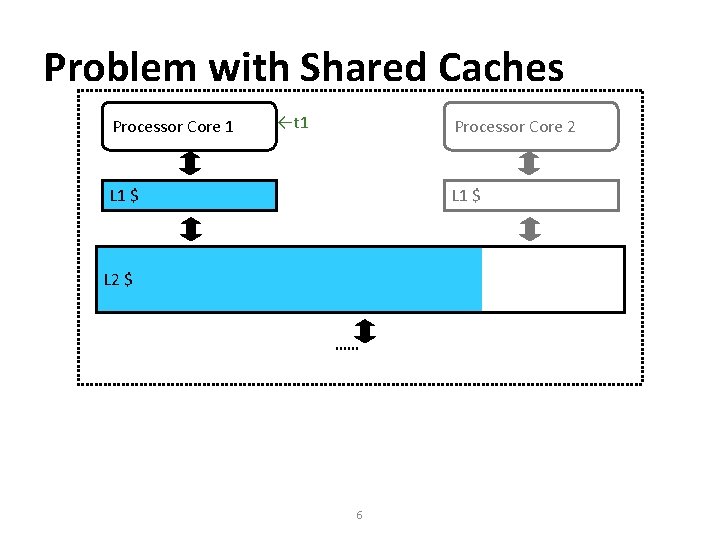

Problem with Shared Caches

[Figure: thread t1 runs on Processor Core 1; Processor Core 1 and Processor Core 2 sit above their L1 caches and the L2 cache]

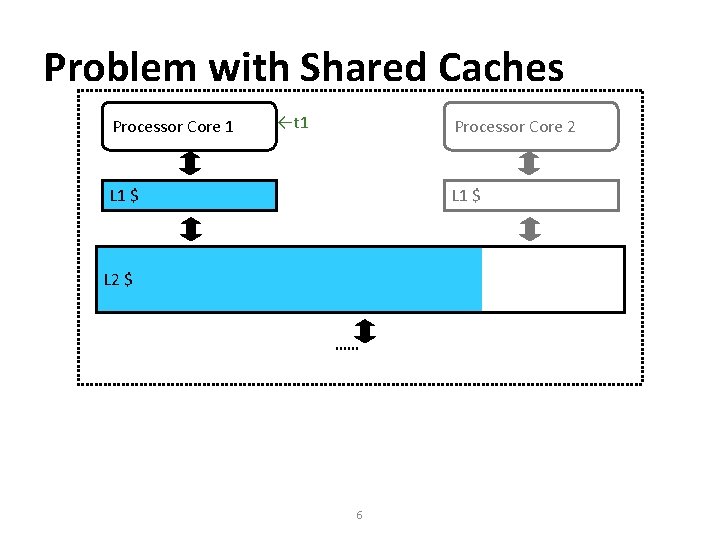

Problem with Shared Caches

[Figure: thread t2 runs on Processor Core 2; Processor Core 1 and Processor Core 2 sit above their L1 caches and the L2 cache]

Problem with Shared Caches

[Figure: thread t1 on Processor Core 1 and thread t2 on Processor Core 2, each with an L1 cache, both using the L2 cache]

t2's throughput is significantly reduced due to unfair cache sharing.

Controlled Cache Sharing

- Utility-based cache partitioning
  - Qureshi and Patt, "Utility-Based Cache Partitioning: A Low-Overhead, High-Performance, Runtime Mechanism to Partition Shared Caches," MICRO 2006.
  - Suh et al., "A New Memory Monitoring Scheme for Memory-Aware Scheduling and Partitioning," HPCA 2002.
- Fair cache partitioning
  - Kim et al., "Fair Cache Sharing and Partitioning in a Chip Multiprocessor Architecture," PACT 2004.
- Shared/private mixed cache mechanisms
  - Qureshi, "Adaptive Spill-Receive for Robust High-Performance Caching in CMPs," HPCA 2009.

Utility Based Shared Cache Partitioning

- Goal: Maximize system throughput
- Observation: Not all threads/applications benefit equally from caching, so simple LRU replacement is not good for system throughput
- Idea: Allocate more cache space to applications that obtain the most benefit from more space
- The high-level idea can be applied to other shared resources as well.
- Qureshi and Patt, "Utility-Based Cache Partitioning: A Low-Overhead, High-Performance, Runtime Mechanism to Partition Shared Caches," MICRO 2006.
- Suh et al., "A New Memory Monitoring Scheme for Memory-Aware Scheduling and Partitioning," HPCA 2002.

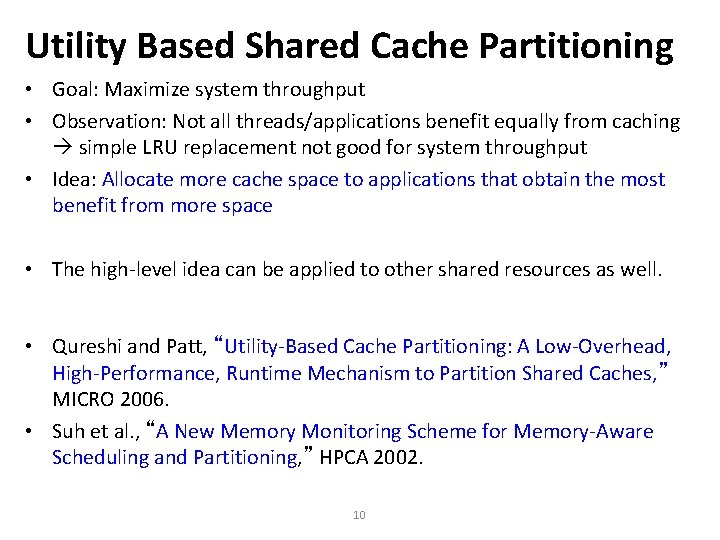

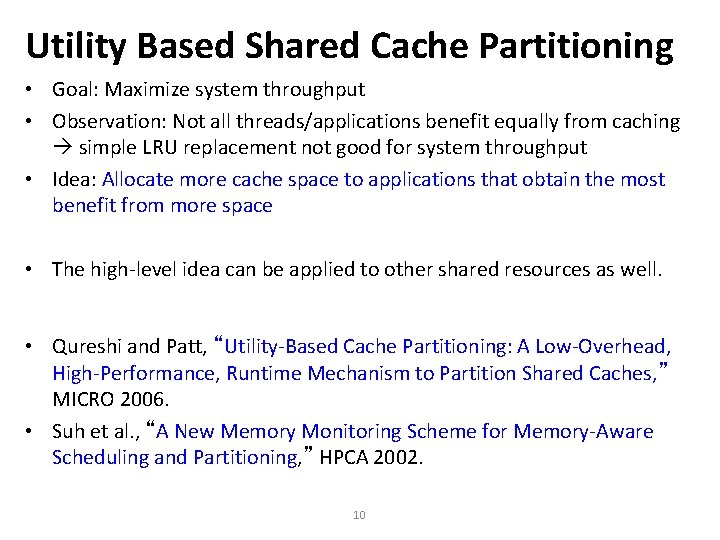

Utility Based Cache Partitioning (I)

Utility: $U_a^b = \text{Misses with } a \text{ ways} - \text{Misses with } b \text{ ways}$

[Figure: misses per 1000 instructions vs. number of ways allocated from a 16-way 1 MB L2, with curves labeled Low Utility, High Utility, and Saturating Utility]
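The utility definition above can be sketched in a few lines of Python. The three miss curves below are made-up numbers chosen only to mimic the low-utility, high-utility, and saturating shapes named on the slide; they are not measurements from the lecture.

```python
def utility(misses_by_ways, a, b):
    """U_a^b: misses removed when an application grows from a ways to b ways."""
    return misses_by_ways[a] - misses_by_ways[b]

# Hypothetical miss curves (misses per 1000 instructions), keyed by number of ways.
low_utility = {1: 50, 2: 49, 4: 48, 8: 47, 16: 46}
high_utility = {1: 50, 2: 40, 4: 28, 8: 14, 16: 4}
saturating = {1: 50, 2: 20, 4: 6, 8: 5, 16: 5}

for name, curve in [("low", low_utility), ("high", high_utility), ("saturating", saturating)]:
    print(name, utility(curve, 1, 4), utility(curve, 4, 16))
```

With these numbers the saturating application gains a lot from its first few ways and almost nothing afterwards, which is the case where giving it more space is wasteful.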

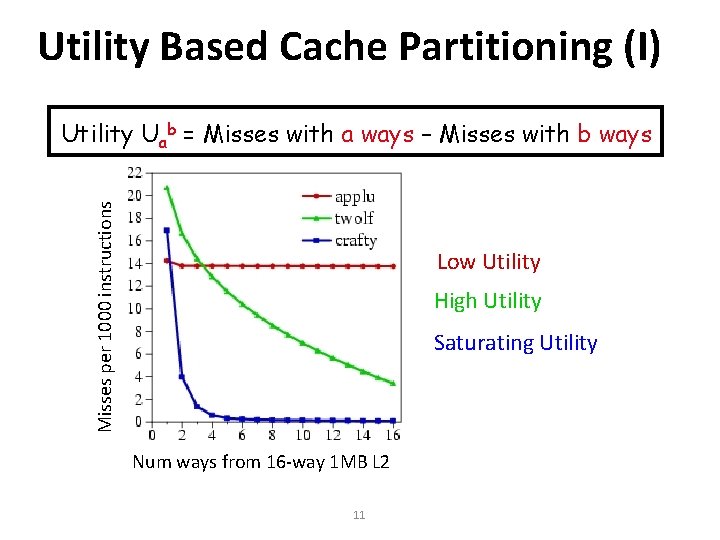

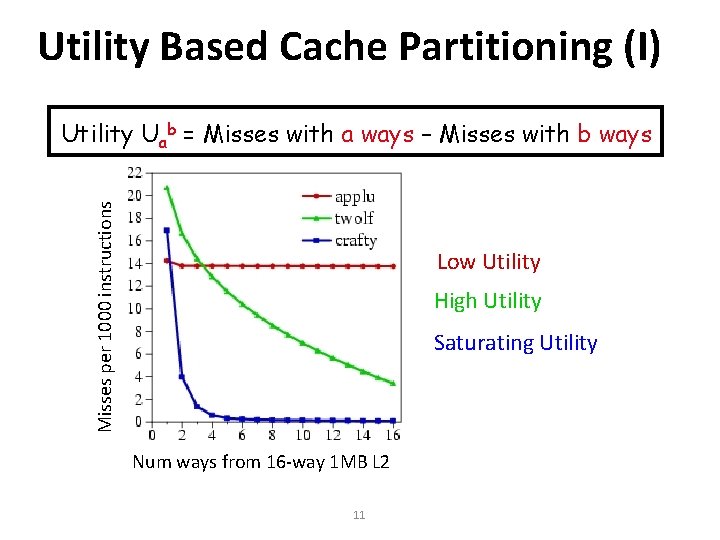

Utility Based Cache Partitioning (II)

[Figure: misses per 1000 instructions (MPKI) for equake and vpr, with the LRU and UTIL allocations marked]

Idea: Give more cache to the application that benefits more from cache

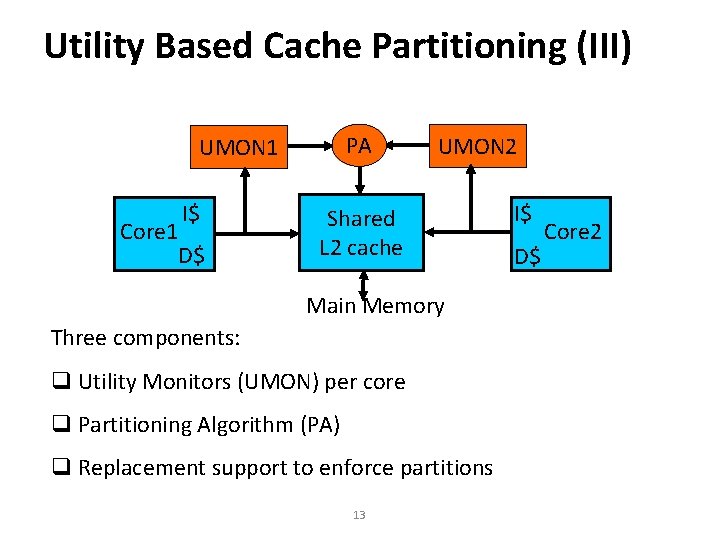

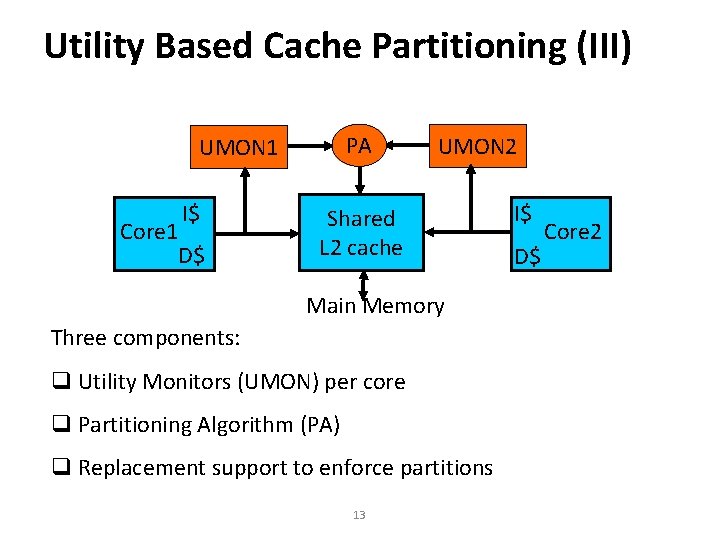

Utility Based Cache Partitioning (III)

[Figure: Core 1 and Core 2, each with I$ and D$, share an L2 cache backed by main memory; UMON 1 and UMON 2 feed the partitioning algorithm (PA)]

Three components:

- Utility Monitors (UMON) per core
- Partitioning Algorithm (PA)
- Replacement support to enforce partitions
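One way to picture the partitioning algorithm is a greedy loop that hands out ways one at a time to whichever application loses the most misses from one extra way. This is a simplified sketch under that assumption, not necessarily the algorithm used by Qureshi and Patt; `partition_ways` and both miss curves are hypothetical, and in hardware the curves would come from the per-core utility monitors.

```python
def partition_ways(miss_curves, total_ways):
    """Greedy sketch: give each way to the application with the largest
    marginal miss reduction.

    miss_curves[i][w] is the miss count of application i when it owns w ways
    (w = 0 .. total_ways). Every application starts with one way.
    """
    n = len(miss_curves)
    alloc = [1] * n
    for _ in range(total_ways - n):
        gains = [miss_curves[i][alloc[i]] - miss_curves[i][alloc[i] + 1]
                 for i in range(n)]
        winner = max(range(n), key=lambda i: gains[i])
        alloc[winner] += 1
    return alloc

# Hypothetical miss curves for an 8-way cache shared by two applications.
cache_friendly = [100, 80, 60, 45, 35, 28, 24, 22, 21]
streaming = [100, 98, 97, 97, 97, 97, 97, 97, 97]

print(partition_ways([cache_friendly, streaming], total_ways=8))
```

The streaming application barely benefits from extra ways, so the sketch leaves it with a single way and gives the rest to the cache-friendly one.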

Cache Capacity

- How to get more cache without making it physically larger?
- Idea: Data compression for on-chip caches

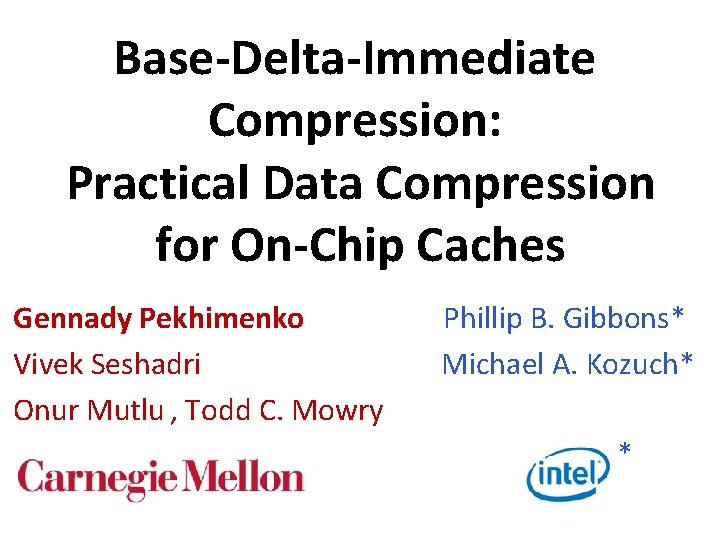

Base-Delta-Immediate Compression: Practical Data Compression for On-Chip Caches

Gennady Pekhimenko, Vivek Seshadri, Onur Mutlu, Todd C. Mowry, Phillip B. Gibbons*, Michael A. Kozuch*

Executive Summary

- Off-chip memory latency is high
  - Large caches can help, but at significant cost
- Compressing data in cache enables a larger cache at low cost
- Problem: Decompression is on the execution critical path
- Goal: Design a new compression scheme that has (1) low decompression latency, (2) low cost, (3) high compression ratio
- Observation: Many cache lines have low dynamic range data
- Key Idea: Encode cache lines as a base + multiple differences
- Solution: Base-Delta-Immediate compression with low decompression latency and high compression ratio
  - Outperforms three state-of-the-art compression mechanisms

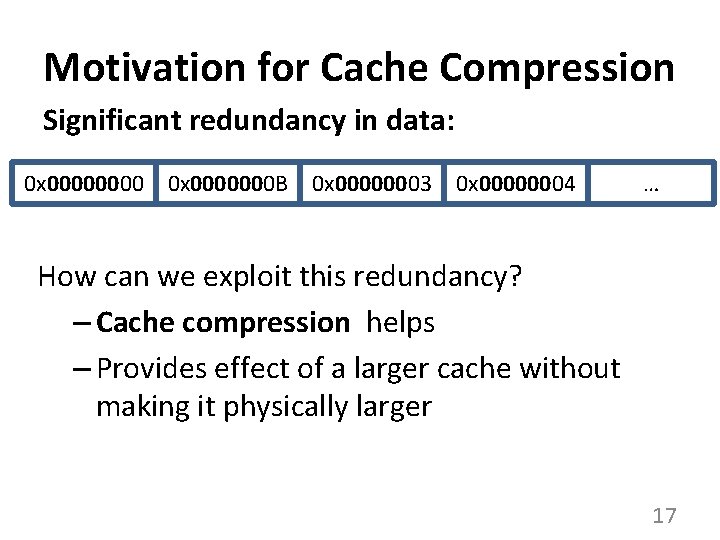

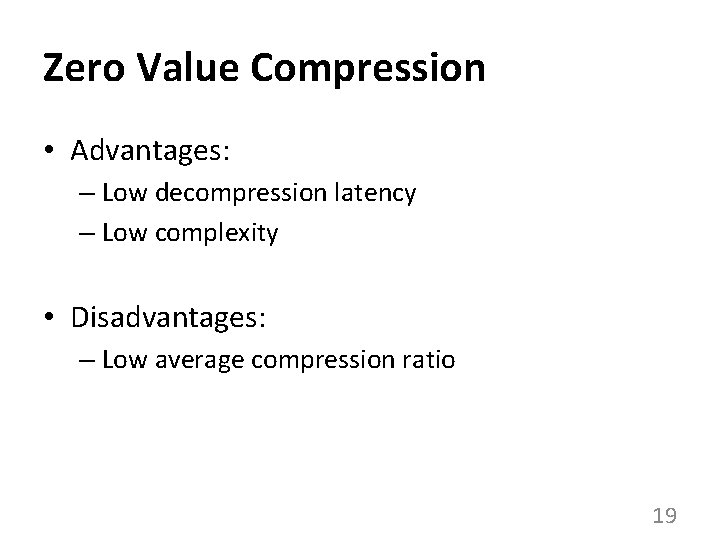

Motivation for Cache Compression

Significant redundancy in data: 0x0000000B 0x00000003 0x00000004 …

How can we exploit this redundancy?

- Cache compression helps
- Provides the effect of a larger cache without making it physically larger

Background on Cache Compression

[Figure: CPU, uncompressed L1 cache, compressed L2 cache; an L2 hit passes through decompression before reaching L1]

- Key requirements:
  - Fast (low decompression latency)
  - Simple (avoid complex hardware changes)
  - Effective (good compression ratio)

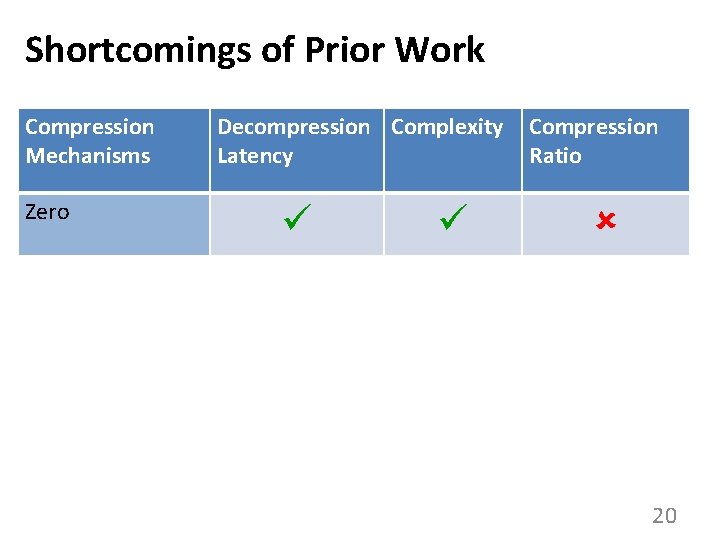

Zero Value Compression

- Advantages:
  - Low decompression latency
  - Low complexity
- Disadvantages:
  - Low average compression ratio

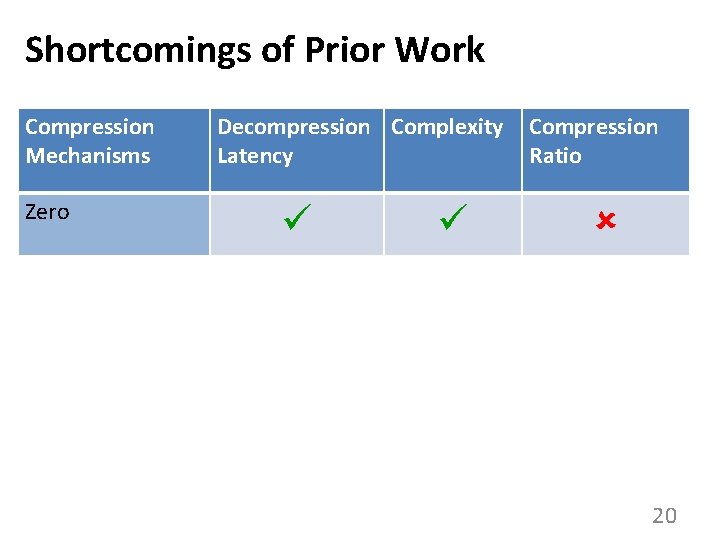

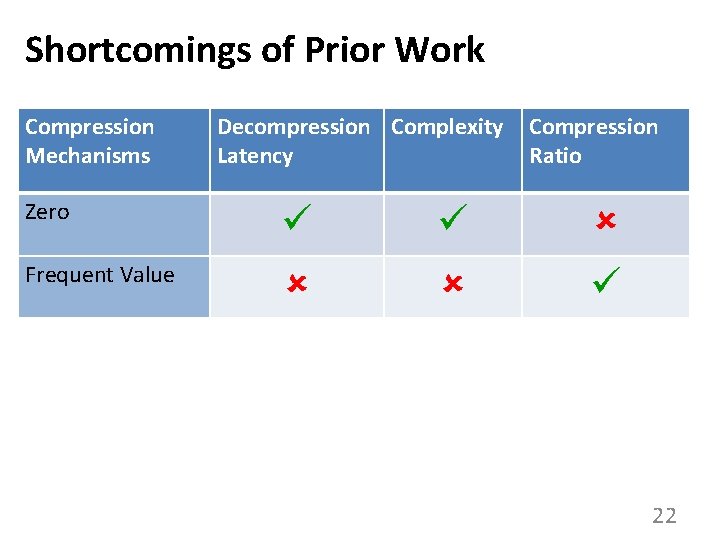

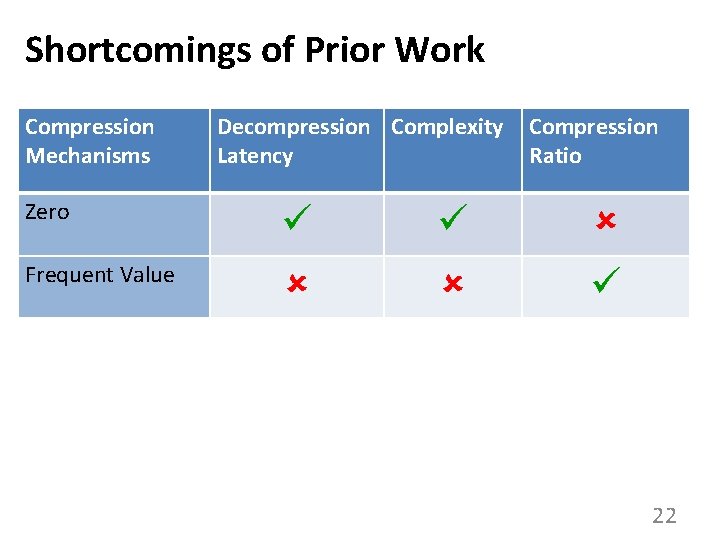

Shortcomings of Prior Work

| Compression mechanism | Decompression latency | Complexity | Compression ratio |
|---|---|---|---|
| Zero | Low | Low | Low |

Frequent Value Compression

- Idea: encode cache lines based on frequently occurring values
- Advantages:
  - Good compression ratio
- Disadvantages:
  - Needs profiling
  - High decompression latency
  - High complexity

Shortcomings of Prior Work

| Compression mechanism | Decompression latency | Complexity | Compression ratio |
|---|---|---|---|
| Zero | Low | Low | Low |
| Frequent Value | High | High | Good |

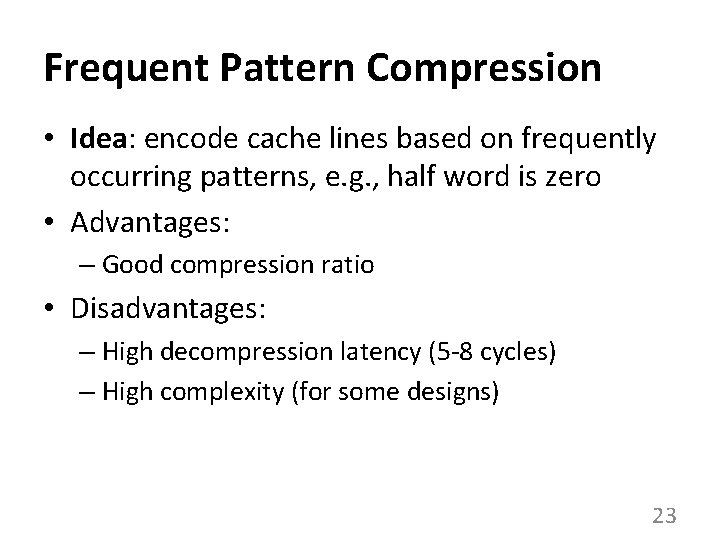

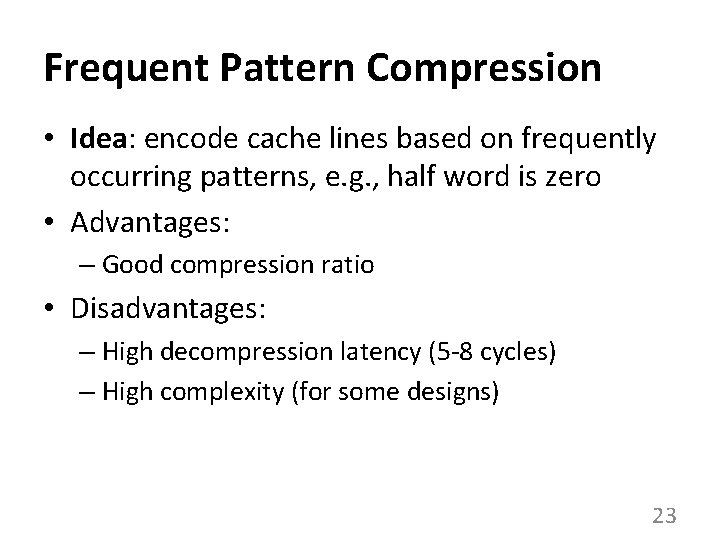

Frequent Pattern Compression

- Idea: encode cache lines based on frequently occurring patterns, e.g., half word is zero
- Advantages:
  - Good compression ratio
- Disadvantages:
  - High decompression latency (5-8 cycles)
  - High complexity (for some designs)

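Shortcomings of Prior Work

| Compression mechanism | Decompression latency | Complexity | Compression ratio |
|---|---|---|---|
| Zero | Low | Low | Low |
| Frequent Value | High | High | Good |
| Frequent Pattern | High (5-8 cycles) | High (for some designs) | Good |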

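Shortcomings of Prior Work

| Compression mechanism | Decompression latency | Complexity | Compression ratio |
|---|---|---|---|
| Zero | Low | Low | Low |
| Frequent Value | High | High | Good |
| Frequent Pattern | High (5-8 cycles) | High (for some designs) | Good |
| Our proposal: BΔI | Low | Low | High |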

Outline

- Motivation & Background
- Key Idea & Our Mechanism
- Evaluation
- Conclusion

Key Data Patterns in Real Applications

- Zero Values: initialization, sparse matrices, NULL pointers
  - 0x00000000 …
- Repeated Values: common initial values, adjacent pixels
  - 0x000000FF …
- Narrow Values: small values stored in a big data type
  - 0x0000000B 0x00000003 0x00000004 …
- Other Patterns: pointers to the same memory region
  - 0xC04039C0 0xC04039C8 0xC04039D0 0xC04039D8 …

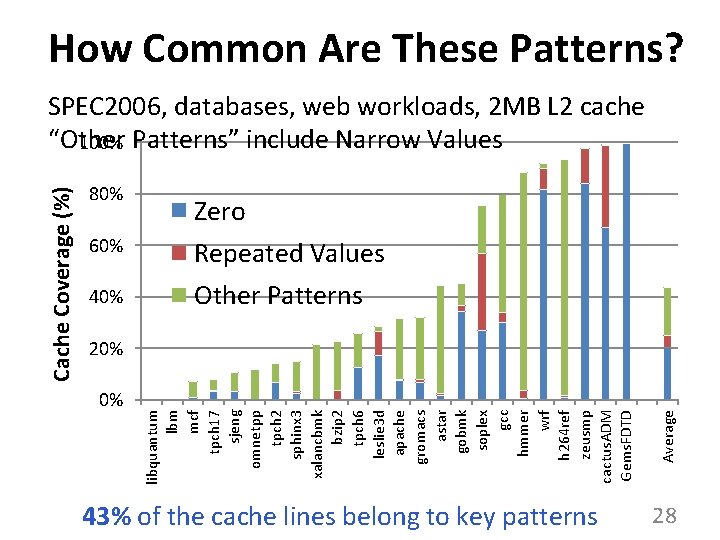

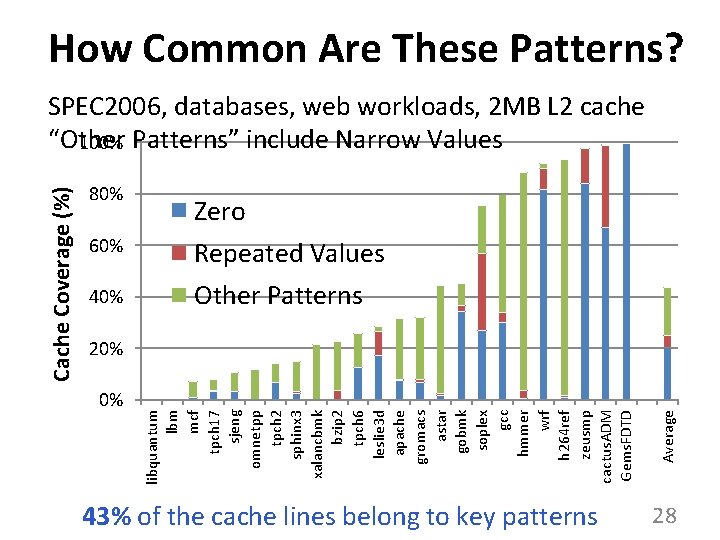

How Common Are These Patterns?

[Figure: cache coverage (%) of Zero, Repeated Values, and Other Patterns for each benchmark and on average; SPEC2006, databases, web workloads; 2 MB L2 cache. "Other Patterns" include Narrow Values.]

43% of the cache lines belong to key patterns

Key Data Patterns in Real Applications

- Zero Values: initialization, sparse matrices, NULL pointers
  - 0x00000000 …
- Repeated Values: common initial values, adjacent pixels
  - 0x000000FF …
- Narrow Values: small values stored in a big data type
  - 0x0000000B 0x00000003 0x00000004 …
- Other Patterns: pointers to the same memory region
  - 0xC04039C0 0xC04039C8 0xC04039D0 0xC04039D8 …

Low Dynamic Range: Differences between values are significantly smaller than the values themselves

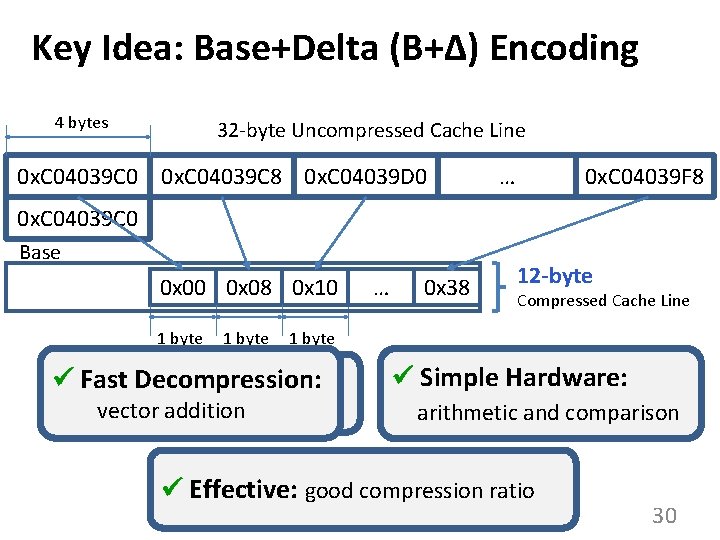

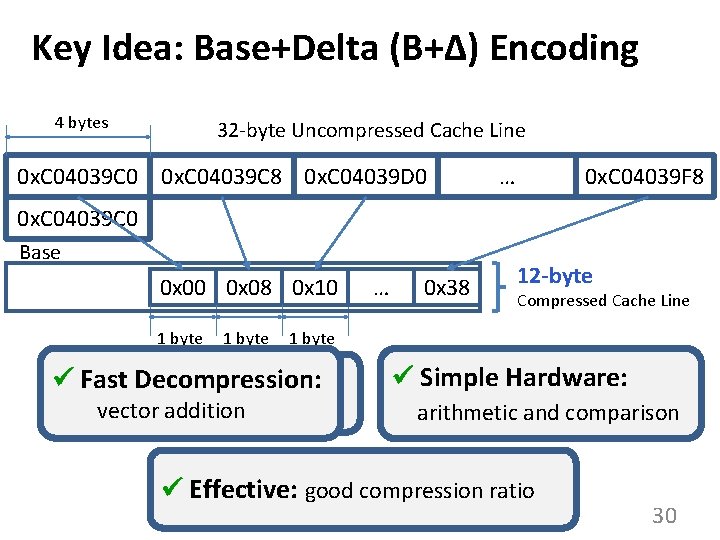

Key Idea: Base+Delta (B+Δ) Encoding

- 32-byte uncompressed cache line (4-byte values): 0xC04039C0 0xC04039C8 0xC04039D0 … 0xC04039F8
- 12-byte compressed cache line: 4-byte base 0xC04039C0 followed by 1-byte deltas 0x00 0x08 0x10 … 0x38
- 20 bytes saved
- Fast decompression: vector addition
- Simple hardware: arithmetic and comparison
- Effective: good compression ratio
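The arithmetic on this slide can be checked with a short Python sketch. It assumes the eight 4-byte values step by 8 from 0xC04039C0 to 0xC04039F8 (consistent with the deltas shown) and treats deltas as signed, which is an assumption of this sketch; `base_delta_compress` and `compressed_size` are illustrative names.

```python
def base_delta_compress(values, delta_bytes=1):
    """Return (base, deltas) if every delta from the first value fits in
    delta_bytes (signed range, an assumption here), else None."""
    base = values[0]
    deltas = [v - base for v in values]
    limit = 1 << (8 * delta_bytes - 1)
    if all(-limit <= d < limit for d in deltas):
        return base, deltas
    return None

def compressed_size(num_values, base_bytes, delta_bytes):
    """One base plus one delta per value."""
    return base_bytes + num_values * delta_bytes

line = [0xC04039C0 + 8 * i for i in range(8)]   # 8 values x 4 bytes = 32 bytes
base, deltas = base_delta_compress(line)
size = compressed_size(len(line), base_bytes=4, delta_bytes=1)
print(hex(base), [hex(d) for d in deltas], size, 32 - size)
```

The 4-byte base plus eight 1-byte deltas gives the 12-byte compressed line and the 20 bytes saved quoted on the slide.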

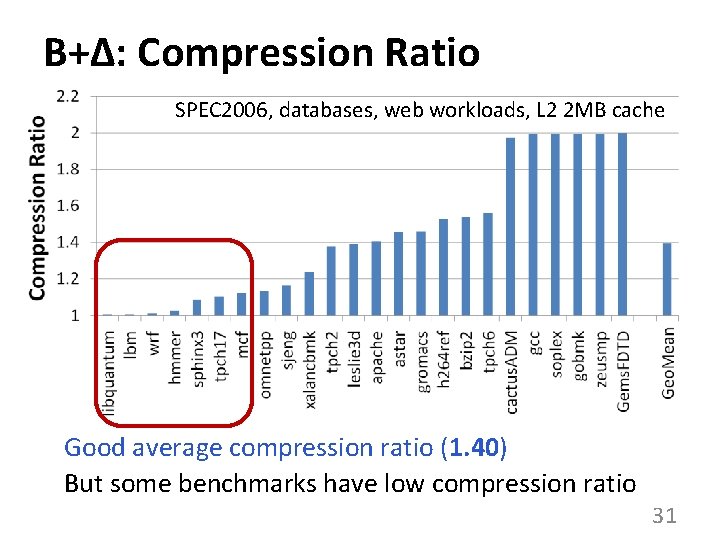

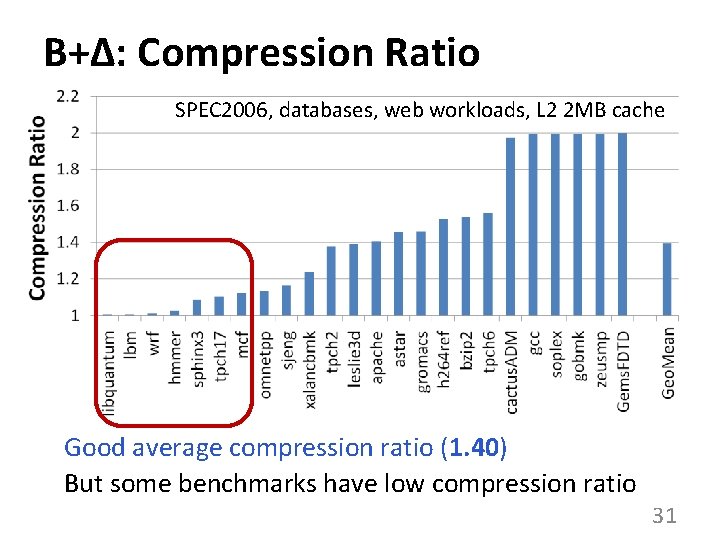

B+Δ: Compression Ratio

[Figure: B+Δ compression ratio per benchmark; SPEC2006, databases, web workloads; 2 MB L2 cache]

- Good average compression ratio (1.40)
- But some benchmarks have a low compression ratio

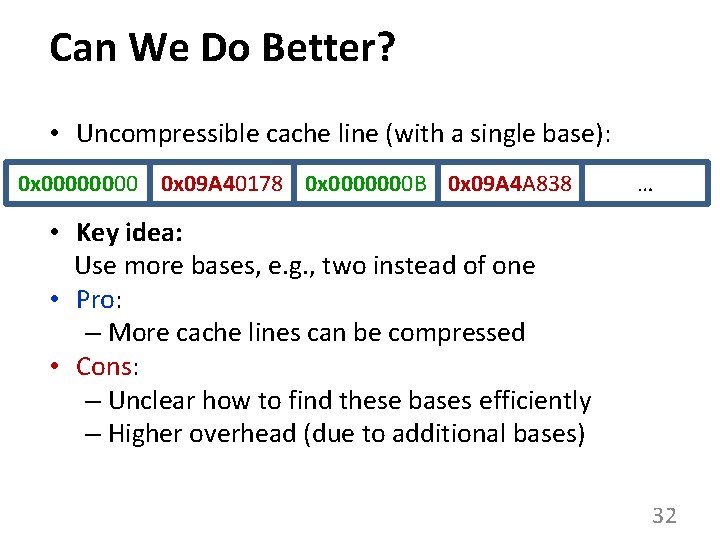

Can We Do Better?

- Uncompressible cache line (with a single base): 0x0000 0x09A40178 0x0000000B 0x09A4A838 …
- Key idea: Use more bases, e.g., two instead of one
- Pro:
  - More cache lines can be compressed
- Cons:
  - Unclear how to find these bases efficiently
  - Higher overhead (due to additional bases)

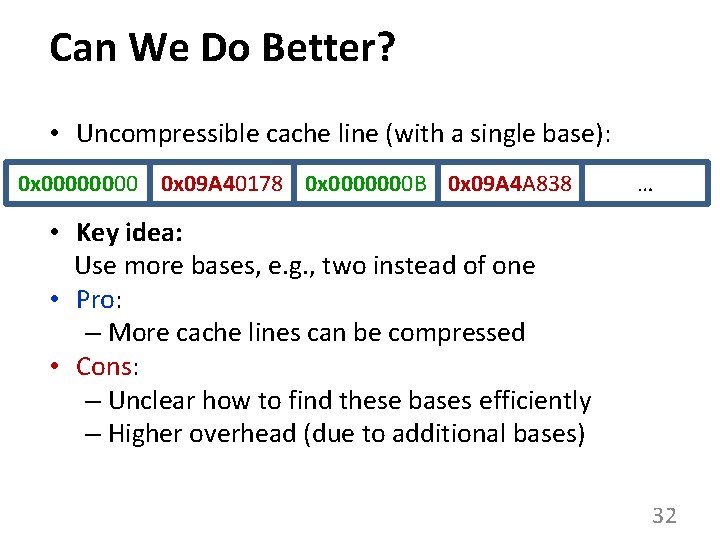

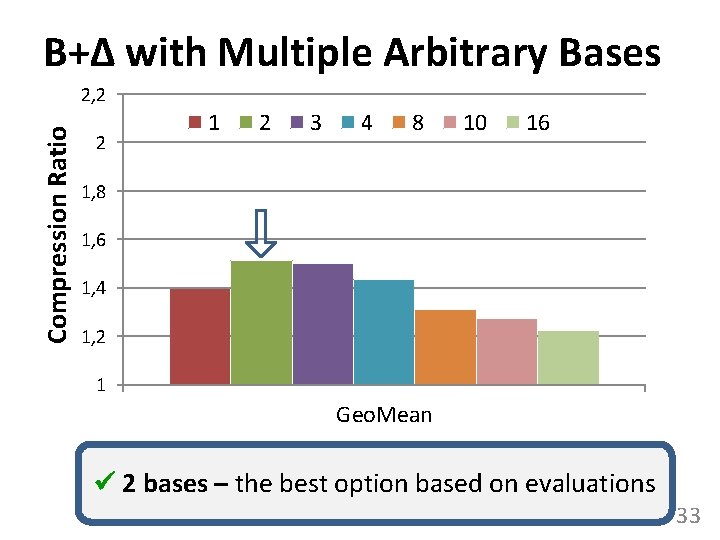

B+Δ with Multiple Arbitrary Bases

[Figure: geometric-mean compression ratio for 1, 2, 3, 4, 8, 10, and 16 bases]

2 bases: the best option based on evaluations

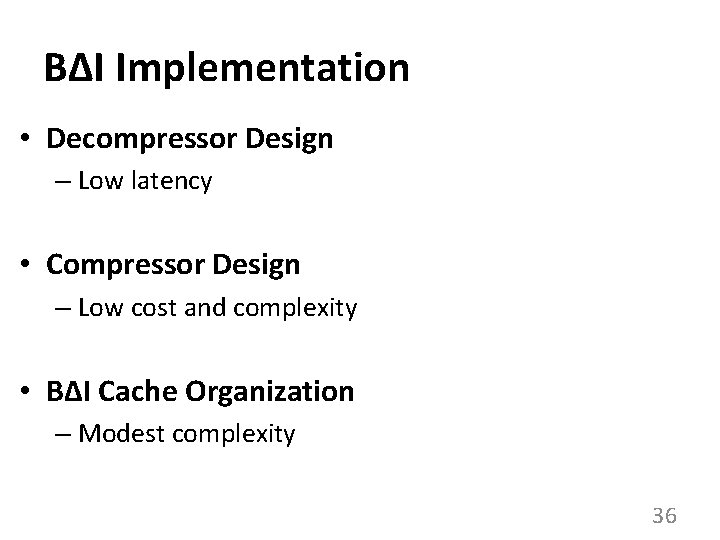

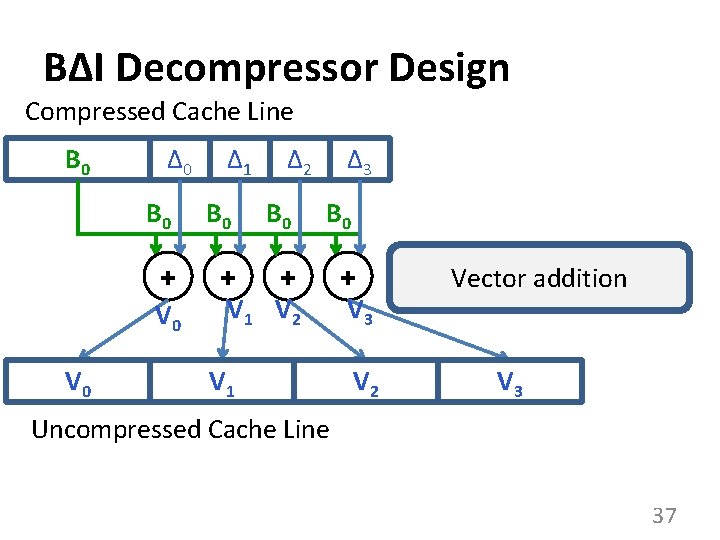

How to Find Two Bases Efficiently?

1. First base: first element in the cache line (the Base+Delta part)
2. Second base: implicit base of 0 (the Immediate part)

Advantages over 2 arbitrary bases:

- Better compression ratio
- Simpler compression logic

Together these form Base-Delta-Immediate (BΔI) Compression
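A minimal sketch of the two-base rule, following the slide's wording: the first base is the first element of the line and the second base is an implicit 0. The per-element flag saying which base was used and the signed delta range are assumptions of this sketch, and the input values are made up to mix small immediates with nearby pointers.

```python
def bdi_compress(values, delta_bytes=1):
    """Encode each value as a small delta from 0 (immediate) or from the
    first element (base). Returns (base, [(uses_base, delta), ...]) or None."""
    limit = 1 << (8 * delta_bytes - 1)

    def fits(d):
        return -limit <= d < limit

    base = values[0]
    encoded = []
    for v in values:
        if fits(v):                    # immediate part: delta from implicit base 0
            encoded.append((0, v))
        elif fits(v - base):           # base+delta part: delta from first element
            encoded.append((1, v - base))
        else:
            return None                # this delta size cannot encode the line
    return base, encoded

# Hypothetical line mixing pointers into one region with small integers.
line = [0x09A40178, 0x0000000B, 0x09A40190, 0x00000003]
print(bdi_compress(line))
```

A single arbitrary base could not cover both the pointers and the small integers here with 1-byte deltas, while the implicit zero base handles the small integers at no extra storage cost for a second base.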

B+Δ (with two arbitrary bases) vs. BΔI

Average compression ratio is close, but BΔI is simpler

BΔI Implementation

- Decompressor Design
  - Low latency
- Compressor Design
  - Low cost and complexity
- BΔI Cache Organization
  - Modest complexity

BΔI Decompressor Design

[Figure: the compressed cache line holds the base B0 and the deltas; a vector addition of B0 to each delta produces the values V0, V1, V2, V3 of the uncompressed cache line]
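In software terms the decompressor is a single element-wise addition. The sketch below uses the base and a few deltas from the earlier B+Δ example; `decompress` is an illustrative name, and a hardware decompressor would perform all additions at once rather than in a loop.

```python
def decompress(base, deltas):
    """Rebuild every value as base + delta (a vector addition in hardware)."""
    return [base + d for d in deltas]

values = decompress(0xC04039C0, [0x00, 0x08, 0x10, 0x38])
print([hex(v) for v in values])
```

Because each output depends only on the base and its own delta, the additions are independent of one another, which is what keeps decompression latency low.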

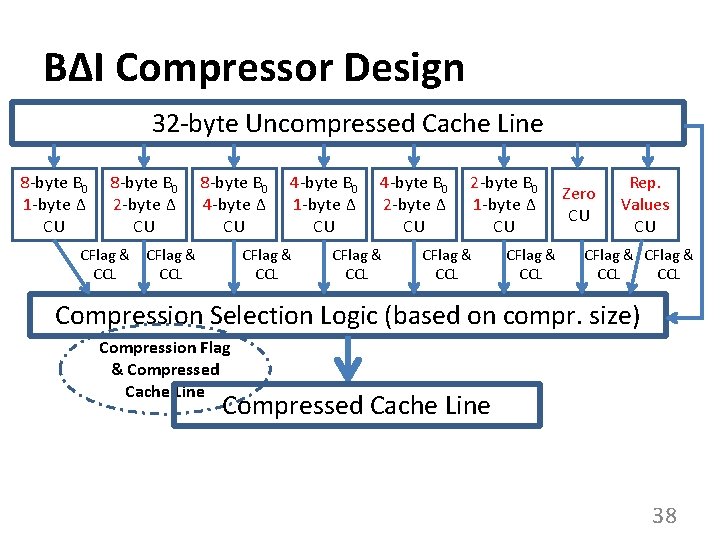

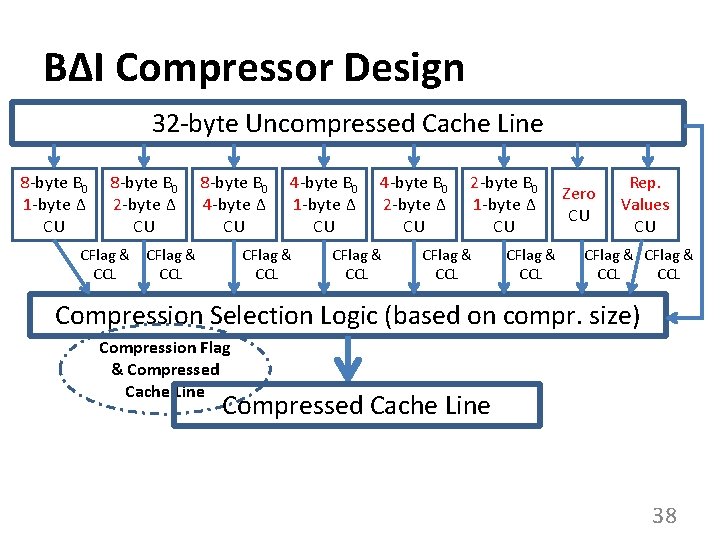

BΔI Compressor Design

The 32-byte uncompressed cache line is fed to eight compression units (CUs):

- 8-byte B0, 1-byte Δ
- 8-byte B0, 2-byte Δ
- 8-byte B0, 4-byte Δ
- 4-byte B0, 1-byte Δ
- 4-byte B0, 2-byte Δ
- 2-byte B0, 1-byte Δ
- Zero
- Repeated values

Each CU produces a compression flag (CFlag) and a compressed cache line (CCL). Compression selection logic, based on compressed size, outputs the final compression flag and compressed cache line.
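The selection step can be sketched as "try every unit, keep the smallest result". In this sketch the little-endian byte order, the signed delta range, the 1-byte size for an all-zero line, and the 8-byte size for a repeated-value line are assumptions, and each unit uses only the single base B0 (no implicit zero base), which is a simplification.

```python
# (base bytes, delta bytes) for the six base+delta units listed on the slide.
CONFIGS = [(8, 1), (8, 2), (8, 4), (4, 1), (4, 2), (2, 1)]

def split(line, width):
    """Interpret the line as consecutive little-endian unsigned values."""
    return [int.from_bytes(line[i:i + width], "little")
            for i in range(0, len(line), width)]

def unit_size(line, base_bytes, delta_bytes):
    """Compressed size in bytes if this unit succeeds, else None."""
    values = split(line, base_bytes)
    base = values[0]
    limit = 1 << (8 * delta_bytes - 1)   # signed delta range (assumption)
    if all(-limit <= v - base < limit for v in values):
        return base_bytes + len(values) * delta_bytes
    return None

def select_compression(line):
    """Return (encoding name, size) of the smallest successful encoding."""
    candidates = [("uncompressed", len(line))]
    if all(b == 0 for b in line):
        candidates.append(("zero", 1))        # assumed compressed size
    chunks = split(line, 8)
    if all(c == chunks[0] for c in chunks):
        candidates.append(("repeated", 8))    # assumed compressed size
    for base_bytes, delta_bytes in CONFIGS:
        size = unit_size(line, base_bytes, delta_bytes)
        if size is not None:
            candidates.append((f"B{base_bytes}D{delta_bytes}", size))
    return min(candidates, key=lambda c: c[1])

pointers = b"".join((0xC04039C0 + 8 * i).to_bytes(4, "little") for i in range(8))
print(select_compression(pointers))
```

For the pointer line, only the 4-byte-base units succeed, and the 1-byte-delta variant wins because it produces the smaller line.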

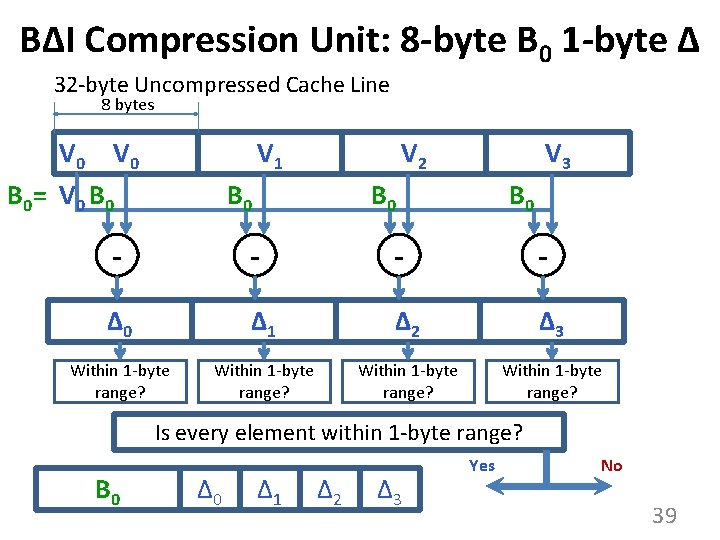

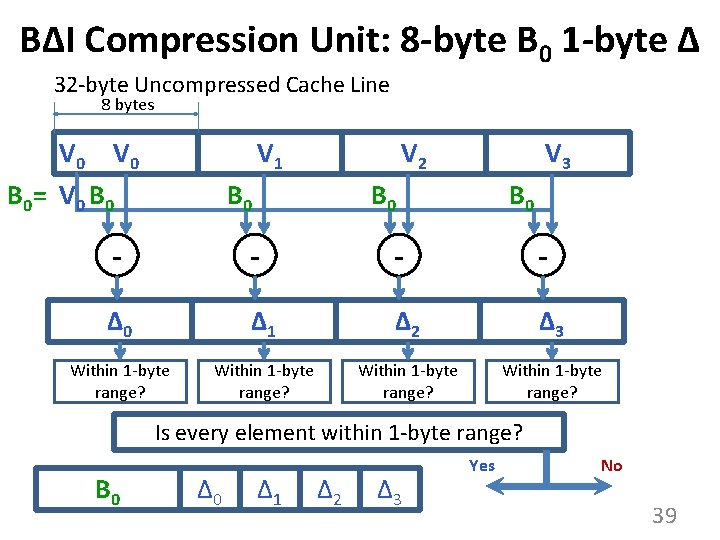

BΔI Compression Unit: 8-byte B0, 1-byte Δ

- The 32-byte uncompressed cache line is split into 8-byte values V0, V1, V2, V3
- B0 = V0
- Δi = Vi - B0 for every element (Δ0, Δ1, Δ2, Δ3)
- Is every element within 1-byte range?
  - Yes: output B0 and the deltas
  - No: this unit does not compress the line

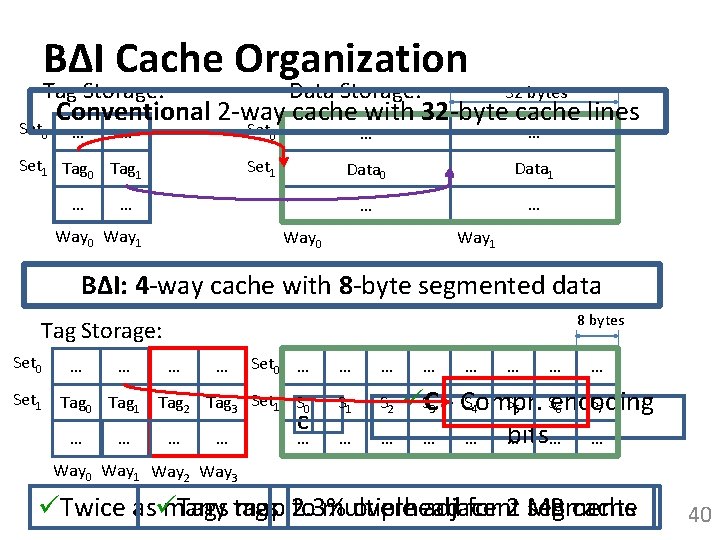

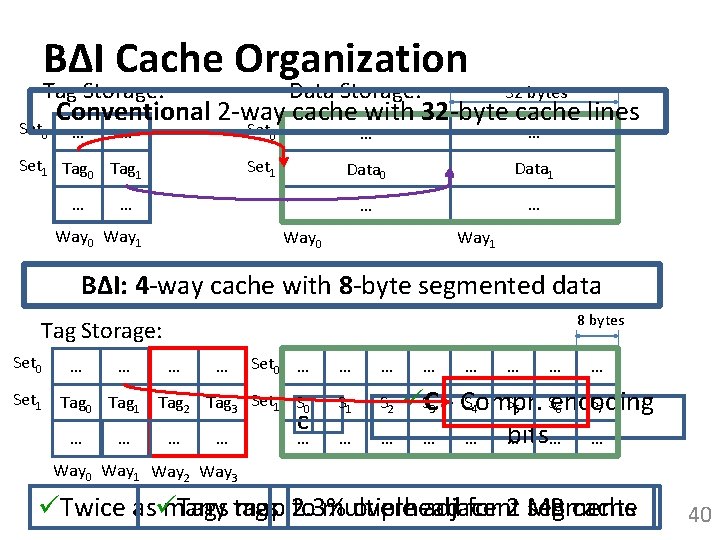

BΔI Cache Organization

- Conventional 2-way cache with 32-byte cache lines: per set, tag storage holds Tag0 and Tag1, and data storage holds Data0 and Data1 (32 bytes each)
- BΔI: 4-way cache with 8-byte segmented data: per set, tag storage holds Tag0 to Tag3, each with compression encoding bits (C), and data storage holds 8-byte segments S0 to S7
- Twice as many tags
- Tags map to multiple adjacent segments
- 2.3% overhead for 2 MB cache


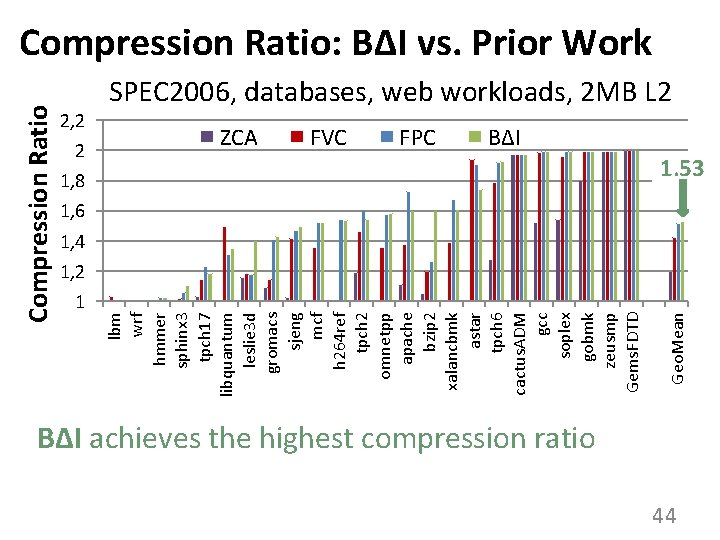

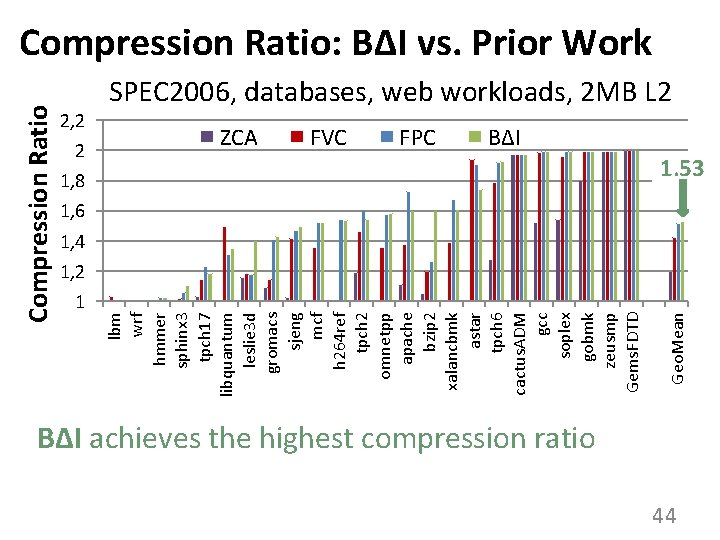

Qualitative Comparison with Prior Work

- Zero-based designs
  - ZCA [Dusser+, ICS'09]: zero-content augmented cache
  - ZVC [Islam+, PACT'09]: zero-value cancelling
  - Limited applicability (only zero values)
- FVC [Yang+, MICRO'00]: frequent value compression
  - High decompression latency and complexity
- Pattern-based compression designs
  - FPC [Alameldeen+, ISCA'04]: frequent pattern compression
    - High decompression latency (5 cycles) and complexity
  - C-pack [Chen+, T-VLSI Systems'10]: practical implementation of FPC-like algorithm
    - High decompression latency (8 cycles)

Outline

- Motivation & Background
- Key Idea & Our Mechanism
- Evaluation
- Conclusion


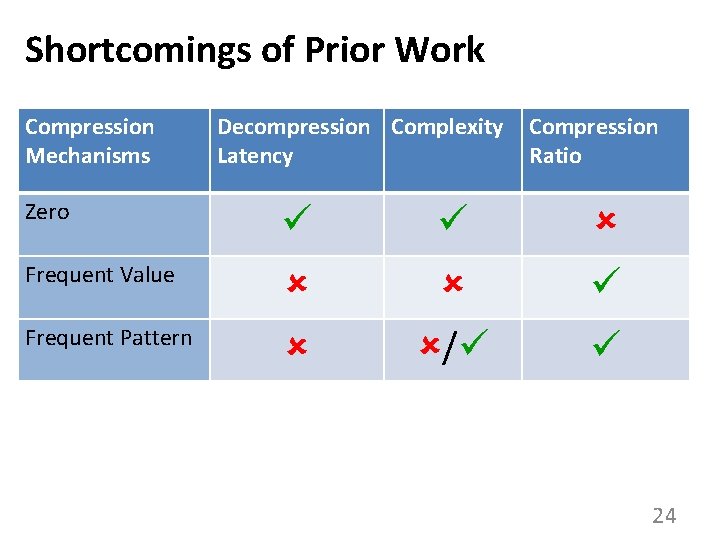

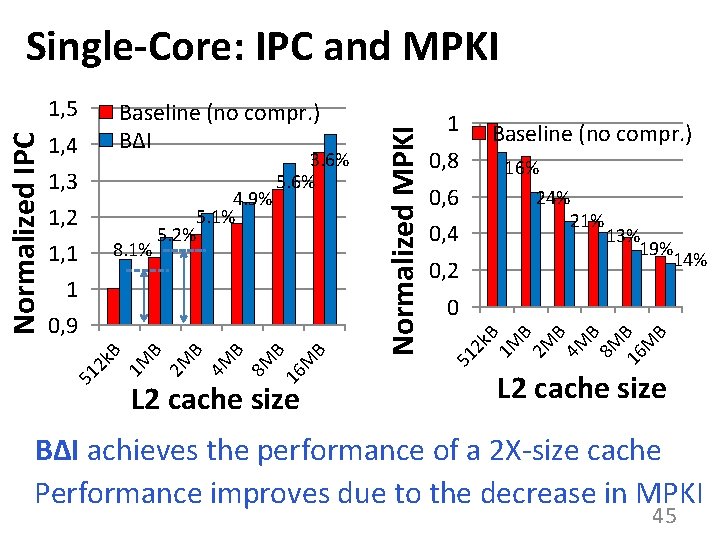

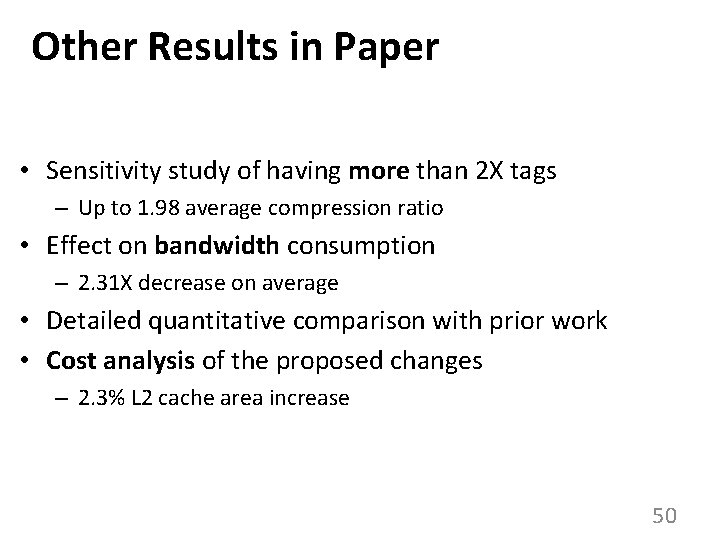

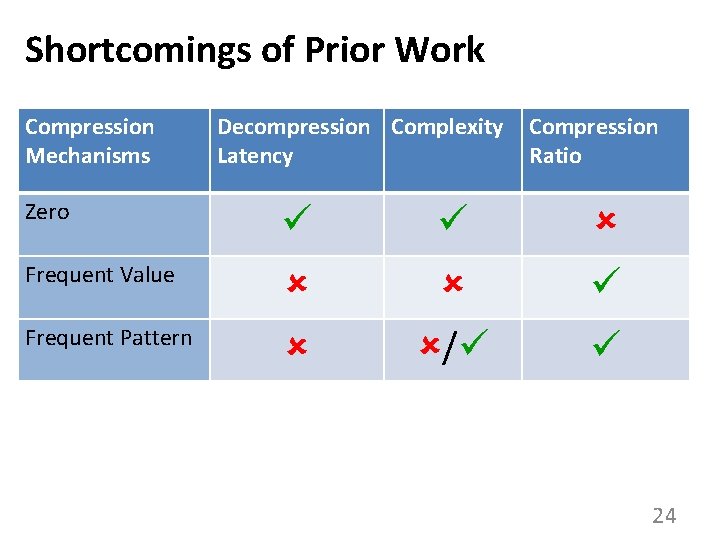

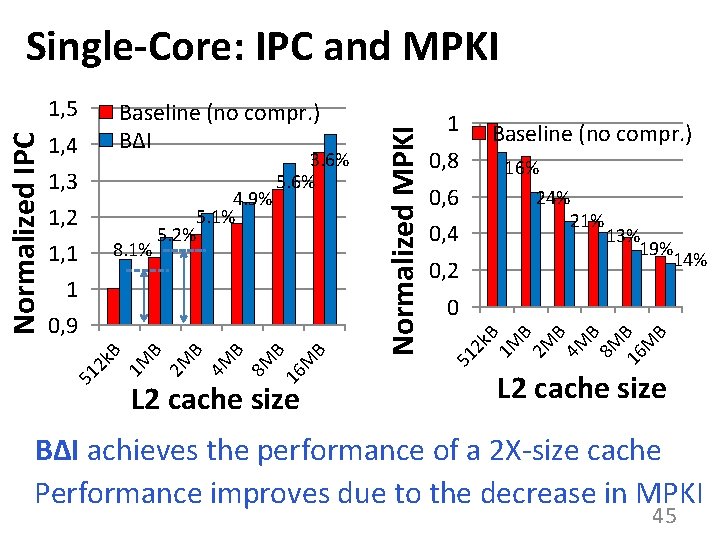

Methodology

- Simulator
  - x86 event-driven simulator based on Simics [Magnusson+, Computer'02]
- Workloads
  - SPEC2006 benchmarks, TPC, Apache web server
  - 1-4 core simulations for 1 billion representative instructions
- System Parameters
  - L1/L2/L3 cache latencies from CACTI [Thoziyoor+, ISCA'08]
  - 4 GHz, x86 in-order core, 512 kB - 16 MB L2, simple memory model (300-cycle latency for row-misses)

Compression Ratio: BΔI vs. Prior Work

[Figure: compression ratio of ZCA, FVC, FPC, and BΔI for each benchmark and the geometric mean; SPEC2006, databases, web workloads; 2 MB L2. BΔI geometric mean: 1.53]

BΔI achieves the highest compression ratio

Single-Core: IPC and MPKI

[Figure: normalized IPC and normalized MPKI for the baseline (no compression) and BΔI at L2 cache sizes of 512 kB, 1 MB, 2 MB, 4 MB, 8 MB, and 16 MB. IPC panel labels: 8.1%, 4.9%, 5.1%, 5.2%, 3.6%, 5.6%. MPKI panel labels: 16%, 24%, 21%, 13%, 19%, 14%.]

- BΔI achieves the performance of a 2X-size cache
- Performance improves due to the decrease in MPKI

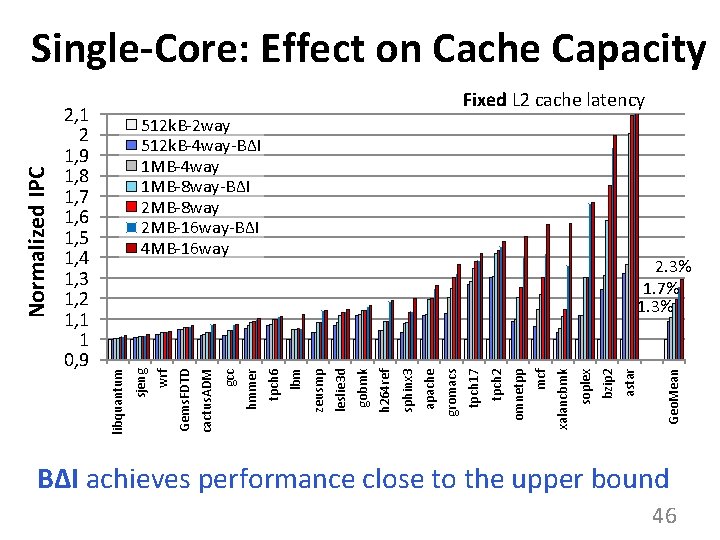

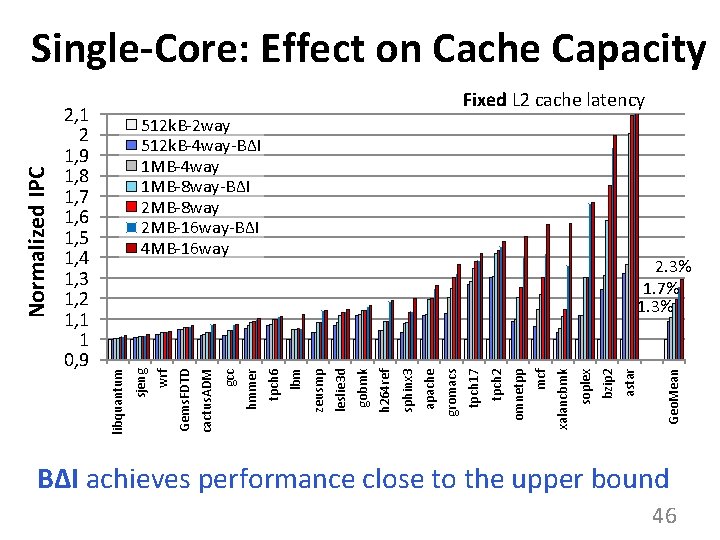

Single-Core: Effect on Cache Capacity

[Figure: normalized IPC per benchmark and geometric mean, with fixed L2 cache latency, for 512 kB 2-way, 512 kB 4-way BΔI, 1 MB 4-way, 1 MB 8-way BΔI, 2 MB 8-way, 2 MB 16-way BΔI, and 4 MB 16-way. Geometric-mean labels: 2.3%, 1.7%, 1.3%.]

BΔI achieves performance close to the upper bound

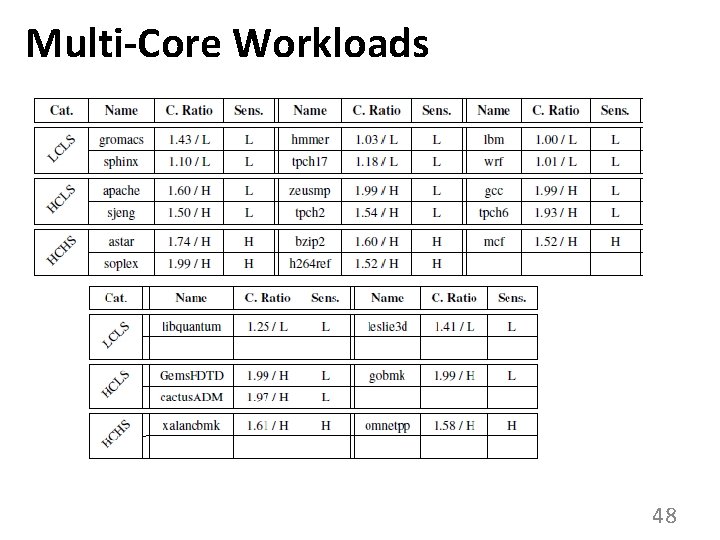

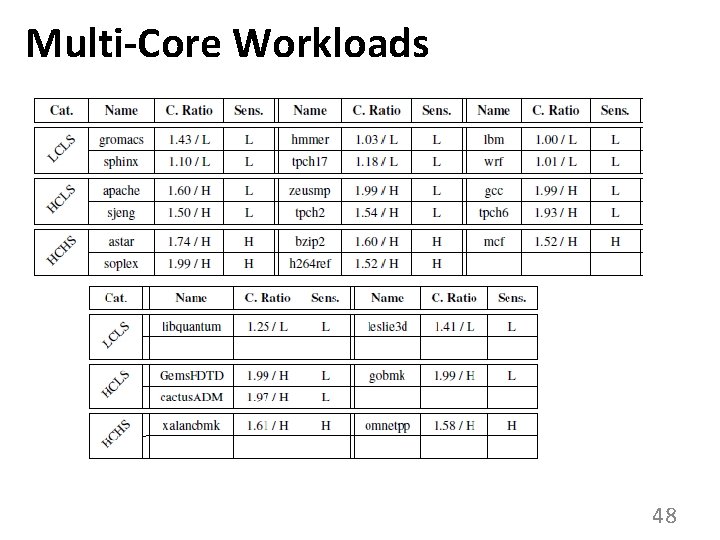

Multi-Core Workloads

- Application classification based on:
  - Compressibility: effective cache size increase (Low Compr. (LC) < 1.40, High Compr. (HC) >= 1.40)
  - Sensitivity: performance gain with more cache (Low Sens. (LS) < 1.10, High Sens. (HS) >= 1.10; 512 kB -> 2 MB)
- Three classes of applications:
  - LCLS, HCLS, HCHS; no LCHS applications
- For 2-core: random mixes of each possible class pair (20 each, 120 total workloads)
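The thresholds and the workload count can be sanity-checked with a short sketch. It assumes the three observed classes are LCLS, HCLS, and HCHS and that class pairs are unordered, which is what makes 20 mixes per pair add up to 120; `classify` and its argument names are illustrative.

```python
from itertools import combinations_with_replacement

def classify(compression_ratio, speedup_512kb_to_2mb):
    """Label an application by compressibility (LC/HC) and sensitivity (LS/HS)."""
    compr = "HC" if compression_ratio >= 1.40 else "LC"
    sens = "HS" if speedup_512kb_to_2mb >= 1.10 else "LS"
    return compr + sens

classes = ["LCLS", "HCLS", "HCHS"]                       # no LCHS applications
pairs = list(combinations_with_replacement(classes, 2))  # unordered 2-core class pairs
print(pairs, len(pairs) * 20)
```

Three classes give six unordered pairs, and 20 random mixes per pair yields the 120 two-core workloads.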

Multi-Core Workloads

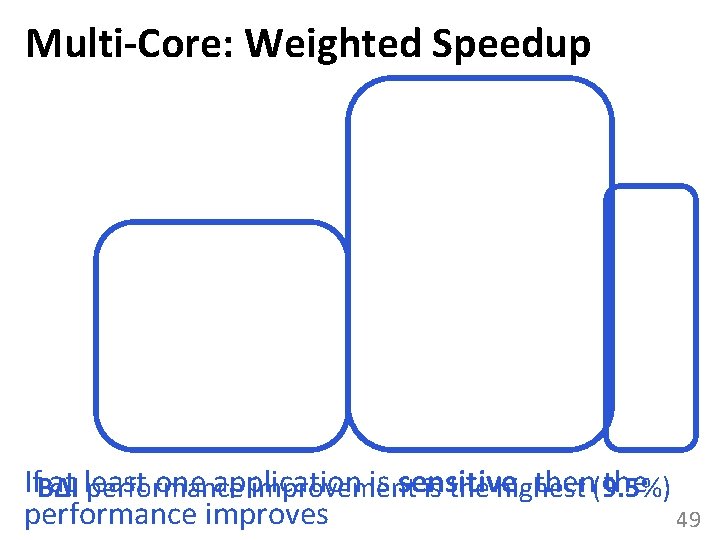

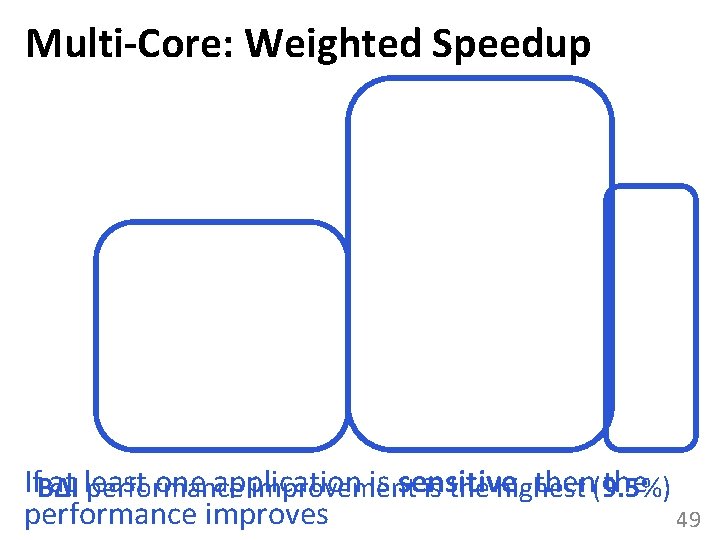

Multi-Core: Weighted Speedup

- BΔI performance improvement is the highest (9.5%)
- If at least one application is sensitive, then the performance improves

Other Results in Paper

- Sensitivity study of having more than 2X tags
  - Up to 1.98 average compression ratio
- Effect on bandwidth consumption
  - 2.31X decrease on average
- Detailed quantitative comparison with prior work
- Cost analysis of the proposed changes
  - 2.3% L2 cache area increase

Conclusion

- A new Base-Delta-Immediate compression mechanism
- Key insight: many cache lines can be efficiently represented using base + delta encoding
- Key properties:
  - Low latency decompression
  - Simple hardware implementation
  - High compression ratio with high coverage
- Improves cache hit ratio and performance of both single-core and multi-core workloads
  - Outperforms state-of-the-art cache compression techniques: FVC and FPC

Linearly Compressed Pages: A Main Memory Compression Framework with Low Complexity and Low Latency Gennady Pekhimenko, Vivek Seshadri, Yoongu Kim, Hongyi Xin, Onur Mutlu, Phillip B. Gibbons*, Michael A. Kozuch*, Todd C. Mowry

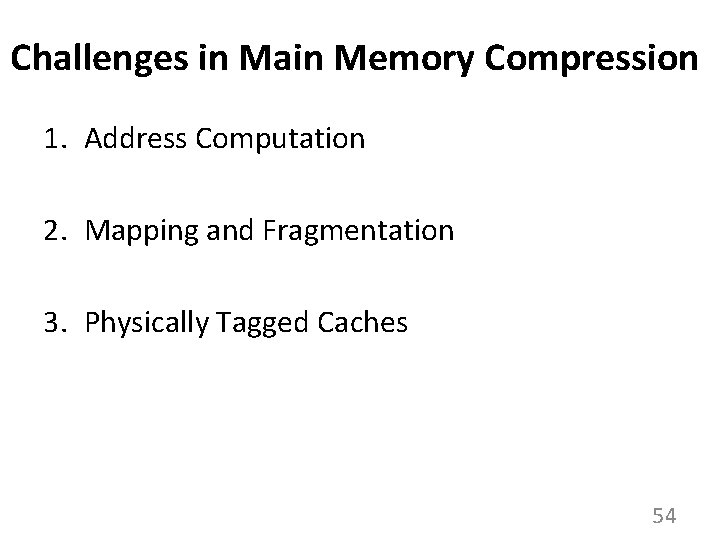

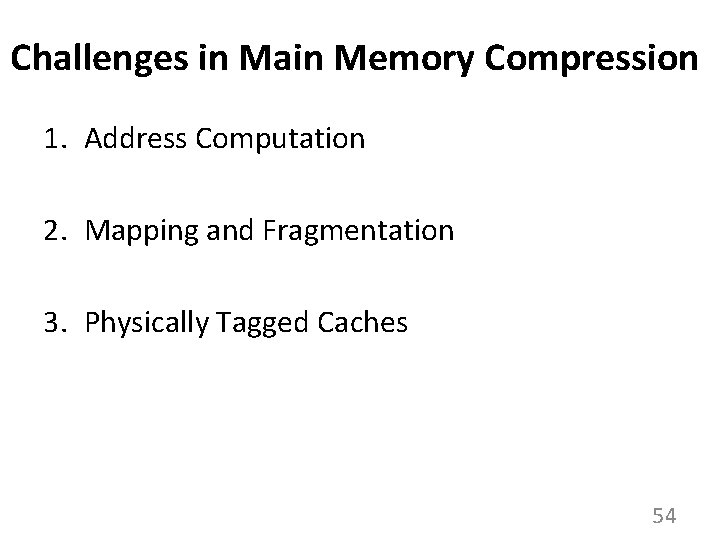

Executive Summary

- Main memory is a limited shared resource
- Observation: Significant data redundancy
- Idea: Compress data in main memory
- Problem: How to avoid latency increase?
- Solution: Linearly Compressed Pages (LCP): fixed-size cache line granularity compression
  1. Increases capacity (69% on average)
  2. Decreases bandwidth consumption (46%)
  3. Improves overall performance (9.5%)

Challenges in Main Memory Compression

1. Address Computation
2. Mapping and Fragmentation
3. Physically Tagged Caches

Address Computation

[Figure: in an uncompressed page, 64 B cache lines L0, L1, L2, ..., LN-1 start at address offsets 0, 64, 128, ..., (N-1)*64; in a compressed page, L0 starts at offset 0 but the offsets of L1, L2, ..., LN-1 are unknown (?)]

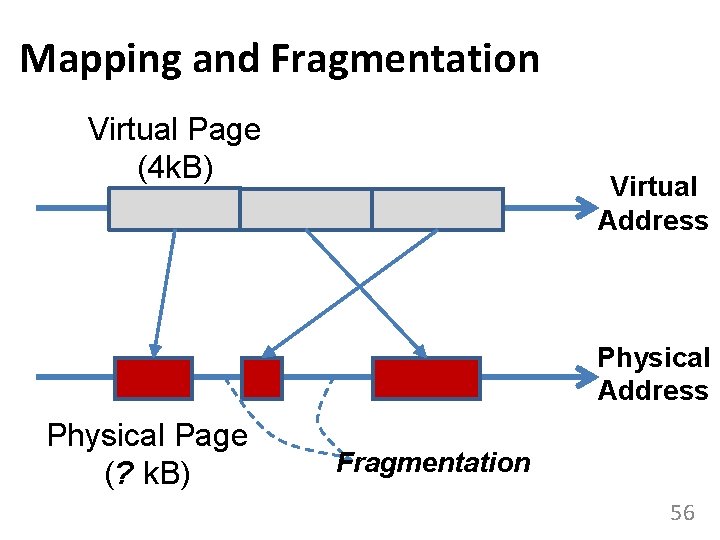

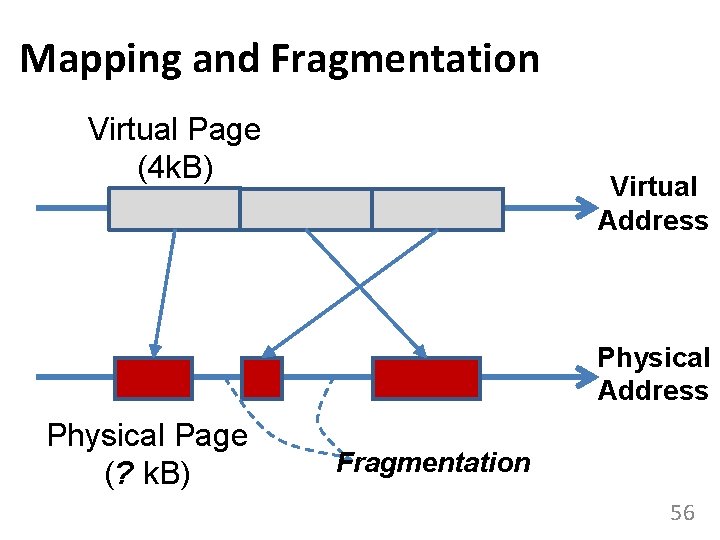

Mapping and Fragmentation

[Figure: a 4 kB virtual page is mapped through the virtual address to a physical page of unknown size (? kB), which causes fragmentation]

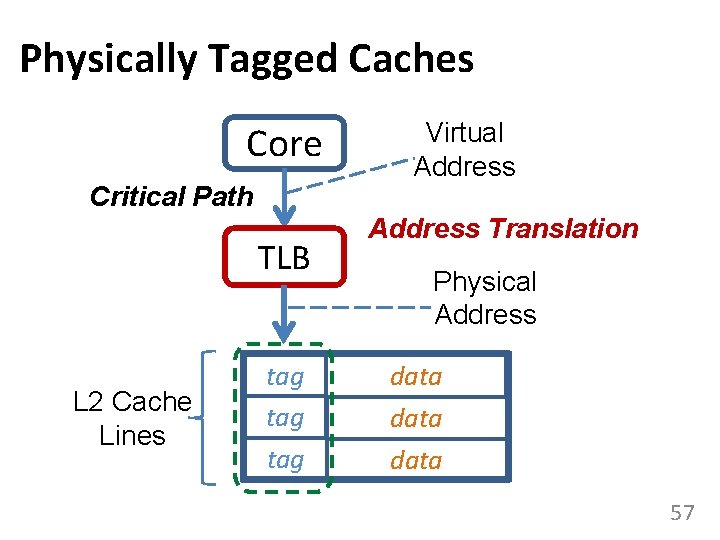

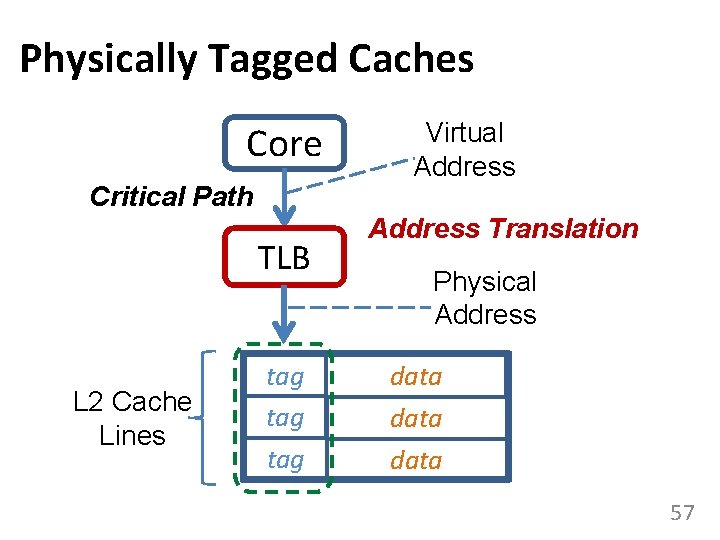

Physically Tagged Caches

[Figure: on the core's critical path, the TLB translates the virtual address into the physical address that is matched against the tags of the L2 cache lines (tag, data)]

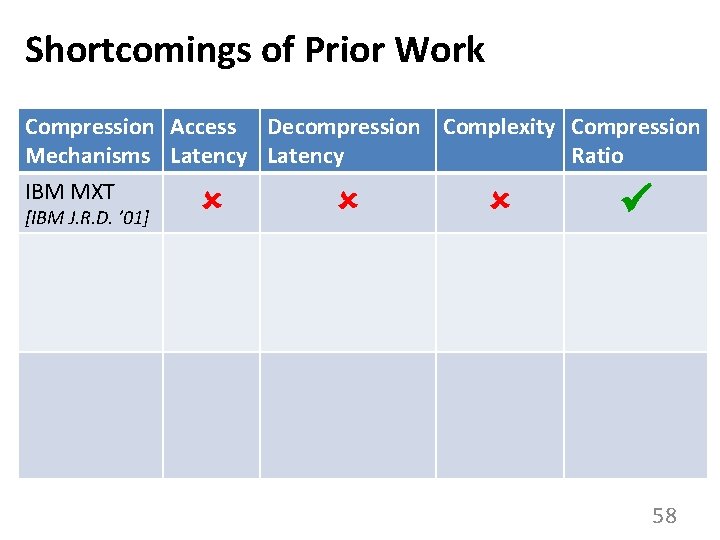

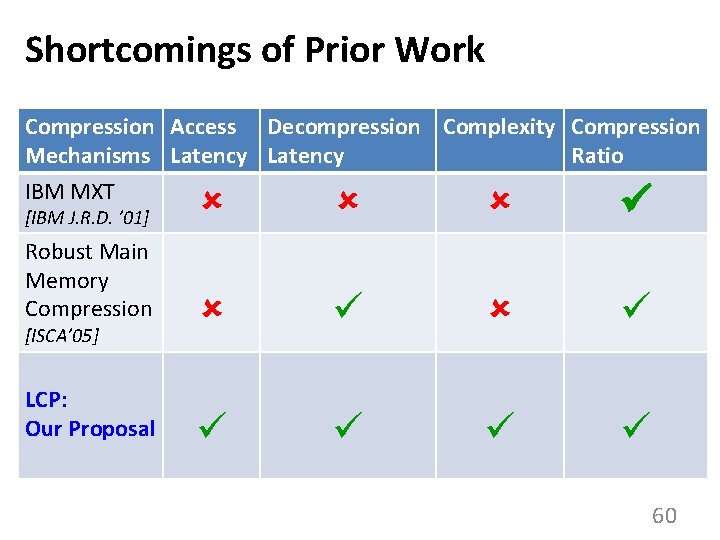

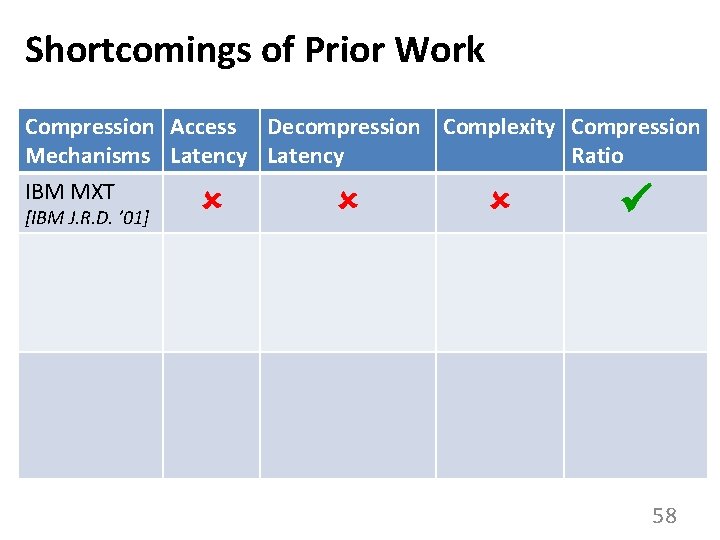

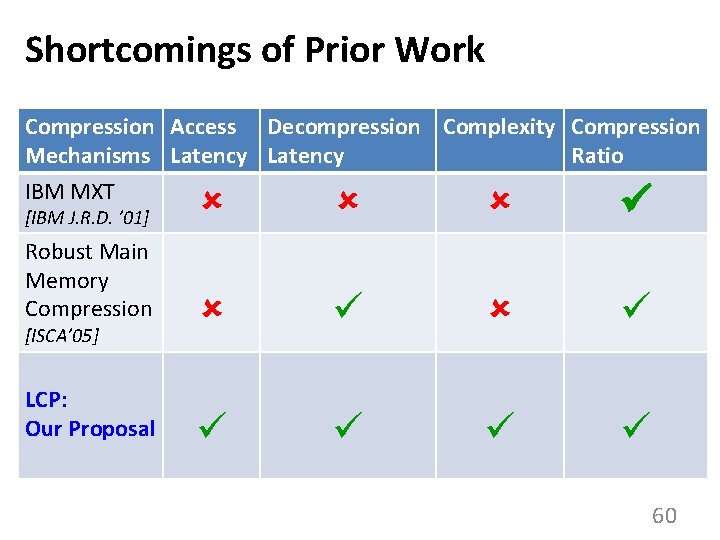

Shortcomings of Prior Work

[Table: compression mechanisms compared on access latency, decompression latency, complexity, and compression ratio. Rows: IBM MXT [IBM J. R. D.'01]]

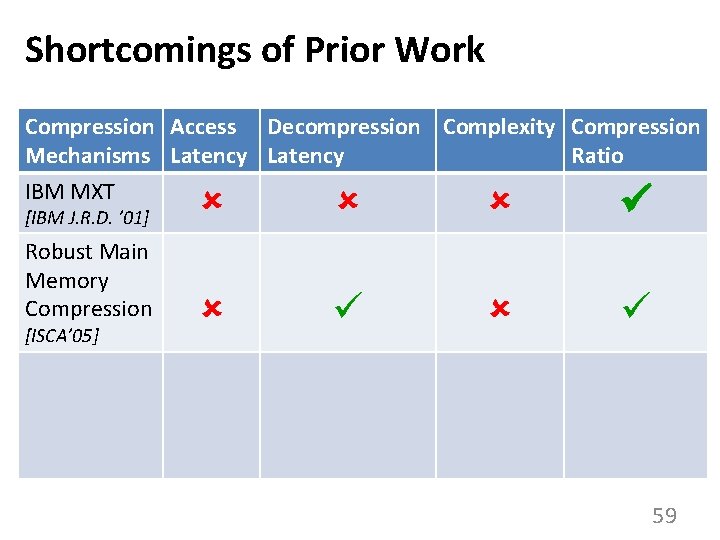

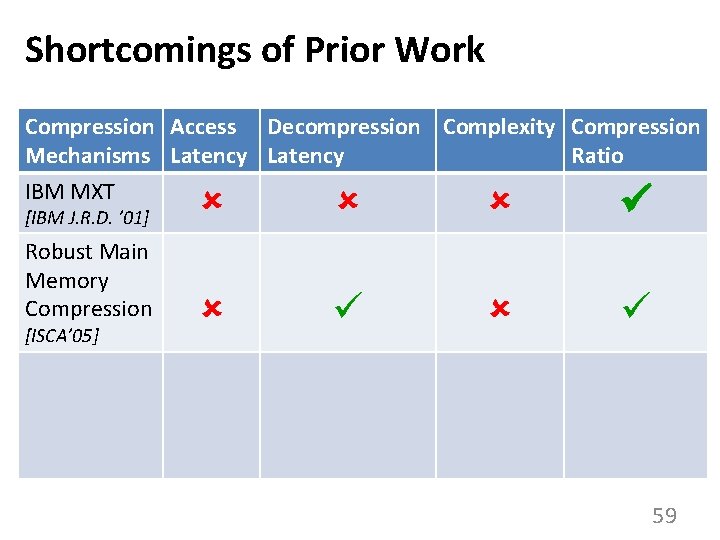

Shortcomings of Prior Work

[Table: compression mechanisms compared on access latency, decompression latency, complexity, and compression ratio. Rows: IBM MXT [IBM J. R. D.'01]; Robust Main Memory Compression [ISCA'05]]

Shortcomings of Prior Work

[Table: compression mechanisms compared on access latency, decompression latency, complexity, and compression ratio. Rows: IBM MXT [IBM J. R. D.'01]; Robust Main Memory Compression [ISCA'05]; LCP: Our Proposal]

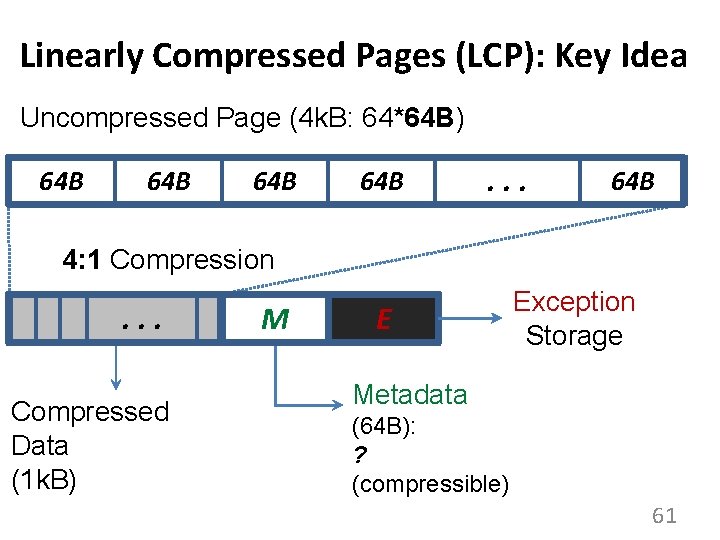

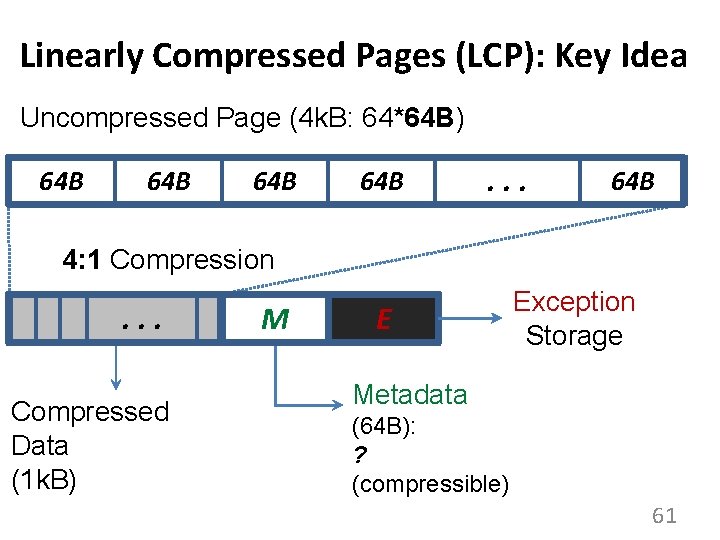

Linearly Compressed Pages (LCP): Key Idea

[Figure: an uncompressed page (4 kB: 64 × 64 B cache lines) under 4:1 compression becomes compressed data (1 kB), followed by metadata M (64 B), which records whether each line is compressible, and exception storage E]
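Because every compressed cache line in a page has the same size, the offset of a line inside the page becomes a simple multiplication again. The sketch below illustrates this for the 4:1 case on the slide (64 B lines compressed to 16 B); the function names are illustrative, and lines stored in exception storage are ignored.

```python
LINE_SIZE = 64        # uncompressed cache line size in bytes
LINES_PER_PAGE = 64   # 4 kB page = 64 x 64 B

def uncompressed_offset(index):
    """Offset of cache line `index` inside an uncompressed page."""
    return index * LINE_SIZE

def lcp_offset(index, compressed_line_size):
    """Offset inside the compressed data region when every line in the page
    is compressed to the same fixed size."""
    return index * compressed_line_size

compressed_line_size = LINE_SIZE // 4   # 4:1 compression -> 16 B per line
print(uncompressed_offset(2), lcp_offset(2, compressed_line_size))
print(LINES_PER_PAGE * compressed_line_size)   # size of the compressed data region
```

Sixty-four 16 B lines occupy the 1 kB compressed data region shown on the slide, and no per-line offset table has to be consulted to find a line.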

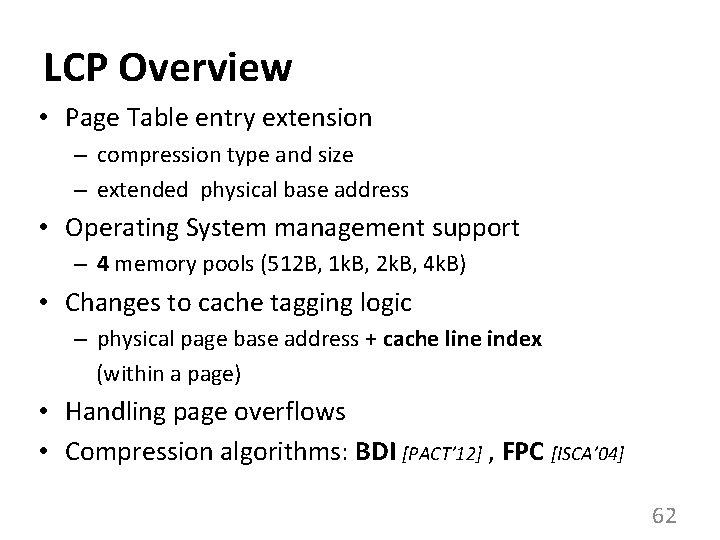

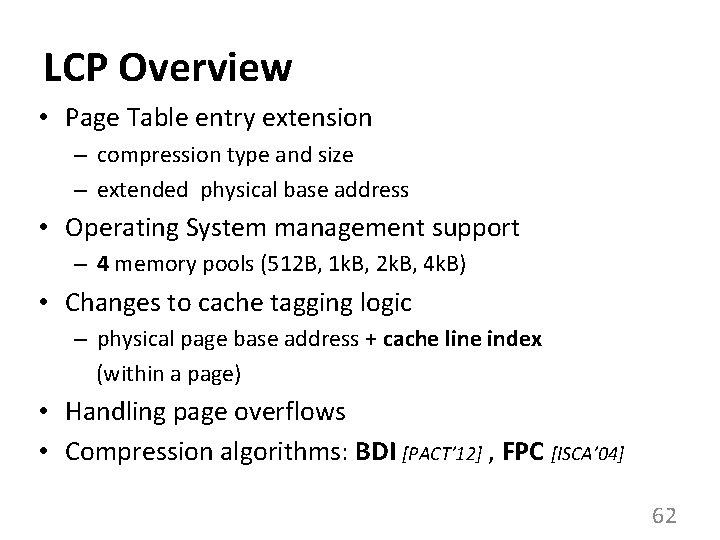

LCP Overview

- Page Table entry extension
  - compression type and size
  - extended physical base address
- Operating System management support
  - 4 memory pools (512 B, 1 kB, 2 kB, 4 kB)
- Changes to cache tagging logic
  - physical page base address + cache line index (within a page)
- Handling page overflows
- Compression algorithms: BDI [PACT'12], FPC [ISCA'04]
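The four memory pools suggest a simple placement rule, sketched below under the assumption that the operating system puts each compressed page into the smallest pool that holds all of it (compressed data, metadata, and exception storage). The slide does not spell out the policy, so `pick_pool` is a hypothetical helper.

```python
POOLS = [512, 1024, 2048, 4096]   # physical page sizes in bytes, from the slide

def pick_pool(compressed_page_bytes):
    """Smallest pool that fits the compressed page (assumed policy)."""
    for size in POOLS:
        if compressed_page_bytes <= size:
            return size
    raise ValueError("page does not fit in the largest pool")

print(pick_pool(300), pick_pool(1024), pick_pool(1024 + 64))
```

Under this assumed rule, a page whose total footprint is even slightly above 1 kB moves up to the 2 kB pool.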

LCP Optimizations

- Metadata cache
  - Avoids additional requests to metadata
- Memory bandwidth reduction:
  - 1 transfer instead of 4
- Zero pages and zero cache lines
  - Handled separately in TLB (1 bit) and in metadata (1 bit per cache line)
- Integration with cache compression
  - BDI and FPC


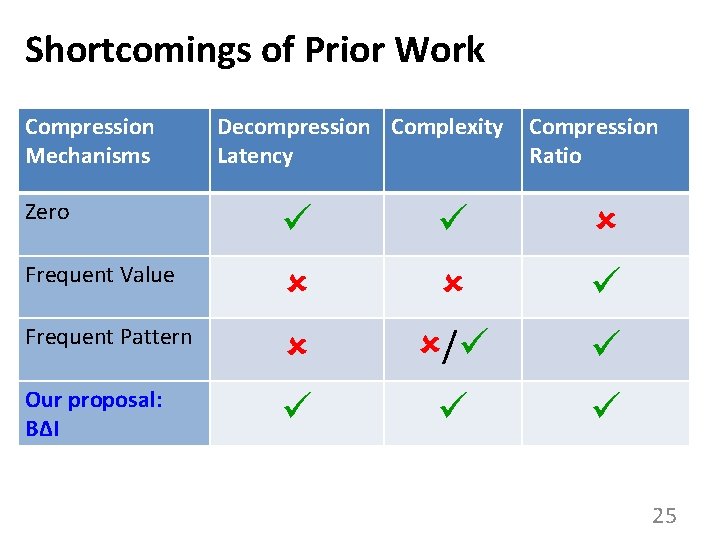

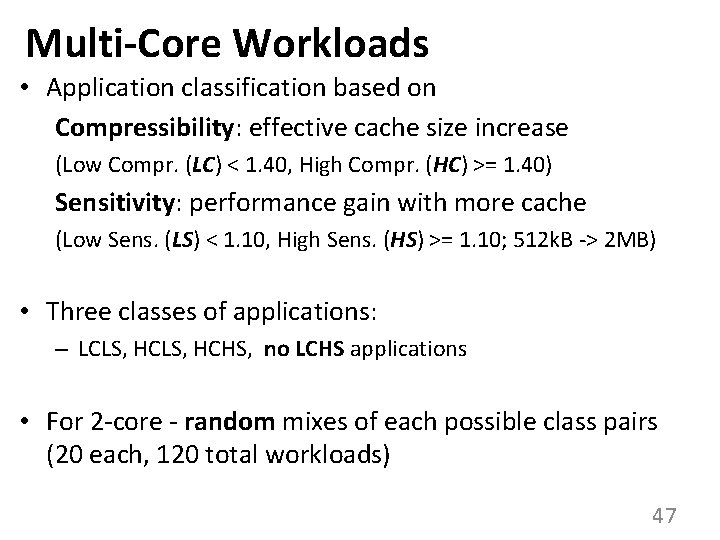

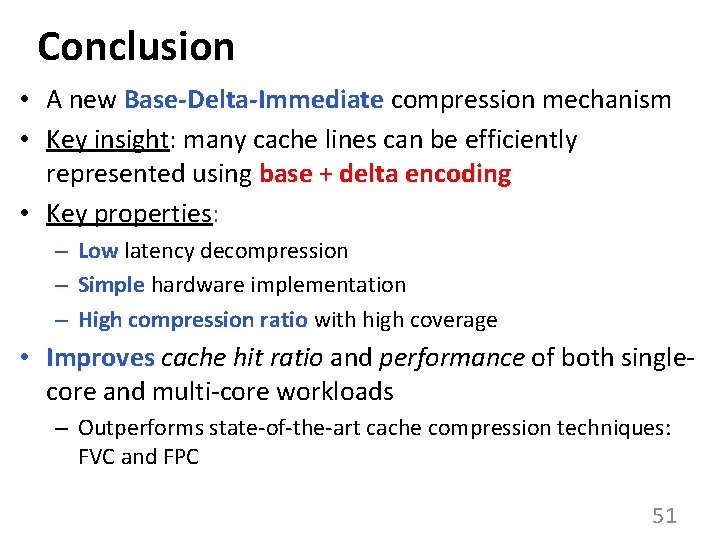

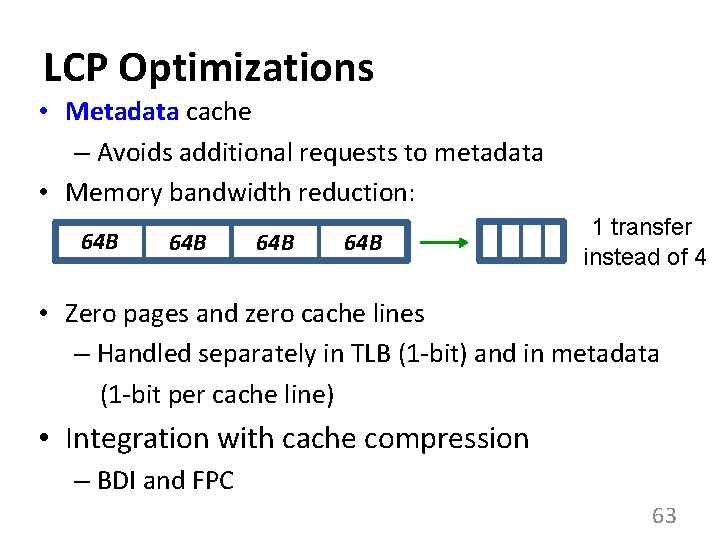

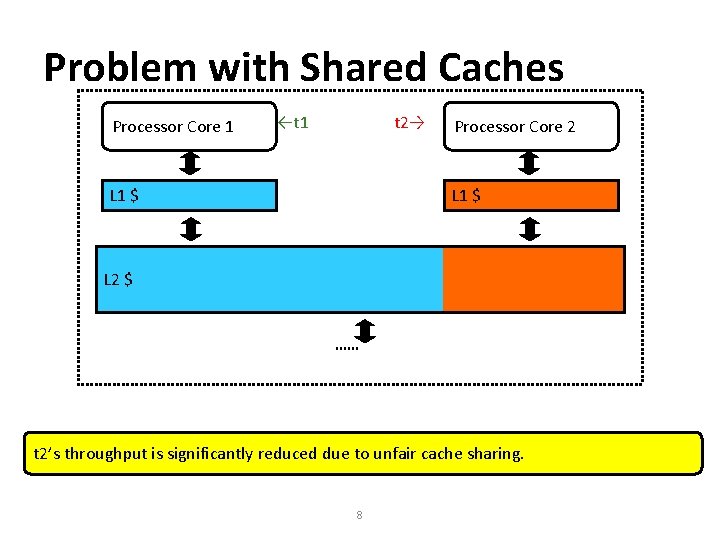

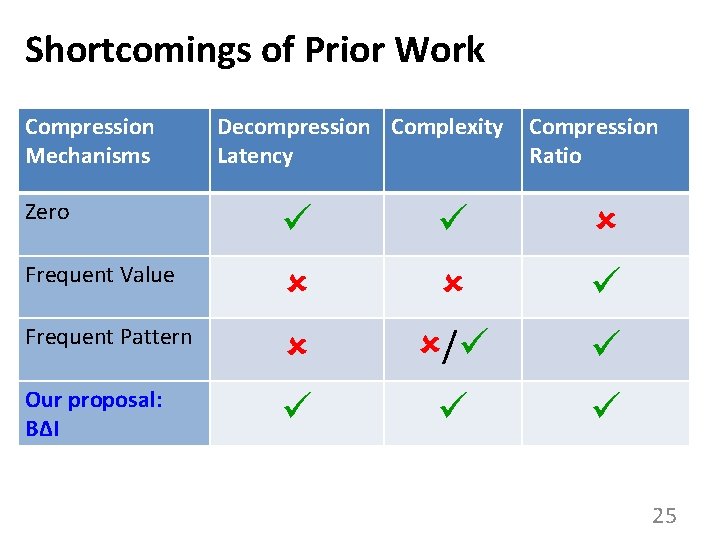

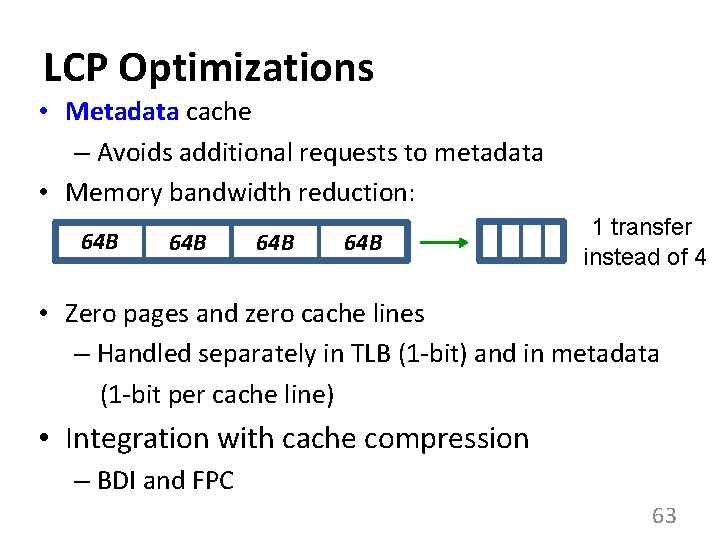

Methodology

- Simulator
  - x86 event-driven simulators
    - Simics-based [Magnusson+, Computer'02] for CPU
    - Multi2Sim [Ubal+, PACT'12] for GPU
- Workloads
  - SPEC2006 benchmarks, TPC, Apache web server, GPGPU applications
- System Parameters
  - L1/L2/L3 cache latencies from CACTI [Thoziyoor+, ISCA'08]
  - 512 kB - 16 MB L2, simple memory model

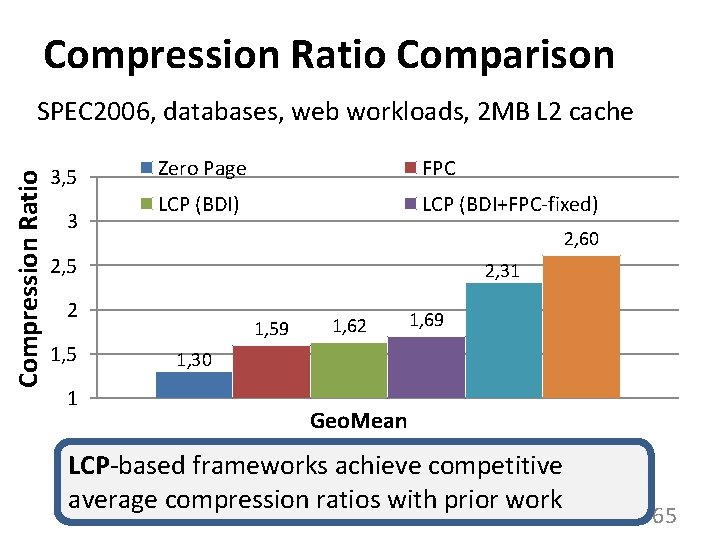

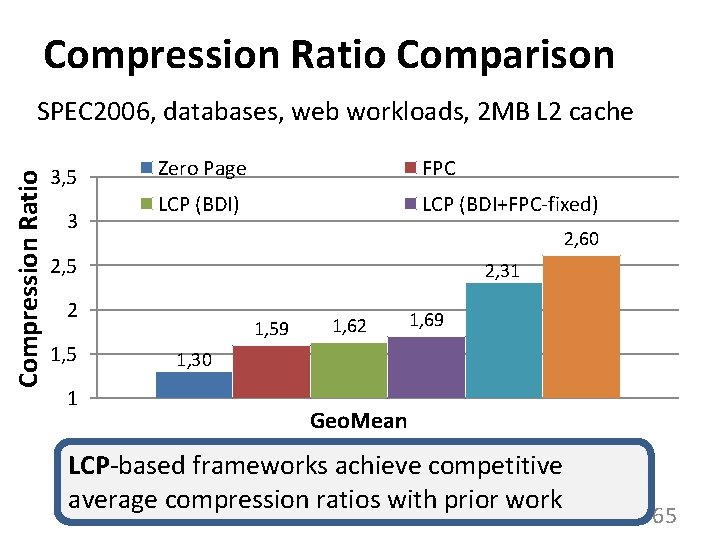

Compression Ratio Comparison

[Figure: geometric-mean compression ratio; SPEC2006, databases, web workloads; 2 MB L2 cache. Legend entries recovered: Zero Page, FPC, LCP (BDI), LCP (BDI+FPC-fixed). Bar labels in extraction order: 2.60, 2.31, 1.59, 1.62, 1.69, 1.30.]

LCP-based frameworks achieve competitive average compression ratios with prior work

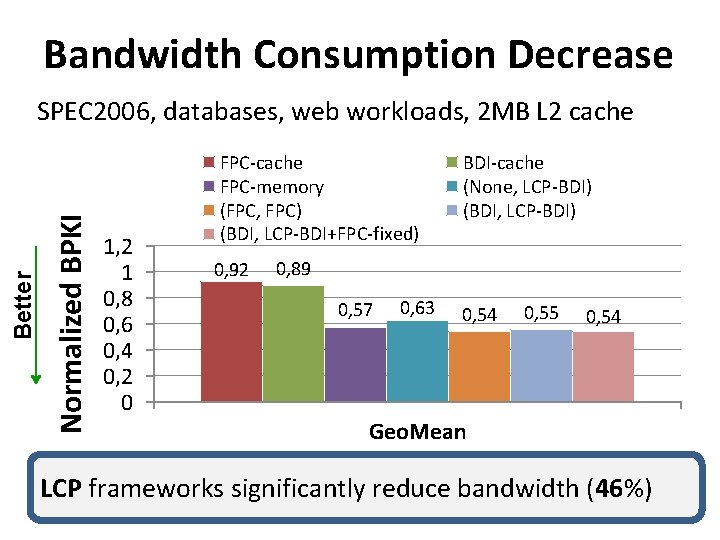

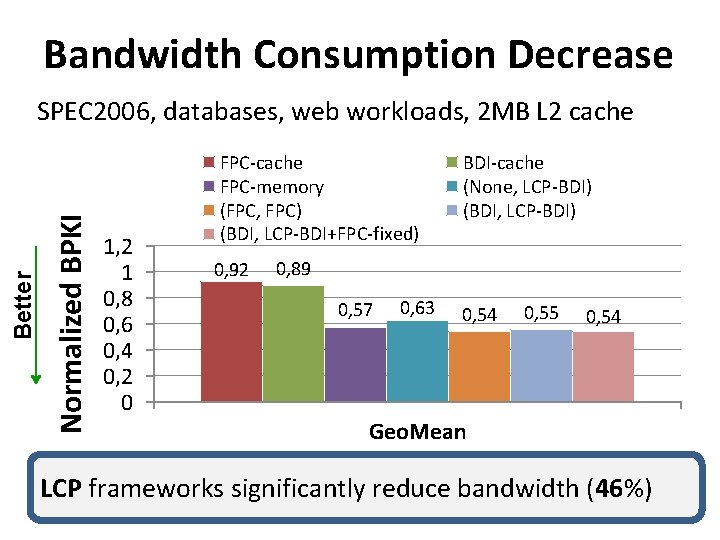

Bandwidth Consumption Decrease

[Figure: geometric-mean normalized BPKI (lower is better); SPEC2006, databases, web workloads; 2 MB L2 cache. Configurations: FPC-cache, BDI-cache, FPC-memory, (None, LCP-BDI), (FPC, FPC), (BDI, LCP-BDI), (BDI, LCP-BDI+FPC-fixed). Bar labels in extraction order: 0.92, 0.89, 0.57, 0.63, 0.54, 0.55, 0.54.]

LCP frameworks significantly reduce bandwidth (46%)

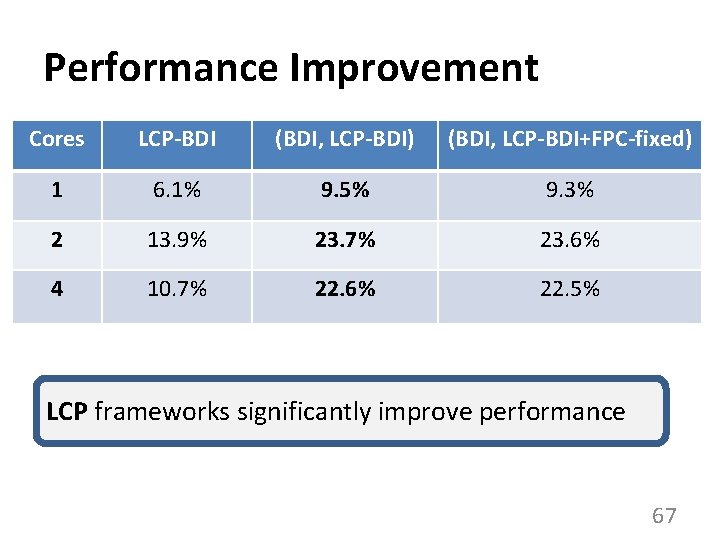

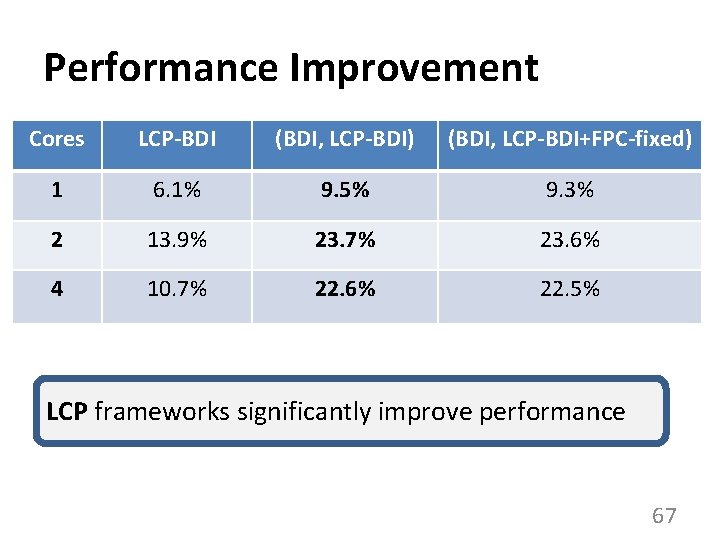

Performance Improvement

| Cores | LCP-BDI | (BDI, LCP-BDI) | (BDI, LCP-BDI+FPC-fixed) |
|---|---|---|---|
| 1 | 6.1% | 9.5% | 9.3% |
| 2 | 13.9% | 23.7% | 23.6% |
| 4 | 10.7% | 22.6% | 22.5% |

LCP frameworks significantly improve performance

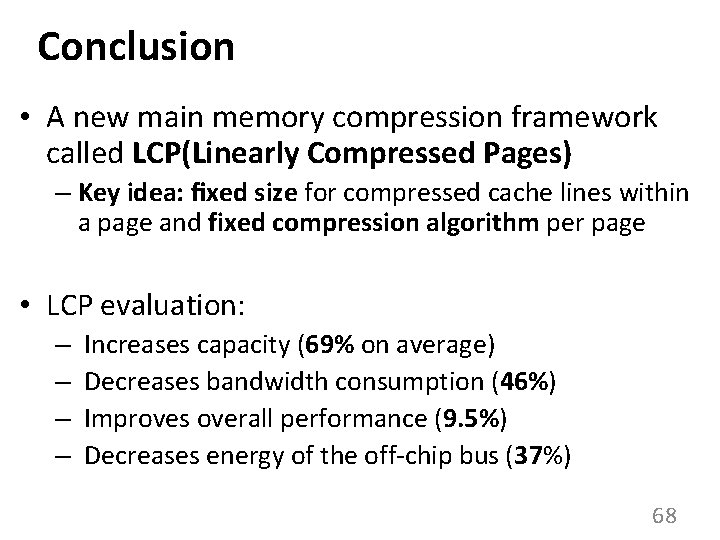

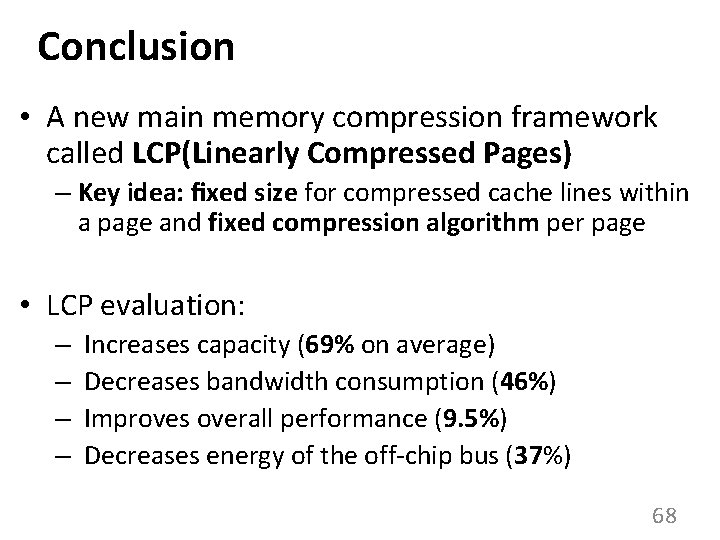

Conclusion

- A new main memory compression framework called LCP (Linearly Compressed Pages)
  - Key idea: fixed size for compressed cache lines within a page and fixed compression algorithm per page
- LCP evaluation:
  - Increases capacity (69% on average)
  - Decreases bandwidth consumption (46%)
  - Improves overall performance (9.5%)
  - Decreases energy of the off-chip bus (37%)