18 447 Computer Architecture Lecture 9 Pipelining and

- Slides: 62

18 -447 Computer Architecture Lecture 9: Pipelining and Related Issues Prof. Onur Mutlu Carnegie Mellon University Spring 2012, 2/15/2012

Reminder: Homeworks n Homework 3 q q q Due Feb 27 Out 3 questions n n n LC-3 b microcode Adding REP MOVS to LC-3 b Pipelining 2

Reminder: Lab Assignments n Lab Assignment 2 q q Due Friday, Feb 17, at the end of the lab Individual assignment n n No collaboration; please respect the honor code Lab Assignment 3 q q Already out Extra credit n n n Early check off: 5% Fastest three designs: 5% + prizes More on this later 3

Reminder: Extra Credit for Lab Assignment 2 n Complete your normal (single-cycle) implementation first, n n n and get it checked off in lab. Then, implement the MIPS core using a microcoded approach similar to what we are discussing in class. We are not specifying any particular details of the microcode format or the microarchitecture; you should be creative. For the extra credit, the microcoded implementation should execute the same programs that your ordinary implementation does, and you should demo it by the normal lab deadline. 4

Readings for Today n Pipelining q q n P&H Chapter 4. 5 -4. 8 Pipelined LC-3 b Microarchitecture Handout Optional q Hamacher et al. book, Chapter 6, “Pipelining” 5

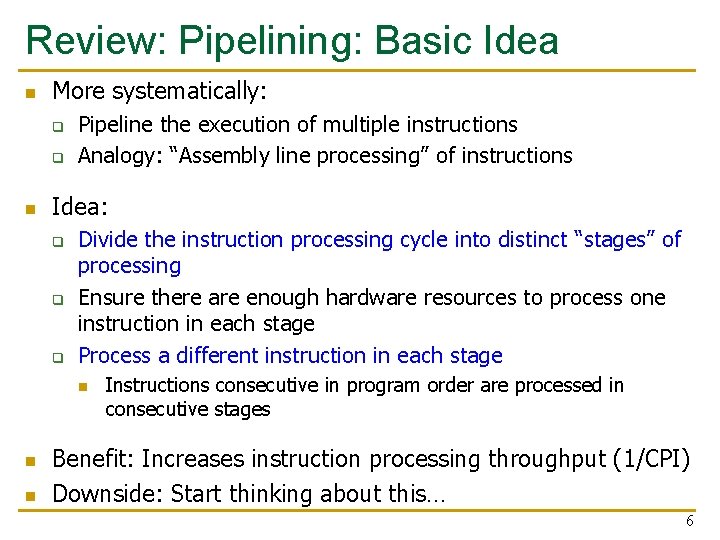

Review: Pipelining: Basic Idea n More systematically: q q n Pipeline the execution of multiple instructions Analogy: “Assembly line processing” of instructions Idea: q q q Divide the instruction processing cycle into distinct “stages” of processing Ensure there are enough hardware resources to process one instruction in each stage Process a different instruction in each stage n n n Instructions consecutive in program order are processed in consecutive stages Benefit: Increases instruction processing throughput (1/CPI) Downside: Start thinking about this… 6

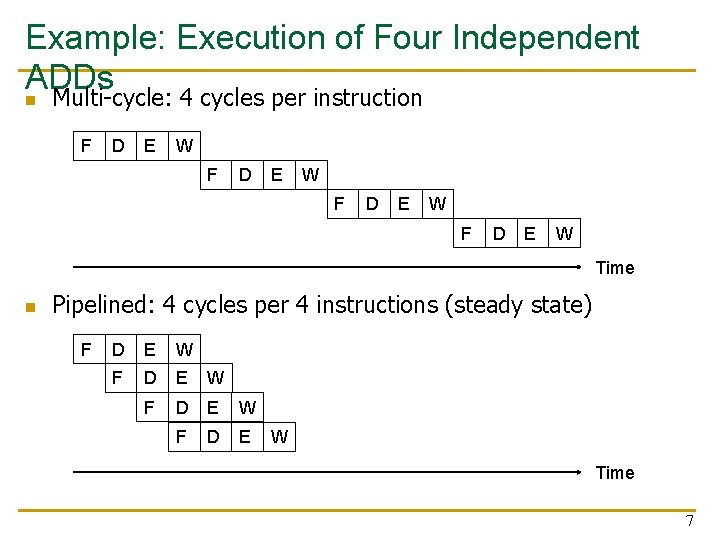

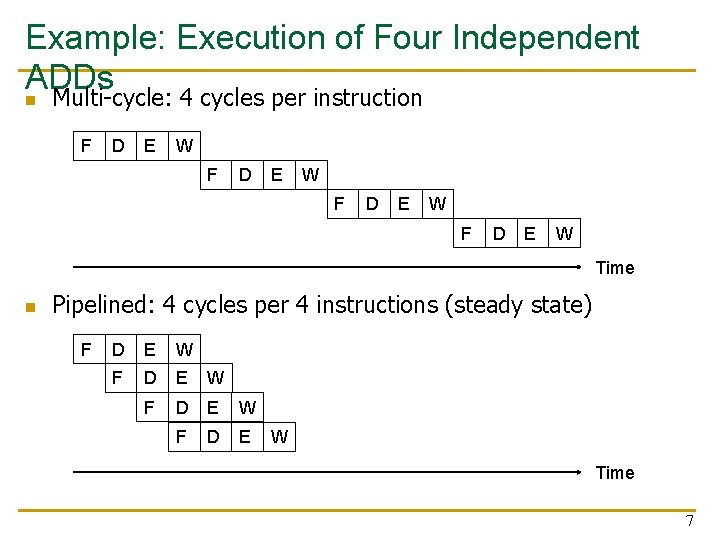

Example: Execution of Four Independent ADDs n Multi-cycle: 4 cycles per instruction F D E W Time n Pipelined: 4 cycles per 4 instructions (steady state) F D E W Time 7

Review: The Laundry Analogy n n “place one dirty load of clothes in the washer” “when the washer is finished, place the wet load in the dryer” “when the dryer is finished, take out the dry load and fold” “when folding is finished, ask your roommate (? ? ) to put the clothes away” - steps to do a load are sequentially dependent - no dependence between different loads - different steps do not share resources Based on original figure from [P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED. ] 8

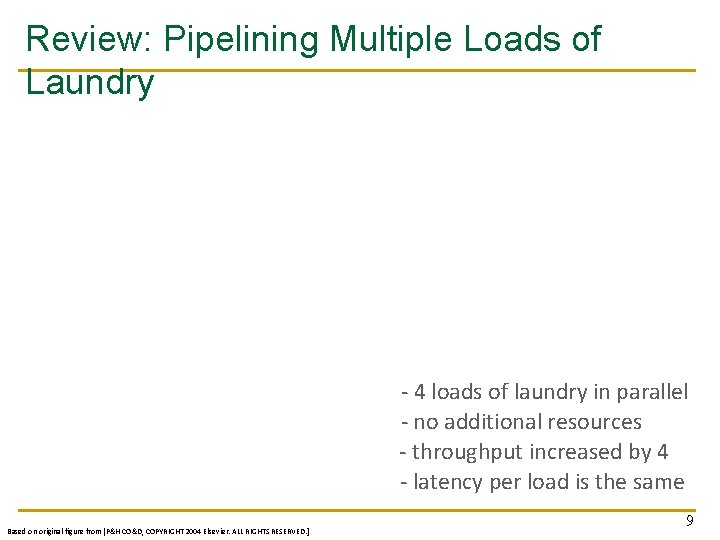

Review: Pipelining Multiple Loads of Laundry - 4 loads of laundry in parallel - no additional resources - throughput increased by 4 - latency per load is the same Based on original figure from [P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED. ] 9

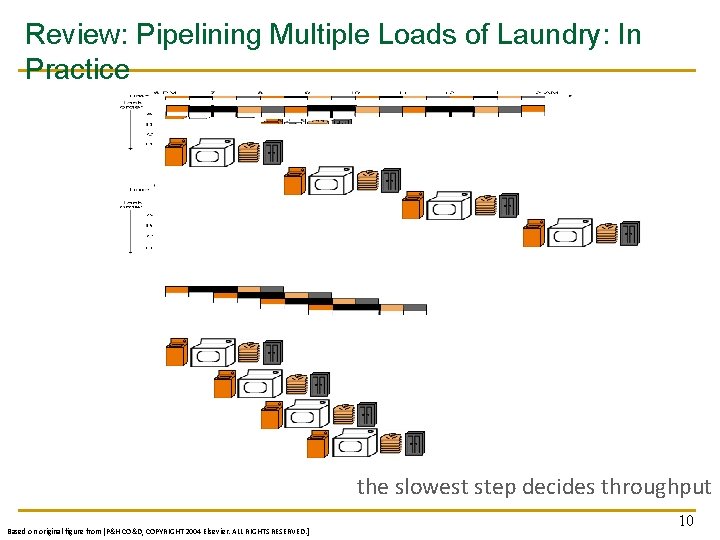

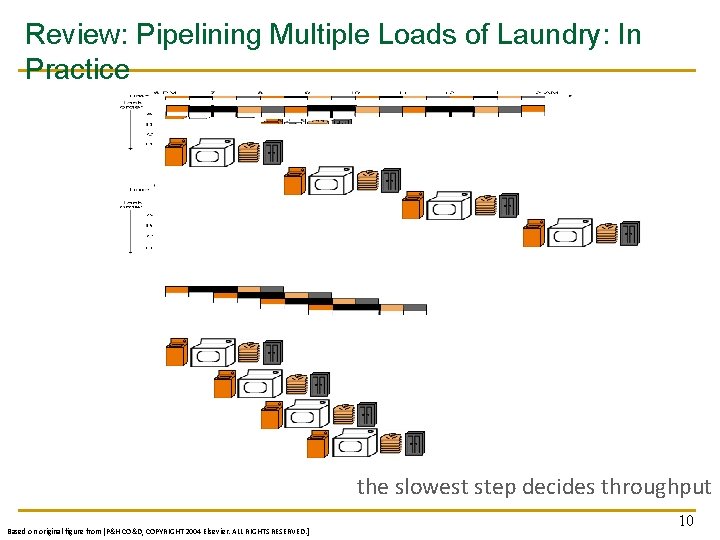

Review: Pipelining Multiple Loads of Laundry: In Practice the slowest step decides throughput Based on original figure from [P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED. ] 10

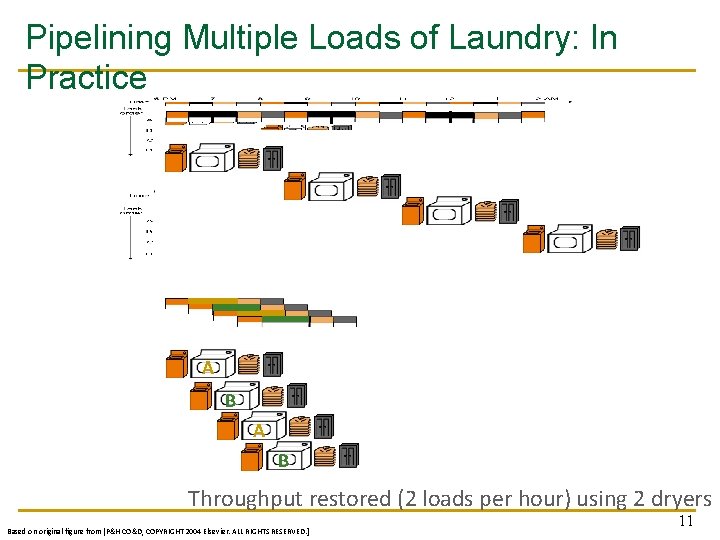

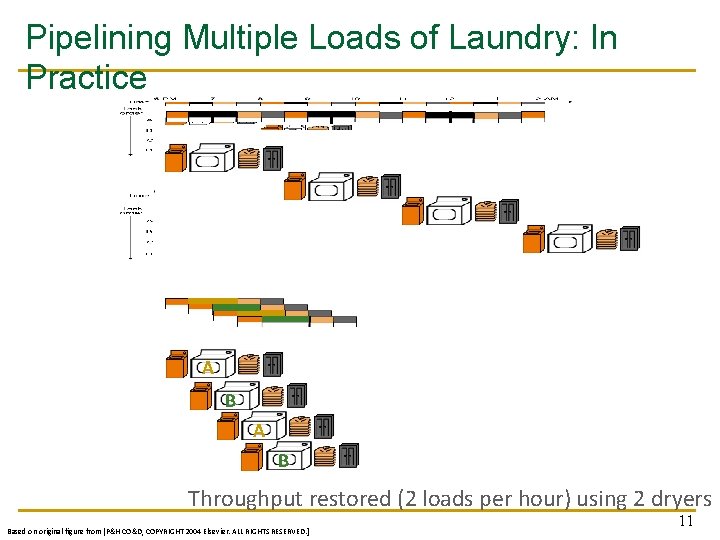

Pipelining Multiple Loads of Laundry: In Practice A B Throughput restored (2 loads per hour) using 2 dryers Based on original figure from [P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED. ] 11

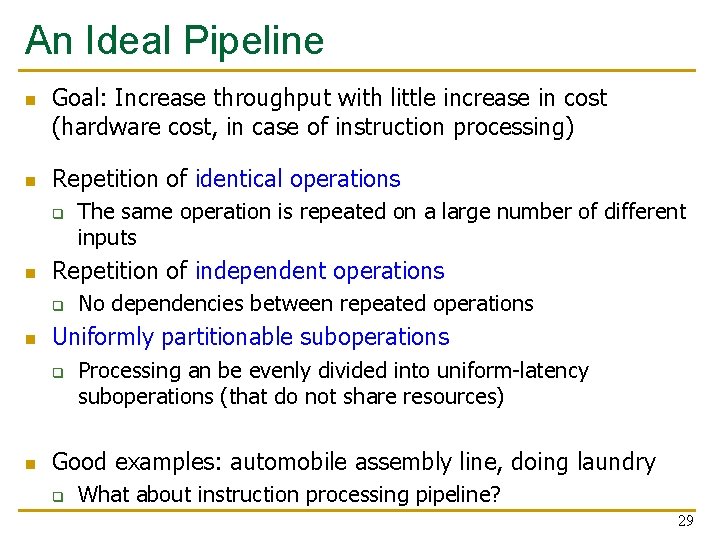

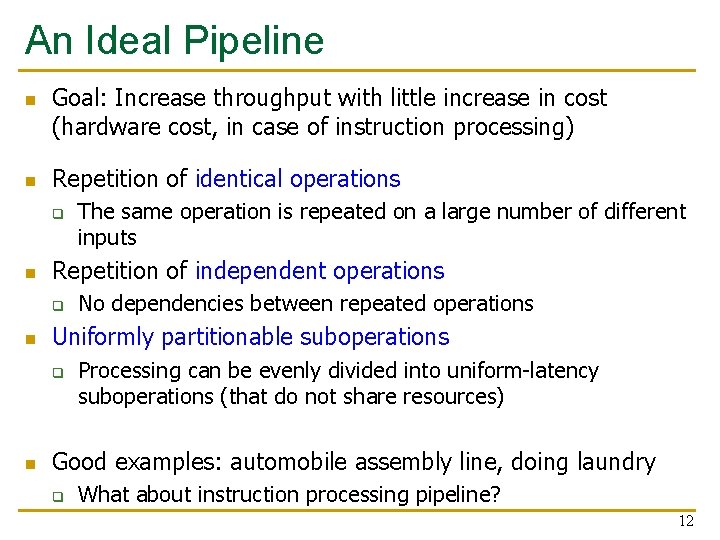

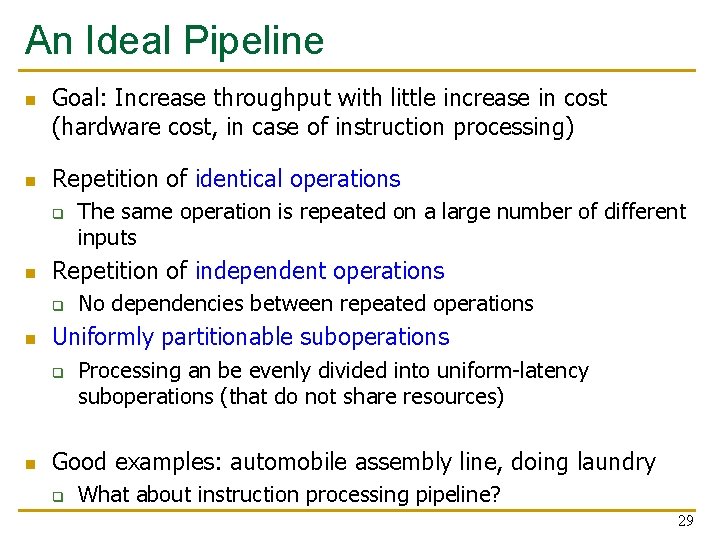

An Ideal Pipeline n n Goal: Increase throughput with little increase in cost (hardware cost, in case of instruction processing) Repetition of identical operations q n Repetition of independent operations q n No dependencies between repeated operations Uniformly partitionable suboperations q n The same operation is repeated on a large number of different inputs Processing can be evenly divided into uniform-latency suboperations (that do not share resources) Good examples: automobile assembly line, doing laundry q What about instruction processing pipeline? 12

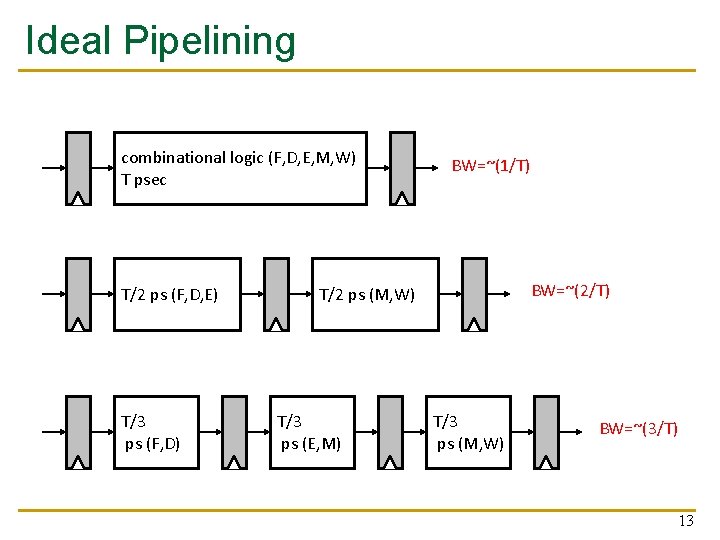

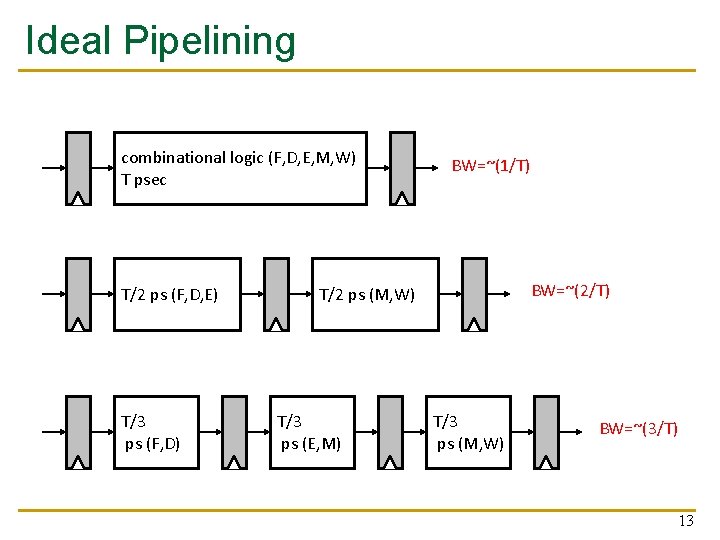

Ideal Pipelining combinational logic (F, D, E, M, W) T psec T/2 ps (F, D, E) T/3 ps (F, D) BW=~(1/T) BW=~(2/T) T/2 ps (M, W) T/3 ps (E, M) T/3 ps (M, W) BW=~(3/T) 13

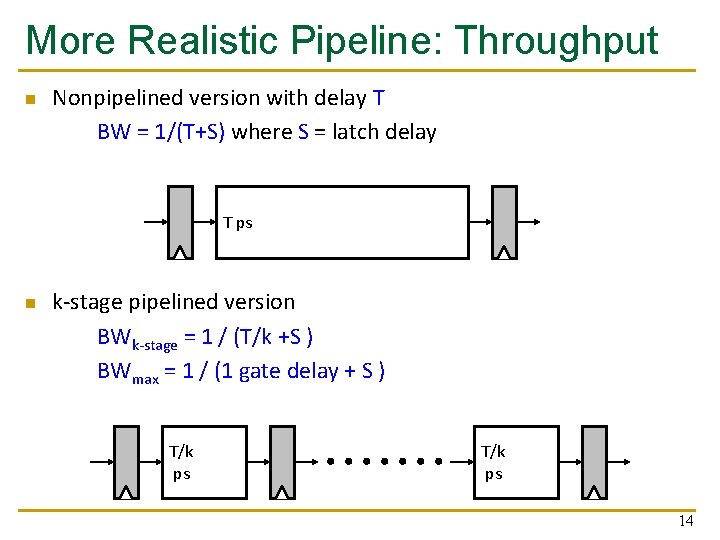

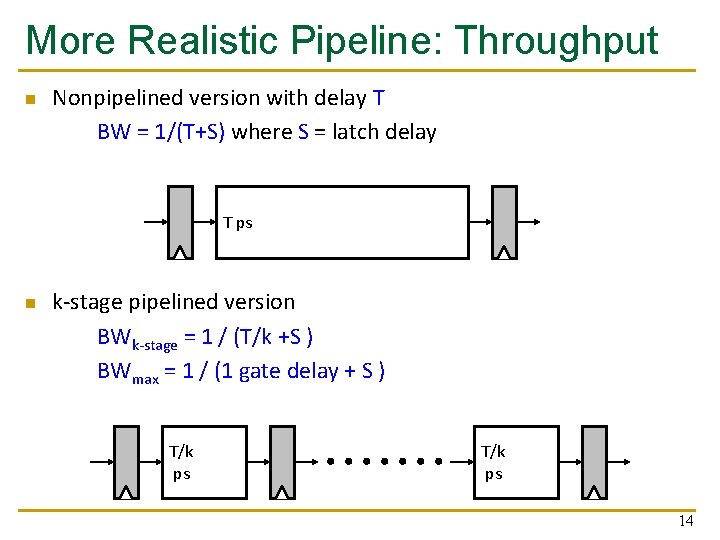

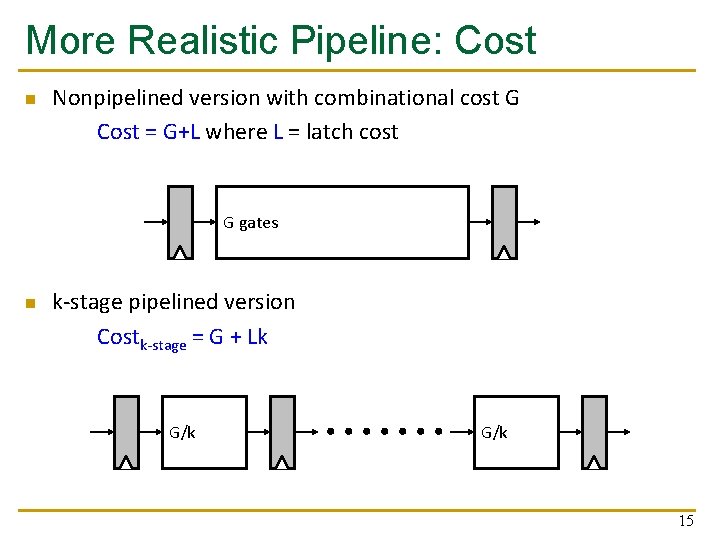

More Realistic Pipeline: Throughput n Nonpipelined version with delay T BW = 1/(T+S) where S = latch delay T ps n k-stage pipelined version BWk-stage = 1 / (T/k +S ) BWmax = 1 / (1 gate delay + S ) T/k ps 14

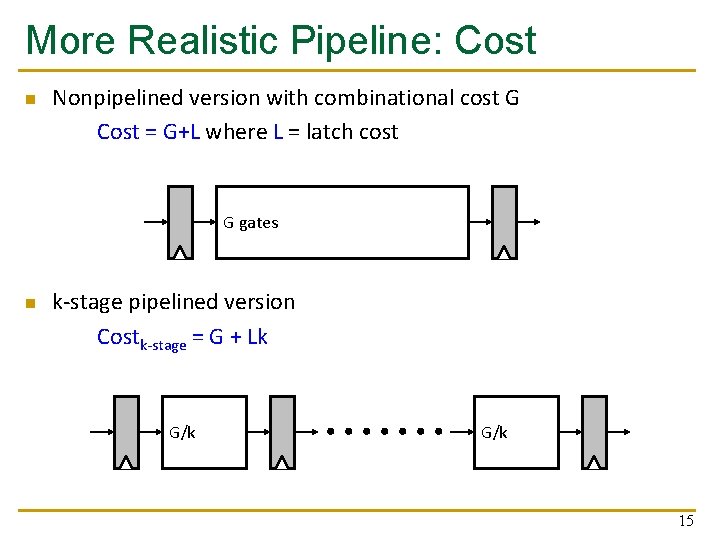

More Realistic Pipeline: Cost n Nonpipelined version with combinational cost G Cost = G+L where L = latch cost G gates n k-stage pipelined version Costk-stage = G + Lk G/k 15

Pipelining Instruction Processing 16

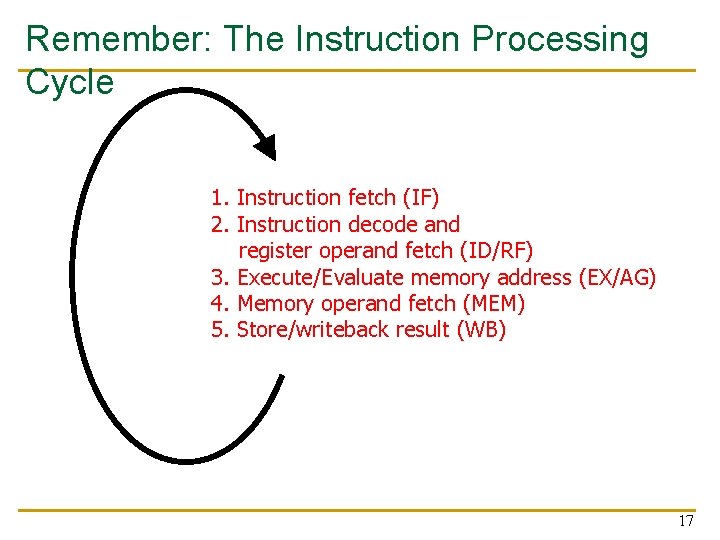

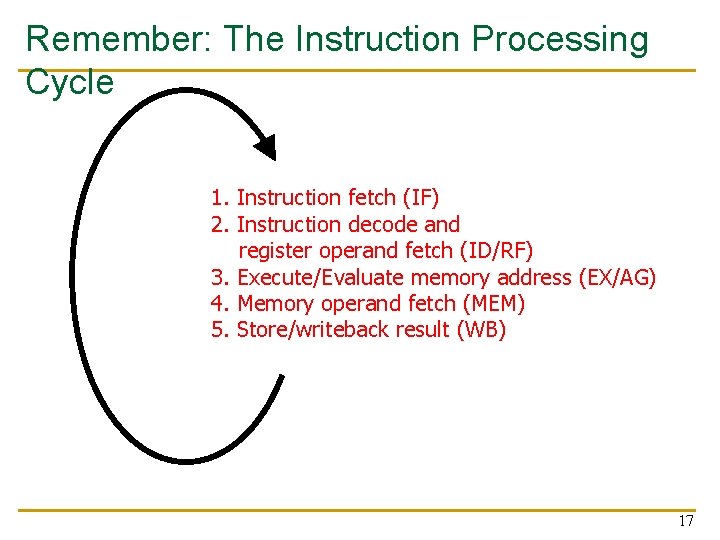

Remember: The Instruction Processing Cycle q Fetch fetch (IF) 1. Instruction q Decodedecode and 2. Instruction register operand fetch (ID/RF) q Evaluate Address 3. Execute/Evaluate memory address (EX/AG) q Fetch Operands 4. Memory operand fetch (MEM) q Execute 5. Store/writeback result (WB) q Store Result 17

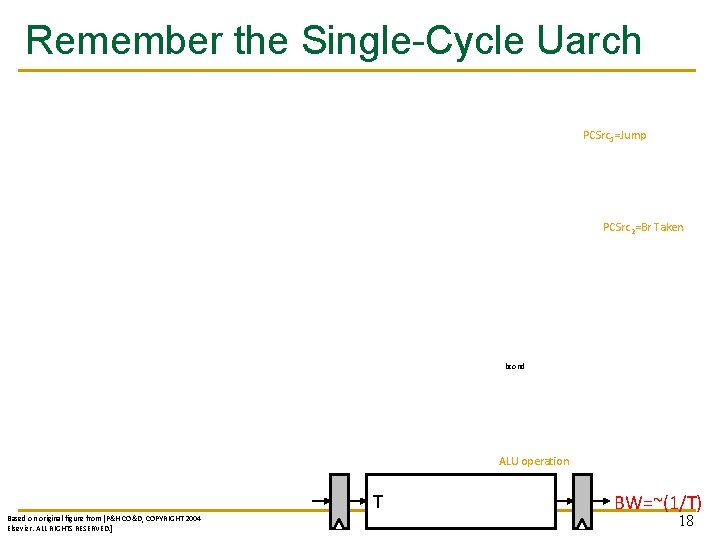

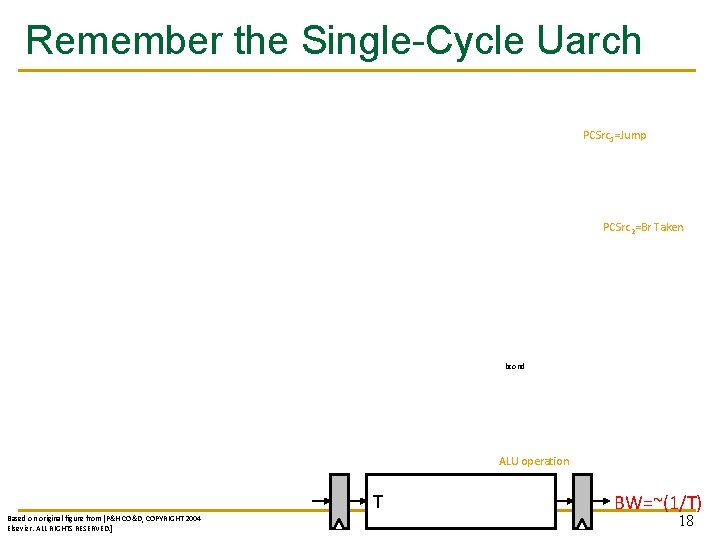

Remember the Single-Cycle Uarch PCSrc 1=Jump PCSrc 2=Br Taken bcond ALU operation T Based on original figure from [P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED. ] BW=~(1/T) 18

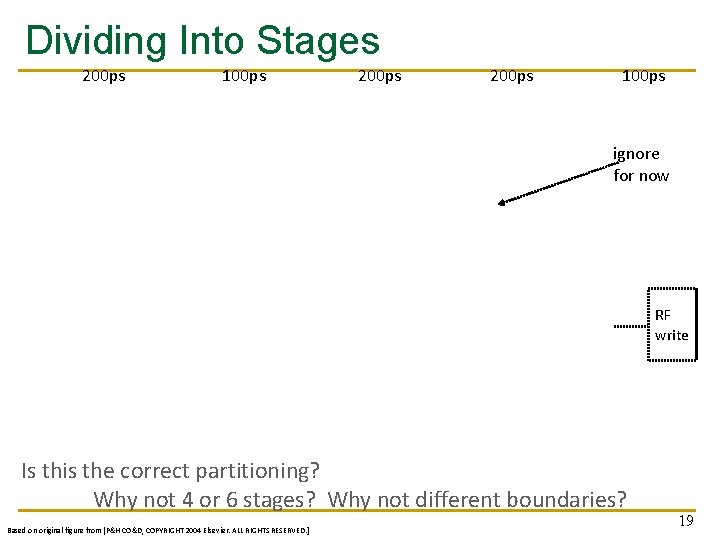

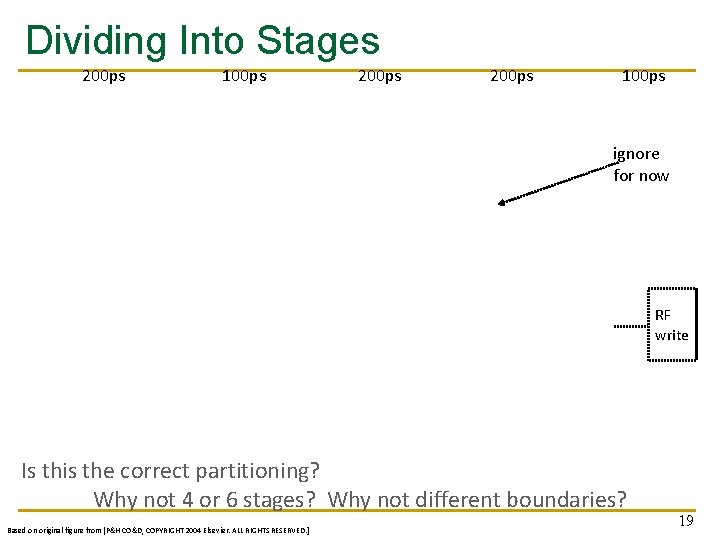

Dividing Into Stages 200 ps 100 ps ignore for now RF write Is this the correct partitioning? Why not 4 or 6 stages? Why not different boundaries? Based on original figure from [P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED. ] 19

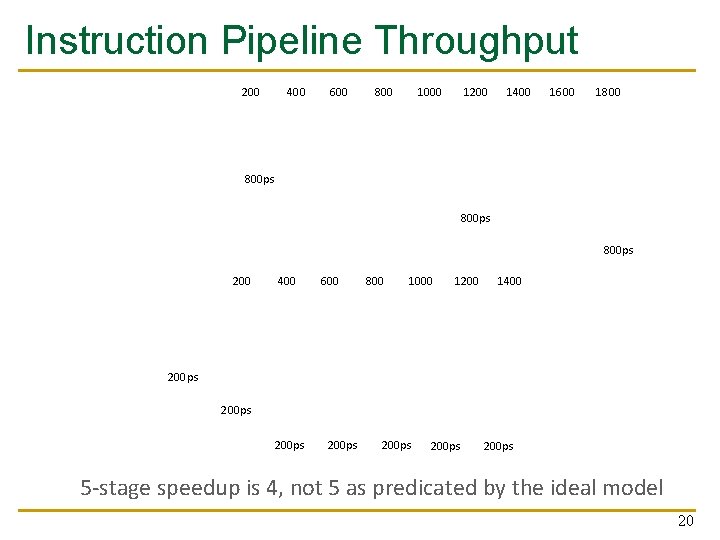

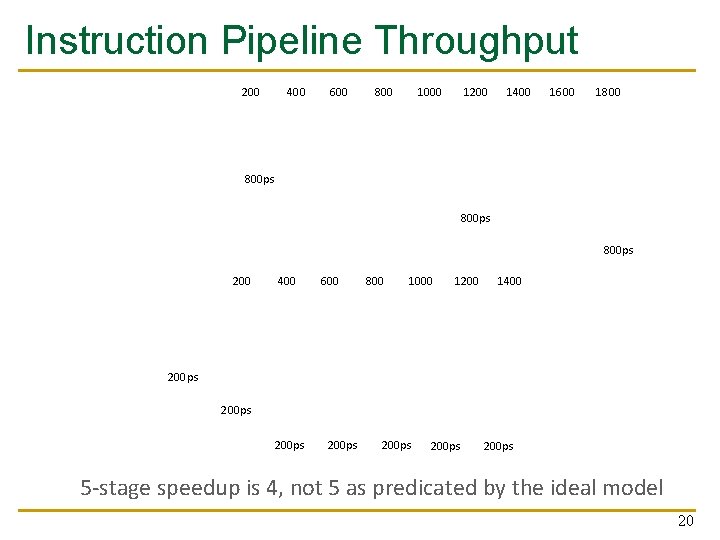

Instruction Pipeline Throughput 200 400 600 800 1000 1200 1400 1600 1800 800 ps 200 400 600 800 1000 1200 1400 200 ps 200 ps 5 -stage speedup is 4, not 5 as predicated by the ideal model 20

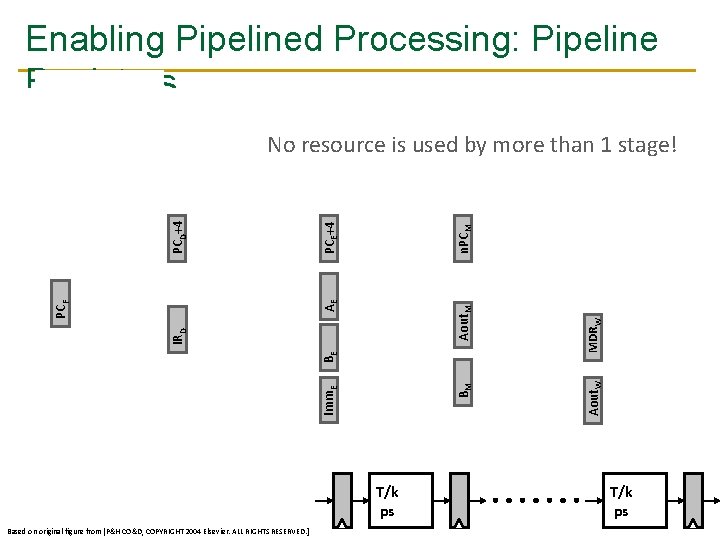

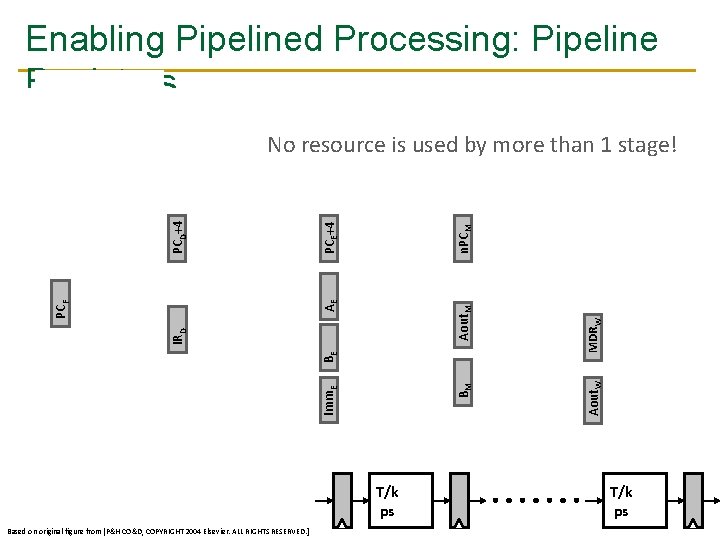

Enabling Pipelined Processing: Pipeline Registers Based on original figure from [P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED. ] T Aout. W Aout. M BM Imm. E T/k ps MDRW n. PCM PCE+4 BE IRD PCF AE PCD+4 No resource is used by more than 1 stage! T/k ps 21

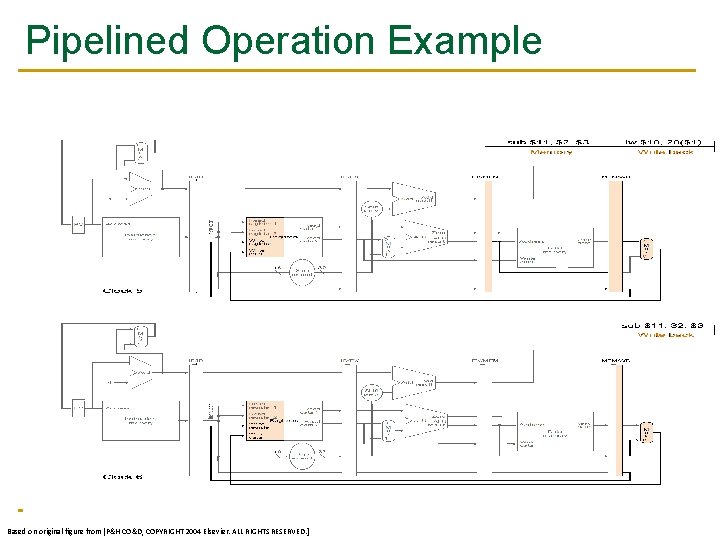

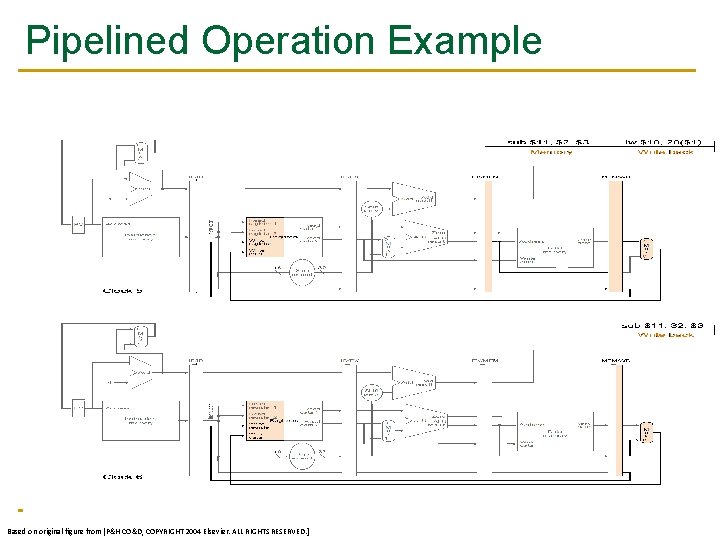

Pipelined Operation Example All instruction classes must follow the same path and timing through the pipeline stages. Any performance impact? Based on original figure from [P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED. ] 22

Pipelined Operation Example Based on original figure from [P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED. ] 23

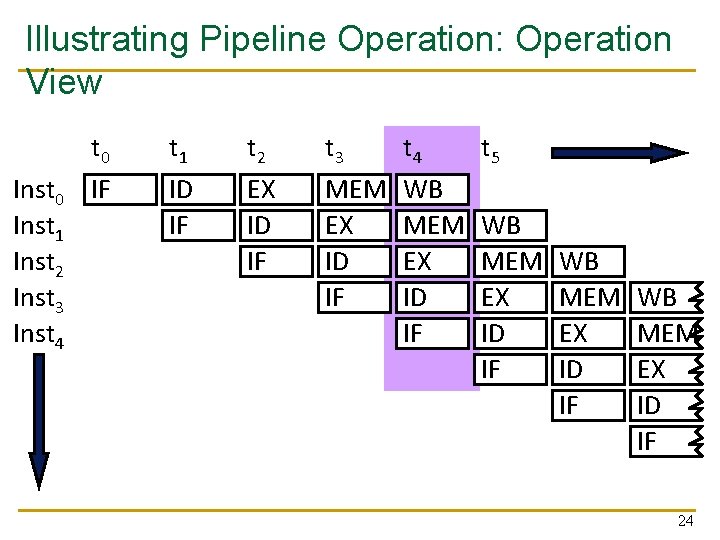

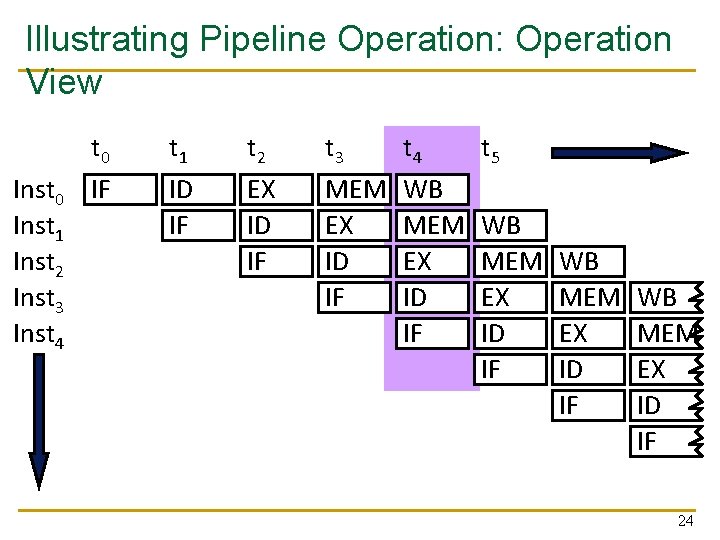

Illustrating Pipeline Operation: Operation View Inst 0 Inst 1 Inst 2 Inst 3 Inst 4 t 0 IF t 1 ID IF t 2 EX ID IF t 3 MEM EX ID IF t 4 WB MEM EX ID IF t 5 WB MEM EX ID IF 24

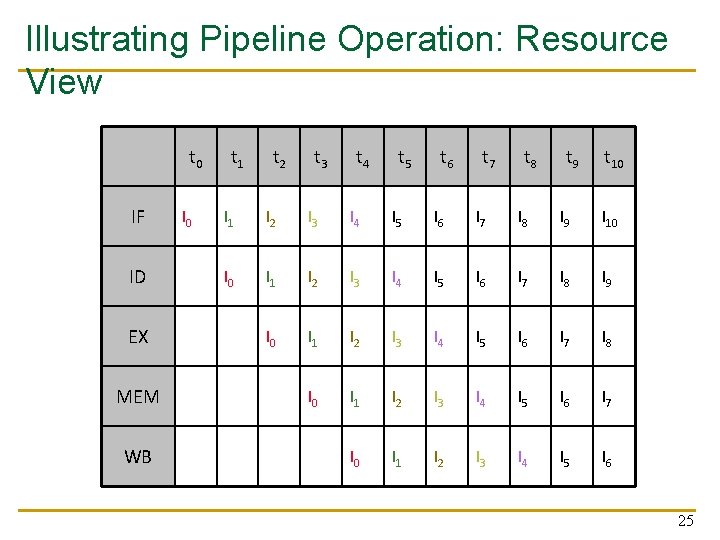

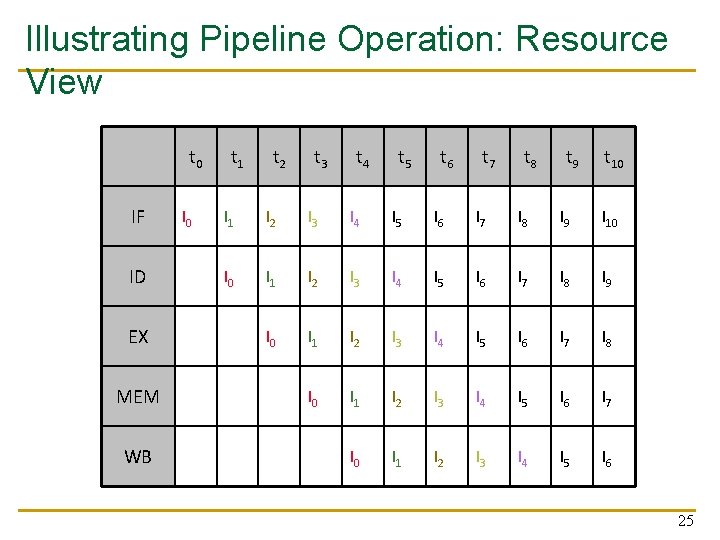

Illustrating Pipeline Operation: Resource View t 0 IF ID EX MEM WB I 0 t 1 t 2 t 3 t 4 t 5 t 6 t 7 t 8 t 9 t 10 I 1 I 2 I 3 I 4 I 5 I 6 I 7 I 8 I 9 I 0 I 1 I 2 I 3 I 4 I 5 I 6 I 7 I 8 I 0 I 1 I 2 I 3 I 4 I 5 I 6 I 7 I 0 I 1 I 2 I 3 I 4 I 5 I 6 25

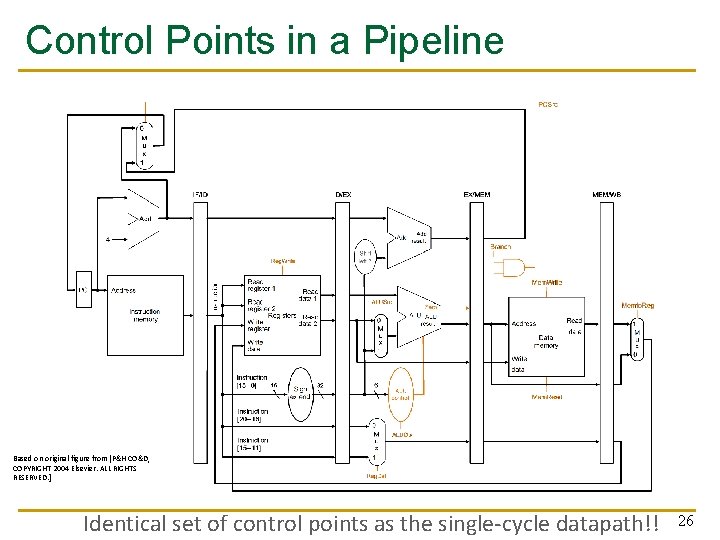

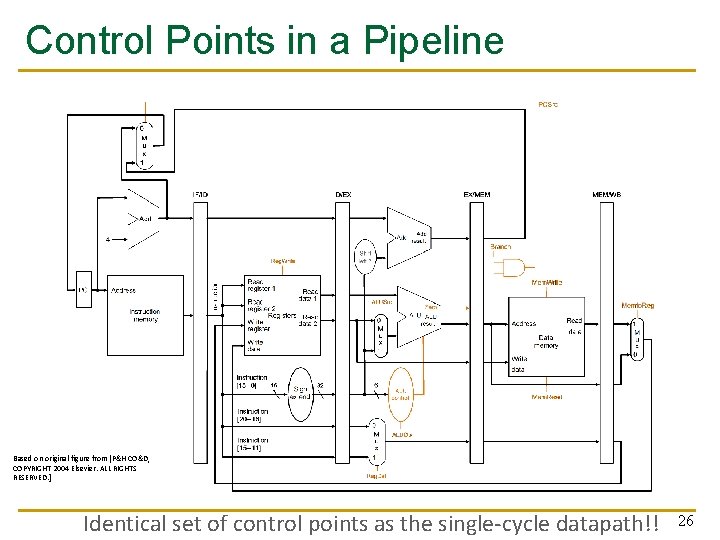

Control Points in a Pipeline Based on original figure from [P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED. ] Identical set of control points as the single-cycle datapath!! 26

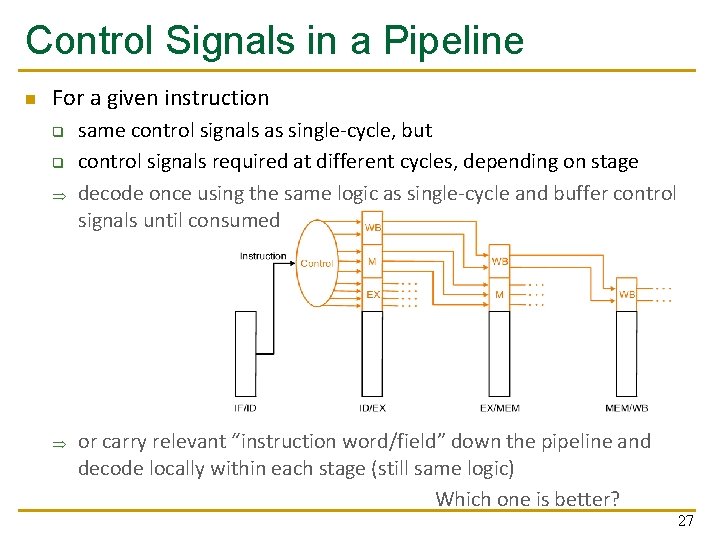

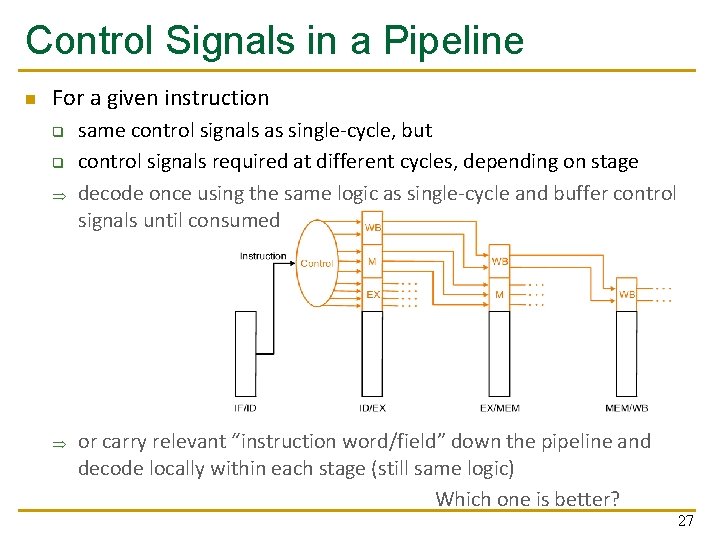

Control Signals in a Pipeline n For a given instruction q q same control signals as single-cycle, but control signals required at different cycles, depending on stage decode once using the same logic as single-cycle and buffer control signals until consumed or carry relevant “instruction word/field” down the pipeline and decode locally within each stage (still same logic) Which one is better? 27

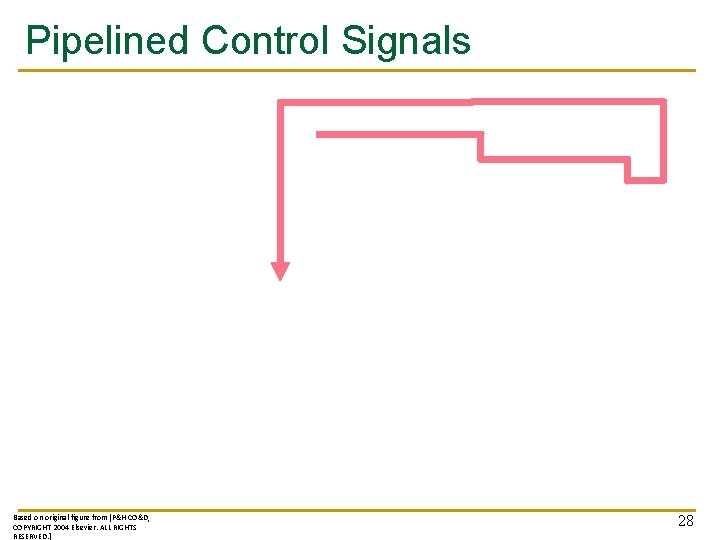

Pipelined Control Signals Based on original figure from [P&H CO&D, COPYRIGHT 2004 Elsevier. ALL RIGHTS RESERVED. ] 28

An Ideal Pipeline n n Goal: Increase throughput with little increase in cost (hardware cost, in case of instruction processing) Repetition of identical operations q n Repetition of independent operations q n No dependencies between repeated operations Uniformly partitionable suboperations q n The same operation is repeated on a large number of different inputs Processing an be evenly divided into uniform-latency suboperations (that do not share resources) Good examples: automobile assembly line, doing laundry q What about instruction processing pipeline? 29

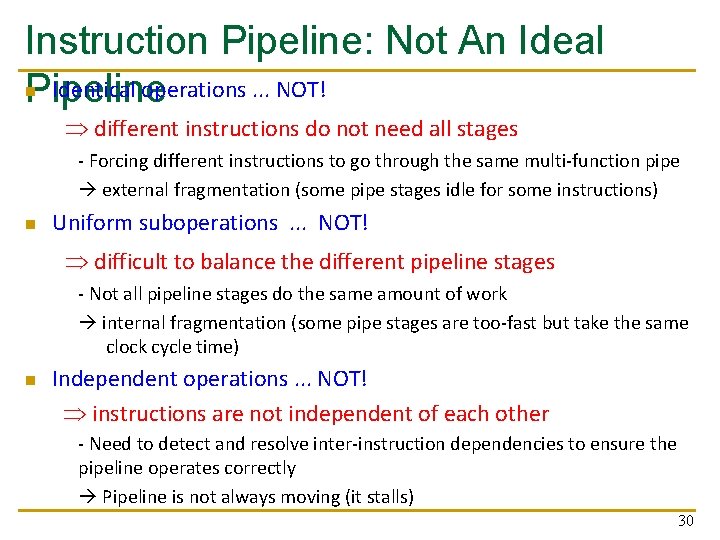

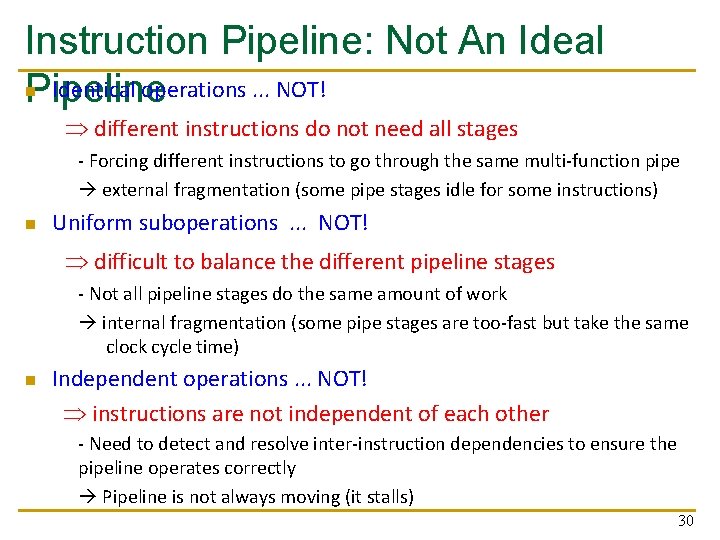

Instruction Pipeline: Not An Ideal n Identical operations. . . NOT! Pipeline different instructions do not need all stages - Forcing different instructions to go through the same multi-function pipe external fragmentation (some pipe stages idle for some instructions) n Uniform suboperations. . . NOT! difficult to balance the different pipeline stages - Not all pipeline stages do the same amount of work internal fragmentation (some pipe stages are too-fast but take the same clock cycle time) n Independent operations. . . NOT! instructions are not independent of each other - Need to detect and resolve inter-instruction dependencies to ensure the pipeline operates correctly Pipeline is not always moving (it stalls) 30

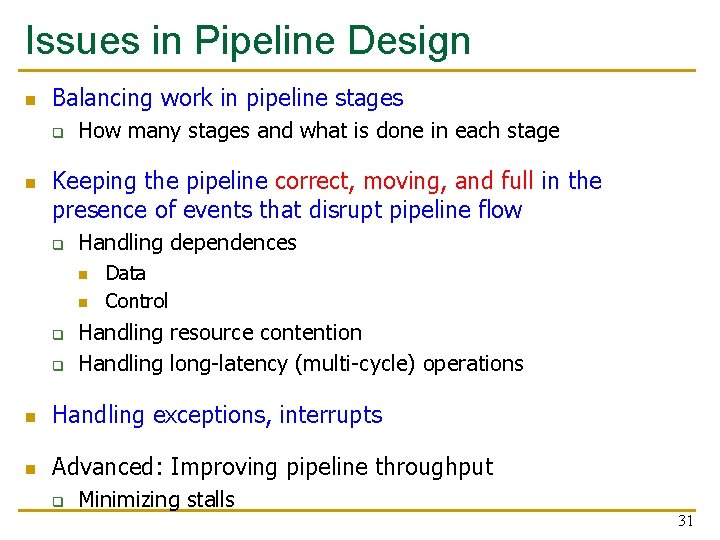

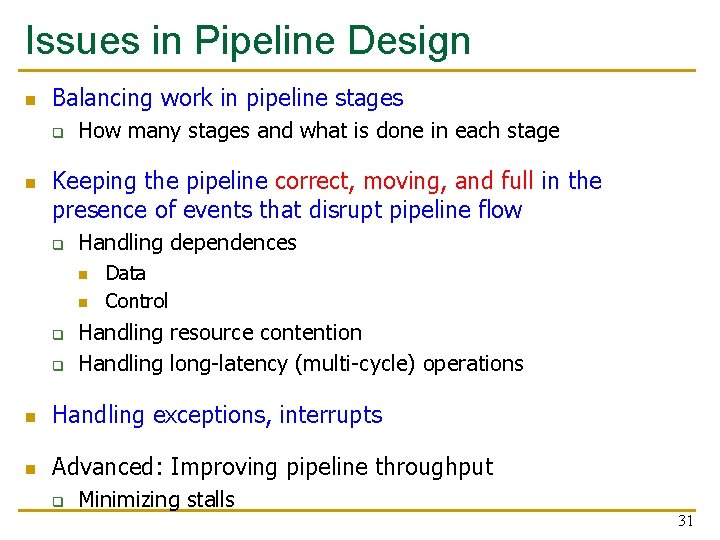

Issues in Pipeline Design n Balancing work in pipeline stages q n How many stages and what is done in each stage Keeping the pipeline correct, moving, and full in the presence of events that disrupt pipeline flow q Handling dependences n n q q Data Control Handling resource contention Handling long-latency (multi-cycle) operations n Handling exceptions, interrupts n Advanced: Improving pipeline throughput q Minimizing stalls 31

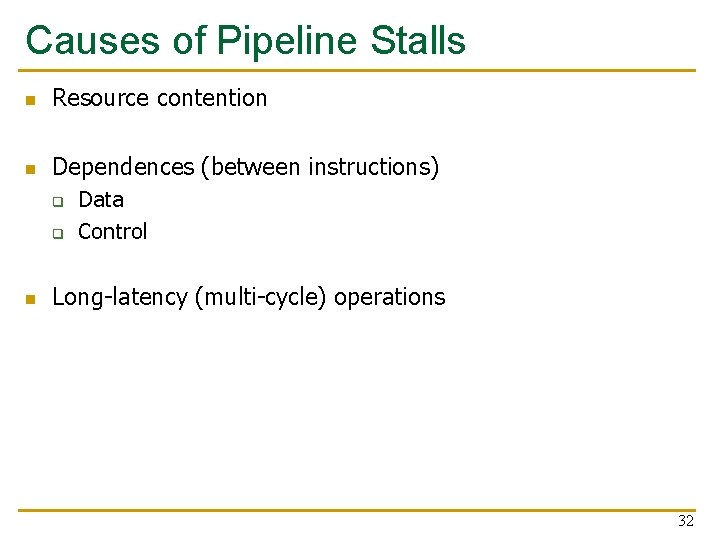

Causes of Pipeline Stalls n Resource contention n Dependences (between instructions) q q n Data Control Long-latency (multi-cycle) operations 32

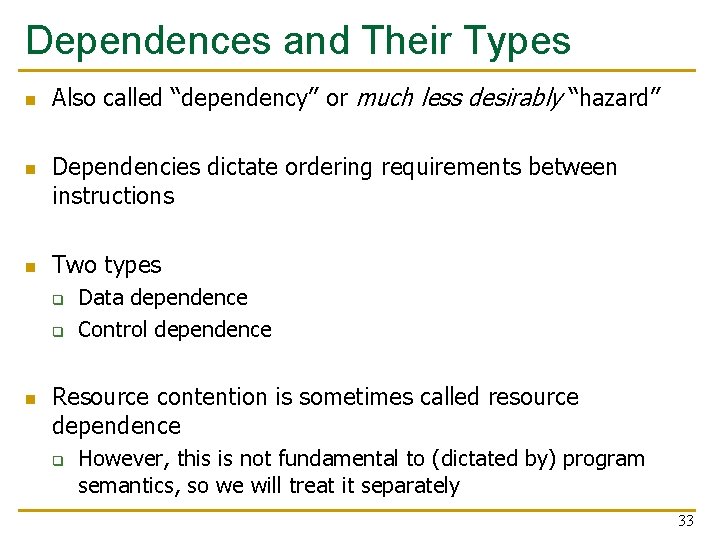

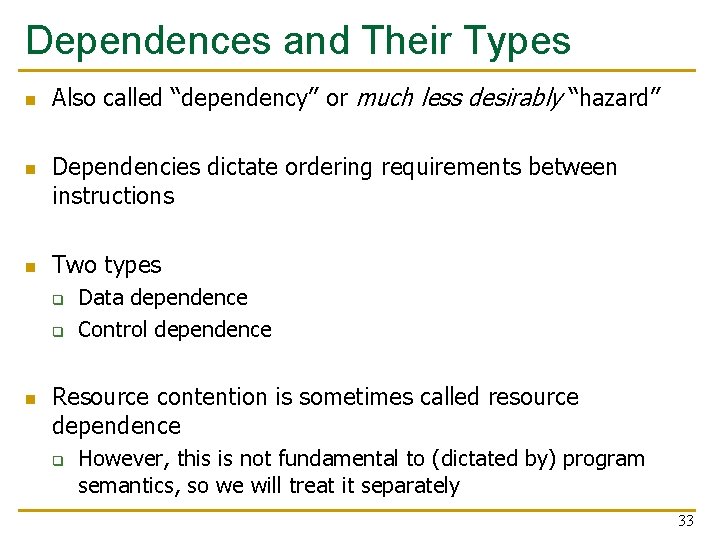

Dependences and Their Types n n n Also called “dependency” or much less desirably “hazard” Dependencies dictate ordering requirements between instructions Two types q q n Data dependence Control dependence Resource contention is sometimes called resource dependence q However, this is not fundamental to (dictated by) program semantics, so we will treat it separately 33

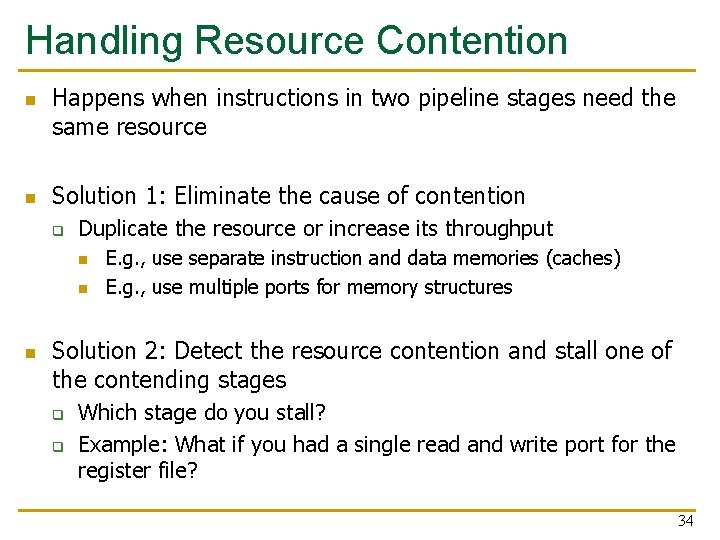

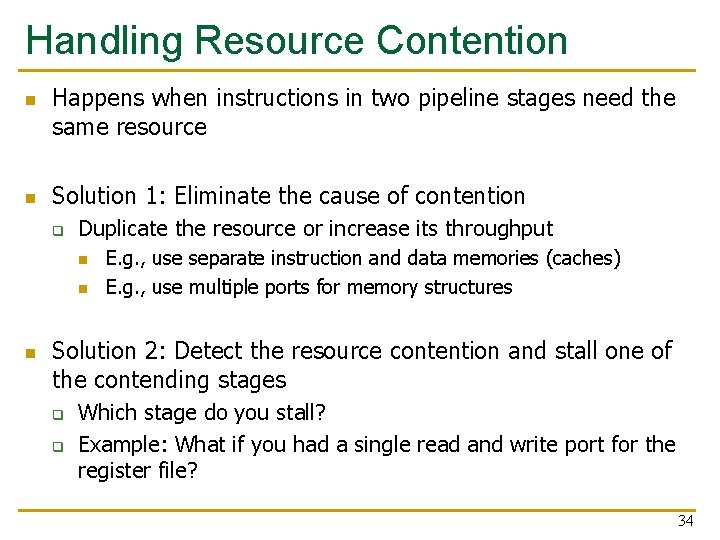

Handling Resource Contention n n Happens when instructions in two pipeline stages need the same resource Solution 1: Eliminate the cause of contention q Duplicate the resource or increase its throughput n n n E. g. , use separate instruction and data memories (caches) E. g. , use multiple ports for memory structures Solution 2: Detect the resource contention and stall one of the contending stages q q Which stage do you stall? Example: What if you had a single read and write port for the register file? 34

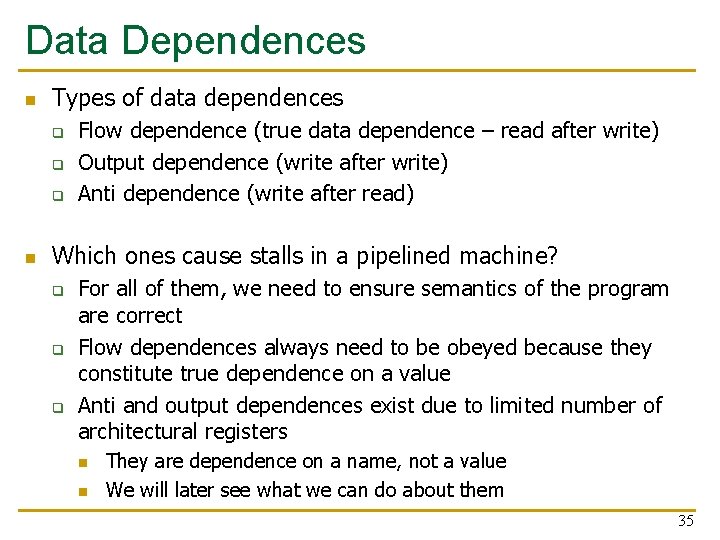

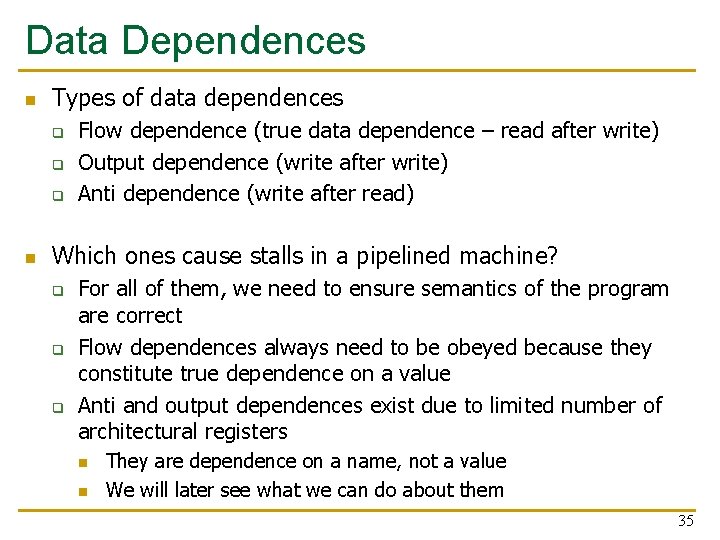

Data Dependences n Types of data dependences q q q n Flow dependence (true data dependence – read after write) Output dependence (write after write) Anti dependence (write after read) Which ones cause stalls in a pipelined machine? q q q For all of them, we need to ensure semantics of the program are correct Flow dependences always need to be obeyed because they constitute true dependence on a value Anti and output dependences exist due to limited number of architectural registers n n They are dependence on a name, not a value We will later see what we can do about them 35

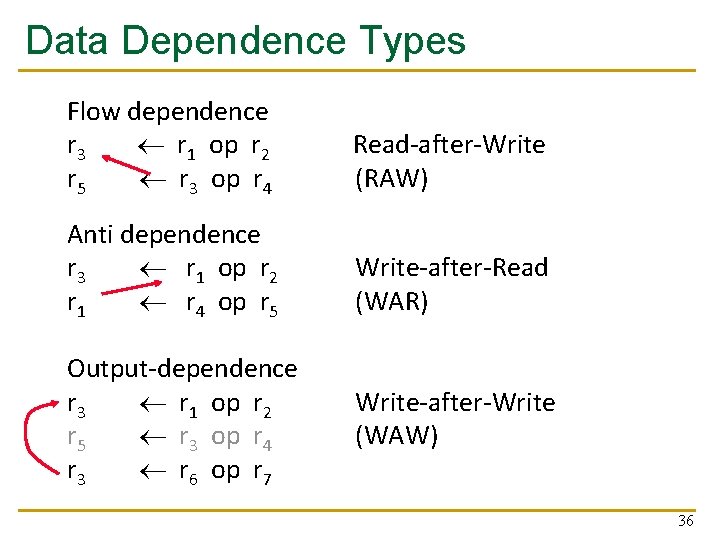

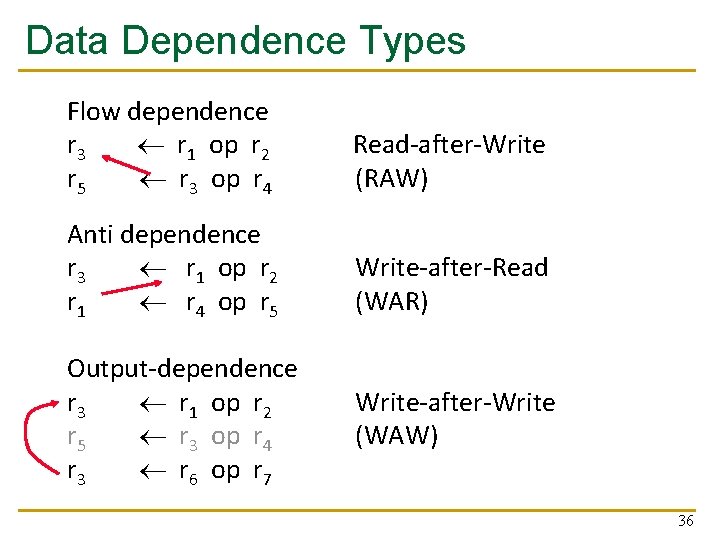

Data Dependence Types Flow dependence r 3 r 1 op r 2 r 5 r 3 op r 4 Read-after-Write (RAW) Anti dependence r 3 r 1 op r 2 r 1 r 4 op r 5 Write-after-Read (WAR) Output-dependence r 3 r 1 op r 2 r 5 r 3 op r 4 r 3 r 6 op r 7 Write-after-Write (WAW) 36

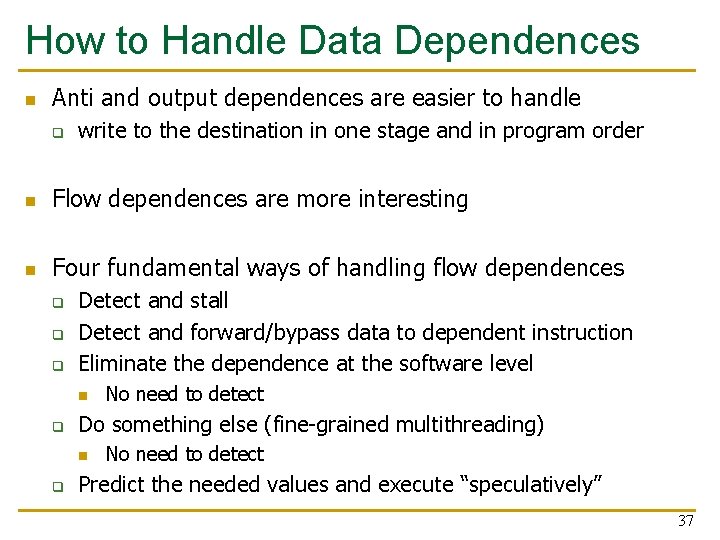

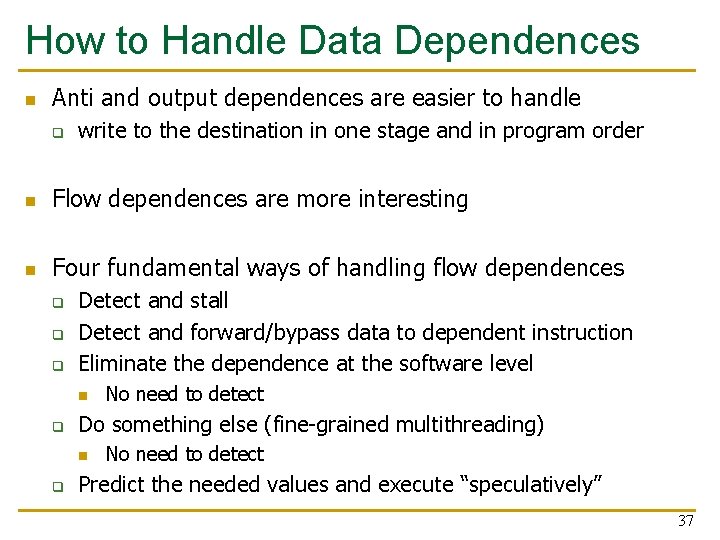

How to Handle Data Dependences n Anti and output dependences are easier to handle q write to the destination in one stage and in program order n Flow dependences are more interesting n Four fundamental ways of handling flow dependences q q q Detect and stall Detect and forward/bypass data to dependent instruction Eliminate the dependence at the software level n q Do something else (fine-grained multithreading) n q No need to detect Predict the needed values and execute “speculatively” 37

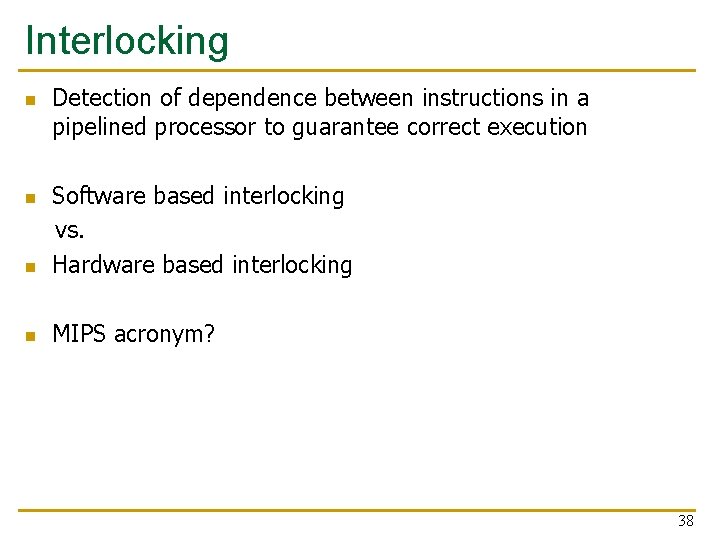

Interlocking n Detection of dependence between instructions in a pipelined processor to guarantee correct execution n Software based interlocking vs. Hardware based interlocking n MIPS acronym? n 38

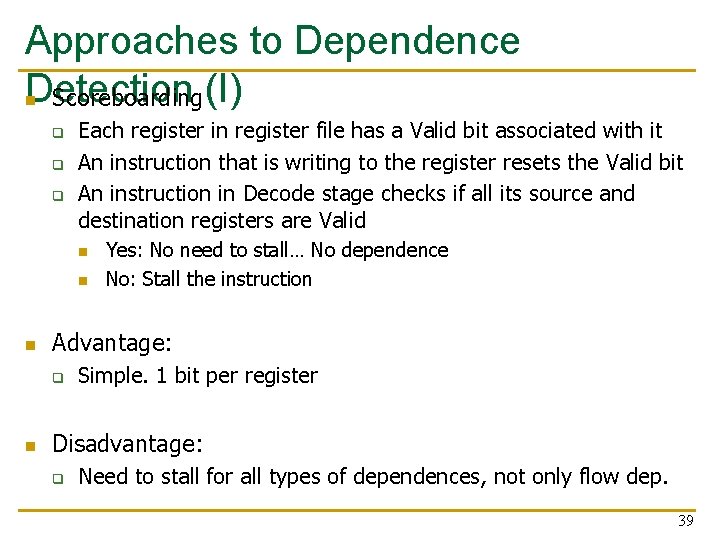

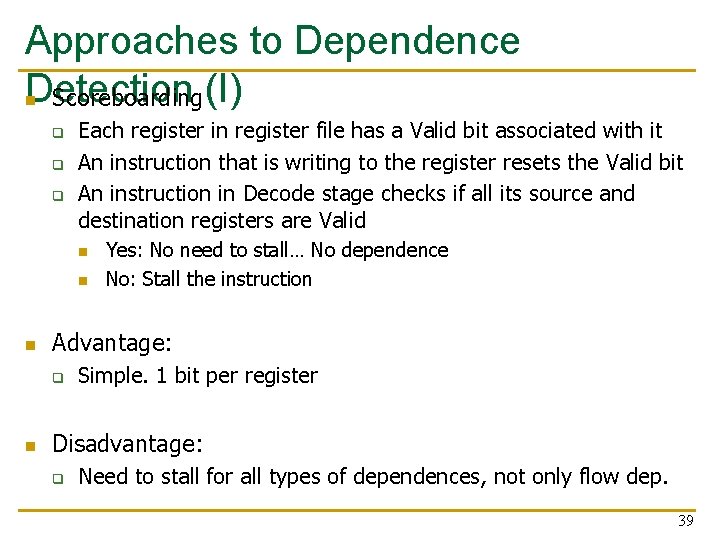

Approaches to Dependence Detection n Scoreboarding (I) q q q Each register in register file has a Valid bit associated with it An instruction that is writing to the register resets the Valid bit An instruction in Decode stage checks if all its source and destination registers are Valid n n n Advantage: q n Yes: No need to stall… No dependence No: Stall the instruction Simple. 1 bit per register Disadvantage: q Need to stall for all types of dependences, not only flow dep. 39

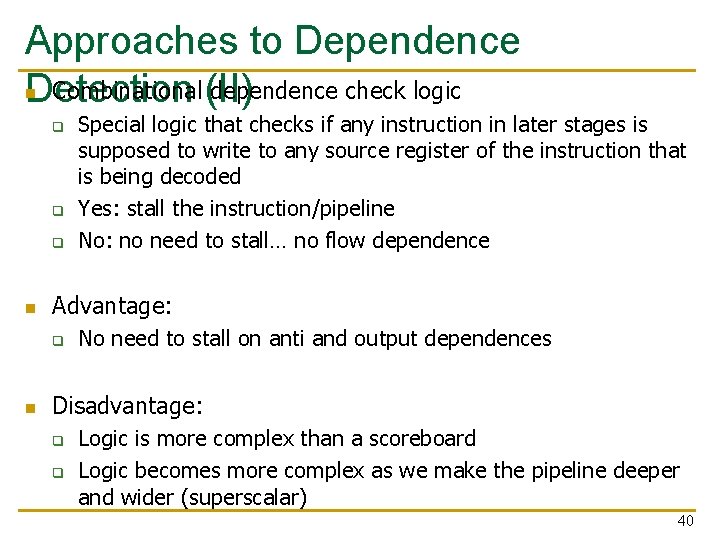

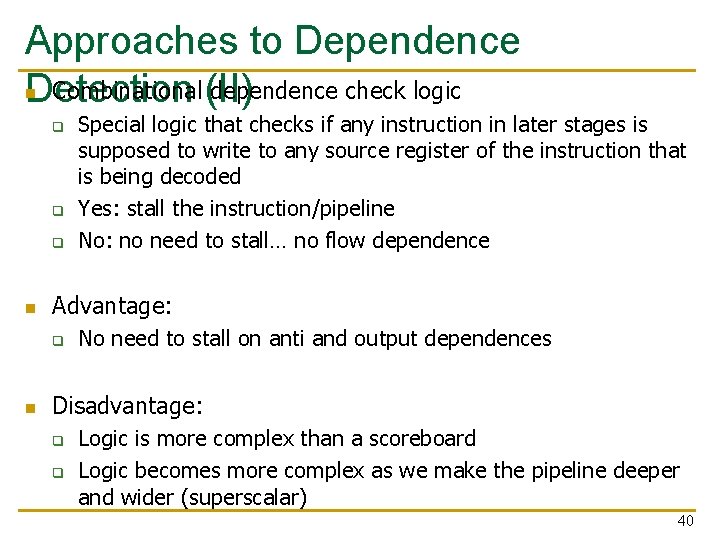

Approaches to Dependence n Combinational (II) dependence check logic Detection q q q n Advantage: q n Special logic that checks if any instruction in later stages is supposed to write to any source register of the instruction that is being decoded Yes: stall the instruction/pipeline No: no need to stall… no flow dependence No need to stall on anti and output dependences Disadvantage: q q Logic is more complex than a scoreboard Logic becomes more complex as we make the pipeline deeper and wider (superscalar) 40

We did not cover the following slides in lecture. These are for your preparation for the next lecture.

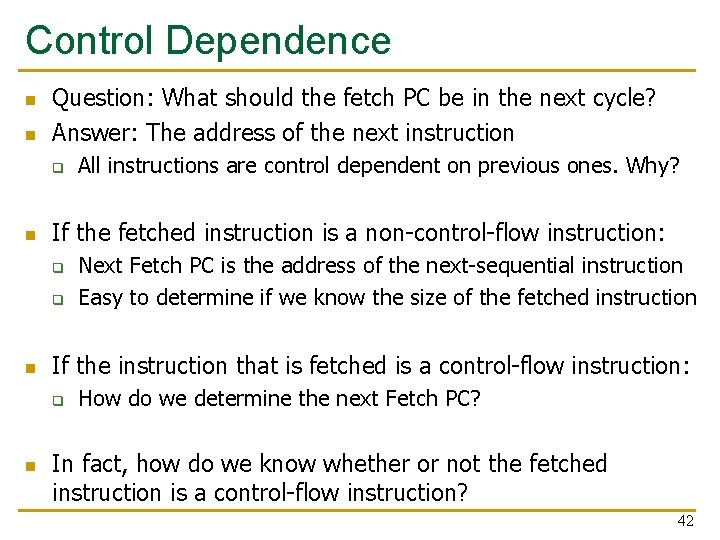

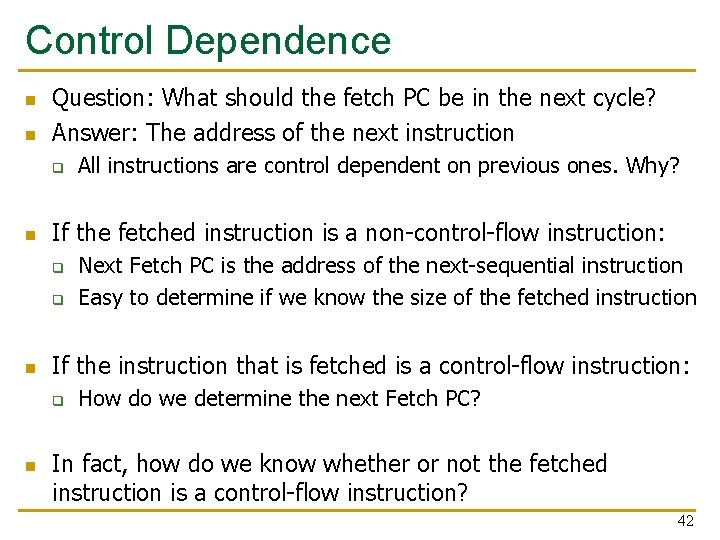

Control Dependence n n Question: What should the fetch PC be in the next cycle? Answer: The address of the next instruction q n If the fetched instruction is a non-control-flow instruction: q q n Next Fetch PC is the address of the next-sequential instruction Easy to determine if we know the size of the fetched instruction If the instruction that is fetched is a control-flow instruction: q n All instructions are control dependent on previous ones. Why? How do we determine the next Fetch PC? In fact, how do we know whether or not the fetched instruction is a control-flow instruction? 42

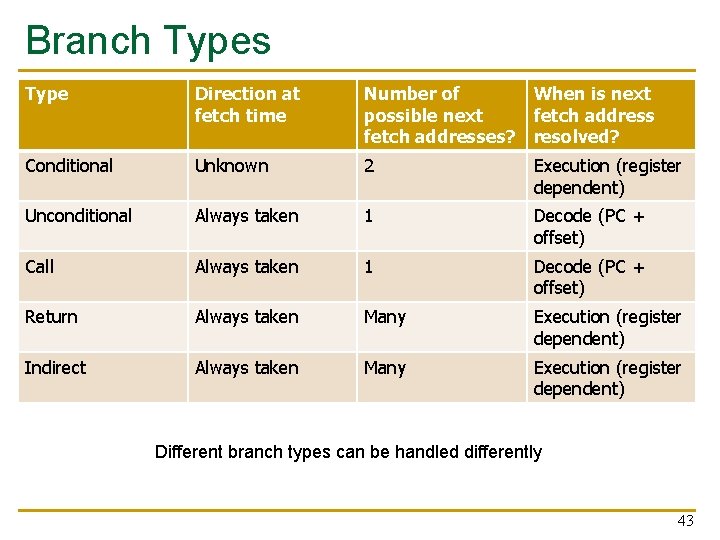

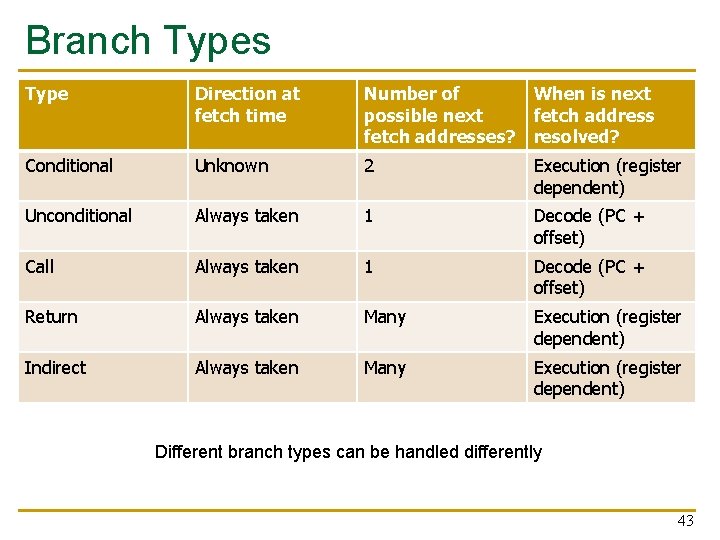

Branch Types Type Direction at fetch time Number of When is next possible next fetch addresses? resolved? Conditional Unknown 2 Execution (register dependent) Unconditional Always taken 1 Decode (PC + offset) Call Always taken 1 Decode (PC + offset) Return Always taken Many Execution (register dependent) Indirect Always taken Many Execution (register dependent) Different branch types can be handled differently 43

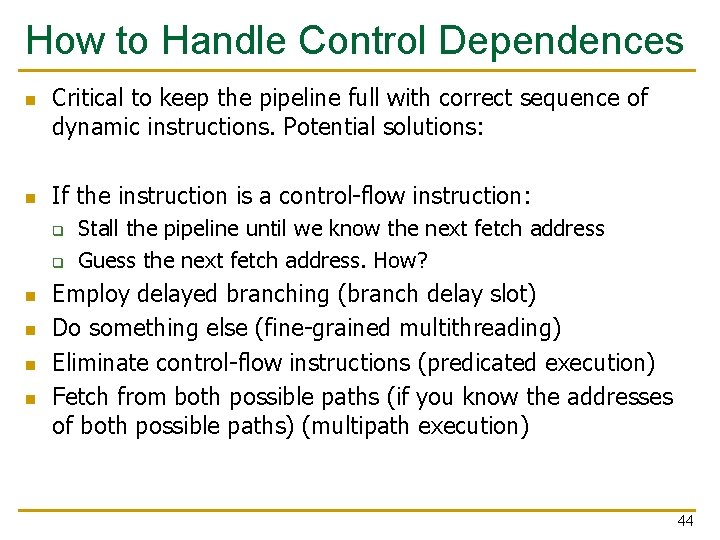

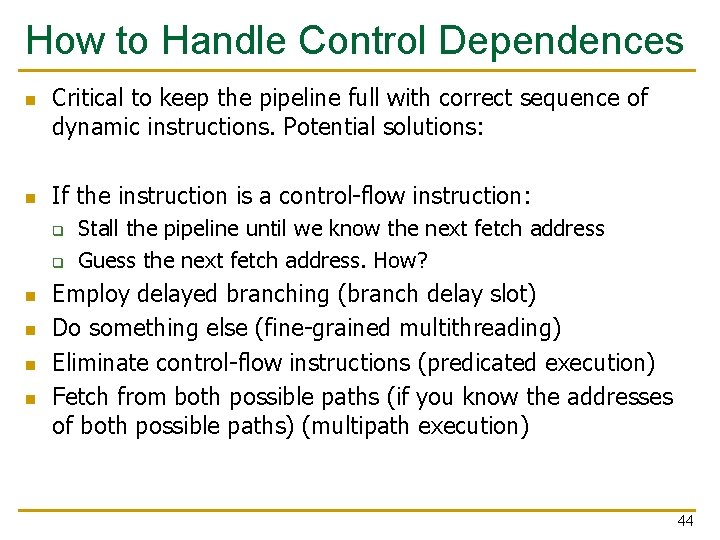

How to Handle Control Dependences n n Critical to keep the pipeline full with correct sequence of dynamic instructions. Potential solutions: If the instruction is a control-flow instruction: q q n n Stall the pipeline until we know the next fetch address Guess the next fetch address. How? Employ delayed branching (branch delay slot) Do something else (fine-grained multithreading) Eliminate control-flow instructions (predicated execution) Fetch from both possible paths (if you know the addresses of both possible paths) (multipath execution) 44

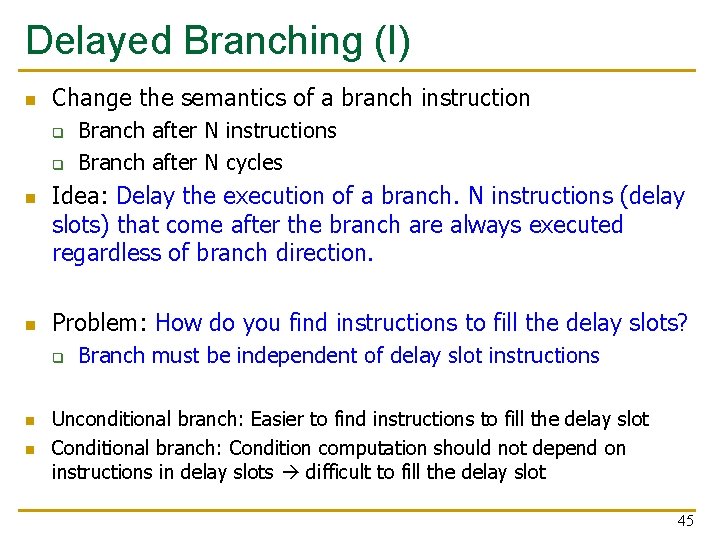

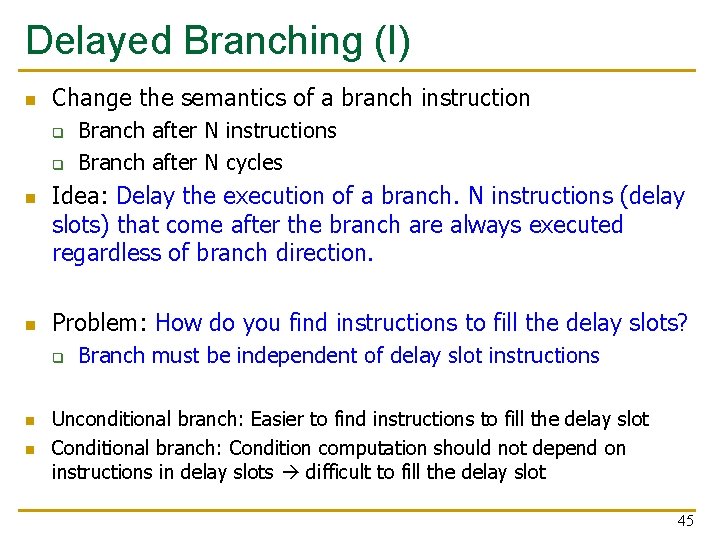

Delayed Branching (I) n Change the semantics of a branch instruction q q n n Idea: Delay the execution of a branch. N instructions (delay slots) that come after the branch are always executed regardless of branch direction. Problem: How do you find instructions to fill the delay slots? q n n Branch after N instructions Branch after N cycles Branch must be independent of delay slot instructions Unconditional branch: Easier to find instructions to fill the delay slot Conditional branch: Condition computation should not depend on instructions in delay slots difficult to fill the delay slot 45

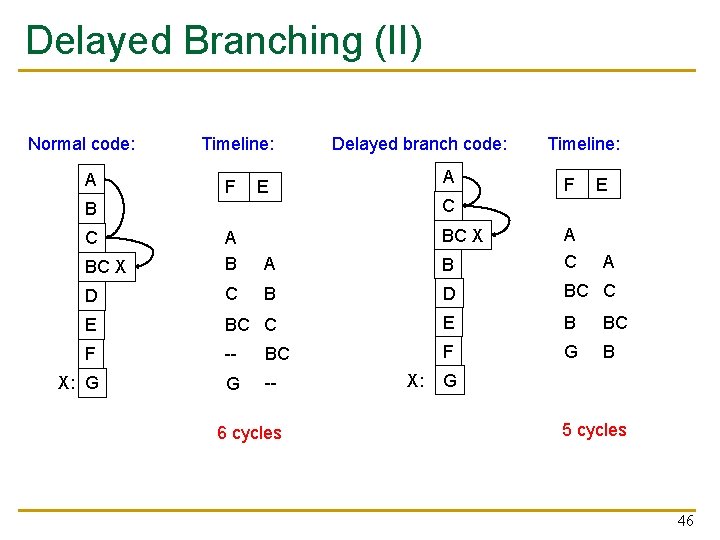

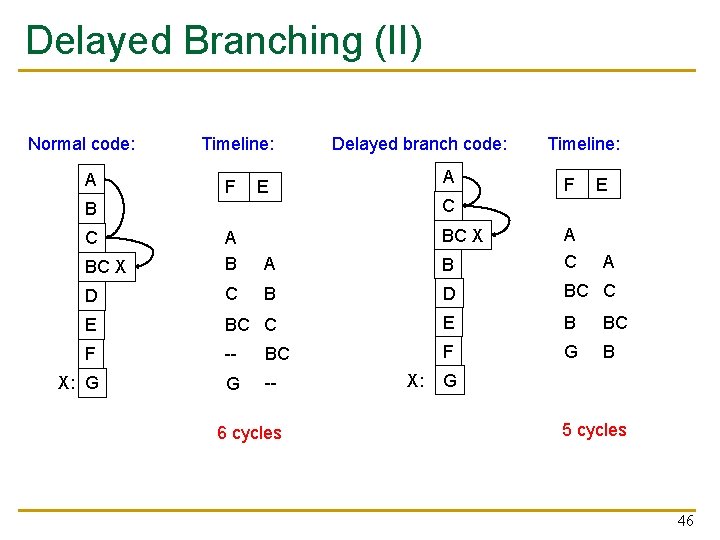

Delayed Branching (II) Normal code: A Timeline: F Delayed branch code: A E Timeline: F E C B BC X A B A C D C B D BC C E B BC F -- BC F G B X: G G -- C BC X 6 cycles X: A G 5 cycles 46

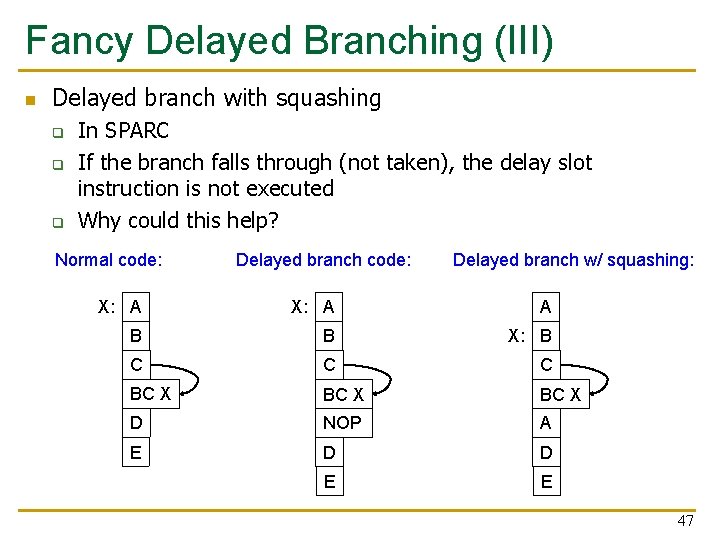

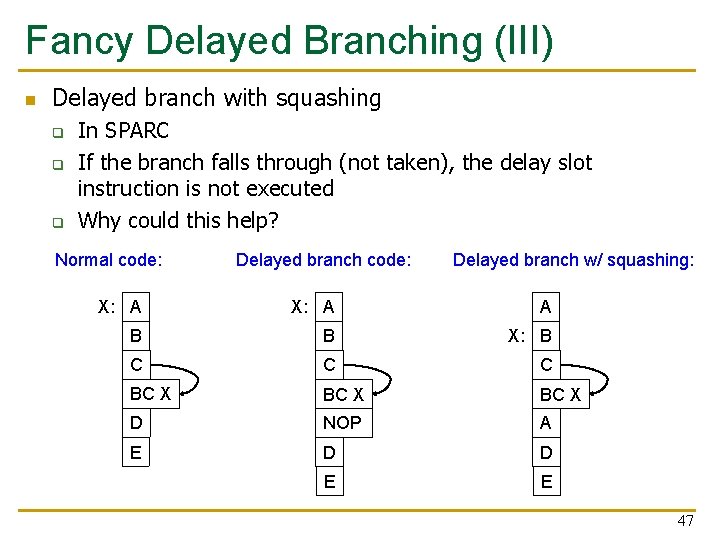

Fancy Delayed Branching (III) n Delayed branch with squashing q q q In SPARC If the branch falls through (not taken), the delay slot instruction is not executed Why could this help? Normal code: Delayed branch w/ squashing: X: A A B B X: B C C C BC X D NOP A E D D E E 47

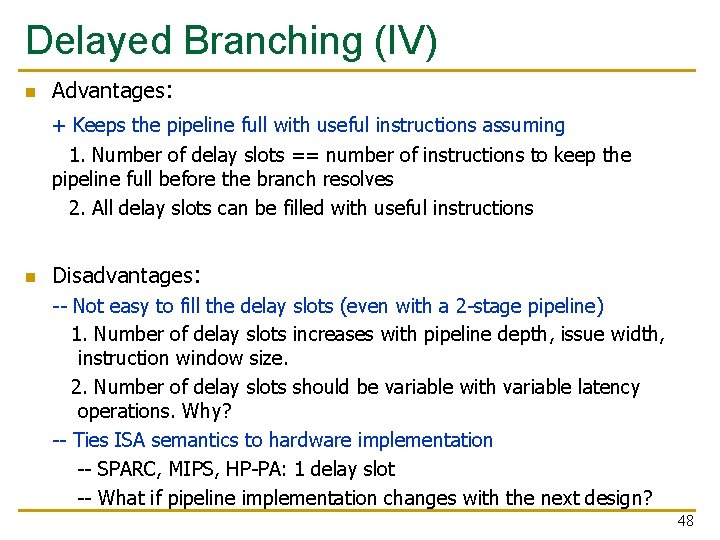

Delayed Branching (IV) n Advantages: + Keeps the pipeline full with useful instructions assuming 1. Number of delay slots == number of instructions to keep the pipeline full before the branch resolves 2. All delay slots can be filled with useful instructions n Disadvantages: -- Not easy to fill the delay slots (even with a 2 -stage pipeline) 1. Number of delay slots increases with pipeline depth, issue width, instruction window size. 2. Number of delay slots should be variable with variable latency operations. Why? -- Ties ISA semantics to hardware implementation -- SPARC, MIPS, HP-PA: 1 delay slot -- What if pipeline implementation changes with the next design? 48

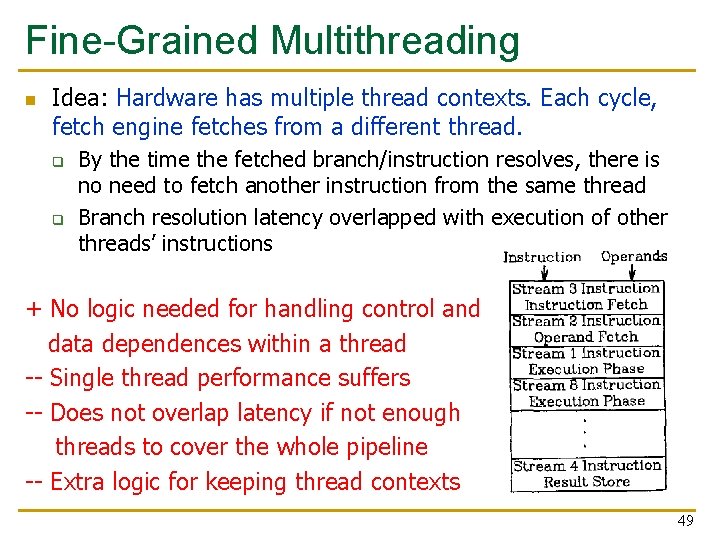

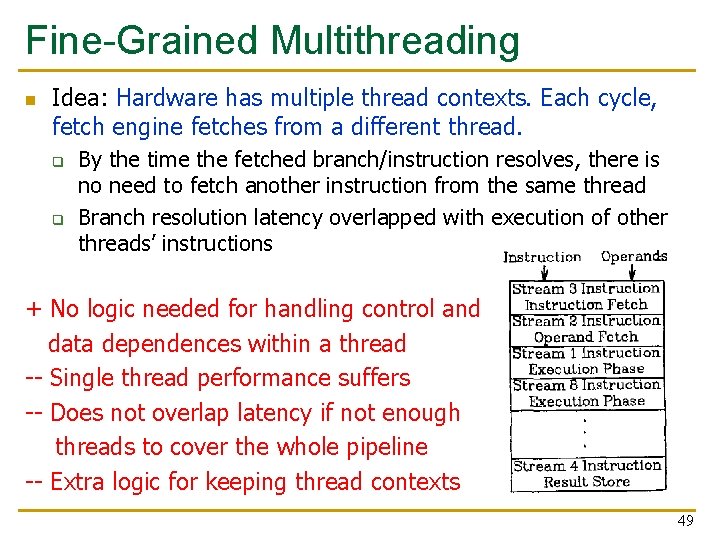

Fine-Grained Multithreading n Idea: Hardware has multiple thread contexts. Each cycle, fetch engine fetches from a different thread. q q By the time the fetched branch/instruction resolves, there is no need to fetch another instruction from the same thread Branch resolution latency overlapped with execution of other threads’ instructions + No logic needed for handling control and data dependences within a thread -- Single thread performance suffers -- Does not overlap latency if not enough threads to cover the whole pipeline -- Extra logic for keeping thread contexts 49

Pipelining the LC-3 b 50

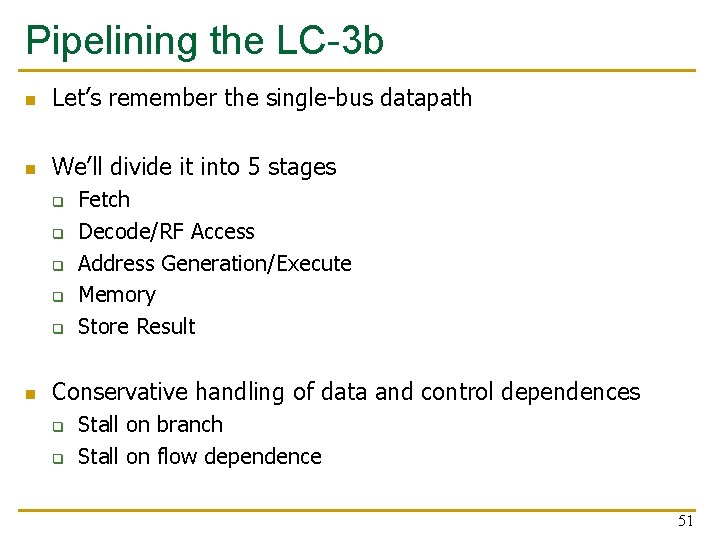

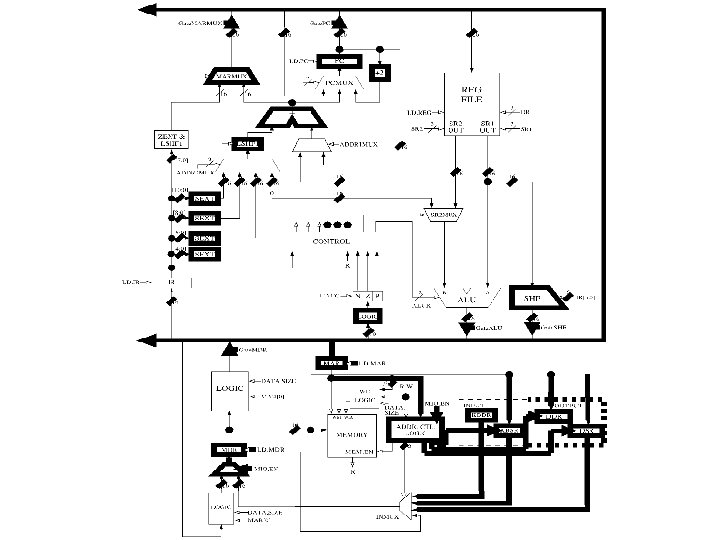

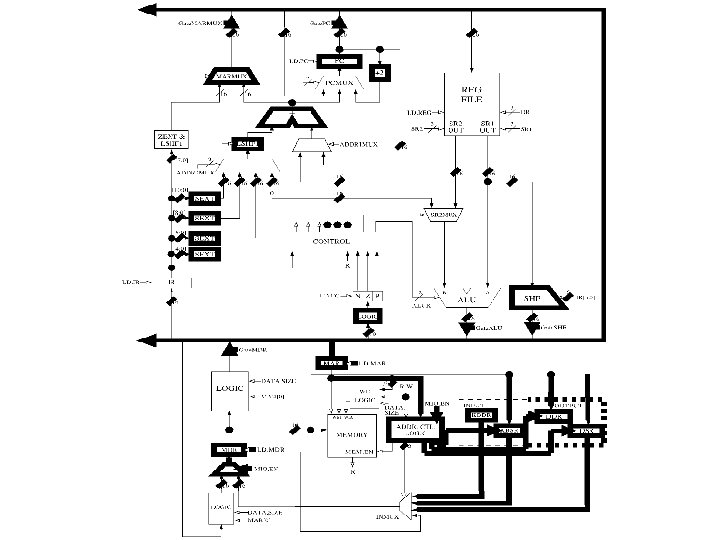

Pipelining the LC-3 b n Let’s remember the single-bus datapath n We’ll divide it into 5 stages q q q n Fetch Decode/RF Access Address Generation/Execute Memory Store Result Conservative handling of data and control dependences q q Stall on branch Stall on flow dependence 51

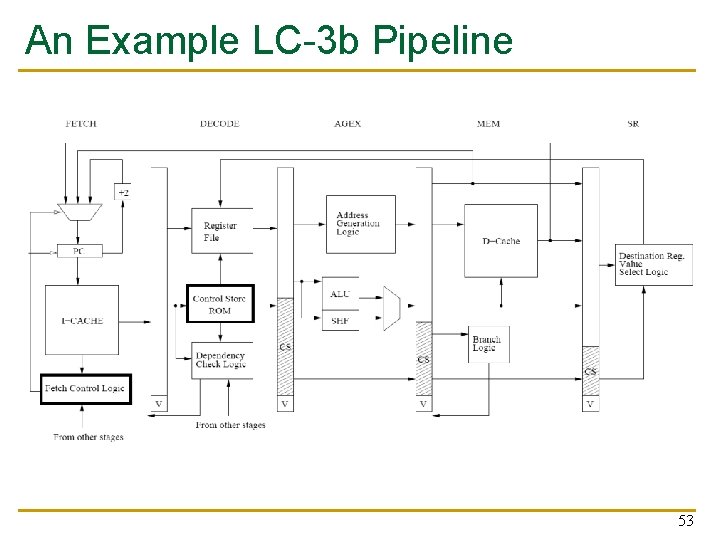

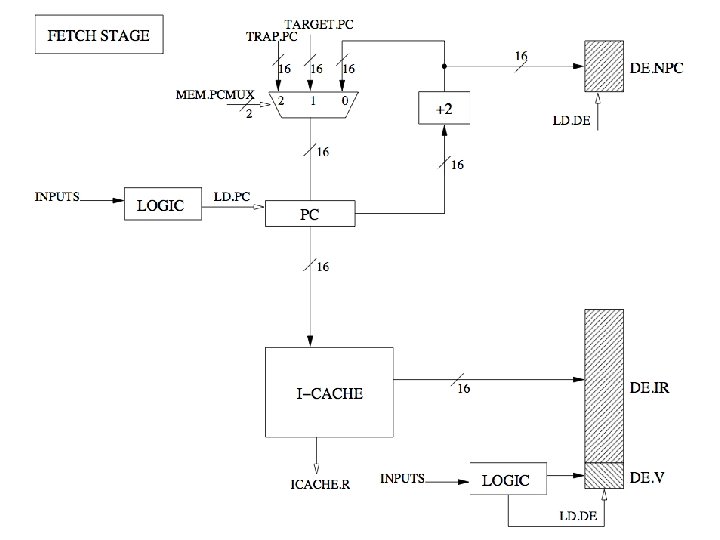

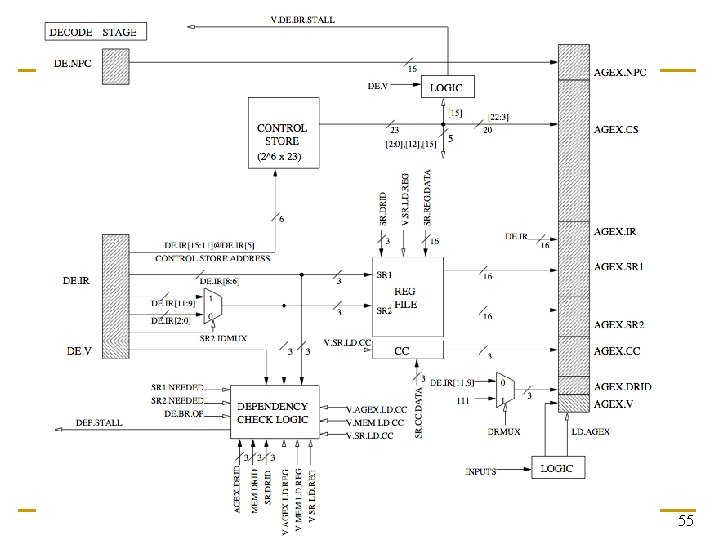

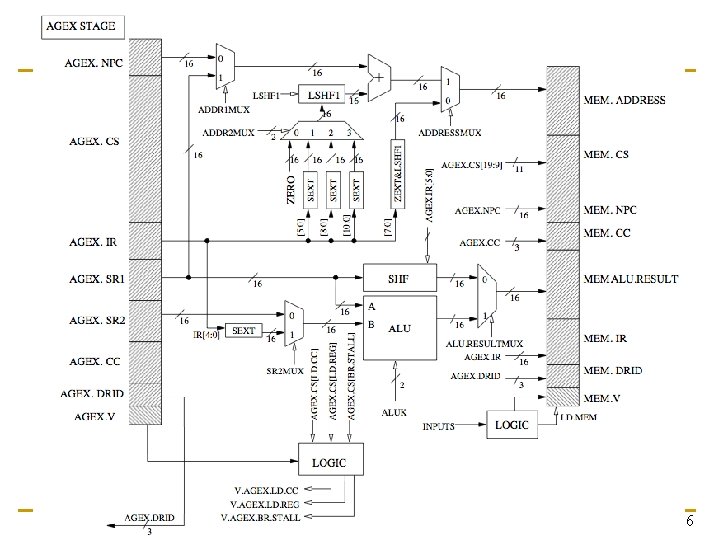

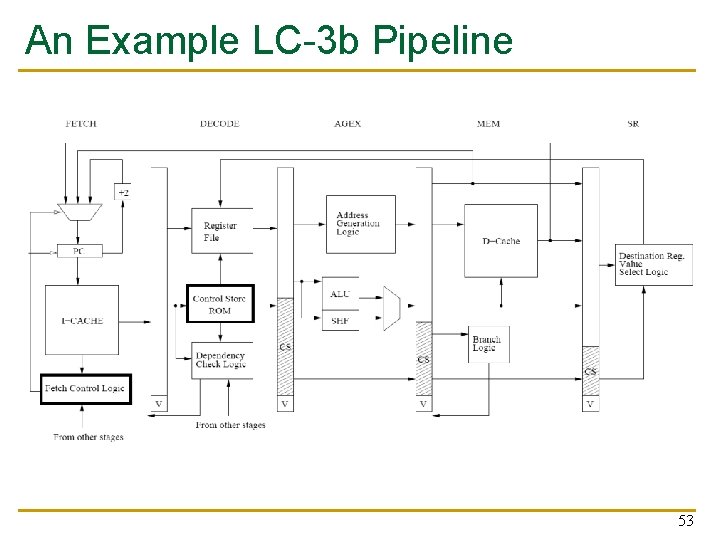

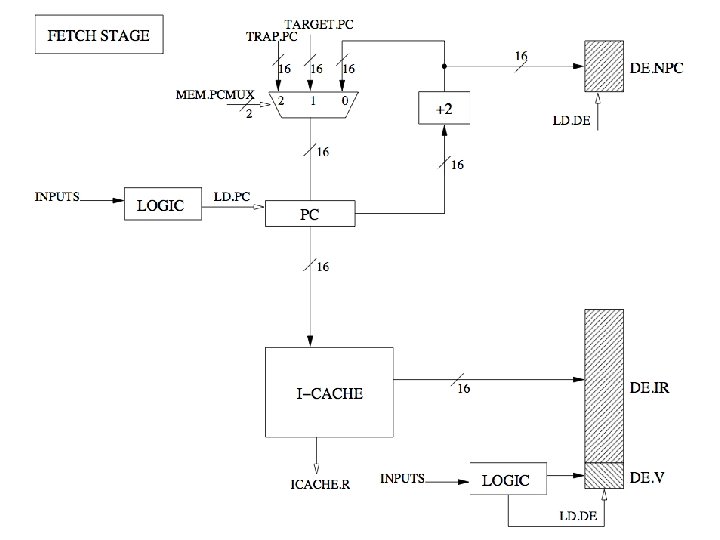

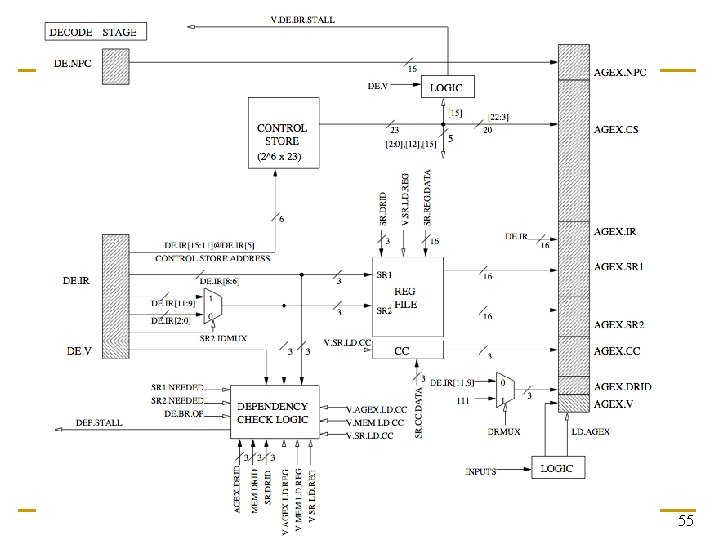

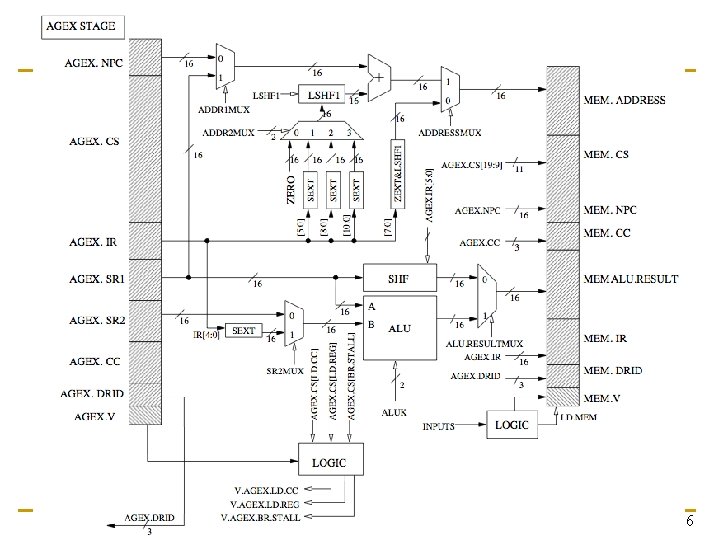

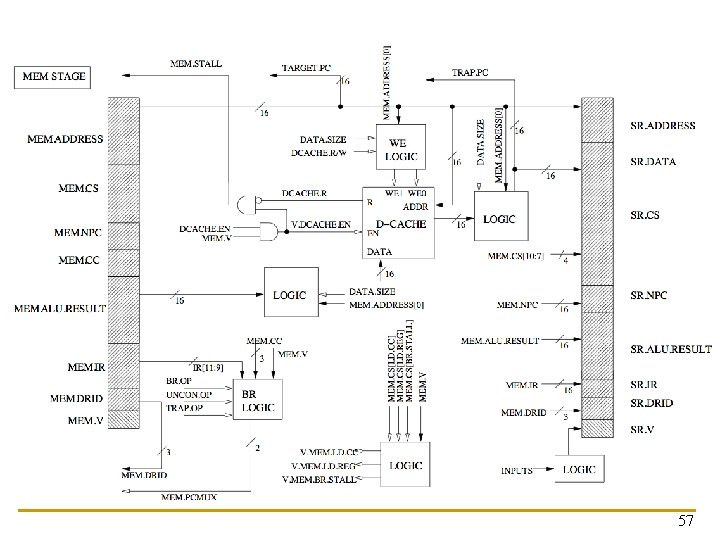

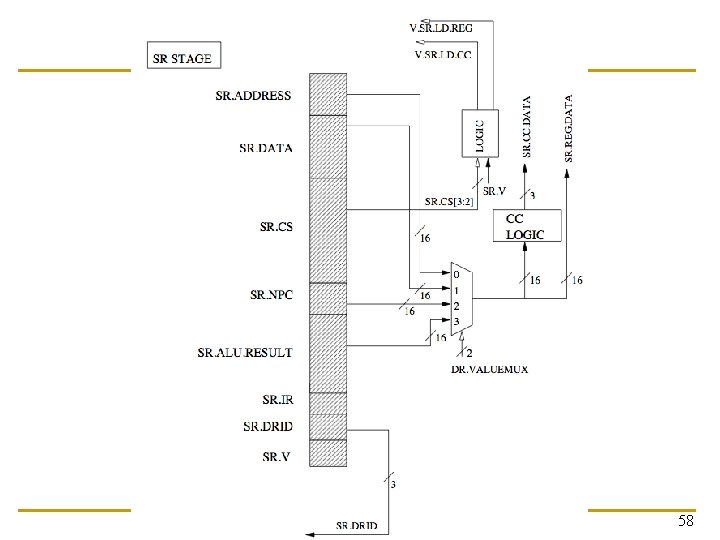

An Example LC-3 b Pipeline 53

54

55

56

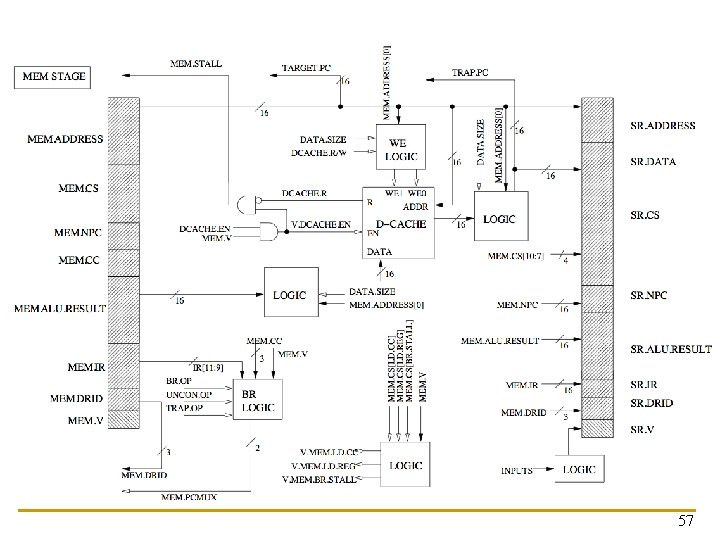

57

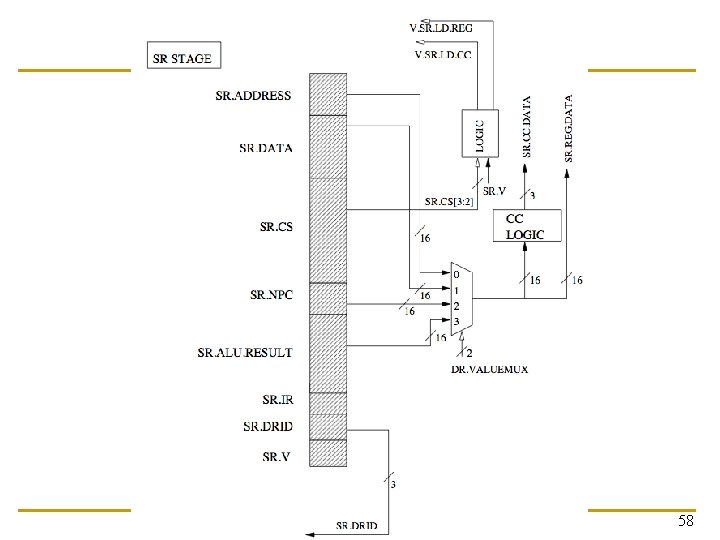

58

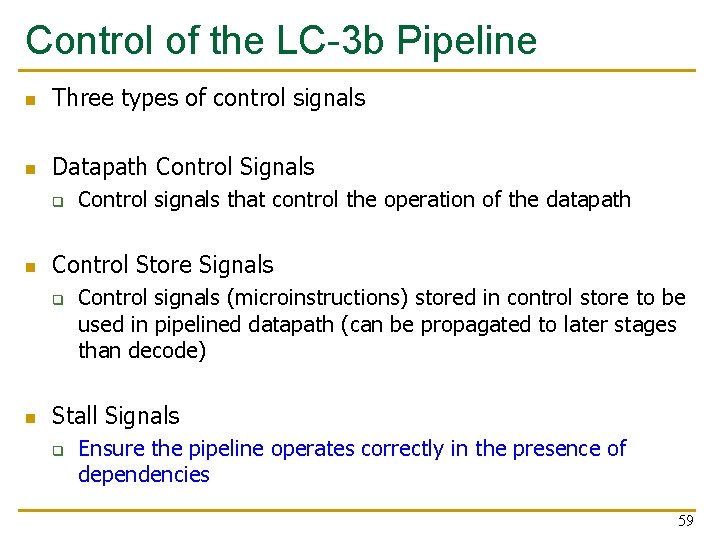

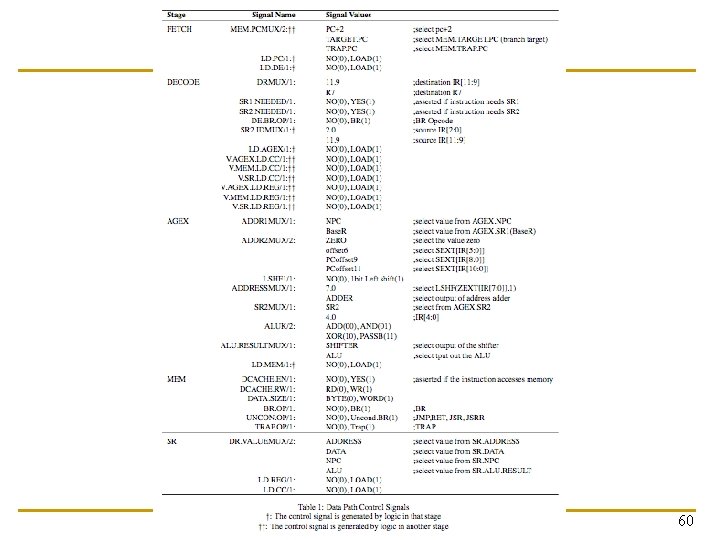

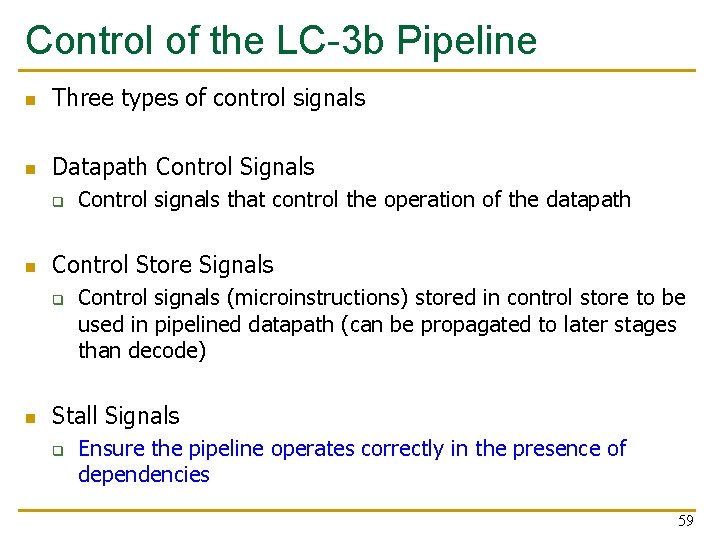

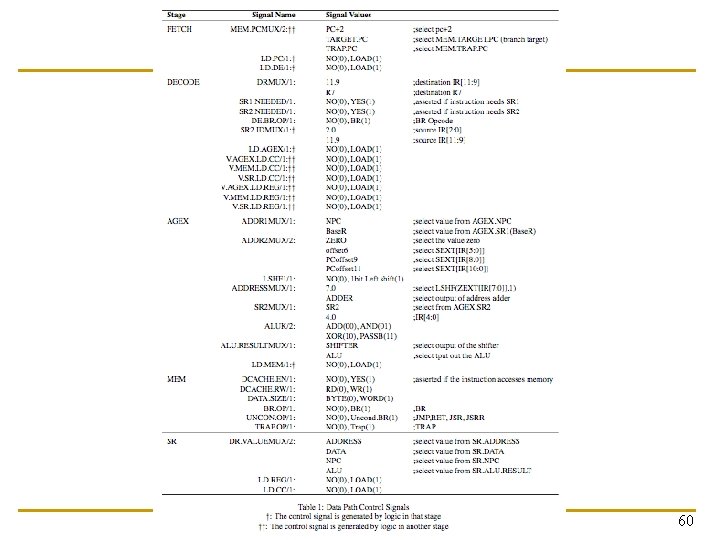

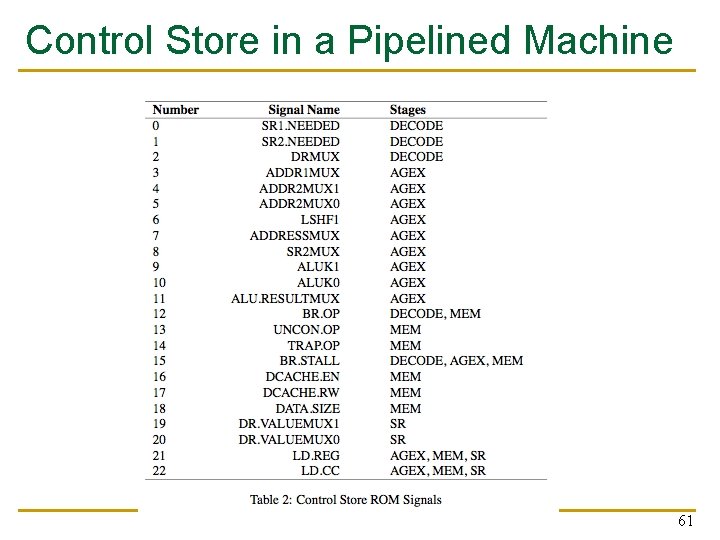

Control of the LC-3 b Pipeline n Three types of control signals n Datapath Control Signals q n Control Store Signals q n Control signals that control the operation of the datapath Control signals (microinstructions) stored in control store to be used in pipelined datapath (can be propagated to later stages than decode) Stall Signals q Ensure the pipeline operates correctly in the presence of dependencies 59

60

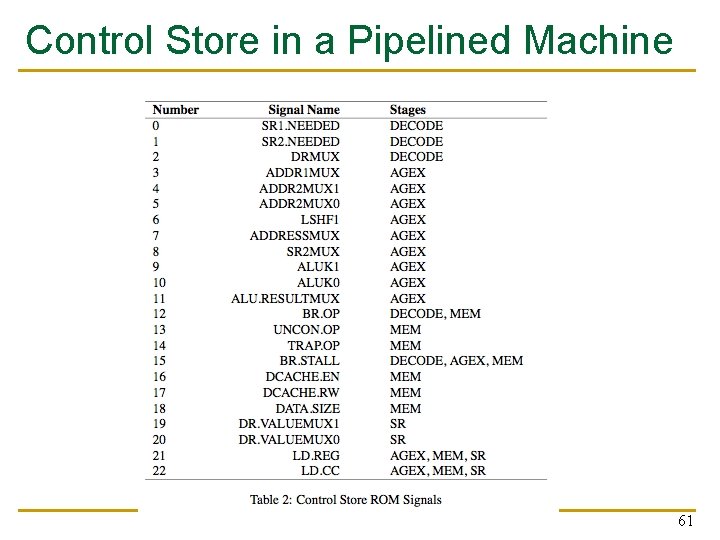

Control Store in a Pipelined Machine 61

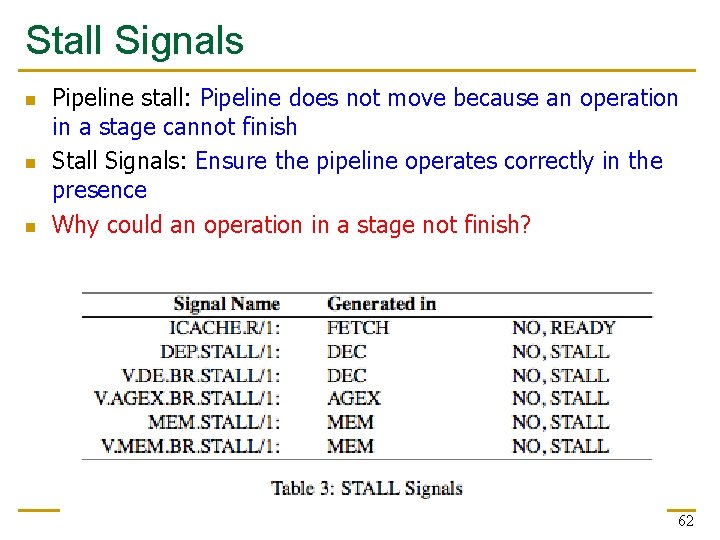

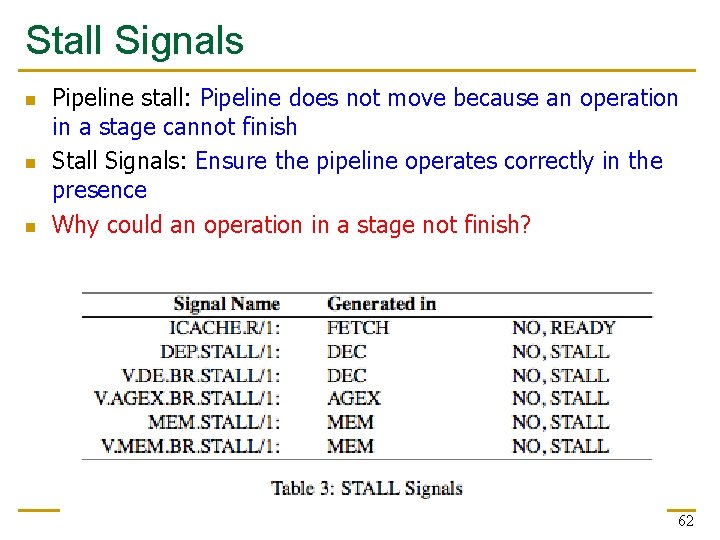

Stall Signals n n n Pipeline stall: Pipeline does not move because an operation in a stage cannot finish Stall Signals: Ensure the pipeline operates correctly in the presence Why could an operation in a stage not finish? 62