12. TCP Flow Control and Congestion Control – Part 1

- Slides: 32

- TCP flow control
- Congestion control: general principles
- TCP congestion control overview
- TCP congestion control specifics

Roch Guerin (with adaptations from Jon Turner and John DeHart, and material from Kurose and Ross)

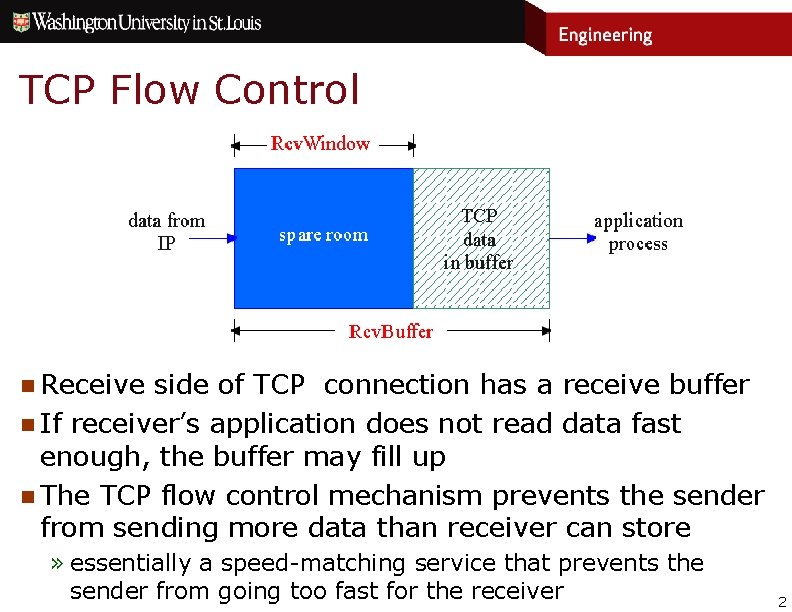

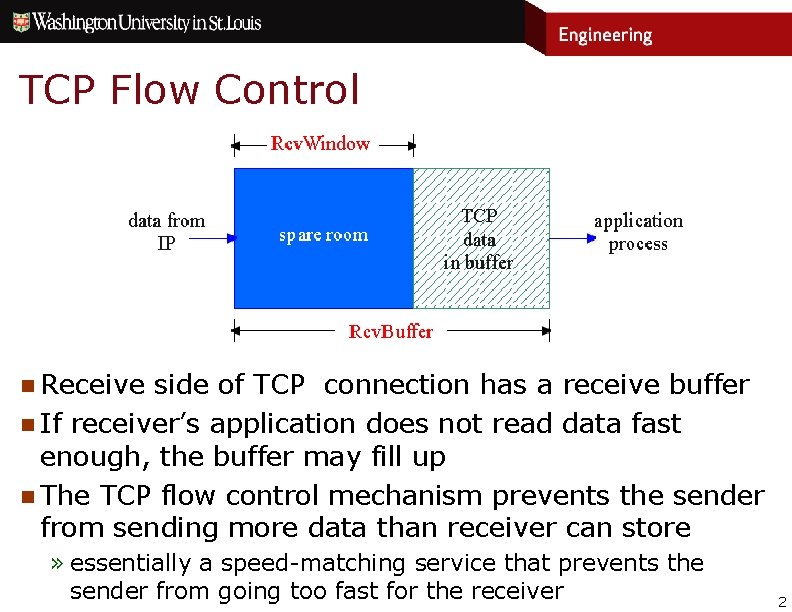

TCP Flow Control

- The receive side of a TCP connection has a receive buffer.
- If the receiving application does not read data fast enough, the buffer may fill up.
- The TCP flow control mechanism prevents the sender from sending more data than the receiver can store.
  - It is essentially a speed-matching service that keeps the sender from going too fast for the receiver.

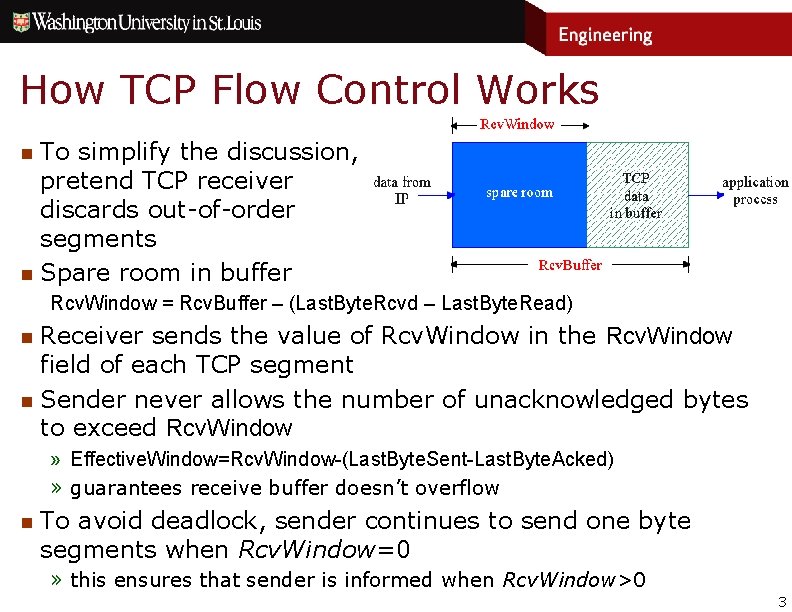

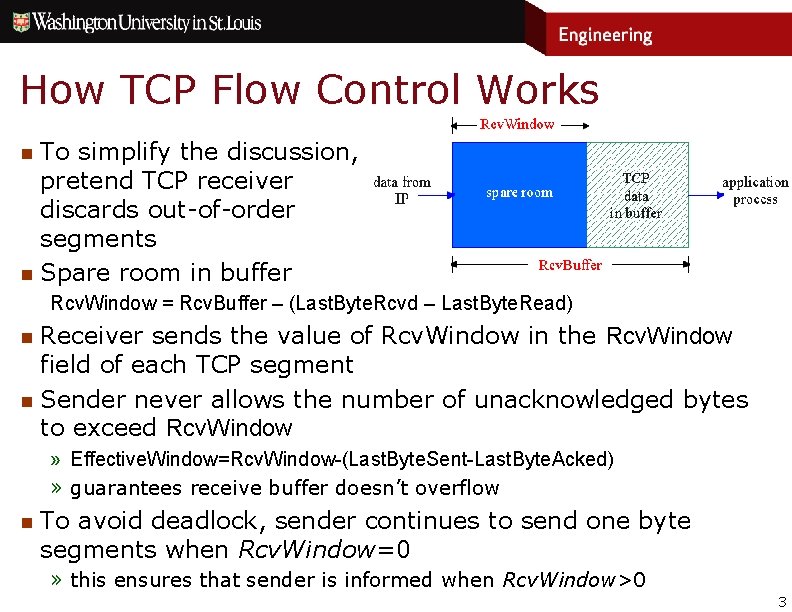

How TCP Flow Control Works

To simplify the discussion, pretend that the TCP receiver discards out-of-order segments.

- Spare room in the buffer:
  - RcvWindow = RcvBuffer - (LastByteRcvd - LastByteRead)
- The receiver sends the value of RcvWindow in the receive-window field of each TCP segment.
- The sender never allows the number of unacknowledged bytes to exceed RcvWindow:
  - EffectiveWindow = RcvWindow - (LastByteSent - LastByteAcked)
  - this guarantees that the receive buffer doesn't overflow
- To avoid deadlock, the sender continues to send one-byte segments when RcvWindow = 0.
  - this ensures that the sender is informed when RcvWindow > 0
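The two window formulas can be written out directly. The sketch below assumes plain byte counters named after the slide's variables; the helper names (`rcv_window`, `effective_window`, `bytes_to_send`) are hypothetical. It also includes the one-byte probe the sender uses when the advertised window is zero, following the simplified rule above rather than a full persist-timer implementation.

```python
def rcv_window(rcv_buffer: int, last_byte_rcvd: int, last_byte_read: int) -> int:
    """Spare room the receiver advertises: RcvBuffer - (LastByteRcvd - LastByteRead)."""
    return rcv_buffer - (last_byte_rcvd - last_byte_read)


def effective_window(rcv_win: int, last_byte_sent: int, last_byte_acked: int) -> int:
    """New bytes the sender may still send: RcvWindow - (LastByteSent - LastByteAcked)."""
    return max(0, rcv_win - (last_byte_sent - last_byte_acked))


def bytes_to_send(pending: int, rcv_win: int, last_byte_sent: int, last_byte_acked: int) -> int:
    """How many new bytes the sender may transmit right now (hypothetical helper)."""
    if rcv_win == 0:
        # Zero window: send a 1-byte segment so that a later ACK can report RcvWindow > 0.
        return min(pending, 1)
    return min(pending, effective_window(rcv_win, last_byte_sent, last_byte_acked))


# Receiver: 4096-byte buffer, 3000 bytes received, 1000 read by the application.
win = rcv_window(4096, 3000, 1000)
# Sender: 1000 bytes are still unacknowledged, so only part of the window is usable.
print(win, bytes_to_send(10_000, win, 5000, 4000))
```

Here the receiver advertises 2096 bytes, but the sender can only add 1096 new bytes because 1000 bytes of the window are already in flight.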

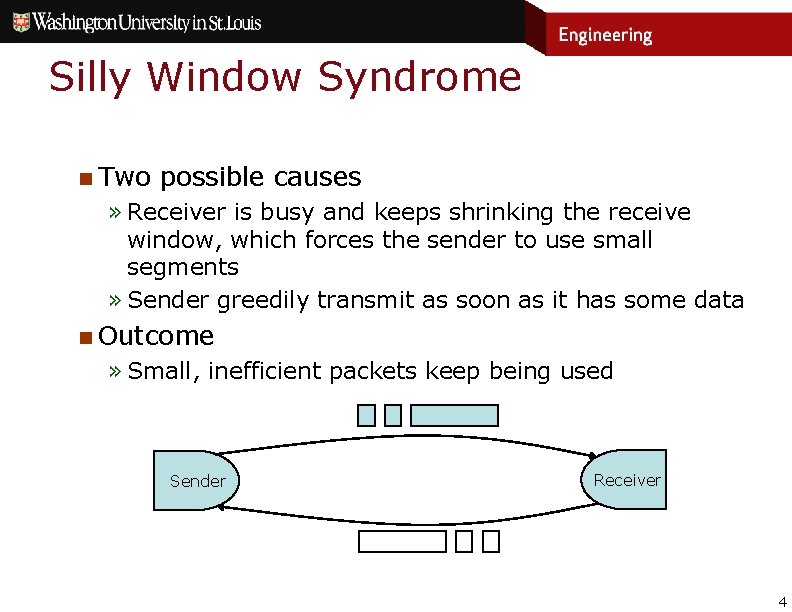

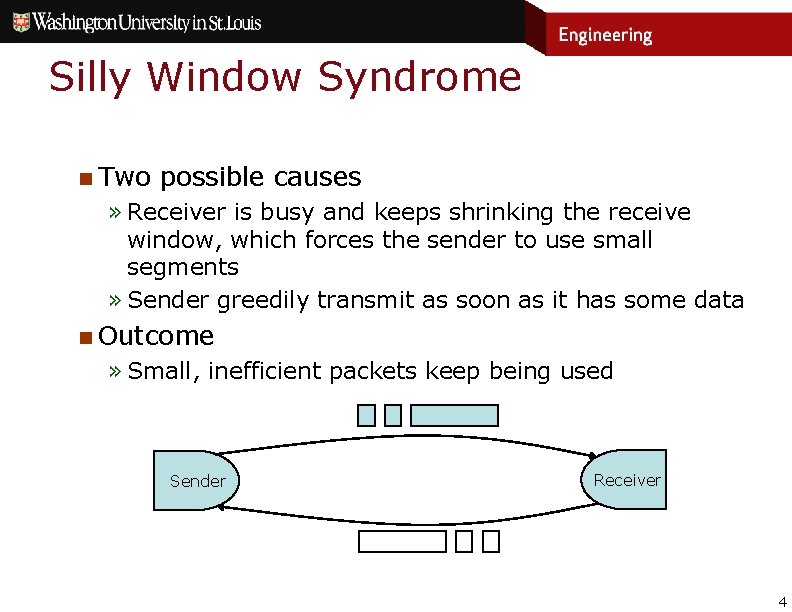

Silly Window Syndrome

- Two possible causes:
  - the receiver is busy and keeps shrinking the receive window, which forces the sender to use small segments
  - the sender greedily transmits as soon as it has some data
- Outcome:
  - small, inefficient packets keep being used

Silly Window Syndrome Solutions

- Receiver-side solution:
  - the advertised receive window is either zero or larger than a minimum size (usually one MSS)
  - this delays “acknowledgements” but does not hurt sender efficiency much (e.g., sending 20 bytes every 1 ms vs. sending 1,500 bytes every 75 ms: both average 20,000 bytes/s, but the second uses far fewer packets)
- Sender-side solution:
  - Nagle’s algorithm
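The receiver-side rule and the throughput comparison above can be checked with a few lines. This is a minimal sketch assuming, as the slide suggests, that the minimum advertised size is one MSS; the function names and the 1,500-byte MSS are illustrative assumptions.

```python
def advertised_window(free_space: int, mss: int) -> int:
    """Receiver-side silly window avoidance: report 0 until at least one MSS is free."""
    # Assumption: the minimum advertised size is one MSS, the usual choice per the slide.
    return free_space if free_space >= mss else 0


def rate_bytes_per_s(segment_bytes: int, interval_ms: float) -> float:
    """Average throughput of one segment sent every interval_ms milliseconds."""
    return segment_bytes * 1000 / interval_ms


# The application frees 20 bytes per ms; the receiver keeps advertising 0
# until 1,500 bytes are free, i.e., for 75 ms.
mss = 1500
print([advertised_window(20 * t, mss) for t in (1, 10, 74, 75)])
print(rate_bytes_per_s(20, 1), rate_bytes_per_s(1500, 75))
```

Both rates come out to 20,000 bytes/s, which is why withholding small window updates costs little in throughput.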

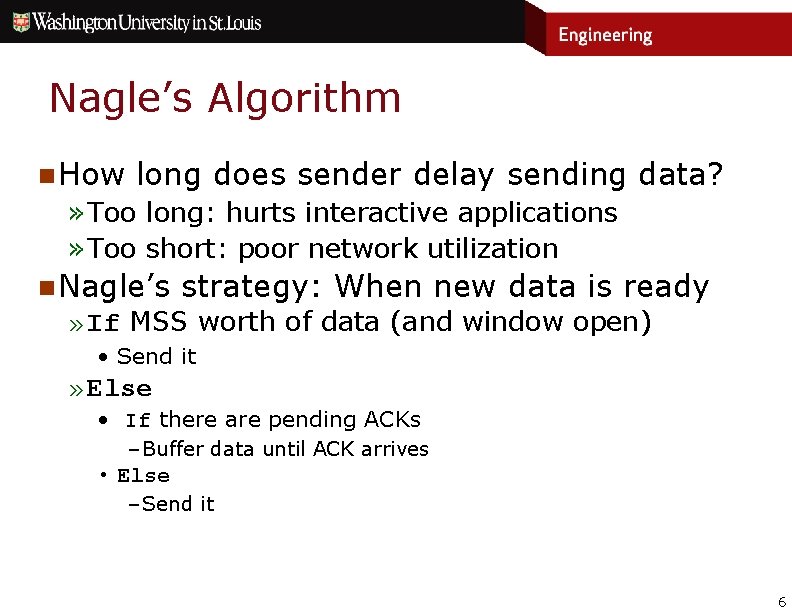

Nagle’s Algorithm

- How long does the sender delay sending data?
  - too long: hurts interactive applications
  - too short: poor network utilization
- Nagle’s strategy, when new data is ready:
  - if an MSS worth of data is available (and the window is open): send it
  - else:
    - if there are pending ACKs: buffer the data until an ACK arrives
    - else: send it
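The decision rule above fits in a single function. This is a sketch of the slide's three-way test only; the function name and parameters are hypothetical, and a real stack would also track timers and partial sends.

```python
def nagle_should_send(buffered: int, mss: int, window: int, unacked: int) -> bool:
    """Nagle's rule for newly arrived data: True means transmit now, False means buffer."""
    if buffered >= mss and window >= mss:
        return True   # a full segment is ready and the window is open: send it
    if unacked > 0:
        return False  # small data with ACKs pending: coalesce until an ACK arrives
    return True       # nothing in flight: send the small segment right away


# A 1-byte keystroke while 500 bytes are unacknowledged is held back.
print(nagle_should_send(buffered=1, mss=1460, window=65535, unacked=500))
```

Because at most one small segment is outstanding at a time, the extra delay is bounded by roughly one RTT: on a fast path ACKs return quickly and small segments still flow, while on a slow path they are coalesced into larger ones.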

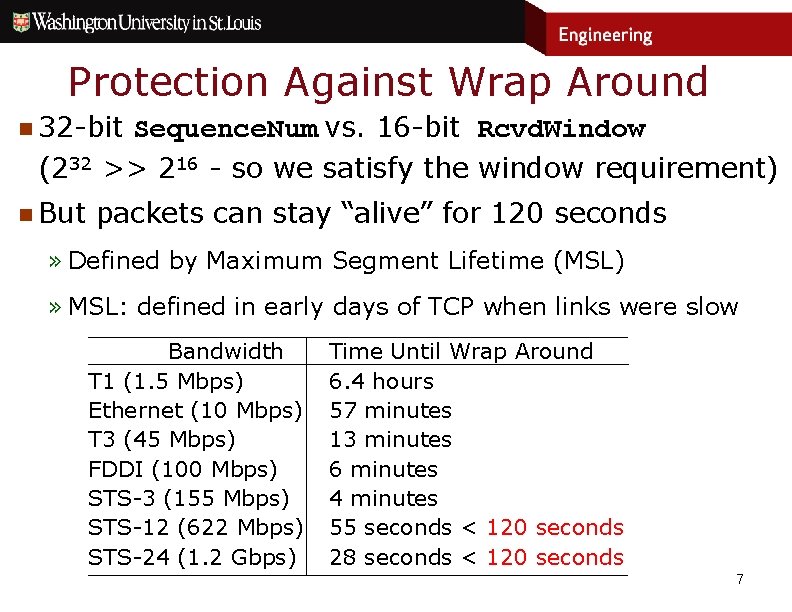

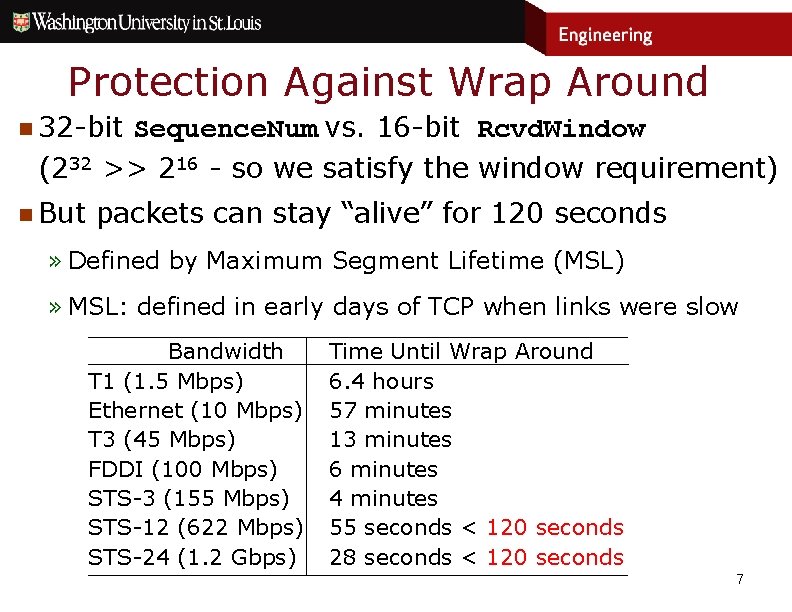

Protection Against Wrap Around

- 32-bit SequenceNum vs. 16-bit RcvWindow ($2^{32} \gg 2^{16}$, so we satisfy the window requirement)
- But packets can stay “alive” for 120 seconds
  - defined by the Maximum Segment Lifetime (MSL)
  - the MSL was defined in the early days of TCP, when links were slow
- On fast links the sequence space can wrap around within one MSL, so an old duplicate segment could be mistaken for new data

| Bandwidth | Time until wrap-around |
|---|---|
| T1 (1.5 Mbps) | 6.4 hours |
| Ethernet (10 Mbps) | 57 minutes |
| T3 (45 Mbps) | 13 minutes |
| FDDI (100 Mbps) | 6 minutes |
| STS-3 (155 Mbps) | 4 minutes |
| STS-12 (622 Mbps) | 55 seconds (< 120 seconds) |
| STS-24 (1.2 Gbps) | 28 seconds (< 120 seconds) |
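The wrap-around times in the table follow from dividing the $2^{32}$-byte sequence space by the link rate. The sketch below recomputes them from the nominal rates in the table; small differences from the table (e.g., about 28.6 s for STS-24) come from rounding of the nominal rates.

```python
SEQ_SPACE_BYTES = 2 ** 32  # 32-bit sequence numbers, one per byte
MSL_SECONDS = 120          # maximum segment lifetime quoted on the slide


def wrap_time_seconds(rate_bps: float) -> float:
    """Seconds needed to send 2**32 bytes at rate_bps bits per second."""
    return SEQ_SPACE_BYTES * 8 / rate_bps


links = {"T1": 1.5e6, "Ethernet": 10e6, "T3": 45e6, "FDDI": 100e6,
         "STS-3": 155e6, "STS-12": 622e6, "STS-24": 1.2e9}
for name, rate in links.items():
    t = wrap_time_seconds(rate)
    warning = "  wraps within one MSL" if t < MSL_SECONDS else ""
    print(f"{name:9s} {t:9.1f} s{warning}")
```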

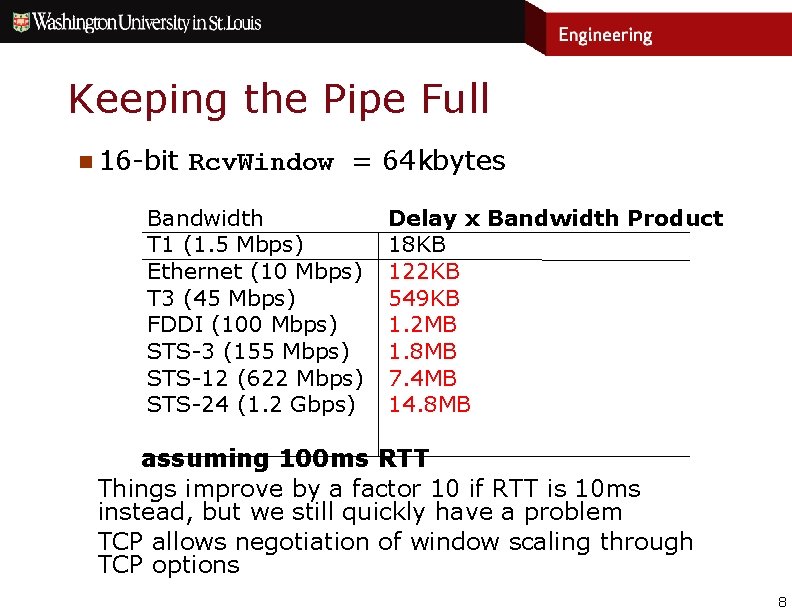

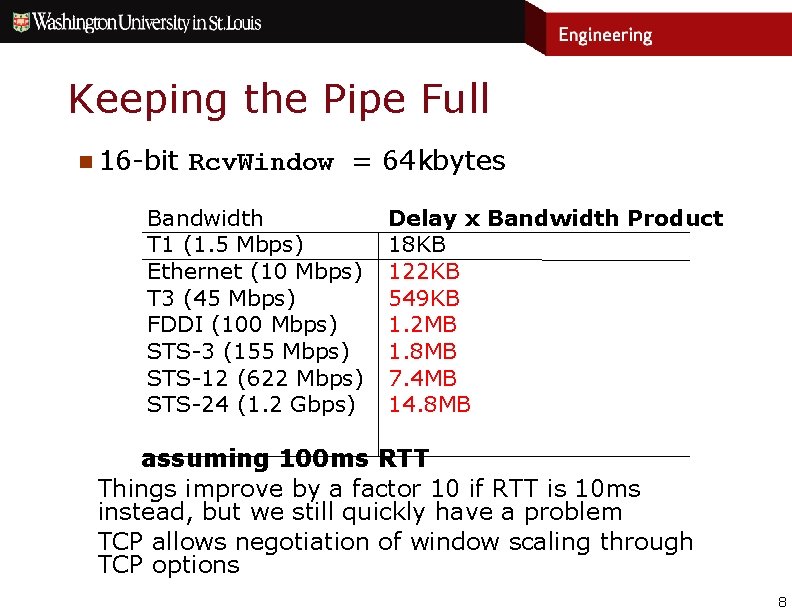

Keeping the Pipe Full

- 16-bit RcvWindow = 64 KB

| Bandwidth | Delay × bandwidth product (100 ms RTT) |
|---|---|
| T1 (1.5 Mbps) | 18 KB |
| Ethernet (10 Mbps) | 122 KB |
| T3 (45 Mbps) | 549 KB |
| FDDI (100 Mbps) | 1.2 MB |
| STS-3 (155 Mbps) | 1.8 MB |
| STS-12 (622 Mbps) | 7.4 MB |
| STS-24 (1.2 Gbps) | 14.8 MB |

- Things improve by a factor of 10 if the RTT is 10 ms instead, but the 64 KB limit is still quickly exceeded.
- TCP allows negotiation of window scaling through TCP options.
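A short sketch of the arithmetic: the delay × bandwidth product (BDP) gives the bytes that must be in flight, and the window-scale option lets the 16-bit field be shifted left so it can cover that amount. The shift limit of 14 is the one RFC 7323 allows; the helper names are hypothetical. Sizes are printed in decimal kB, so they differ slightly from the table's binary KB/MB.

```python
MAX_WINDOW_FIELD = 2 ** 16 - 1  # largest value the 16-bit window field can carry
MAX_SHIFT = 14                  # largest window-scale shift count allowed (RFC 7323)


def bdp_bytes(rate_bps: float, rtt_s: float) -> float:
    """Delay x bandwidth product: bytes in flight needed to keep the pipe full."""
    return rate_bps * rtt_s / 8


def window_scale_shift(bdp: float) -> int:
    """Smallest shift s such that (2**16 - 1) << s covers the BDP (capped at 14)."""
    shift = 0
    while (MAX_WINDOW_FIELD << shift) < bdp and shift < MAX_SHIFT:
        shift += 1
    return shift


for name, rate in {"T1": 1.5e6, "Ethernet": 10e6, "STS-24": 1.2e9}.items():
    bdp = bdp_bytes(rate, 0.100)
    print(f"{name:9s} BDP={bdp / 1e3:9.1f} kB  shift={window_scale_shift(bdp)}")
```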

Principles of Congestion Control

- What is meant by congestion?
  - the amount of traffic arriving at a network link exceeds the link rate for an “excessive time period” (over-running the buffer)
  - caused by sources sending too much data for the link to handle
    - note that it is the network that limits the traffic flow in this case, not the receiver
  - causes router queues to fill up and overflow
- Consequences (why is congestion bad?)
  - packets get lost at routers
  - network delays get large
  - network throughput can actually drop as load increases
    - this happens because packets may be dropped after passing through several routers, wasting the capacity of “upstream” links
    - since some network effort is wasted, throughput drops below its peak

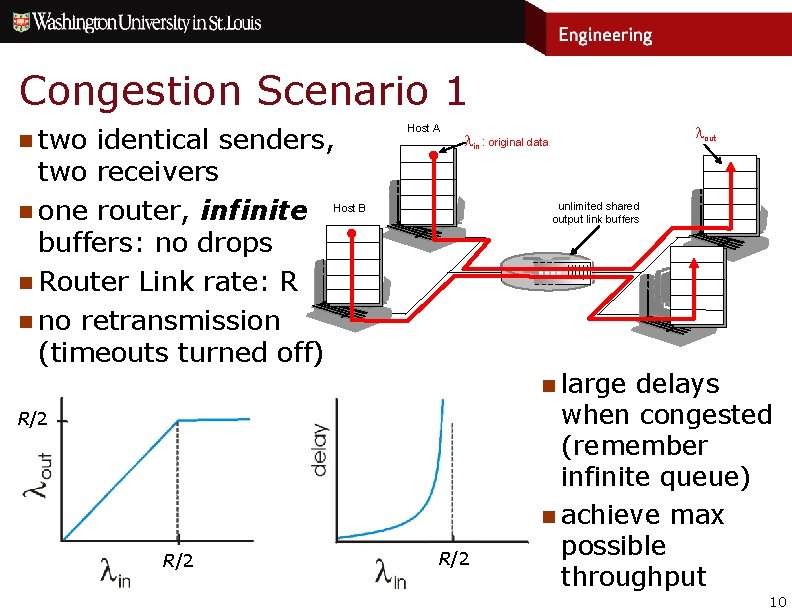

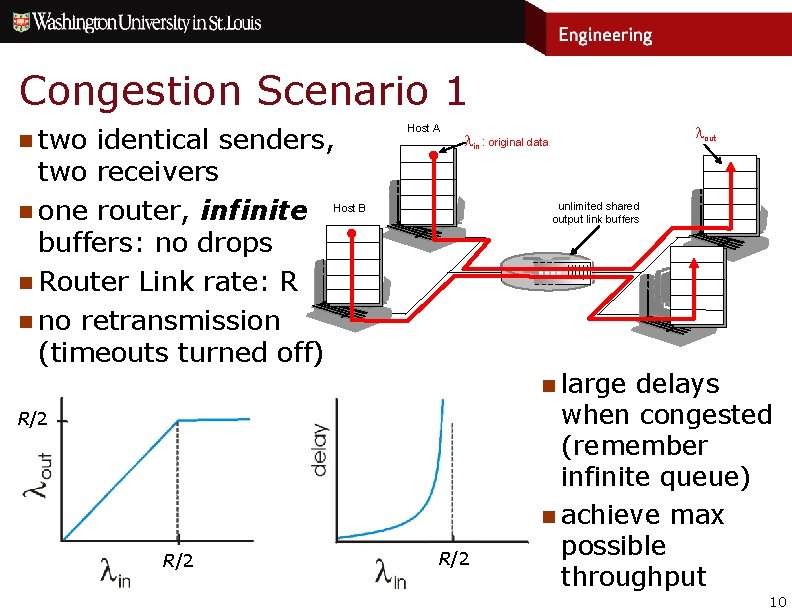

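Congestion Scenario 1

- Two identical senders (Hosts A and B), two receivers
- One router with infinite, shared output-link buffers: no drops
- Router link rate: R
- No retransmission (timeouts turned off)
- Maximum possible throughput is achieved: $\lambda_{\text{out}}$ follows $\lambda_{\text{in}}$ (original data) up to R/2 per connection
- Large delays when congested (remember: infinite queue)

(Figure: $\lambda_{\text{out}}$ vs. $\lambda_{\text{in}}$, up to R/2; delay vs. $\lambda_{\text{in}}$, growing large as $\lambda_{\text{in}}$ approaches R/2.)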

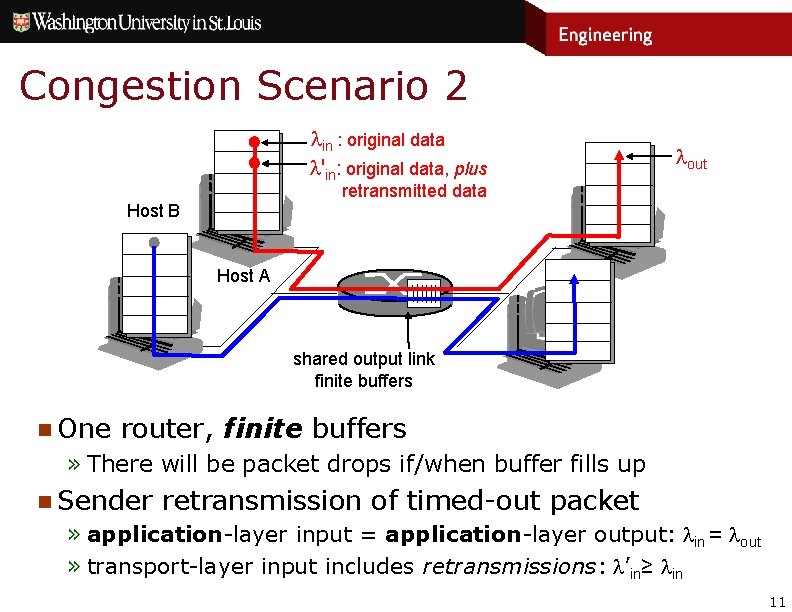

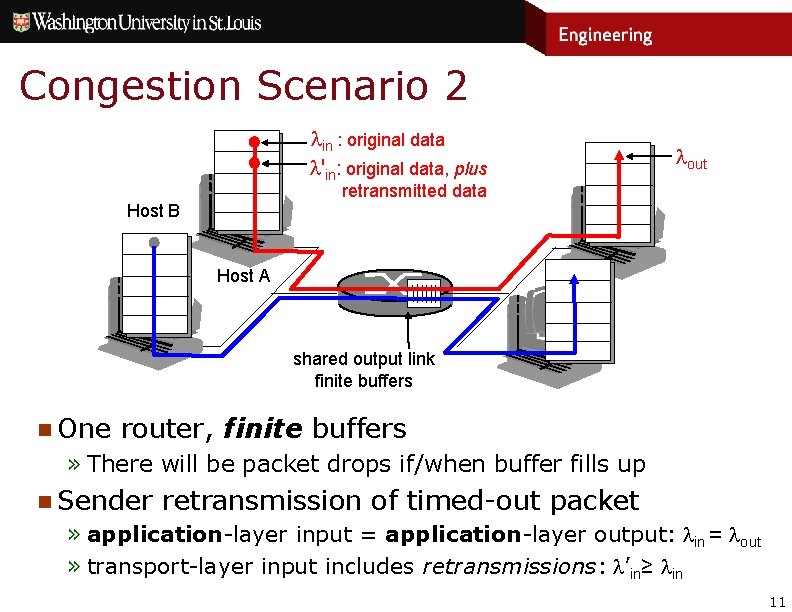

Congestion Scenario 2

- $\lambda_{\text{in}}$: original data; $\lambda'_{\text{in}}$: original data plus retransmitted data (Hosts A and B share an output link with finite buffers)
- One router, finite buffers
  - there will be packet drops if/when the buffer fills up
- The sender retransmits timed-out packets
  - application-layer input = application-layer output: $\lambda_{\text{in}} = \lambda_{\text{out}}$
  - transport-layer input includes retransmissions: $\lambda'_{\text{in}} \ge \lambda_{\text{in}}$

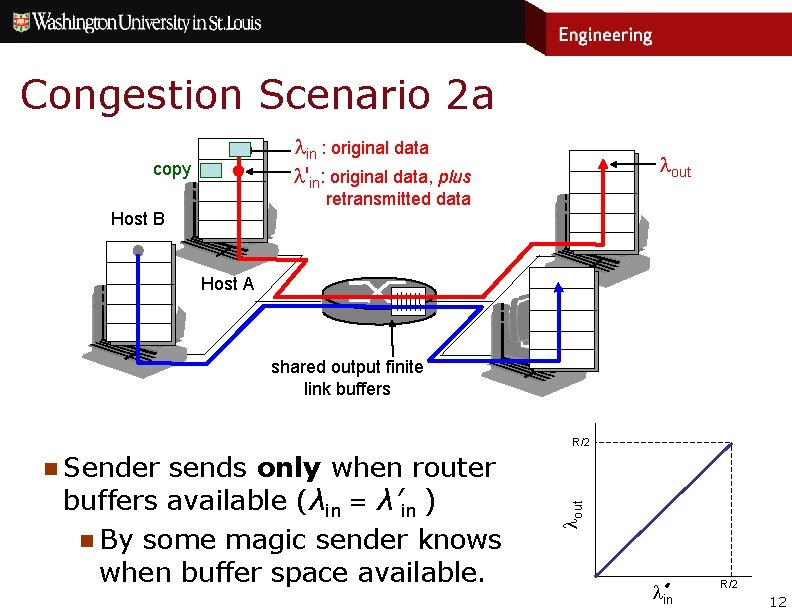

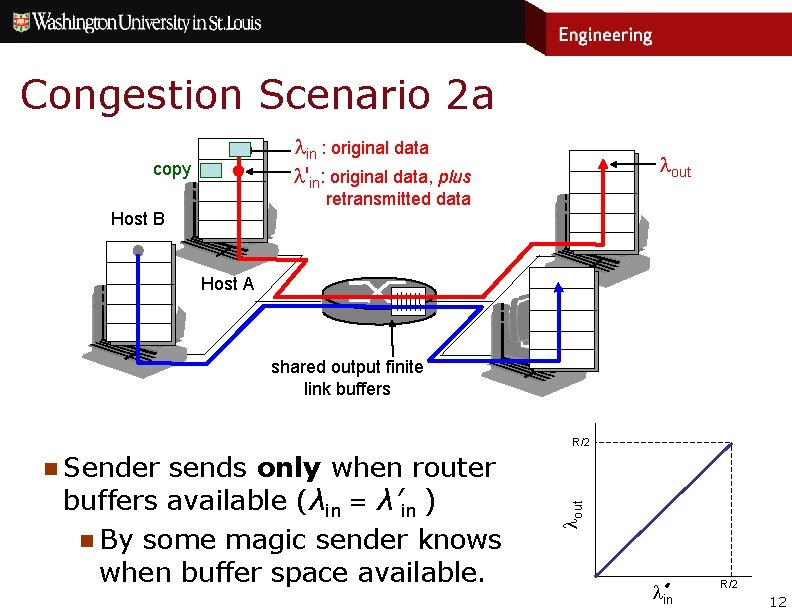

Congestion Scenario 2a

- By some magic, the sender knows when buffer space is available
- The sender sends only when router buffers are available ($\lambda_{\text{in}} = \lambda'_{\text{in}}$)

(Figure: $\lambda_{\text{out}}$ vs. $\lambda_{\text{in}}$, both axes up to R/2.)

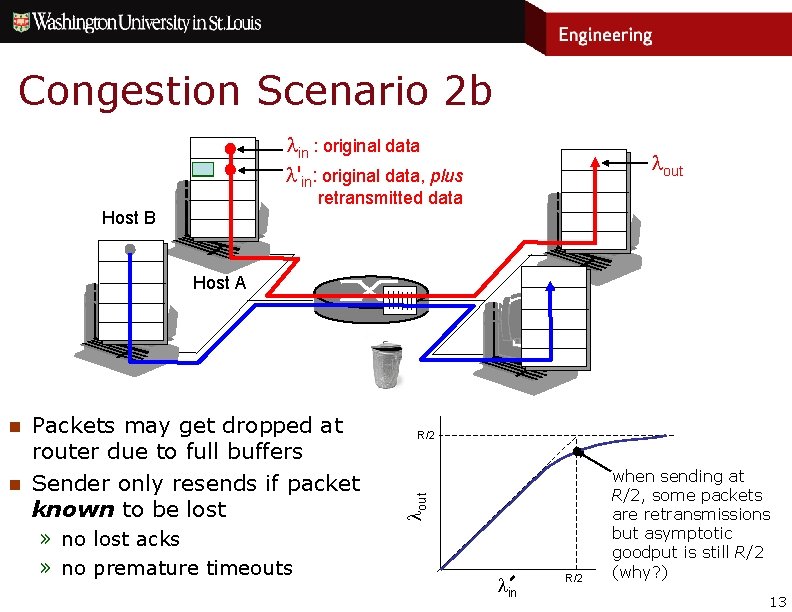

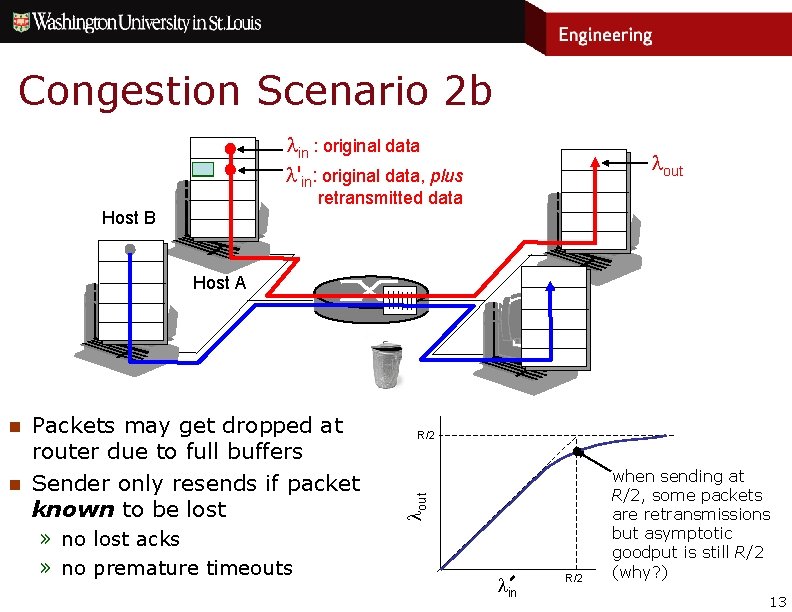

Congestion Scenario 2b

- Packets may get dropped at the router due to full buffers
- The sender only resends a packet if it is known to be lost
  - no lost ACKs
  - no premature timeouts
- When sending at R/2, some packets are retransmissions, but asymptotic goodput is still R/2 (why?)

(Figure: $\lambda_{\text{out}}$ vs. $\lambda_{\text{in}}$, both axes up to R/2.)

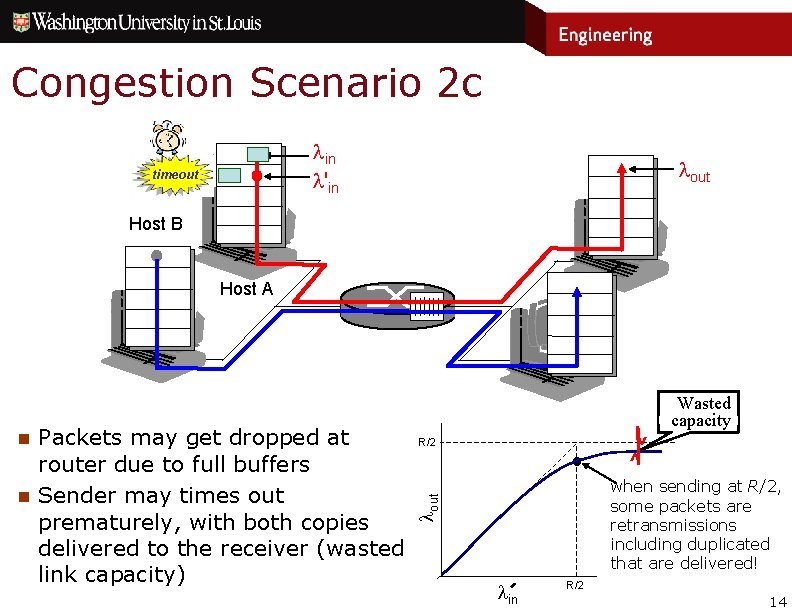

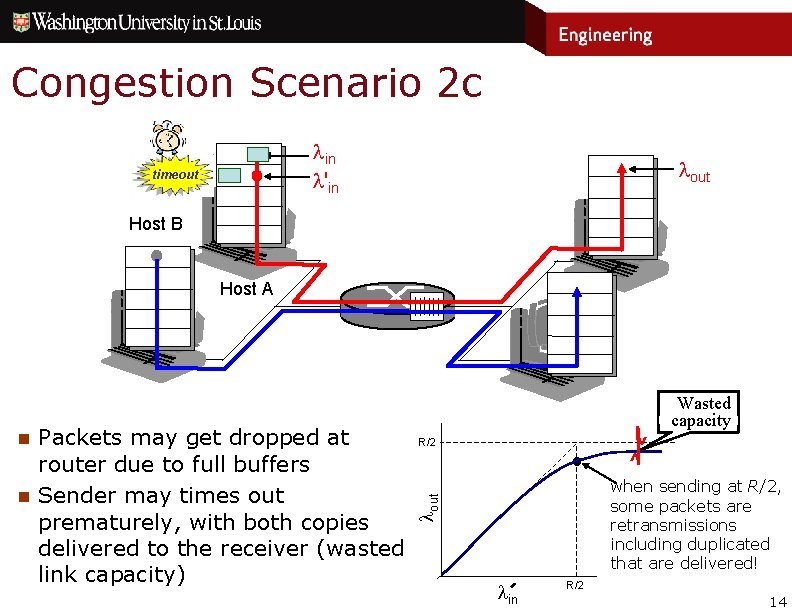

Congestion Scenario 2c

- Packets may get dropped at the router due to full buffers
- The sender may time out prematurely, with both copies delivered to the receiver (wasted link capacity)
- When sending at R/2, some packets are retransmissions, including duplicates that are delivered!

(Figure: $\lambda_{\text{out}}$ vs. $\lambda_{\text{in}}$ up to R/2; the gap below R/2 is wasted capacity.)

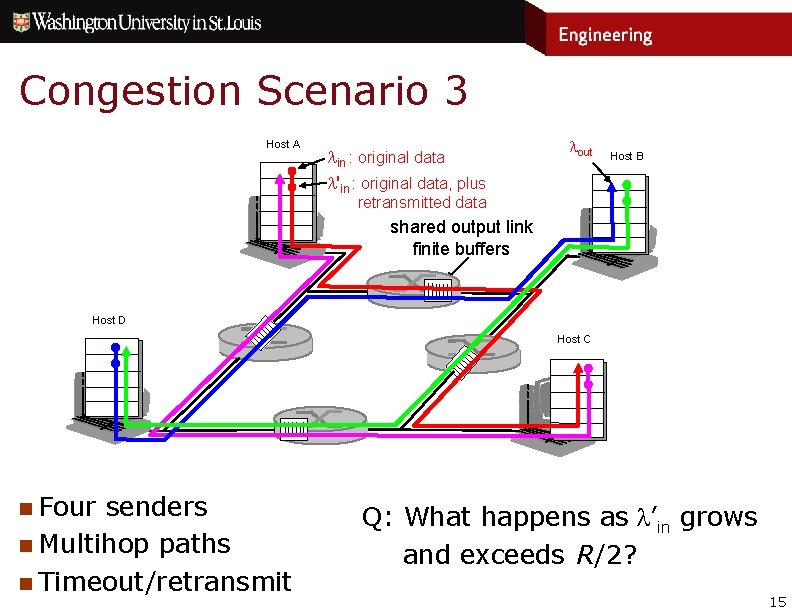

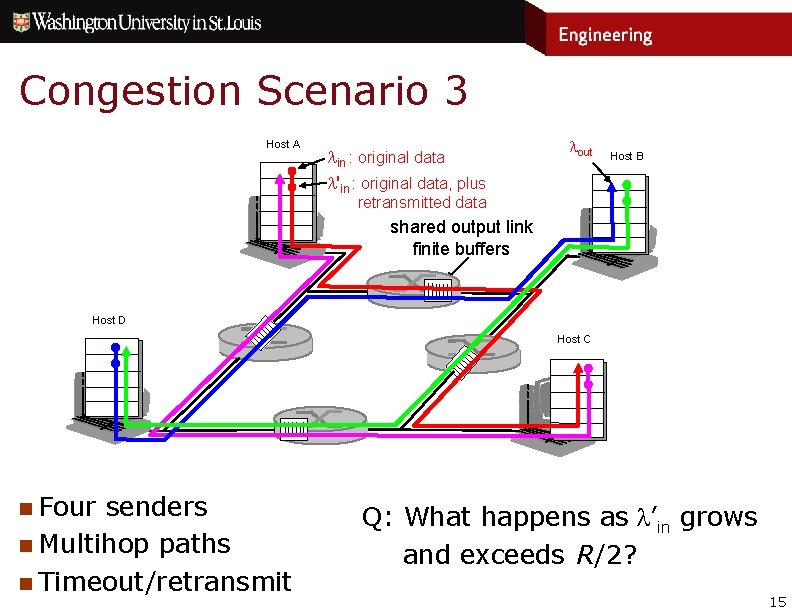

Congestion Scenario 3

- Four senders (Hosts A, B, C, D)
- Multihop paths
- Timeout/retransmit
- Shared output links with finite buffers; $\lambda_{\text{in}}$: original data, $\lambda'_{\text{in}}$: original data plus retransmitted data

Q: What happens as $\lambda'_{\text{in}}$ grows and exceeds R/2?

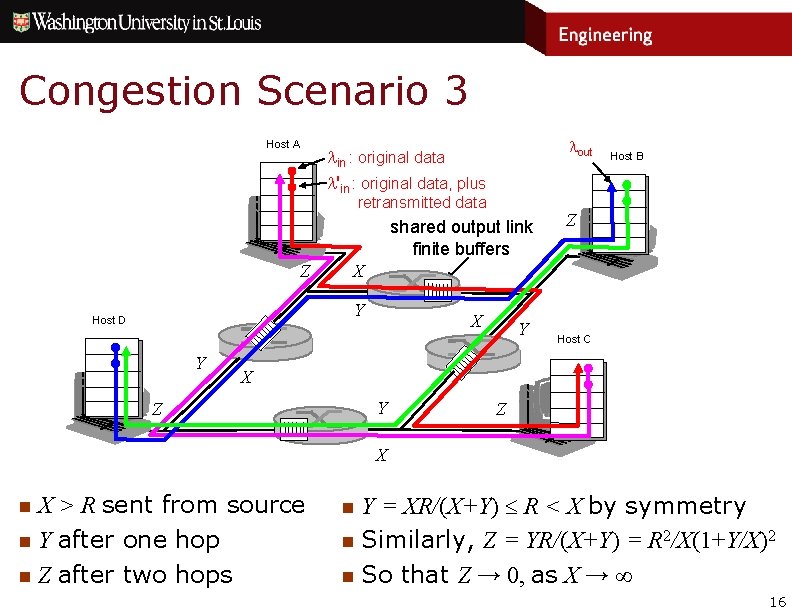

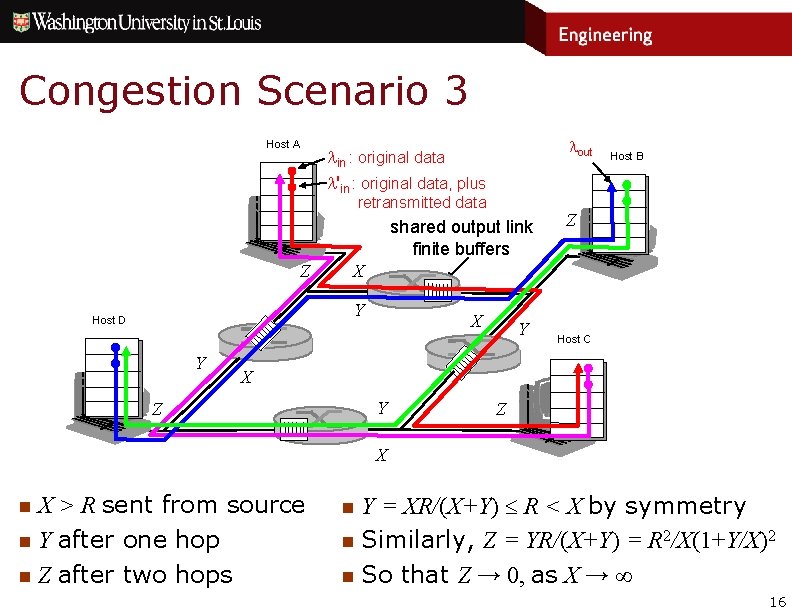

Congestion Scenario 3 (continued)

- X > R: rate sent by each source
- Y: rate remaining after one hop
- Z: rate remaining after two hops (the delivered throughput $\lambda_{\text{out}}$)
- By symmetry, every link carries X (a first hop) plus Y (another flow's second hop) and shares its capacity R in proportion, so $Y = \frac{XR}{X+Y} < R$
- Similarly, $Z = \frac{YR}{X+Y} = \frac{R^2}{X\,(1+Y/X)^2}$
- So $Z \to 0$ as $X \to \infty$
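The fixed point in the formulas above can be solved numerically to watch delivered throughput collapse. This sketch assumes, as the formulas imply, that each link's capacity is split in proportion to arriving traffic; `multihop_throughput` is a hypothetical name, and R is normalized to 1.

```python
import math


def multihop_throughput(x: float, r: float = 1.0) -> tuple[float, float]:
    """Return (Y, Z) for per-source offered load X on links of rate R."""
    # Y = X R / (X + Y) rearranges to Y**2 + X*Y - X*R = 0. The positive root,
    # written in a form that avoids cancellation for large X:
    y = 2 * x * r / (x + math.sqrt(x * x + 4 * x * r))
    # The second hop keeps a Y / (X + Y) share of its link.
    z = y * r / (x + y)
    return y, z


for x in (2, 10, 100, 1000):
    y, z = multihop_throughput(x)
    print(f"X={x:5d}R  Y={y:.4f}R  Z={z:.4f}R")
```

Note that Y + Z = R on every link: the links stay fully busy, yet almost all of that capacity carries first-hop traffic that is later dropped, so the delivered rate Z falls roughly like R²/X.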

Congestion Control Options

- Two major approaches to congestion control
- End-to-end congestion control (used by TCP)
  - no explicit feedback from the network
  - congestion is inferred from loss and/or delay observed by hosts
  - relies on the cooperation of end hosts (as do most schemes)
- Network-assisted congestion control
  - routers provide feedback to end systems
    - more rapid response to traffic changes than the end-to-end approach
  - simplest approach: a single-bit congestion indication (Explicit Congestion Notification, ECN)
    - TCP and IP support this, but the capability is not consistently available
  - explicit rate control
    - senders request a sending rate, and routers decide allowable rates
    - can prevent “greedy hosts” from hogging network capacity
    - was used in Asynchronous Transfer Mode (ATM) networks

TCP Congestion Control Big Picture

- Objective: send as fast as possible, but not too fast
  - when lost segments are detected, the sender reduces its rate (backs off)
  - when there are no lost segments, the sender increases its rate (keeps probing the network for more bandwidth)
- Key questions
  - when it is time to cut the rate, by how much should it be cut?
    - TCP cuts its sending rate in half to reduce congestion quickly
  - when it is time to increase the rate, by how much should it increase?
    - TCP makes small incremental increases to avoid going right back into congestion
    - but TCP allows a “new sender” to increase its rate more quickly
- Additive increase/multiplicative decrease (AIMD)
  - during stable traffic periods, rates oscillate around ideal rates
  - different end-to-end flows get roughly “fair shares” of capacity
  - can be slow to respond to traffic changes (requires a packet loss)

AIMD: Additive Increase

- Additive (linear) increase
  - “linear”: cwnd increases by 1 Maximum Segment Size (MSS) every RTT
  - cautious increase of cwnd
- For each cwnd's worth of segments ACKed within the time-out period:
  - cwnd = cwnd + 1 (incremented by one full MSS segment)
  - in practice, each ACKed segment yields a fractional increase: cwnd_increment = MSS × MSS/cwnd bytes
  - e.g., if cwnd = 8 MSS, an ACK increases cwnd by MSS/8

ESE 404/TCOM 500 - Introduction to Networks and Protocols

AIMD: Multiplicative Decrease

- Multiplicative decrease
  - the sender scrambles to reduce cwnd as soon as congestion is detected
- When a segment's ACK times out:
  - cwnd = cwnd/2
  - this essentially halves the current transmission rate
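The two AIMD update rules can be sketched as per-event functions. The MSS value and the floor of one MSS on a timeout are assumptions of this sketch, not part of the slide's rules, and the function names are hypothetical.

```python
MSS = 1460  # bytes; hypothetical segment size for the example


def on_ack(cwnd: float, mss: int = MSS) -> float:
    """Additive increase: each ACK adds MSS*MSS/cwnd, about one MSS per RTT in total."""
    return cwnd + mss * mss / cwnd


def on_timeout(cwnd: float, mss: int = MSS) -> float:
    """Multiplicative decrease: halve cwnd (floored at one MSS, an assumption of this sketch)."""
    return max(cwnd / 2, mss)


cwnd = 8 * MSS
for _ in range(8):              # one window's worth of ACKs, i.e., roughly one RTT
    cwnd = on_ack(cwnd)
print(cwnd / MSS)               # a little under 9 segments
cwnd = on_timeout(cwnd)
print(cwnd / MSS)               # about half of that
```

The per-ACK increment shrinks as cwnd grows within the round, which is why one RTT of ACKs adds slightly less than a full MSS.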

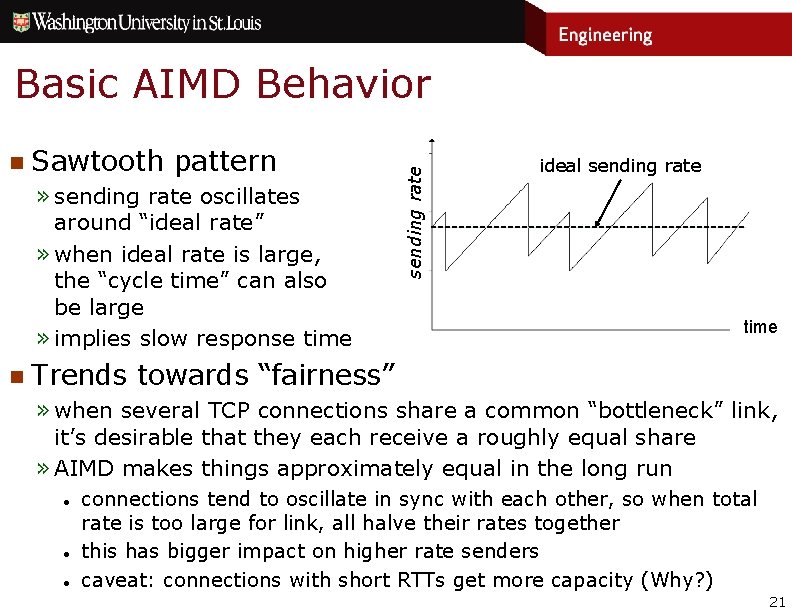

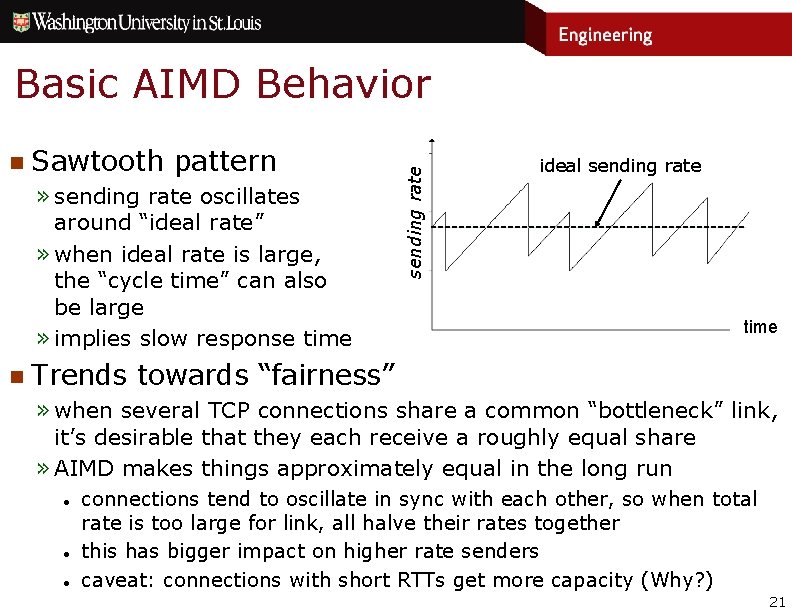

Basic AIMD Behavior

- Sawtooth pattern
  - the sending rate oscillates around the “ideal rate”
  - when the ideal rate is large, the “cycle time” can also be large
  - this implies a slow response time
- Trends towards “fairness”
  - when several TCP connections share a common “bottleneck” link, it's desirable that each receives a roughly equal share
  - AIMD makes things approximately equal in the long run
    - connections tend to oscillate in sync with each other, so when the total rate is too large for the link, all halve their rates together
    - this has a bigger impact on higher-rate senders
    - caveat: connections with short RTTs get more capacity (why?)

(Figure: sending rate vs. time, a sawtooth around the ideal sending rate.)

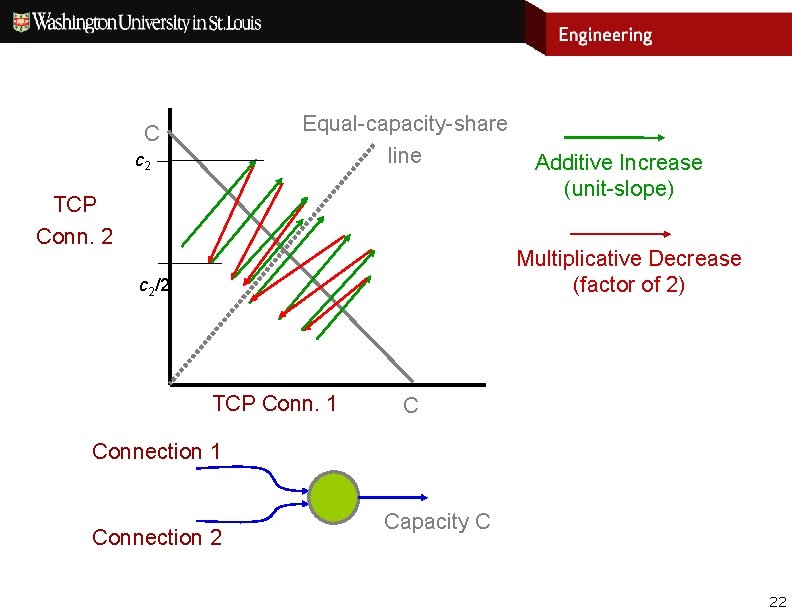

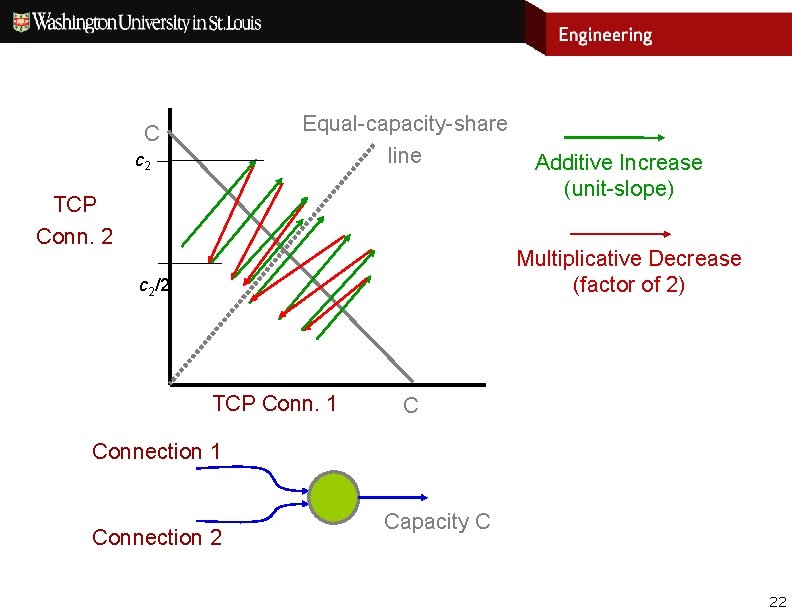

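(Figure: throughput of TCP connection 1 vs. TCP connection 2 on a link of capacity C. Additive increase moves the operating point along a unit-slope line; multiplicative decrease (factor of 2) moves it halfway toward the origin, so repeated cycles converge toward the equal-capacity-share line.)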

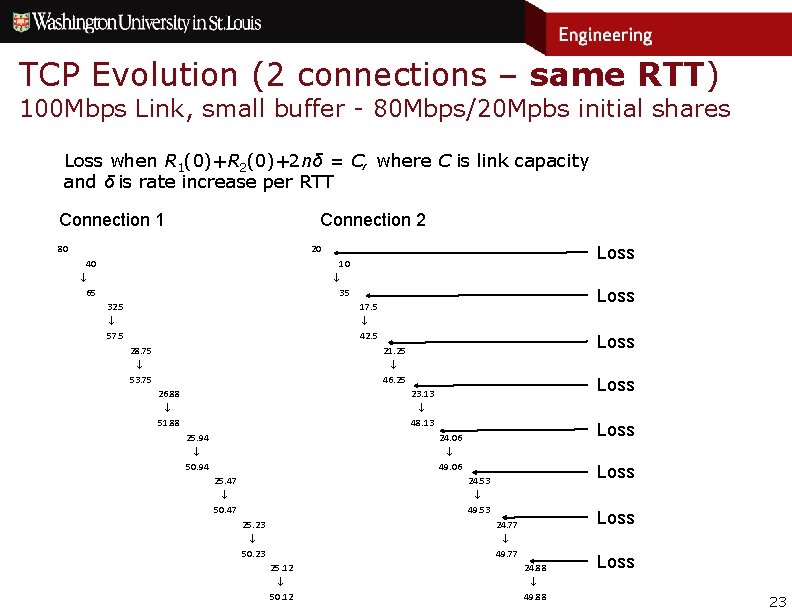

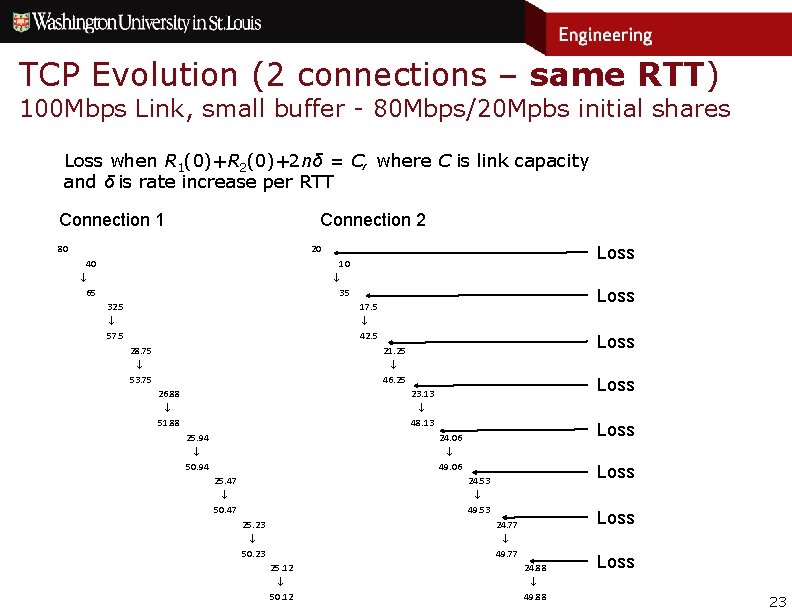

TCP Evolution (2 connections – same RTT)

100 Mbps link, small buffer, 80 Mbps/20 Mbps initial shares. Loss occurs when $R_1(0) + R_2(0) + 2n\delta = C$, where $R_i(0)$ is connection i's rate just after the previous loss, n is the number of RTTs since then, C is the link capacity, and δ is the rate increase per RTT.

| Loss | Before loss: Conn. 1 / Conn. 2 (Mbps) | After halving: Conn. 1 / Conn. 2 (Mbps) |
|---|---|---|
| 1 | 80 / 20 | 40 / 10 |
| 2 | 65 / 35 | 32.5 / 17.5 |
| 3 | 57.5 / 42.5 | 28.75 / 21.25 |
| 4 | 53.75 / 46.25 | 26.88 / 23.13 |
| 5 | 51.88 / 48.13 | 25.94 / 24.06 |
| 6 | 50.94 / 49.06 | 25.47 / 24.53 |
| 7 | 50.47 / 49.53 | 25.23 / 24.77 |
| 8 | 50.23 / 49.77 | 25.12 / 24.88 |
| 9 | 50.12 / 49.88 | |
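The rate sequence above can be reproduced with a small simulation of two synchronized AIMD flows. This sketch assumes both flows lose a packet at the same moment and, having the same RTT and δ, split the headroom equally; it also ignores the granularity of δ (rates grow exactly until their sum reaches C). `aimd_two_flows` is a hypothetical name.

```python
def aimd_two_flows(r1: float, r2: float, capacity: float, losses: int):
    """Rates (r1, r2) just before each loss for two synchronized AIMD flows."""
    history = []
    for _ in range(losses):
        history.append((r1, r2))
        r1, r2 = r1 / 2, r2 / 2               # both flows see the loss and halve
        headroom = (capacity - r1 - r2) / 2   # same RTT, same delta: equal shares of the gain
        r1, r2 = r1 + headroom, r2 + headroom
    return history


for before in aimd_two_flows(80.0, 20.0, 100.0, 9):
    print(f"{before[0]:7.2f} {before[1]:7.2f}")
```

The gap r1 - r2 is halved by every loss and left unchanged by the equal additive increase, so it shrinks geometrically (60, 30, 15, ...) and both flows approach C/2.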

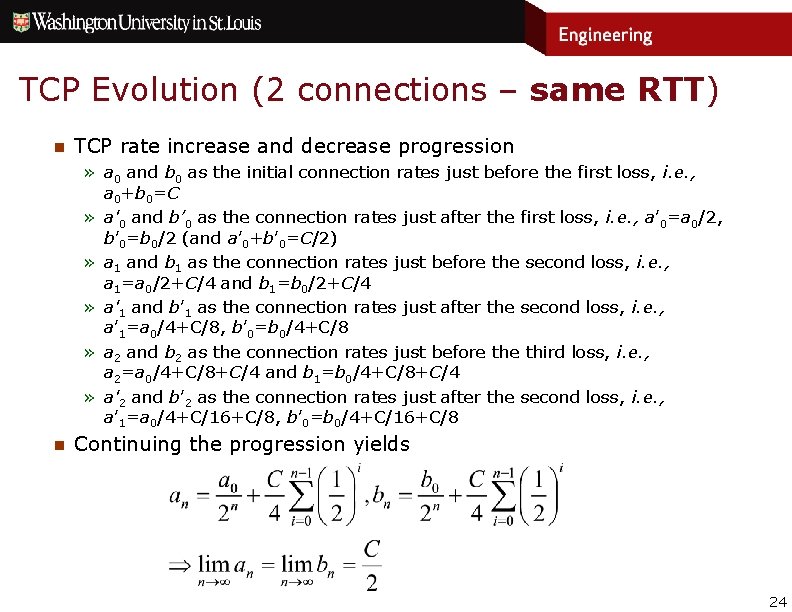

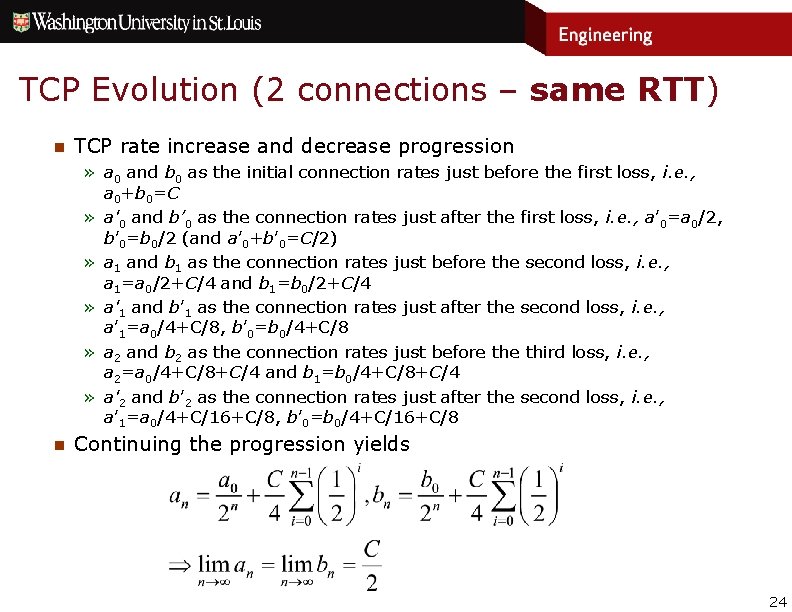

TCP Evolution (2 connections – same RTT)

- TCP rate increase and decrease progression. Define:
  - $a_0$ and $b_0$ as the connection rates just before the first loss, i.e., $a_0 + b_0 = C$
  - $a'_0$ and $b'_0$ as the connection rates just after the first loss, i.e., $a'_0 = a_0/2$, $b'_0 = b_0/2$ (and $a'_0 + b'_0 = C/2$)
  - $a_1$ and $b_1$ as the connection rates just before the second loss, i.e., $a_1 = a_0/2 + C/4$ and $b_1 = b_0/2 + C/4$ (the C/2 of headroom is shared equally)
  - $a'_1$ and $b'_1$ as the connection rates just after the second loss, i.e., $a'_1 = a_0/4 + C/8$, $b'_1 = b_0/4 + C/8$
  - $a_2$ and $b_2$ as the connection rates just before the third loss, i.e., $a_2 = a_0/4 + C/8 + C/4$ and $b_2 = b_0/4 + C/8 + C/4$
  - $a'_2$ and $b'_2$ as the connection rates just after the third loss, i.e., $a'_2 = a_0/8 + C/16 + C/8$, $b'_2 = b_0/8 + C/16 + C/8$
- Continuing the progression yields $a_n = a_0/2^n + \frac{C}{2}\left(1 - 2^{-n}\right)$ and $b_n = b_0/2^n + \frac{C}{2}\left(1 - 2^{-n}\right)$, so both rates converge to C/2 regardless of the initial shares.

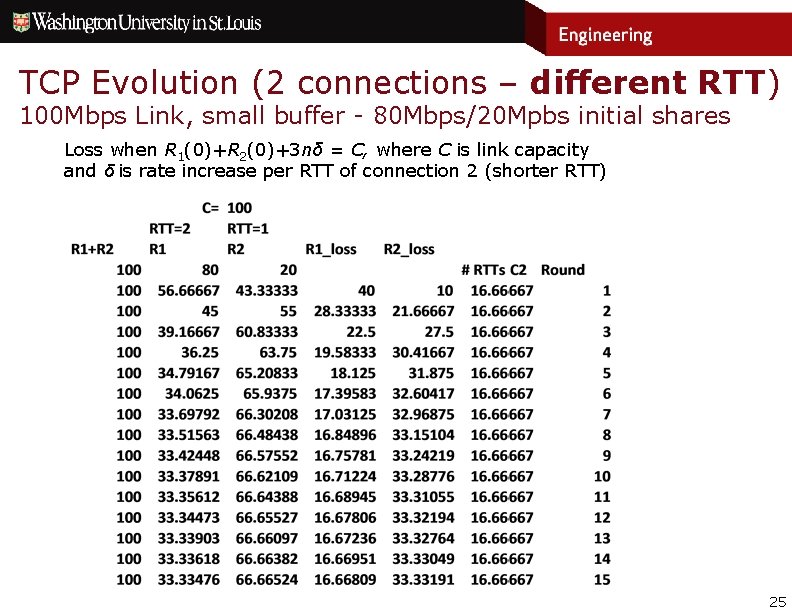

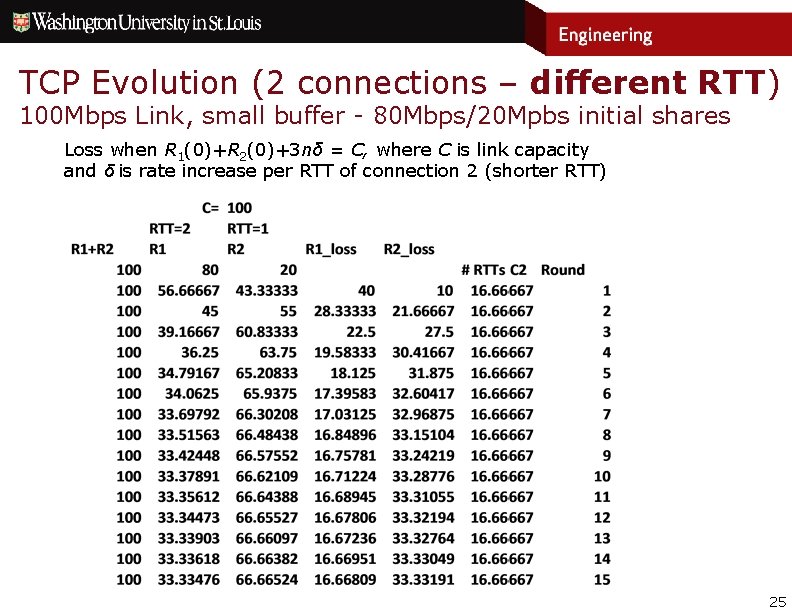

TCP Evolution (2 connections – different RTT)

100 Mbps link, small buffer, 80 Mbps/20 Mbps initial shares. Loss occurs when $R_1(0) + R_2(0) + 3n\delta = C$, where C is the link capacity and δ is the rate increase per RTT of connection 2 (the connection with the shorter RTT).
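A sketch of where the unequal case settles. It assumes, as one reading of the 3nδ term, that between losses connection 2 gains twice as much rate as connection 1; the `ratio` parameter and the function name are hypothetical, so try other values to see how the split changes.

```python
def aimd_unequal_gain(r1: float, r2: float, capacity: float, ratio: float, losses: int):
    """Synchronized AIMD where flow 2 gains `ratio` times as much rate as flow 1 between losses."""
    for _ in range(losses):
        r1, r2 = r1 / 2, r2 / 2                       # both flows halve at the shared loss
        gain1 = (capacity - r1 - r2) / (1 + ratio)    # flow 1's part of the headroom
        r1, r2 = r1 + gain1, r2 + ratio * gain1       # flow 2 grows `ratio` times faster
    return r1, r2


print(aimd_unequal_gain(80.0, 20.0, 100.0, ratio=2.0, losses=30))
```

The rates converge to C/(1+ratio) and ratio·C/(1+ratio), about 33.3 and 66.7 Mbps here, so the connection that grows faster between losses (the short-RTT one) keeps the larger share even though both halve together. With ratio = 1 the sketch reduces to the equal 50/50 split of the same-RTT case.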

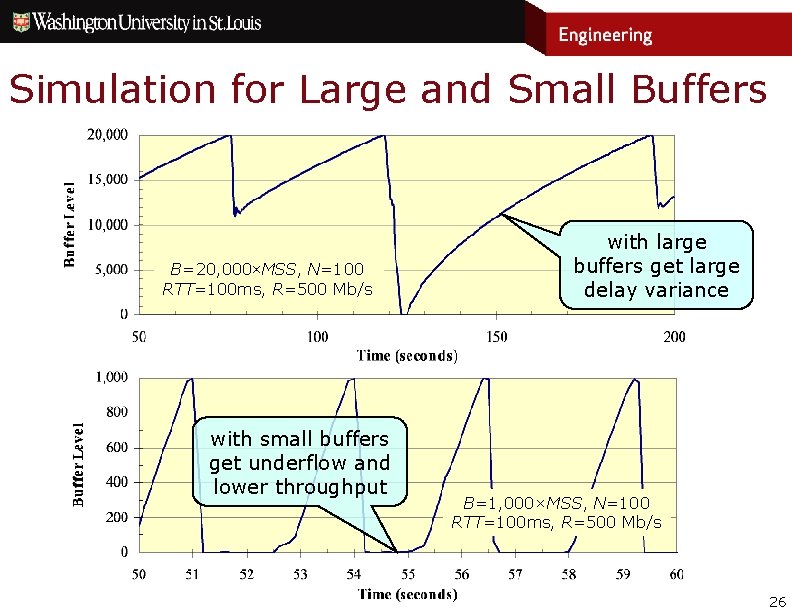

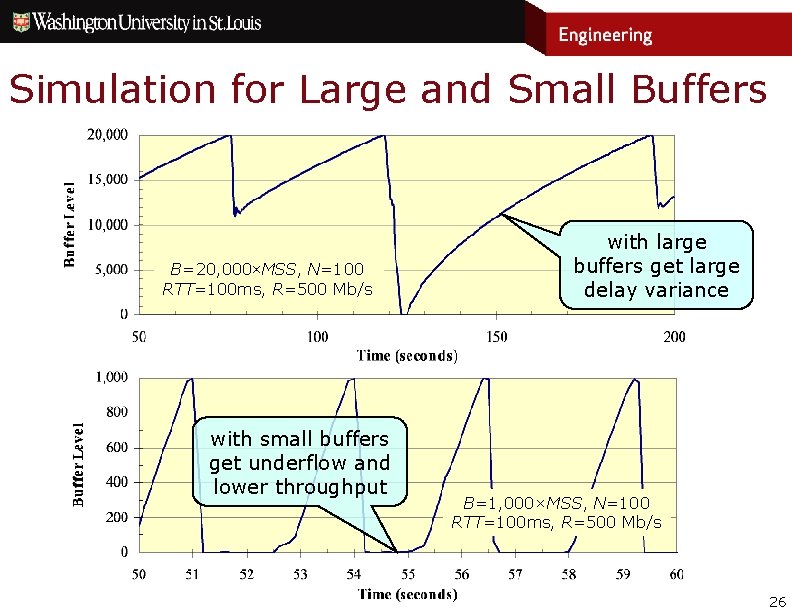

Simulation for Large and Small Buffers

(Figure, two panels, both with N = 100, RTT = 100 ms, R = 500 Mb/s.)

- B = 20,000×MSS: with large buffers we get large delay variance
- B = 1,000×MSS: with small buffers we get underflow and lower throughput

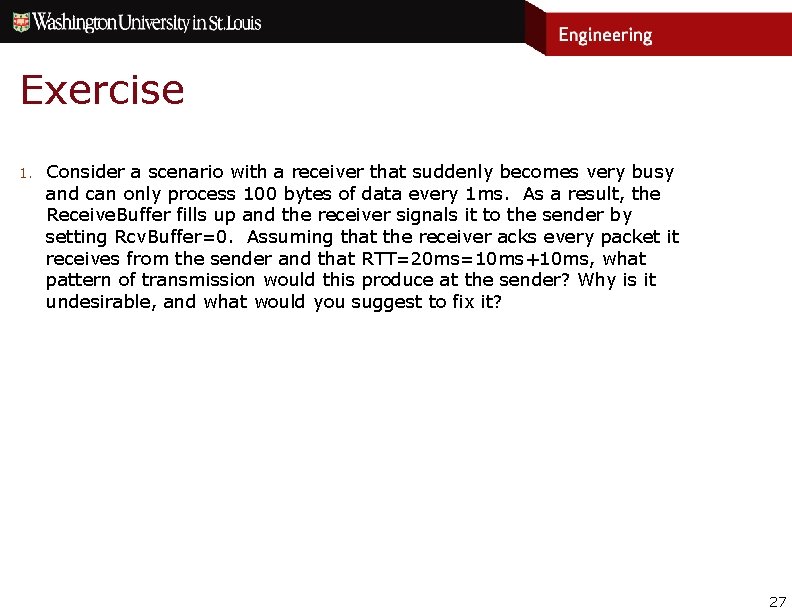

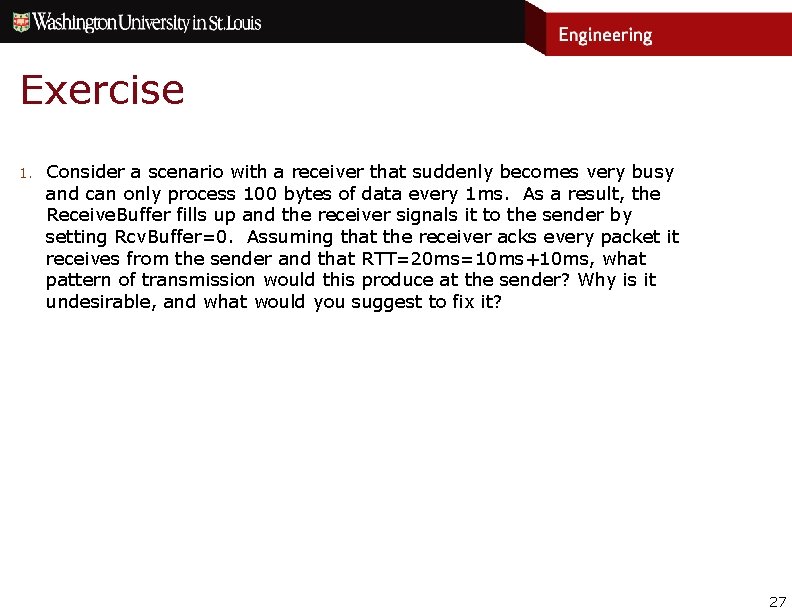

Exercise 1. Consider a scenario with a receiver that suddenly becomes very busy and can only process 50 bytes of data every 10 ms. As a result, the receive buffer fills up, and the receiver signals this to the sender by setting RcvWindow = 0. Assuming that the receiver ACKs every packet it receives from the sender and that RTT = 20 ms (10 ms each way), what pattern of transmission would this produce at the sender? Why is it undesirable, and what would you suggest to fix it?

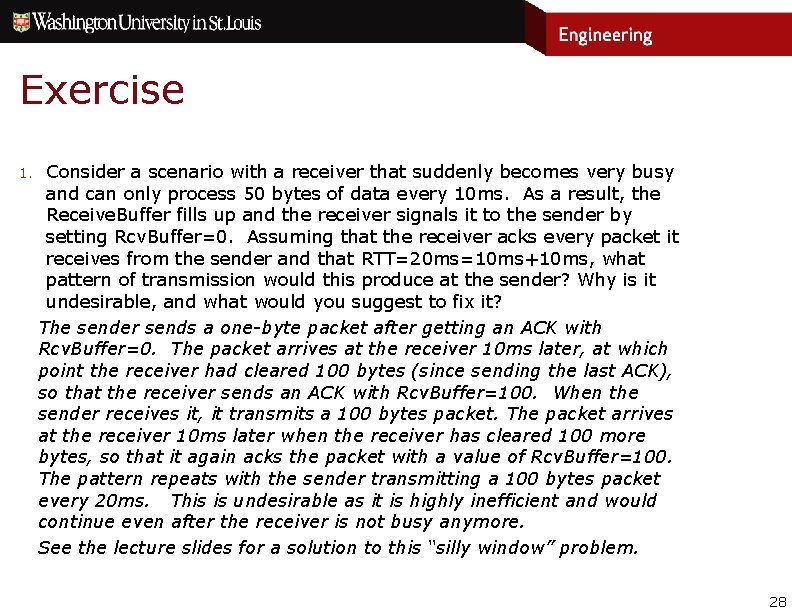

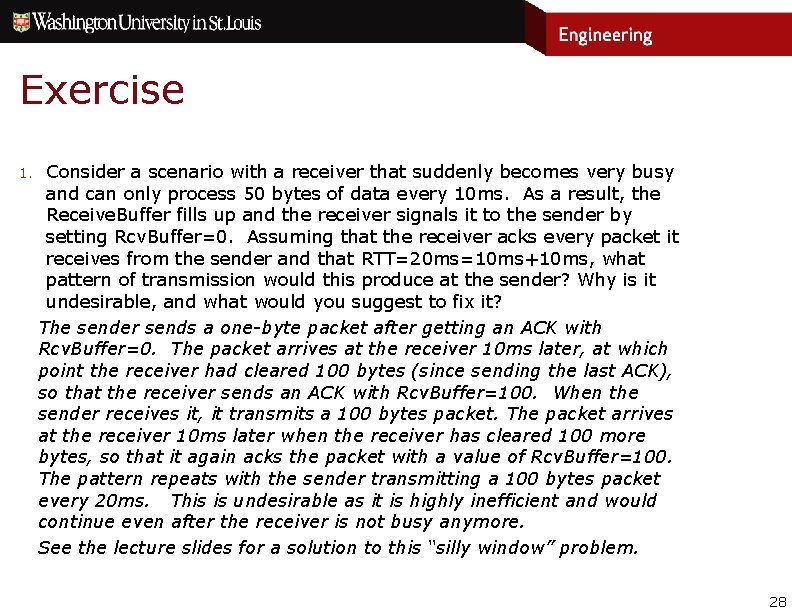

Exercise 1. Consider a scenario with a receiver that suddenly becomes very busy and can only process 50 bytes of data every 10 ms. As a result, the receive buffer fills up, and the receiver signals this to the sender by setting RcvWindow = 0. Assuming that the receiver ACKs every packet it receives from the sender and that RTT = 20 ms (10 ms each way), what pattern of transmission would this produce at the sender? Why is it undesirable, and what would you suggest to fix it?

Answer: The sender sends a one-byte packet after getting an ACK with RcvWindow = 0. The packet arrives at the receiver 10 ms later, at which point the receiver has cleared 100 bytes since sending its last ACK (20 ms at 50 bytes per 10 ms), so the receiver sends an ACK with RcvWindow = 100. When the sender receives it, it transmits a 100-byte packet. That packet arrives at the receiver 10 ms later, when the receiver has cleared 100 more bytes, so it again ACKs the packet with RcvWindow = 100. The pattern repeats, with the sender transmitting a 100-byte packet every 20 ms. This is undesirable because it is highly inefficient and would continue even after the receiver is no longer busy. See the silly window syndrome solutions in the lecture slides for a fix to this “silly window” problem.
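The transmission pattern in the answer can be traced with a tiny event loop. This sketch assumes the buffer became full exactly when the zero-window ACK left the receiver, and that the sender always sends exactly the advertised window as soon as each ACK arrives; `silly_window_trace` and its parameters are hypothetical.

```python
def silly_window_trace(drain_per_ms: float = 5.0, one_way_ms: float = 10.0, cycles: int = 6):
    """(send time in ms, segment size in bytes) for each transmission the sender makes."""
    # Assumptions: the buffer became full at t = -one_way_ms, when the zero-window ACK
    # left the receiver; the sender probes with 1 byte at t = 0 and then always sends
    # exactly the advertised window as soon as each ACK arrives.
    full_at = -one_way_ms
    received = 0.0            # bytes that entered the buffer after it became full
    t, seg = 0.0, 1.0         # first transmission: the 1-byte zero-window probe
    trace = []
    for _ in range(cycles):
        trace.append((t, seg))
        arrival = t + one_way_ms
        received += seg
        drained = drain_per_ms * (arrival - full_at)   # bytes the busy app has read
        window = drained - received                    # free space reported in the ACK
        t, seg = arrival + one_way_ms, window          # ACK reaches the sender one way later
    return trace


print(silly_window_trace())
```

The second segment is 99 bytes rather than 100 only because the sketch counts the 1-byte probe against the freed space; after that the sender settles into one 100-byte segment every 20 ms, as in the answer.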

Exercise 2. When TCP detects that a packet has been lost, it assumes that the loss was caused by congestion. Under what circumstances is this a reasonable assumption? In what common situation is it not reasonable? How does TCP’s assumption affect performance when packets may be lost for other reasons?

Exercise 2. When TCP detects that a packet has been lost, it assumes that the loss was caused by congestion. Under what circumstances is this a reasonable assumption? In what common situation is it not reasonable? How does TCP’s assumption affect performance when packets may be lost for other reasons?

Answer: The assumption is reasonable when links are very reliable (e.g., fiber links) and losses are mostly due to buffer overflows caused by congestion. It is unreasonable when losses are not indicative of congestion, e.g., when they are caused by transmission errors, as is common on wireless links. In such cases, TCP’s assumption that every loss signals congestion makes it cut its sending rate needlessly, which can result in poor performance (throughput), e.g., over less reliable wireless links.

Exercise 3. Suppose that N TCP connections pass through a “bottleneck link” that has a rate of 1 Gb/s and a buffer capacity of 25 MB. Assume that the RTT is 100 ms for all connections. Suppose that just before the buffer fills, the input rate is 1.2 Gb/s. Assuming that this causes all of the TCP senders to halve their sending rates (i.e., they all lose a packet), how much will the buffer level drop during the next 2 RTTs?

Exercise 3. Suppose that N TCP connections pass through a “bottleneck link” that has a rate of 1 Gb/s and a buffer capacity of 25 MB. Assume that the RTT is 100 ms for all connections. Suppose that just before the buffer fills, the input rate is 1.2 Gb/s. Assuming that this causes all of the TCP senders to halve their sending rates (i.e., they all lose a packet), how much will the buffer level drop during the next 2 RTTs?

Answer: If all connections halve their rates, the total rate drops to 600 Mb/s. The rate stays at that level for 100 ms (0.1 s with 600 Mb/s in and 1 Gb/s out), which drains 40 Mb, or 5 MB, from the buffer, so the buffer content drops to 20 MB after that time. In the next round (RTT), the aggregate rate actually increases by N×MSS/RTT, but if we assume that this increase only happens at the end of the round (e.g., because the senders only send full-MSS packets), then the buffer again drops by 5 MB, to 15 MB. Over the two RTTs, the buffer level therefore drops by 10 MB.
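The buffer arithmetic in the answer can be checked with a short loop. Like the answer, this sketch assumes the aggregate input rate stays constant within each RTT (the N×MSS/RTT increase is ignored until the round ends); `buffer_levels` is a hypothetical name.

```python
def buffer_levels(buffer_mb: float, link_gbps: float, input_gbps: float,
                  rtt_s: float, rounds: int) -> list[float]:
    """Buffer content (MB) after each RTT, with the input rate held constant per RTT."""
    levels = []
    for _ in range(rounds):
        drained_mbit = (link_gbps - input_gbps) * 1000 * rtt_s   # Gb/s -> Mb/s, times seconds
        buffer_mb = max(0.0, buffer_mb - drained_mbit / 8)       # 8 bits per byte
        levels.append(buffer_mb)
    return levels


halved_input = 1.2 / 2   # every sender halves its rate after the loss
print(buffer_levels(25.0, 1.0, halved_input, 0.1, rounds=2))
```

Each round drains (1 - 0.6) Gb/s × 0.1 s = 40 Mb = 5 MB, giving 20 MB and then 15 MB.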