
10 Sensing
• How do you make a robot “see”?
• What sensors are essential for a robot?
• What’s sensor fusion?
© 2019 Robin Murphy, Introduction to AI Robotics, 2nd Edition (MIT Press 2019)

Objectives
• List at least one advantage and disadvantage of common robotic sensors: GPS, INS, ultrasonics, laser stripers, IR rangers, laser rangers, computer vision
• If given a small interleaved RGB image and a range of color values for a region, be able to extract color affordances using 1) threshold on color and 2) a color histogram
• Be able to construct an occupancy grid and use polar plots for reactive navigation
• Define each of the following terms in one or two sentences: proprioception, exteroception, exproprioception, proximity sensor, logical sensor, false positive, false negative, hue, saturation, image, pixel, image function, computer vision, GPS-denied area
• Describe three types of behavioral sensor fusion: fission, fusion, fashion

Return to Layers
• In behavioral layer, sensing…
  – Supports a behavior
  – Releases a behavior
• In deliberative layer, sensing…
  – Recognizes objects
  – Builds world model
When it acts as a virtual sensor
• In interactive layer, social sensing…
  – Personal spaces, facial features, and gestures
A subtle distinction between sensors and sensing: sensors provide the raw data, while sensing is the combination of algorithm(s) and sensor(s) that produces a percept or world model.

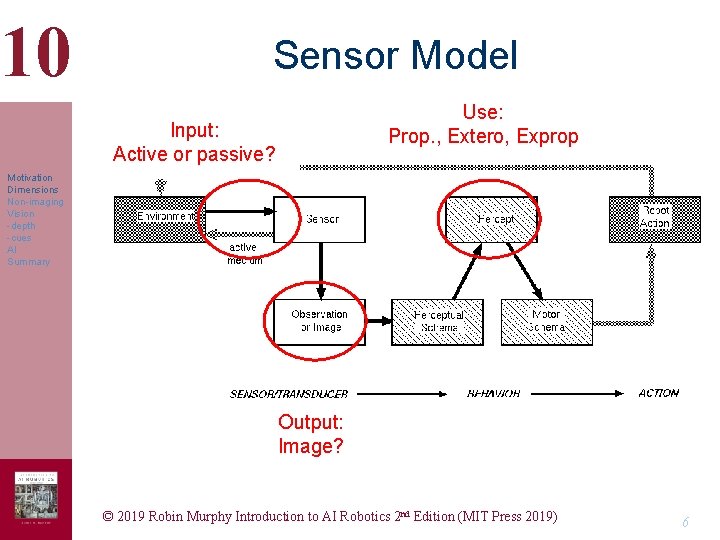

Ways of Organizing Sensors
• 3 types of perception
  – Proprioceptive, exteroceptive, exproprioceptive
• Input
  – Active vs. passive
• Output
  – Image vs. non-image

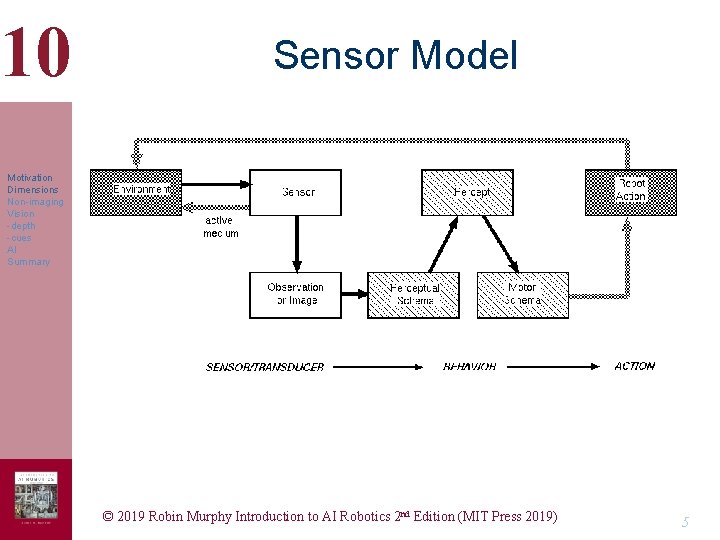


Sensor Model
• Use: proprioceptive, exteroceptive, or exproprioceptive?
• Input: active or passive?
• Output: image?

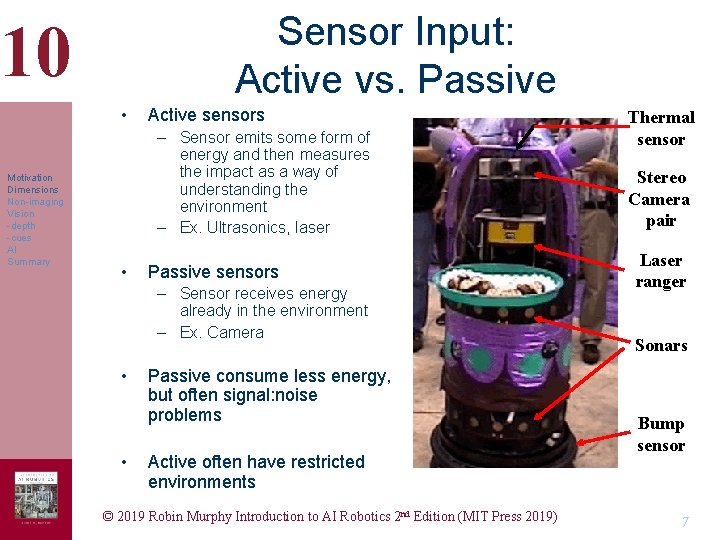

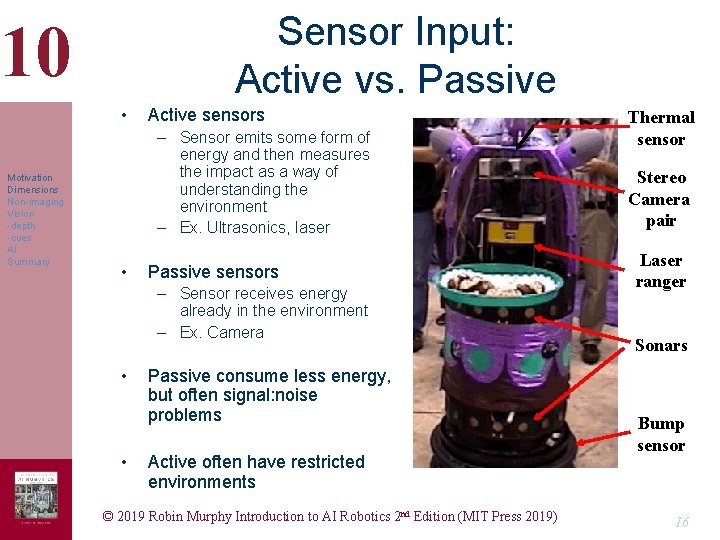

Sensor Input: Active vs. Passive
• Active sensors
  – Sensor emits some form of energy and then measures the impact as a way of understanding the environment
  – Ex. ultrasonics, laser
• Passive sensors
  – Sensor receives energy already in the environment
  – Ex. camera
• Passive sensors consume less energy, but often have signal-to-noise problems
• Active sensors often have restricted environments
Pictured: thermal sensor, stereo camera pair, laser ranger, sonars, bump sensor

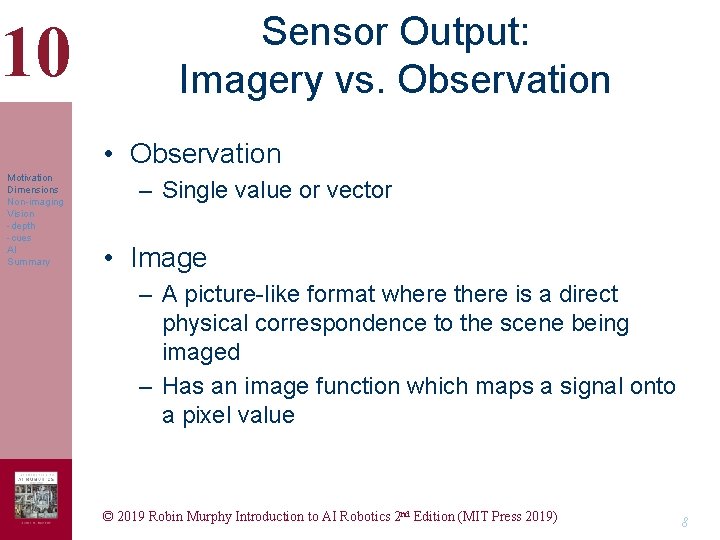

Sensor Output: Imagery vs. Observation
• Observation
  – Single value or vector
• Image
  – A picture-like format where there is a direct physical correspondence to the scene being imaged
  – Has an image function which maps a signal onto a pixel value

Types of Sensors
• Proprioception
  – To locate the position of limbs and joints of the robot or to determine how much they have moved
  – Self-control
• Exteroception
  – To detect objects in the external world and often the distance to those objects
  – Navigation
  – Object recognition
• Exproprioception
  – To detect the position of the robot relative to objects in the world
  – Manipulation

Proprioceptive Sensors
• Sensors that give information on the internal state of the robot, such as:
  – Motion
  – Position (x, y, z)
  – Orientation (about x, y, z axes)
  – Velocity, acceleration
  – Temperature
  – Battery level
• Example proprioceptive sensors:
  – Encoders (dead reckoning)
  – Inertial navigation system (INS)
  – Global positioning system (GPS)
  – Compass
  – Gyroscopes

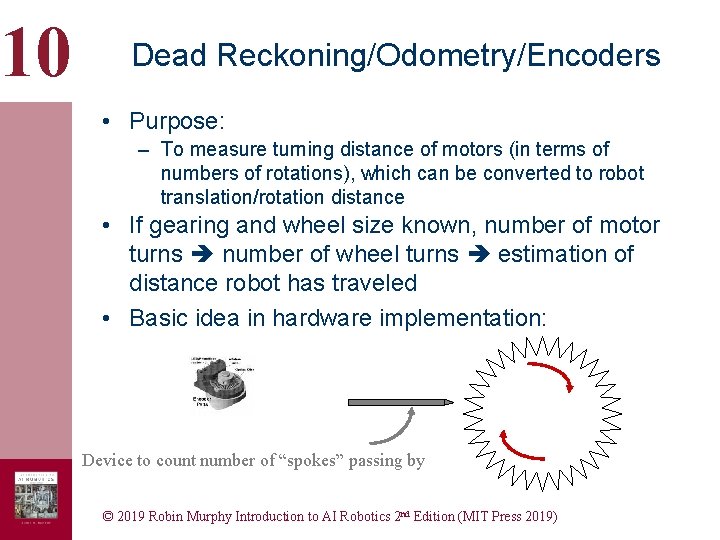

Dead Reckoning/Odometry/Encoders
• Purpose:
  – To measure turning distance of motors (in terms of numbers of rotations), which can be converted to robot translation/rotation distance
• If gearing and wheel size are known: number of motor turns → number of wheel turns → estimate of distance robot has traveled
• Basic idea in hardware implementation: a device counts the number of “spokes” passing by
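The chain from motor turns to wheel turns to distance can be sketched in a few lines of C. This is only an illustration: the function name `ticks_to_distance_m` and all of its parameters (ticks per motor revolution, gear ratio, wheel diameter) are assumptions made up for the example, not values from the slides.

```c
/* Hypothetical helper; names and parameters are illustrative assumptions. */
#define PI 3.14159265358979323846

/* Convert a count of encoder ticks ("spokes" passing by) into the distance
 * rolled by one wheel, in meters.
 *   ticks_per_motor_rev: ticks counted per motor revolution
 *   gear_ratio:          motor revolutions per wheel revolution
 *   wheel_diameter_m:    wheel diameter in meters */
double ticks_to_distance_m(long ticks, double ticks_per_motor_rev,
                           double gear_ratio, double wheel_diameter_m)
{
    double motor_turns = (double)ticks / ticks_per_motor_rev;
    double wheel_turns = motor_turns / gear_ratio;
    /* One wheel turn covers one circumference, assuming no slip. */
    return wheel_turns * PI * wheel_diameter_m;
}
```

For instance, 2000 ticks at 100 ticks per motor turn and a 20:1 gearbox is one wheel turn, so a 0.1 m wheel rolls about 0.314 m. The no-slip assumption is exactly what breaks down in practice.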

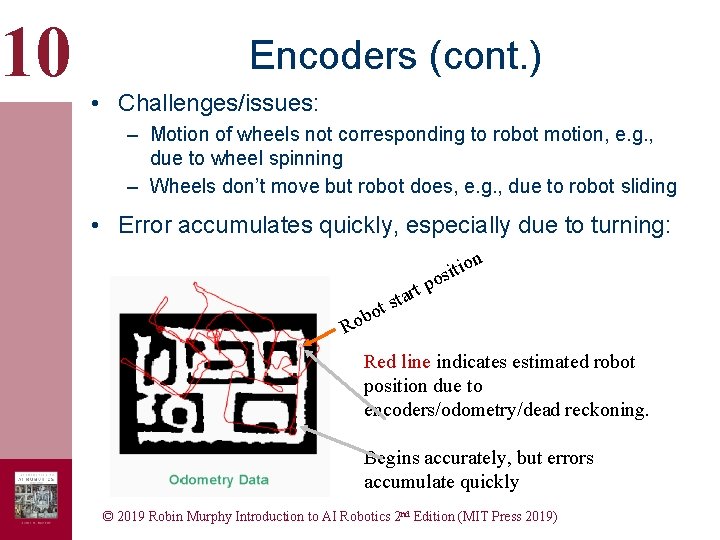

Encoders (cont.)
• Challenges/issues:
  – Motion of wheels not corresponding to robot motion, e.g., due to wheel spinning
  – Wheels don’t move but robot does, e.g., due to robot sliding
• Error accumulates quickly, especially due to turning
Figure label: robot start position. The red line indicates the estimated robot position due to encoders/odometry/dead reckoning. It begins accurately, but errors accumulate quickly.

Inertial Navigation Sensors (INS)
• Inertial navigation sensors: measure movements electronically through miniature accelerometers
• Accuracy: quite good (e.g., 0.1% of distance traveled) if movements are smooth and sampling rate is high
• Problem for mobile robots:
  – Expensive: $50,000 to $100,000 USD
  – Robots often violate smooth motion constraint
  – INS units typically large

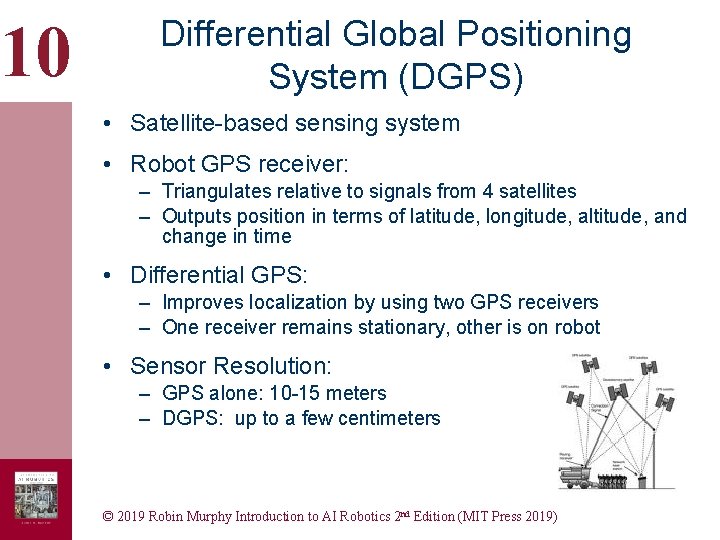

Differential Global Positioning System (DGPS)
• Satellite-based sensing system
• Robot GPS receiver:
  – Triangulates relative to signals from 4 satellites
  – Outputs position in terms of latitude, longitude, altitude, and change in time
• Differential GPS:
  – Improves localization by using two GPS receivers
  – One receiver remains stationary, other is on robot
• Sensor resolution:
  – GPS alone: 10-15 meters
  – DGPS: up to a few centimeters

DGPS Challenges
• Does not work indoors in most buildings
• Does not work outdoors in “urban canyons” (amidst tall buildings)
• Forested areas (i.e., trees) can block satellite signals
• Cost is high (about $30,000)



Popular Non-Imagery Navigation Sensors
• Exteroception at a distance is the key
• Direct contact sensors
  – Bump sensors, whiskers
• “Look ahead” sensors
  – Direct range
    • IR rangers
    • Ultrasonics
    • Laser stripers/rangers

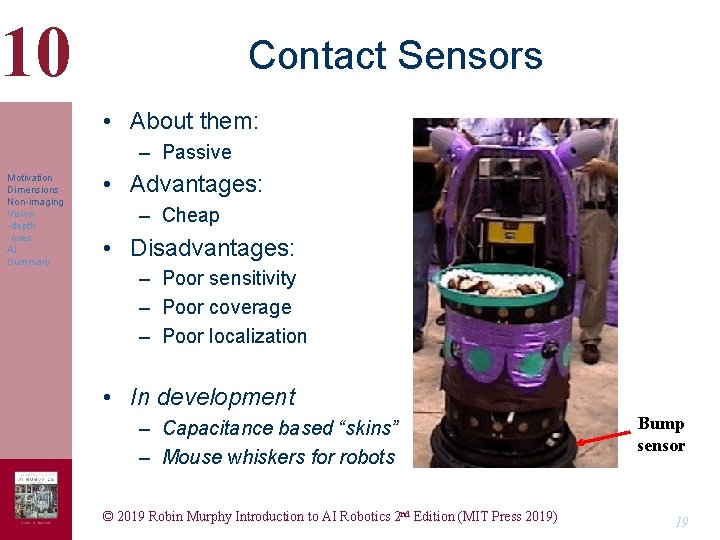

Contact Sensors
• About them:
  – Passive
• Advantages:
  – Cheap
• Disadvantages:
  – Poor sensitivity
  – Poor coverage
  – Poor localization
• In development
  – Capacitance-based “skins”
  – Mouse whiskers for robots
Pictured: bump sensor

Infrared and Thermal
• Actually a spectrum of wavelengths, often emitted from heat
• “IR” is cheap, used in remotes
• True infrared, FLIR (forward-looking infrared), produces thermal imagery
  – Breakthrough in micro-bolometers
• Night vision is not really IR, it’s light amplification

IR
• About them:
  – Usually a point sensor, active
  – Emits a particular wavelength, then detects time to bounce back
  – Popular for indoor detection of collisions, “negative obstacles”
• Advantages
  – Cheap
  – Can also detect dark/light (via strength)
• Disadvantages
  – Sensitive to lighting conditions
  – Specular reflection: when the waveform hits a surface at an acute angle and bounces away
  – Coverage of a line
  – Short range so can’t go fast

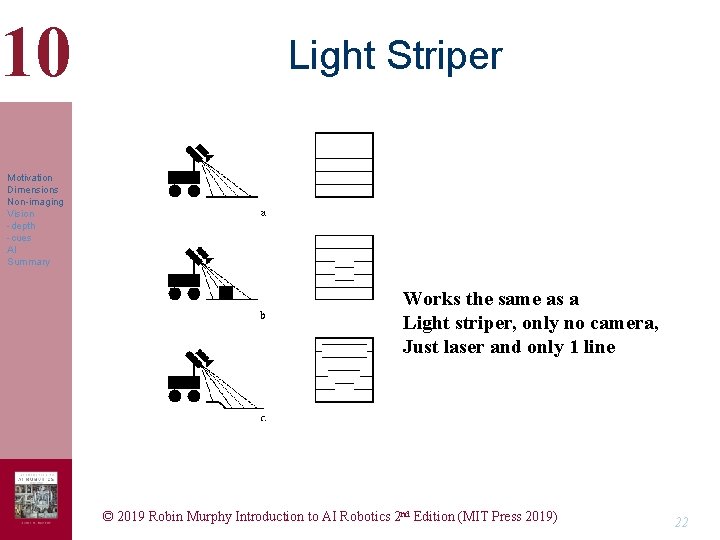

Light Striper
Works the same as a light striper, only with no camera: just a laser and only 1 line

Sick
• Accuracy & repeatability
  – Excellent results
• Responsiveness in target domain
• Power consumption
  – High; reduces battery run time by half
• Reliability
  – Good
• Size
  – A bit large
• Computational complexity
  – Not bad until you try to “stack up”
• Interpretation reliability
  – Much better than any other ranger

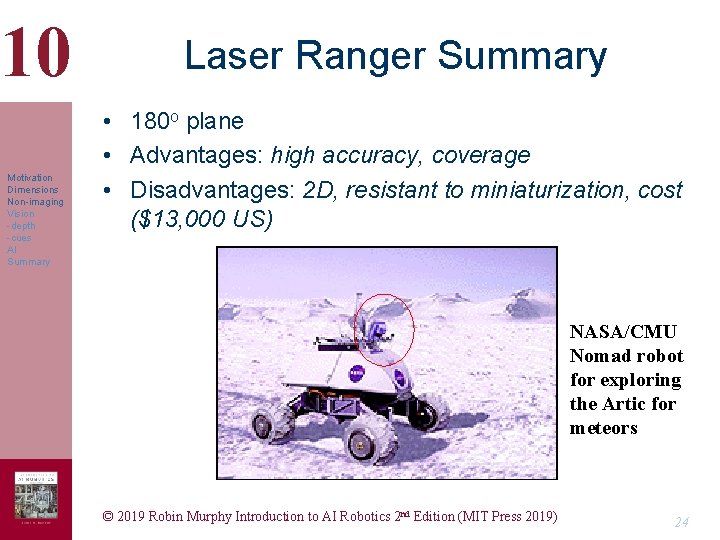

Laser Ranger Summary
• 180° plane
• Advantages: high accuracy, coverage
• Disadvantages: 2D, resistant to miniaturization, cost ($13,000 US)
Pictured: NASA/CMU Nomad robot for exploring Antarctica for meteorites

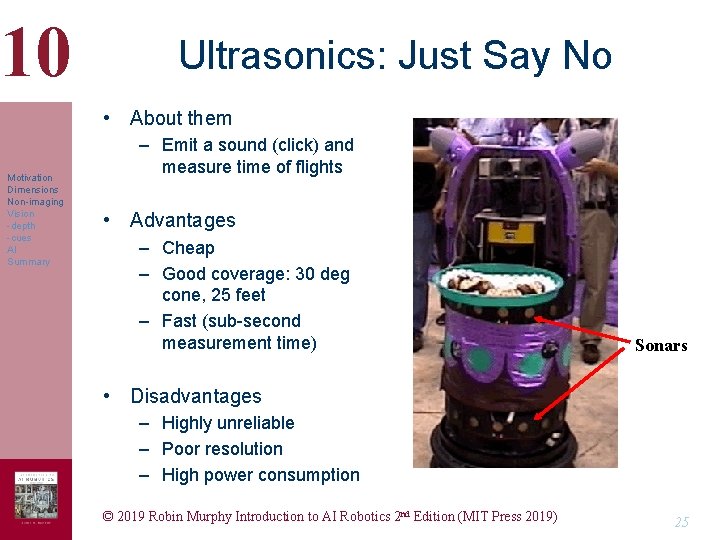

Ultrasonics: Just Say No
• About them
  – Emit a sound (click) and measure time of flight
• Advantages
  – Cheap
  – Good coverage: 30 deg cone, 25 feet
  – Fast (sub-second measurement time)
• Disadvantages
  – Highly unreliable
  – Poor resolution
  – High power consumption
Pictured: sonars
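As a rough illustration of the time-of-flight idea, the sketch below turns a round-trip echo time into a range. The speed of sound (343 m/s, roughly air at 20 °C) and the function name `sonar_range_m` are assumptions for the example; the 7.62 m cutoff is simply the slide's 25 feet converted to meters.

```c
/* Assumed constant: speed of sound in air at about 20 degrees C. */
#define SPEED_OF_SOUND_M_PER_S 343.0
/* 25 feet, the coverage quoted on the slide, in meters. */
#define MAX_RANGE_M 7.62

/* Range from a round-trip echo time in seconds.
 * The click travels to the object and back, so the one-way
 * distance is half the total path. Returns -1.0 when the echo
 * corresponds to a distance beyond the usable range. */
double sonar_range_m(double round_trip_s)
{
    double range = SPEED_OF_SOUND_M_PER_S * round_trip_s / 2.0;
    return (range <= MAX_RANGE_M) ? range : -1.0;
}
```

This ignores everything that makes sonar unreliable in practice (specular reflection, crosstalk, the width of the cone), which is why the raw reading is rarely trusted on its own.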

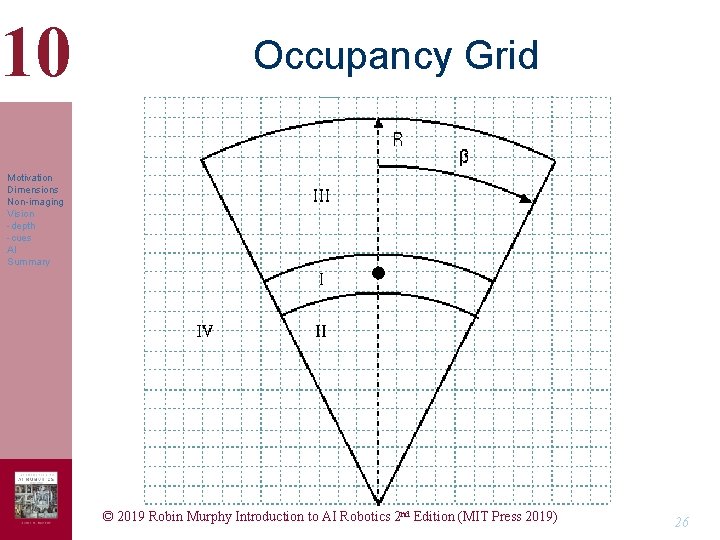

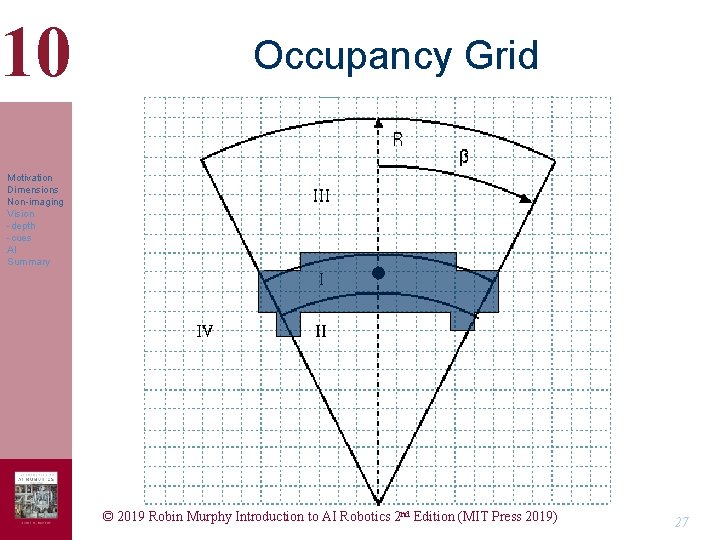

Occupancy Grid


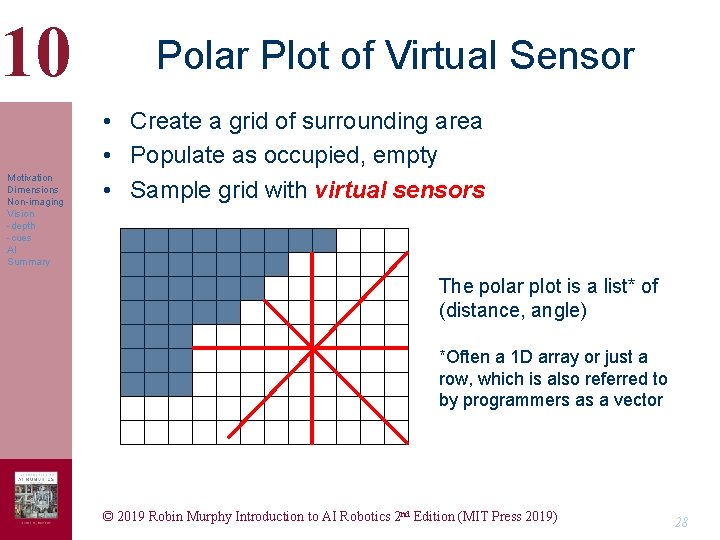

Polar Plot of Virtual Sensor
• Create a grid of surrounding area
• Populate as occupied, empty
• Sample grid with virtual sensors
The polar plot is a list* of (distance, angle)
*Often a 1D array or just a row, which is also referred to by programmers as a vector
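The three steps above can be sketched as follows. Everything specific here is an assumption for illustration: a 9×9 grid, 0.5 m cells, and eight virtual sensors spaced 45° apart so that each ray can be walked with integer cell steps. The names `PolarReading` and `polar_plot` are hypothetical.

```c
#define GRID_SIZE 9        /* assumed small square grid */
#define NUM_RAYS 8         /* one virtual sensor every 45 degrees */
#define CELL_SIZE_M 0.5    /* assumed cell edge length in meters */

typedef struct {
    double angle_deg;      /* direction of the virtual sensor */
    double distance_m;     /* range to first occupied cell, -1 if none */
} PolarReading;

/* Cell offsets for 0, 45, ..., 315 degrees; row index decreases "upward". */
static const int STEP_COL[NUM_RAYS] = { 1, 1, 0, -1, -1, -1, 0, 1 };
static const int STEP_ROW[NUM_RAYS] = { 0, -1, -1, -1, 0, 1, 1, 1 };

/* Sample an occupancy grid (nonzero = occupied) from the robot's cell
 * and fill plot[] with one (distance, angle) pair per virtual sensor. */
void polar_plot(int grid[GRID_SIZE][GRID_SIZE], int robot_row, int robot_col,
                PolarReading plot[NUM_RAYS])
{
    for (int k = 0; k < NUM_RAYS; k++) {
        /* Diagonal steps are longer by a factor of sqrt(2). */
        double step_len = (k % 2 == 0) ? CELL_SIZE_M
                                       : CELL_SIZE_M * 1.41421356;
        int r = robot_row + STEP_ROW[k];
        int c = robot_col + STEP_COL[k];
        int steps = 1;

        plot[k].angle_deg = 45.0 * k;
        plot[k].distance_m = -1.0;
        while (r >= 0 && r < GRID_SIZE && c >= 0 && c < GRID_SIZE) {
            if (grid[r][c]) {
                plot[k].distance_m = steps * step_len;
                break;
            }
            r += STEP_ROW[k];
            c += STEP_COL[k];
            steps++;
        }
    }
}
```

The result is the 1D array of (distance, angle) pairs the slide describes; a reactive behavior can consume it exactly as it would a ring of physical range sensors.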

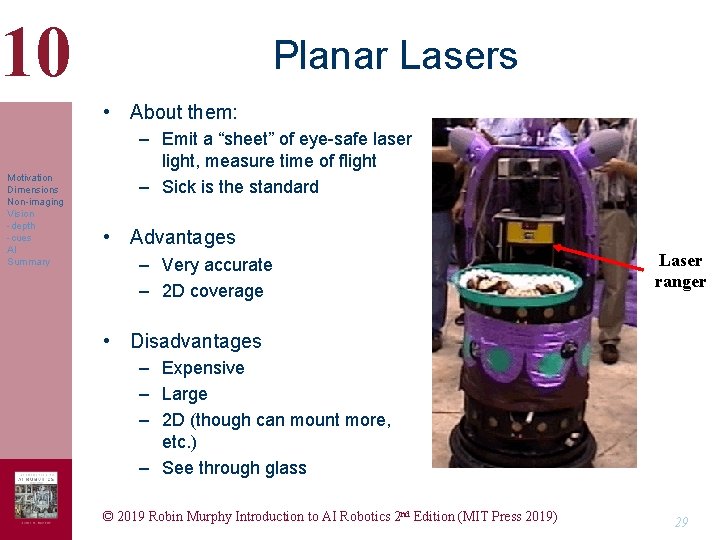

Planar Lasers
• About them:
  – Emit a “sheet” of eye-safe laser light, measure time of flight
  – Sick is the standard
• Advantages
  – Very accurate
  – 2D coverage
• Disadvantages
  – Expensive
  – Large
  – 2D (though can mount more, etc.)
  – See through glass
Pictured: laser ranger

Brief Summary of Non-Imaging Sensors
• Proprioceptive
  – Needed
• Exteroceptive
  – Ultrasonics: problematic
  – Lasers: costs coming down
  – IR: unreliable but may work for niches
• Occupancy grid is a hack, serving as a virtual sensor

Computer Vision: Navigation
• Works on any image, regardless of source (video, thermal imagery, …)
  – Optic flow, looming
  – Stereo range maps
  – Depth from X algorithms
  – Intriguing hacks
• Note: most computer vision work focuses on a single image, but is now beginning to look at sequences
Computer vision refers to processing data from any modality which uses the electromagnetic spectrum to produce an image

Optic Flow
• About them
  – Affordance of time-to-contact
• Advantages
  – Affordance derived from camera (which you’d probably have for other reasons)
• Disadvantages
  – Computationally expensive; if not on chip, unlikely to operate fast enough
  – Typically brittle under lighting conditions, different speeds, environments with uniform texture
www.centeye.com

Stereo Range Maps
• About them
  – Use two cameras
• Advantages
  – Passive
  – Good coverage
• Disadvantages
  – Environment may not support interest operators
  – Computationally expensive
  – Sensitive to calibration
  – Sensitive to lighting

Depth from X
• About them
  – Usually single camera, single image or short image sequence
  – Useful variants include
    • Depth from motion
    • Depth from focus
    • Depth from texture
• Advantages
  – Nice theory, often computationally optimized
  – Usually have a camera anyway
• Disadvantages
  – Tend to be brittle outside of highly controlled conditions

Outdoor Reactive Navigation Challenges
• Most everything has been developed for indoors
• Pressing outdoor needs
  – Foliage: tall grass == granite rock
  – Terrain anticipation
• Outdoor challenges
  – Lighting and shadows
  – Weather conditions
  – Sparse objects
  – Texture or lack of texture

Sensing for Behavioral Navigation Summary
• Have to decide between nearest protrusion and range maps
• Planar lasers work best but are expensive and big, and you need several or a spinning head
• Ultrasonics were popular because they are cheap and give distance, but now are hardly ever used due to their problems with specular reflection
• IR are almost never used for reliable navigation, usually just “panic” stops
• Computer vision is challenging due to lighting conditions
  – Optic flow chips can provide time-to-contact
  – Stereo can provide partial range maps
• Outdoor conditions are significantly harder than indoor

Object Recognition
• For purely reactive systems, no reasoning, just affordances
• Typically recognize specific things by pattern of color, heat signature, fusion of these algorithms, or through Hough transform

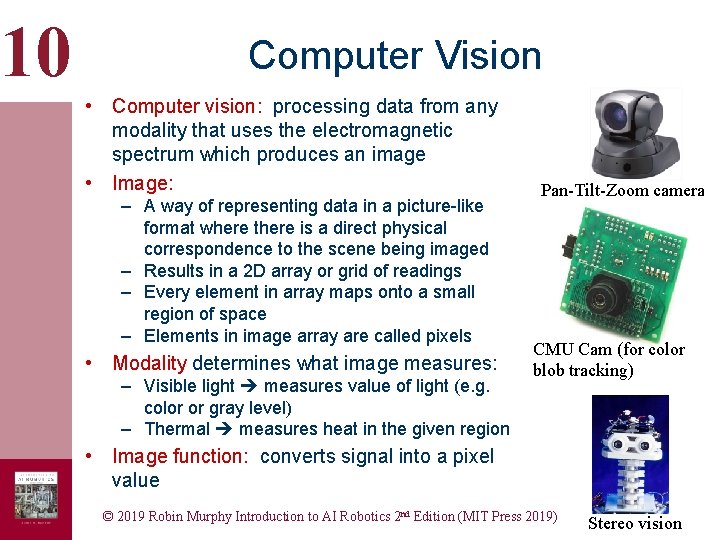

Computer Vision
• Computer vision: processing data from any modality that uses the electromagnetic spectrum and produces an image
• Image:
  – A way of representing data in a picture-like format where there is a direct physical correspondence to the scene being imaged
  – Results in a 2D array or grid of readings
  – Every element in array maps onto a small region of space
  – Elements in image array are called pixels
• Modality determines what image measures:
  – Visible light measures value of light (e.g., color or gray level)
  – Thermal measures heat in the given region
• Image function: converts signal into a pixel value
Pictured: pan-tilt-zoom camera, CMU Cam (for color blob tracking), stereo vision

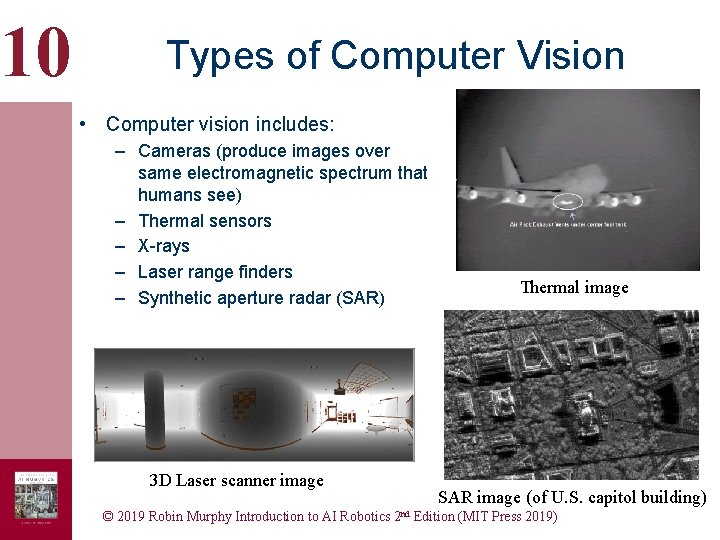

Types of Computer Vision
• Computer vision includes:
  – Cameras (produce images over same electromagnetic spectrum that humans see)
  – Thermal sensors
  – X-rays
  – Laser range finders
  – Synthetic aperture radar (SAR)
Pictured: 3D laser scanner image, thermal image, SAR image (of U.S. Capitol building)

Computer Vision is a Field of Study on its Own
• Computer vision field has developed algorithms for:
  – Noise filtering
  – Compensating for illumination problems
  – Enhancing images
  – Finding lines
  – Matching lines to models
  – Extracting shapes and building 3D representations
• However, behavior-based/reactive robots tend not to use these algorithms, due to high computational complexity

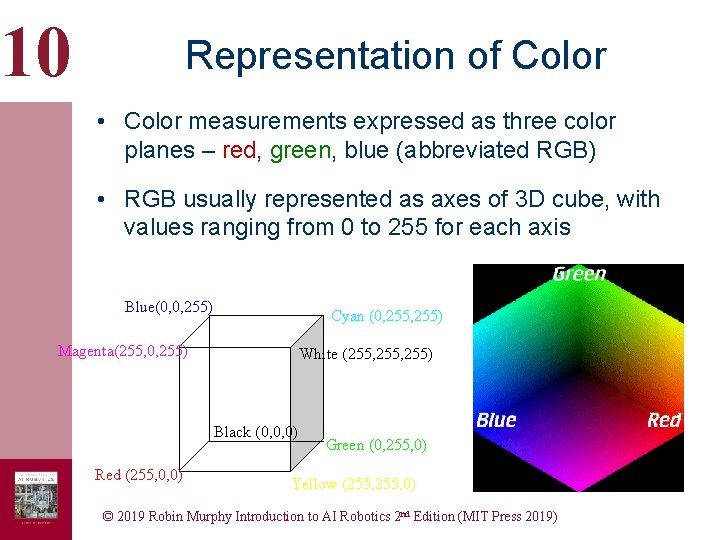

Representation of Color
• Color measurements expressed as three color planes: red, green, blue (abbreviated RGB)
• RGB usually represented as axes of a 3D cube, with values ranging from 0 to 255 for each axis
• Corners of the cube:
  – Black (0, 0, 0)
  – Red (255, 0, 0)
  – Green (0, 255, 0)
  – Blue (0, 0, 255)
  – Yellow (255, 255, 0)
  – Cyan (0, 255, 255)
  – Magenta (255, 0, 255)
  – White (255, 255, 255)

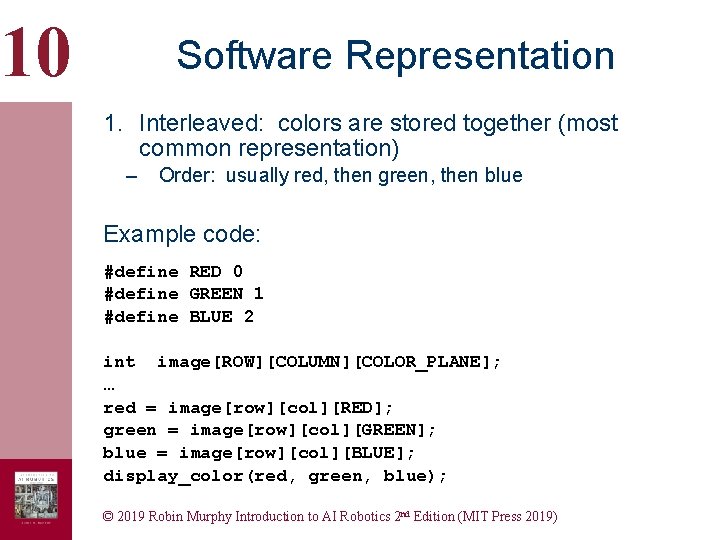

Software Representation
1. Interleaved: colors are stored together (most common representation)
  – Order: usually red, then green, then blue
Example code:

    #define RED 0
    #define GREEN 1
    #define BLUE 2

    int image[ROW][COLUMN][COLOR_PLANE];
    …
    red = image[row][col][RED];
    green = image[row][col][GREEN];
    blue = image[row][col][BLUE];
    display_color(red, green, blue);

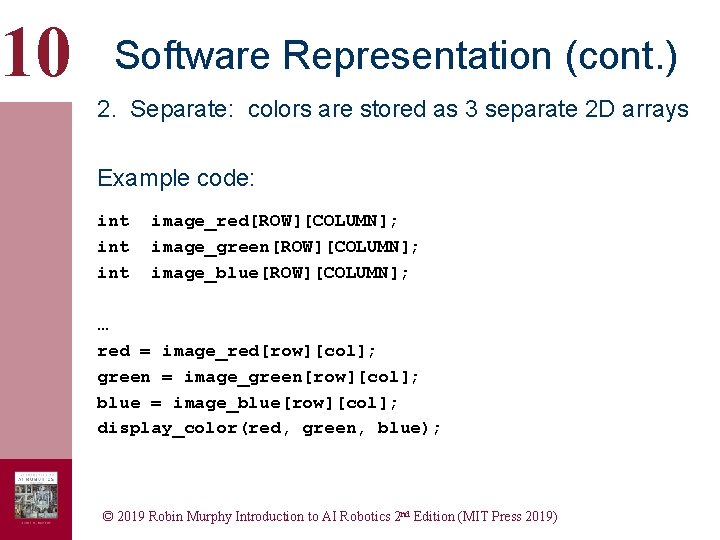

Software Representation (cont.)
2. Separate: colors are stored as 3 separate 2D arrays
Example code:

    int image_red[ROW][COLUMN];
    int image_green[ROW][COLUMN];
    int image_blue[ROW][COLUMN];
    …
    red = image_red[row][col];
    green = image_green[row][col];
    blue = image_blue[row][col];
    display_color(red, green, blue);
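Image data often arrives as one flat byte buffer rather than a multidimensional array, in which case the two layouts differ only in index arithmetic. The sketch below shows both under that assumption; the helper names `get_interleaved` and `get_planar` are hypothetical, and the planar layout assumes the red plane is stored first, then green, then blue.

```c
#include <stddef.h>

enum { RED = 0, GREEN = 1, BLUE = 2, COLOR_PLANES = 3 };

/* Interleaved: the R, G, B values of one pixel are adjacent in memory. */
unsigned char get_interleaved(const unsigned char *buf, int cols,
                              int row, int col, int plane)
{
    return buf[((size_t)row * cols + col) * COLOR_PLANES + plane];
}

/* Separate (planar): all red values, then all green, then all blue. */
unsigned char get_planar(const unsigned char *buf, int rows, int cols,
                         int row, int col, int plane)
{
    return buf[(size_t)plane * rows * cols + (size_t)row * cols + col];
}
```

Interleaved storage keeps a pixel's three values together, which suits per-pixel tests such as color thresholding; separate planes suit operations that touch one color at a time.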

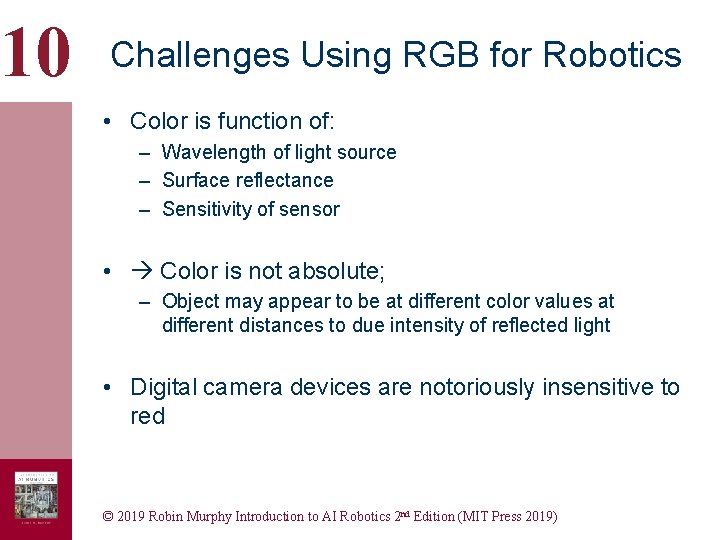

Challenges Using RGB for Robotics
• Color is a function of:
  – Wavelength of light source
  – Surface reflectance
  – Sensitivity of sensor
• Color is not absolute
  – Object may appear to be at different color values at different distances due to intensity of reflected light
• Digital camera devices are notoriously insensitive to red

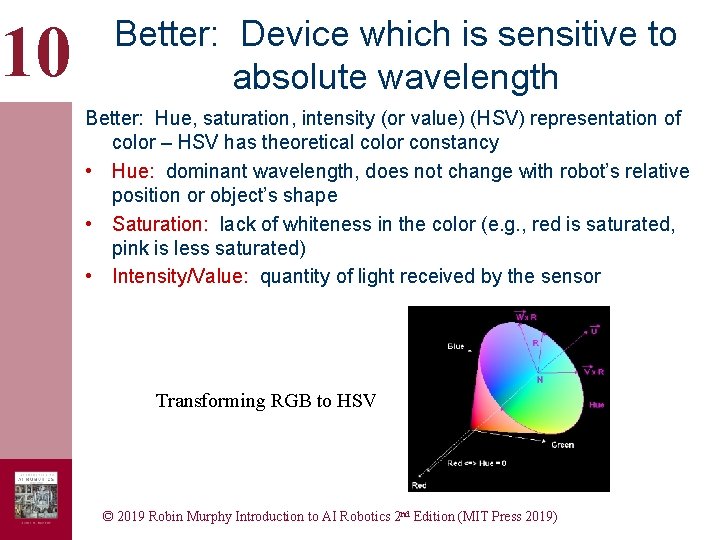

• Better: device which is sensitive to absolute wavelength
• Better: hue, saturation, intensity (or value) (HSV) representation of color
  – HSV has theoretical color constancy
    • Hue: dominant wavelength, does not change with robot’s relative position or object’s shape
    • Saturation: lack of whiteness in the color (e.g., red is saturated, pink is less saturated)
    • Intensity/Value: quantity of light received by the sensor
Transforming RGB to HSV
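The transform itself appears only in a figure that is not reproduced in this text, so the sketch below uses the widely used "hexcone" RGB-to-HSV conversion instead; it may differ in detail from the formula on the slide. The type `Hsv` and function `rgb_to_hsv` are names chosen for the example.

```c
/* h in [0, 360) degrees; s and v in [0, 1]. */
typedef struct {
    double h;
    double s;
    double v;
} Hsv;

/* Standard hexcone conversion from 8-bit RGB to HSV. */
Hsv rgb_to_hsv(unsigned char r8, unsigned char g8, unsigned char b8)
{
    double r = r8 / 255.0, g = g8 / 255.0, b = b8 / 255.0;
    double max = r, min = r;
    double delta;
    Hsv out;

    if (g > max) max = g;
    if (b > max) max = b;
    if (g < min) min = g;
    if (b < min) min = b;
    delta = max - min;

    out.v = max;                               /* value: strongest channel */
    out.s = (max > 0.0) ? delta / max : 0.0;   /* saturation: lack of whiteness */

    if (delta == 0.0)
        out.h = 0.0;                           /* gray: hue undefined, report 0 */
    else if (max == r)
        out.h = 60.0 * ((g - b) / delta);
    else if (max == g)
        out.h = 60.0 * ((b - r) / delta + 2.0);
    else
        out.h = 60.0 * ((r - g) / delta + 4.0);

    if (out.h < 0.0)
        out.h += 360.0;
    return out;
}
```

With this conversion, pure red (255, 0, 0) and pink (255, 128, 128) share hue 0 and differ mainly in saturation (1.0 versus about 0.5), matching the slide's red-versus-pink example.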

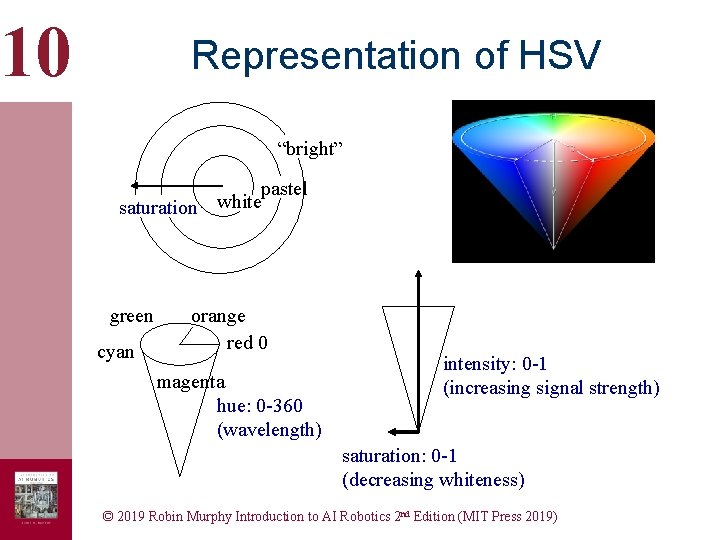

Representation of HSV
• Hue: 0-360 (wavelength)
• Saturation: 0-1 (decreasing whiteness)
• Intensity: 0-1 (increasing signal strength)
Figure labels: “bright”, pastel, saturation, white, red (0), orange, green, cyan, magenta

HSV Challenges for Robotics
• Requires special cameras and framegrabbers
• Very expensive equipment
• Alternative: use an algorithm to convert, the Spherical Coordinate Transform (SCT)
  – Transforms RGB data to a color space that more closely duplicates response of human eye
  – Used in biomedical imaging, but not widely used for robotics
  – Much more insensitive to lighting changes

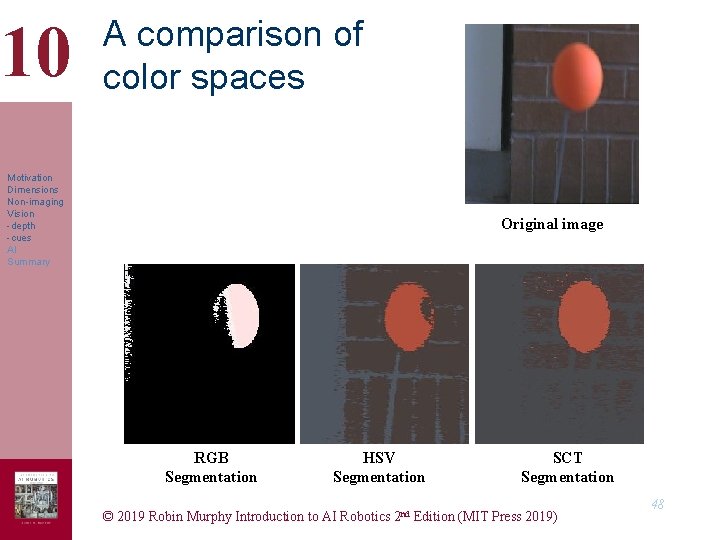

A comparison of color spaces
Panels: original image, RGB segmentation, HSV segmentation, SCT segmentation

Region Segmentation
• Region segmentation: most common use of computer vision in robotics, with the goal of identifying a region in the image with a particular color
• Basic concept: identify all pixels in the image which are part of the region, then navigate to the region’s centroid
• Steps:
  – Threshold all pixels which share same color (thresholding)
  – Group those together, throwing out any that don’t seem to be in same area as majority of the pixels (region growing)

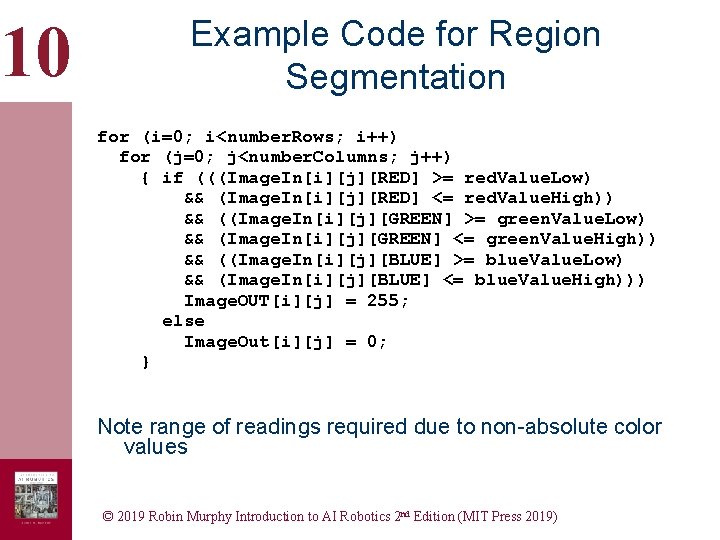

Example Code for Region Segmentation

    for (i = 0; i < numberRows; i++)
        for (j = 0; j < numberColumns; j++) {
            /* Pixel is in the region only if all three planes are in range */
            if (((ImageIn[i][j][RED] >= redValueLow) && (ImageIn[i][j][RED] <= redValueHigh)) &&
                ((ImageIn[i][j][GREEN] >= greenValueLow) && (ImageIn[i][j][GREEN] <= greenValueHigh)) &&
                ((ImageIn[i][j][BLUE] >= blueValueLow) && (ImageIn[i][j][BLUE] <= blueValueHigh)))
                ImageOut[i][j] = 255;
            else
                ImageOut[i][j] = 0;
        }

Note the range of readings required due to non-absolute color values

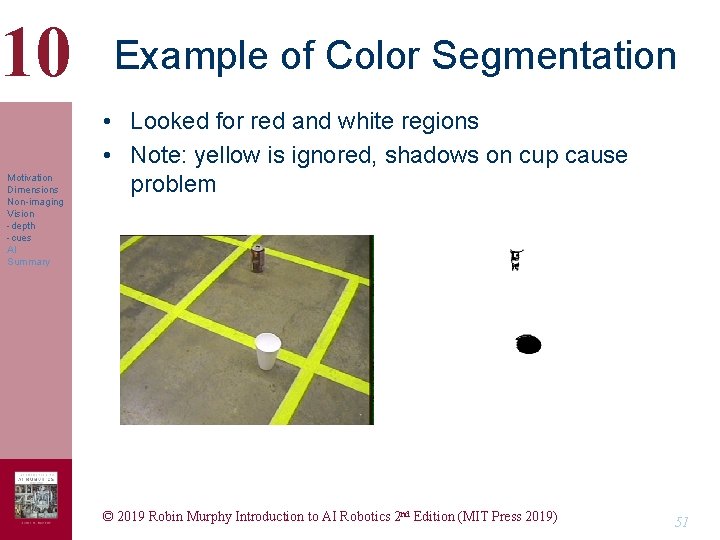

Example of Color Segmentation
• Looked for red and white regions
• Note: yellow is ignored, shadows on cup cause problem

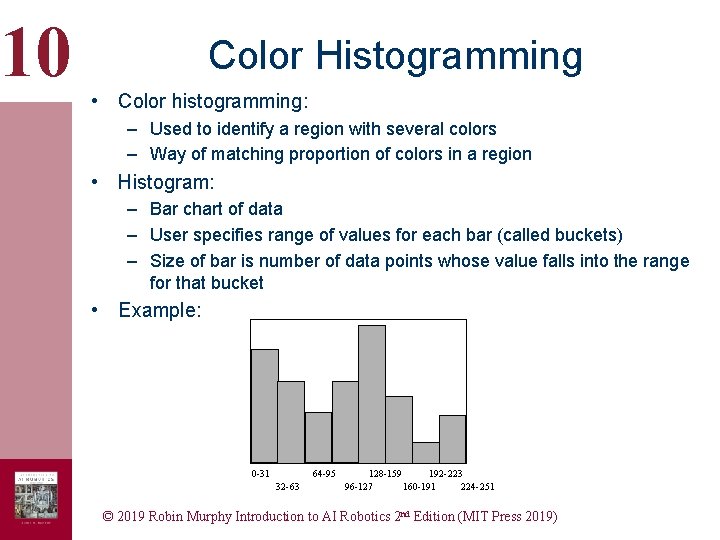

Color Histogramming
• Color histogramming:
  – Used to identify a region with several colors
  – Way of matching proportion of colors in a region
• Histogram:
  – Bar chart of data
  – User specifies range of values for each bar (called buckets)
  – Size of bar is number of data points whose value falls into the range for that bucket
• Example buckets: 0-31, 32-63, 64-95, 96-127, 128-159, 160-191, 192-223, 224-255
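A minimal sketch of the idea for a single color plane, using eight buckets of width 32. The slide does not say how proportions are matched; histogram intersection, used here, is one common choice and is an assumption of this example, as are the function names `histogram` and `histogram_intersection`.

```c
#define NUM_BUCKETS 8
#define BUCKET_WIDTH 32   /* 256 possible values / 8 buckets */

/* Count how many of the n values fall into each bucket. */
void histogram(const unsigned char *values, int n, int counts[NUM_BUCKETS])
{
    for (int b = 0; b < NUM_BUCKETS; b++)
        counts[b] = 0;
    for (int i = 0; i < n; i++)
        counts[values[i] / BUCKET_WIDTH]++;
}

/* Histogram intersection: the sum of per-bucket minima, normalized by
 * the size of the model histogram. 1.0 means the image region contains
 * at least the model's count in every bucket; 0.0 means no overlap. */
double histogram_intersection(const int image[NUM_BUCKETS],
                              const int model[NUM_BUCKETS])
{
    int overlap = 0, total = 0;
    for (int b = 0; b < NUM_BUCKETS; b++) {
        overlap += (image[b] < model[b]) ? image[b] : model[b];
        total += model[b];
    }
    return (total > 0) ? (double)overlap / total : 0.0;
}
```

For a full color signature, the same counting would be repeated per plane (or over a joint color index), and the match score compared against a threshold chosen for the application.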

Color Cueing Algorithms Summary
• Thresholding/color segmentation, blob analysis
  – Make a binary image with all pixels in color range
  – Each group of connected pixels == region (or blob)
  – Extract region statistics
    • Size (change in size can be tied to looming, relative position)
    • Centroid (where to aim)
  – Lots of neat program tricks exploiting arrays
    • Scan columns until find first region pixel to see where to avoid
• Color histogramming
  – Distinguish an object by the proportions of each color in its signature
• Problems with these algorithms
  – Color constancy is hard
    • Some colors/color spaces are better than others
  – Often have to do some pre-processing to clean up the image(s)
    • Mean/median filtering
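The two region statistics can be computed in one pass over the binary image. This sketch makes a simplifying assumption: it treats every set pixel as belonging to a single region and does no connected-component grouping, so stray pixels would skew the centroid. The image size and the names `RegionStats` and `region_stats` are illustrative.

```c
#define ROWS 4   /* assumed tiny image for illustration */
#define COLS 5

typedef struct {
    int size;              /* number of region pixels */
    double centroid_row;   /* -1 if the region is empty */
    double centroid_col;
} RegionStats;

/* Size and centroid of all pixels set to 255 in a thresholded image. */
RegionStats region_stats(unsigned char binary[ROWS][COLS])
{
    RegionStats s = { 0, -1.0, -1.0 };
    long sum_row = 0, sum_col = 0;

    for (int i = 0; i < ROWS; i++)
        for (int j = 0; j < COLS; j++)
            if (binary[i][j] == 255) {
                s.size++;
                sum_row += i;
                sum_col += j;
            }

    if (s.size > 0) {
        s.centroid_row = (double)sum_row / s.size;
        s.centroid_col = (double)sum_col / s.size;
    }
    return s;
}
```

A behavior could steer toward `centroid_col` relative to the image center and use growth in `size` between frames as a looming cue.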

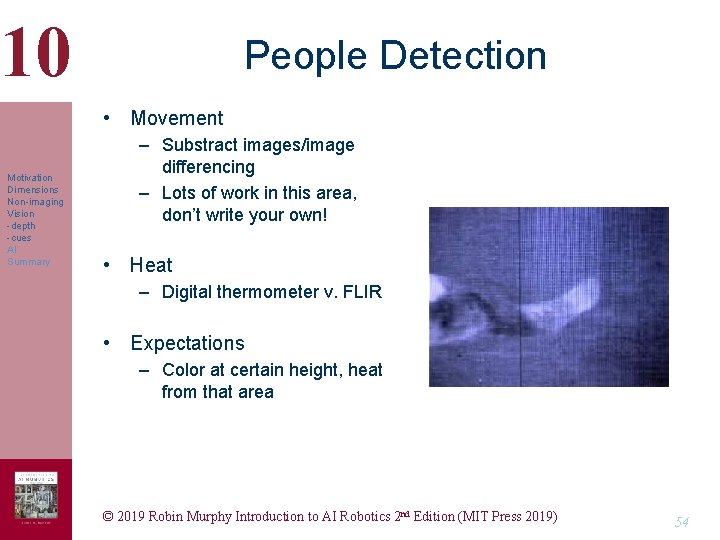

People Detection
• Movement
  – Subtract images/image differencing
  – Lots of work in this area, don’t write your own!
• Heat
  – Digital thermometer vs. FLIR
• Expectations
  – Color at certain height, heat from that area

Choosing Sensors and Sensing
• Three concepts for choosing sensors and sensing
  – Logical or equivalent sensors: it may be possible to generate the same percept from different sensors or algorithms
  – Behavioral sensor fusion, which describes the general methods of combining sensors to get a single percept or to support a complex behavior
  – Attributes of a sensor suite that can be used to help design a system

Sensor Modalities
• Sensor modality:
  – Sensors which measure same form of energy and process it in similar ways
  – “Modality” refers to the raw input used by the sensors
• Different modalities:
  – Sound
  – Pressure
  – Temperature
  – Light
    • Visible light
    • Infrared light
  – X-rays
  – Etc.

Logical Sensors
• Logical sensor:
  – Unit of sensing or module that supplies a particular percept
  – Consists of: signal processing from physical sensor, plus software processing needed to extract the percept
  – Can be easily implemented as a perceptual schema
• Logical sensor contains all available alternative methods of obtaining a particular percept
  – Example: to obtain a 360° polar plot of range data, can use:
    • Sonar
    • Laser
    • Stereo vision
    • Texture
    • Etc.

Logical Sensors (cont.)
• Logical sensors can be used interchangeably if they return the same percept
• However, not necessarily equivalent in performance or update rate
• Logical sensors very useful for building-block effect: recursive, reusable, modular, etc.
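One way to picture a logical sensor in code is as a table of interchangeable methods that all fill in the same percept. The sketch below is a hypothetical illustration, not an interface from the book: the stub functions stand in for real sonar and laser drivers, and the "try each alternative in order" policy is an assumption.

```c
#define NUM_BEARINGS 4   /* assumed coarse polar plot: one range per 90 degrees */

/* Every alternative fills the same percept and returns 1 on success. */
typedef int (*RangeMethod)(double ranges_m[NUM_BEARINGS]);

typedef struct {
    const char *name;
    RangeMethod read;
} Alternative;

/* Stub standing in for a sonar driver that currently fails. */
int sonar_stub(double ranges_m[NUM_BEARINGS])
{
    (void)ranges_m;
    return 0;
}

/* Stub standing in for a laser driver that reports 2 m everywhere. */
int laser_stub(double ranges_m[NUM_BEARINGS])
{
    for (int i = 0; i < NUM_BEARINGS; i++)
        ranges_m[i] = 2.0;
    return 1;
}

/* The logical sensor: try each alternative until one yields the percept.
 * Returns the index of the method used, or -1 if none succeeded. */
int polar_plot_sensor(const Alternative *alts, int n,
                      double ranges_m[NUM_BEARINGS])
{
    for (int i = 0; i < n; i++)
        if (alts[i].read(ranges_m))
            return i;
    return -1;
}
```

The behavior that consumes the polar plot never needs to know which physical sensor produced it, which is the interchangeability the slide describes; performance and update rate can still differ between alternatives.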

Behavioral Sensor Fusion
• Sensor suite: set of sensors for a particular robot
• Sensor fusion: any process that combines information from multiple sensors into a single percept
• Multiple sensors used when:
  – A particular sensor is too imprecise or noisy to give reliable data
• Sensor reliability problems:
  – False positive:
    • Sensor leads robot to believe a percept is present when it isn’t
  – False negative:
    • Sensor causes robot to miss a percept that is actually present
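The two reliability problems can be made concrete by comparing a sensor's reports against known ground truth. The sketch below is illustrative only; the names `ErrorCounts` and `count_errors` are made up for the example, and it assumes each trial is recorded as 1 (percept reported or present) or 0.

```c
typedef struct {
    int false_positives;   /* reported present, actually absent */
    int false_negatives;   /* reported absent, actually present */
} ErrorCounts;

/* Compare n sensor reports against ground truth for the same trials. */
ErrorCounts count_errors(const int *reported, const int *actual, int n)
{
    ErrorCounts e = { 0, 0 };
    for (int i = 0; i < n; i++) {
        if (reported[i] && !actual[i])
            e.false_positives++;
        else if (!reported[i] && actual[i])
            e.false_negatives++;
    }
    return e;
}
```

Counts like these, gathered in the target environment, are one way to decide whether a single sensor is reliable enough or whether a second sensor is needed.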

Three Types of Multiple Sensor Combinations
1. Redundant (or competing)
  – Sensors return the same percept
  – Physical vs. logical redundancy:
    • Physical redundancy:
      – Multiple copies of same type of sensor
      – Example: two rings of sonar placed at different heights
    • Logical redundancy:
      – Return identical percepts, but use different modalities or processing algorithms
      – Example: range from stereo cameras vs. laser range finder

Three Types of Multiple Sensor Combinations (cont.)
2. Complementary
  – Sensors provide disjoint types of information about a percept
  – Example: thermal sensor for detecting heat + camera for detecting motion
3. Coordinated
  – Use a sequence of sensors
  – Example: cueing or focus-of-attention; see motion, then activate more specialized sensor

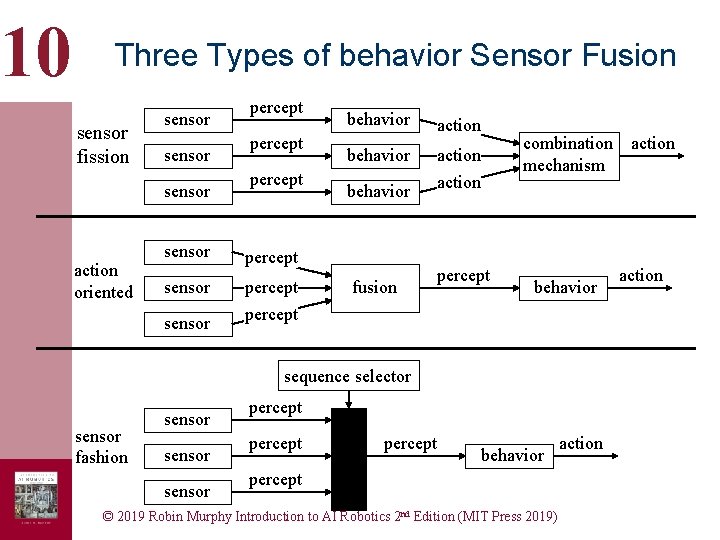

Three Types of Behavioral Sensor Fusion
Figure: diagrams of sensor fission, action-oriented sensor fusion, and sensor fashion. Diagram labels: sensor, percept, behavior, action, fusion, combination mechanism, sequence selector.

Returning to Questions
• How do you make a robot “see”?
  – Sensor or transducer provides a measurement, which is then transformed into a percept
  – Non-imaging sensors
    • Pose, location, range
  – Imaging sensors
  – Active or passive
  – Transformations are either
    • Direct perception
      – Color, motion, heat segmentation and blob analysis
    • Recognition
      – Object recognition: semantics, models
      – Construction of 3D representation from 2D sensor data

Returning to Questions
• What sensors are essential for a robot?
  – Movement and navigation
    • Proprioception: joint, wheel encoders; accelerometers
    • Exteroception: at least a camera, range is helpful
    • Exproprioception: put the camera or haptics in the right place!
    • GPS
  – Health
    • Power
    • Communications status (heart beat)
    • General diagnostics!
  – Two-way audio
  – MISSION SENSORS

Returning to Questions
• What’s sensor fusion?
  – Getting a single “percept”
  – Control theoretic methods use digital signal processing to try to align and synchronize images
  – Behavioral: fusion, fission, fashion
    • Create combinations of redundant (competing), complementary, coordinated sensors
    • May require evidential reasoning

Summary
• Navigation needs depth perception
  – Lasers are preferred but have many drawbacks
  – Don’t use sonars
• Sometimes create occupancy grids to improve certainty, then treat occupancy grid as the sensor output (virtual sensor)
• Reactive “object recognition” exploits (or engineers) cues such as color, heat; sometimes can use a simple Hough transform
• You don’t want to “grow your own” vision algorithms, especially computer vision: there’s a wealth of algorithms out there
