
10 Sensing
• How do you make a robot “see”?
• What sensors are essential for a robot?
• What’s sensor fusion?
• Doesn’t the Microsoft Kinect solve everything?
© 2019 Robin Murphy, Introduction to AI Robotics, 2nd Edition (MIT Press 2019)

Note: This is based on a 2010 lecture, so “8” appears as the module number.

Objectives
• List at least one advantage and disadvantage of common robotic sensors: GPS, INS, ultrasonics, laser stripers, IR rangers, laser rangers, computer vision
• Given a small interleaved RGB image and a range of color values for a region, be able to extract color affordances using 1) a threshold on color and 2) a color histogram
• Be able to construct an occupancy grid and use polar plots for reactive navigation
• Define each of the following terms in one or two sentences: proprioception, exteroception, exproprioception, proximity sensor, logical sensor, false positive, false negative, hue, saturation, image, pixel, image function, computer vision, GPS-denied area
• Describe three types of behavioral sensor fusion: fission, fusion, fashion

Return to Layers
• In the behavioral layer, sensing…
  – Supports a behavior
  – Releases a behavior
• In the deliberative layer, sensing…
  – Recognizes and aids with reasoning about objects
  – Builds a world model
• In the distributed layer, social sensing…
  – Is sensitive to proximity and targets affect

This Module
• In the behavioral layer, sensing…
  – Supports a behavior
  – Releases a behavior
• In the deliberative layer, sensing…
  – Recognizes objects
  – Builds a world model, when it acts as a virtual sensor
• In the distributed layer, social sensing…
  – Is sensitive to proximity and targets affect

Ways of Organizing Sensors
NOOOO!!!! Not more lists!!!!

Ways of Organizing Sensors
• 3 types of perception
  – Proprioceptive, exteroceptive, exproprioceptive
• Input
  – Active vs. passive
• Output
  – Image vs. non-image

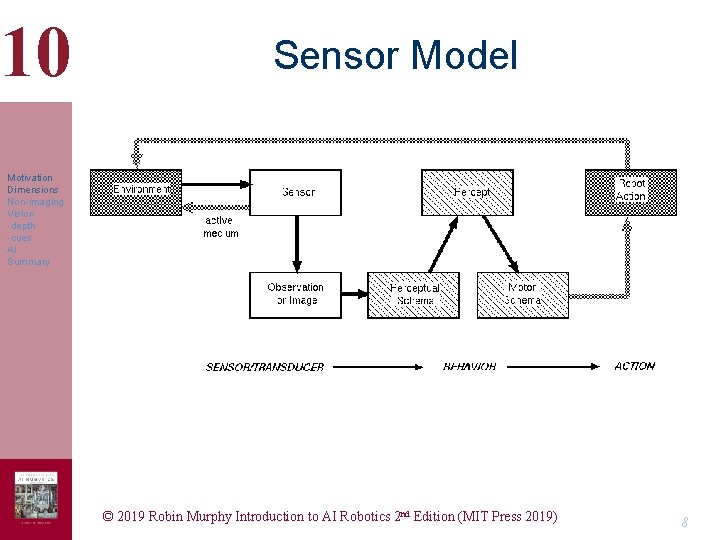

Sensor Model

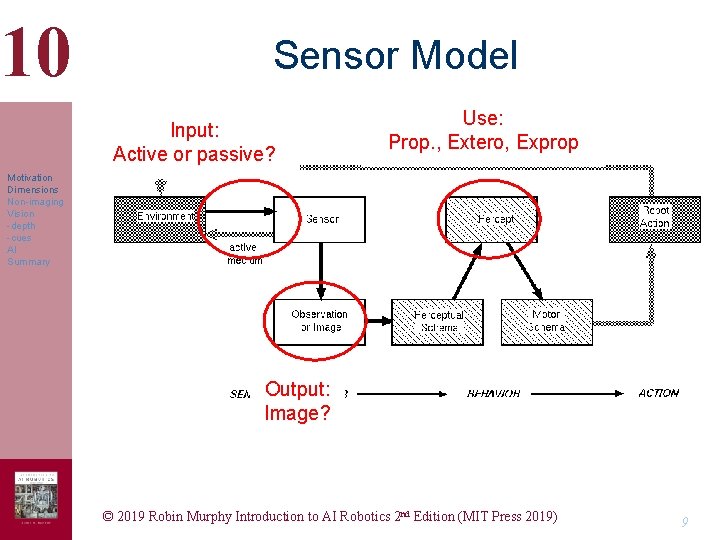

Sensor Model
• Input: Active or passive?
• Use: Proprioceptive, exteroceptive, or exproprioceptive?
• Output: Image?

Types of Sensors
• Proprioception
  – Self-control
• Exteroception
  – Navigation
  – Object recognition
• Exproprioception
  – Usually vision

Recap of What You Saw
• Lack of proprioception (corrected on newer models)
• Exteroception via vision indicated the robot was at the stairs
• Exproprioception via vision was attempted (operator shifting viewpoints) but unsuccessful
Lack of proprioception and exproprioception = problems

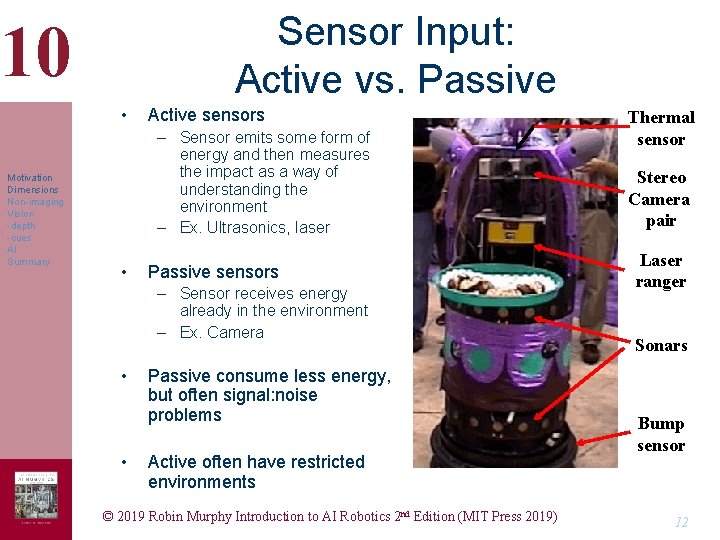

Sensor Input: Active vs. Passive
• Active sensors
  – Sensor emits some form of energy and then measures the impact as a way of understanding the environment
  – Ex. ultrasonics, laser
• Passive sensors
  – Sensor receives energy already in the environment
  – Ex. camera
• Passive sensors consume less energy, but often have signal-to-noise problems
• Active sensors often work only in restricted environments
Pictured: thermal sensor, stereo camera pair, laser ranger, sonars, bump sensor

Sensor Output: Imagery vs. Observation
• Observation
  – Single value or vector
• Image
  – A picture-like format where there is a direct physical correspondence to the scene being imaged
  – Has an image function which maps a signal onto a pixel value
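As a concrete illustration of an image function, the sketch below maps a grid of thermal readings to 8-bit gray pixels. The linear mapping, the 0 to 100 °C span, and the names `temperature_to_pixel` and `make_image` are assumptions for illustration, not the function any particular camera uses.

```python
def temperature_to_pixel(temp_c, t_min=0.0, t_max=100.0):
    """Hypothetical linear image function: map one temperature reading (deg C)
    to an 8-bit gray value; readings outside [t_min, t_max] are clipped."""
    clipped = min(max(temp_c, t_min), t_max)
    return round(255 * (clipped - t_min) / (t_max - t_min))


def make_image(readings, t_min=0.0, t_max=100.0):
    """Apply the image function to a 2D grid of readings. Pixel (row, col)
    keeps the row/column of the scene location it came from, which is the
    direct physical correspondence that makes the output an image."""
    return [[temperature_to_pixel(t, t_min, t_max) for t in row] for row in readings]


# A 2 x 3 patch of thermal readings; one reading on its own is just an observation.
thermal = [[20.0, 37.0, 100.0],
           [-5.0, 50.0, 0.0]]
image = make_image(thermal)
print(image)  # [[51, 94, 255], [0, 128, 0]]
```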

Popular Non-Imagery Navigation Sensors
• Exteroception at a distance is the key
• Direct contact sensors
  – Bump sensors, whiskers
• “Look ahead” sensors
  – Measure range directly
    • IR rangers
    • Ultrasonics
    • Laser stripers/rangers

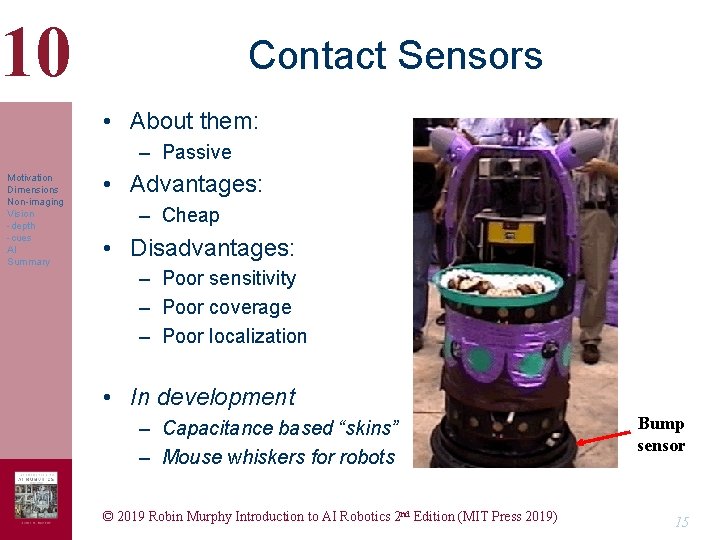

Contact Sensors
• About them:
  – Passive
• Advantages:
  – Cheap
• Disadvantages:
  – Poor sensitivity
  – Poor coverage
  – Poor localization
• In development
  – Capacitance-based “skins”
  – Mouse whiskers for robots
Pictured: bump sensor

Infrared and Thermal
• Actually a spectrum of wavelengths, often emitted from heat
• “IR” is cheap, used in remotes
• True infrared, FLIR (forward-looking infrared), produces thermal imagery
  – Breakthrough in micro-bolometers
• Night vision is not really IR; it’s light amplification

IR
• About them:
  – Usually a point sensor, active
  – Emits a particular wavelength, then detects time to bounce back
  – Popular for indoor detection of collisions and “negative obstacles” (drop-offs such as stairs)
• Advantages
  – Cheap
  – Can also detect dark/light surfaces (via return strength)
• Disadvantages
  – Sensitive to lighting conditions
  – Specular reflection
  – Covers only a line
  – Short range, so the robot can’t go fast

IR: In Development
• Canesta range sensor, Swiss Ranger
  – Make an array of miniature IR range sensors
Was this really THAT innovative?

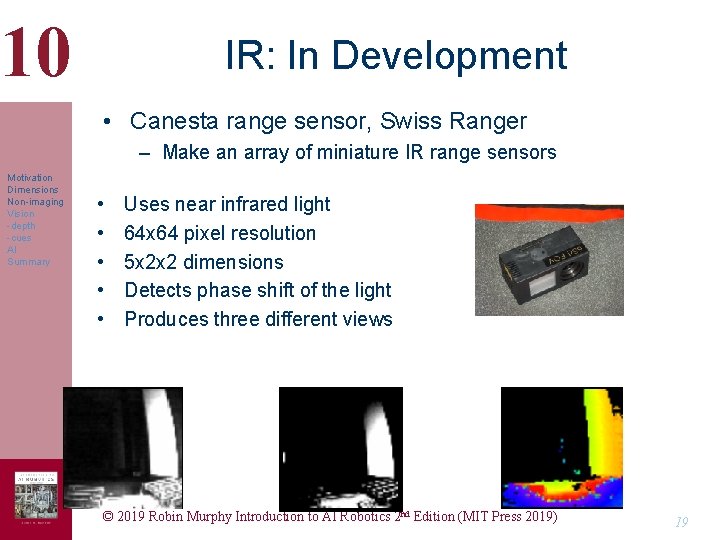

IR: In Development
• Canesta range sensor, Swiss Ranger
  – Make an array of miniature IR range sensors
  – Uses near-infrared light
  – 64 x 64 pixel resolution
  – 5 x 2 x 2 dimensions
  – Detects phase shift of the light
  – Produces three different views
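The “detects phase shift of the light” bullet can be made concrete with the standard continuous-wave time-of-flight relation: light modulated at frequency f that returns with phase shift Δφ has traveled a round trip of c·Δφ/(2πf), so the target is at d = c·Δφ/(4πf). The sketch below assumes a 20 MHz modulation frequency purely for illustration; the slide does not give these sensors' actual modulation frequencies, and the function names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def distance_from_phase(phase_shift_rad, mod_freq_hz):
    """Target distance from the phase shift of amplitude-modulated light.
    Round trip 2d takes 2d/c seconds, so phase = 2*pi*f*(2d/c) and
    d = c * phase / (4*pi*f). Phase is only known modulo 2*pi."""
    phase = phase_shift_rad % (2 * math.pi)
    return SPEED_OF_LIGHT * phase / (4 * math.pi * mod_freq_hz)


def ambiguity_range(mod_freq_hz):
    """Largest unambiguous distance: farther targets wrap around and
    appear closer than they are."""
    return SPEED_OF_LIGHT / (2 * mod_freq_hz)


f_mod = 20e6  # assumed modulation frequency (Hz), for illustration only
print(round(ambiguity_range(f_mod), 3))                   # 7.495
print(round(distance_from_phase(math.pi / 2, f_mod), 3))  # 1.874
```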

Example of Canesta
• Video from Fall 2005 class (group project)

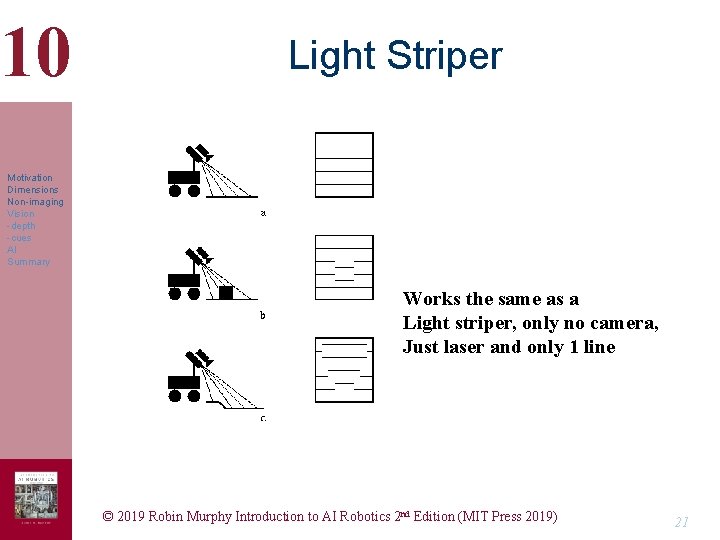

Light Striper
Works the same as a light striper, only with no camera: just a laser and only one line

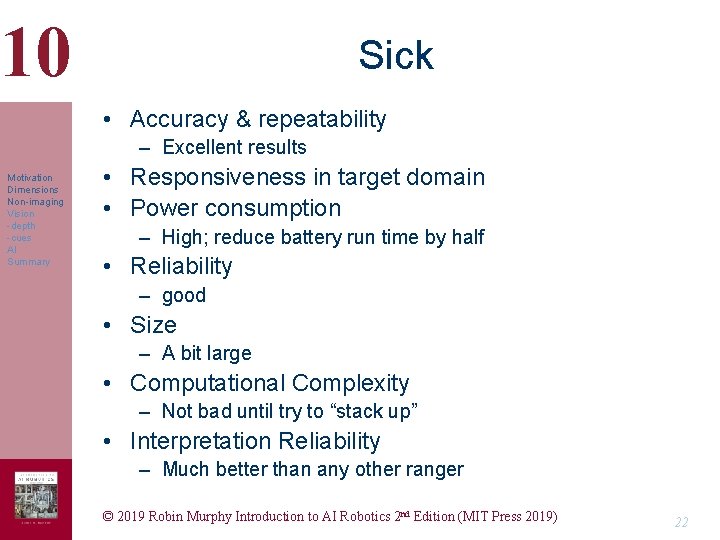

Sick
• Accuracy & repeatability
  – Excellent results
• Responsiveness in target domain
• Power consumption
  – High; reduces battery run time by half
• Reliability
  – Good
• Size
  – A bit large
• Computational complexity
  – Not bad until you try to “stack up”
• Interpretation reliability
  – Much better than any other ranger

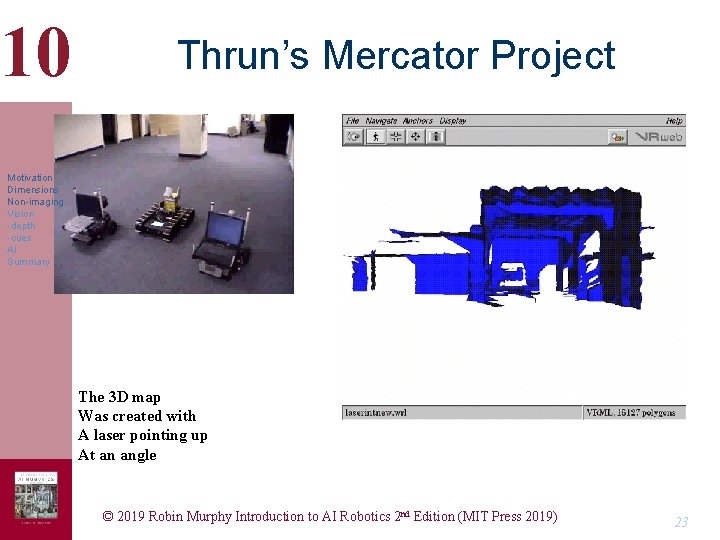

Thrun’s Mercator Project
The 3D map was created with a laser pointing up at an angle

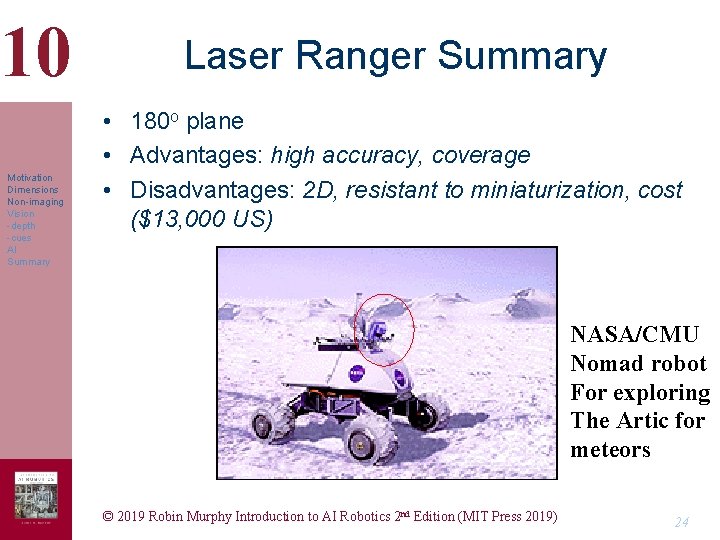

Laser Ranger Summary
• 180° plane
• Advantages: high accuracy, coverage
• Disadvantages: 2D, resistant to miniaturization, cost ($13,000 US)
Pictured: NASA/CMU Nomad robot for exploring the Antarctic for meteorites

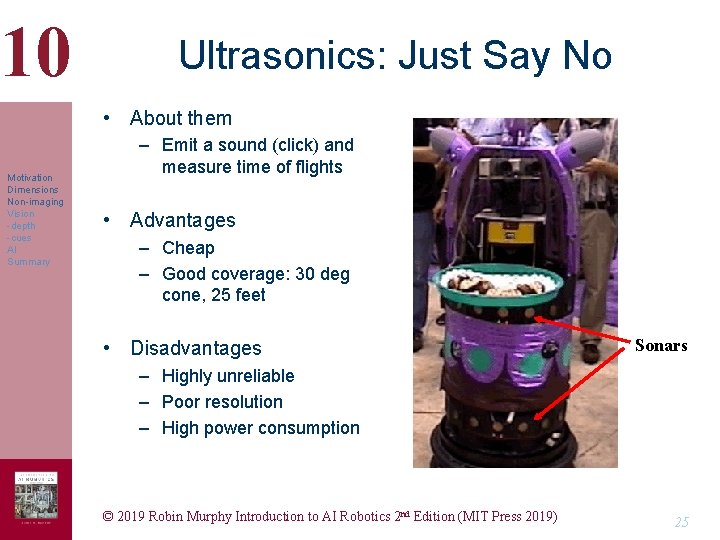

Ultrasonics: Just Say No
• About them
  – Emit a sound (click) and measure time of flight
• Advantages
  – Cheap
  – Good coverage: 30° cone, 25 feet
• Disadvantages
  – Highly unreliable
  – Poor resolution
  – High power consumption
Pictured: sonars
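Time of flight turns into range by halving the round-trip path. The sketch assumes the speed of sound in dry air at about 20 °C (343 m/s) and uses the 30° cone from the slide to show why a single return localizes an object poorly: it only says that something lies on an arc at that range. Function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, dry air at about 20 deg C (assumed)


def tof_range(echo_time_s, speed=SPEED_OF_SOUND):
    """Range from a time-of-flight reading: the click travels out and back,
    so the distance to the reflecting surface is half the round-trip path."""
    return speed * echo_time_s / 2.0


def arc_length(range_m, cone_deg=30.0):
    """Width of the arc on which the echo could have originated; any object
    on this arc inside the cone would produce the same reading."""
    return range_m * math.radians(cone_deg)


r = tof_range(0.010)  # 10 ms echo
print(round(r, 3))                 # 1.715
print(round(arc_length(7.62), 2))  # 3.99 (25 ft is about 7.62 m)
```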

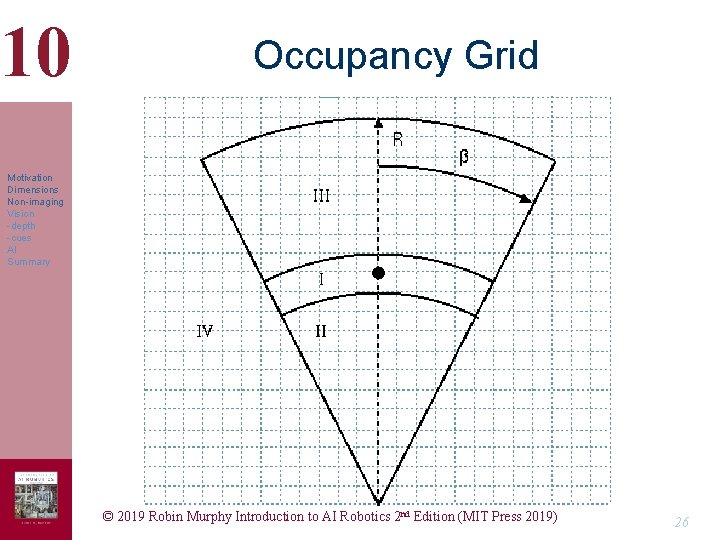

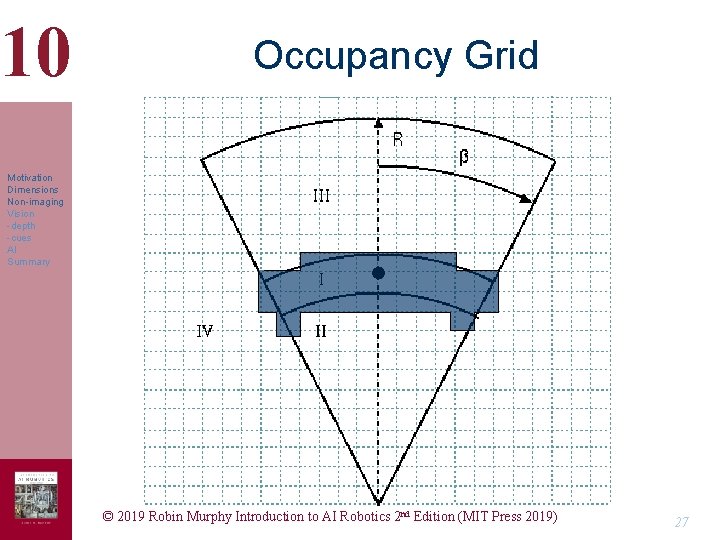

Occupancy Grid

Occupancy Grid

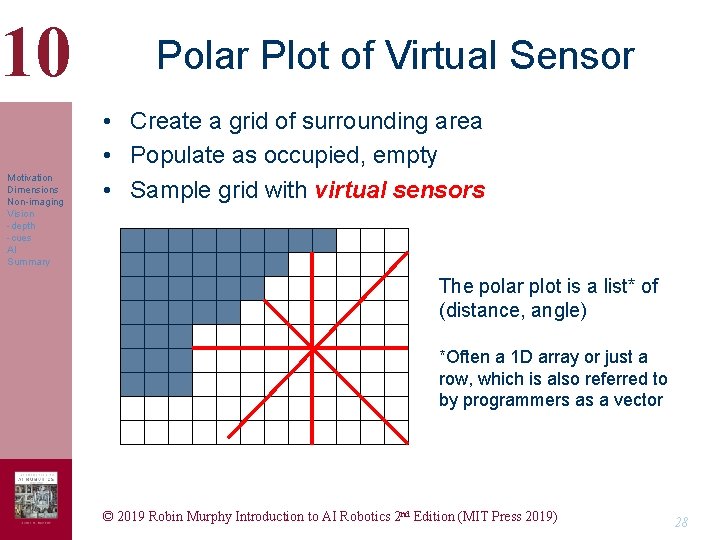

Polar Plot of Virtual Sensor
• Create a grid of the surrounding area
• Populate as occupied, empty
• Sample grid with virtual sensors
The polar plot is a list* of (distance, angle)
*Often a 1D array or just a row, which programmers also refer to as a vector
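The three steps on the slide can be sketched as follows, assuming a grid that is already populated with known occupied cells (building it from noisy sensor readings is the harder part and is not shown). The cell units, the ray-marching step, and all function names are illustrative assumptions; heading toward the most open direction is one simple reactive use of the polar plot, not the only one.

```python
import math


def make_grid(width, height, occupied_cells):
    """Occupancy grid: grid[y][x] is True if occupied, False if empty."""
    grid = [[False] * width for _ in range(height)]
    for x, y in occupied_cells:
        grid[y][x] = True
    return grid


def virtual_sensor(grid, x0, y0, angle_deg, max_range, step=0.5):
    """Cast a ray from the center of cell (x0, y0) and return the distance
    (in cells) to the first occupied cell, or max_range if none is hit
    (including when the ray leaves the map)."""
    dx = math.cos(math.radians(angle_deg))
    dy = math.sin(math.radians(angle_deg))
    d = step
    while d <= max_range:
        x = math.floor(x0 + 0.5 + d * dx)
        y = math.floor(y0 + 0.5 + d * dy)
        if not (0 <= y < len(grid) and 0 <= x < len(grid[0])):
            break
        if grid[y][x]:
            return d
        d += step
    return max_range


def polar_plot(grid, x0, y0, angles, max_range):
    """Sample the grid with one virtual sensor per angle: list of (distance, angle)."""
    return [(virtual_sensor(grid, x0, y0, a, max_range), a) for a in angles]


def most_open_direction(plot):
    """Simple reactive use: head toward the angle with the most free space."""
    return max(plot, key=lambda p: p[0])[1]


# 10 x 10 grid with a wall in column x = 7; robot in cell (2, 5)
grid = make_grid(10, 10, [(7, y) for y in range(10)])
plot = polar_plot(grid, 2, 5, [0, 90, 180, 270], max_range=8)
print(plot)                       # [(4.5, 0), (8, 90), (8, 180), (8, 270)]
print(most_open_direction(plot))  # 90
```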

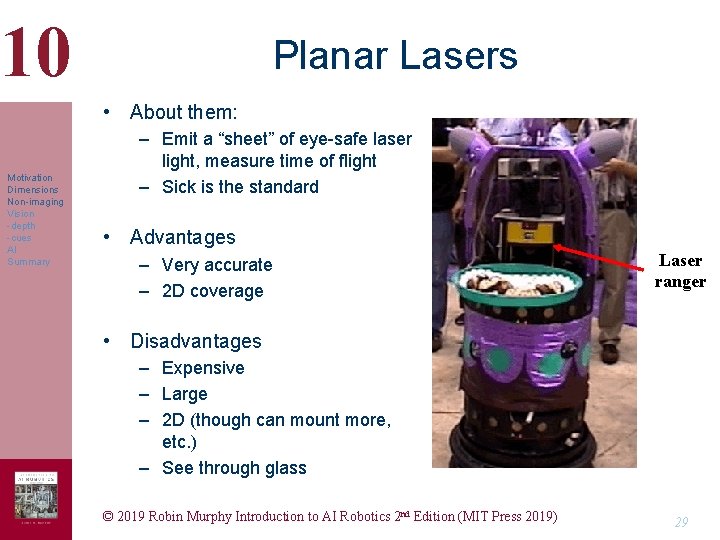

Planar Lasers
• About them:
  – Emit a “sheet” of eye-safe laser light, measure time of flight
  – Sick is the standard
• Advantages
  – Very accurate
  – 2D coverage
• Disadvantages
  – Expensive
  – Large
  – 2D (though can mount more, etc.)
  – See through glass, so glass walls and doors go undetected
Pictured: laser ranger
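To see what “2D coverage” means in practice, here is a sketch that converts one planar scan into (x, y) points in the sensor frame. It assumes equally spaced beams across a 180° field of view, ordered right to left with 0° straight ahead; real scanners differ in angular resolution and beam ordering, so check the device's documentation. Every point lands in the same plane, which is why obstacles above or below the sheet are missed. The function name is hypothetical.

```python
import math


def scan_to_points(ranges, fov_deg=180.0, max_range=None):
    """Convert one planar laser scan to (x, y) points in the sensor frame.
    Beams are assumed equally spaced from -fov/2 (right) to +fov/2 (left),
    with x pointing straight ahead. Readings at or beyond max_range are
    treated as 'no return' and dropped."""
    n = len(ranges)
    if n < 2:
        raise ValueError("need at least two beams")
    step = fov_deg / (n - 1)
    points = []
    for i, r in enumerate(ranges):
        if max_range is not None and r >= max_range:
            continue
        a = math.radians(-fov_deg / 2.0 + i * step)
        points.append((r * math.cos(a), r * math.sin(a)))
    return points


pts = scan_to_points([1.0, 2.0, 3.0])  # beams at -90, 0, +90 degrees
print([(round(x, 3), round(y, 3)) for x, y in pts])  # [(0.0, -1.0), (2.0, 0.0), (0.0, 3.0)]
```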

Brief Summary of Non-Imaging Sensors
• Proprioceptive
  – Needed
• Exteroceptive
  – Ultrasonics: problematic
  – Lasers: costs coming down
  – IR: unreliable but may work for niches
• Occupancy grid is a hack, serving as a virtual sensor

Computer Vision: Navigation
• Works on any image, regardless of source (video, thermal imagery, …)
  – Optic flow, looming
  – Stereo range maps
  – Depth from X algorithms
  – Intriguing hacks
• Note: most computer vision work focuses on a single image, but is now beginning to look at sequences

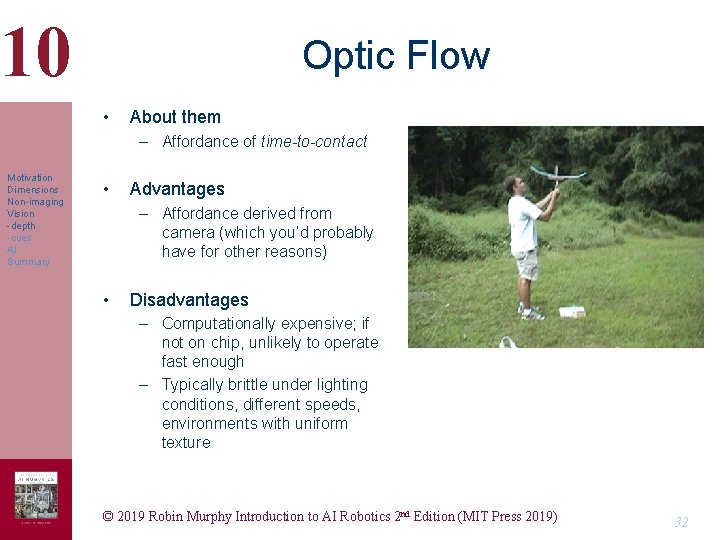

Optic Flow
• About them
  – Affordance of time-to-contact
• Advantages
  – Affordance derived from a camera (which you’d probably have for other reasons)
• Disadvantages
  – Computationally expensive; if not on a chip, unlikely to operate fast enough
  – Typically brittle under varying lighting conditions, different speeds, and environments with uniform texture
Pictured: www.centeye.com
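Time-to-contact can be estimated without knowing an object's true size or distance: for an object approaching at constant speed, its image size s grows so that τ ≈ s / (ds/dt). The sketch below applies that looming relation to a tracked region's width across two frames; it does not compute an optic flow field itself, the finite-difference estimate is only approximate, and the names and numbers are illustrative.

```python
def time_to_contact(size_prev, size_now, dt):
    """Estimate time-to-contact (s) from the growth of an object's image size
    (e.g., region width in pixels) between two frames dt seconds apart.
    Uses tau = s / (ds/dt), which holds for constant closing speed;
    returns None if the image is not growing (object not approaching)."""
    growth_rate = (size_now - size_prev) / dt
    if growth_rate <= 0:
        return None
    return size_now / growth_rate


print(time_to_contact(40.0, 44.0, 0.1))  # about 1.1 s
print(time_to_contact(44.0, 40.0, 0.1))  # None: object is receding
```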

Stereo Range Maps
• About them
  – Use two cameras
• Advantages
  – Passive
  – Good coverage
• Disadvantages
  – Environment may not support interest operators
  – Computationally expensive
  – Sensitive to calibration
  – Sensitive to lighting
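For a rectified stereo pair, depth follows from disparity (how far a matched point shifts between the left and right images): Z = f·B/d, with focal length f in pixels and baseline B between the cameras. The sketch assumes calibration and matching are already done, which is exactly where the slide's disadvantages (interest operators, calibration, lighting) bite. The error helper shows the first-order sensitivity: a fixed disparity error costs more depth accuracy the farther away the point is. Camera parameters and names are hypothetical.

```python
def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth (m) of a point matched in a rectified stereo pair: Z = f * B / d.
    Non-positive disparity means no valid match (or a point at infinity)."""
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m, focal_px, baseline_m, disparity_err_px=1.0):
    """First-order depth uncertainty for a given disparity error:
    dZ ~= Z**2 / (f * B) * dd, so error grows with the square of depth."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


# Assumed camera: 500 px focal length, 12 cm baseline
z = stereo_depth(20.0, 500.0, 0.12)
print(round(z, 3), round(depth_error(z, 500.0, 0.12), 3))  # 3.0 0.15
```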

Depth from X
• About them
  – Usually single camera, single image or short image sequence
  – Useful variants include
    • Depth from motion
    • Depth from focus
    • Depth from texture
• Advantages
  – Nice theory, often computationally optimized
  – Usually have a camera anyway
• Disadvantages
  – Tend to be brittle outside of highly controlled conditions

Intriguing Hacks
• Depth from texture for obstacle avoidance
  – Horswill at MIT for tour guide robot

Outdoor Reactive Navigation Challenges
• Most everything has been developed for indoors
• Pressing outdoor needs
  – Foliage: tall grass == granite rock
  – Terrain anticipation
• Outdoor challenges
  – Lighting and shadows
  – Weather conditions
  – Sparse objects
  – Texture or lack of texture

Sensing for Behavioral Navigation Summary
• Have to decide between nearest protrusion and range maps
• Planar lasers work best but are expensive and big, and need several units or a spinning head
• Ultrasonics were popular because they were cheap and sensed at a distance, but now are hardly ever used due to their problems with specular reflection
• IR sensors are almost never used for reliable navigation, usually just for “panic” stops
• Computer vision is challenging due to lighting conditions
  – Optic flow chips can provide time-to-contact
  – Stereo can provide partial range maps
• Outdoor conditions are significantly harder than indoor

Object Recognition
• For purely reactive systems, no reasoning, just affordances
• Typically recognize specific things by pattern of color, heat signature, fusion of these algorithms, or through a Hough transform

Color Cueing Algorithms
• Thresholding/color segmentation, blob analysis
  – Make a binary image with all pixels in color range
  – Each group of connected pixels == region (or blob)
  – Extract region statistics
    • Size (change in size can be tied to looming, relative position)
    • Centroid (where to aim)
  – Lots of neat program tricks exploiting arrays
    • Scan columns until you find the first region pixel to see where to avoid
• Color histogramming
  – Distinguish an object by the proportions of each color in its signature
• Problems with these algorithms
  – Color constancy is hard
    • Some colors/color spaces are better than others
  – Often have to do some pre-processing to clean up the image(s)
    • Mean/median filtering
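The thresholding and blob steps, the column-scan trick, and color histogramming can be sketched on a small interleaved RGB image (the format named in the objectives). This is an illustrative sketch, not production code: the color range, 4-connectivity, the 4-bins-per-channel histogram, and histogram intersection as the similarity measure are all assumptions, and no pre-filtering or color-space conversion is done.

```python
from collections import deque


def threshold(image, width, height, lo, hi):
    """Binary image: 1 where every channel lies in [lo[c], hi[c]].
    `image` is interleaved RGB, row-major: [r, g, b, r, g, b, ...]."""
    binary = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            i = 3 * (y * width + x)
            if all(lo[c] <= image[i + c] <= hi[c] for c in range(3)):
                binary[y][x] = 1
    return binary


def regions(binary):
    """Group 4-connected foreground pixels into regions (blobs);
    return [(size, (centroid_x, centroid_y)), ...]."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y in range(h):
        for x in range(w):
            if not binary[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue, pixels = deque([(x, y)]), []
            while queue:
                px, py = queue.popleft()
                pixels.append((px, py))
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if 0 <= nx < w and 0 <= ny < h and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            n = len(pixels)
            blobs.append((n, (sum(p[0] for p in pixels) / n, sum(p[1] for p in pixels) / n)))
    return blobs


def first_region_column(binary):
    """Column-scan trick: leftmost column containing a region pixel, or None."""
    for x in range(len(binary[0])):
        if any(row[x] for row in binary):
            return x
    return None


def color_histogram(image, bins=4):
    """Normalized color histogram of an interleaved RGB image, `bins` levels per channel."""
    counts, n = {}, len(image) // 3
    for i in range(0, len(image), 3):
        key = tuple(image[i + c] * bins // 256 for c in range(3))
        counts[key] = counts.get(key, 0) + 1
    return {k: v / n for k, v in counts.items()}


def histogram_intersection(h1, h2):
    """Similarity in [0, 1]; 1 means identical color proportions."""
    return sum(min(v, h2.get(k, 0.0)) for k, v in h1.items())


# 3 x 2 image: red, red, white / black, red, white
RED, WHITE, BLACK = [200, 30, 30], [250, 250, 250], [0, 0, 0]
img = RED + RED + WHITE + BLACK + RED + WHITE
mask = threshold(img, 3, 2, lo=(150, 0, 0), hi=(255, 80, 80))
print(mask)                       # [[1, 1, 0], [0, 1, 0]]
print(regions(mask))              # one 3-pixel blob, centroid about (0.67, 0.33)
print(first_region_column(mask))  # 0
```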

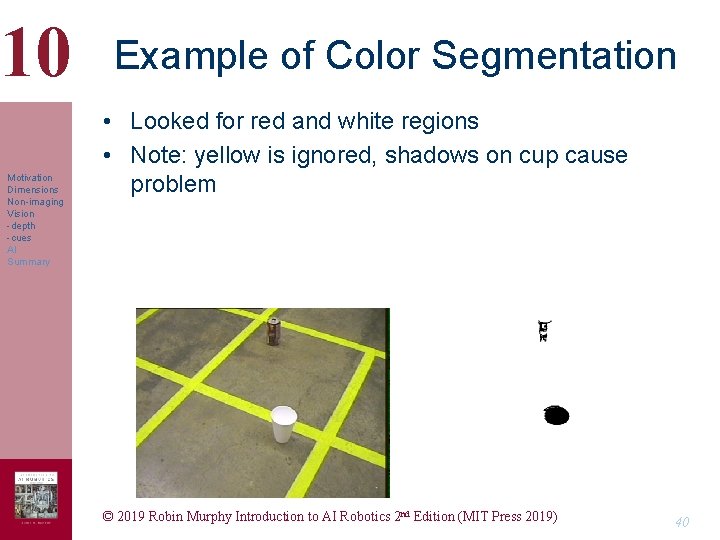

Example of Color Segmentation
• Looked for red and white regions
• Note: yellow is ignored; shadows on the cup cause problems

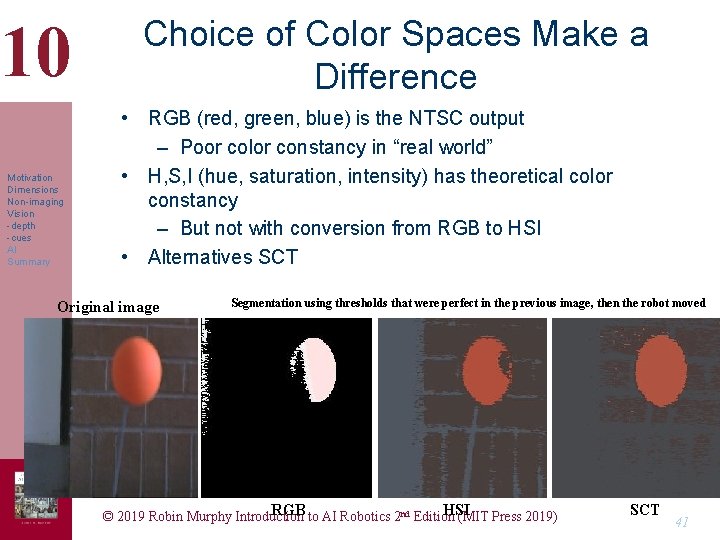

Choice of Color Spaces Makes a Difference
• RGB (red, green, blue) is the NTSC output
  – Poor color constancy in the “real world”
• HSI (hue, saturation, intensity) has theoretical color constancy
  – But not in practice when converted from RGB
• Alternatives
  – SCT (spherical coordinate transform)
Figure: original image and its segmentations in RGB, HSI, and SCT, using thresholds that were perfect in the previous image before the robot moved
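The RGB-to-HSI conversion can be sketched with the common geometric formulas (one of several HSI variants in the literature; the function name is hypothetical). Scaling all three channels by the same factor leaves hue and saturation unchanged, which is the theoretical appeal. The gray-pixel branch hints at the practical problem: hue is undefined for gray pixels and very noise-sensitive for nearly gray ones, so the conversion from camera RGB does not deliver the promised constancy.

```python
import math


def rgb_to_hsi(r, g, b):
    """Convert one RGB pixel (0-255 per channel) to
    (hue in degrees, saturation in [0, 1], intensity in [0, 1])."""
    r, g, b = r / 255.0, g / 255.0, b / 255.0
    total = r + g + b
    intensity = total / 3.0
    if total == 0:
        return 0.0, 0.0, 0.0  # black: hue and saturation undefined
    saturation = 1.0 - 3.0 * min(r, g, b) / total
    num = 0.5 * ((r - g) + (r - b))
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0:
        return 0.0, saturation, intensity  # gray: hue undefined
    theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
    hue = theta if b <= g else 360.0 - theta
    return hue, saturation, intensity


print(rgb_to_hsi(255, 0, 0))  # pure red: hue 0, saturation 1, intensity 1/3
bright, dim = rgb_to_hsi(200, 100, 50), rgb_to_hsi(100, 50, 25)
print(round(bright[0], 6) == round(dim[0], 6))  # True: hue ignores brightness
```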

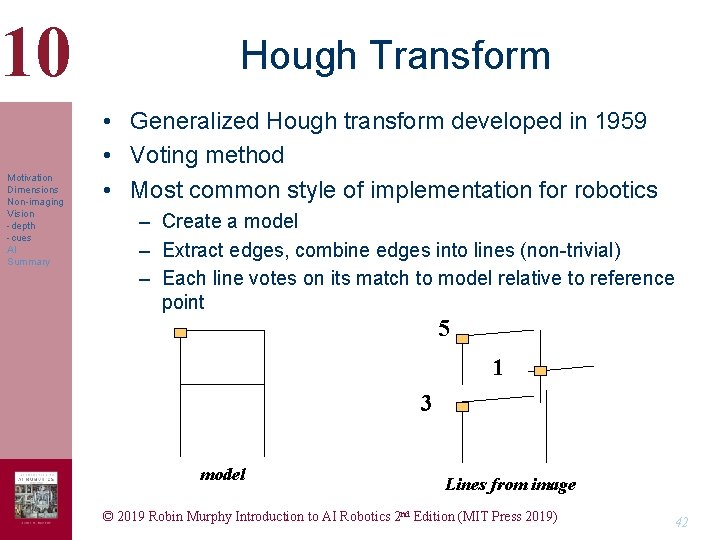

Hough Transform
• Hough transform developed in 1959 (generalized to arbitrary shapes by Ballard in 1981)
• Voting method
• Most common style of implementation for robotics
  – Create a model
  – Extract edges, combine edges into lines (non-trivial)
  – Each line votes on its match to the model relative to a reference point
Figure: model and lines from image
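The voting idea can be sketched in a simplified, translation-only form: the model stores the offset from each feature to a reference point, every image feature votes for the reference locations it implies, and the location with the most votes wins. For brevity the features here are points (for example, line midpoints) rather than full lines, and unlike a full generalized Hough transform there is no indexing by edge orientation and no rotation or scale. All names are illustrative.

```python
from collections import Counter


def build_model(model_points, reference):
    """Store the offset from each model feature to the model's reference point."""
    rx, ry = reference
    return [(rx - x, ry - y) for x, y in model_points]


def hough_vote(image_points, model_offsets):
    """Every image feature votes for each reference location it would imply
    if it were any one of the model features; returns the accumulator."""
    acc = Counter()
    for x, y in image_points:
        for dx, dy in model_offsets:
            acc[(x + dx, y + dy)] += 1
    return acc


def detect(image_points, model_offsets, min_votes):
    """Reference location with the most votes, or None if it has too few votes."""
    acc = hough_vote(image_points, model_offsets)
    if not acc:
        return None
    location, votes = acc.most_common(1)[0]
    return location if votes >= min_votes else None


# Model: corners of a 4 x 4 square, reference point at its center
offsets = build_model([(0, 0), (4, 0), (4, 4), (0, 4)], reference=(2, 2))
# Image: the same square shifted by (10, 5), plus one clutter point
features = [(10, 5), (14, 5), (14, 9), (10, 9), (3, 3)]
print(detect(features, offsets, min_votes=3))  # (12, 7)
```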

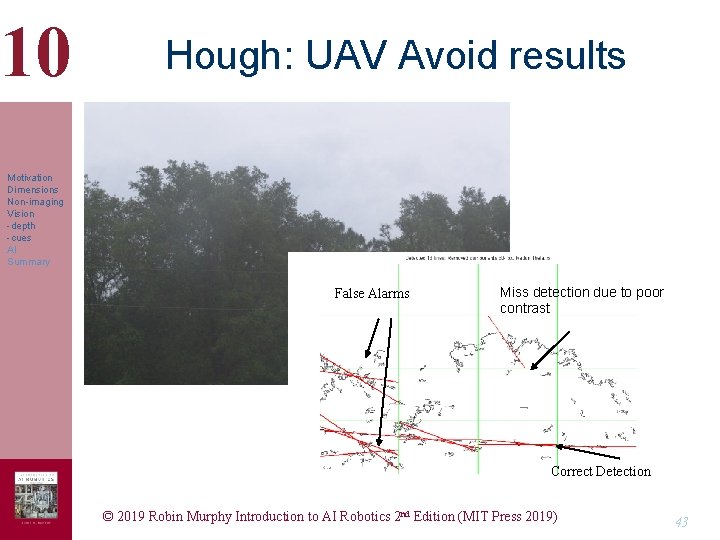

Hough: UAV Avoid Results
Figure panels: false alarms; missed detection due to poor contrast; correct detection

People Detection
• Movement
  – Subtract images/image differencing
  – Lots of work in this area, don’t write your own!
• Heat
  – Digital thermometer vs. FLIR
• Expectations
  – Color at a certain height, heat from that area
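Image differencing itself is simple, which is why the slide's warning applies to the hard parts (camera motion, lighting changes, noise) rather than to the core idea. A minimal sketch only to show that idea, assuming two grayscale frames of equal size from a stationary camera and a hand-picked threshold; the function names are hypothetical, and an established library is the better choice for real people detection.

```python
def difference_mask(frame_a, frame_b, threshold):
    """Mark pixels whose gray value changed by more than `threshold`
    between two equally sized frames (stationary camera assumed)."""
    return [[1 if abs(a - b) > threshold else 0 for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)]


def changed_fraction(mask):
    """Fraction of pixels flagged as changed; a crude 'something moved' trigger."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


before = [[10, 10, 10], [10, 10, 10]]
after = [[10, 90, 10], [10, 95, 12]]  # a bright object entered the middle column
mask = difference_mask(before, after, threshold=20)
print(mask)                    # [[0, 1, 0], [0, 1, 0]]
print(changed_fraction(mask))  # 0.3333333333333333
```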

Fusion


How does this tie into AI?
• Representation
  – Logical sensors
  – Occupancy grids
  – Image functions
  – Polar plots
• Vision

Returning to Questions
• How do you make a robot “see”?
  – Sensor or transducer provides a measurement, which is then transformed into a percept
  – Non-imaging sensors
    • Pose, location, range
  – Imaging sensors
  – Active or passive
  – Transformations are either
    • Direct perception
      – Color, motion, heat segmentation and blob analysis
    • Recognition
      – Object recognition: semantics, models
      – Construction of 3D representation from 2D sensor data

Returning to Questions
• What sensors are essential for a robot?
  – Movement and navigation
    • Proprioception: joint and wheel encoders; accelerometers
    • Exteroception: at least a camera; range is helpful
    • Exproprioception: put the camera or haptics in the right place!
    • GPS
  – Health
    • Power
    • Communications status (heartbeat)
    • General diagnostics!
  – Two-way audio
  – MISSION SENSORS

Returning to Questions
• What’s sensor fusion?
  – Getting a single “percept”
  – Control-theoretic methods use digital signal processing to try to align and synchronize images
  – Behavioral: fusion, fission, fashion
    • Create combinations of redundant, complementary, competing sensors
    • May require evidential reasoning
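The three behavioral combinations from the objectives can be contrasted in a few lines. In this sketch (the function names, the `avoid` behavior, and the sensor readings are all hypothetical), fission runs one copy of a behavior per sensor and combines the resulting actions, fusion merges the readings into one percept before the behavior runs, and fashion lets the context pick which sensor feeds the behavior. Combining by sum and fusing by minimum are arbitrary choices for illustration.

```python
def avoid(distance_m):
    """Hypothetical avoid behavior: repulsion strength in [0, 1], zero beyond 2 m."""
    return max(0.0, 1.0 - distance_m / 2.0)


def fission(readings, behavior, combine):
    """Sensor fission: each sensor drives its own copy of the behavior;
    the resulting actions are combined afterwards."""
    return combine([behavior(r) for r in readings])


def fusion(readings, make_percept, behavior):
    """Sensor fusion: readings are merged into a single percept first."""
    return behavior(make_percept(readings))


def fashion(readings_by_sensor, choose_sensor, context, behavior):
    """Sensor fashion: the context selects which sensor feeds the behavior."""
    return behavior(readings_by_sensor[choose_sensor(context)])


def choose(context):
    """Hypothetical rule: prefer sonar near glass walls, since a planar laser sees through glass."""
    return "sonar" if context == "glass_walls" else "laser"


readings = {"sonar": 1.0, "laser": 1.5}  # hypothetical range readings (m)
print(fission(list(readings.values()), avoid, sum))     # 0.75
print(fusion(list(readings.values()), min, avoid))      # 0.5
print(fashion(readings, choose, "glass_walls", avoid))  # 0.5
print(fashion(readings, choose, "open_room", avoid))    # 0.25
```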

Returning to Questions
• Doesn’t the Microsoft Kinect solve everything?
  – No. It uses an IR sensor at its core, which has been carefully engineered for indoor game rooms, plus specific algorithms for people standing in the open

Summary
• Navigation needs depth perception
  – Lasers are preferred but have many drawbacks
  – Don’t use sonars
• Sometimes create occupancy grids to improve certainty, then treat the occupancy grid as the sensor output (virtual sensor)
• Reactive “object recognition” exploits (or engineers) cues such as color and heat; sometimes can use a simple Hough transform
• You don’t want to “grow your own” vision algorithms, especially for computer vision: there’s a wealth of algorithms out there
