10-605: Map-Reduce Workflows. William Cohen

![Testing Large-vocab Naïve Bayes [For assignment] • For each example id, y, x 1, Testing Large-vocab Naïve Bayes [For assignment] • For each example id, y, x 1,](https://slidetodoc.com/presentation_image_h2/3f074d4632e9d8e1768d806d5492b8d7/image-14.jpg)

![A stream-and-sort analog of the request-and-answer pattern… w Counts aardvark C[w^Y=sports]=2 aardvark ~ctr to A stream-and-sort analog of the request-and-answer pattern… w Counts aardvark C[w^Y=sports]=2 aardvark ~ctr to](https://slidetodoc.com/presentation_image_h2/3f074d4632e9d8e1768d806d5492b8d7/image-27.jpg)

![w Request w Counters found ~ctr to id 1 aardvark C[w^Y=sports]=2 aardvark ~ctr to w Request w Counters found ~ctr to id 1 aardvark C[w^Y=sports]=2 aardvark ~ctr to](https://slidetodoc.com/presentation_image_h2/3f074d4632e9d8e1768d806d5492b8d7/image-43.jpg)

![w Request w Counters found id 1 aardvark C[w^Y=sports]=2 aardvark id 1 agent C[w^Y=sports]=1027, w Request w Counters found id 1 aardvark C[w^Y=sports]=2 aardvark id 1 agent C[w^Y=sports]=1027,](https://slidetodoc.com/presentation_image_h2/3f074d4632e9d8e1768d806d5492b8d7/image-44.jpg)

![Abstract Implementation: [TF]IDF 1/2 data = pairs (docid , term) where term is a Abstract Implementation: [TF]IDF 1/2 data = pairs (docid , term) where term is a](https://slidetodoc.com/presentation_image_h2/3f074d4632e9d8e1768d806d5492b8d7/image-45.jpg)

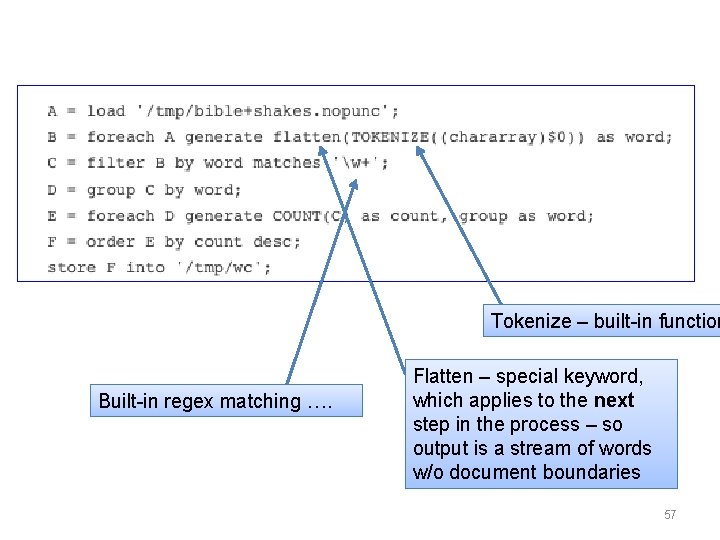

- Slides: 57

10 -605: Map-Reduce Workflows William Cohen 1

PARALLELIZING STREAM AND SORT

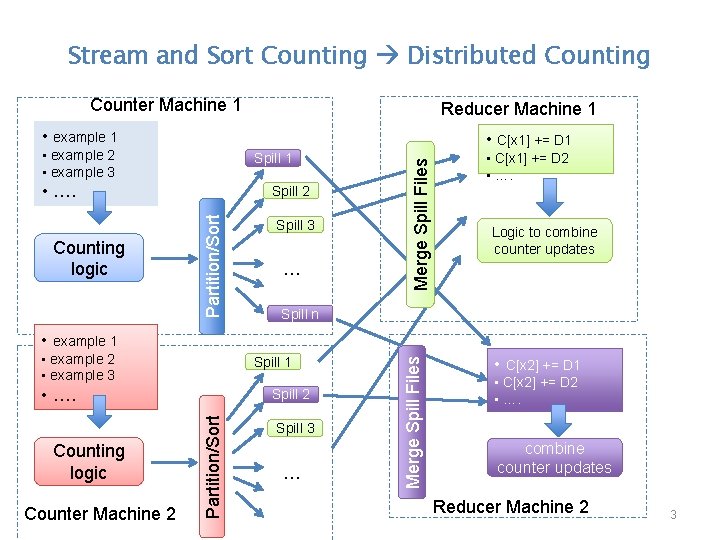

Stream and Sort Counting → Distributed Counting
• Counter Machines 1 and 2: the counting logic streams through the examples (example 1, example 2, example 3, …) and emits counter-update messages such as C[x1] += D1; each machine partitions/sorts these messages and writes them out as spill files (Spill 1, Spill 2, Spill 3, …, Spill n).
• Reducer Machines 1 and 2: merge the spill files for their partition, then run the logic to combine counter updates (C[x1] += D1, C[x1] += D2, …).

Distributed Stream-and-Sort: Map, Shuffle-Sort, Reduce
• Map Processes 1 and 2: run the counting logic over the examples and emit counter-update messages (C[x1] += D1, C[x2] += D2, …).
• Distributed Shuffle-Sort: each map process partitions/sorts its messages into spill files (Spill 1, Spill 2, Spill 3, …, Spill n), and the spill files for each partition are merged.
• Reducers 1 and 2: run the logic to combine counter updates for their partition.

Combiners in Hadoop

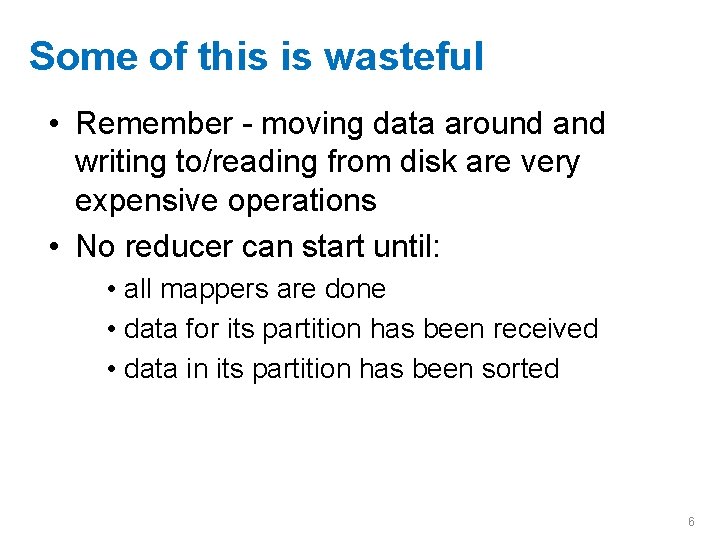

Some of this is wasteful
• Remember: moving data around and writing to/reading from disk are very expensive operations.
• No reducer can start until:
  – all mappers are done,
  – data for its partition has been received, and
  – data in its partition has been sorted.

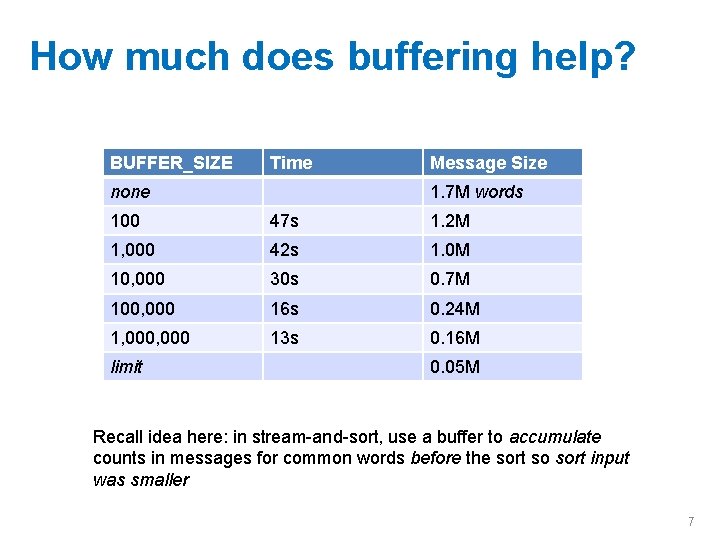

How much does buffering help?

| BUFFER_SIZE | Time | Message Size |
|---|---|---|
| none | | 1.7M words |
| 100 | 47 s | 1.2M |
| 1,000 | 42 s | 1.0M |
| 10,000 | 30 s | 0.7M |
| 100,000 | 16 s | 0.24M |
| 1,000,000 | 13 s | 0.16M |
| limit | | 0.05M |

Recall the idea here: in stream-and-sort, use a buffer to accumulate counts for common words in the messages before the sort, so that the sort input is smaller.
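A minimal sketch of the buffering idea, assuming an in-memory list stands in for the sort input and using the hypothetical names `buffered_messages`, `sum_sorted`, and `buffer_size` (standing in for BUFFER_SIZE). Counts are accumulated in a dictionary and flushed as (word, partial_count) messages whenever the buffer holds `buffer_size` distinct words, so frequent words produce far fewer messages while the summed totals stay the same.

```python
from collections import Counter
from itertools import groupby
from typing import Dict, Iterable, Iterator, Tuple


def buffered_messages(words: Iterable[str], buffer_size: int) -> Iterator[Tuple[str, int]]:
    """Emit (word, partial_count) messages instead of one (word, 1) per token.

    buffer_size (hypothetical, standing in for BUFFER_SIZE) caps how many
    distinct words are held in memory before the buffer is flushed.
    """
    buffer: Counter = Counter()
    for w in words:
        buffer[w] += 1
        if len(buffer) >= buffer_size:
            yield from buffer.items()  # flush partial counts
            buffer.clear()
    yield from buffer.items()  # flush whatever is left at the end


def sum_sorted(messages: Iterable[Tuple[str, int]]) -> Dict[str, int]:
    """Downstream step: sort the messages, then add up each word's partial counts."""
    ordered = sorted(messages)
    return {w: sum(n for _, n in grp) for w, grp in groupby(ordered, key=lambda m: m[0])}
```

With `buffer_size=1` every token becomes its own message (no buffering); a larger buffer shrinks the message stream without changing the final counts.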

Combiners
• Sit between the map and the shuffle
  – Do some of the reducing while you're waiting for other stuff to happen
  – Avoid moving all of that data over the network
  – E.g., for word count: instead of sending (word, 1), send (word, n), where n is a partial count (over the data seen by that mapper)
    • The reducer still just sums the counts
• Only applicable when
  – the order of reduce operations doesn't matter (since the order is undetermined)
  – the effect is cumulative
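The word-count example above can be simulated in a single process. This is a sketch only, not Hadoop's API: the mapper, combiner, and reducer are hypothetical plain functions, and "network traffic" is just the number of pairs handed to the reducer. The combiner sums counts over one mapper's output, and the reducer still just sums.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Pair = Tuple[str, int]


def map_wordcount(split: str) -> List[Pair]:
    """Mapper: emit (word, 1) for every token in one input split."""
    return [(w, 1) for w in split.split()]


def combine(pairs: List[Pair]) -> List[Pair]:
    """Combiner: partial counts over the data seen by a single mapper."""
    partial: Dict[str, int] = defaultdict(int)
    for w, n in pairs:
        partial[w] += n
    return list(partial.items())


def reduce_sum(pairs: List[Pair]) -> Dict[str, int]:
    """Reducer: still just sums whatever counts it receives for each word."""
    totals: Dict[str, int] = defaultdict(int)
    for w, n in pairs:
        totals[w] += n
    return dict(totals)


def run(splits: List[str], use_combiner: bool) -> Tuple[Dict[str, int], int]:
    """Return (word counts, number of pairs shuffled to the reducer)."""
    shuffled: List[Pair] = []
    for split in splits:  # one mapper per split
        out = map_wordcount(split)
        shuffled.extend(combine(out) if use_combiner else out)
    return reduce_sum(shuffled), len(shuffled)
```

For the splits `["a b a a", "b a c"]`, both runs give the same counts, but the combiner cuts the shuffled pairs from 7 to 5.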

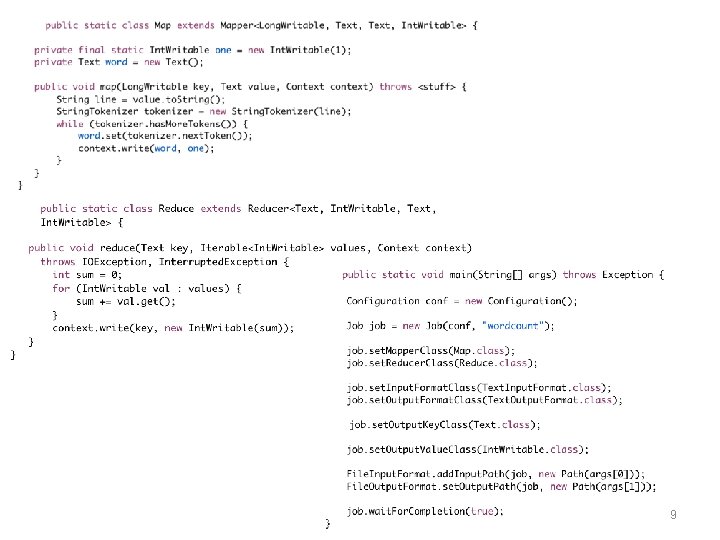

9

`job.setCombinerClass(Reduce.class);`

Déjà vu: Combiner = Reducer
• Often the combiner is the reducer,
  – like for word count,
  – but not always.
  – Remember: you have no control over when/whether the combiner is applied.
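Why "not always": if the reduce step is not cumulative, running it early on partial data changes the answer. A small sketch using the mean as an assumed example (not from the slides) and hypothetical function names. Reusing an averaging reducer as the combiner computes a mean of means, while passing (sum, count) partials gives the right answer no matter whether, or how often, the combiner runs.

```python
from statistics import mean
from typing import List, Tuple


def mean_reducer(values: List[float]) -> float:
    """Averages its inputs. Correct as a reducer, NOT safe to reuse as a combiner."""
    return mean(values)


def sum_count_combiner(values: List[float]) -> Tuple[float, int]:
    """Safe alternative: emit a (sum, count) partial aggregate."""
    return (sum(values), len(values))


def sum_count_reducer(partials: List[Tuple[float, int]]) -> float:
    """Add up the partial sums and counts, then divide once at the end."""
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count
```

With one mapper seeing `[1.0, 2.0, 3.0]` and another seeing `[10.0]`, the true mean is 4.0, but averaging the per-mapper means gives 6.0.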

Algorithms in Map-Reduce (Workflows)

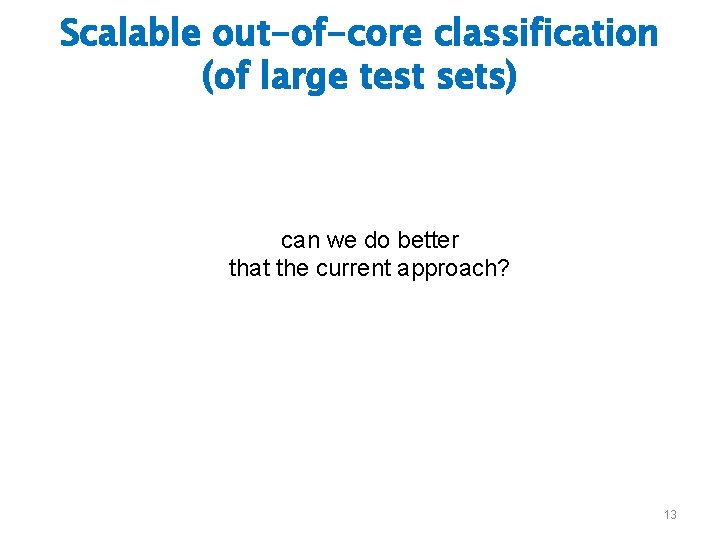

Scalable out-of-core classification (of large test sets): can we do better than the current approach?

![Testing Largevocab Naïve Bayes For assignment For each example id y x 1 Testing Large-vocab Naïve Bayes [For assignment] • For each example id, y, x 1,](https://slidetodoc.com/presentation_image_h2/3f074d4632e9d8e1768d806d5492b8d7/image-14.jpg)

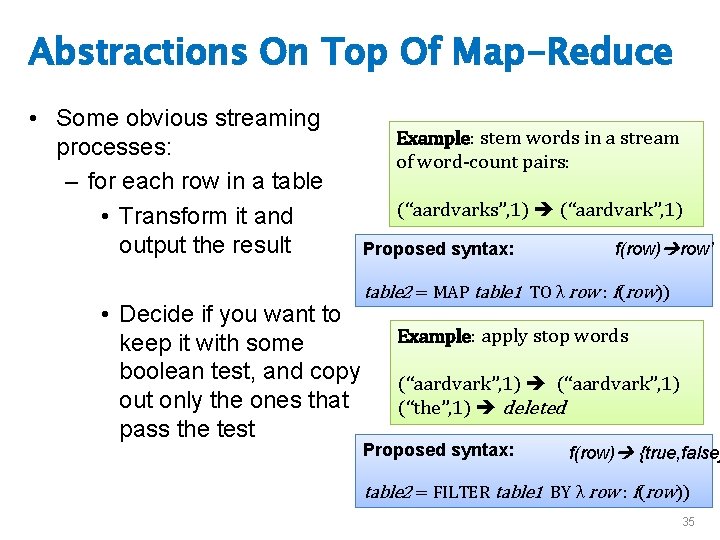

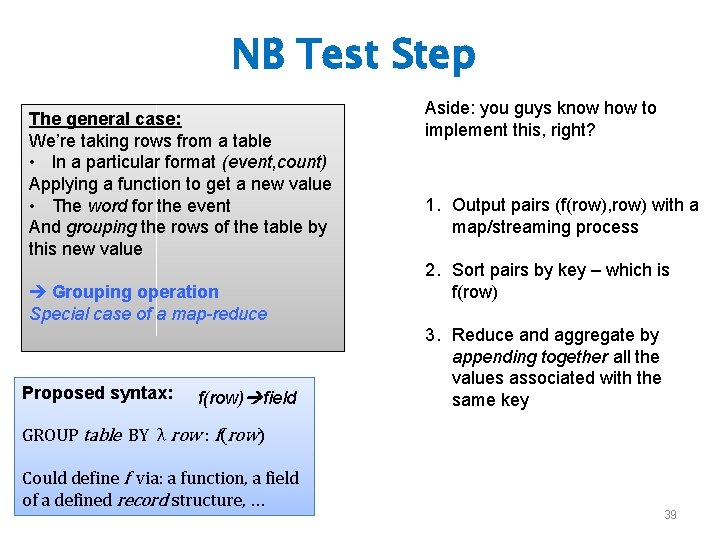

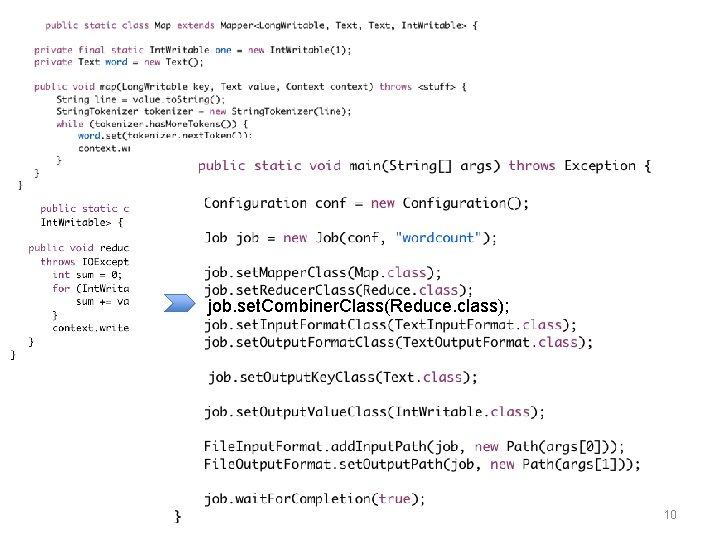

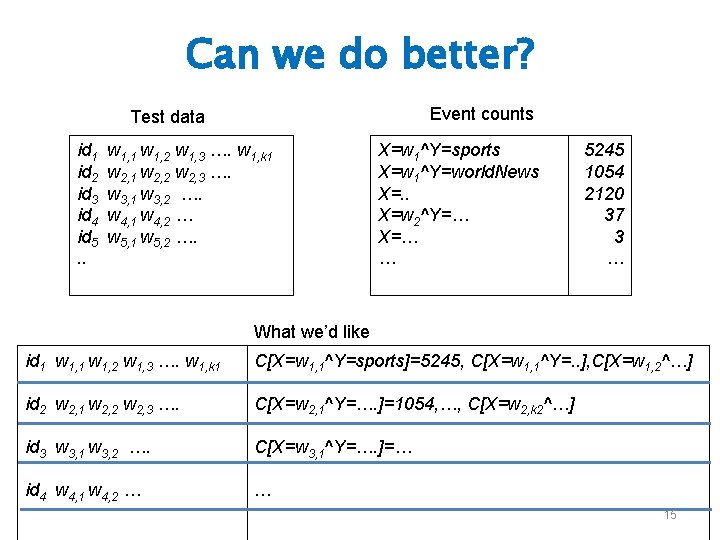

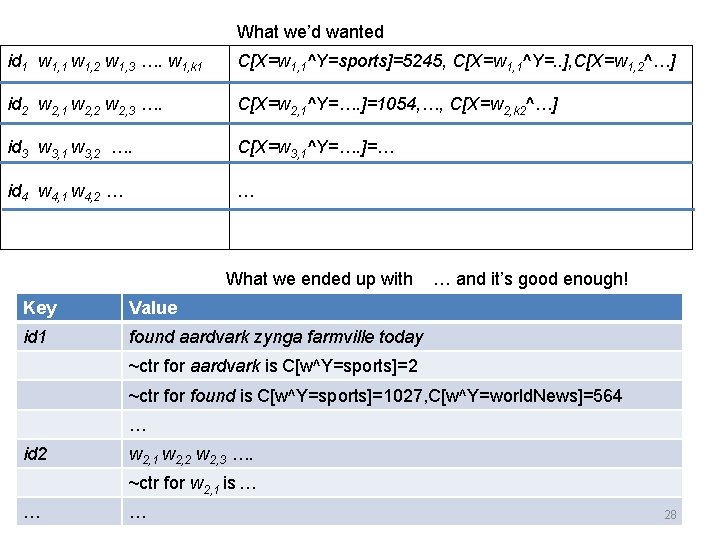

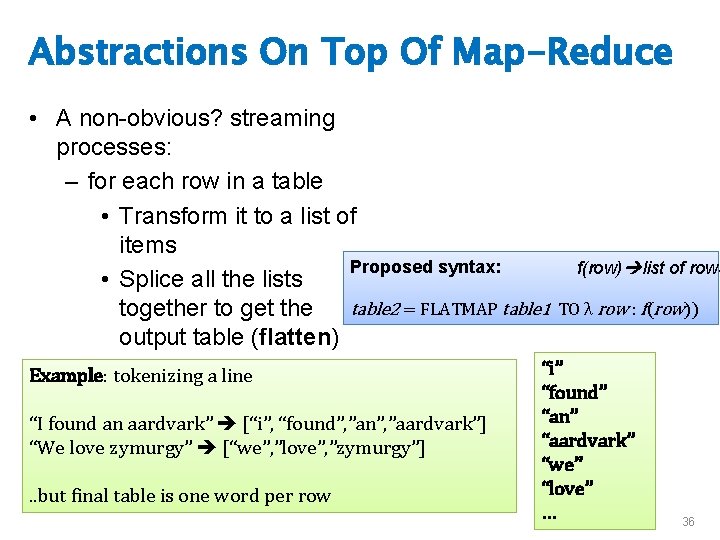

Testing Large-vocab Naïve Bayes [For assignment]
• For each example id, y, x1, …, xd in train:
• Sort the event-counter update "messages"
• Scan and add the sorted messages and output the final counter values (the final collection of event counts is big)
• Initialize a HashSet NEEDED and a hashtable C
• For each example id, y, x1, …, xd in test (test is small):
  – Add x1, …, xd to NEEDED
• For each event, C(event) in the summed counters:
  – If event involves a NEEDED term x, read it into C
• For each example id, y, x1, …, xd in test:
  – For each y' in dom(Y):
    • Compute log Pr(y', x1, …, xd) = …
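An in-memory sketch of the NEEDED-set approach above. The event-key tuples, the `vocab_size` parameter, and the scoring rule are assumptions: the slide elides the formula for log Pr(y', x1, …, xd), so add-one (Laplace) smoothing is used here purely for illustration. The point is the two passes over the small test set, with a single scan of the big counter collection in between that keeps only counters for NEEDED terms.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Example = Tuple[str, str, List[str]]  # (id, y, [x1, ..., xd])


def count_events(train: Iterable[Example]) -> Counter:
    """Training pass: the summed event counters (on disk this collection is big)."""
    c: Counter = Counter()
    for _id, y, xs in train:
        c[("Y", y)] += 1
        for x in xs:
            c[("X", x, y)] += 1
            c[("ANY", y)] += 1  # number of word tokens seen with label y
    return c


def classify(test: List[Example], summed: Counter, labels: List[str], vocab_size: int) -> Dict[str, str]:
    # Pass 1 over the (small) test set: collect the NEEDED terms.
    needed = {x for _id, _y, xs in test for x in xs}
    # One scan over the summed counters, keeping label counts and NEEDED terms only.
    C = {e: n for e, n in summed.items() if e[0] != "X" or e[1] in needed}
    n_docs = sum(n for e, n in C.items() if e[0] == "Y")
    preds = {}
    for doc_id, _y, xs in test:  # Pass 2 over the test set
        def log_pr(y: str) -> float:
            # Assumed scoring rule: add-one (Laplace) smoothing.
            score = math.log((C.get(("Y", y), 0) + 1) / (n_docs + len(labels)))
            for x in xs:
                score += math.log((C.get(("X", x, y), 0) + 1) / (C.get(("ANY", y), 0) + vocab_size))
            return score
        preds[doc_id] = max(labels, key=log_pr)
    return preds
```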

Can we do better?
• Event counts: X=w1^Y=sports 5245; X=w1^Y=worldNews 1054; X=.. 2120; X=w2^Y=… 37; X=… 3; …
• Test data: id1 w1,1 w1,2 w1,3 … w1,k1; id2 w2,1 w2,2 w2,3 …; id3 w3,1 w3,2 …; id4 w4,1 w4,2 …; id5 w5,1 w5,2 …; …
• What we'd like: each test record followed by the counters for its words
  – id1 w1,1 w1,2 w1,3 … w1,k1: C[X=w1,1^Y=sports]=5245, C[X=w1,1^Y=..], C[X=w1,2^…]
  – id2 w2,1 w2,2 w2,3 …: C[X=w2,1^Y=…]=1054, …, C[X=w2,k2^…]
  – id3 w3,1 w3,2 …: C[X=w3,1^Y=…]=…
  – id4 w4,1 w4,2 …: …

Can we do better?
Step 1: group counters by word w
How:
• Stream and sort:
  – for each C[X=w^Y=y]=n, print "w C[Y=y]=n"
  – sort and build a list of values associated with each key w
• Like an inverted index

Event counts (X=w1^Y=sports 5245, X=w1^Y=worldNews 1054, X=.. 2120, X=w2^Y=… 37, X=… 3, …) become:

| w | Counts associated with w |
|---|---|
| aardvark | C[w^Y=sports]=2 |
| agent | C[w^Y=sports]=1027, C[w^Y=worldNews]=564 |
| … | … |
| zynga | C[w^Y=sports]=21, C[w^Y=worldNews]=4464 |
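Step 1 as a stream-and-sort sketch, with Python's `sorted` standing in for unix `sort`. The tab separator and the names `counts_by_word` / `collect_records` are assumptions (loosely echoing the CountsByWord and CollectRecords programs in the implementation summary, but not their actual code).

```python
from itertools import groupby
from typing import Dict, Iterable, List, Tuple


def counts_by_word(event_counts: Iterable[Tuple[str, str, int]]) -> List[str]:
    """Map step: for each C[X=w^Y=y]=n, print 'w<TAB>C[Y=y]=n'."""
    return [f"{w}\tC[Y={y}]={n}" for w, y, n in event_counts]


def collect_records(lines: Iterable[str]) -> Dict[str, List[str]]:
    """Sort, then build the list of values associated with each key w."""
    pairs = (line.split("\t", 1) for line in sorted(lines))
    return {w: [v for _, v in grp] for w, grp in groupby(pairs, key=lambda kv: kv[0])}
```

Because the tab sorts before any letter, every line for a word is contiguous after the sort, even when one word is a prefix of another.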

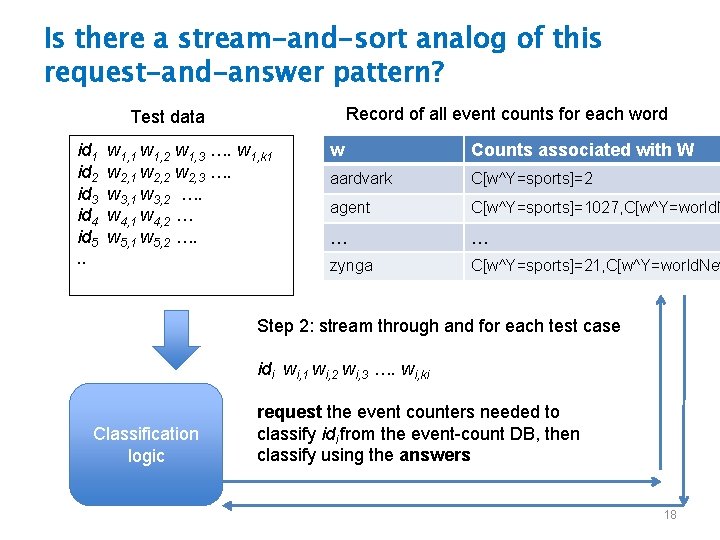

If these records were in a key-value DB, we would know what to do…
• Record of all event counts for each word: aardvark C[w^Y=sports]=2; agent C[w^Y=sports]=1027, C[w^Y=worldNews]=…; …; zynga C[w^Y=sports]=21, C[w^Y=worldNews]=…
• Test data: id1 w1,1 w1,2 w1,3 … w1,k1; id2 w2,1 w2,2 w2,3 …; id3 w3,1 w3,2 …; id4 w4,1 w4,2 …; id5 w5,1 w5,2 …; …
• Step 2: stream through, and for each test case idi wi,1 wi,2 wi,3 … wi,ki, the classification logic requests the event counters needed to classify idi from the event-count DB, then classifies using the answers.

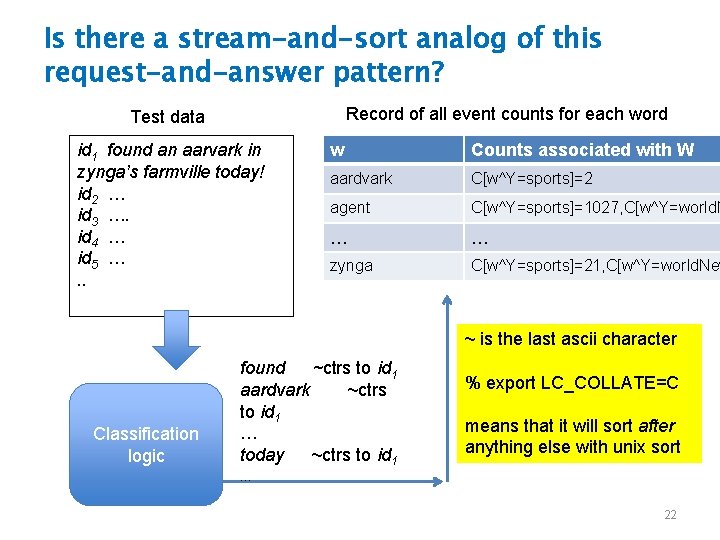

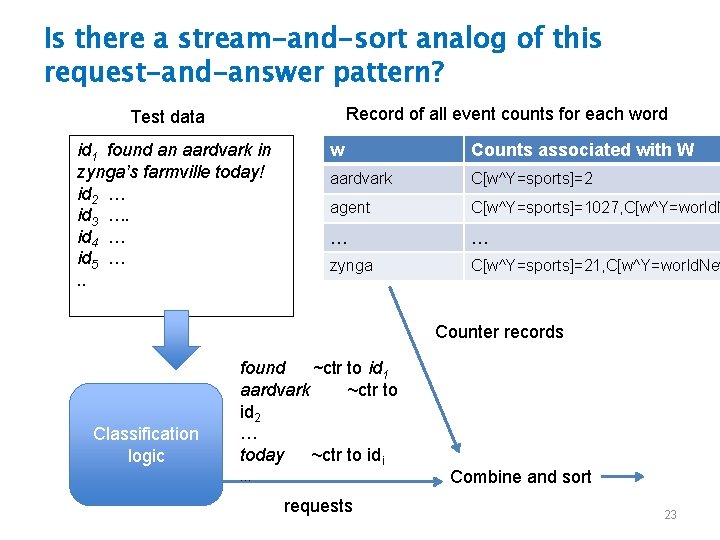

Is there a stream-and-sort analog of this request-and-answer pattern?

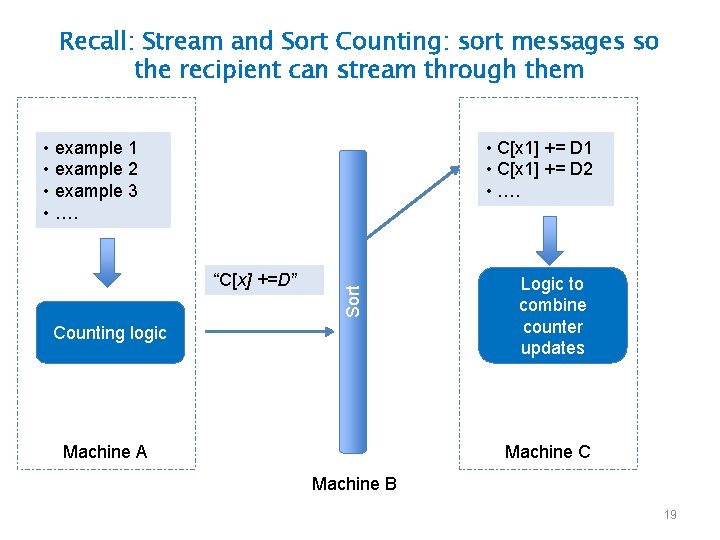

Recall: Stream and Sort Counting: sort messages so the recipient can stream through them
• Machine A: the counting logic streams through the examples (example 1, example 2, example 3, …) and emits messages "C[x] += D"
• Machine B: sort the messages (C[x1] += D1, C[x1] += D2, …)
• Machine C: logic to combine counter updates

Is there a stream-and-sort analog of this request-and-answer pattern?
The classification logic streams through the test data (id1 w1,1 w1,2 w1,3 … w1,k1; id2 w2,1 …; …) and emits one request per word, addressed to that word's record of event counts:
  – w1,1 counters to id1
  – w1,2 counters to id1
  – …
  – wi,j counters to idi
  – …

Is there a stream-and-sort analog of this request-and-answer pattern?
Example test case: id1 found an aardvark in zynga's farmville today!
Its requests are:
  – found ctrs to id1
  – aardvark ctrs to id1
  – …
  – today ctrs to id1
  – …

Is there a stream-and-sort analog of this request-and-answer pattern?
Test data: id1 found an aardvark in zynga's farmville today!; id2 …; id3 …; …
Write each request with a leading "~":
  – found ~ctrs to id1
  – aardvark ~ctrs to id1
  – …
  – today ~ctrs to id1
  – …
~ is the last printable ASCII character, so with `export LC_COLLATE=C` a request will sort after anything else for the same word (such as its counter record C[…]) with unix sort.
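A quick check of the "~ sorts last" trick. Python compares strings by code point, which for ASCII input matches the byte order that `sort` uses under `LC_COLLATE=C`, so `sorted` can stand in for the unix sort here. The names `make_requests` and `combine_and_sort` and the crude letters-only tokenizer are hypothetical.

```python
import re
from typing import List


def make_requests(doc_id: str, text: str) -> List[str]:
    """One request 'w ~ctr to doc_id' per token (crude letters-only tokenizer, assumed)."""
    return [f"{w} ~ctr to {doc_id}" for w in re.findall(r"[a-z]+", text.lower())]


def combine_and_sort(records: List[str], requests: List[str]) -> List[str]:
    # 'C' (0x43) < '~' (0x7E), so "w C[...]" lands just before "w ~ctr ...".
    return sorted(records + requests)
```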

Is there a stream-and-sort analog of this request-and-answer pattern?
• Counter records (event counts for each word): aardvark C[w^Y=sports]=2; agent C[w^Y=sports]=1027, C[w^Y=worldNews]=…; …; zynga C[w^Y=sports]=21, C[w^Y=worldNews]=…
• Requests, emitted by the classification logic from the test data (id1 found an aardvark in zynga's farmville today!; id2 …; …): found ~ctr to id1; aardvark ~ctr to id2; …; today ~ctr to idi; …
• Combine and sort the counter records with the requests.

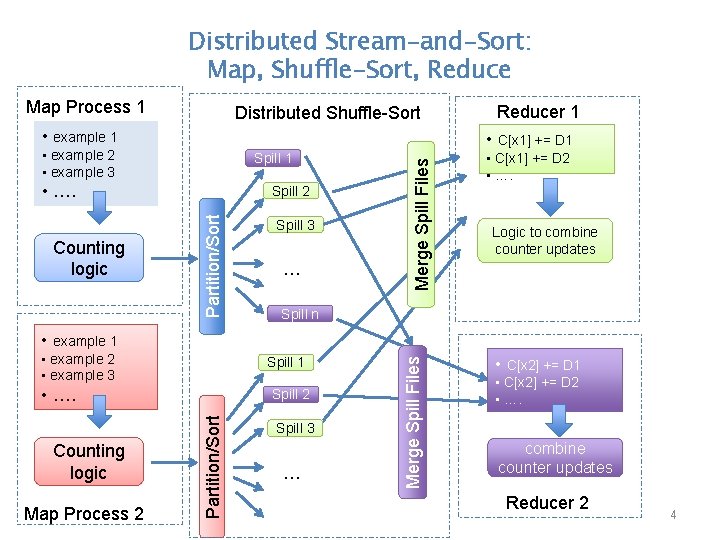

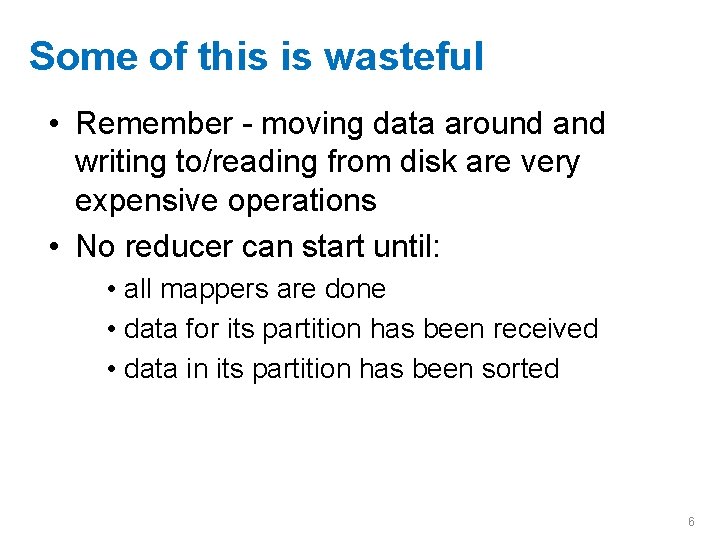

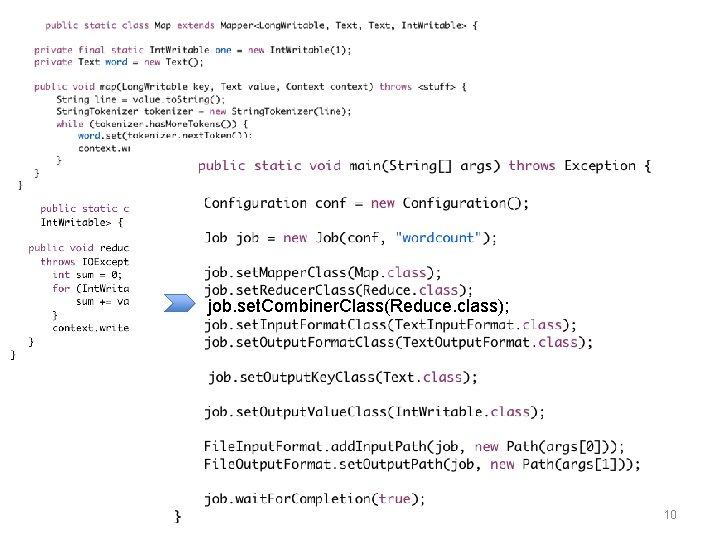

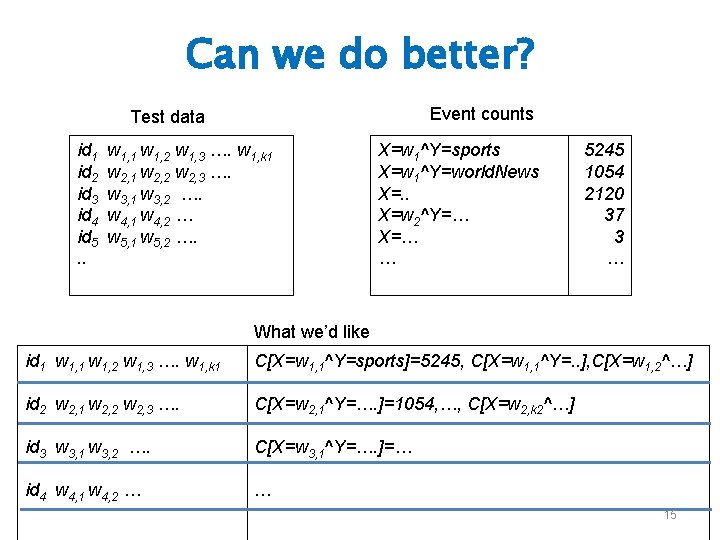

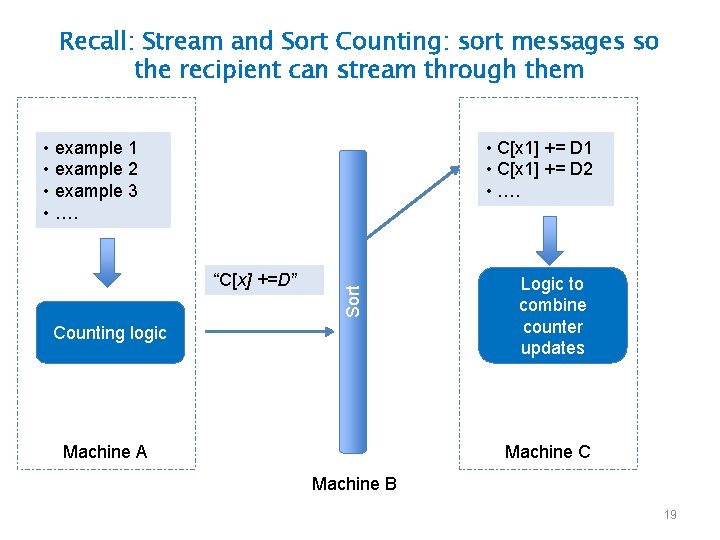

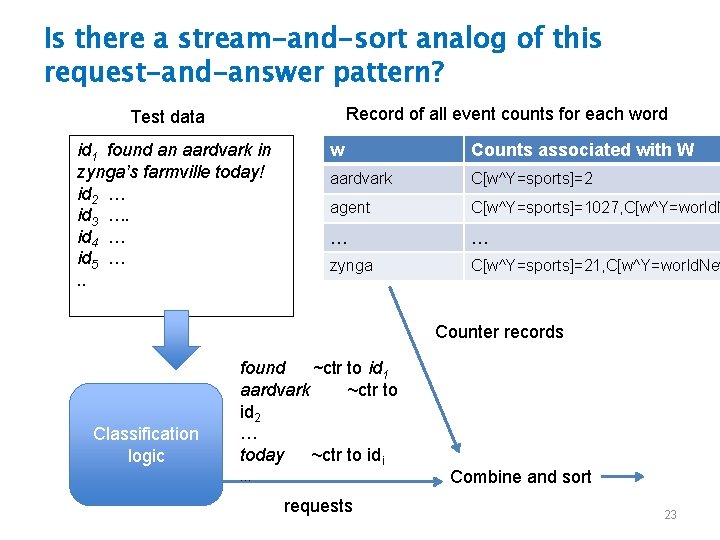

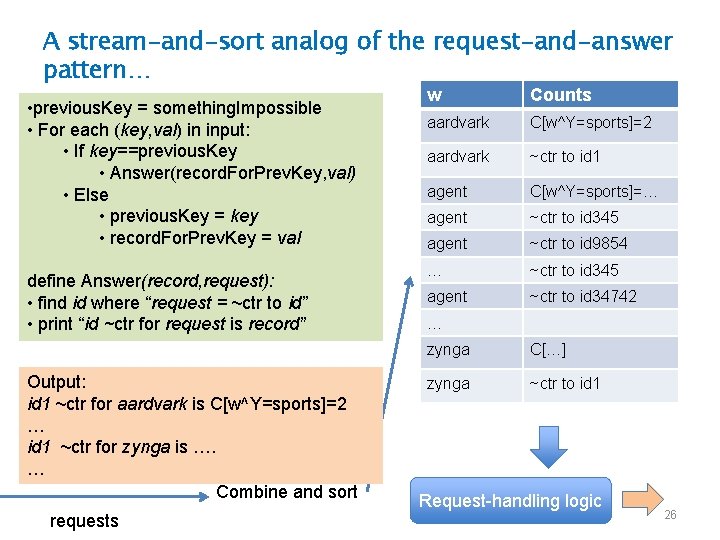

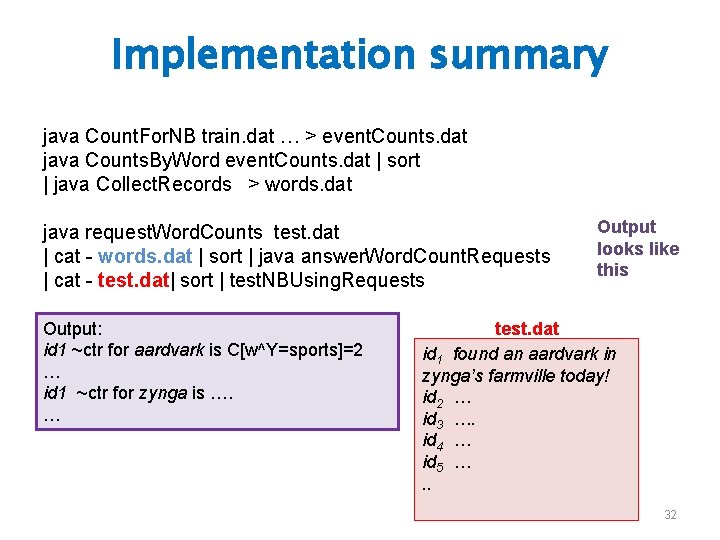

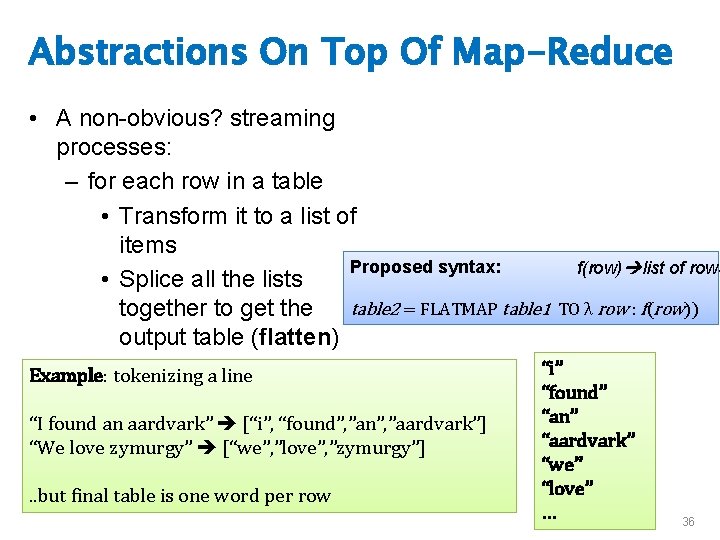

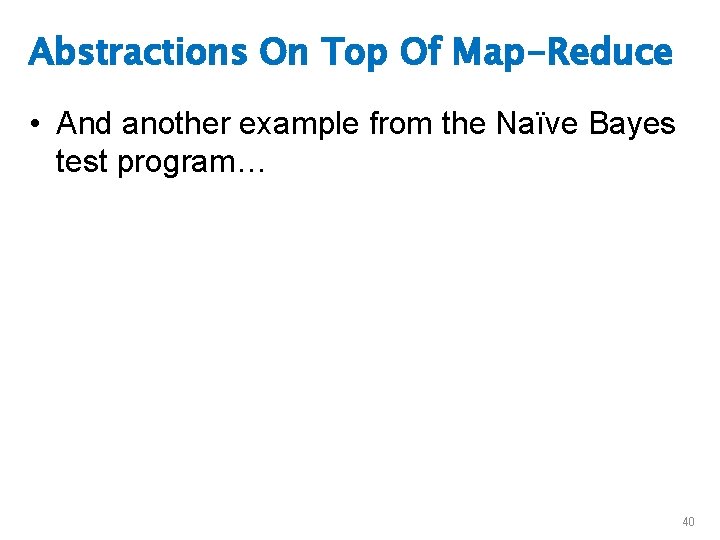

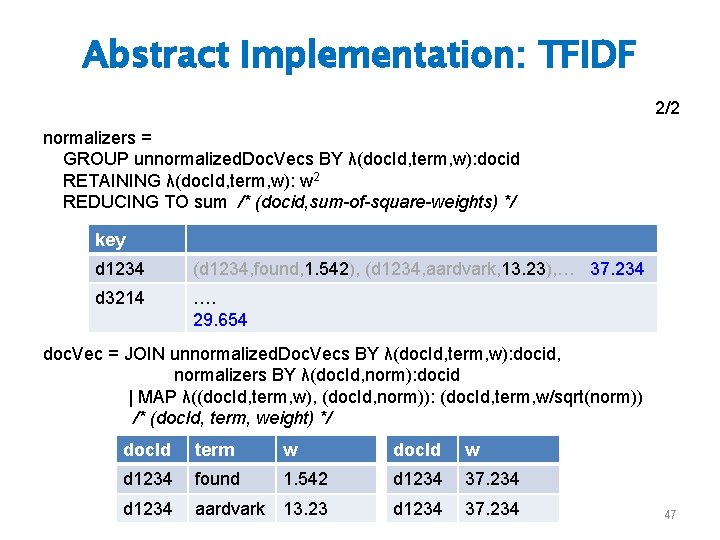

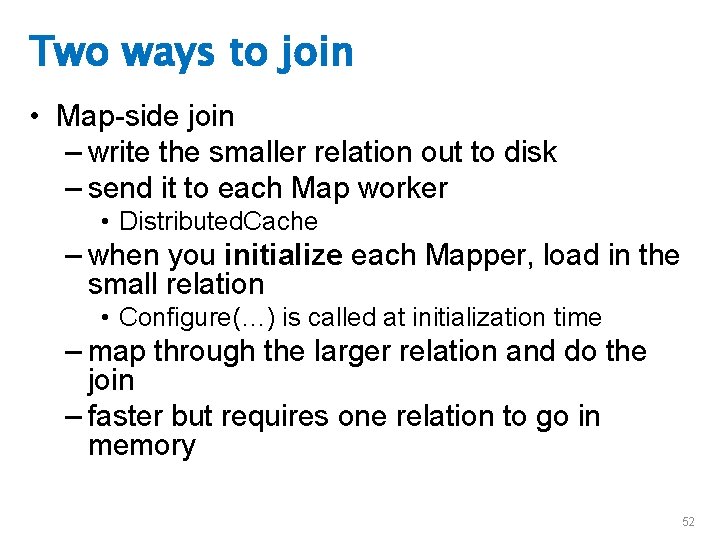

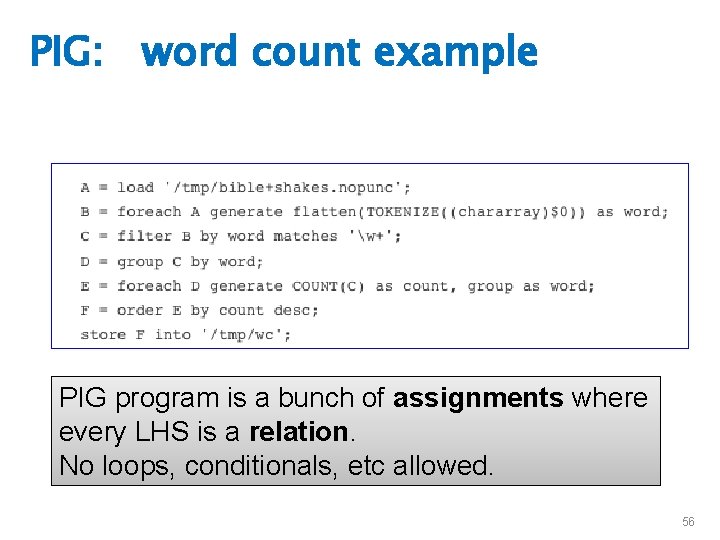

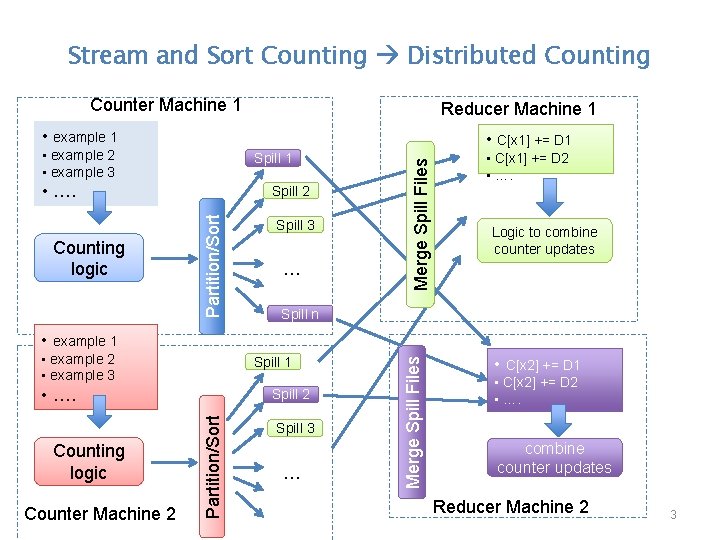

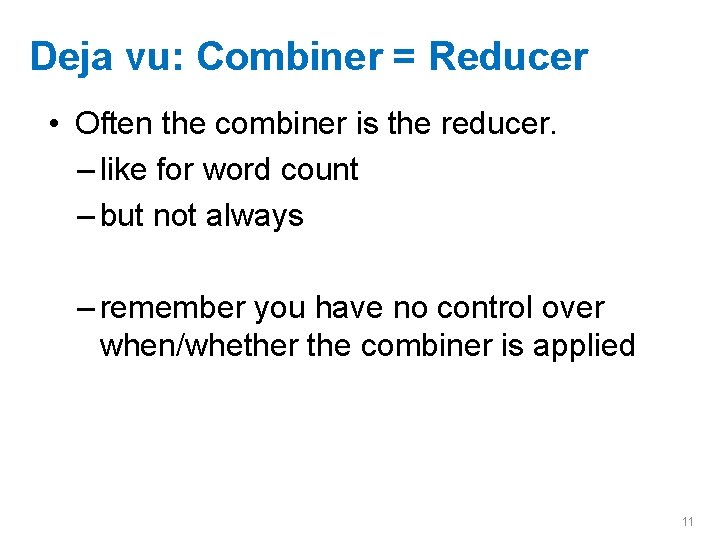

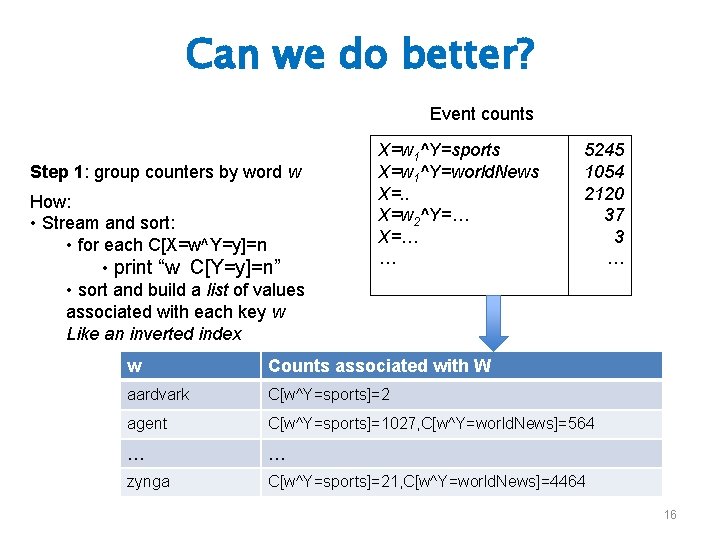

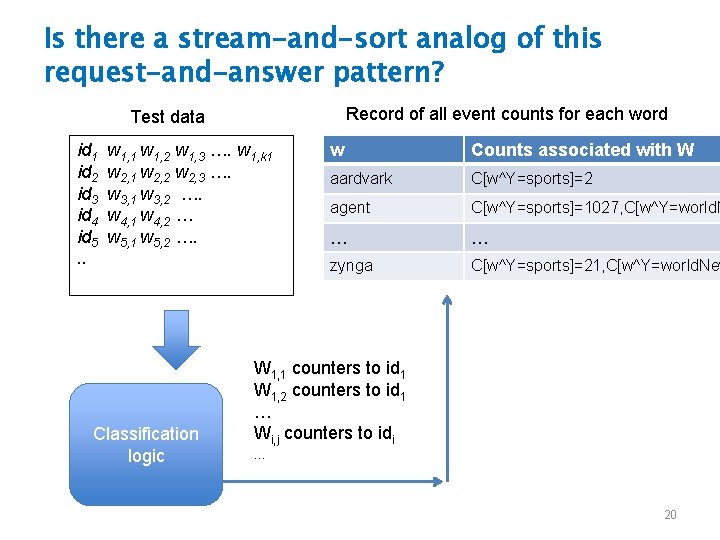

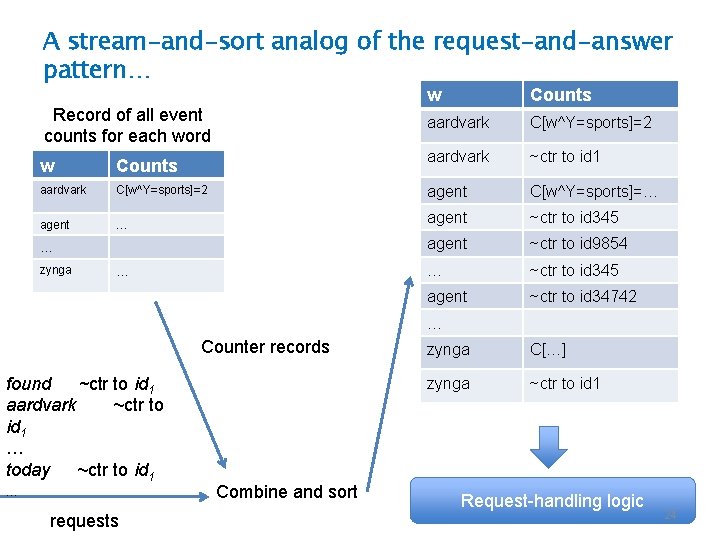

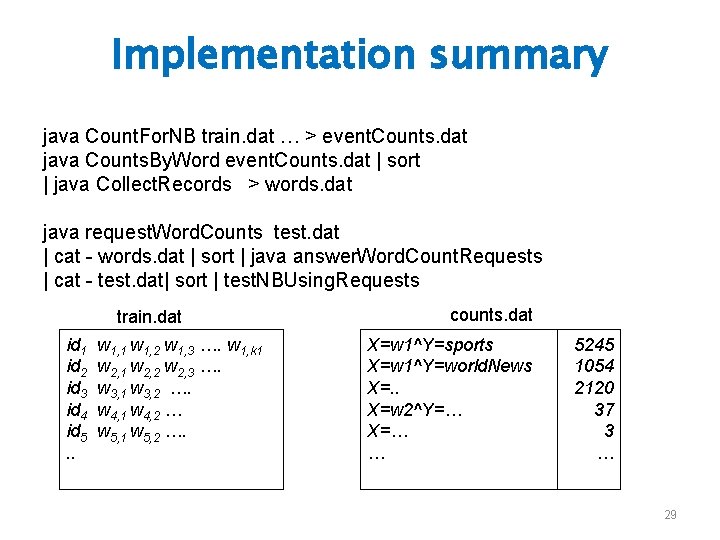

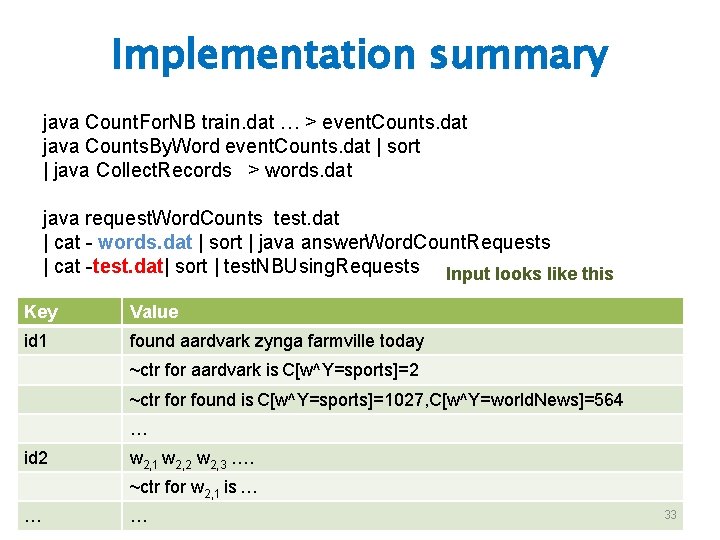

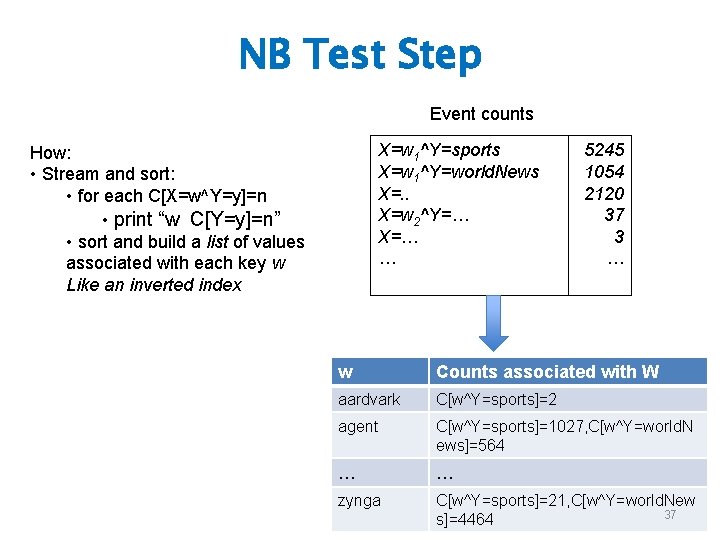

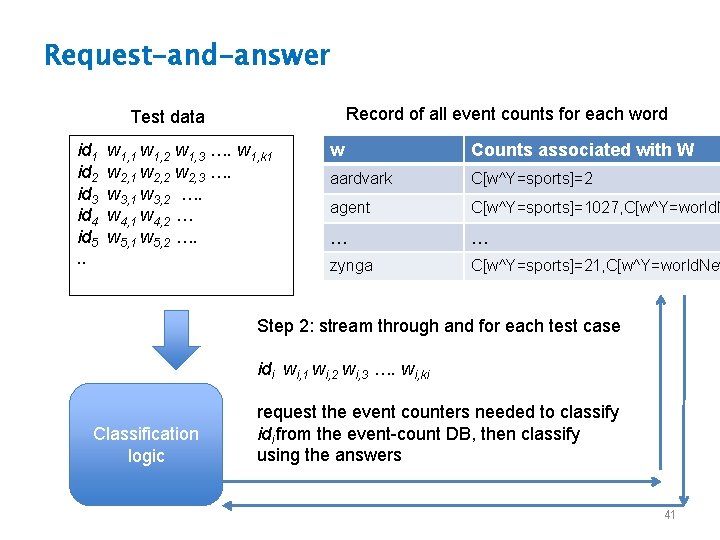

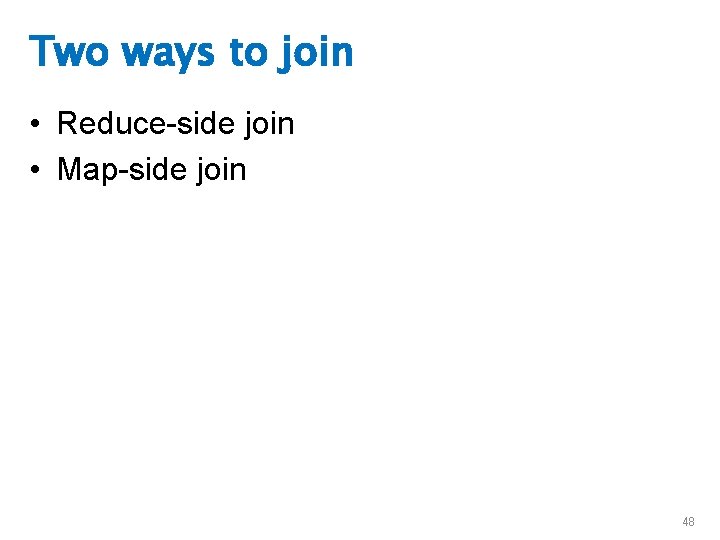

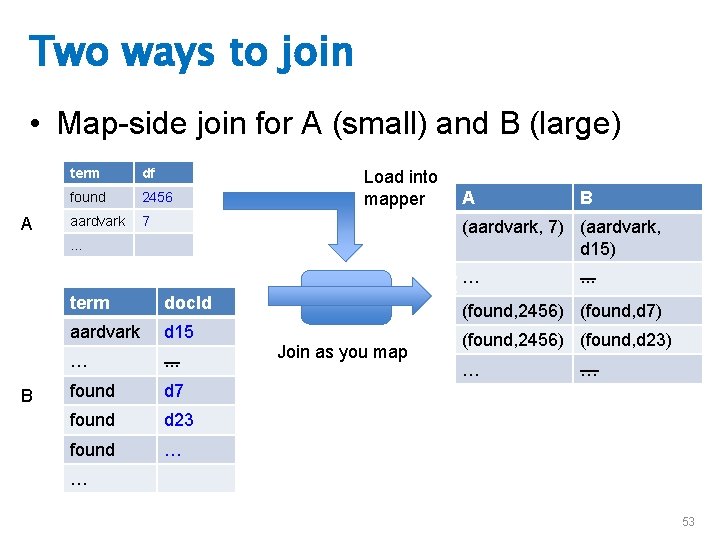

A stream-and-sort analog of the request-and-answer pattern…
After combining and sorting the counter records with the requests (found ~ctr to id1, aardvark ~ctr to id1, …, today ~ctr to id1, …), each word's counter record is immediately followed by the requests for it. This stream is the input to the request-handling logic:

| w | Counter record or request |
|---|---|
| aardvark | C[w^Y=sports]=2 |
| aardvark | ~ctr to id1 |
| agent | C[w^Y=sports]=… |
| agent | ~ctr to id345 |
| agent | ~ctr to id9854 |
| … | ~ctr to id345 |
| agent | ~ctr to id34742 |
| … | … |
| zynga | C[…] |
| zynga | ~ctr to id1 |

A stream-and-sort analog of the request-and-answer pattern…
Request-handling logic, run over the combined and sorted stream of counter records and requests:
• previousKey = somethingImpossible
• For each (key, val) in input:
  – If key == previousKey:
    • Answer(key, recordForPrevKey, val)
  – Else:
    • previousKey = key
    • recordForPrevKey = val

define Answer(key, record, request):
• find id where request = "~ctr to id"
• print "id ~ctr for key is record"

This relies on each word's counter record sorting before the requests for that word.

Output of the request-handling logic:
  – id1 ~ctr for aardvark is C[w^Y=sports]=2
  – …
  – id1 ~ctr for zynga is …
  – …
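The request-handling loop as Python. This is a sketch, not the course's code: it is a slight variant of the slide's loop that also tolerates a word that has requests but no counter record (a word never seen in training), for which it prints a hypothetical `MISSING` marker instead of mistaking the first request for the record.

```python
from typing import Iterable, Iterator, Optional, Tuple

REQUEST = "~ctr to "        # prefix of a request value, e.g. "~ctr to id1"
MISSING = "(no counters)"   # hypothetical marker for words never seen in training


def answer_requests(sorted_pairs: Iterable[Tuple[str, str]]) -> Iterator[str]:
    """Stream through (key, val) pairs in which each word's counter record
    sorts before its '~ctr to id' requests, and answer every request."""
    previous_key: Optional[str] = None   # plays the role of somethingImpossible
    record_for_prev_key: Optional[str] = None
    for key, val in sorted_pairs:
        if key != previous_key:
            previous_key = key
            record_for_prev_key = None
        if val.startswith(REQUEST):
            doc_id = val[len(REQUEST):]  # find id where val == "~ctr to id"
            record = record_for_prev_key if record_for_prev_key is not None else MISSING
            yield f"{doc_id} ~ctr for {key} is {record}"
        else:
            record_for_prev_key = val    # the counter record for this word
```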


A stream-and-sort analog of the request-and-answer pattern…
Next: combine and sort the output of the request-handling logic (id1 ~ctr for aardvark is C[w^Y=sports]=2, …, id1 ~ctr for zynga is …, …) with the test data (id1 found an aardvark in zynga's farmville today!; id2 …; id3 …; id4 …; id5 …; …). What comes out? ?

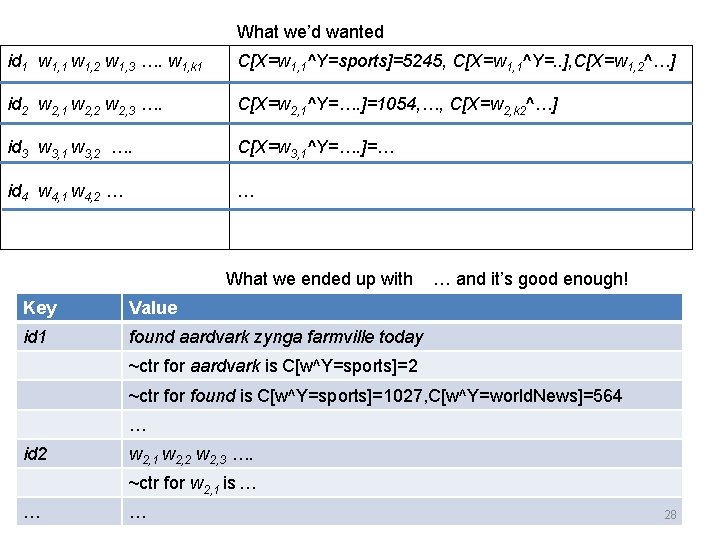

What we'd wanted:
• id1 w1,1 w1,2 w1,3 … w1,k1: C[X=w1,1^Y=sports]=5245, C[X=w1,1^Y=..], C[X=w1,2^…]
• id2 w2,1 w2,2 w2,3 …: C[X=w2,1^Y=…]=1054, …, C[X=w2,k2^…]
• id3 w3,1 w3,2 …: C[X=w3,1^Y=…]=…
• id4 w4,1 w4,2 …: …

What we ended up with (and it's good enough!):

| Key | Value |
|---|---|
| id1 | found aardvark zynga farmville today … |
| | ~ctr for aardvark is C[w^Y=sports]=2 |
| | ~ctr for found is C[w^Y=sports]=1027, C[w^Y=worldNews]=564 |
| | … |
| id2 | w2,1 w2,2 w2,3 … |
| | ~ctr for w2,1 is … |
| | … |
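The last step (`cat - test.dat | sort`, then group by id) as a sketch, assuming each test line has the form "id text" and using the hypothetical name `join_docs_with_answers`. The same "~ sorts last" trick puts each document's text ahead of its answered counters.

```python
from itertools import groupby
from typing import Dict, List, Tuple


def join_docs_with_answers(test_lines: List[str], answers: List[str]) -> Dict[str, Tuple[str, List[str]]]:
    """Concatenate test docs with answers, sort, and group by id.

    Within each id, the document text sorts first because '~' is the last
    printable ASCII character; the answered counters follow it.
    """
    merged = sorted(test_lines + answers)
    result = {}
    for doc_id, grp in groupby(merged, key=lambda line: line.split(" ", 1)[0]):
        rest = [line.split(" ", 1)[1] for line in grp]
        result[doc_id] = (rest[0], rest[1:])  # (document text, answered counters)
    return result
```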

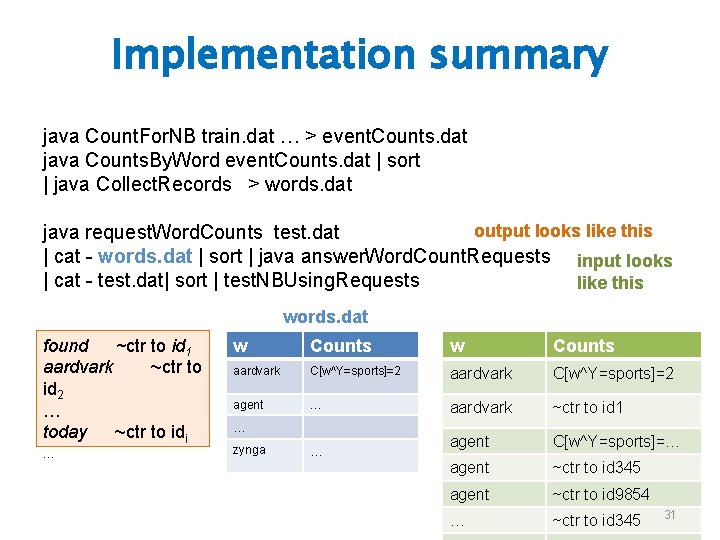

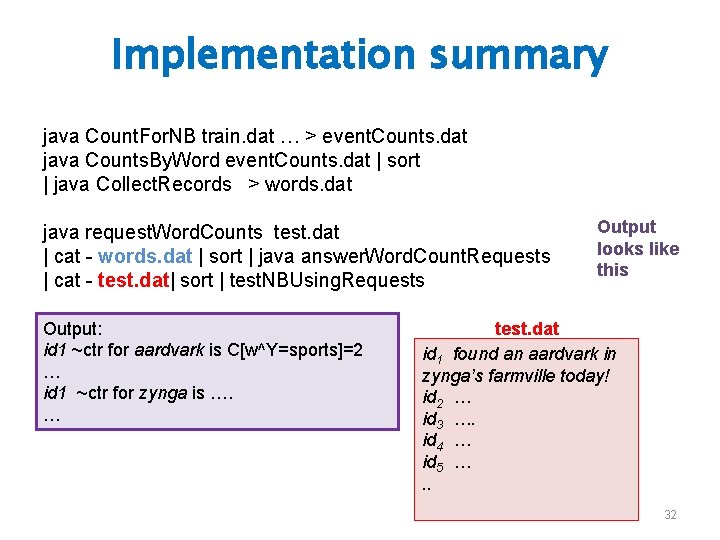

Implementation summary
• `java CountForNB train.dat … > eventCounts.dat`
• `java CountsByWord eventCounts.dat | sort | java CollectRecords > words.dat`
• `java requestWordCounts test.dat | cat - words.dat | sort | java answerWordCountRequests | cat - test.dat | sort | testNBUsingRequests`

train.dat looks like: id1 w1,1 w1,2 w1,3 … w1,k1; id2 w2,1 w2,2 w2,3 …; id3 w3,1 w3,2 …; id4 w4,1 w4,2 …; id5 w5,1 w5,2 …; …
eventCounts.dat looks like: X=w1^Y=sports 5245; X=w1^Y=worldNews 1054; X=.. 2120; X=w2^Y=… 37; X=… 3; …

words.dat looks like: aardvark C[w^Y=sports]=2; agent C[w^Y=sports]=1027, C[w^Y=worldNews]=564; …; zynga C[w^Y=sports]=21, C[w^Y=worldNews]=4464

In the third pipeline:
• the output of requestWordCounts looks like: found ~ctr to id1; aardvark ~ctr to id2; …; today ~ctr to idi; …
• after `cat - words.dat | sort`, the input to answerWordCountRequests looks like: aardvark C[w^Y=sports]=2; aardvark ~ctr to id1; agent C[w^Y=sports]=…; agent ~ctr to id345; agent ~ctr to id9854; … ~ctr to id345; …; zynga …

• the output of answerWordCountRequests looks like: id1 ~ctr for aardvark is C[w^Y=sports]=2; …; id1 ~ctr for zynga is …; …
• test.dat looks like: id1 found an aardvark in zynga's farmville today!; id2 …; id3 …; id4 …; id5 …; …

• after `cat - test.dat | sort`, the input to testNBUsingRequests looks like:

| Key | Value |
|---|---|
| id1 | found aardvark zynga farmville today … |
| | ~ctr for aardvark is C[w^Y=sports]=2 |
| | ~ctr for found is C[w^Y=sports]=1027, C[w^Y=worldNews]=564 |
| | … |
| id2 | w2,1 w2,2 w2,3 … |
| | ~ctr for w2,1 is … |
| | … |

ABSTRACTIONS FOR MAP-REDUCE

Abstractions On Top Of Map-Reduce
• Some obvious streaming processes: for each row in a table
  – Transform it and output the result
    • Example: stem words in a stream of word-count pairs: ("aardvarks", 1) → ("aardvark", 1)
    • Proposed syntax: table2 = MAP table1 TO λ row: f(row), where f(row) → row'
  – Decide if you want to keep it with some boolean test, and copy out only the ones that pass the test
    • Example: apply stop words: ("aardvark", 1) is kept, ("the", 1) is deleted
    • Proposed syntax: table2 = FILTER table1 BY λ row: f(row), where f(row) → {true, false}
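MAP and FILTER as streaming Python generators. A sketch only: `map_table`, `filter_table`, the toy plural-stripping stemmer, and the tiny stop list are hypothetical stand-ins for the slide's examples, not a real stemmer or stop-word list.

```python
from typing import Callable, Iterable, Iterator, Tuple, TypeVar

Row = TypeVar("Row")
Out = TypeVar("Out")


def map_table(table: Iterable[Row], f: Callable[[Row], Out]) -> Iterator[Out]:
    """table2 = MAP table1 TO λ row: f(row)  (one output row per input row)."""
    for row in table:
        yield f(row)


def filter_table(table: Iterable[Row], f: Callable[[Row], bool]) -> Iterator[Row]:
    """table2 = FILTER table1 BY λ row: f(row)  (keep rows where f is true)."""
    for row in table:
        if f(row):
            yield row


STOP_WORDS = {"the", "a", "an"}  # hypothetical stop list


def toy_stem(pair: Tuple[str, int]) -> Tuple[str, int]:
    """Hypothetical stemmer: strip one trailing 's'."""
    word, n = pair
    return (word[:-1] if word.endswith("s") else word, n)
```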

Abstractions On Top Of Map-Reduce
• A non-obvious(?) streaming process: for each row in a table
  – Transform it to a list of items
  – Splice all the lists together to get the output table (flatten)
• Proposed syntax: table2 = FLATMAP table1 TO λ row: f(row), where f(row) → list of rows
• Example: tokenizing a line
  – "I found an aardvark" → ["i", "found", "an", "aardvark"]
  – "We love zymurgy" → ["we", "love", "zymurgy"]
  – …but the final table is one word per row: "i", "found", "an", "aardvark", "we", "love", …
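FLATMAP as a generator that splices the per-row lists into one stream. The name `flatmap_table` and the lowercase-letters tokenizer are assumptions chosen to reproduce the slide's example.

```python
import re
from typing import Callable, Iterable, Iterator, List, TypeVar

Row = TypeVar("Row")
Out = TypeVar("Out")


def flatmap_table(table: Iterable[Row], f: Callable[[Row], List[Out]]) -> Iterator[Out]:
    """table2 = FLATMAP table1 TO λ row: f(row): splice all the lists together."""
    for row in table:
        yield from f(row)


def tokenize(line: str) -> List[str]:
    """Assumed tokenizer: lowercase the line, keep runs of letters."""
    return re.findall(r"[a-z]+", line.lower())
```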

NB Test Step
Event counts (X=w1^Y=sports 5245, X=w1^Y=worldNews 1054, X=.. 2120, X=w2^Y=… 37, X=… 3, …) are grouped by word.
How:
• Stream and sort:
  – for each C[X=w^Y=y]=n, print "w C[Y=y]=n"
  – sort and build a list of values associated with each key w
• Like an inverted index
Result: aardvark C[w^Y=sports]=2; agent C[w^Y=sports]=1027, C[w^Y=worldNews]=564; …; zynga C[w^Y=sports]=21, C[w^Y=worldNews]=4464

NB Test Step
The general case:
• We're taking rows from a table
  – in a particular format (event, count)
• Applying a function to get a new value
  – the word for the event
• And grouping the rows of the table by this new value
Grouping operation: a special case of a map-reduce
• Proposed syntax: GROUP table BY λ row: f(row), where f(row) → field
• Could define f via: a function, a field of a defined record structure, …
Example: grouping the event counts (X=w1^Y=sports 5245, X=w1^Y=worldNews 1054, …) by word gives aardvark C[w^Y=sports]=2; agent C[w^Y=sports]=1027, C[w^Y=worldNews]=564; …; zynga C[w^Y=sports]=21, C[w^Y=worldNews]=4464

NB Test Step
Aside: you guys know how to implement GROUP table BY λ row: f(row), right?
1. Output pairs (f(row), row) with a map/streaming process
2. Sort pairs by key, which is f(row)
3. Reduce and aggregate by appending together all the values associated with the same key
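The three steps of the aside, written out in Python with a list sort standing in for the shuffle-sort. The name `group_table` and the string-parsing key function are hypothetical. Python's sort is stable, so rows keep their input order within each group.

```python
from itertools import groupby
from typing import Callable, Iterable, List, Tuple, TypeVar

Row = TypeVar("Row")


def group_table(table: Iterable[Row], f: Callable[[Row], str]) -> List[Tuple[str, List[Row]]]:
    """GROUP table BY λ row: f(row), done the map-reduce way."""
    pairs = [(f(row), row) for row in table]  # 1. map: emit (f(row), row)
    pairs.sort(key=lambda kv: kv[0])          # 2. sort pairs by key f(row)
    return [(k, [row for _, row in grp])      # 3. reduce: append the values per key
            for k, grp in groupby(pairs, key=lambda kv: kv[0])]
```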

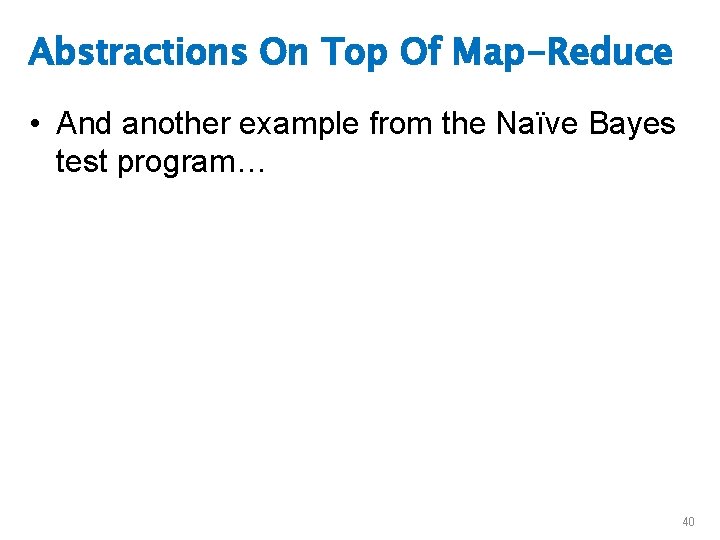

Abstractions On Top Of Map-Reduce
• And another example from the Naïve Bayes test program…

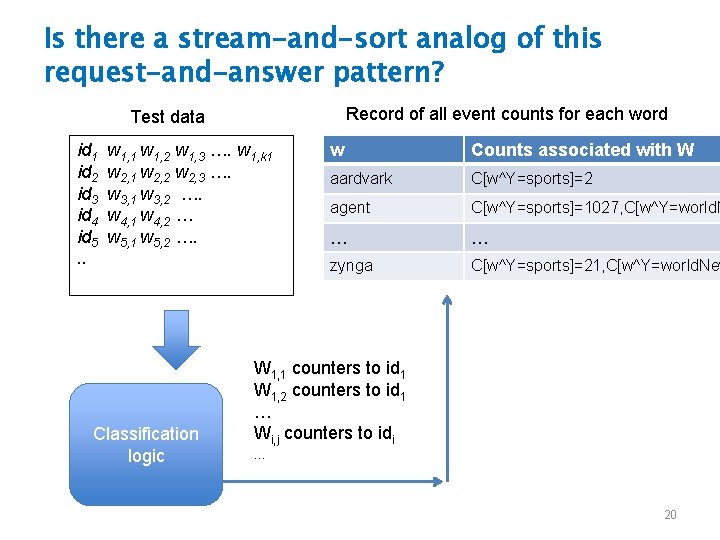

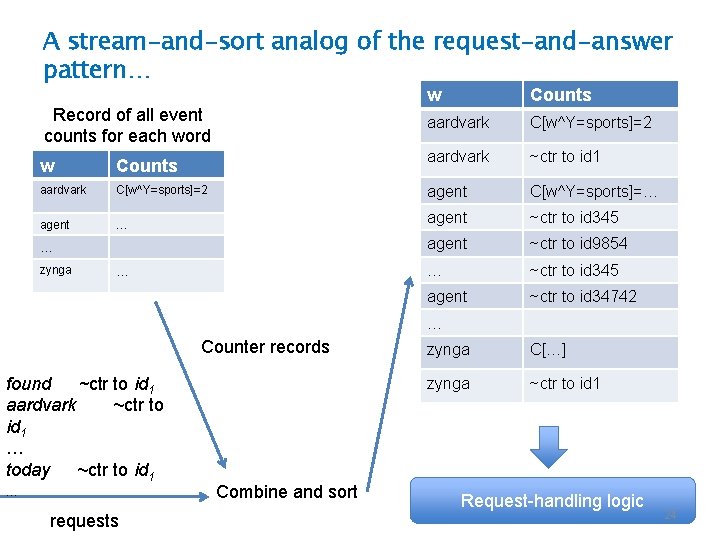

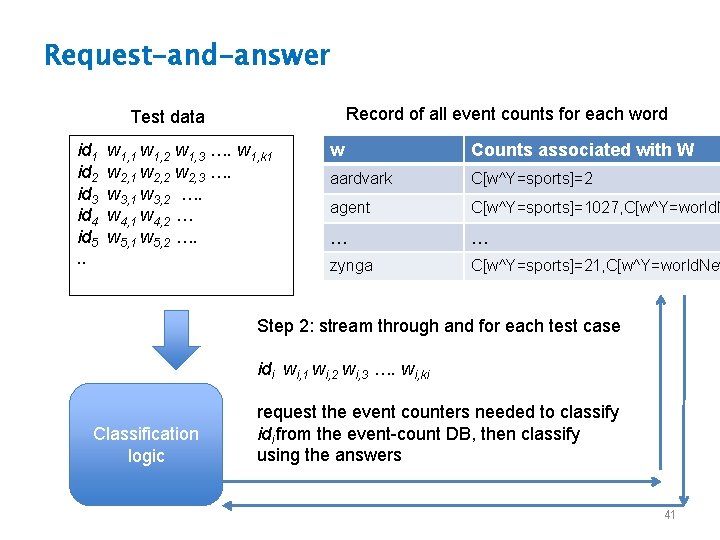

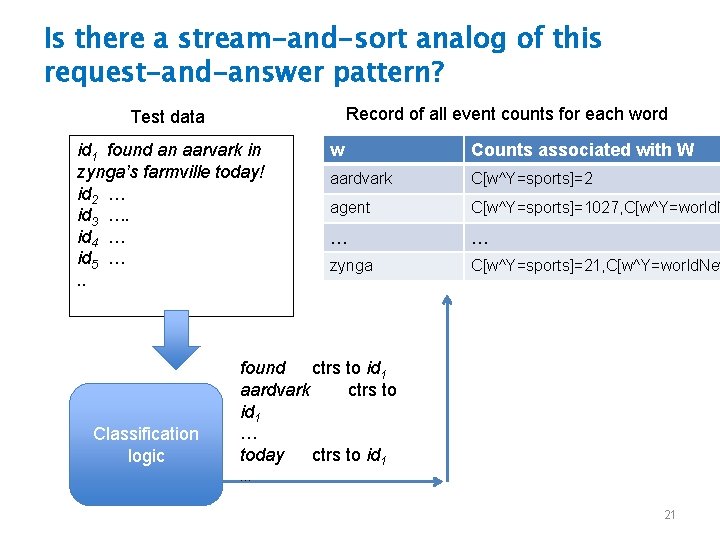

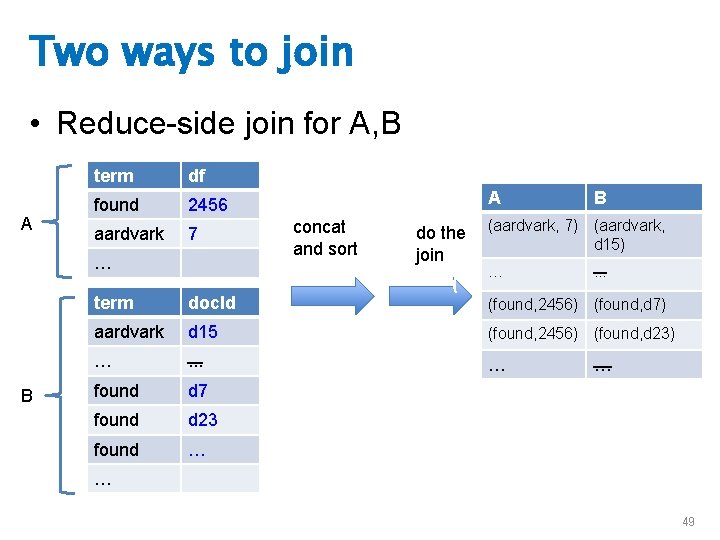

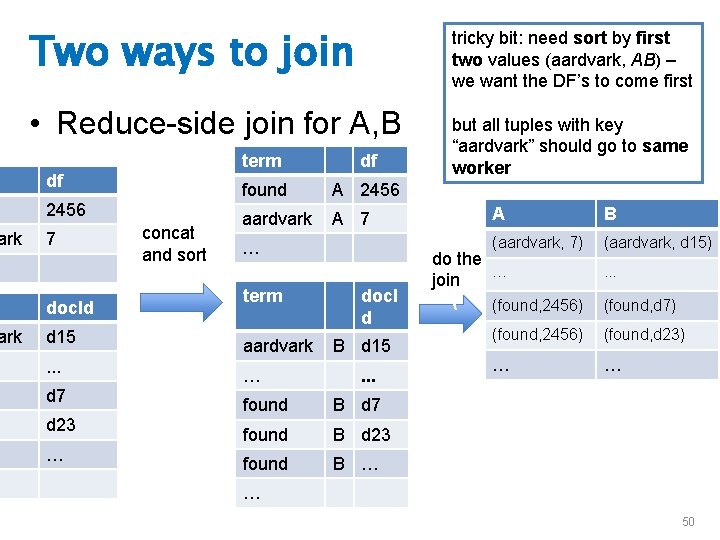

Request-and-answer
• Record of all event counts for each word: aardvark C[w^Y=sports]=2; agent C[w^Y=sports]=1027, C[w^Y=worldNews]=…; …; zynga C[w^Y=sports]=21, C[w^Y=worldNews]=…
• Test data: id1 w1,1 w1,2 w1,3 … w1,k1; id2 w2,1 w2,2 w2,3 …; id3 w3,1 w3,2 …; id4 w4,1 w4,2 …; id5 w5,1 w5,2 …; …
• Step 2: stream through, and for each test case idi wi,1 wi,2 wi,3 … wi,ki, the classification logic requests the event counters needed to classify idi from the event-count DB, then classifies using the answers.

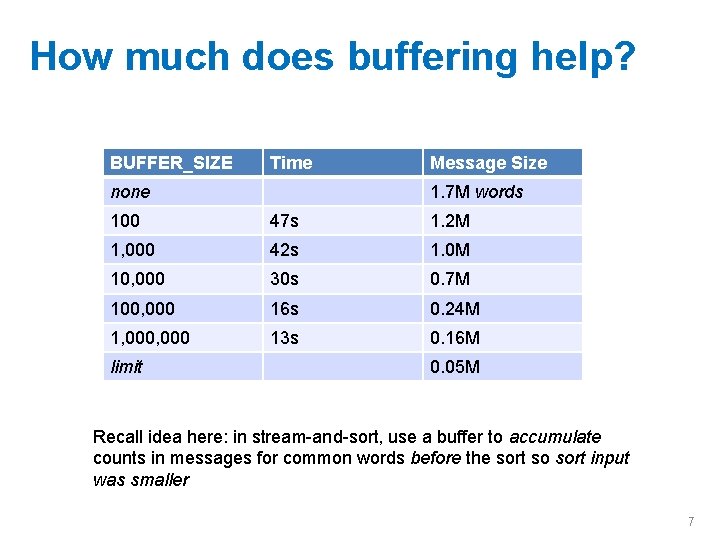

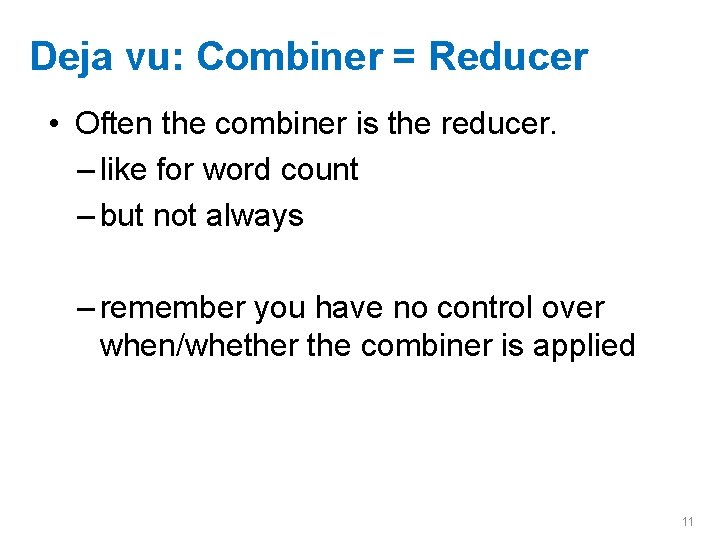

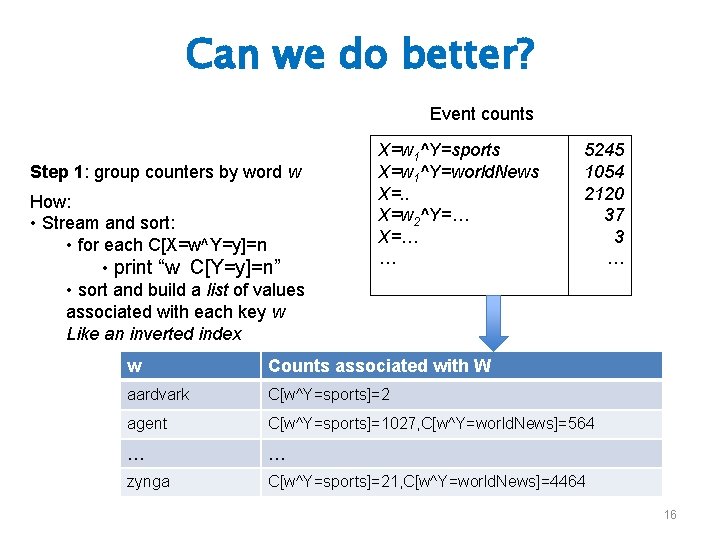

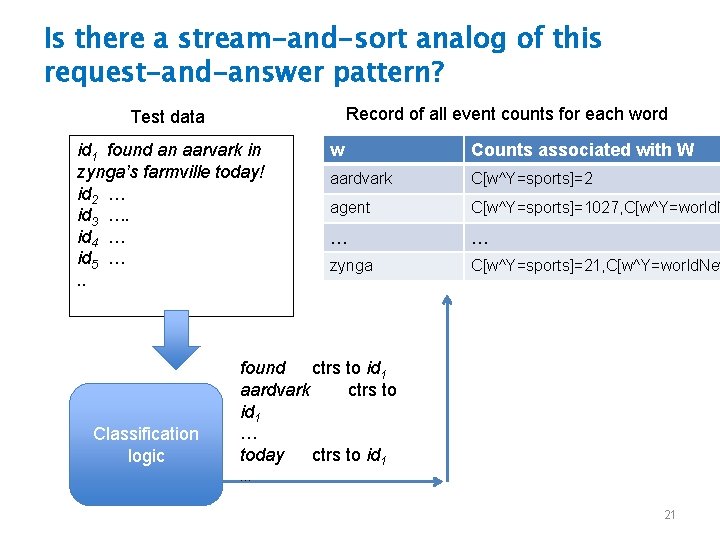

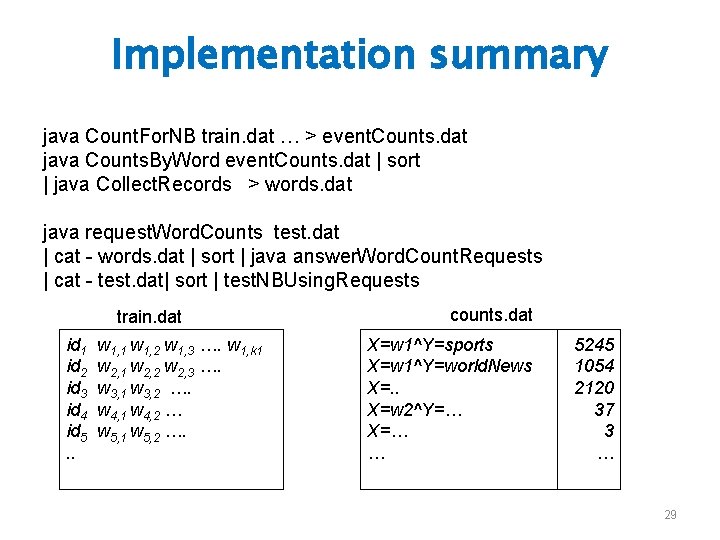

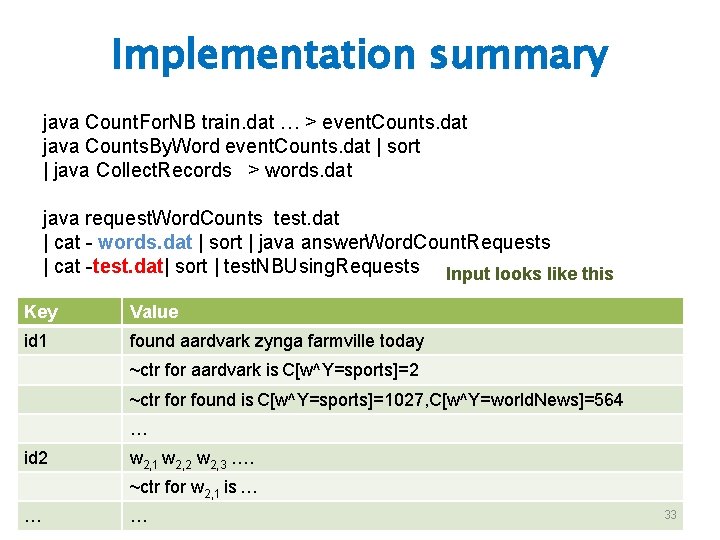

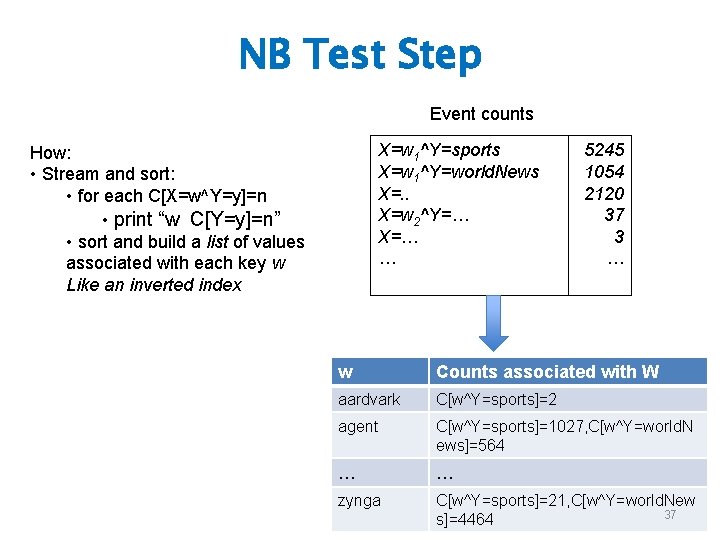

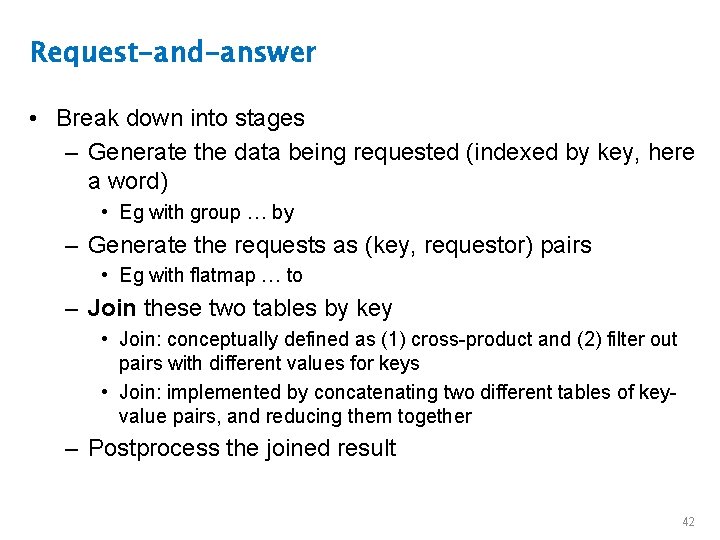

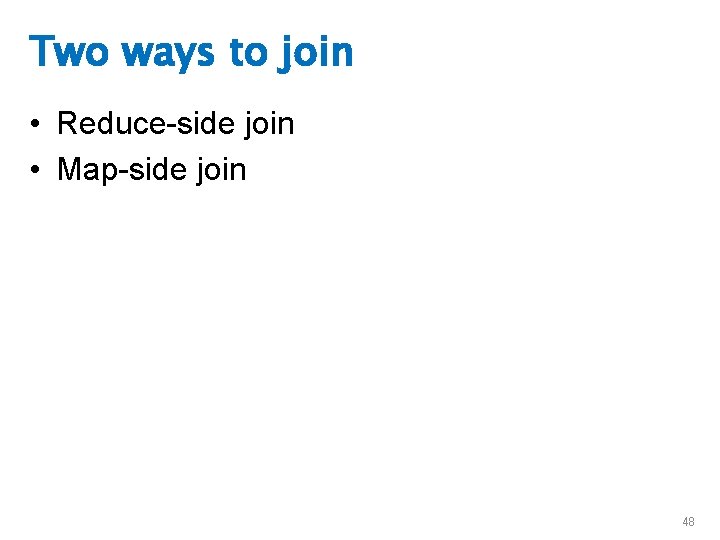

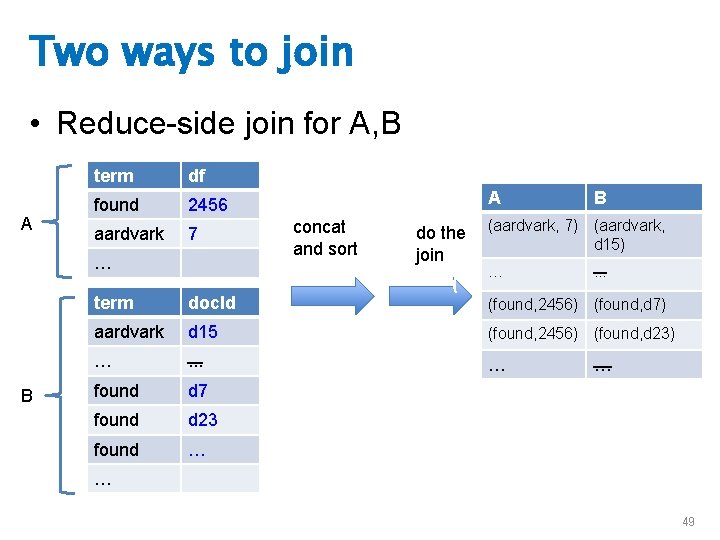

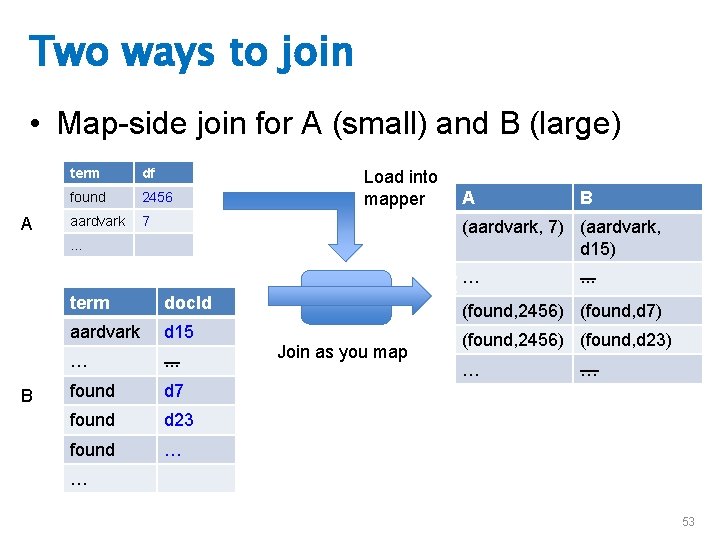

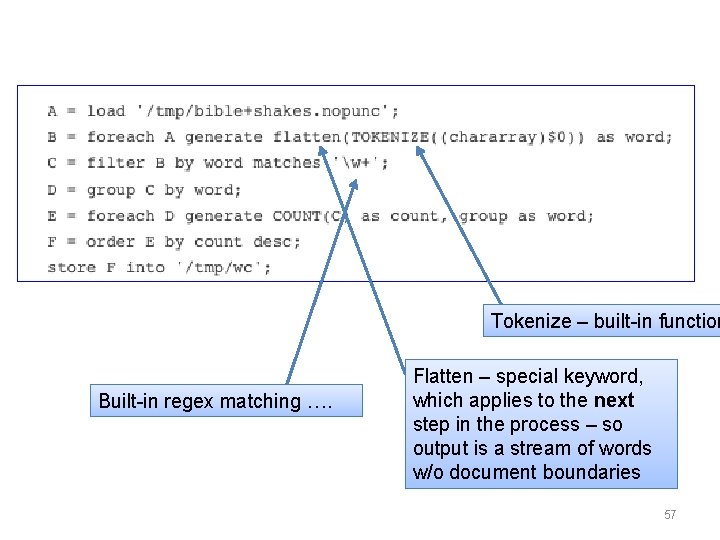

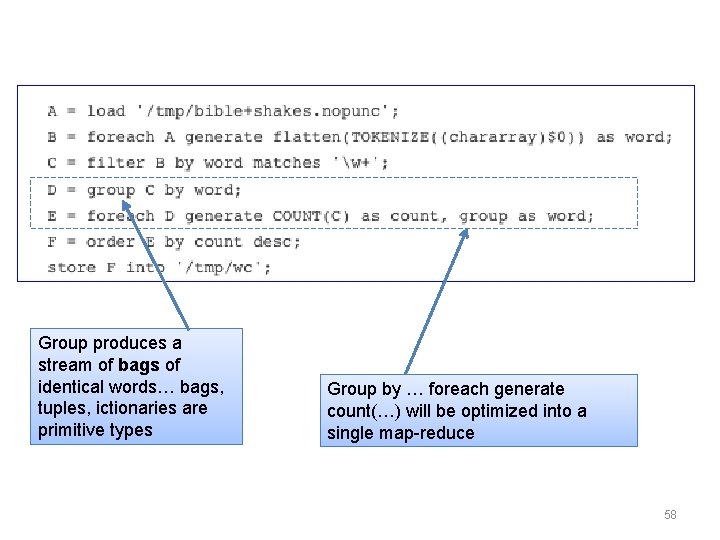

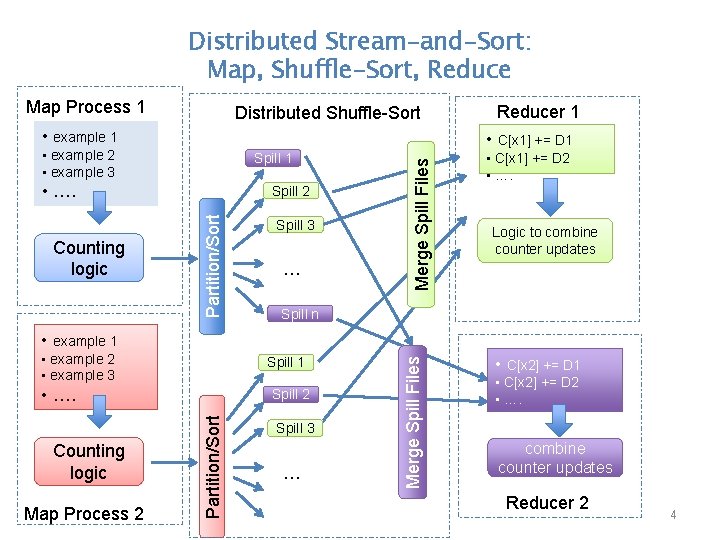

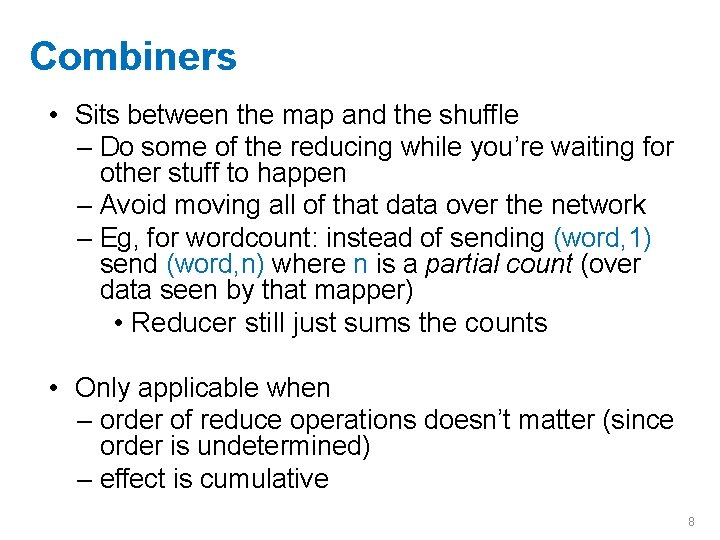

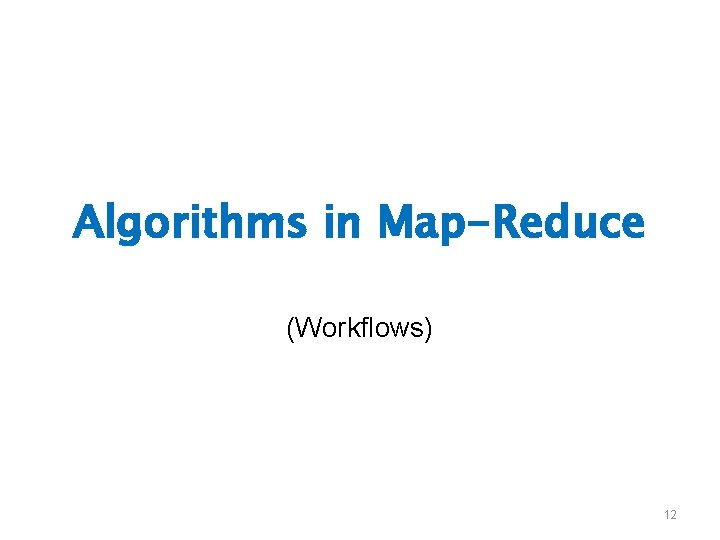

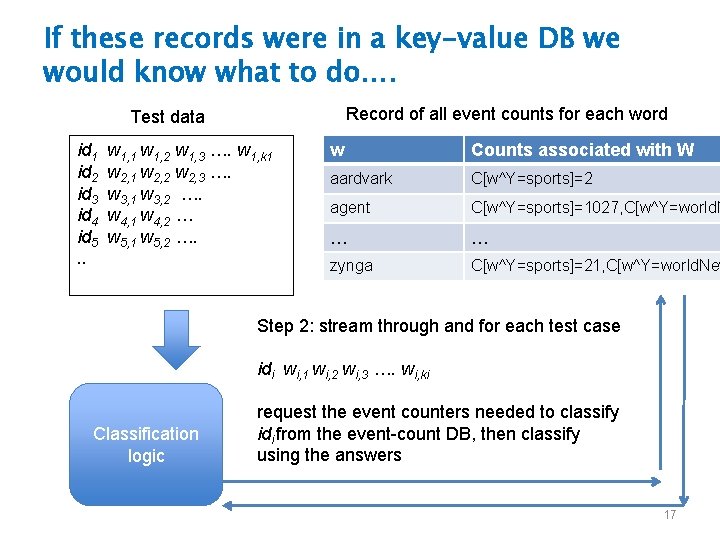

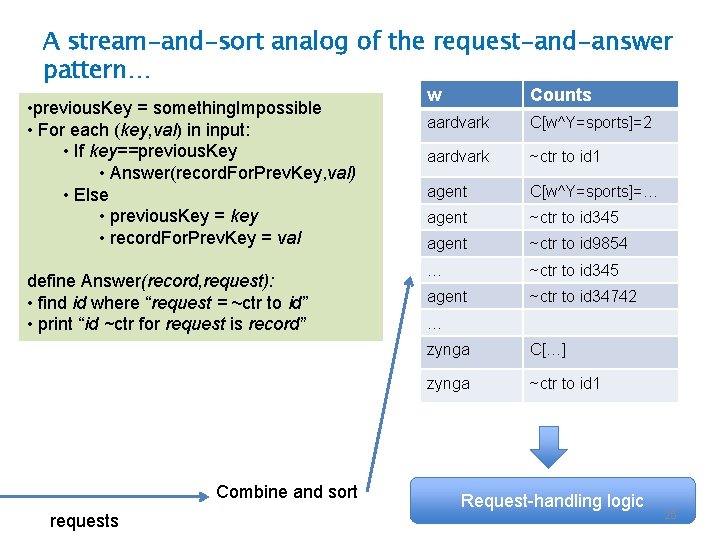

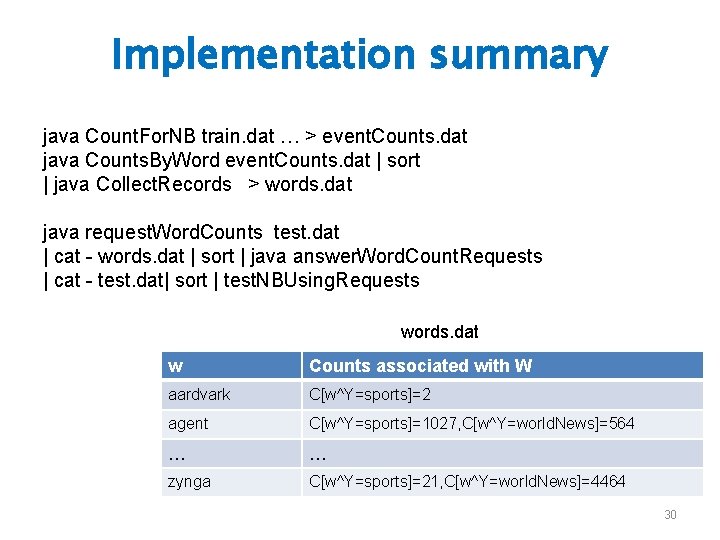

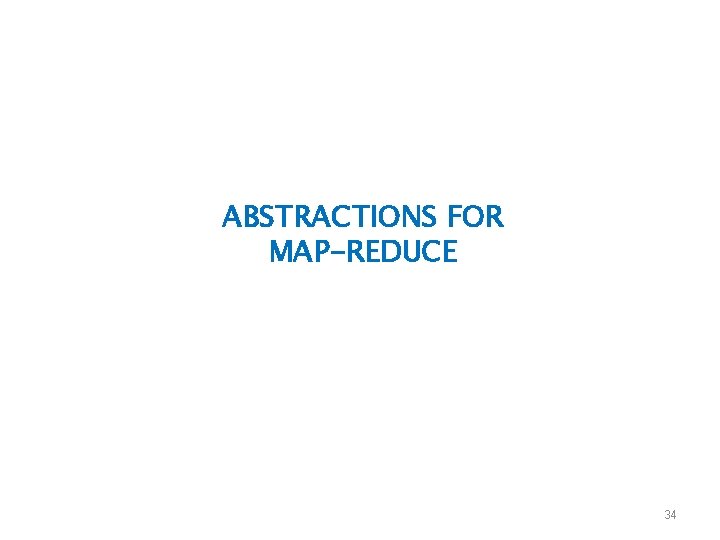

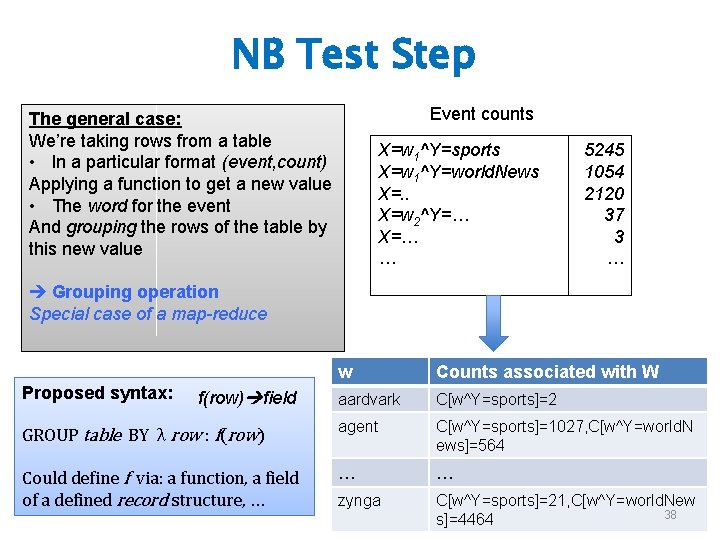

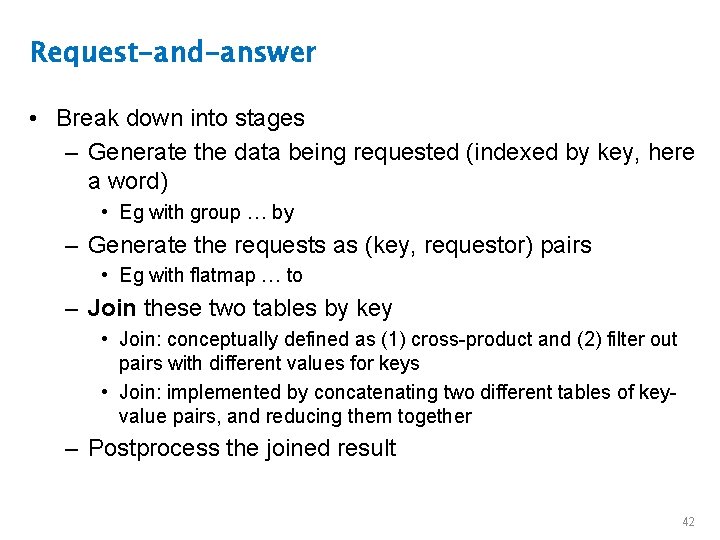

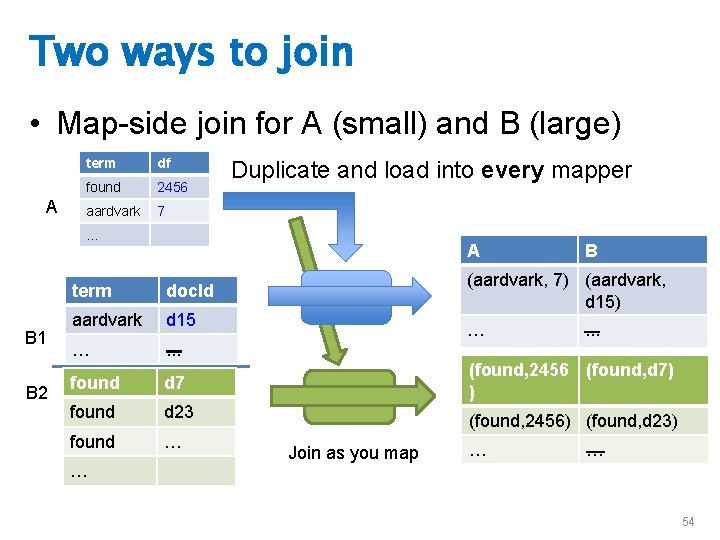

Request-and-answer
• Break down into stages:
  – Generate the data being requested (indexed by key, here a word)
    • e.g. with GROUP … BY
  – Generate the requests as (key, requestor) pairs
    • e.g. with FLATMAP … TO
  – Join these two tables by key
    • Join: conceptually defined as (1) a cross-product and (2) filtering out pairs with different values for the keys
    • Join: implemented by concatenating two different tables of key-value pairs, and reducing them together
  – Postprocess the joined result
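The two descriptions of a join can be checked against each other in a few lines. This sketch uses hypothetical names: `join_by_definition` is the cross-product-then-filter definition, and `join_by_reduce` concatenates tagged rows from both tables, sorts them (standing in for the shuffle), and pairs up rows within each key group.

```python
from itertools import groupby, product


def join_by_definition(t1, f, t2, g):
    """Conceptual JOIN: (1) cross-product, (2) keep pairs whose keys match."""
    return [(r1, r2) for r1, r2 in product(t1, t2) if f(r1) == g(r2)]


def join_by_reduce(t1, f, t2, g):
    """Concatenate both tables as (key, table_tag, position, row) tuples,
    sort them (standing in for the shuffle), and pair rows within each key."""
    tagged = [(f(r), 0, i, r) for i, r in enumerate(t1)]
    tagged += [(g(r), 1, i, r) for i, r in enumerate(t2)]
    tagged.sort(key=lambda t: t[:3])  # never compares the rows themselves
    out = []
    for _key, group in groupby(tagged, key=lambda t: t[0]):
        rows = list(group)
        left = [t[3] for t in rows if t[1] == 0]
        right = [t[3] for t in rows if t[1] == 1]
        out.extend((r1, r2) for r1 in left for r2 in right)
    return out
```

The cross-product version costs |t1| × |t2| comparisons; the sort-based version only pairs rows that share a key, which is why it is the one that gets implemented.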


• Requests table (w, request): found ~ctr to id1; aardvark ~ctr to id1; …; zynga ~ctr to id1; …; … ~ctr to id2
• Counters table (w, counters): aardvark C[w^Y=sports]=2; agent C[w^Y=sports]=1027, C[w^Y=worldNews]=…; …; zynga C[w^Y=sports]=21, C[w^Y=worldNews]=44…

Joined by w:

| w | Counters | Requests |
|---|---|---|
| aardvark | C[w^Y=sports]=2 | ~ctr to id1 |
| agent | C[w^Y=sports]=… | ~ctr to id345 |
| agent | C[w^Y=sports]=… | ~ctr to id9854 |
| agent | C[w^Y=sports]=… | ~ctr to id345 |
| … | C[w^Y=sports]=… | ~ctr to id34742 |
| zynga | C[…] | ~ctr to id1 |
| zynga | C[…] | … |

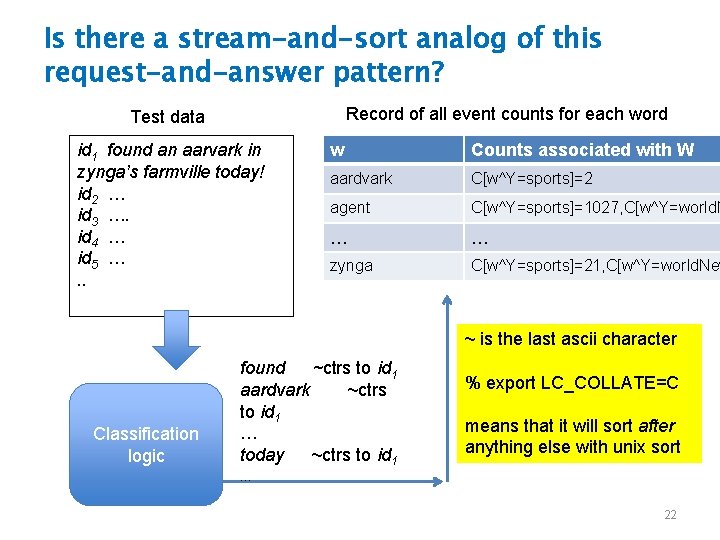

![w Request w Counters found id 1 aardvark CwYsports2 aardvark id 1 agent CwYsports1027 w Request w Counters found id 1 aardvark C[w^Y=sports]=2 aardvark id 1 agent C[w^Y=sports]=1027,](https://slidetodoc.com/presentation_image_h2/3f074d4632e9d8e1768d806d5492b8d7/image-44.jpg)

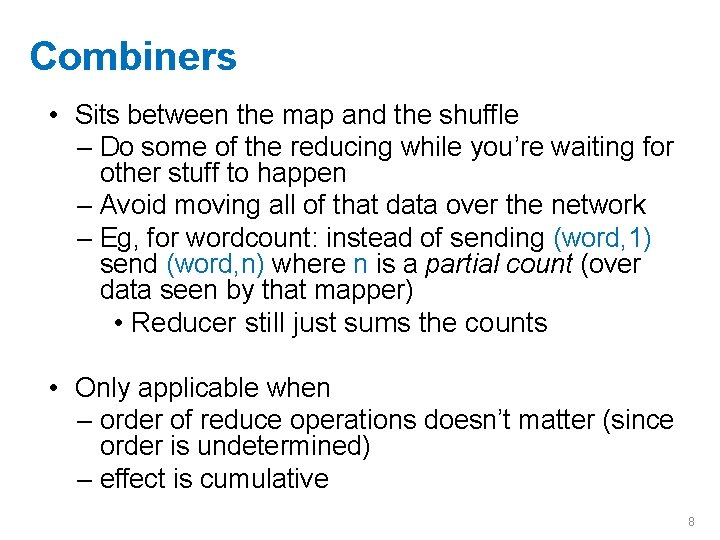

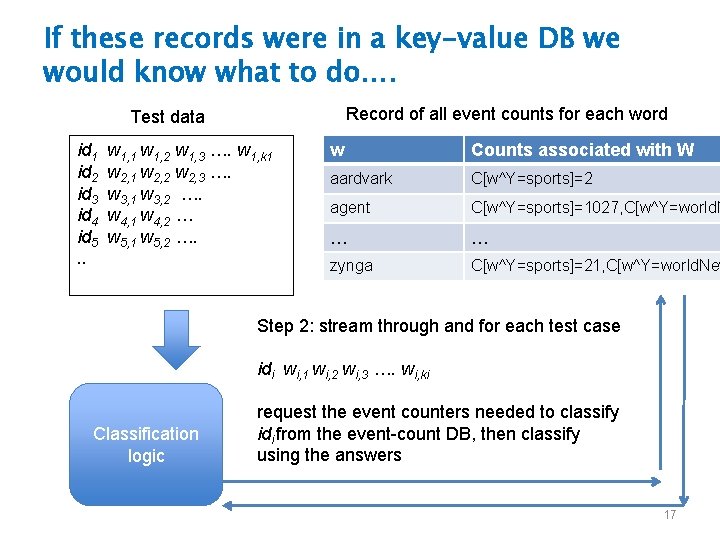

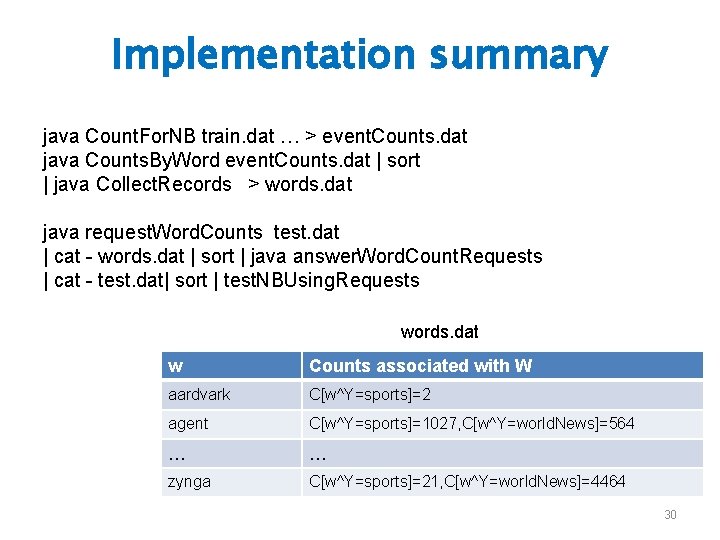

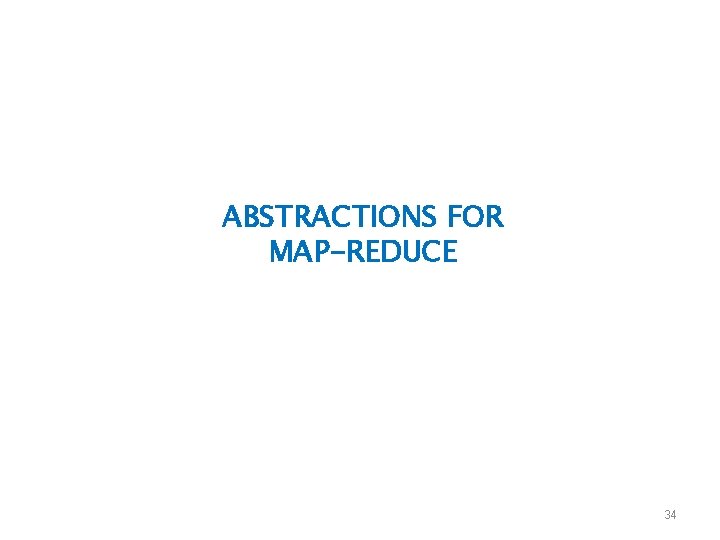

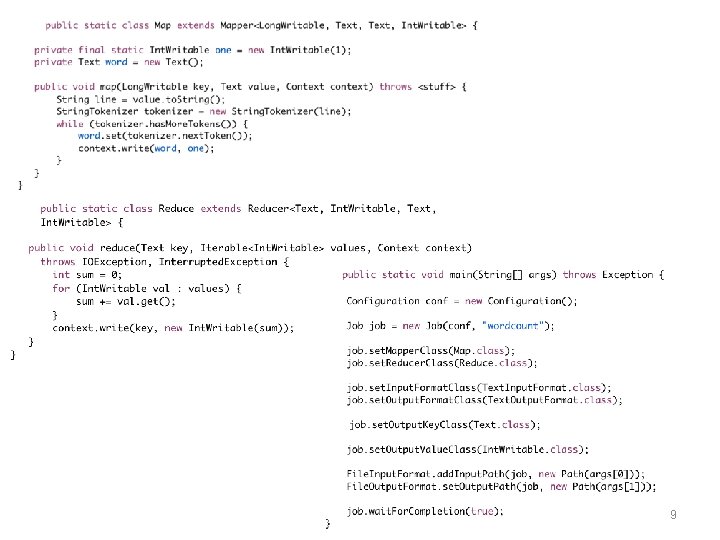

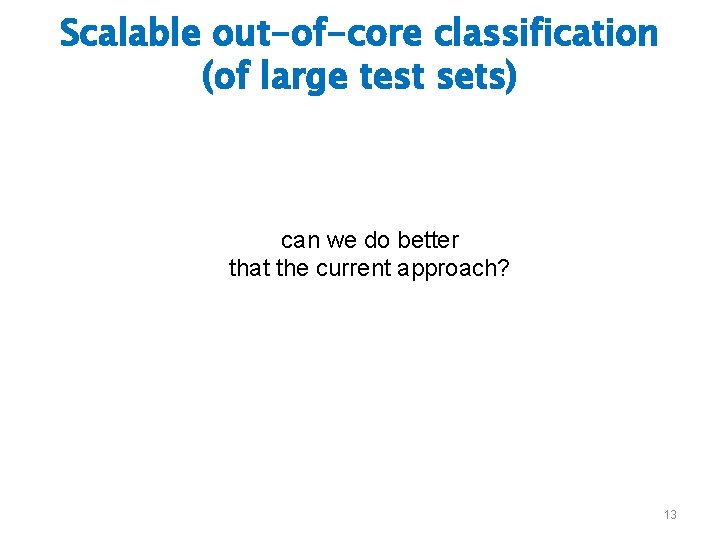

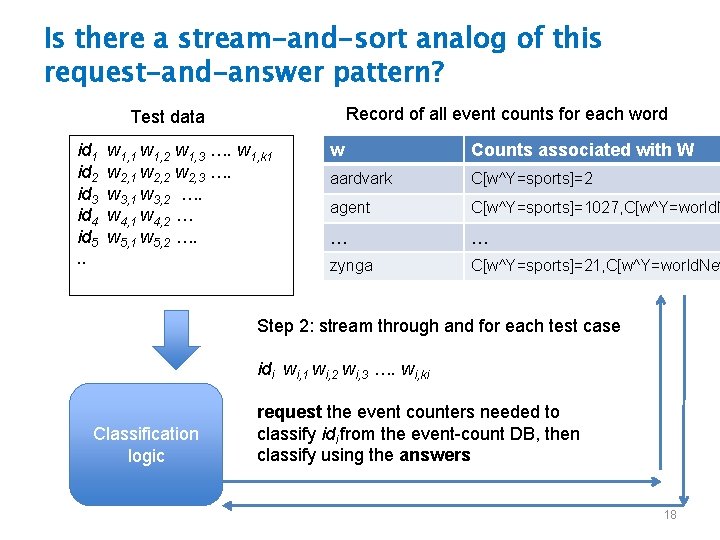

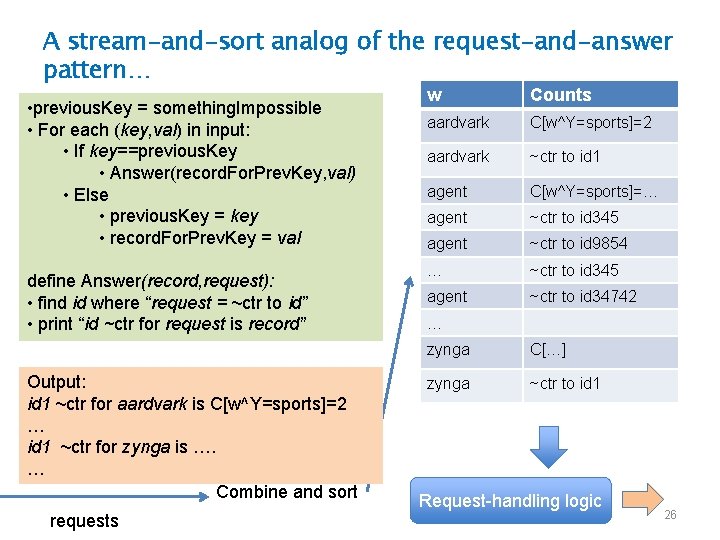

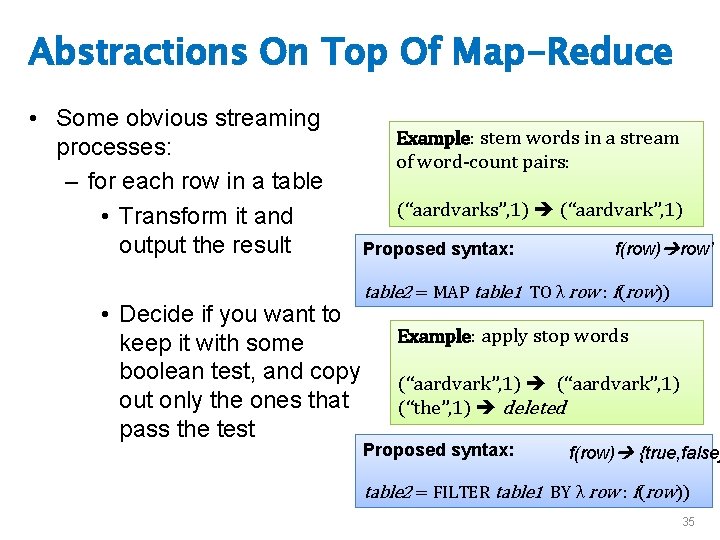

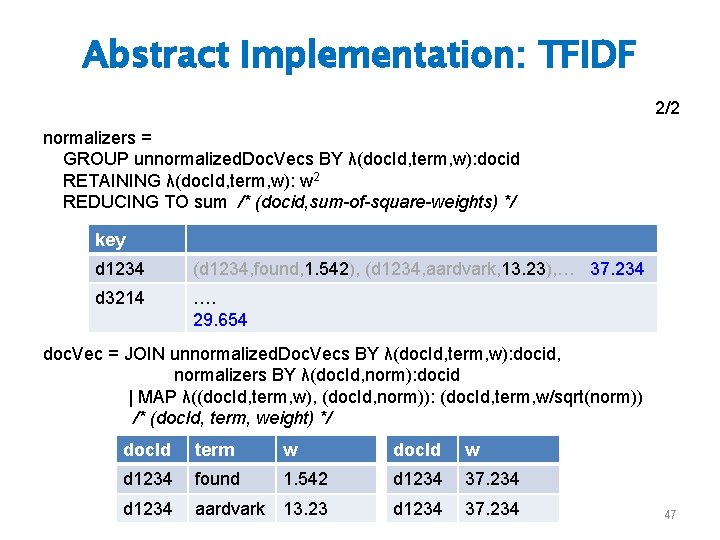

With bare document ids as the requests (found id1; aardvark id1; …; zynga id1; …; … id2) and the same counters table, the join by w gives:

| w | Counters | Requests |
|---|---|---|
| aardvark | C[w^Y=sports]=2 | id1 |
| agent | C[w^Y=sports]=… | id345 |
| agent | C[w^Y=sports]=… | id9854 |
| agent | C[w^Y=sports]=… | id345 |
| … | C[w^Y=sports]=… | id34742 |
| zynga | C[…] | id1 |
| zynga | C[…] | … |

Examples:
• JOIN wordInDoc BY word, wordCounters BY word (if word(row) is defined correctly)
• JOIN wordInDoc BY lambda (word, docid): word, wordCounters BY lambda (word, counters): word (using Python syntax for functions; tuple-unpacking parameters like these are Python 2 only, so in Python 3 you would write lambda row: row[0])

Proposed syntax: JOIN table1 BY λ row: f(row), table2 BY λ row: g(row)
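The request-and-answer stages composed with the JOIN syntax, in Python 3. This is a sketch under stated assumptions: `join` here is an in-memory hash join used as a stand-in for the sort-based join (it returns the same matching pairs), and `docs`, `word_in_doc`, and `word_counters` are tiny hypothetical tables.

```python
from collections import defaultdict


def join(t1, f, t2, g):
    """JOIN t1 BY f, t2 BY g as an in-memory hash join (a stand-in for the
    sort-based join; it returns the same matching pairs)."""
    index = defaultdict(list)
    for r2 in t2:
        index[g(r2)].append(r2)
    return [(r1, r2) for r1 in t1 for r2 in index.get(f(r1), [])]


docs = [("id1", "found an aardvark"), ("id2", "agent aardvark")]
# FLATMAP each document to (word, docid) request rows.
word_in_doc = [(w, doc_id) for doc_id, text in docs for w in text.split()]
word_counters = [("aardvark", "C[w^Y=sports]=2"), ("agent", "C[w^Y=sports]=1027")]

# Python 3 dropped tuple-unpacking lambdas, so index into the row instead.
joined = join(word_in_doc, lambda row: row[0], word_counters, lambda row: row[0])

# Postprocess: collect the answered counters for each document id.
per_doc = defaultdict(list)
for (word, doc_id), (_word, counters) in joined:
    per_doc[doc_id].append((word, counters))
```

This is an inner join, so words with no counters ("found", "an") drop out; a classifier built on it would have to account for them, for example through smoothing.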

![Abstract Implementation TFIDF 12 data pairs docid term where term is a Abstract Implementation: [TF]IDF 1/2 data = pairs (docid , term) where term is a](https://slidetodoc.com/presentation_image_h2/3f074d4632e9d8e1768d806d5492b8d7/image-45.jpg)

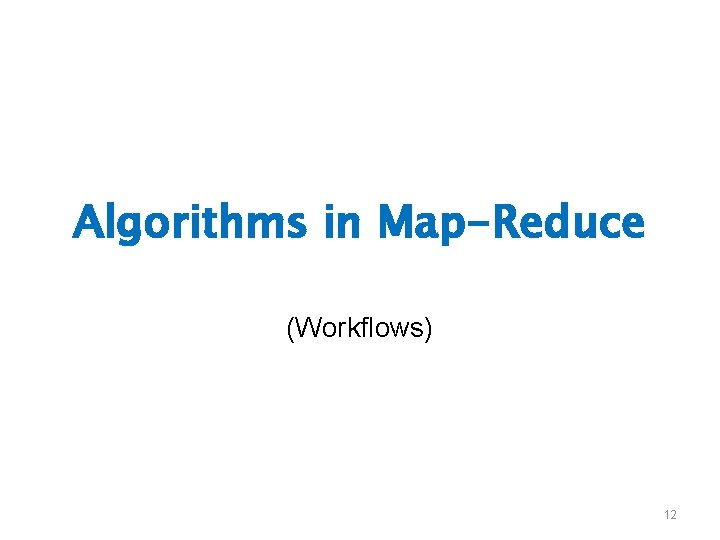

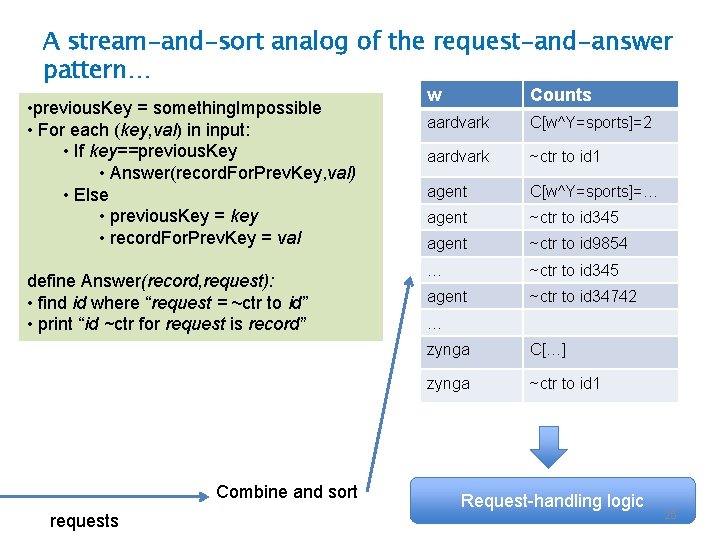

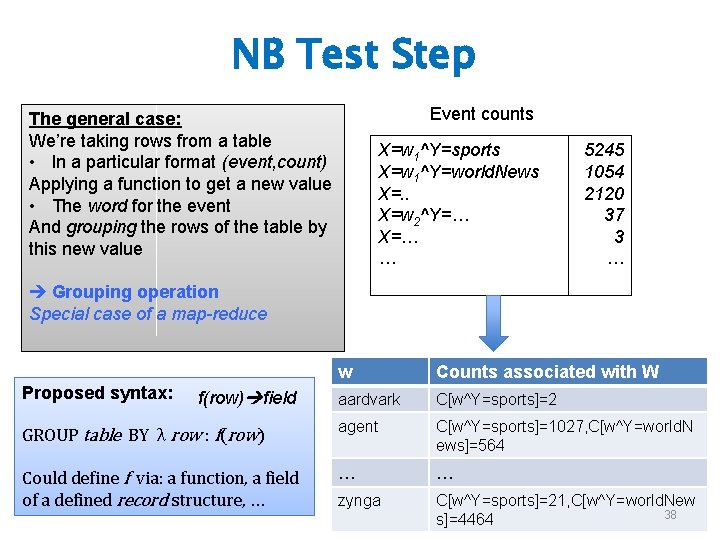

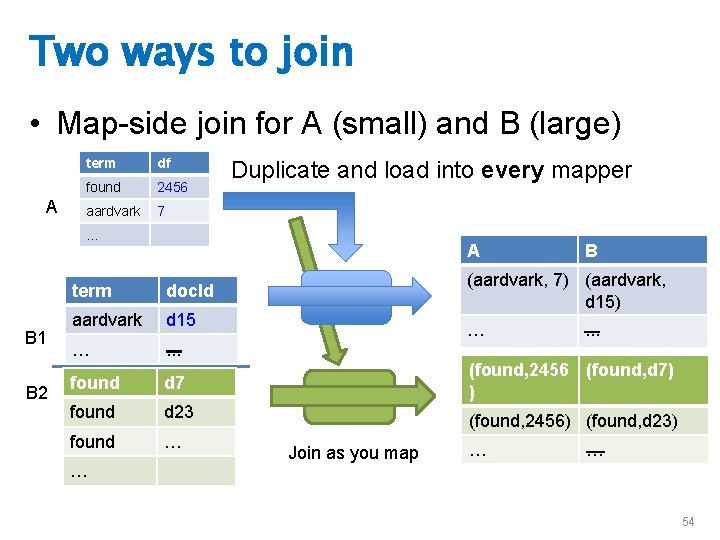

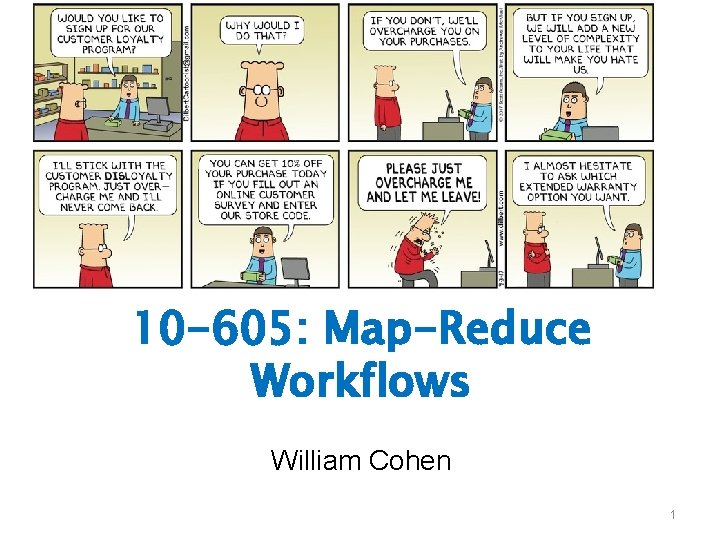

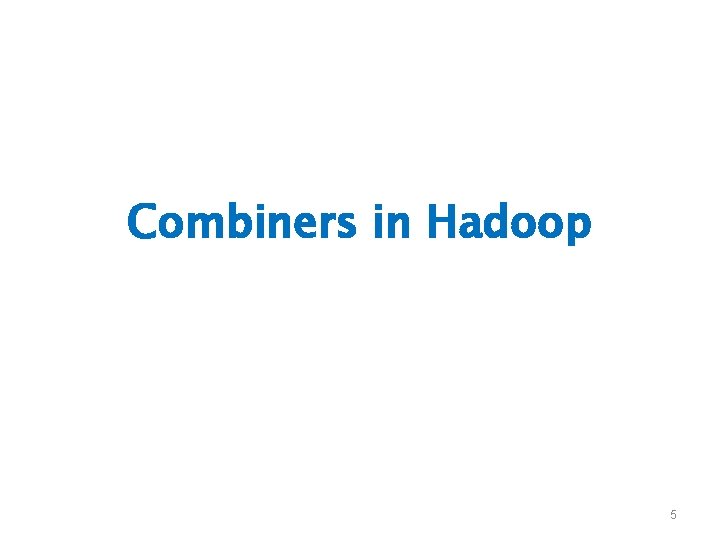

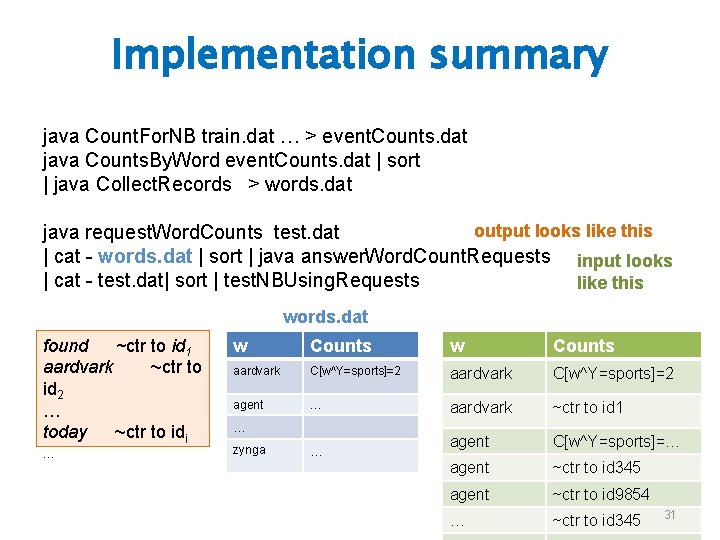

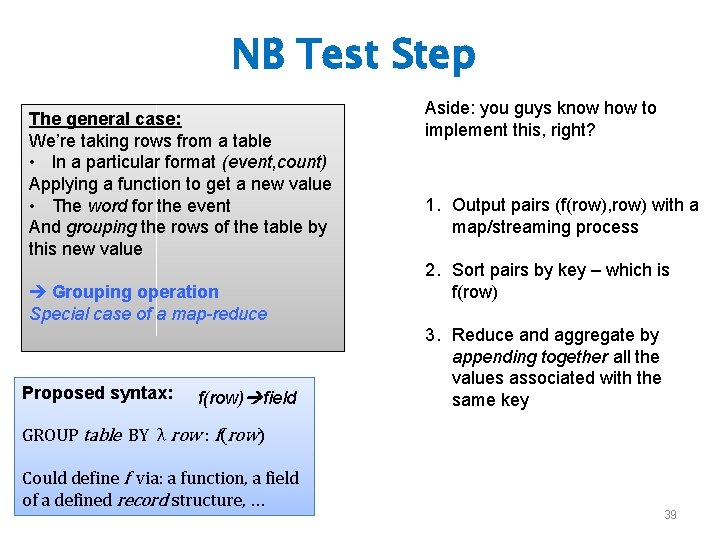

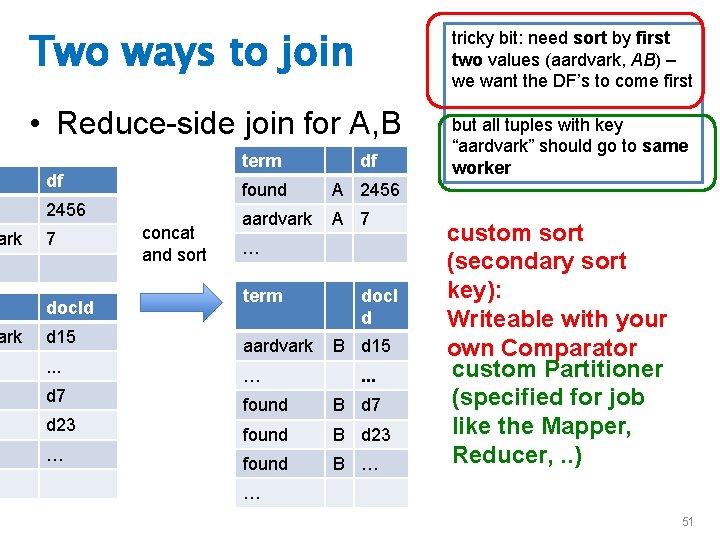

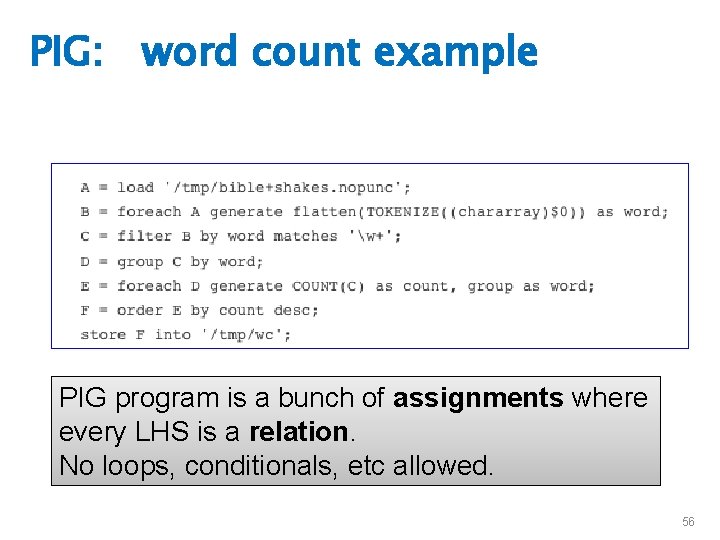

Abstract Implementation: [TF]IDF 1/2
data = pairs (docId, term) where term is a word that appears in the document with id docId
Operators:
• DISTINCT, MAP, JOIN
• GROUP BY … [RETAINING …] REDUCING TO a reduce step

docFreq = DISTINCT data
  | GROUP BY λ(docId, term): term REDUCING TO count /* (term, df) */
docIds = MAP data TO λ(docId, term): docId | DISTINCT
numDocs = GROUP docIds BY λdocId: 1 REDUCING TO count /* (1, numDocs) */
dataPlusDF = JOIN data BY λ(docId, term): term, docFreq BY λ(term, df): term
  | MAP λ((docId, term), (term, df)): (docId, term, df) /* (docId, term, document-freq) */
unnormalizedDocVecs = JOIN dataPlusDF BY λrow: 1, numDocs BY λrow: 1
  | MAP λ((docId, term, df), (dummy, numDocs)): (docId, term, log(numDocs/df)) /* (docId, term, weight-before-normalizing): u */

Example values:
• data: (d123, found), (d123, aardvark), …
• grouped by term: found → (d123, found), (d134, found), …; aardvark → (d123, aardvark), …
• docFreq: found 2456; aardvark 7
• numDocs: 1 → 12451

Question: how many reducers should I use for numDocs, and for the join on the constant key 1?
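The same dataflow in plain Python, as a single-machine sketch: the GROUP and JOIN steps become a `Counter` and dictionary/scalar lookups, and `unnormalized_doc_vecs` is a hypothetical name. As in the slide, the weight is log(numDocs/df), and the join is against `data` itself.

```python
import math
from collections import Counter
from typing import List, Tuple


def unnormalized_doc_vecs(data: List[Tuple[str, str]]) -> List[Tuple[str, str, float]]:
    """data = (docId, term) pairs; returns (docId, term, log(numDocs/df)) rows."""
    # docFreq = DISTINCT data | GROUP BY term REDUCING TO count
    doc_freq = Counter(term for _doc_id, term in set(data))
    # docIds = MAP data TO docId | DISTINCT; numDocs = ... REDUCING TO count
    num_docs = len({doc_id for doc_id, _term in data})
    # JOIN data with docFreq on term, then with numDocs on the constant key 1,
    # and MAP each row to its weight log(numDocs/df).
    return [(doc_id, term, math.log(num_docs / doc_freq[term])) for doc_id, term in data]
```

A term that occurs in every document gets weight log(1) = 0.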

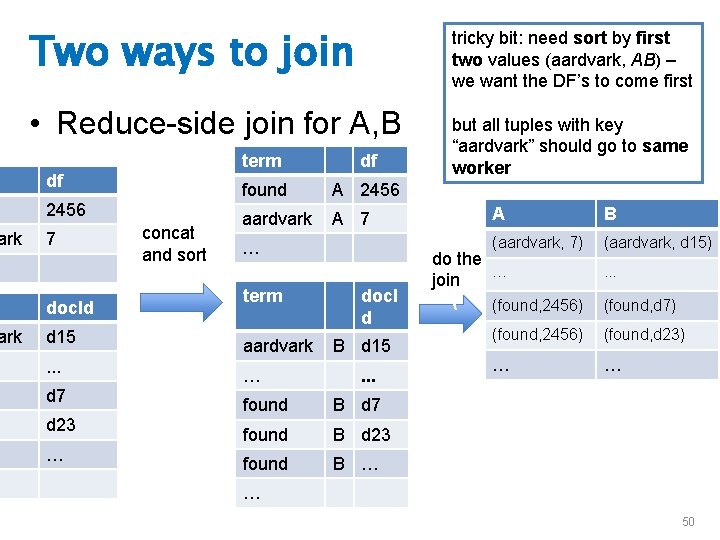

Abstract Implementation: TFIDF 2/2
normalizers = GROUP unnormalizedDocVecs BY λ(docId, term, w): docId
  RETAINING λ(docId, term, w): w²
  REDUCING TO sum /* (docId, sum-of-square-weights) */
docVec = JOIN unnormalizedDocVecs BY λ(docId, term, w): docId, normalizers BY λ(docId, norm): docId
  | MAP λ((docId, term, w), (docId, norm)): (docId, term, w/sqrt(norm)) /* (docId, term, weight) */

Example values:
• normalizers: d1234 → (d1234, found, 1.542), (d1234, aardvark, 13.23), … → 37.234; d3214 → … → 29.654
• joined rows (docId, term, w | docId, norm): (d1234, found, 1.542 | d1234, 37.234); (d1234, aardvark, 13.23 | d1234, 37.234)
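The normalization step as an in-memory sketch (hypothetical name `normalize`). The GROUP … RETAINING w² REDUCING TO sum becomes a per-document sum of squares, and the JOIN on docId becomes a dictionary lookup. The guard for an all-zero vector is an added assumption, since the slide's formula would divide by zero there.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


def normalize(unnormalized: List[Tuple[str, str, float]]) -> List[Tuple[str, str, float]]:
    # normalizers = GROUP ... BY docId RETAINING w² REDUCING TO sum
    normalizers: Dict[str, float] = defaultdict(float)
    for doc_id, _term, w in unnormalized:
        normalizers[doc_id] += w * w
    # docVec = JOIN ... BY docId | MAP (docId, term, w / sqrt(norm))
    return [(doc_id, term, w / math.sqrt(normalizers[doc_id]) if normalizers[doc_id] > 0 else 0.0)
            for doc_id, term, w in unnormalized]
```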

Two ways to join
• Reduce-side join
• Map-side join

Two ways to join
• Reduce-side join for A, B
  – A (term, df): (found, 2456), (aardvark, 7), …
  – B (term, docId): (aardvark, d15), …, (found, d7), (found, d23), (found, …), …
  – Concatenate and sort A and B, then do the join: (aardvark, 7) with (aardvark, d15); …; (found, 2456) with (found, d7); (found, 2456) with (found, d23); …

Two ways to join
• Reduce-side join for A, B: concatenate and sort tuples tagged with their relation
  – A rows: (found, A, 2456), (aardvark, A, 7), …
  – B rows: (aardvark, B, d15), …, (found, B, d7), (found, B, d23), (found, B, …), …
• Tricky bit: we need to sort by the first two values, e.g. (aardvark, A) before (aardvark, B), because we want the DFs to come first
• But all tuples with key "aardvark" should go to the same worker
• Then do the join: (aardvark, 7) with (aardvark, d15); …; (found, 2456) with (found, d7); (found, 2456) with (found, d23); …

Two ways to join
• Reduce-side join, getting both properties in Hadoop:
  – custom sort (secondary sort key): a Writable with your own Comparator
  – custom Partitioner (specified for the job like the Mapper, Reducer, …)
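A single-process simulation of the reduce-side join pattern above. This is not Hadoop code: `partition` plays the role of a custom Partitioner (hash on the term only), and sorting each bucket by (term, tag) plays the role of the secondary-sort Comparator, so the A record with the df arrives before the B records for the same term. `zlib.crc32` is used because Python's built-in `hash` of strings is randomized per process.

```python
import zlib
from itertools import groupby
from typing import Dict, List, Tuple


def partition(term: str, num_reducers: int) -> int:
    """Partition on the term only, so (term, A) and (term, B) reach the same reducer."""
    return zlib.crc32(term.encode("utf-8")) % num_reducers


def reduce_side_join(a: List[Tuple[str, int]], b: List[Tuple[str, str]],
                     num_reducers: int = 2) -> List[Tuple[str, int, str]]:
    # Tag each tuple with its relation: "A" for (term, df), "B" for (term, docId).
    tagged = [(t, "A", v) for t, v in a] + [(t, "B", v) for t, v in b]
    buckets: Dict[int, list] = {r: [] for r in range(num_reducers)}
    for rec in tagged:
        buckets[partition(rec[0], num_reducers)].append(rec)
    out = []
    for r in range(num_reducers):
        # Secondary sort on (term, tag): "A" < "B", so the df comes first.
        recs = sorted(buckets[r], key=lambda rec: (rec[0], rec[1]))
        for term, grp in groupby(recs, key=lambda rec: rec[0]):
            df = None
            for _term, tag, value in grp:
                if tag == "A":
                    df = value
                elif df is not None:  # B rows with no matching A row are dropped
                    out.append((term, df, value))
    return out
```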

Two ways to join
• Map-side join
  – write the smaller relation out to disk
  – send it to each Map worker
    • DistributedCache
  – when you initialize each Mapper, load in the small relation
    • configure(…) is called at initialization time
  – map through the larger relation and do the join
  – faster, but requires one relation to fit in memory

Two ways to join
• Map-side join for A (small) and B (large)
  – Load A (term, df): (found, 2456), (aardvark, 7) into the mapper
  – Map through B (term, docId): (aardvark, d15), …, (found, d7), (found, d23), (found, …), …
  – Join as you map: (aardvark, 7) with (aardvark, d15); …; (found, 2456) with (found, d7); (found, 2456) with (found, d23); …

Two ways to join
• Map-side join for A (small) and B (large), with B split across mappers (B1, B2, …)
  – Duplicate A (found 2456, aardvark 7, …) and load it into every mapper
  – Each mapper joins as it maps over its split of B: (aardvark, 7) with (aardvark, d15); (found, 2456) with (found, d7); (found, 2456) with (found, d23); …
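The map-side join as a sketch with hypothetical names. Each "mapper" gets its own copy of the small relation A as a dictionary (what loading it at initialization would produce) and joins while streaming through its split of B, so no shuffle or sort is needed.

```python
from typing import Dict, Iterable, Iterator, Tuple


def load_small_relation(a: Iterable[Tuple[str, int]]) -> Dict[str, int]:
    """What each mapper does at initialization: load A (term -> df) into memory."""
    return dict(a)


def map_side_join(split: Iterable[Tuple[str, str]], small: Dict[str, int]) -> Iterator[Tuple[str, int, str]]:
    """Map over one split of the large relation B, joining as we go."""
    for term, doc_id in split:
        if term in small:
            yield (term, small[term], doc_id)


A = [("found", 2456), ("aardvark", 7)]
B1 = [("aardvark", "d15"), ("found", "d7")]   # split handled by mapper 1
B2 = [("found", "d23"), ("zymurgy", "d9")]    # split handled by mapper 2
small = load_small_relation(A)                # duplicated into every mapper
joined = [row for split in (B1, B2) for row in map_side_join(split, small)]
```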

PIG: A WORKFLOW LANGUAGE

PIG: word count example
A PIG program is a bunch of assignments where every LHS is a relation. No loops, conditionals, etc. are allowed.

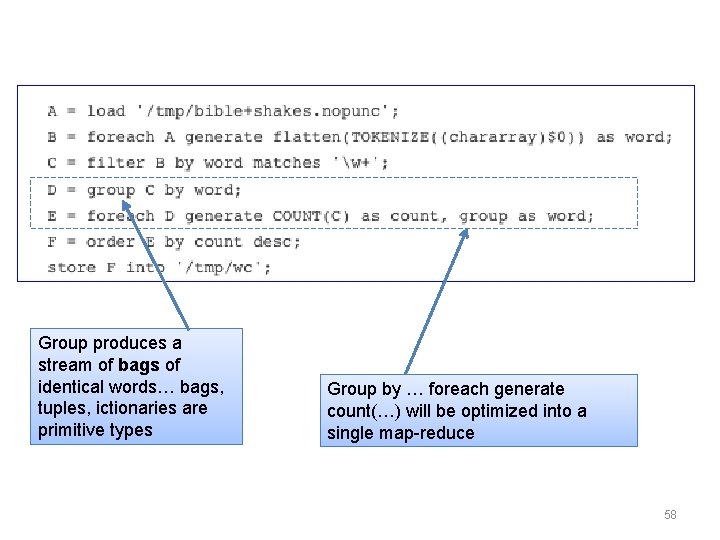

• Tokenize: built-in function
• Built-in regex matching …
• Flatten: special keyword, which applies to the next step in the process, so the output is a stream of words without document boundaries

• Group produces a stream of bags of identical words…
• Bags, tuples, and dictionaries (maps) are built-in types
• Group by … foreach generate count(…) will be optimized into a single map-reduce
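The word-count dataflow described above, traced step by step in single-process Python. This is not Pig itself: whitespace splitting stands in for TOKENIZE, and the comments name the Pig steps each line mirrors. Pig would compile the group and count into one map-reduce job.

```python
from itertools import groupby
from typing import List, Tuple


def pig_style_wordcount(lines: List[str]) -> List[Tuple[str, int]]:
    # FOREACH lines GENERATE FLATTEN(TOKENIZE(line)): one word per row,
    # no document boundaries (whitespace split stands in for TOKENIZE).
    words = [w for line in lines for w in line.split()]
    # GROUP words BY word: a stream of (word, bag of identical words).
    grouped = [(w, list(bag)) for w, bag in groupby(sorted(words))]
    # FOREACH grouped GENERATE group, COUNT(bag)
    return [(w, len(bag)) for w, bag in grouped]
```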