Validity

- Slides: 57

1 Validity – Outline
1. Definition
2. Two different views: Traditional
3. Two different views: CSEPT
4. Face Validity
5. Content Validity: CSEPT
6. Content Validity: Borsboom

2 Validity – Outline
7. Criterion Validity: CSEPT
   i. Predictive vs. Concurrent
   ii. Validity Coefficients
8. Criterion Validity: Borsboom
9. Construct Validity: CSEPT
   i. Convergent
   ii. Discriminant

3 Validity – Definition
- Validity measures the agreement between a test score and the characteristic it is believed to measure.

4 Validity: CSEPT view
- Validity is a property of test score interpretations.
- Validity exists when actions based on the interpretation are justified, given a theoretical basis and the social consequences of test use.

5 Validity: Traditional view
- Validity is a property of tests.
- Does the test measure what you think it measures?

6 Note the difference:
- CSEPT: Validity exists when actions based on the interpretation are justified, given a theoretical basis and social consequences.
- Traditional: Does the test measure what you think it measures?

7 A problem with the CSEPT view
- Who is to say whether the ‘social consequences’ of test use are good or bad?
- According to CSEPT, validity is a subjective judgment.
- In my view, this makes the concept useless: “If you like the result the test gives you, you will consider it valid. If you don’t, you won’t.”
- That’s not how scientists think.

8 Borsboom et al. (2004)
- Borsboom et al. reject CSEPT’s view.
- “Validity… is a very basic concept and was correctly formulated, for instance, by Kelley (1927, p. 14) when he stated that a test is valid if it measures what it purports to measure.” (p. 1061)

9 Borsboom et al. (2004)
- Variations in what you are measuring cause variations in your measurements.
- E.g., variations across people in intelligence cause variations in their IQ scores.
- This is a causal model of validity, not a correlational one.
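
Borsboom et al.’s causal idea can be sketched as a tiny simulation. This is a minimal illustration under assumed values, not a model taken from the paper: the IQ-style scale (mean 100, SD 15), the error SD of 5, and the function name `observed_score` are all hypothetical. The same random errors are reused in both conditions, so the only thing that differs is the attribute itself; any change in scores is therefore caused by the change in the attribute.

```python
import random

def observed_score(latent_z, error):
    """Hypothetical causal measurement model (assumed, for illustration only):
    the attribute (in SD units) causes the score on an IQ-like scale
    (mean 100, SD 15); `error` is random measurement noise."""
    return 100 + 15 * latent_z + error

rng = random.Random(0)
errors = [rng.gauss(0, 5) for _ in range(1000)]  # assumed error SD of 5 points

# Intervene on the attribute only: same people, same errors,
# attribute set to -1 SD versus +1 SD.
low = [observed_score(-1.0, e) for e in errors]
high = [observed_score(+1.0, e) for e in errors]
mean_shift = sum(h - l for h, l in zip(high, low)) / len(errors)
print(f"{mean_shift:.1f}")  # 30.0: a 2 SD change in the attribute moves scores by 2 * 15 points
```

Nothing here is a correlation between two tests: the score changes because the attribute was changed, which is the sense of validity the slide describes.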

10 Borsboom et al. (2004)
- You don’t create a test and then do the analysis necessary to establish its validity.
- Rather, you begin by doing the theoretical work necessary to understand your subject, and so create a valid test in the first place.
- On this view, validity is not a big problem.

11 Borsboom et al. vs. CSEPT
- Who is right?
- I agree with Borsboom et al.’s arguments.
- Other psychologists may disagree.
- Each scientist has to make up his or her own mind on that question.

12 The CSEPT view
- CSEPT recognizes 3 types of evidence for test validity:
  - Content-related
  - Criterion-related
  - Construct-related
- Boundaries between the types are not clearly defined.
- Cronbach (1980): Construct is basic, while Content & Criterion are subtypes.

13 Parenthetical Point – Face Validity
- Face validity refers to the appearance that a test measures what it is intended to measure.
- Face validity has public-relations (P.R.) value: test-takers may be better motivated if the test appears to be a sensible way to measure what it measures.

14 Content validity: CSEPT
- Content-related evidence considers how well the test covers the conceptual domain being tested.
- Important in educational settings
- Like face validity, it is determined by logic rather than statistics.
- Typically assessed by expert judges

15 Content validity: CSEPT
- Construct-irrelevant variance
  - arises when irrelevant items are included, or when external factors such as illness influence test scores
  - requires a judgment about what is truly “external”
- Construct under-representation
  - Is the domain adequately covered, or are parts of it left out?

16 Content validity: Borsboom et al.
- Borsboom et al. would say that content validity is not something to be established after the test has been created.
- Rather, you build it into your test by having a good theory of what you are testing.

17 Criterion validity: CSEPT
- Criterion-related evidence tells us how well a test score corresponds to a particular criterion measure.
- Generally, we want the test score to tell us something about the criterion score.
- How well the test does this provides criterion-related evidence.

18 Criterion validity: CSEPT
- CSEPT: we could compare undergraduate GPAs to SAT scores to produce evidence for the validity of conclusions drawn on the basis of SAT scores.
- Two basic types:
  - Predictive
  - Concurrent

19 Criterion validity: CSEPT
- Predictive validity
  - Test scores are used to predict future performance: how good is the prediction?
  - E.g., the SAT is used to predict final undergraduate GPA.
  - SAT scores and GPA are moderately correlated.

20 Criterion validity: CSEPT
- Predictive validity
- Concurrent validity
  - Correlation between test scores and criterion when the two are measured at the same time
  - The test illuminates current performance rather than predicting future performance (e.g., why does a patient have a temperature? Why can’t a student do math?)

21 Criterion validity: Borsboom et al.
- “Criterion validity” involves a correlation of test scores with some criterion, such as GPA.
- That does not establish the test’s validity, only its utility.
- E.g., height and weight are correlated, but a test of height is not a test of what bathroom scales measure.

22 Criterion validity: Borsboom et al.
- The SAT is valid because it was developed on the sensible theory that “past academic achievement” is a good guide to “future academic achievement”.
- Validity is built into the test, not established after the test has been created.

23 Criterion validity
- Note: there is no point in developing a test if you already have a criterion, unless impracticality or expense makes the criterion difficult to use.
  - Is the criterion measure only available in the future?
  - Is the criterion too expensive to use?

24 Criterion validity
- Validity coefficient
  - Compute the correlation (r) between test score and criterion.
  - r = .30 or .40 would be considered typical.
  - r > .60 is rare.
  - Note: r varies between -1.0 and +1.0.
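
Computing a validity coefficient is just computing a Pearson correlation between paired test and criterion scores. The sketch below is a plain implementation for illustration; the five pairs of scores are invented toy data, not results from any study.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between paired observations x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical toy data: five people's test scores and criterion scores.
test_scores = [1, 2, 3, 4, 5]
criterion = [2, 1, 4, 3, 5]
r = pearson_r(test_scores, criterion)
print(f"validity coefficient r = {r:.2f}")  # validity coefficient r = 0.80
```

The toy r of .80 is far above the .30 to .40 that the slide calls typical; the data were chosen only so the arithmetic can be checked by hand (cross-products sum to 8, each sum of squares is 10).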

25 Criterion validity
- Validity coefficient
  - r² gives the proportion of variance in the criterion explained by the test score.
  - E.g., if r_xy = .30, then r² = .09, so 9% of the variability in Y “can be explained by variation in X”.
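
The slide’s arithmetic can be extended to the other values mentioned for validity coefficients (.40 typical, .60 rare). This sketch simply squares each r; it shows that even a “rare” r of .60 leaves most of the criterion variance unexplained.

```python
# r squared = proportion of criterion variance "explained" by the test score.
explained = {}
for r in (0.30, 0.40, 0.60):
    explained[r] = r ** 2
    print(f"r = {r:.2f} -> r^2 = {explained[r]:.2f} "
          f"({explained[r]:.0%} explained, {1 - explained[r]:.0%} unexplained)")
```

For r = .60 this prints 36% explained and 64% unexplained.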

26 Interpreting validity coefficients
- Watch out for:
  1. Changes in causal relationships
  2. What does the criterion mean? Is it valid and reliable?
  3. Is the subject population for the validity study appropriate?
  4. Sample size
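
Sample size matters because a validity coefficient from a small study is imprecise. A common way to show this (a standard textbook approximation, not a procedure from these slides) is an approximate 95% confidence interval for r based on Fisher’s z-transformation: z = atanh(r), with standard error 1/√(n - 3). The r of .30 and the sample sizes below are arbitrary examples.

```python
import math

def r_confidence_interval(r, n, z_crit=1.96):
    """Approximate CI for a Pearson r via Fisher's z-transformation.
    z_crit=1.96 gives roughly 95% coverage; requires n > 3."""
    z = math.atanh(r)
    se = 1 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)

intervals = {}
for n in (20, 100, 1000):
    lo, hi = r_confidence_interval(0.30, n)
    intervals[n] = (lo, hi)
    print(f"n = {n:4d}: r = .30, 95% CI [{lo:.2f}, {hi:.2f}]")
```

With n = 20 the interval runs from slightly below zero to about .66, so such a study cannot tell a useless test from an unusually good one; with n = 1000 the interval is narrow.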

27 Interpreting validity coefficients
- Watch out for:
  5. Criterion/predictor confusion
  6. Range restrictions
  7. Do validity study results generalize?
  8. Differential predictions
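
Range restriction (item 6) can be illustrated with a short simulation. All settings are hypothetical: standardized test scores, an assumed population test-criterion correlation of .50, and a selection rule under which only the top 30% of test scorers are admitted, so only they ever produce criterion data (for example, a GPA). The relationship between test and criterion is identical for everyone, yet the correlation computed in the admitted group comes out noticeably lower.

```python
import math
import random

def pearson_r(x, y):
    """Pearson correlation between paired observations x and y."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

rng = random.Random(42)
n = 20_000
true_r = 0.5  # assumed population test-criterion correlation
test = [rng.gauss(0, 1) for _ in range(n)]
criterion = [true_r * t + math.sqrt(1 - true_r ** 2) * rng.gauss(0, 1) for t in test]

full_r = pearson_r(test, criterion)

# Hypothetical selection: only the top 30% of test scorers are admitted,
# so criterion data exist only for them.
cutoff = sorted(test)[int(0.7 * n)]
admitted = [(t, c) for t, c in zip(test, criterion) if t >= cutoff]
restricted_r = pearson_r([t for t, _ in admitted], [c for _, c in admitted])

print(f"full range r = {full_r:.2f}, restricted range r = {restricted_r:.2f}")
```

Under these assumptions the full-range r lands near .50 while the restricted r falls to around .3, even though nothing about the test changed.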

28 Construct validity: CSEPT
- Problem: for many psychological characteristics of interest, there is no agreed-upon “universe” of content and no clear criterion.
- We cannot assess content or criterion validity for such characteristics.
- These characteristics involve constructs: something built by mental synthesis.

29 Construct validity: CSEPT
- Examples of constructs:
  - Intelligence
  - Love
  - Curiosity
  - Mental health
- CSEPT: We obtain evidence of validity by simultaneously defining the construct and developing instruments to measure it.
- This is ‘bootstrapping’.

30 Bootstrapping construct validity
- Assemble evidence about what a test “means” – in other words, about the characteristic it is testing.
  - CSEPT: this process is never finished.

31 Bootstrapping construct validity
- Assemble evidence about what a test “means” – in other words, about the characteristic it is testing.
  - Borsboom: this is part of the process of creating the test in the first place, not something done after the fact.

32 Bootstrapping construct validity
- Assemble evidence
- Show relationships between a test and other tests
  - CSEPT: none of the other tests is a criterion, but the web of relationships tells us what the test means.

33 Bootstrapping construct validity
- Assemble evidence
- Show relationships between a test and other tests
  - Borsboom: these relationships do not tell us what a test score means (e.g., age is correlated with annual income, but a measure of age is not a measure of annual income).

34 Bootstrapping construct validity
- Assemble evidence
- Show relationships
- Each new relationship adds meaning to the test
  - CSEPT: a test’s meaning is gradually clarified over time.

35 Bootstrapping construct validity
- Assemble evidence
- Show relationships
- Each new relationship adds meaning to the test
  - Borsboom would say: why all the mystery? The meaning of many tests (e.g., WAIS, academic exams, Piaget’s tests) is clear right from the start.

36 Construct validity
- Example from the text: Rubin’s work on Love.
- Rubin collected a set of items for a Love scale.
- He read poetry and novels; he asked people for definitions.
- He created a scale of Love and one of Liking.

37 CSEPT: Construct validity
- Rubin gave the scales to many subjects and factor-analyzed the results.
- Love integrates Attachment, Caring, & Intimacy.
- Liking integrates Adjustment, Maturity, Good Judgment, and Intelligence.
- The two are independent: you can love someone you don’t like (as songwriters know).

38 Rubin’s study of Love
- Borsboom et al.: when creating a test, the researcher specifies “the processes that convey the effect of the measured attribute on the test score.”
- Rubin laboriously built a theory about what the construct Love means.
- Rubin’s process (reading poetry and novels, asking people for definitions) was a good process, so his test has construct validity.

39 Campbell & Fiske (1959)
- Two types of construct-related evidence
  - Convergent evidence
    - When a test correlates well with other tests believed to measure the same construct

40 Campbell & Fiske (1959)
- Two types of construct-related evidence
  - Convergent evidence
  - Discriminant evidence
    - When a test does not correlate with other tests believed to measure some other construct

41 Convergent validity
- Example: Health Index
  - Scores are correlated with age, number of symptoms, chronic medical conditions, and physiological measures.
  - Treatments designed to improve health should increase Health Index scores. They do.

42 Discriminant validity
- Low correlations between the new test and tests believed to tap unrelated constructs
- Evidence that the new test measures something unique
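
Convergent and discriminant evidence can both be read off correlations between tests. The simulation below is purely hypothetical: a new health index and an established health test both reflect the same simulated latent construct, while a third test reflects an unrelated construct; the error weight of 0.6 and all variable names are assumptions. Campbell & Fiske’s full approach (the multitrait-multimethod matrix) is more elaborate than this two-correlation sketch.

```python
import math
import random

def pearson_r(x, y):
    """Pearson correlation between paired observations x and y."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

rng = random.Random(1)
n = 5000
health = [rng.gauss(0, 1) for _ in range(n)]     # simulated latent construct
unrelated = [rng.gauss(0, 1) for _ in range(n)]  # hypothetical unrelated construct

# Each observed test = its construct + assumed measurement error.
new_index = [h + 0.6 * rng.gauss(0, 1) for h in health]
established_health = [h + 0.6 * rng.gauss(0, 1) for h in health]
unrelated_test = [u + 0.6 * rng.gauss(0, 1) for u in unrelated]

convergent = pearson_r(new_index, established_health)  # expected to be high
discriminant = pearson_r(new_index, unrelated_test)    # expected to be near zero
print(f"convergent r = {convergent:.2f}, discriminant r = {discriminant:.2f}")
```

The pattern to look for is the contrast: a high correlation with the same-construct test alongside a near-zero correlation with the unrelated test.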

43 Validity & Reliability: CSEPT
- CSEPT: there is no point in trying to establish the validity of an unreliable test.
- It’s possible to have a reliable test that is not valid (has no meaning).
- It is logically impossible to produce evidence of validity for an unreliable test.
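
One way to see why an unreliable test cannot yield validity evidence is the classical test theory ceiling on a validity coefficient: the correlation between test X and criterion Y cannot exceed √(r_xx · r_yy), where r_xx and r_yy are the reliabilities of the test and the criterion. This bound comes from classical test theory rather than from the slides; the function name and reliability values below are illustrative choices.

```python
import math

def max_validity(test_reliability, criterion_reliability=1.0):
    """Classical test theory upper bound on a validity coefficient:
    r_xy <= sqrt(r_xx * r_yy). Reliabilities must lie in [0, 1]."""
    if not (0 <= test_reliability <= 1 and 0 <= criterion_reliability <= 1):
        raise ValueError("reliabilities must be between 0 and 1")
    return math.sqrt(test_reliability * criterion_reliability)

for rxx in (0.0, 0.25, 0.49, 0.81):
    print(f"test reliability {rxx:.2f} -> validity coefficient at most {max_validity(rxx):.2f}")
```

A test with zero reliability has a ceiling of zero, which matches the slide’s claim that validity evidence is impossible for an unreliable test; an imperfect criterion lowers the ceiling further.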

44 Validity & Reliability: Borsboom
- Borsboom et al.: what does it mean to say that a test is reliable but not valid?
- What is it a test of?
- It isn’t a test at all, just a collection of items.

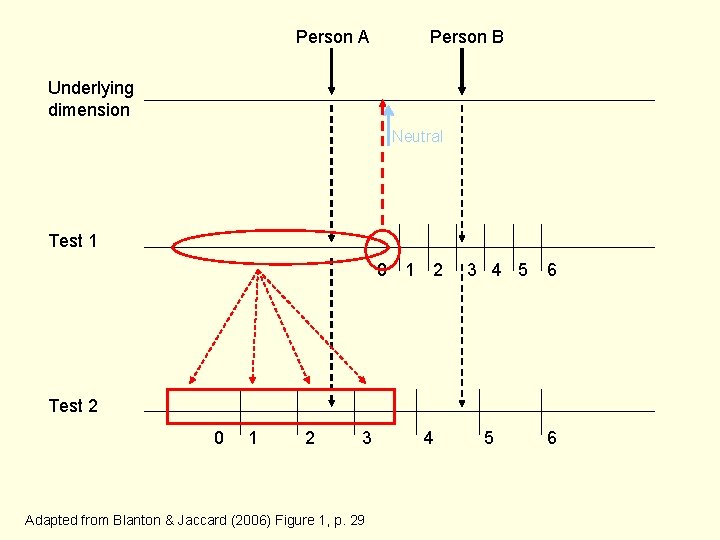

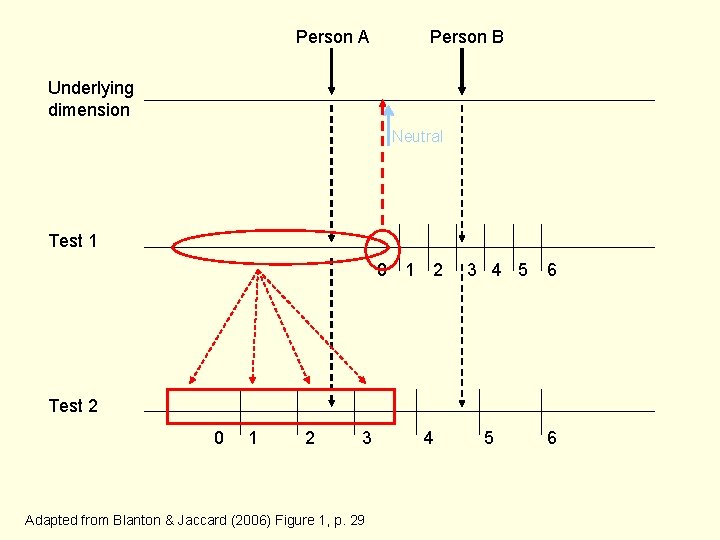

45 Blanton & Jaccard – arbitrary metrics
- We observe a behavior in order to learn about the underlying psychological characteristic.
- A person’s test score represents their standing on that underlying dimension.
- Such scores form an arbitrary metric.
- That is, we do not know how the observed scores are related to the true scores on the underlying dimension.

46 [Figure: Person A and Person B located on an underlying dimension with a neutral point, and their corresponding scores on Test 1 and Test 2, each scored 0 to 6. Adapted from Blanton & Jaccard (2006), Figure 1, p. 29.]

47 Arbitrary metrics – the IAT
- The Implicit Association Test (IAT) is claimed to diagnose implicit attitudinal preferences, including racist attitudes.
- IAT authors say you may have prejudices you don’t know you have.
- Are these claims true?

48 Arbitrary metrics – the IAT
- Task: categorize stimuli using 2 pairs of categories.
- 2 buttons to press, 2 assignments of categories to buttons, used in sequence

49 Arbitrary metrics – the IAT
- Assignment pattern A
  - Button 1: press if the stimulus refers to the category White or the category Pleasant
  - Button 2: press if the stimulus refers to the category Black or the category Unpleasant
- Assignment pattern B
  - Button 1: press if the stimulus refers to the category White or the category Unpleasant
  - Button 2: press if the stimulus refers to the category Black or the category Pleasant

50 Arbitrary metrics – the IAT
- IAT authors claim that if responses are faster under Pattern A than under Pattern B, this indicates a “preference” for Whites over Blacks, in other words, a racist attitude.
- IAT authors also give test-takers feedback about how strong their preferences are, based on how much faster their responses are under Pattern A than under Pattern B.
- This is inappropriate.
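
The quantity behind this feedback is, at its simplest, a difference in response latencies between the two assignment patterns. The sketch below computes a raw mean difference from invented reaction times (in milliseconds) for one hypothetical test-taker; it is a simplification for illustration, not the standard IAT scoring procedure.

```python
# Invented reaction times (ms) for one hypothetical test-taker.
pattern_a_rts = [650, 700, 620, 680]  # Pattern A: White+Pleasant / Black+Unpleasant share buttons
pattern_b_rts = [720, 760, 700, 740]  # Pattern B: White+Unpleasant / Black+Pleasant share buttons

def mean(values):
    return sum(values) / len(values)

# Positive difference = responses were faster under Pattern A than under Pattern B.
difference_ms = mean(pattern_b_rts) - mean(pattern_a_rts)
print(f"Pattern B - Pattern A = {difference_ms:.1f} ms")  # Pattern B - Pattern A = 67.5 ms
```

Under the IAT authors’ interpretation this positive value would be read as a preference for Whites over Blacks; the number itself is only a time difference.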

51 Arbitrary metrics – the IAT
- Blanton & Jaccard:
  - The IAT does not tell us about racist attitudes.
  - IAT authors take a dimension that is non-arbitrary when used by physicists (time) and use it in an arbitrary way in psychology.

52 Arbitrary metrics – the IAT
- The function relating the response dimension (time) to the underlying dimension (attitudes) is unknown.
- Zero on the (Pattern A – Pattern B) difference may not be zero on the underlying attitude preference dimension.
- There are alternative models of how that (Pattern A – Pattern B) difference could arise.
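
Why zero on the latency difference need not mean zero preference can be shown with a deliberately simple, hypothetical link function: difference = k × attitude + c, where k (milliseconds per unit of attitude) and c (a non-attitudinal offset) are unknown. These linear forms, the model names, and the parameter values are assumptions for illustration only, not models proposed by Blanton & Jaccard.

```python
def predicted_difference(attitude, k, c):
    """Hypothetical link: latency difference (ms) = k * attitude + c.
    k scales attitude into milliseconds; c is any non-attitudinal offset."""
    return k * attitude + c

def implied_attitude(observed_ms, k, c):
    """Invert the hypothetical link to recover the attitude a model implies."""
    return (observed_ms - c) / k

observed_ms = 60.0
models = {
    "no offset": (60.0, 0.0),
    "50 ms offset": (60.0, 50.0),
    "weak link + offset": (20.0, 50.0),
}
implied = {name: implied_attitude(observed_ms, k, c) for name, (k, c) in models.items()}
for name, value in implied.items():
    print(f"{name:>18}: implied attitude = {value:.2f}")

# A truly neutral person (attitude 0) still shows a 50 ms difference under the offset model.
print(predicted_difference(0.0, 60.0, 50.0))  # 50.0
```

The same observed 60 ms implies three different attitudes (1.00, 0.17, 0.50) depending on which unknown link is assumed, which is the practical meaning of an arbitrary metric.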

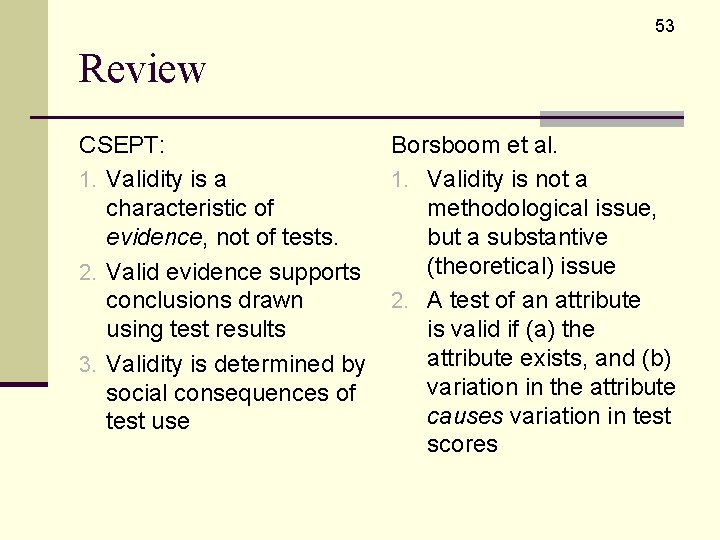

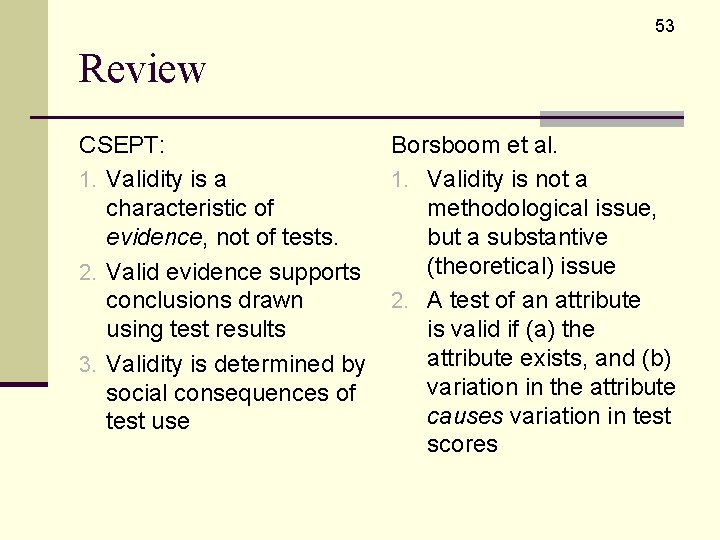

53 Review
CSEPT:
1. Validity is a characteristic of evidence, not of tests.
2. Valid evidence supports conclusions drawn using test results.
3. Validity is determined by the social consequences of test use.
Borsboom et al.:
1. Validity is not a methodological issue, but a substantive (theoretical) issue.
2. A test of an attribute is valid if (a) the attribute exists, and (b) variation in the attribute causes variation in test scores.

54 Review
CSEPT:
4. Validity can be established in three ways, though the boundaries between them are fuzzy:
   A. Content-related evidence
   B. Criterion-related evidence
   C. Construct-related evidence
Borsboom et al.:
3. It’s all the same validity: a test is valid if it measures what you think it measures.
4. Validity is not mysterious.

55 Review
CSEPT:
5. Content-related evidence: do the test items represent the whole domain of interest?
6. Criterion-related evidence: do test scores relate to a criterion either now (concurrent) or in the future (predictive)?
Borsboom et al.:
5. These questions are properly part of the process of creating a test.

56 Review
CSEPT:
7. Construct-related evidence is obtained when we develop a psychological construct and the way to measure it at the same time.
8. A test can be reliable but not valid. A test cannot be valid if it is not reliable.
Borsboom et al.:
6. A test must be valid for a reliability estimate to have any meaning.

57 Review
- Blanton & Jaccard (2006) warn against over-interpreting scores that are based on an arbitrary metric.
- For an arbitrary metric, we have no idea how the test scores are actually related to the underlying dimension.