Using Rubrics for Student Assessment
Peggy Porter and Janis Innis

Workshop Goals
• Considering the various rubric options
• Creating a customized rubric
• Evaluating a rubric for effective assessment
• Calibrating rubric scoring for reliable assessment data

Rubric Overview
• Rubrics provide the criteria for classifying products or behaviors into categories that vary along a continuum.
  – They can be used to classify virtually any product or behavior, such as essays, research reports, portfolios, works of art, recitals, oral presentations, performances, and group activities.
  – Judgments can be self-assessments by students, or they can be made by others, such as faculty, other students, supervisors, or external reviewers.
  – Rubrics can be used to provide formative feedback to students, to grade students, and/or to assess programs.
• There are two major types of scoring rubrics:
  – Holistic scoring: one global, holistic score for a product or behavior
  – Analytic rubrics: separate, holistic scoring of specified characteristics of a product or behavior

Rubric Options
• Considering the various rubric options
  – Nature of the rubric
    • Assessment of student
      – Knowledge
      – Performance
    • Assigning grades
      – Feedback for students
    • Assessment of curriculum
      – Feedback for faculty

Rubric Options
• Considering the various rubric options (continued):
  – Nature of the rubric
    • Aligned with the course syllabus and student learning outcomes
      – Designed to assess what is being taught
    • User friendly
      – Can faculty scoring be calibrated to achieve reliable data?
      – Can students benefit from the rubric’s feedback?
    • Holistic
      – Summative
    • Analytic
      – Formative feedback
        » Quantitative
        » Qualitative

Rubric Options
• What do you want to assess with your rubric?
  – Student
    • Knowledge: recall of facts, terms, formulas
    • Skills: ability to use acquired knowledge
    • Performance: ability to produce a performance-based product
    • Student Learning Outcomes
    • Learning Objectives

Creating Rubrics
• What do you want to assess with your rubric?
  – Student
    • Knowledge: recall of facts, terms, formulas. For this purpose, a Scantron test may be more suitable than a rubric.
    • Performance: ability to produce a performance-based product. An analytic rubric can generate a "profile" of specific student strengths and weaknesses.

Creating Rubrics – Assigning Grades
• Effective assessment and feedback for students
  – What are the outcomes and supporting objectives you want to assess?
  – Have the students been given the opportunity to practice these outcomes and objectives in class under the instructor’s supervision?
  – Are these outcomes and objectives present in the course syllabus?

Creating Rubrics – Assigning Grades
• Effective assessment and feedback for students
  – Look for meaningful assessment categories, wording, and terminology that not only point out weaknesses and deficiencies but also give students the information they need to understand why they received their grade and how to improve their performance in the future.
  – Avoid including in your rubric any outcomes and objectives that have not been included in the syllabus or taught in the class.

Creating Rubrics – Assessing course curriculum
• Effective assessment and feedback for faculty
  – Is the rubric instructionally aligned with the course curriculum?
  – Does the rubric adequately address the student learning outcomes and supporting objectives assigned to that course?
  – Does the rubric provide enough information to allow faculty to pinpoint problems and weaknesses in the curriculum that will need to be adjusted in the future?

Creating Rubrics – Assessing course curriculum
• Effective assessment and feedback for faculty
  – For curriculum purposes, a rubric should assess a course’s student learning outcomes and the supporting learning objectives, preferably one student learning outcome and its objectives per rubric instrument.
  – A holistic rubric should be given first consideration, as it is less time consuming and is not designed to provide detailed feedback to students.

Creating Rubrics – Course curriculum
• Syllabus and student learning outcomes are aligned with actual classroom student performance products.
  – For rubric-produced data to be reliable, the syllabus and its student learning outcomes should be aligned with what is actually being taught in the classroom.
  – Are full-time faculty and adjuncts teaching the same material and using the same assignments?
  – Are the textbooks and syllabi aligned with the curriculum?

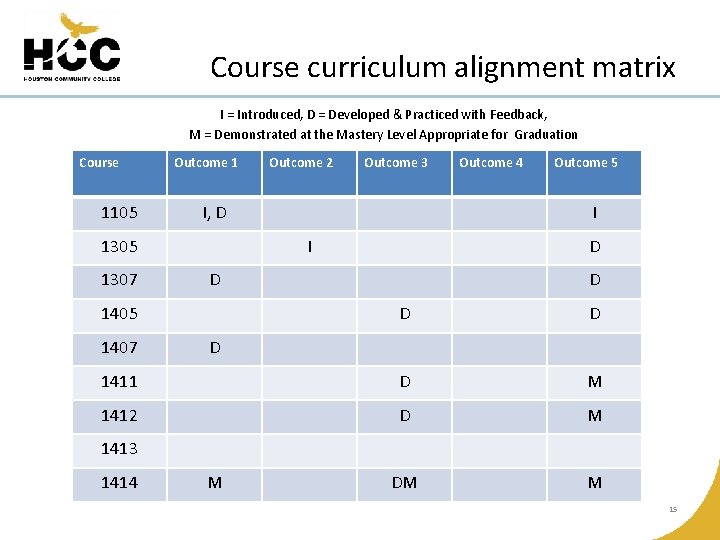

Course curriculum alignment matrix
Key: I = Introduced; D = Developed & Practiced with Feedback; M = Demonstrated at the Mastery Level Appropriate for Graduation
[Matrix: courses 1105, 1305, 1307, 1405, 1407, 1411, 1412, 1413, and 1414 mapped against Outcomes 1 through 5 using the I/D/M key]

Rubric Options – Course curriculum
• Syllabus and student learning outcomes are aligned with actual student performance
  – If there are too many variables among the curriculum, the textbooks, the syllabi, the full-time faculty, and the adjuncts, rubric-produced data will be unreliable.
  – It is critical to ensure alignment of course and teaching variables, as well as calibration of grading and scoring.

Rubric Options – Course curriculum
• User friendly
  – Can faculty scoring be calibrated to achieve reliable data?
    » Is wide variation in grading unusual?
  – Can students benefit from the rubric’s feedback?
    » Should the learning process continue throughout the testing or assessing process?

Rubric Options – Course curriculum
• User friendly
  – Can faculty scoring be calibrated to achieve reliable data?
    » Faculty and adjuncts can be quickly trained to grade or score student work with standardized results.
  – Can students benefit from the rubric’s feedback?
    » Clear and easily understandable wording in the rubric provides students with helpful feedback that encourages improved performance.

Creating Rubrics – Course curriculum
• Holistic
  – Summative
  – A score reported using a holistic rubric focuses on the overall quality, proficiency, or understanding of the specific content and skills. Holistic rubrics can result in a less time-consuming scoring process than analytic rubrics.

Creating Rubrics – Course curriculum
• Analytic
  – Student feedback
    » Quantitative
    » Qualitative
  – An analytic rubric articulates levels of performance for each criterion so the instructor can assess student performance on each. It provides detailed feedback for students on their strengths and weaknesses. Most important, it continues the learning process.

Creating Rubrics – Course curriculum
• Analytic
  – Student feedback
    » Quantitative
    » Qualitative
  – The various levels of student performance can be defined using either quantitative (i.e., numerical) or qualitative (i.e., descriptive) labels. In some instances, both quantitative and qualitative labels can be utilized. If a rubric contains four levels of proficiency or understanding on a continuum, quantitative labels would typically range from "1" to "4." Qualitative labels allow much more flexibility and creativity. A common type of qualitative scale might include these labels: master, expert, apprentice, and novice. Nearly any type of qualitative scale will suffice, provided it "fits" with the task.

Rubrics support data-driven decision making
• Data-driven decision making (DDDM):
  – Uses data on function, quantity, and quality of inputs
  – Examines how students learn in order to suggest educational solutions
  – Is based on the assumption that scientific methods can effectively evaluate educational programs and instructional methods
• Rubrics provide significant data to support effective DDDM.

Creating Rubrics
• Creating a customized rubric
  – Step 1: Re-examine the learning objectives to be addressed by the task. This allows you to match your scoring guide with your objectives and actual instruction.
  – Step 2: Identify specific observable attributes that you want to see (as well as those you don’t want to see) your students demonstrate in their product, process, or performance. Specify the characteristics, skills, or behaviors that you will be looking for, as well as common mistakes you do not want to see.
  – Step 3: Brainstorm characteristics that describe each attribute. Identify ways to describe above average, average, and below average performance for each observable attribute identified in Step 2.

Creating Rubrics
• Creating a customized rubric
  – Step 4a: For holistic rubrics, write thorough narrative descriptions for excellent work and poor work, incorporating each attribute into the description. Describe the highest and lowest levels of performance, combining the descriptors for all attributes.
  – Step 4b: For analytic rubrics, write thorough narrative descriptions for excellent work and poor work for each individual attribute. Describe the highest and lowest levels of performance using the descriptors for each attribute separately.

Creating Rubrics
• Creating a customized rubric
  – Step 5a: For holistic rubrics, complete the rubric by describing the other levels on the continuum that ranges from excellent to poor work for the collective attributes. Write descriptions for all intermediate levels of performance.
  – Step 5b: For analytic rubrics, complete the rubric by describing the other levels on the continuum that ranges from excellent to poor work for each attribute. Write descriptions for all intermediate levels of performance for each attribute separately.

Creating Rubrics
• Creating a customized rubric
  – Step 6: Collect samples of student work that exemplify each level. These will help you score in the future by serving as benchmarks.
  – Step 7: Revise the rubric as necessary. Be prepared to reflect on the effectiveness of the rubric and revise it prior to its next implementation.

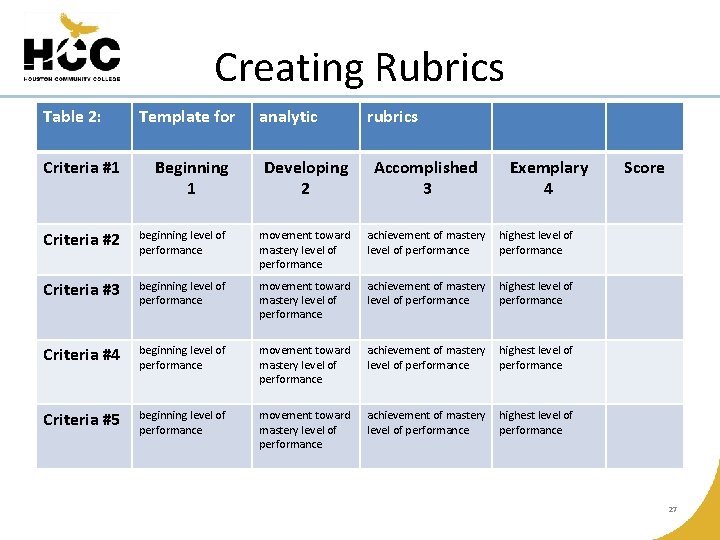

Creating Rubrics
Table 2: Template for analytic rubrics

| | Beginning 1 | Developing 2 | Accomplished 3 | Exemplary 4 | Score |
|---|---|---|---|---|---|
| Criteria #1 | beginning level of performance | movement toward mastery level of performance | achievement of mastery level of performance | highest level of performance | |
| Criteria #2 | beginning level of performance | movement toward mastery level of performance | achievement of mastery level of performance | highest level of performance | |
| Criteria #3 | beginning level of performance | movement toward mastery level of performance | achievement of mastery level of performance | highest level of performance | |
| Criteria #4 | beginning level of performance | movement toward mastery level of performance | achievement of mastery level of performance | highest level of performance | |
| Criteria #5 | beginning level of performance | movement toward mastery level of performance | achievement of mastery level of performance | highest level of performance | |
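One way to see how an analytic rubric yields a per-criterion "profile" is to hold the scores in a small data structure. The sketch below is illustrative only: it uses the four level labels from the analytic template (Beginning, Developing, Accomplished, Exemplary), while the class name, method names, and criterion names are hypothetical and not part of the workshop materials.

```python
from dataclasses import dataclass

# Level labels come from the analytic rubric template (levels 1-4).
LEVELS = {1: "Beginning", 2: "Developing", 3: "Accomplished", 4: "Exemplary"}


@dataclass
class AnalyticScore:
    """Scores for one student product: one level (1-4) per criterion."""
    levels: dict[str, int]

    def __post_init__(self) -> None:
        # Reject any score that is not one of the four defined levels.
        for criterion, level in self.levels.items():
            if level not in LEVELS:
                raise ValueError(f"{criterion}: level {level} is not in 1-4")

    def total(self) -> int:
        """Sum of the per-criterion levels (the 'Score' column)."""
        return sum(self.levels.values())

    def profile(self) -> dict[str, str]:
        """Per-criterion labels: the profile of strengths and weaknesses."""
        return {c: LEVELS[v] for c, v in self.levels.items()}

    def weakest(self) -> list[str]:
        """Criteria scored at the lowest level this product received."""
        low = min(self.levels.values())
        return [c for c, v in self.levels.items() if v == low]


# Hypothetical criteria for an essay assignment.
essay = AnalyticScore({"Organization": 3, "Evidence": 2, "Mechanics": 4})
print(essay.total())    # 9
print(essay.profile())
print(essay.weakest())  # ['Evidence']
```

Keeping the per-criterion levels, instead of storing only the total, is what preserves the formative feedback an analytic rubric is meant to give.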

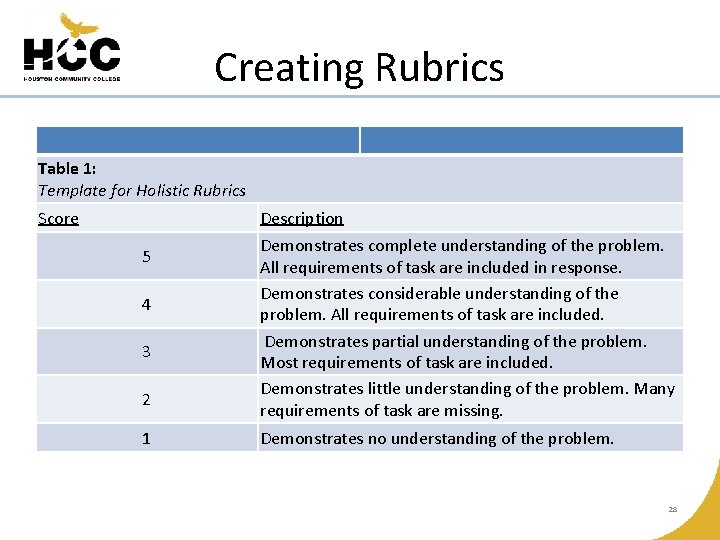

Creating Rubrics
Table 1: Template for holistic rubrics

| Score | Description |
|---|---|
| 5 | Demonstrates complete understanding of the problem. All requirements of task are included in response. |
| 4 | Demonstrates considerable understanding of the problem. All requirements of task are included. |
| 3 | Demonstrates partial understanding of the problem. Most requirements of task are included. |
| 2 | Demonstrates little understanding of the problem. Many requirements of task are missing. |
| 1 | Demonstrates no understanding of the problem. |

Creating Rubrics
Assigning scores to the rubric
• If a rubric contains four levels of proficiency or understanding on a continuum, quantitative labels would typically range from "1" to "4." When using qualitative labels, teachers have much more flexibility and can be more creative. A common type of qualitative scale might include the following labels: master, expert, apprentice, and novice. Nearly any type of qualitative scale will suffice, provided it "fits" with the task.
• One potentially frustrating aspect of scoring student work with rubrics is the issue of somehow converting rubric scores to "grades." It is not a good idea to think of rubrics in terms of percentages (Trice, 2000). For example, if a rubric has six levels (or "points"), a score of 3 should not be equated to 50% (an "F" in most letter grading systems). Converting rubric scores to grades or categories is more a process of logic than a mathematical one.
• Trice (2000) suggests that in a rubric scoring system, there are typically more scores in the average and above average categories (i.e., equating to grades of "C" or better) than in the below average categories. For instance, if a rubric consisted of nine score categories, the equivalent grades and categories might look like this:

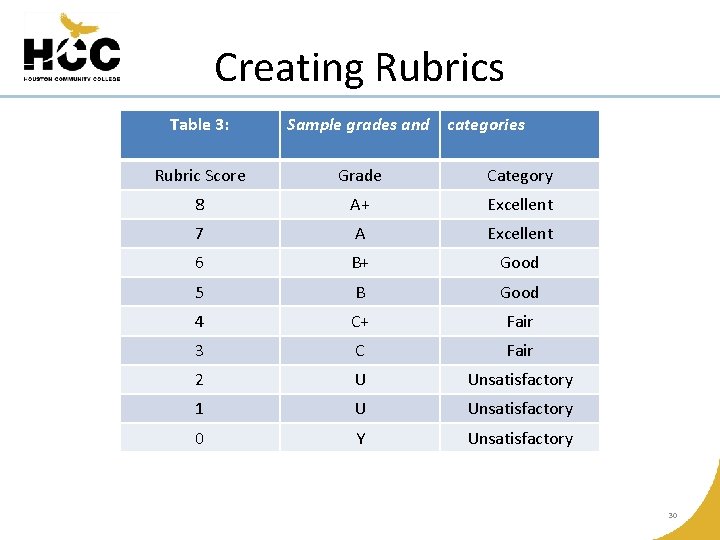

Creating Rubrics
Table 3: Sample grades and categories

| Rubric Score | Grade | Category |
|---|---|---|
| 8 | A+ | Excellent |
| 7 | A | Excellent |
| 6 | B+ | Good |
| 5 | B | Good |
| 4 | C+ | Fair |
| 3 | C | Fair |
| 2 | U | Unsatisfactory |
| 1 | U | Unsatisfactory |
| 0 | U | Unsatisfactory |
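The point that conversion is "more a process of logic than a mathematical one" can be illustrated by treating the sample grades in Table 3 as a lookup table instead of a percentage formula. This is a minimal sketch under stated assumptions: the function names are hypothetical, and a score of 0 is assumed to carry the same "U" grade as the other Unsatisfactory rows.

```python
# Lookup table based on Table 3 (nine score categories, 0-8).
# Assumption: score 0 shares the "U" grade of the other Unsatisfactory rows.
GRADE_TABLE = {
    8: ("A+", "Excellent"),
    7: ("A", "Excellent"),
    6: ("B+", "Good"),
    5: ("B", "Good"),
    4: ("C+", "Fair"),
    3: ("C", "Fair"),
    2: ("U", "Unsatisfactory"),
    1: ("U", "Unsatisfactory"),
    0: ("U", "Unsatisfactory"),
}


def to_grade(score: int) -> tuple[str, str]:
    """Return (grade, category) for a rubric score by table lookup."""
    if score not in GRADE_TABLE:
        raise ValueError(f"rubric score {score} is outside 0-8")
    return GRADE_TABLE[score]


def naive_percentage(score: int, max_score: int = 8) -> float:
    """The percentage reading that the text advises against."""
    return 100 * score / max_score


# A mid-scale score is a passing grade in the table,
# but would look like a failing 50% if read as a percentage.
print(to_grade(4))          # ('C+', 'Fair')
print(naive_percentage(4))  # 50.0
```

Six of the nine score categories map to "C" or better, which matches the observation attributed to Trice (2000) that most rubric scores sit in the average and above average range.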

Hands-on Practice
• Create a holistic or analytic rubric for a course in your discipline.
• Use the blank rubric forms in your handbook, or go to https://www.rcampus.com/ or http://rubistar.4teachers.org/

Evaluating a Rubric
• Evaluating a rubric for effective assessment
  – A Rubric for Rubrics: A Tool for Assessing the Quality and Use of Rubrics in Education
    • Downloaded January 22, 2010 from http://webpages.charter.net/bbmullinix/Rubrics/A%20Rubric%20for%20Rubrics.htm
    • Dr. Bonnie B. Mullinix © Monmouth University December 2003

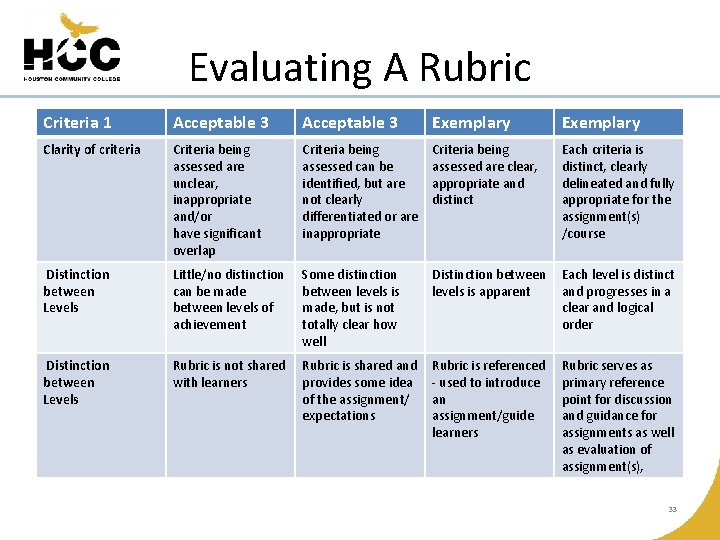

Evaluating a Rubric

| Criteria | 1 | Acceptable | 3 | Exemplary |
|---|---|---|---|---|
| Clarity of criteria | Criteria being assessed are unclear, inappropriate and/or have significant overlap | Criteria being assessed can be identified, but are not clearly differentiated or are inappropriate | Criteria being assessed are clear, appropriate and distinct | Each criterion is distinct, clearly delineated and fully appropriate for the assignment(s)/course |
| Distinction between Levels | Little/no distinction can be made between levels of achievement | Some distinction between levels is made, but is not totally clear how well | Distinction between levels is apparent | Each level is distinct and progresses in a clear and logical order |
| Clarity of Expectations/Guidance to Learners | Rubric is not shared with learners | Rubric is shared and provides some idea of the assignment/expectations | Rubric is referenced: used to introduce an assignment/guide learners | Rubric serves as primary reference point for discussion and guidance for assignments as well as evaluation of assignment(s) |

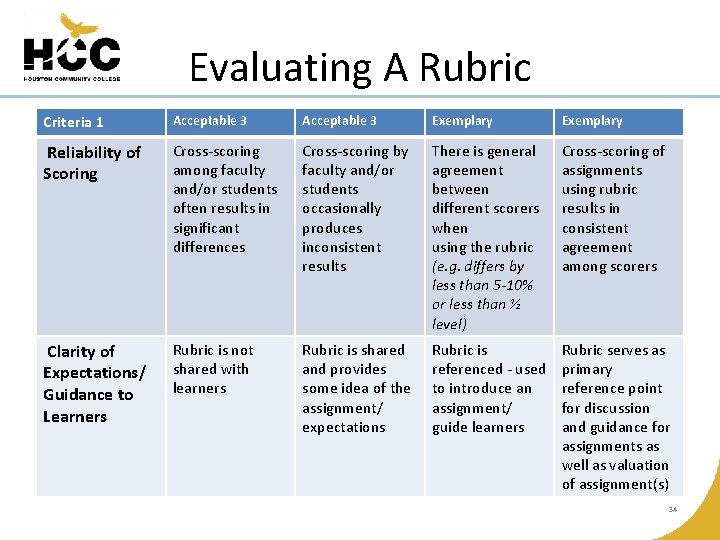

Evaluating a Rubric

| Criteria | 1 | Acceptable | 3 | Exemplary |
|---|---|---|---|---|
| Reliability of Scoring | Cross-scoring among faculty and/or students often results in significant differences | Cross-scoring by faculty and/or students occasionally produces inconsistent results | There is general agreement between different scorers when using the rubric (e.g., differs by less than 5-10% or less than ½ level) | Cross-scoring of assignments using rubric results in consistent agreement among scorers |
| Clarity of Expectations/Guidance to Learners | Rubric is not shared with learners | Rubric is shared and provides some idea of the assignment/expectations | Rubric is referenced: used to introduce an assignment/guide learners | Rubric serves as primary reference point for discussion and guidance for assignments as well as evaluation of assignment(s) |

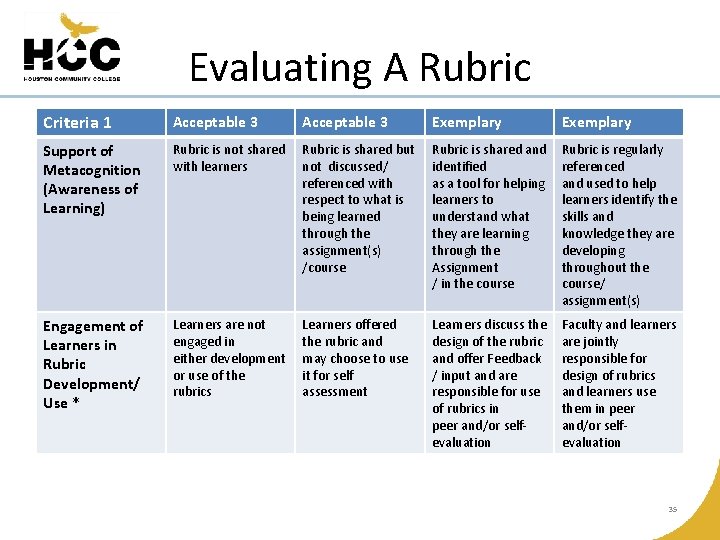

Evaluating a Rubric

| Criteria | 1 | Acceptable | 3 | Exemplary |
|---|---|---|---|---|
| Support of Metacognition (Awareness of Learning) | Rubric is not shared with learners | Rubric is shared but not discussed/referenced with respect to what is being learned through the assignment(s)/course | Rubric is shared and identified as a tool for helping learners to understand what they are learning through the assignment/in the course | Rubric is regularly referenced and used to help learners identify the skills and knowledge they are developing throughout the course/assignment(s) |
| Engagement of Learners in Rubric Development/Use * | Learners are not engaged in either development or use of the rubrics | Learners are offered the rubric and may choose to use it for self-assessment | Learners discuss the design of the rubric, offer feedback/input, and are responsible for use of rubrics in peer and/or self-evaluation | Faculty and learners are jointly responsible for design of rubrics, and learners use them in peer and/or self-evaluation |

Calibrating a Rubric
• Calibrating rubric scoring for reliable assessment data
  – The validity of your rubric and assessment depends in part on the validation of the rubric scores.
  – Therefore, calibration is an essential process.
  – Calibration training for a group of instructors who can then score rubrics and produce valid data is critical.
  – The process for calibration will be determined by each discipline or program and may involve paired scoring or open table scoring. Rubric scores are determined by consensus, and part of the calibration expert's role is to resolve discrepancies in scoring.

Calibrating Rubric Scoring
• Each participant should have a copy of the task, samples of student work, extra rubrics, and scoring sheets.
• Explain procedure (as below).
• Ground rules:
  – Only one person speaks at a time
  – Wait to be called on
  – No side conversations, please
  – Respect each other’s comments
• Designate a recorder to note any issues and to record discussions and the initial and final scores for each box.

Calibrating Rubric Scoring
• Examine task for standards, instructor and student directions, prompt, and rubric.
• Discuss and record.
• Read first sample of student work only.
• Score individually, marking rubric.
• When determining final score, consider the preponderance of evidence to determine where a student’s work falls.
• Check the teacher directions page for any accommodations that might have been made for students with Letters of Accommodation.
• When everyone has finished, ask for a show of hands for an overall score of exceeds, meets, etc.

Calibrating Rubric Scoring
• Work box by box, asking for an overall initial score.
• Then ask volunteers to support the evaluation, citing evidence from the student work.
• Generally, start with someone who is most supportive of the student work and work towards the “below” categories.
• This discussion takes quite a bit of time, depending upon the task and the sample of student work.
• At the end of the discussion of each box, take a revote to determine the final score for that box.
• When all boxes have been discussed, ask for a final overall score.
• Use the same procedure for sample two.

Calibrating Rubric Scoring
• OR (depending upon time and level of expertise) pair score sample two and discuss as needed after an overall vote.
• Read Central scoring calibration notes for comparison/validity purposes.
• Pair score tasks, using score sheets.
• The second scorer should not look at the first evaluator’s score until his/her own evaluation is completed.

Calibrating Rubric Scoring
• At that point, if both scores agree, record the score on the cover of the task. (Both scorers should also fill out a score sheet.)
• If there is a discrepancy, such as a 3-4 or a 2-3, then a discussion takes place to determine the final score.
• If agreement is reached, one of the scorers changes his/her score sheet to agree with the other score.
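The paired-scoring rule above (record matching scores, discuss discrepancies) can be sketched as a small report over a batch of tasks. This is an illustration, not part of the workshop procedure: the function name and task identifiers are hypothetical, and the sketch assumes that any disagreement, not only an adjacent-level one such as 3-4 or 2-3, goes to discussion.

```python
def paired_scoring_report(
    pairs: dict[str, tuple[int, int]],
) -> tuple[dict[str, int], list[str], float]:
    """Split paired scores into agreed scores and tasks needing discussion.

    pairs maps a task identifier to (first scorer's score, second scorer's score).
    Returns (agreed scores, sorted task ids to discuss, exact agreement rate).
    """
    # Matching scores can be recorded on the cover of the task as-is.
    agreed = {task: a for task, (a, b) in pairs.items() if a == b}
    # Assumption: every mismatch is resolved by discussion.
    to_discuss = sorted(task for task, (a, b) in pairs.items() if a != b)
    rate = len(agreed) / len(pairs) if pairs else 0.0
    return agreed, to_discuss, rate


# Hypothetical batch of four paired-scored tasks.
scores = {
    "task-01": (3, 3),
    "task-02": (3, 4),
    "task-03": (2, 2),
    "task-04": (2, 3),
}
agreed, to_discuss, rate = paired_scoring_report(scores)
print(agreed)      # {'task-01': 3, 'task-03': 2}
print(to_discuss)  # ['task-02', 'task-04']
print(rate)        # 0.5
```

Tracking the exact agreement rate across calibration sessions gives a simple indication of whether scorers are converging, although the source does not prescribe a particular agreement statistic.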

Glossary of Terms
• Analytic Rubric: An analytic rubric articulates levels of performance for each criterion so the teacher can assess student performance on each criterion.
• Assessment: The systemic process of gathering and analyzing information about student learning to ensure the program and the students are achieving the desired results in relation to the student learning outcomes.
• Assessment Plan: A document that states the procedures and processes used to gather and interpret student learning outcome data.

Glossary of Terms
• Authentic Task: An assignment given to students designed to assess their ability to apply standards-driven knowledge and skills to real-world challenges. A task is considered authentic when 1) students are asked to construct their own responses rather than to select from ones presented; and 2) the task replicates challenges faced in the real world. Good performance on the task should demonstrate, or partly demonstrate, successful completion of one or more standards. The term task is often used synonymously with the term assessment in the field of authentic assessment.

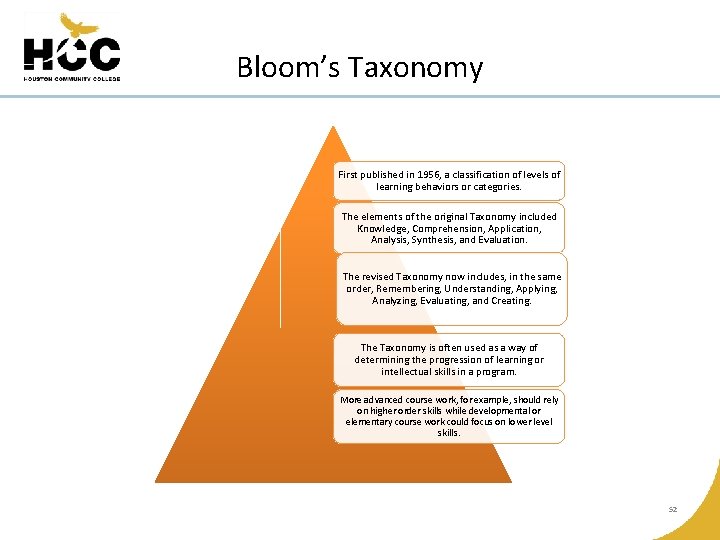

Glossary of Terms
• Bloom’s Taxonomy: A classification of learning behaviors or categories used as a way of determining learning progression in a course or program. The revised taxonomy includes lower level skills and higher order thinking skills. Bloom’s Taxonomy is used to align Student Learning Outcomes and their subsequent objectives.
• Calibration: Training faculty to score rubrics in a similar fashion to ensure validity of scores and subsequent data.
• Closing the Loop: Primarily regarded as the last step in the assessment process, closing the loop actually starts the process over again if the data is analyzed and the desired results are not achieved. Closing the loop refers specifically to using the data to improve student learning.

Glossary of Terms
• Course Goals: Generally phrased, non-measurable statements about what is included and covered in a course.
• Course Guide: A booklet and online resource that helps students select subjects.
• Curriculum Guide: A practical guide designed to aid teachers in planning and developing a teaching plan for specific subject areas.

Glossary of Terms
• Course Objectives: A subset of student learning outcomes, course objectives are the specific teaching objectives detailing course content and activities.
• Criteria: Characteristics of good performance on a particular task. For example, criteria for a persuasive essay might include well organized, clearly stated, and sufficient support for arguments. (The singular of criteria is criterion.)
• Data-Driven Decision Making: A process of making decisions about curriculum and instruction based on the analysis of classroom data, rubric assessment, and standardized test data.

Glossary of Terms
• Descriptors: Statements of expected performance at each level of performance for a particular criterion in a rubric, typically found in analytic rubrics.
• Direct Assessment Method: The assessment is based on an analysis of student behaviors or products in which they demonstrate how well they have mastered learning outcomes.

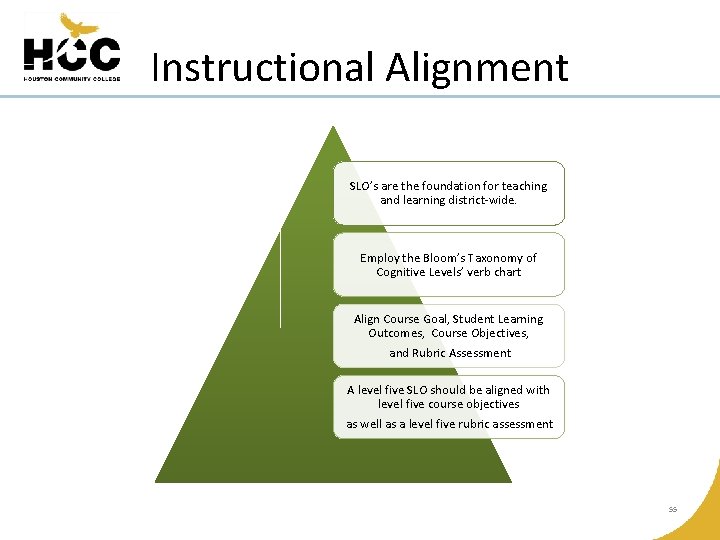

Glossary of Terms
• Indirect Assessment Method: The assessment is based on an analysis of reported perceptions about student mastery of learning outcomes or the learning environment.
• Instructional Alignment: The process of ensuring that Student Learning Outcomes and the subsequent objectives use the same learning behaviors or categories in Bloom’s Taxonomy.

Glossary of Terms
• Program Assessment: An ongoing, systemic process designed to evaluate and improve student learning by identifying strengths and areas for improvement. The data from the evaluation is used to guide decision making for the program.
• Reliability: The degree to which a measure yields consistent results.
• Rubric: The criteria for classifying products or behaviors into categories that vary along a continuum; a scoring scale used to evaluate student work. Rubrics are used as a way of assessing a Student Learning Outcome. A rubric is composed of at least two criteria by which student work is to be judged on a particular task and at least two levels of performance for each criterion.

Glossary of Terms
• Student Learning Outcomes: The specific knowledge, skills, or attitudes students should be able to demonstrate effectively at the end of a particular course or program. Student Learning Outcomes are measured and provide students, faculty, and staff the ability to assess student learning and instruction. Each course should have four to seven Student Learning Outcomes.
• Validity: The degree to which a certain inference from a test is appropriate and meaningful.

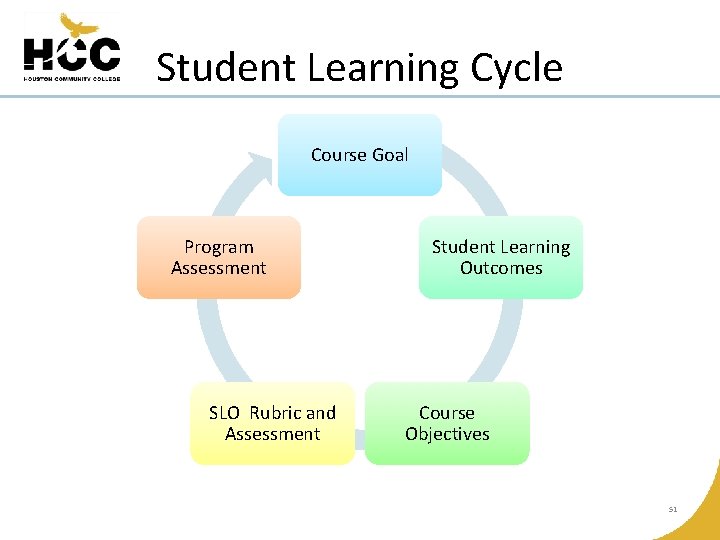

Student Learning Cycle
[Diagram elements: Course Goal, Student Learning Outcomes, Course Objectives, SLO Rubric and Assessment, Program Assessment]

Bloom’s Taxonomy
First published in 1956, Bloom’s Taxonomy is a classification of levels of learning behaviors or categories. The elements of the original Taxonomy included Knowledge, Comprehension, Application, Analysis, Synthesis, and Evaluation. The revised Taxonomy now includes, in the same order, Remembering, Understanding, Applying, Analyzing, Evaluating, and Creating. The Taxonomy is often used as a way of determining the progression of learning or intellectual skills in a program. More advanced course work, for example, should rely on higher order skills, while developmental or elementary course work could focus on lower level skills.

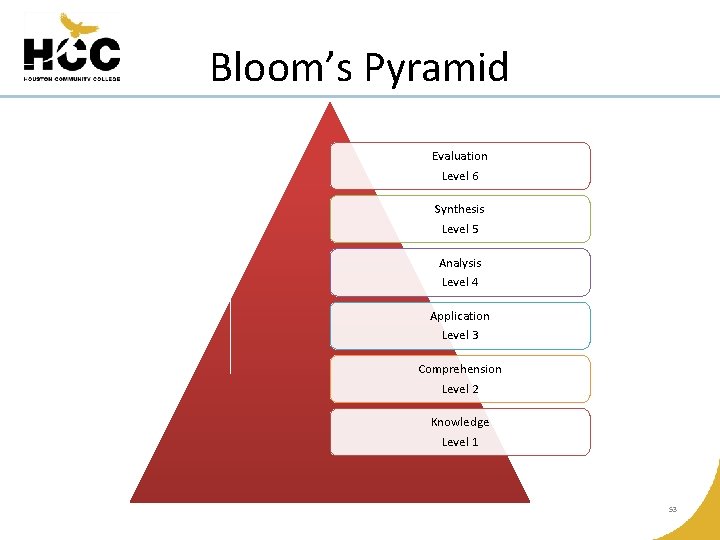

Bloom’s Pyramid (top to bottom)
• Level 6: Evaluation
• Level 5: Synthesis
• Level 4: Analysis
• Level 3: Application
• Level 2: Comprehension
• Level 1: Knowledge

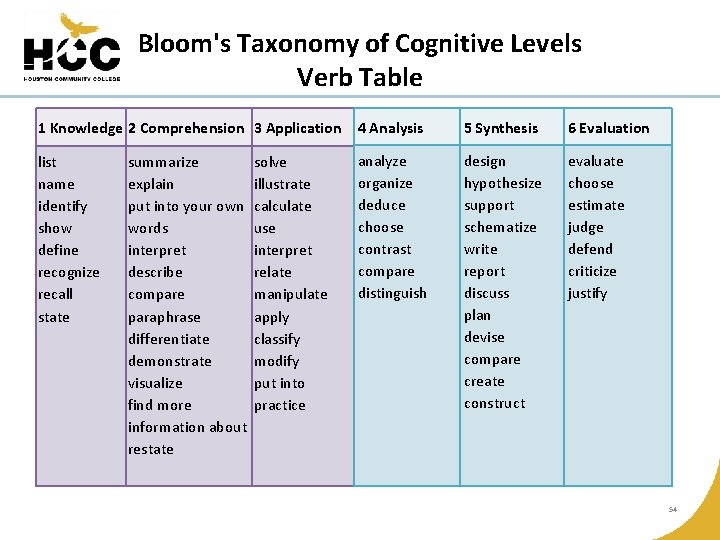

Bloom's Taxonomy of Cognitive Levels Verb Table

| Level | Category | Verbs |
|---|---|---|
| 1 | Knowledge | list, name, identify, show, define, recognize, recall, state |
| 2 | Comprehension | summarize, explain, put into your own words, interpret, describe, compare, paraphrase, differentiate, demonstrate, visualize, find more information about, restate |
| 3 | Application | solve, illustrate, calculate, use, interpret, relate, manipulate, apply, classify, modify, put into practice |
| 4 | Analysis | analyze, organize, deduce, choose, contrast, compare, distinguish |
| 5 | Synthesis | design, hypothesize, support, schematize, write, report, discuss, plan, devise, compare, create, construct |
| 6 | Evaluation | evaluate, choose, estimate, judge, defend, criticize, justify |

Instructional Alignment
• SLOs are the foundation for teaching and learning district-wide.
• Employ the Bloom’s Taxonomy of Cognitive Levels verb chart.
• Align Course Goal, Student Learning Outcomes, Course Objectives, and Rubric Assessment.
• A level five SLO should be aligned with level five course objectives as well as a level five rubric assessment.
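The alignment check described here (an SLO and its objectives should sit at the same Bloom level) can be sketched with a verb lookup. This is a rough illustration under several assumptions: the verb-to-level grouping is a reading of the verb table, multi-word phrases are left out, only the first word of each statement is inspected, and the function names and sample statements are hypothetical. Because some verbs appear at more than one level, a verb is treated as compatible with every level that lists it.

```python
# Single-word verbs per Bloom level, read from the verb table.
# Assumption: the grouping of verbs into levels follows that table;
# multi-word phrases such as "put into practice" are omitted.
BLOOM_VERBS = {
    1: {"list", "name", "identify", "show", "define", "recognize", "recall", "state"},
    2: {"summarize", "explain", "interpret", "describe", "compare", "paraphrase",
        "differentiate", "demonstrate", "visualize", "restate"},
    3: {"solve", "illustrate", "calculate", "use", "interpret", "relate",
        "manipulate", "apply", "classify", "modify"},
    4: {"analyze", "organize", "deduce", "choose", "contrast", "compare", "distinguish"},
    5: {"design", "hypothesize", "support", "schematize", "write", "report",
        "discuss", "plan", "devise", "compare", "create", "construct"},
    6: {"evaluate", "choose", "estimate", "judge", "defend", "criticize", "justify"},
}


def levels_for(statement: str) -> set[int]:
    """Bloom levels whose verb list contains the statement's first word."""
    words = statement.split()
    if not words:
        return set()
    verb = words[0].lower()
    return {level for level, verbs in BLOOM_VERBS.items() if verb in verbs}


def is_aligned(slo: str, objectives: list[str]) -> bool:
    """True if every objective shares at least one Bloom level with the SLO."""
    slo_levels = levels_for(slo)
    return bool(slo_levels) and all(levels_for(o) & slo_levels for o in objectives)


# Hypothetical level-five SLO with two level-five objectives.
print(levels_for("Design a research study"))  # {5}
print(is_aligned("Design a research study",
                 ["Construct a survey instrument", "Plan the sampling method"]))  # True
print(is_aligned("Design a research study", ["List the variables"]))  # False
```

A first-word lookup is only a screening aid; a statement whose leading verb is missing from the table returns no levels and still needs a faculty judgment.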

Resource Links
• http://www.hccs.edu/hccs/faculty-staff/studentlearning-outcomes--01
• https://www.rcampus.com/
• http://rubistar.4teachers.org/

THE CENTER for TEACHING & LEARNING EXCELLENCE
Online Resources: http://hccs.edu/tle
