SW development processes and methods for software engineers


- Slides: 44

1 SW development processes and methods for software engineers. Aimed at experienced and student software engineers. 50-minute session, 13:00 - 13:50. Version 12/3/2020, www.Result-Planning.com, Tom & Kai Gilb

2 Purpose of lecture

- To inform participants about the technical details of a few powerful but too little known software engineering processes

3 The Best Software Development Processes

- Standards-Based Processes
  - Process Owners
  - Rules
  - EPX: Entry Process Exit
  - Templates
- Evolutionary Project Management
- Inspection (Specification Quality Control)
- Defect Prevention Process (DPP)
- Design to (Multiple Scalar) Requirements: DTR
- Competitive Engineering

4 Characteristics of the best processes

- Measurability
- Feedback
- Continuous Improvement
- Intelligence
- Scale up and down
- Tailorable

5 Evo: 7 da Vinci Principles (Evo!) <- Gelb, p. 9

- Curiosità: insatiably curious, unrelenting quest for continuous learning
- Dimostrazione: commitment to test knowledge through experience, willingness to learn from mistakes; learning for oneself, through practical experience
- Sensazione: continual refinement of the senses, as a means to enliven experience
- Sfumato: willingness to embrace ambiguity, paradox, uncertainty
- Arte/Scienza: balance of science and art, logic and imagination; whole-brain thinking
- Corporalità: cultivation of grace, ambidexterity, fitness, poise
- Connessione: recognition of and appreciation for the interconnectedness of all things and phenomena; systems thinking

6 Results Expected From The Best Software Development Processes

- Productivity
- Timeliness
- Quality
- Diffusability
- Competitiveness

7 What do the less good software processes tend to deliver?

- Late delivery
- Late recognition that they do not work
- Bad quality of product
- Focus on logic and programs, not customers and quality
- Over 50% project total failure rate

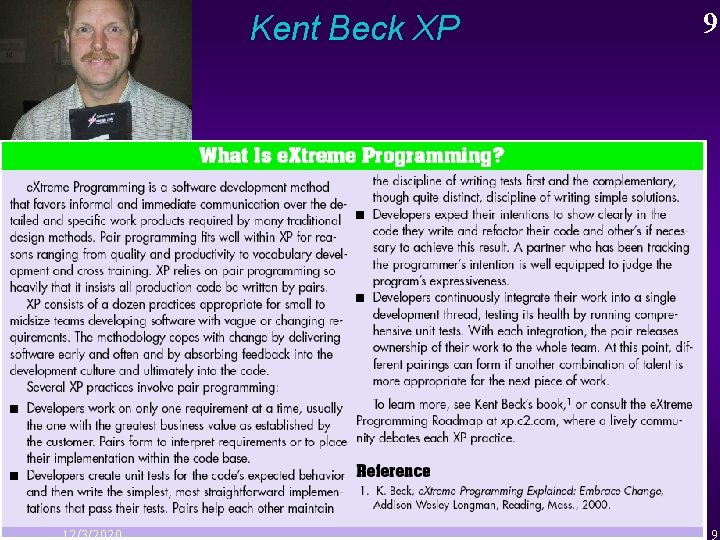

8 What are the ‘less good’ processes called?

- Waterfall Project Management
  - Because it gives no early, reliable feedback and results
- Reviews, Walkthroughs
  - Because they are not measurement based, not feedback based, and not based on requirements
- Functional Requirements
  - Because they do not focus on qualities and costs
  - Because a lot of ‘design’ is mixed in here
- Extreme Programming
  - Not based on engineering and measurement
  - Pop programming, but not serious, industrial, competitive software engineering

Evo Kent Beck XP 9

10 MORE DETAIL ON SPECIFIC BEST PRACTICES

11 Standards-Based Processes

- Based on written best-practice standards
  - Not on locally passed-on culture
- Constantly improving
  - Based on insight, experience, measurement
- Kept simple and un-bureaucratic
  - ONE-page standards modules are the norm
- Suitable for multinational spread and rapidly growing numbers of people

12 Process Owners

- Designated individuals or groups who take responsibility for improving, maintaining, evaluating and spreading best-practice standards
- Decentralization of power (no standards committees; rapid change and improvement: now, today, this week)
- Special interest and competence in the area of the process
- Should be judged on measurable results of the process compared with other methods

13 Effectiveness of Different Methods. Source: Robert B. Grady, Hewlett-Packard, via Don Mills' slides, NZ, Aug 2000

![Siemens/Telecoms (Briand/Freimut, 2000): cost-effectiveness of upstream and downstream inspections](https://slidetodoc.com/presentation_image_h/dcb653ba8599065c21ba268bac0edc28/image-14.jpg)

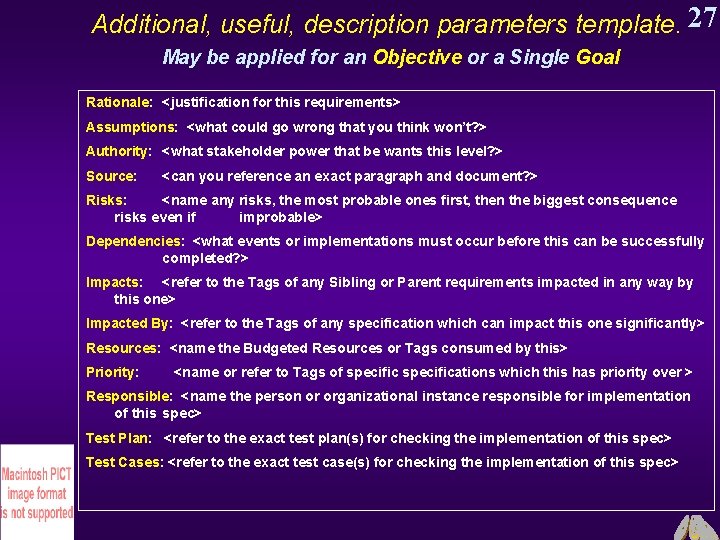

14 Siemens/Telecoms [Briand/Freimut, 2000]: Cost-effectiveness of upstream and downstream inspections

Source: Lionel C. Briand and Bernd Freimut, "A Practical and Rigorous Way to Assess the Cost-Effectiveness of Inspections by Combining Project Data and Expert Opinion", IEEE Proceedings of the 10th International Symposium on Software Reliability Engineering; and the Veenendaal & Gilb (editors) book on Inspection experiences, to be published 2002-3? Lionel Briand is supported in part by NSERC, Canada's Natural Sciences and Engineering Research Council.

15 Rules

- ‘Rules for Writing’: standards for specification
- Owned by the Process Owner
- Maximum one-page modules
  - Generic and specific organization
- Define a specification defect (rule not followed)
- Focus on Major severity effects (not minor)
- Tool for teaching practices
- Tool for objective inspections
- Tool for measuring degree of conformance to best practices

16 GENERIC RULES FOR TECHNICAL AND MANAGEMENT SPECIFICATION. Tag: RULES.GR

- 1: CLEAR: Specifications should be clear and unambiguous to their intended reader.
- 2: SIMPLE: Specifications should be written in their most elementary form.
- 3: TAG: Specifications shall each have a unique identification tag.
- 4: SOURCE: Specifications shall contain information about their detailed source, AUTHORITY and REASON.
- 5: GIST: Complex Specifications should be summarized by a Gist statement.
- 6: QUALIFY: When any Specification depends on a specific time, place or event being in force, then this shall be specified by means of the [qualifier square brackets].
- 7: FUZZY: When any element of a Specification is unclear, then it shall be marked, for later clarification, by the <fuzzy angle brackets>.
- 8: COMMENT: Any text which is secondary to a Specification, and where no defect could result in a costly problem later, shall be written in italic text statements, and/or headed by a suitable warning (NOTE, RATIONALE, COMMENT), or moved to footnotes. Non-commentary specification shall be in plain text. Italic can be used for emphasis of single terms in non-commentary statements. Readers shall be able to visually, at a glance, without decoding the contents, distinguish ‘critical’ from ‘not critical’ specification.
- 9: UNIQUE: Requirements and design Specifications shall be made one single time only. Then they shall be re-used, by cross-reference to their identity tag. ‘Duplication’ (copy and paste) is strongly discouraged. Reuse it!

Endnote 0: The number is a rule tag (or identification, if you like), and the word after the colon is an equivalent alternative rule tag for referencing the rule. The following references are possible: RULES.GR.1, RULES.GR.CLEAR, STD.RULES.GR.CLEAR and other combinations. The dot indicates that what follows is part of a set of things named by the term preceding the dot. GR is part of a set of things called RULES.
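The dotted tag scheme in the endnote and the bracket conventions of rules 6 and 7 lend themselves to simple tooling. The following is a minimal Python sketch, not part of the original slides: the function names `resolve_rule`, `find_fuzzy` and `find_qualifiers` are hypothetical, and only the rule numbers and names are taken from the slide.

```python
import re

# Generic rule numbers and their alternative name tags, as listed on the slide.
GENERIC_RULES = {
    1: "CLEAR", 2: "SIMPLE", 3: "TAG", 4: "SOURCE", 5: "GIST",
    6: "QUALIFY", 7: "FUZZY", 8: "COMMENT", 9: "UNIQUE",
}


def resolve_rule(reference: str) -> tuple:
    """Map a dotted reference such as 'RULES.GR.1' or 'STD.RULES.GR.CLEAR'
    to its (number, name) pair. Only the final component is looked up."""
    parts = reference.upper().split(".")
    if "GR" not in parts[:-1]:
        raise ValueError(f"not a generic-rule reference: {reference}")
    last = parts[-1]
    for number, name in GENERIC_RULES.items():
        if last in (str(number), name):
            return number, name
    raise ValueError(f"unknown generic rule: {reference}")


def find_fuzzy(text: str) -> list:
    """Return the contents of <fuzzy angle brackets> (rule 7)."""
    return re.findall(r"<([^<>]+)>", text)


def find_qualifiers(text: str) -> list:
    """Return the contents of [qualifier square brackets] (rule 6)."""
    return re.findall(r"\[([^\[\]]+)\]", text)


spec = "Record [USA, After 1999, Juniors] <66 meters>"
print(resolve_rule("STD.RULES.GR.CLEAR"))
print(find_fuzzy(spec))
print(find_qualifiers(spec))
```

A checker like this could list every element still marked fuzzy before a specification is allowed to exit quality control; whether that is how the authors apply the rules is an assumption.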

17 REQUIREMENTS SPECIFICATION RULES. SPECIFIC RULES (RULES.SR)

- 0: GR-BASE: The generic rules (RULES.GR) are assumed to be at the base of these rules.
- 1: TESTABLE: The requirement must be specified so that it is possible to define an unambiguous test to prove that it is later implemented.
- 2: METER: Any test of Scale level, or proposed tests, may be specified using the parameter ‘Meter’.
- 3: SCALE: Any requirement which is capable of numeric specification shall define a numeric scale fully and unambiguously, or reference such a definition.
- 4: MEET: The numeric level needed to meet requirements fully shall be specified in terms of one or more [qualifier defined] Target level {Plan, Must, Wish} Goals. Note: {…} is a set of things, using set parentheses.
- 5: FAIL: The minimum numeric levels to avoid system, political, or economic failure shall be specified in terms of one or more [qualifier defined] ‘Must’ level goals.
- 6: QUALIFY: Rich use of [qualifiers] shall specify [when, where, special conditions].

18 2.3. Function Specification (RULES.FR): SPECIFIC RULES FOR FUNCTION REQUIREMENTS SPECIFICATION

- FR.1: GENERIC: Generic Specification Rules (RULES.GR) apply (see Chapter 0.3).
- FR.2: TESTABLE: The requirement must be specified so that it is possible to define an unambiguous test to prove that it is later implemented.
- FR.3: TEST: Any notions of how or what needs to be tested to validate this function may be described either together with the function definition, using the parameter TEST, or separately, using the qualifier of the function name. Example below.
  - X: TEST [FUNCT-X]: tests shall be developed to demonstrate that this function is available for all Counties in this state and no other states or countries can access it.
  - Audit: TEST [FUNCT-X]: we must demonstrate to internal auditors that no counties which are financially insolvent are allowed access to this function. <- Audit Report, August 4th this year.
- FR.4: DETAIL: The function should be specified in enough detail to understand (estimate) costs and other attributes to order-of-magnitude, when its attribute requirements are also known. This need can also be satisfied by the sufficiently detailed definition of its sub-functions. Example:
  - FUZZY-FUNCTION-ENV: FUNCTION <Environmental considerations.> (a more-detailed function definition, below)
  - Environmental Considerations: All legally and competitively necessary investments and costs, immediate and potential, regarding environmental protection, in the widest interpretation possible, to protect us against lawsuits, and give us a clear positive reputation amongst consumers.
- FR.5: NOT DESIGN: The specified ‘function’ must not be a {design, strategy, device, method, process, or any other thing} whose justification is to satisfy a quality or cost requirement of the system, whether or not these requirements are yet explicitly specified. If it is, then it shall be re-classified as ‘design/strategy’ and action will be taken to properly define the attribute requirements which might justify using it.
- FR.6: NOT DEGREES: Function specifications must not be described in terms of degrees or variability. Functions are binary (present or absent in totality) in nature. If something is ‘variable’ then it probably needs to be reclassified and redefined as a quality or cost specification of a function. Example: what is “Usability: a state-of-the-art user-friendly interface.”?

19 3.3. Rules: Quality Attribute Requirement Specification (RULES.QR)

Tag: RULES.QR. Gist: Rules for Quality Attribute Requirement Specification. Version: 2002-04-06. Owner: Tom Gilb. Status: Draft update.

- QR.0: Base: The generic rules for specification (Rules.GR) and the specific rules for requirements specification (Rules.SR) apply.
- QR.1: Completeness: All qualities that are arguably critical to success or failure shall be identified and defined.
- QR.2: Explode: Where appropriate, a Complex quality requirement shall be specified in detail using a set of Elementary Quality attributes.
- QR.3: Scale: All elementary quality attributes must define a numeric Scale fully and unambiguously, or reference such a definition.
- QR.4: Meter: A practical and economic Meter, or set of Meters, should be specified for each Scale. As a minimum, an outline of the Meter process, or a reference to a full definition or standard process, must be given.
- QR.5: Benchmark: Reasonable attempt shall be made to specify benchmarks {Past, Record, Trend} for our system and for relevant competitive systems. Explicit acknowledgement must be made where there is no known benchmark information.
- QR.6: Goals: The numeric levels needed to meet requirements fully (and so achieve success) must be specified. In other words, one or more [qualifier defined] Goal levels should be specified. The need for goals to specifically cover short term, long term and special cases must be considered.
- QR.7: Fail: The minimum numeric levels to avoid system, political, or economic failure must be specified. In other words, one or more [qualifier defined] Fail level goals should be specified. (Several Fail levels may be useful for a variety of short term, long term and special situations.)
- QR.8: Wish: All known stakeholder wishes about levels shall be captured in the requirements database as a ‘Wish’ statement, even if the Wish level cannot realistically yet be converted into a harder target level. This is valuable competitive marketing information and may allow us to better satisfy the stakeholder at some point.
- QR.9: Requirement Level: At least one target {Goal, Wish, Stretch} or constraint {Fail, Survival} level must be stated for this specification to classify as a requirement specification. A specification with only Benchmarks is an analytical specification, but not a requirement of any kind.
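Rule QR 9 is effectively a classification test, and can be sketched in a few lines of Python. This is an illustration only: the function name `classify_specification` and the third outcome, "incomplete", are assumptions added here; the slide itself only distinguishes a requirement from an analytical specification.

```python
# Parameter names taken from rules QR 5 and QR 9 on the slide.
TARGETS = {"Goal", "Wish", "Stretch"}
CONSTRAINTS = {"Fail", "Survival"}
BENCHMARKS = {"Past", "Record", "Trend"}


def classify_specification(parameters: set) -> str:
    """Classify a specification by the level parameters it contains.

    'requirement' : at least one target or constraint level is stated (QR 9)
    'analytical'  : only benchmark levels are stated (QR 9)
    'incomplete'  : no levels at all (hypothetical label, not from the slide)
    """
    if parameters & (TARGETS | CONSTRAINTS):
        return "requirement"
    if parameters & BENCHMARKS:
        return "analytical"
    return "incomplete"


print(classify_specification({"Scale", "Meter", "Past", "Goal"}))
print(classify_specification({"Scale", "Past", "Record"}))
```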

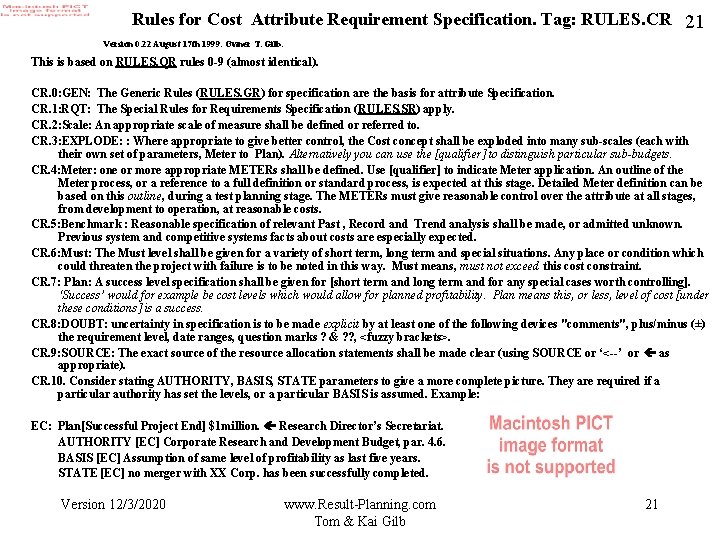

20 4.3. Rules: Quality Quantification (RULES.QQ)

Version 0.12 (Draft). Owner Ts. G. Date: August 6th 1999.

- 0: RULES: Rules for technical specification (RULES.GR) apply. This may be used in addition to the Quality Requirement Specification Rules (RULES.QR), or whenever serious emphasis on quality definition is required.
- 1: STANDARD: The Scale shall, wherever possible, be derived from a standard Scale (in named files or referenced sources) and the standard shall be source referenced in the specification. E.g. Scale: … Corp Scale 1.2
- 2: SCALENOTE: If the Scale is not standard, a notification to the Scale Library Owner will inform about this case. "Note sent to <owner>" will be included as a spec comment to confirm this act.
- 3: RICH: Where appropriate, a quality concept will be specified with the aid of multiple Scale definitions, each with their own unique tag and appropriate set of defining parameters.
- 4: METER: A practical and economic Meter, or set of Meters, will be specified for each Scale. Preference will be given to previously defined Meters in our “Meter Spec Library”.
- 5: METERNOTE: When ‘essentially new’ Meter specifications are made (no reference to a previous case in the Meter Library), a notification to the Meter Library Owner will be made. "Note sent to <owner>" will be included as a spec comment.
- 6: BENCHMARK: Reasonable attempt to establish Benchmarks (Past, Record, Trend) will be made for our system's past, and for relevant competition.
- 7: TERMS: Targets (Must, Plan, Wish) will be made with regard to both long and short term. E.g. Plan [1st Release] 99%, [3 Years After 1st Release] 99.90%.
- 8: DIFFERENTIATE: A distinction will be made, using qualifiers, between those system components which must have significantly higher quality levels than others, and components which do not require such levels. "The best can cost too much". E.g. Must [Supervisor] 99.98%, [Online Components] 99.90%, [Offline Components] 99%.
- 9: SOURCE: Emphasis will be placed on giving the exact and detailed source (even if it is a personal guess) of all numeric specifications. Sources shall be given for any other specification which is derived from a process input document (like a Meter which is contractually defined). E.g. Meter ….. Contract 6.4.5
- 10: UNCERTAINTY: Whenever numbers are uncertain, we will have rich annotation about the degree (plus/minus) and reason (a comment like "because contract & supplier not determined yet"). The reader shall not be left to guess or remember what is known, or could be known, with reasonable inquiry by the author. E.g. Record [USA, After 1999, Juniors] <66 meters> ?? “± 2 meters” My friend thought that was it.
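The DIFFERENTIATE example (rule 8) attaches a separate numeric level to each qualifier. As a rough illustration of how such a line could be read by a tool, here is a small Python sketch. The function name `parse_qualified_levels` and the regular expression are assumptions made for this example; they are not a Planguage parser and handle only percentage levels written in this exact form.

```python
import re


def parse_qualified_levels(text: str) -> dict:
    """Extract '[Qualifier] NN.NN%' pairs into {qualifier: level}."""
    pattern = r"\[([^\[\]]+)\]\s*([0-9]+(?:\.[0-9]+)?)\s*%"
    return {qualifier.strip(): float(level)
            for qualifier, level in re.findall(pattern, text)}


# Example line from rule 8, with the extraction spacing repaired.
levels = parse_qualified_levels(
    "Must [Supervisor] 99.98%, [Online Components] 99.90%, "
    "[Offline Components] 99%"
)
print(levels)
```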

21 Rules for Cost Attribute Requirement Specification. Tag: RULES.CR

Version 0.22, August 17th 1999. Owner: T. Gilb. This is based on RULES.QR rules 0-9 (almost identical).

- CR.0: GEN: The Generic Rules (RULES.GR) for specification are the basis for attribute specification.
- CR.1: RQT: The Special Rules for Requirements Specification (RULES.SR) apply.
- CR.2: SCALE: An appropriate scale of measure shall be defined or referred to.
- CR.3: EXPLODE: Where appropriate to give better control, the Cost concept shall be exploded into many sub-scales (each with their own set of parameters, Meter to Plan). Alternatively you can use the [qualifier] to distinguish particular sub-budgets.
- CR.4: METER: One or more appropriate METERs shall be defined. Use [qualifier] to indicate Meter application. An outline of the Meter process, or a reference to a full definition or standard process, is expected at this stage. Detailed Meter definition can be based on this outline, during a test planning stage. The METERs must give reasonable control over the attribute at all stages, from development to operation, at reasonable costs.
- CR.5: BENCHMARK: Reasonable specification of relevant Past, Record and Trend analysis shall be made, or admitted unknown. Previous system and competitive systems facts about costs are especially expected.
- CR.6: MUST: The Must level shall be given for a variety of short term, long term and special situations. Any place or condition which could threaten the project with failure is to be noted in this way. Must means: must not exceed this cost constraint.
- CR.7: PLAN: A success level specification shall be given for [short term and long term and for any special cases worth controlling]. ‘Success’ would for example be cost levels which would allow for planned profitability. Plan means: this level of cost, or less, [under these conditions] is a success.
- CR.8: DOUBT: Uncertainty in specification is to be made explicit by at least one of the following devices: "comments", plus/minus (±) the requirement level, date ranges, question marks ? & ??, <fuzzy brackets>.
- CR.9: SOURCE: The exact source of the resource allocation statements shall be made clear (using SOURCE or ‘<--’ as appropriate).
- CR.10: Consider stating AUTHORITY, BASIS, STATE parameters to give a more complete picture. They are required if a particular authority has set the levels, or a particular BASIS is assumed. Example:
  - EC: Plan [Successful Project End] $1 million. Research Director’s Secretariat.
  - AUTHORITY [EC] Corporate Research and Development Budget, par. 4.6.
  - BASIS [EC] Assumption of same level of profitability as last five years.
  - STATE [EC] no merger with XX Corp. has been successfully completed.

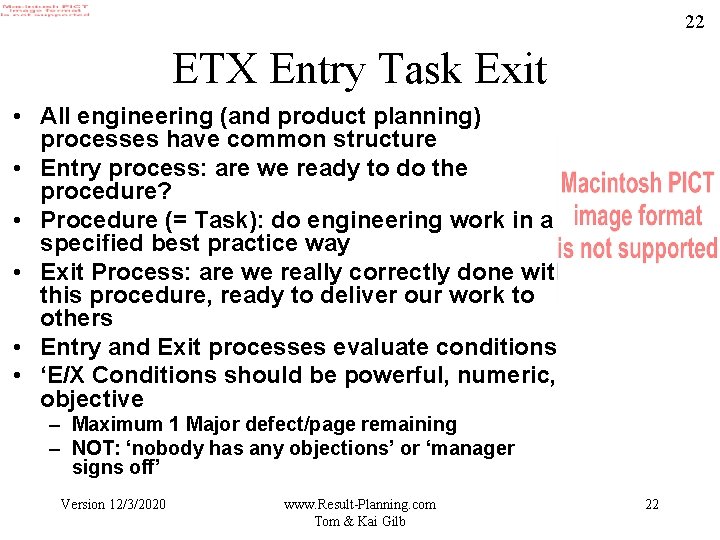

22 ETX: Entry, Task, Exit

- All engineering (and product planning) processes have a common structure
- Entry process: are we ready to do the procedure?
- Procedure (= Task): do engineering work in a specified best-practice way
- Exit process: are we really correctly done with this procedure, and ready to deliver our work to others?
- Entry and Exit processes evaluate conditions
- E/X conditions should be powerful, numeric, objective
  - Maximum 1 Major defect/page remaining
  - NOT: ‘nobody has any objections’ or ‘manager signs off’
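A numeric exit condition such as "maximum 1 Major defect/page remaining" can be checked mechanically. The Python sketch below shows one way to express it; the function name `exit_condition_met` and its parameters are hypothetical, and how the number of remaining major defects is estimated is left outside the example.

```python
def exit_condition_met(remaining_major_defects: float,
                       pages: float,
                       max_majors_per_page: float = 1.0) -> bool:
    """Return True if the estimated remaining major-defect density is at or
    below the exit threshold (default: 1 major defect per page)."""
    if pages <= 0:
        raise ValueError("pages must be positive")
    return remaining_major_defects / pages <= max_majors_per_page


print(exit_condition_met(remaining_major_defects=3, pages=5))
print(exit_condition_met(remaining_major_defects=19, pages=3))
```

The point of the numeric form is that the answer does not depend on who signs off: the same inputs always give the same exit decision.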

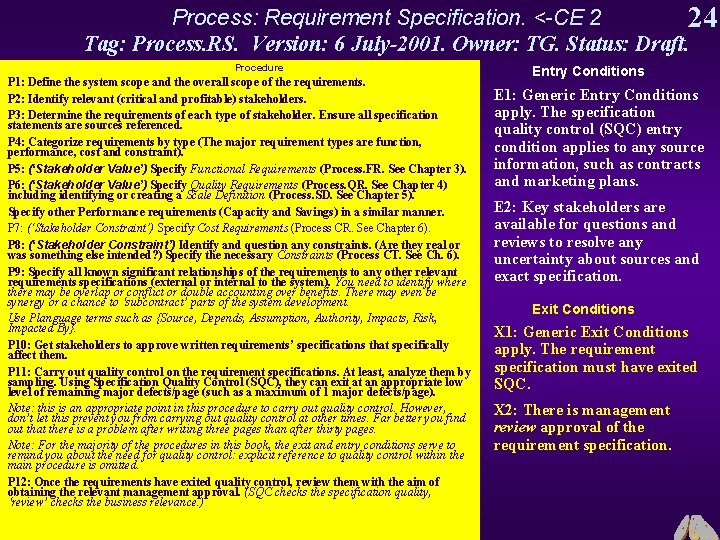

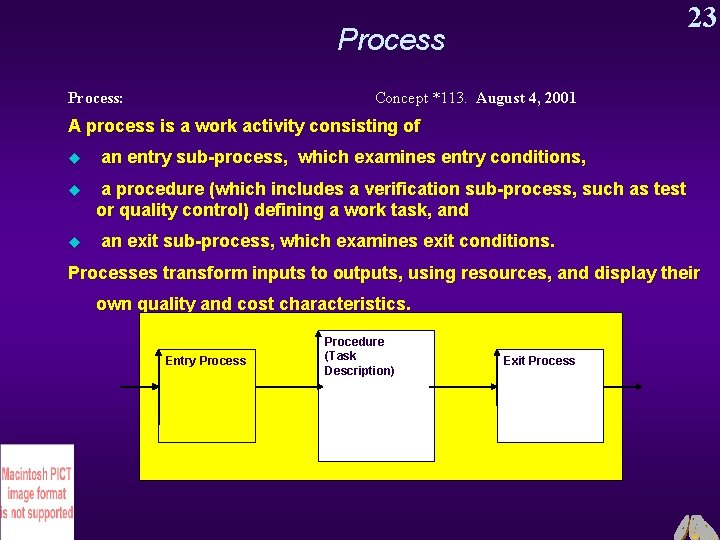

23 Process: Concept *113. August 4, 2001

A process is a work activity consisting of

- an entry sub-process, which examines entry conditions,
- a procedure (which includes a verification sub-process, such as test or quality control) defining a work task, and
- an exit sub-process, which examines exit conditions.

Processes transform inputs to outputs, using resources, and display their own quality and cost characteristics.

Figure labels: Entry Process, Procedure (Task Description), Exit Process.

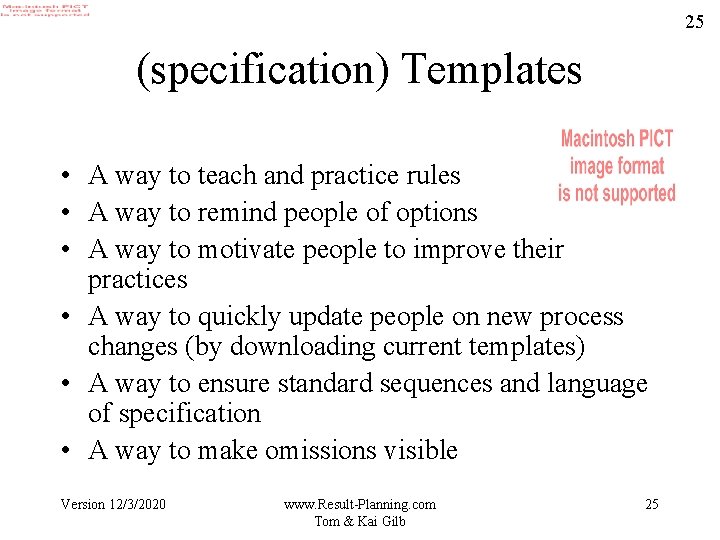

24 Process: Requirement Specification. <- CE 2

Tag: Process.RS. Version: 6 July 2001. Owner: TG. Status: Draft.

Procedure

- P1: Define the system scope and the overall scope of the requirements.
- P2: Identify relevant (critical and profitable) stakeholders.
- P3: Determine the requirements of each type of stakeholder. Ensure all specification statements are source referenced.
- P4: Categorize requirements by type. (The major requirement types are function, performance, cost and constraint.)
- P5: (‘Stakeholder Value’) Specify Functional Requirements (Process.FR. See Chapter 3).
- P6: (‘Stakeholder Value’) Specify Quality Requirements (Process.QR. See Chapter 4), including identifying or creating a Scale Definition (Process.SD. See Chapter 5). Specify other Performance requirements (Capacity and Savings) in a similar manner.
- P7: (‘Stakeholder Constraint’) Specify Cost Requirements (Process.CR. See Chapter 6).
- P8: (‘Stakeholder Constraint’) Identify and question any constraints. (Are they real, or was something else intended?) Specify the necessary Constraints (Process.CT. See Ch. 6).
- P9: Specify all known significant relationships of the requirements to any other relevant requirements specifications (external or internal to the system). You need to identify where there may be overlap, conflict or double accounting over benefits. There may even be synergy or a chance to ‘subcontract’ parts of the system development. Use Planguage terms such as {Source, Depends, Assumption, Authority, Impacts, Risk, Impacted By}.
- P10: Get stakeholders to approve written requirements specifications that specifically affect them.
- P11: Carry out quality control on the requirement specifications. At least, analyze them by sampling. Using Specification Quality Control (SQC), they can exit at an appropriately low level of remaining major defects/page (such as a maximum of 1 major defect/page).
  - Note: this is an appropriate point in this procedure to carry out quality control. However, don’t let this prevent you from carrying out quality control at other times. Far better you find out that there is a problem after writing three pages than after thirty pages.
  - Note: For the majority of the procedures in this book, the exit and entry conditions serve to remind you about the need for quality control: explicit reference to quality control within the main procedure is omitted.
- P12: Once the requirements have exited quality control, review them with the aim of obtaining the relevant management approval. (SQC checks the specification quality; ‘review’ checks the business relevance.)

Entry Conditions

- E1: Generic Entry Conditions apply. The specification quality control (SQC) entry condition applies to any source information, such as contracts and marketing plans.
- E2: Key stakeholders are available for questions and reviews to resolve any uncertainty about sources and exact specification.

Exit Conditions

- X1: Generic Exit Conditions apply. The requirement specification must have exited SQC.
- X2: There is management review approval of the requirement specification.

25 (Specification) Templates

- A way to teach and practice rules
- A way to remind people of options
- A way to motivate people to improve their practices
- A way to quickly update people on new process changes (by downloading current templates)
- A way to ensure standard sequences and language of specification
- A way to make omissions visible

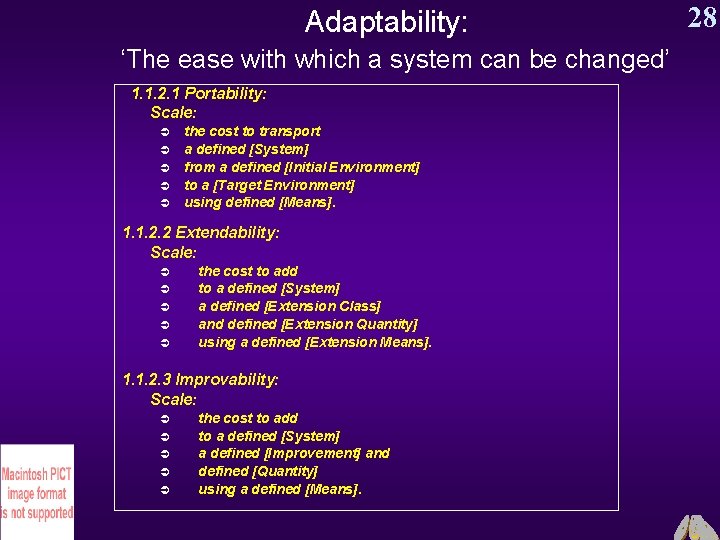

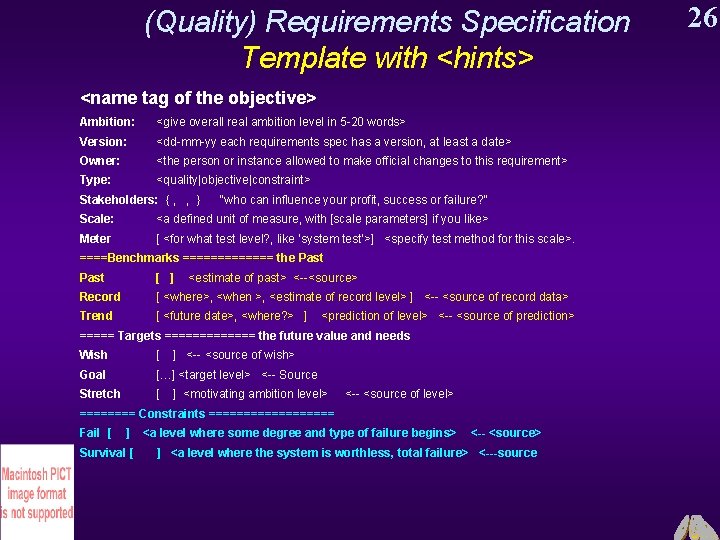

26 (Quality) Requirements Specification Template with <hints>

<name tag of the objective>

- Ambition: <give overall real ambition level in 5-20 words>
- Version: <dd-mm-yy; each requirements spec has a version, at least a date>
- Owner: <the person or instance allowed to make official changes to this requirement>
- Type: <quality|objective|constraint>
- Stakeholders: { , , } “who can influence your profit, success or failure?”
- Scale: <a defined unit of measure, with [scale parameters] if you like>
- Meter [<for what test level?, like ‘system test’>] <specify test method for this scale>

==== Benchmarks ==== the past

- Past [ ] <estimate of past> <-- <source>
- Record [<where>, <when>, <estimate of record level>] <-- <source of record data>
- Trend [<future date>, <where?>] <prediction of level> <-- <source of prediction>

==== Targets ==== the future value and needs

- Wish [ ] <-- <source of wish>
- Goal […] <target level> <-- Source
- Stretch [ ] <motivating ambition level> <-- <source of level>

==== Constraints ====

- Fail [ ] <a level where some degree and type of failure begins> <-- <source>
- Survival [ ] <a level where the system is worthless, total failure> <-- <source>
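One stated purpose of templates is to make omissions visible. A minimal Python sketch of that idea follows: it compares a partly filled specification against the template's parameter names and lists what is still empty. The parameter names come from the template above; the function name `missing_parameters` and the sample "Usability" entries are invented for illustration.

```python
# Parameter names in the order they appear in the template.
TEMPLATE_PARAMETERS = [
    "Ambition", "Version", "Owner", "Type", "Stakeholders", "Scale", "Meter",
    "Past", "Record", "Trend",          # benchmarks
    "Wish", "Goal", "Stretch",          # targets
    "Fail", "Survival",                 # constraints
]


def missing_parameters(spec: dict) -> list:
    """Return the template parameters that are absent or blank in spec."""
    return [name for name in TEMPLATE_PARAMETERS
            if not str(spec.get(name, "")).strip()]


# Hypothetical, partly filled specification.
usability = {
    "Ambition": "Make the product clearly easier to learn",
    "Scale": "minutes for a novice to complete a defined task",
    "Goal": "[Release 1] 10 minutes",
    "Meter": "   ",  # blank entries count as omissions
}
print(missing_parameters(usability))
```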

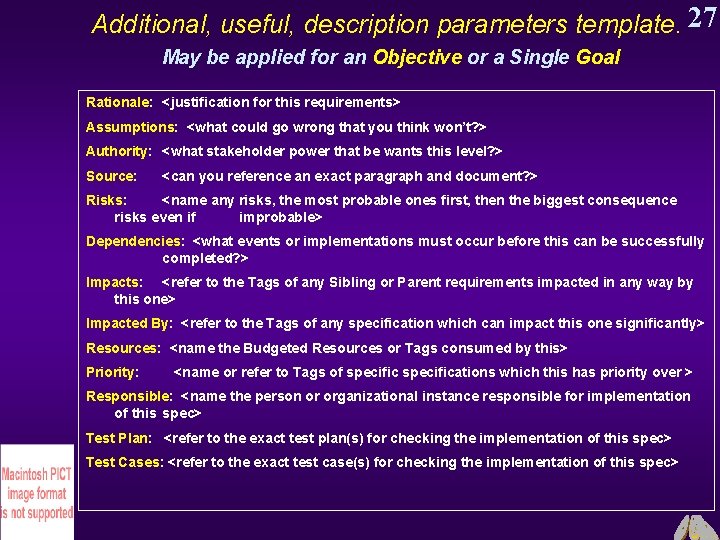

27 Additional, useful, description parameters template. May be applied to an Objective or a single Goal.

- Rationale: <justification for this requirement>
- Assumptions: <what could go wrong that you think won’t?>
- Authority: <what stakeholder or power-that-be wants this level?>
- Source: <can you reference an exact paragraph and document?>
- Risks: <name any risks, the most probable ones first, then the biggest-consequence risks even if improbable>
- Dependencies: <what events or implementations must occur before this can be successfully completed?>
- Impacts: <refer to the Tags of any Sibling or Parent requirements impacted in any way by this one>
- Impacted By: <refer to the Tags of any specification which can impact this one significantly>
- Resources: <name the Budgeted Resources or Tags consumed by this>
- Priority: <name or refer to Tags of specifications which this has priority over>
- Responsible: <name the person or organizational instance responsible for implementation of this spec>
- Test Plan: <refer to the exact test plan(s) for checking the implementation of this spec>
- Test Cases: <refer to the exact test case(s) for checking the implementation of this spec>

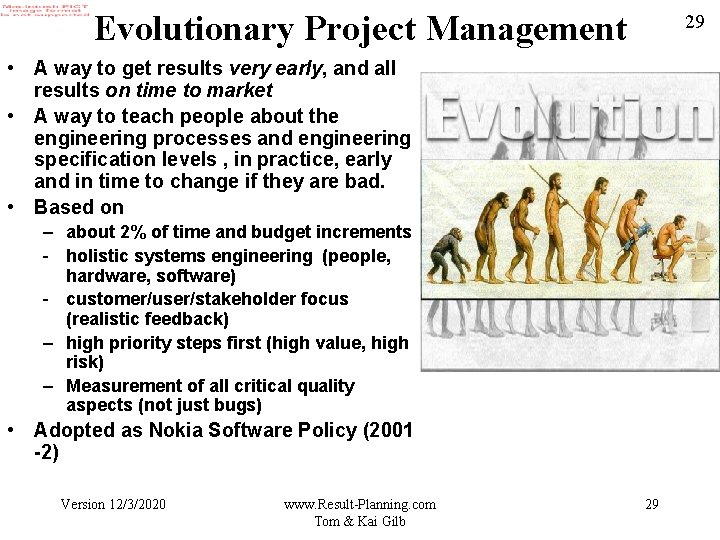

28 Adaptability: ‘The ease with which a system can be changed’

- 1.1.2.1 Portability: Scale: the cost to transport a defined [System] from a defined [Initial Environment] to a [Target Environment] using defined [Means].
- 1.1.2.2 Extendability: Scale: the cost to add to a defined [System] a defined [Extension Class] and defined [Extension Quantity] using a defined [Extension Means].
- 1.1.2.3 Improvability: Scale: the cost to add to a defined [System] a defined [Improvement] and defined [Quantity] using a defined [Means].

29 Evolutionary Project Management

- A way to get results very early, and all results on time to market
- A way to teach people about the engineering processes and engineering specification levels, in practice, early, and in time to change them if they are bad
- Based on
  - increments of about 2% of time and budget
  - holistic systems engineering (people, hardware, software)
  - customer/user/stakeholder focus (realistic feedback)
  - high-priority steps first (high value, high risk)
  - measurement of all critical quality aspects (not just bugs)
- Adopted as Nokia Software Policy (2001-2)
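The step-sizing and ordering ideas on this slide can be sketched numerically. The Python below is an illustration under stated assumptions: the function names are hypothetical, the 2% figure is the slide's "about 2%" treated as exact, and sorting by value first and risk second is only one possible reading of "high priority steps first (high value, high risk)".

```python
def evo_steps(total_budget: float, step_fraction: float = 0.02) -> tuple:
    """Return (budget per step, approximate number of steps) when the total
    budget is delivered in increments of step_fraction each."""
    if not 0 < step_fraction <= 1:
        raise ValueError("step_fraction must be in (0, 1]")
    return total_budget * step_fraction, round(1 / step_fraction)


def order_by_priority(candidates: list) -> list:
    """Sort (name, value, risk) tuples so that high value comes first and,
    among equal values, high risk comes first (an assumed tie-break)."""
    return sorted(candidates, key=lambda c: (c[1], c[2]), reverse=True)


step_budget, step_count = evo_steps(1_000_000)
print(step_budget, step_count)

# Hypothetical candidate steps: (name, value score, risk score).
print(order_by_priority([("a", 1, 5), ("b", 3, 1), ("c", 3, 2)]))
```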

30 Impact Table for Step Management (Evo)

31 Inspection (Specification Quality Control: SQC)

- NOT THE ‘OLD’ INSPECTION (1976)
  - (NOT cleaning up bad work, partly)
- Based on sampling
- Based on early and continuous sampling
- Based on measurement of major defect density
- Based on getting control of specification quality during the work process
  - Not on ‘cleaning up bad work afterwards’ (the ‘old’ inspection focus area)
- Effective way to teach and enforce current specification best-practice standards (Rules)

32 A Sampling Case Study

- 1986, Northern Europe
  - Air traffic control trainer system for export
  - 80,000 pages contracted documentation before code
  - 40,000 pages already written
  - Project seriously late already (customer informed)
  - About 7 management signatures approving the 40,000 pages (pseudocode for coders)
  - Inspection of a sample of three pages
    - chosen by random numbers
    - declared to be representative
    - 19 Major defects found in a half-day inspection by the 7 managers
    - director checks the defect log and confirms

Half-day Inspection Economics. Gilb@acm.org
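The arithmetic behind this sampling argument is short enough to write down. The Python sketch below uses only the figures on the slide (19 major defects, 3 sampled pages, 40,000 written pages); the function names and the `effectiveness` parameter, which stands for the fraction of existing majors an inspection actually finds, are assumptions introduced for the example.

```python
def major_defect_density(majors_found: int, pages_sampled: int) -> float:
    """Major defects found per sampled page."""
    return majors_found / pages_sampled


def extrapolate_majors(majors_found: int, pages_sampled: int,
                       total_pages: int, effectiveness: float = 1.0) -> float:
    """Scale the sample density to the whole document.

    effectiveness is the assumed fraction of existing majors that the
    inspection finds; 1.0 means 'count only what was actually found'.
    """
    if not 0 < effectiveness <= 1:
        raise ValueError("effectiveness must be in (0, 1]")
    density = major_defect_density(majors_found, pages_sampled) / effectiveness
    return density * total_pages


density = major_defect_density(19, 3)
found_level = extrapolate_majors(19, 3, 40_000)
print(f"{density:.2f} majors/page, about {found_level:,.0f} in 40,000 pages")
```

Even with no allowance for defects the inspection missed, the sample density is more than six times an exit level of 1 major defect per page.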

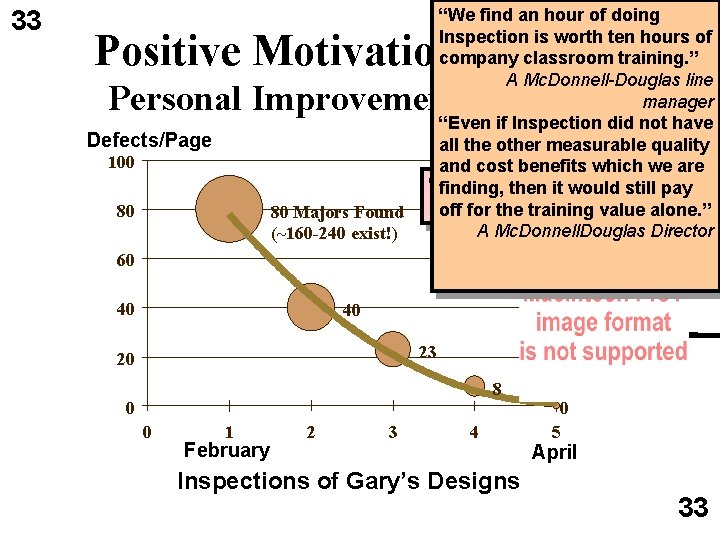

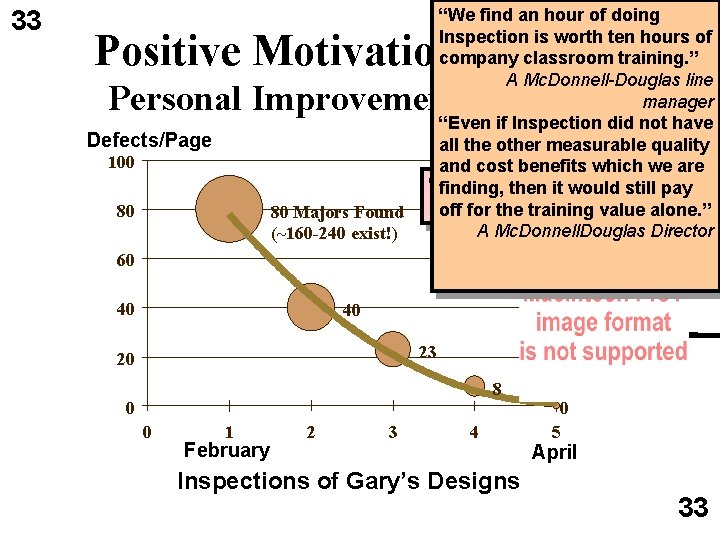

33 “We find an hour of doing Inspection is worth ten hours of company classroom training.” A McDonnell-Douglas line manager

“Even if Inspection did not have all the other measurable quality and cost benefits which we are finding, then it would still pay off for the training value alone.” A McDonnell-Douglas Director

Figure: Positive Motivation: Personal Improvement. “Gary” at McDonnell-Douglas. Vertical axis: Defects/Page (0 to 100). Horizontal axis: Inspections 1 to 5 of Gary’s designs, February to April. Majors found per inspection: 80 (~160-240 exist!), 40, 23, 8, 0.

34 Bull 2000: The larger picture. © Bull. Author's comment available in the PowerPoint note.

“While some of the conclusions and inferences are similar to those achieved using intuition or sound engineering judgement, we gained a fact-based understanding of many of the release processes we used, and a measurement that allowed us to predict post-ship defect rates. In the past we have attempted to do this, with limited success. For this release, we were able to set quality goals and measure the results, and predict a post-ship rate with confidence.”

Source: Edward F. Weller, Fellow, Software Process, Bull, "Practical Applications of Statistical Process Control", IEEE. Ed.Weller@bull.com, 13430 N. Black Canyon, Phoenix, AZ 85029 USA. The attached is being published as part of the 10th ICSQ in October 2000.

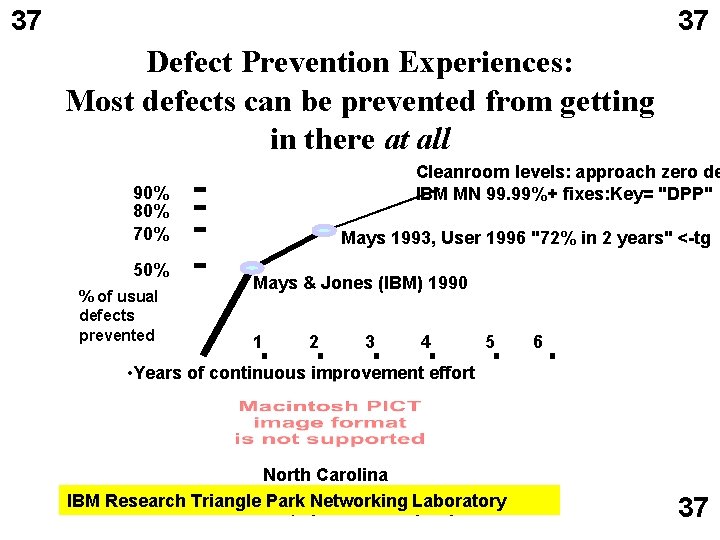

35 Defect Prevention Process (DPP)

- Developed 1985 by Robert Mays (C. Jones), IBM
- Focus on in-process (daily) learning about engineering process weaknesses
- Decentralized analysis and change experiments
  - Based on current experiences
  - (Major defects today, analyzed in Inspections and from Tests)
- Corporate-wide/project-wide changes via Process Owner (local and corporate)
- 50% defect injection reduction in 1 year, up to 95%+ later
- 200 to 2,000 process changes annually: evolution of the processes
- = CMMi Level 5 (you can start next week!)

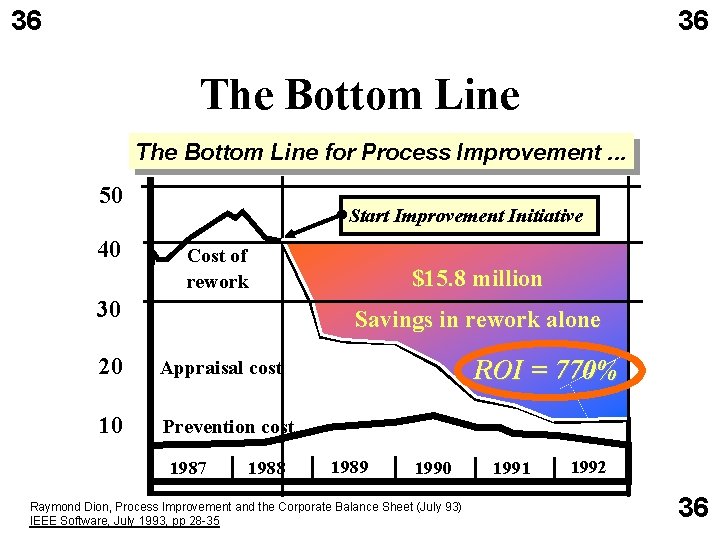

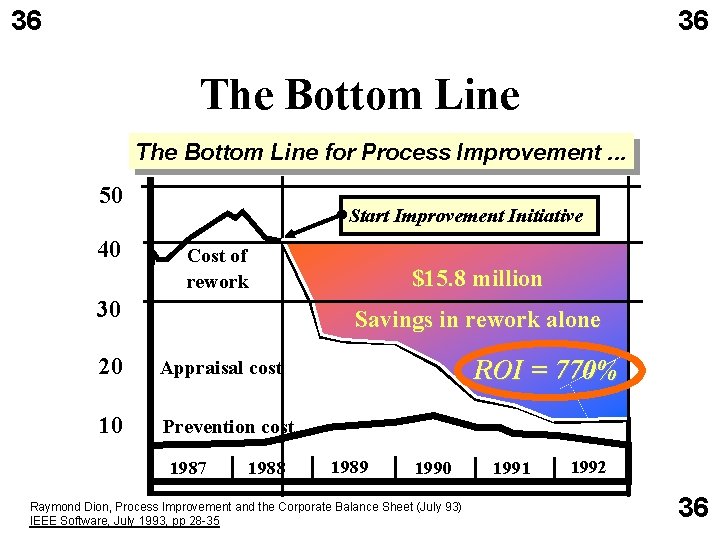

36 The Bottom Line for Process Improvement...

Figure: cost of rework, appraisal cost and prevention cost, 1987 to 1992, with the start of the improvement initiative marked. $15.8 million savings in rework alone. ROI = 770%.

Source: Raymond Dion, "Process Improvement and the Corporate Balance Sheet", IEEE Software, July 1993, pp. 28-35.

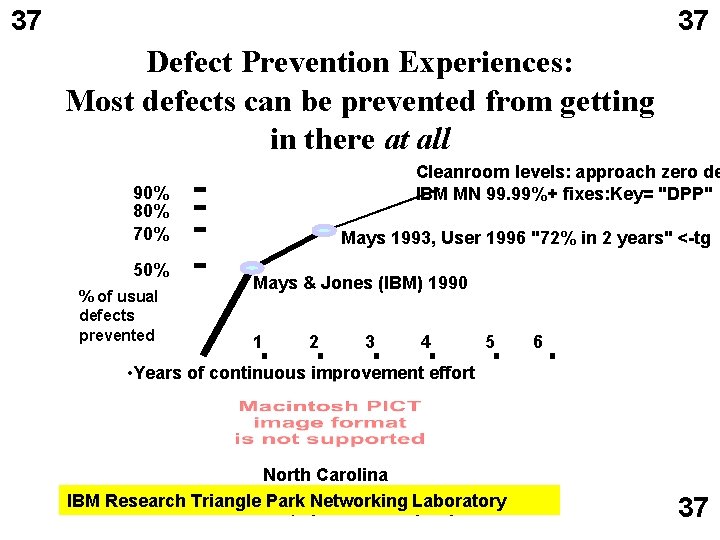

37 Defect Prevention Experiences: Most defects can be prevented from getting in there at all

Figure: % of usual defects prevented (50%, 70%, 80%, 90%) against years of continuous improvement effort (1 to 6). Key = "DPP". Annotations: Cleanroom levels: approach zero de; IBM MN 99.99%+ fixes; Mays 1993; User 1996 "72% in 2 years" <- tg; Mays & Jones (IBM) 1990. Data from North Carolina IBM Research Triangle Park Networking Laboratory.

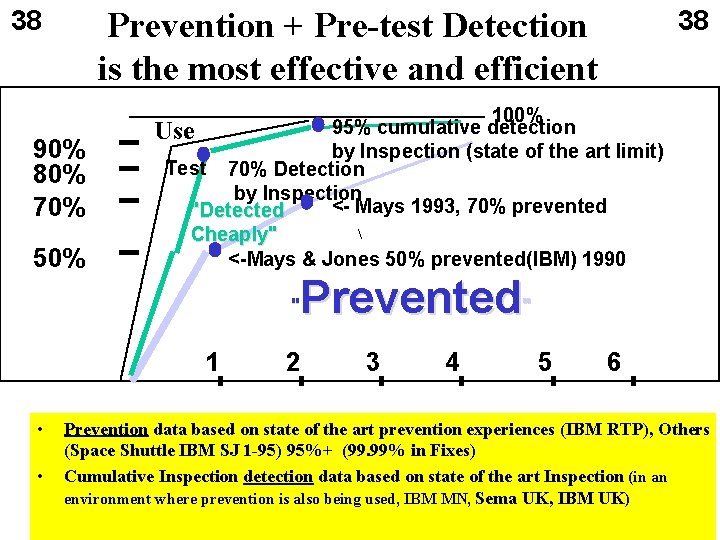

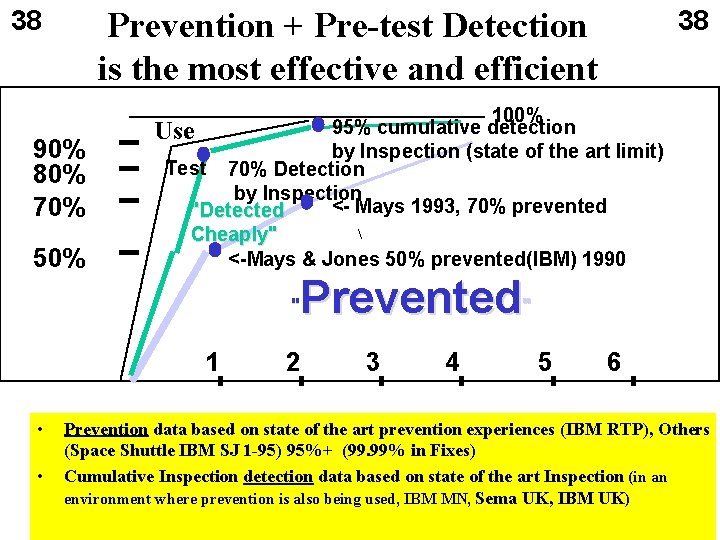

38 Prevention + Pre-test Detection is the most effective and efficient

Figure: cumulative detection (up to 95%, state-of-the-art limit; 100% with use and test) and % prevented (50%, 70%, 80%, 90%) against years 1 to 6. Annotations: 70% detection by Inspection, "Detected Cheaply"; 70% prevented <- Mays 1993; 50% prevented <- Mays & Jones (IBM) 1990.

- Prevention data based on state-of-the-art prevention experiences (IBM RTP); others (Space Shuttle, IBM SJ 1-95) 95%+ (99.99% in fixes)
- Cumulative Inspection detection data based on state-of-the-art Inspection (in an environment where prevention is also being used: IBM MN, Sema UK, IBM UK)

39 Design to (Multiple Scalar) Requirements: DTR 1/2

- ‘You don’t get quality by testing it in, you get it by designing it in’ (old engineering wisdom)
- If you have no clear performance, quality, cost and constraint requirements, designing to meet them is structurally impossible.
- Most software engineers do not have clear, quantified, multi-dimensional requirements, so they can’t design. They don’t know, they don’t care and they don’t discuss the issue. They are programmers (softcrafters), not engineers.

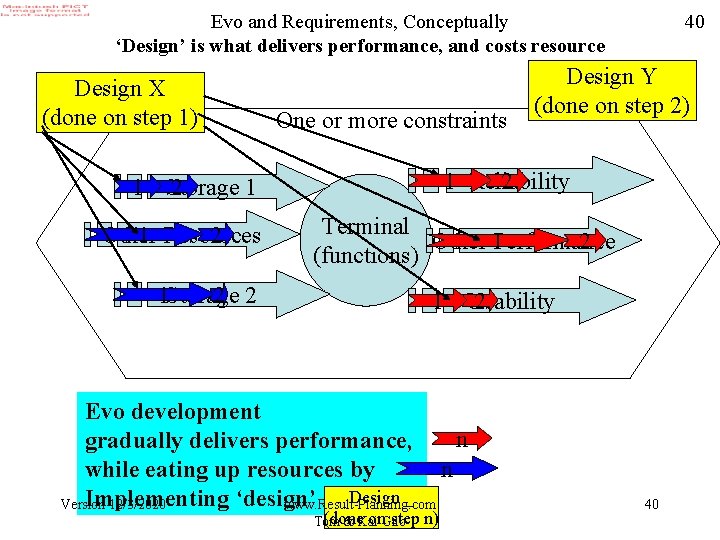

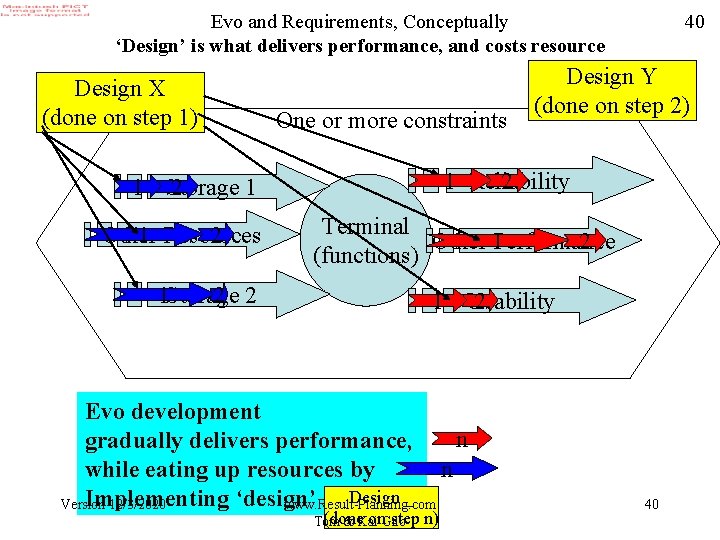

40 Evo and Requirements, Conceptually

‘Design’ is what delivers performance, and costs resource. Evo development gradually delivers performance, while eating up resources by implementing ‘design’.

Figure labels: Design X (done on step 1), Design Y (done on step 2), Design _ (done on step n); Performance (Reliability, Usability, Other); functions; Resources (Storage, Terminal, Other); one or more constraints.

41 Design To (Multiple Scalar) Requirements: DTR 2/2

- Many design ideas must be specified and tried out, in order to deliver desired performance within resource budgets and other constraints
- The numeric effect and cumulative effect of each design must be estimated and confirmed
- The designer(s) are looking to meet their goals with the least costs
- This engineering process is nowhere described in conventional software literature
  - It is a real engineering/architecture process, not a matter of programming.
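Estimating the numeric and cumulative effect of each design, and comparing it with cost, can be sketched as a small table computation. The Python below is a simplified illustration and not the authors' Impact Estimation method: the function names are hypothetical, every number is invented, impacts are read as "% of the way to the goal" and costs as "% of budget".

```python
def cumulative_impact(impacts: dict) -> dict:
    """Sum each requirement's estimated impact over all designs."""
    totals = {}
    for design_impacts in impacts.values():
        for requirement, percent in design_impacts.items():
            totals[requirement] = totals.get(requirement, 0) + percent
    return totals


def impact_per_cost(impacts: dict, costs: dict) -> dict:
    """Total estimated impact of each design divided by its cost."""
    return {design: sum(impacts[design].values()) / costs[design]
            for design in impacts}


# Hypothetical estimates: % of the way to each goal, and % of budget used.
impacts = {
    "Design X": {"Reliability": 50, "Usability": 10},
    "Design Y": {"Reliability": 20, "Usability": 40},
}
costs = {"Design X": 20, "Design Y": 10}

print(cumulative_impact(impacts))
print(impact_per_cost(impacts, costs))
```

In this made-up case the two designs together still leave both goals short of 100%, and Design Y delivers twice the estimated impact per unit of cost, which is the kind of comparison the slide asks designers to make.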

42 Impact Estimation Graphic <- LB

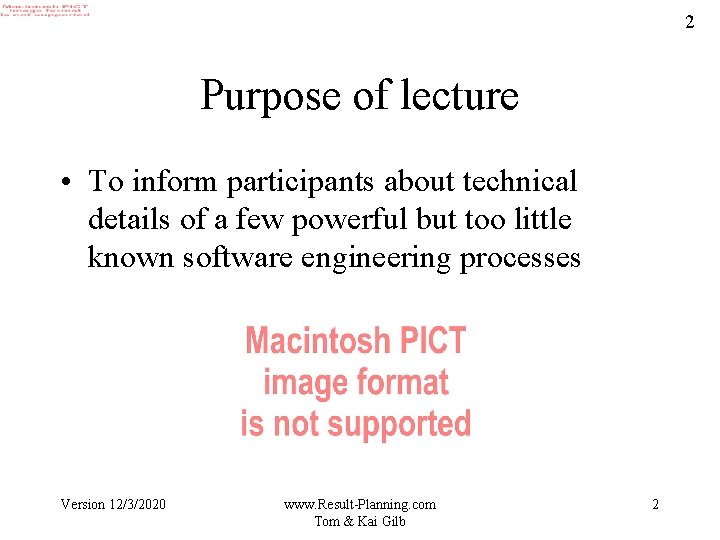

43 Competitive Engineering (CE)

- CE is
  - all the above processes
  - integrated into one meta-process
  - which not only manages the software process,
  - but also the entire product engineering process

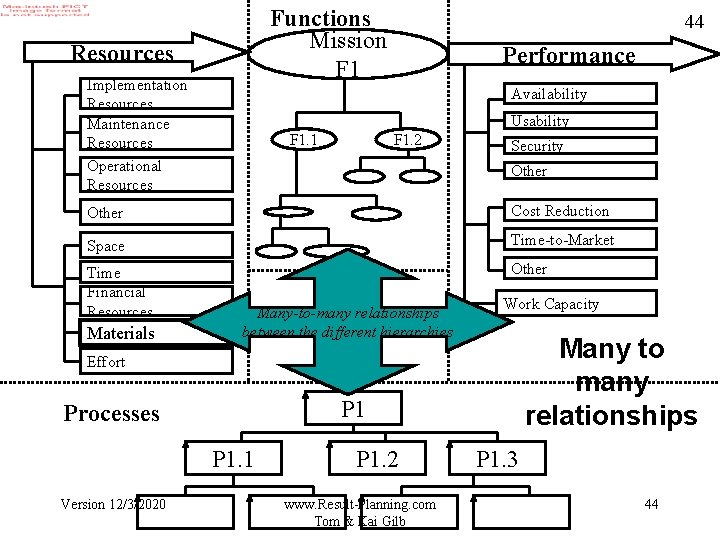

44 Figure: many-to-many relationships between the different hierarchies. Labels: Functions (Mission, F1, F1.1, F1.2); Performance (Availability, Usability, Security, Work Capacity, Cost Reduction, Other); Resources (Implementation Resources, Maintenance Resources, Operational Resources, Financial Resources, Time-to-Market, Time, Space, Effort, Materials, Other); Processes (P1, P1.1, P1.2, P1.3).