
1 Null Blocks in the Memory Hierarchy
Julien Dusser, André Seznec
IRISA/INRIA

2 Null data in the memory hierarchy
For many applications, a large share of the manipulated data is null
• Dynamic phenomenon
  – Loads of null bytes, null words, null blocks
  – Wasted bandwidth
• Static phenomenon at all levels of the hierarchy
  – Null bytes, null words, null blocks
  – Wasted space

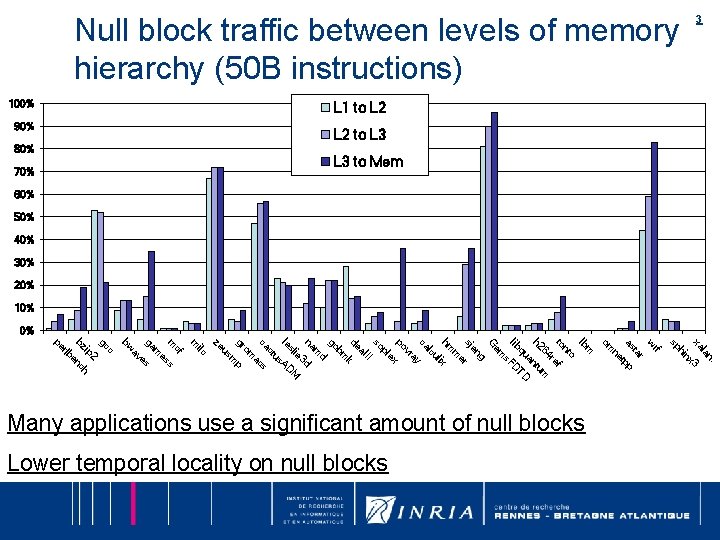

3 Null block traffic between levels of the memory hierarchy (50 B instructions)
[Figure: per-benchmark share of null blocks in the L1 to L2, L2 to L3 and L3 to memory traffic (0–100%), SPEC 2006 benchmarks.]
• Many applications use a significant amount of null blocks
• Lower temporal locality on null blocks

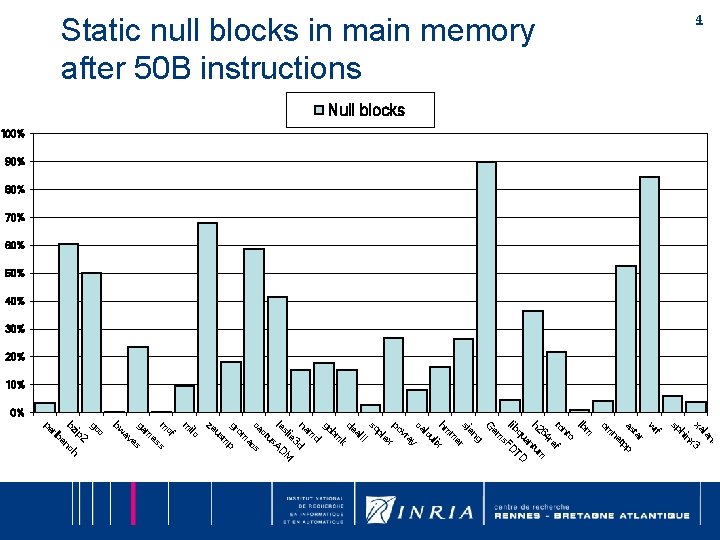

4 Static null blocks in main memory after 50 B instructions
[Figure: per-benchmark fraction of main-memory blocks that are null (0–100%).]

5 Observations
Significant amount of null blocks
• For approximately half of the applications
Lower temporal locality than non-null blocks
• Often high spatial locality of null blocks
• Different temporal locality at different levels
Waste of cache and memory space
• Null blocks occupy a lot of space

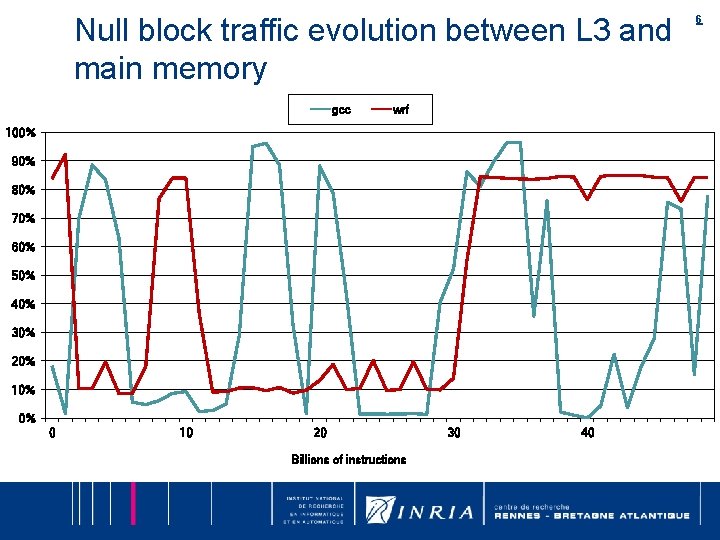

6 Null block traffic evolution between L3 and main memory: gcc, wrf
[Figure: null block traffic (0–100%) versus billions of instructions executed (0–40).]

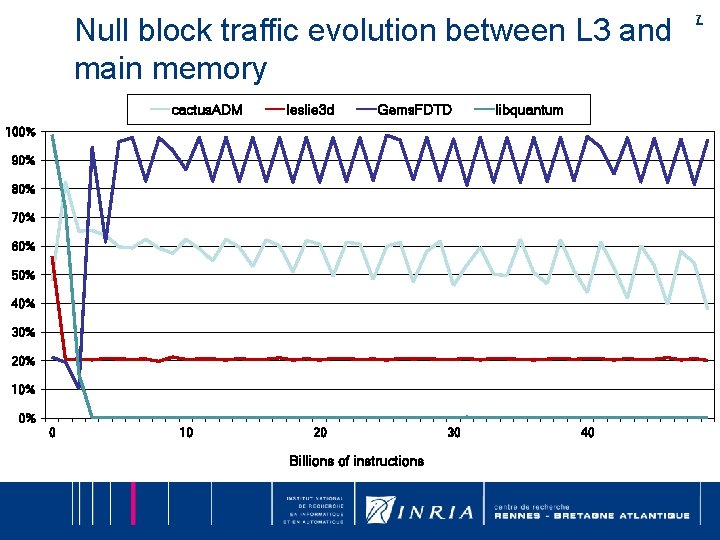

7 Null block traffic evolution between L3 and main memory: cactusADM, leslie3d, GemsFDTD, libquantum
[Figure: null block traffic (0–100%) versus billions of instructions executed (0–40).]

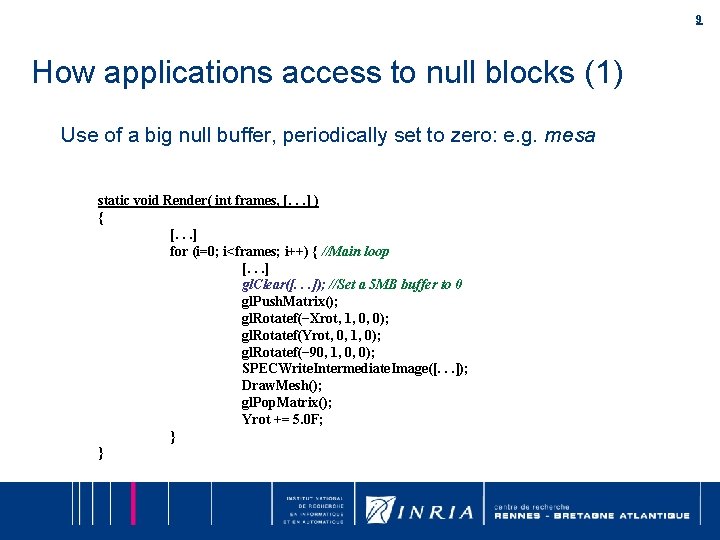

8 How applications access null blocks (0)
It is not just the initialization phase of the program.

9 How applications access null blocks (1)
Use of a big null buffer, periodically set to zero, e.g. mesa:

```c
static void Render(int frames, [...])
{
    [...]
    for (i = 0; i < frames; i++) {   // Main loop
        [...]
        glClear([...]);              // Set a 5 MB buffer to 0
        glPushMatrix();
        glRotatef(-Xrot, 1, 0, 0);
        glRotatef(Yrot, 0, 1, 0);
        glRotatef(-90, 1, 0, 0);
        SPECWriteIntermediateImage([...]);
        DrawMesh();
        glPopMatrix();
        Yrot += 5.0F;
    }
}
```

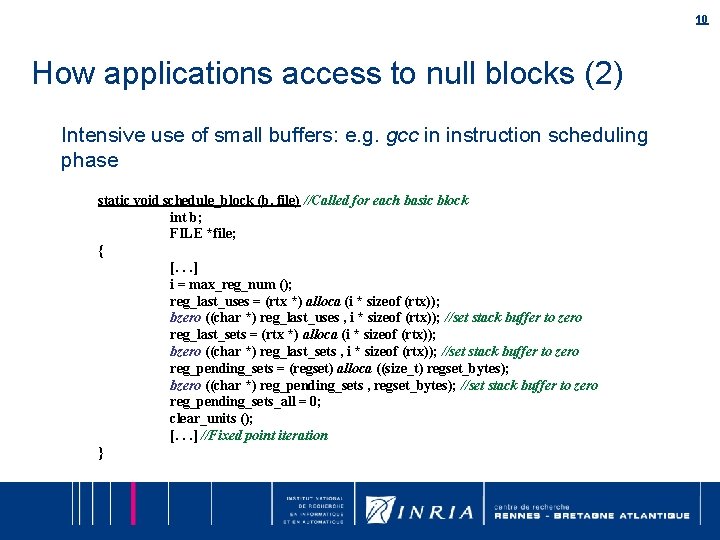

10 How applications access null blocks (2)
Intensive use of small buffers, e.g. gcc in the instruction scheduling phase:

```c
static void
schedule_block (b, file)   // Called for each basic block
     int b;
     FILE *file;
{
  [...]
  i = max_reg_num ();
  reg_last_uses = (rtx *) alloca (i * sizeof (rtx));
  bzero ((char *) reg_last_uses, i * sizeof (rtx));       // Set stack buffer to zero
  reg_last_sets = (rtx *) alloca (i * sizeof (rtx));
  bzero ((char *) reg_last_sets, i * sizeof (rtx));       // Set stack buffer to zero
  reg_pending_sets = (regset) alloca ((size_t) regset_bytes);
  bzero ((char *) reg_pending_sets, regset_bytes);        // Set stack buffer to zero
  reg_pending_sets_all = 0;
  clear_units ();
  [...]                                                   // Fixed-point iteration
}
```

11 How applications access null blocks (3)
Intensive reuse of (almost) null data structures without updating them
• zeusmp, cactusADM, leslie3d, GemsFDTD

12 EXPLOITING NULL BLOCKS IN THE MEMORY HIERARCHY

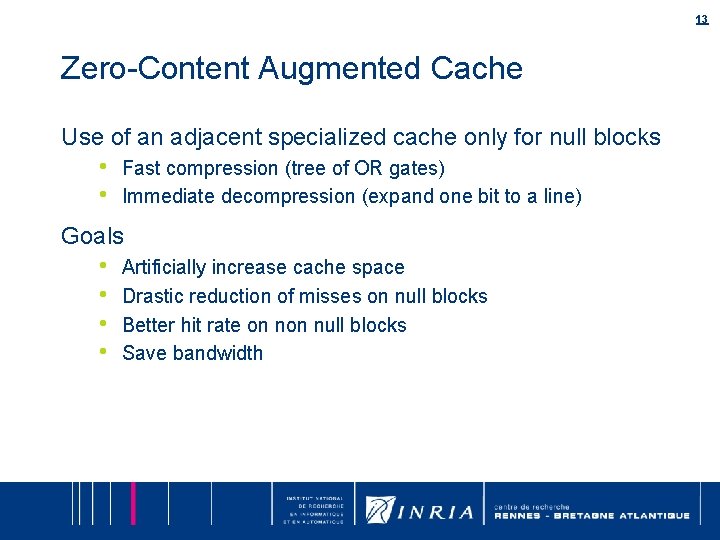

13 Zero-Content Augmented Cache
Use of an adjacent specialized cache dedicated to null blocks
• Fast compression (tree of OR gates)
• Immediate decompression (expand one bit to a line)
Goals
• Artificially increase cache space
• Drastically reduce misses on null blocks
• Better hit rate on null blocks
• Save bandwidth
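A minimal C sketch of the two operations above, assuming 64-byte lines handled as eight 64-bit words; the function names are hypothetical. The zero detector is written as a pairwise OR reduction so that each pass of the outer loop corresponds to one level of the OR-gate tree, and "decompression" amounts to filling the line with zeros.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define LINE_BYTES 64                   /* assumed line size */
#define LINE_WORDS (LINE_BYTES / 8)     /* eight 64-bit words */

static_assert((LINE_WORDS & (LINE_WORDS - 1)) == 0,
              "the OR tree assumes a power-of-two word count");

/* Zero detector: OR-reduce the line pairwise, one tree level per pass.
 * The line is null iff the root of the OR tree is 0. */
static bool zero_detect(const uint64_t line[LINE_WORDS])
{
    uint64_t level[LINE_WORDS];
    memcpy(level, line, sizeof level);
    for (size_t width = LINE_WORDS; width > 1; width /= 2)
        for (size_t i = 0; i < width / 2; i++)
            level[i] = level[2 * i] | level[2 * i + 1];
    return level[0] == 0;
}

/* Decompression: a ZC hit carries no data, so expanding the single
 * "null" bit just produces a line of zeros. */
static void expand_null(uint64_t out[LINE_WORDS])
{
    memset(out, 0, LINE_BYTES);
}
```

With eight words the tree has three levels, which is why the detection fits easily in a cache access.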

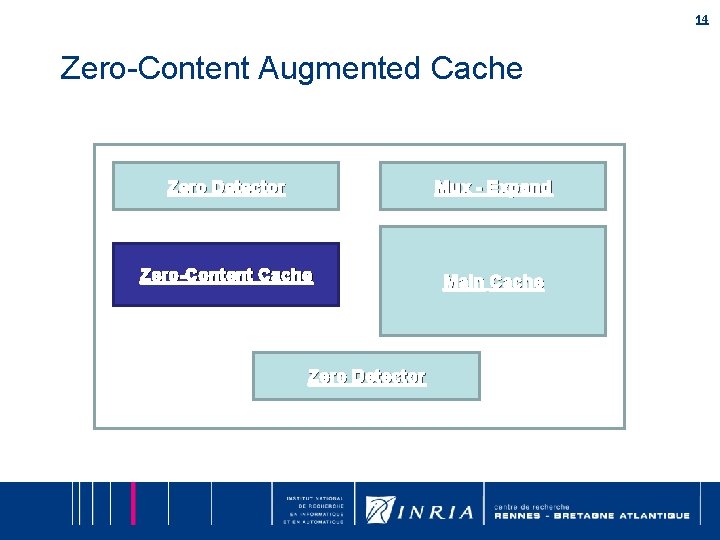

14 Zero-Content Augmented Cache
[Diagram: zero-content cache alongside the main cache, with two zero detectors and a mux/expand stage.]

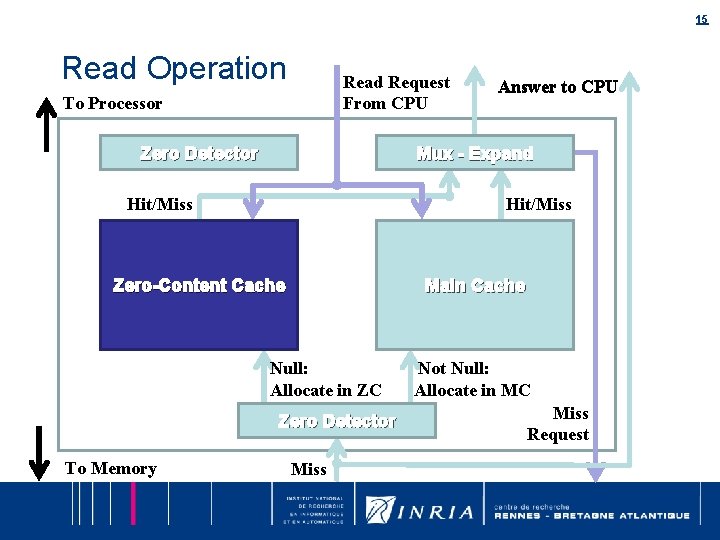

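15 Read Operation
[Diagram: a read request from the CPU is looked up in the zero-content cache and in the main cache; a zero-content cache hit is expanded to a null line by the mux/expand stage.]
• Miss: the request is sent to memory
• The answer passes through the zero detector:
  – Null: allocate in the zero-content cache (ZC)
  – Not null: allocate in the main cache (MC)
• Answer to the CPU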
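The read flow can be sketched as a toy C model, assuming direct-mapped main and zero-content caches indexed by block number and a small array standing in for memory. All names and sizes are illustrative, and this ZC keeps one tag per block rather than the sectored layout described later. The point it shows: a fetched line is allocated in exactly one of the two caches, depending on the zero detector.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define BLOCK_BYTES 64
#define MEM_BLOCKS  1024   /* toy backing memory */
#define MC_LINES    16     /* toy direct-mapped main cache */
#define ZC_LINES    64     /* toy direct-mapped zero-content cache */

typedef struct { uint8_t bytes[BLOCK_BYTES]; } block_t;

static block_t memory[MEM_BLOCKS];
static struct { bool valid; uint32_t blk; block_t data; } mc[MC_LINES];
static struct { bool valid; uint32_t blk; } zc[ZC_LINES];  /* no data stored */
static unsigned mem_reads;                                 /* memory fetches */

static bool is_null(const block_t *b)
{
    uint8_t acc = 0;
    for (int i = 0; i < BLOCK_BYTES; i++)
        acc |= b->bytes[i];
    return acc == 0;
}

/* Read block number blk into *out following the ZCA read flow. */
static void zca_read(uint32_t blk, block_t *out)
{
    assert(blk < MEM_BLOCKS);
    unsigned zi = blk % ZC_LINES, mi = blk % MC_LINES;

    if (zc[zi].valid && zc[zi].blk == blk) {   /* ZC hit: expand to zeros */
        memset(out, 0, sizeof *out);
        return;
    }
    if (mc[mi].valid && mc[mi].blk == blk) {   /* main-cache hit */
        *out = mc[mi].data;
        return;
    }
    block_t fetched = memory[blk];             /* miss in both caches */
    mem_reads++;
    if (is_null(&fetched)) {                   /* null: allocate in ZC only */
        zc[zi].valid = true;
        zc[zi].blk = blk;
    } else {                                   /* not null: allocate in MC */
        mc[mi].valid = true;
        mc[mi].blk = blk;
        mc[mi].data = fetched;
    }
    *out = fetched;
}
```

In this model a null block is served from the ZC on its second access without ever occupying a main-cache line.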

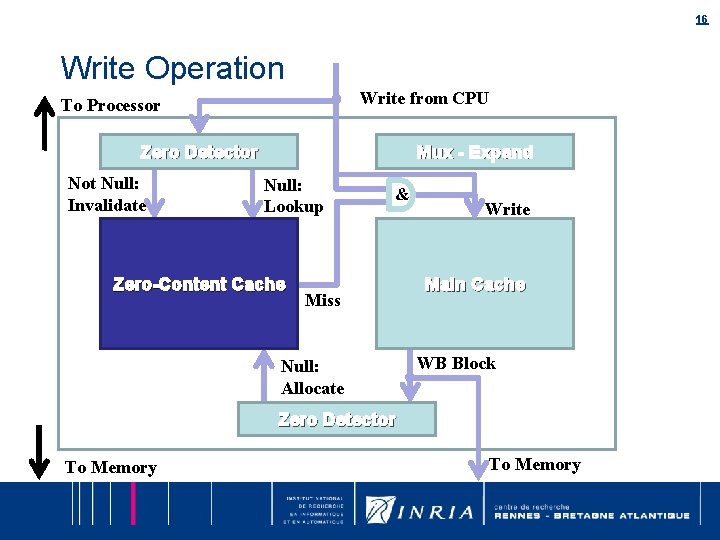

16 Write Operation
[Diagram: a write from the CPU is performed in the main cache; zero detectors on the write path and on write-back (WB) blocks going to memory steer the zero-content cache. Labels: "Not null: invalidate"; "Null: lookup zero-content cache & 1"; "Miss null: allocate".]

17 Trivial Cache Coherency on ZC
The ZC never owns a dirty block
• Dirty data is allocated in the main cache
• Can easily be used in a multicore
  – Coherency is handled by the main cache
  – Data in the ZC only needs to be invalidated
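A sketch of the write side under the same kind of toy assumptions (direct-mapped caches indexed by block number, hypothetical names): a CPU write always allocates the line, dirty, in the main cache and merely clears any ZC entry for it, and a coherence invalidation from another core only drops the ZC's clean copy. The main cache's own coherence protocol and zero detection on write-backs are not modeled.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define BLOCK_BYTES 64
#define MEM_BLOCKS  1024
#define MC_LINES    16
#define ZC_LINES    64

typedef struct { uint8_t bytes[BLOCK_BYTES]; } block_t;

static block_t memory[MEM_BLOCKS];
static struct { bool valid, dirty; uint32_t blk; block_t data; } mc[MC_LINES];
static struct { bool valid; uint32_t blk; } zc[ZC_LINES];

/* Drop the ZC entry for blk if present: the only action the ZC ever needs. */
static void zc_invalidate(uint32_t blk)
{
    unsigned zi = blk % ZC_LINES;
    if (zc[zi].valid && zc[zi].blk == blk)
        zc[zi].valid = false;
}

/* CPU write: dirty data always lives in the main cache, never in the ZC. */
static void zca_write(uint32_t blk, const block_t *data)
{
    assert(blk < MEM_BLOCKS);
    unsigned mi = blk % MC_LINES;
    zc_invalidate(blk);
    if (mc[mi].valid && mc[mi].dirty && mc[mi].blk != blk)
        memory[mc[mi].blk] = mc[mi].data;      /* write back the victim */
    mc[mi].valid = true;
    mc[mi].dirty = true;
    mc[mi].blk = blk;
    mc[mi].data = *data;
}

/* Invalidation request from another core: the main cache runs the protocol;
 * on the ZC side a clean null copy can simply be dropped. */
static void zca_remote_invalidate(uint32_t blk)
{
    zc_invalidate(blk);
}
```

Because the ZC holds no dirty state, it needs no coherence bits and never has to write anything back.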

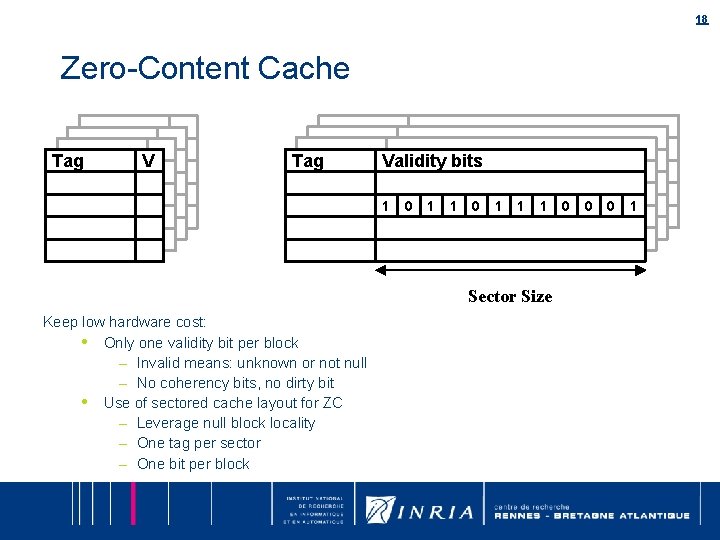

18 Zero-Content Cache
[Diagram: a ZC entry holds one tag per sector and a vector of validity bits, one per block of the sector.]
Keep hardware cost low:
• Only one validity bit per block
  – Invalid means: unknown or not null
  – No coherency bits, no dirty bit
• Sectored cache layout for the ZC
  – Leverage null-block locality
  – One tag per sector
  – One bit per block
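One way to picture a sectored ZC entry in C, assuming 4 KB sectors of 64-byte blocks (so the 64 validity bits fit in one `uint64_t`) and a direct-mapped array of 256 entries; both sizes and all names are illustrative. A cleared bit means "unknown or not null", so a ZC miss simply falls through to the main cache.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define BLOCK_BYTES       64u
#define SECTOR_BYTES      4096u                        /* assumed sector size */
#define BLOCKS_PER_SECTOR (SECTOR_BYTES / BLOCK_BYTES) /* 64 */
#define ZC_SETS           256u                         /* assumed, direct-mapped */

static_assert(BLOCKS_PER_SECTOR <= 64, "validity bits must fit in a uint64_t");

typedef struct {
    bool     tag_valid;
    uint64_t tag;        /* one tag per sector */
    uint64_t null_bits;  /* one bit per block: 1 = known null */
} zc_entry_t;

static zc_entry_t zc[ZC_SETS];

static zc_entry_t *zc_slot(uint64_t addr, uint64_t *tag, unsigned *bit)
{
    uint64_t sector = addr / SECTOR_BYTES;
    *tag = sector / ZC_SETS;
    *bit = (unsigned)((addr % SECTOR_BYTES) / BLOCK_BYTES);
    return &zc[sector % ZC_SETS];
}

/* Hit only if the sector tag matches AND the block's bit is set. */
static bool zc_hit(uint64_t addr)
{
    uint64_t tag;
    unsigned bit;
    const zc_entry_t *e = zc_slot(addr, &tag, &bit);
    return e->tag_valid && e->tag == tag && ((e->null_bits >> bit) & 1u);
}

/* Record a null block; a tag conflict replaces the whole sector. */
static void zc_record_null(uint64_t addr)
{
    uint64_t tag;
    unsigned bit;
    zc_entry_t *e = zc_slot(addr, &tag, &bit);
    if (!e->tag_valid || e->tag != tag) {
        e->tag_valid = true;
        e->tag = tag;
        e->null_bits = 0;
    }
    e->null_bits |= UINT64_C(1) << bit;
}

/* Invalidation clears a single bit; the sector tag stays. */
static void zc_clear(uint64_t addr)
{
    uint64_t tag;
    unsigned bit;
    zc_entry_t *e = zc_slot(addr, &tag, &bit);
    if (e->tag_valid && e->tag == tag)
        e->null_bits &= ~(UINT64_C(1) << bit);
}
```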

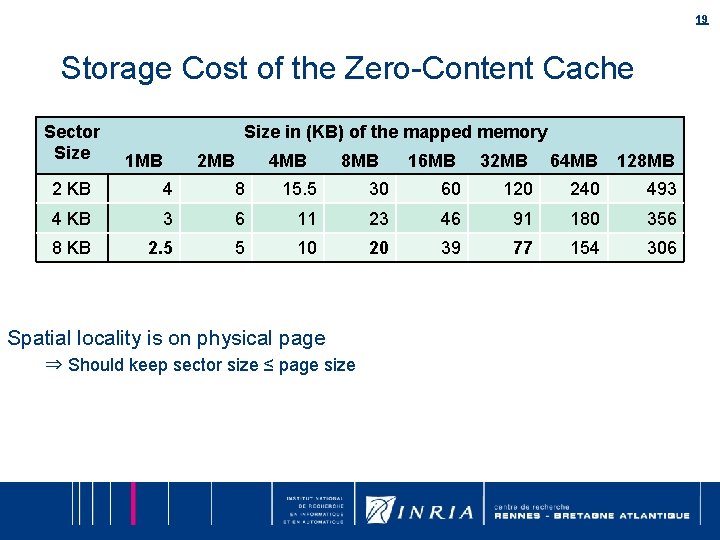

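19 Storage Cost of the Zero-Content Cache
Storage (in KB) as a function of sector size and of the amount of mapped memory:

| Sector size | 1 MB | 2 MB | 4 MB | 8 MB | 16 MB | 32 MB | 64 MB | 128 MB |
|---|---|---|---|---|---|---|---|---|
| 2 KB | 4 | 8 | 15.5 | 30 | 60 | 120 | 240 | 493 |
| 4 KB | 3 | 6 | 11 | 23 | 46 | 91 | 180 | 356 |
| 8 KB | 2.5 | 5 | 10 | 20 | 39 | 77 | 154 | 306 |

Spatial locality is on physical pages ⇒ sector size should stay ≤ page size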
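The table can be approximated with a back-of-the-envelope formula: storage = (number of sectors) × (tag bits + one validity bit per 64 B block). The sketch below assumes a fixed 32-bit tag per sector, a figure that is not given on the slide; the function name is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define BLOCK_BYTES 64u
#define TAG_BITS    32u   /* assumed per-sector tag width */

/* Estimated ZC storage in bytes for a given mapped size and sector size. */
static uint64_t zc_storage_bytes(uint64_t mapped_bytes, uint64_t sector_bytes)
{
    assert(sector_bytes % BLOCK_BYTES == 0 && mapped_bytes % sector_bytes == 0);
    uint64_t sectors = mapped_bytes / sector_bytes;
    uint64_t bits_per_sector = TAG_BITS + sector_bytes / BLOCK_BYTES;
    return sectors * bits_per_sector / 8;
}
```

Under this assumption the estimate matches the 1 MB column exactly (4, 3 and 2.5 KB) and slightly overestimates larger configurations, e.g. 512 KB instead of 493 KB for 128 MB with 2 KB sectors, so the table evidently charges somewhat less per sector there.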

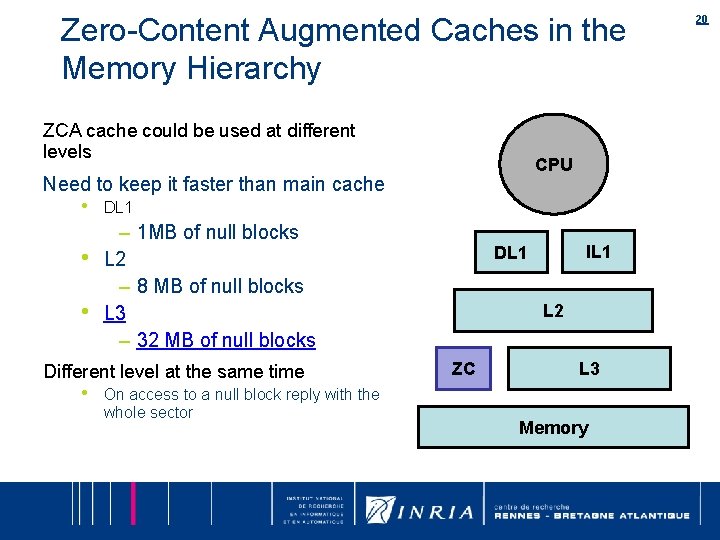

20 Zero-Content Augmented Caches in the Memory Hierarchy
A ZCA cache could be used at different levels
• Need to keep it faster than the main cache
  – DL1: 1 MB of null blocks
  – L2: 8 MB of null blocks
  – L3: 32 MB of null blocks
Several levels at the same time
• On access to a null block, reply with the whole sector
[Diagram: CPU, IL1, DL1, L2, L3 and memory, with a zero-content cache (ZC) added to the hierarchy.]

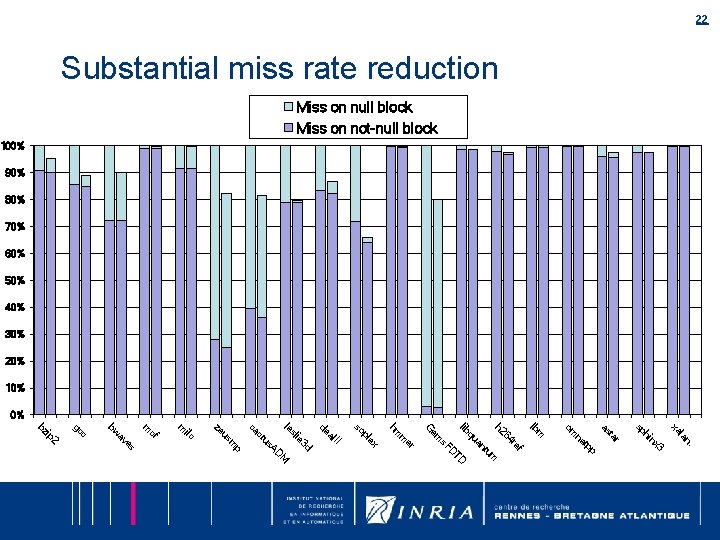

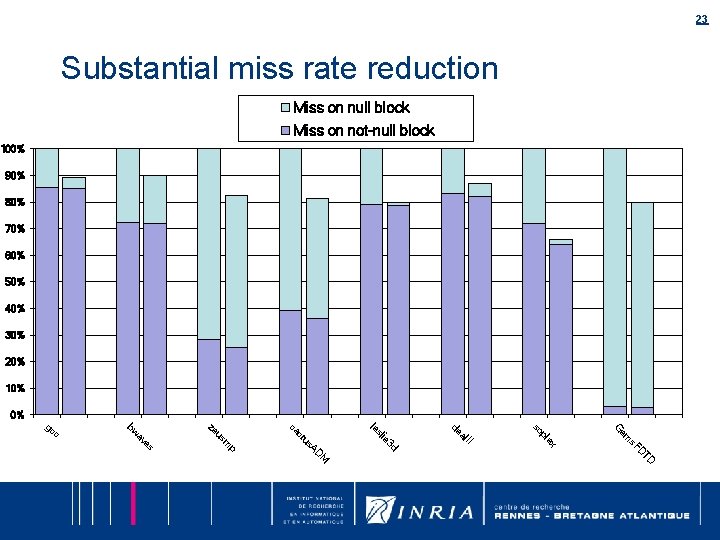

21 ZCA performance
4-way out-of-order processor, ZCA on the L3 cache, up to 32 MB of null blocks
Performance improvement
• From 0% to 11%
  – Except soplex: 54%

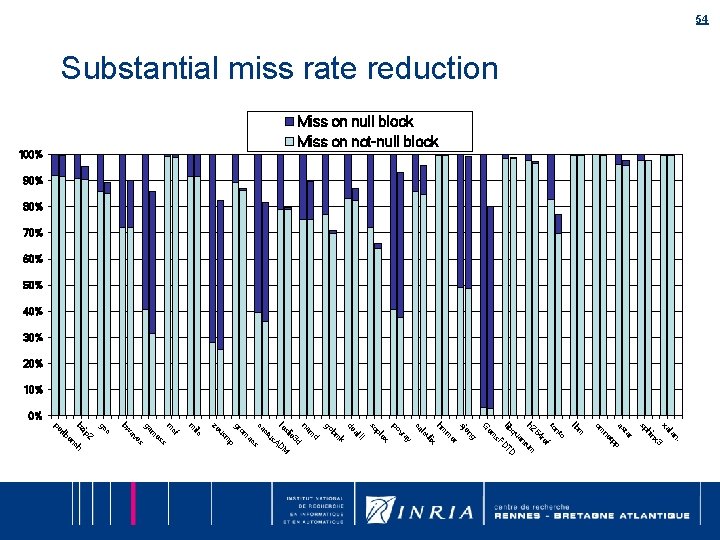

22 Substantial miss rate reduction
[Figure: per-benchmark misses split into "Miss on null block" and "Miss on not-null block" (0–100%).]

23 Substantial miss rate reduction
[Figure: same breakdown ("Miss on null block" / "Miss on not-null block", 0–100%) for GemsFDTD, soplex, dealII, leslie3d, cactusADM, zeusmp, bwaves and gcc.]

24 MAIN MEMORY: COMPRESSING NULL BLOCKS

25 Why compress the memory?
Main memory size grows exponentially
There are always applications that do not fit in main memory
• Page swapping may kill performance
Memory compression artificially enlarges the main memory

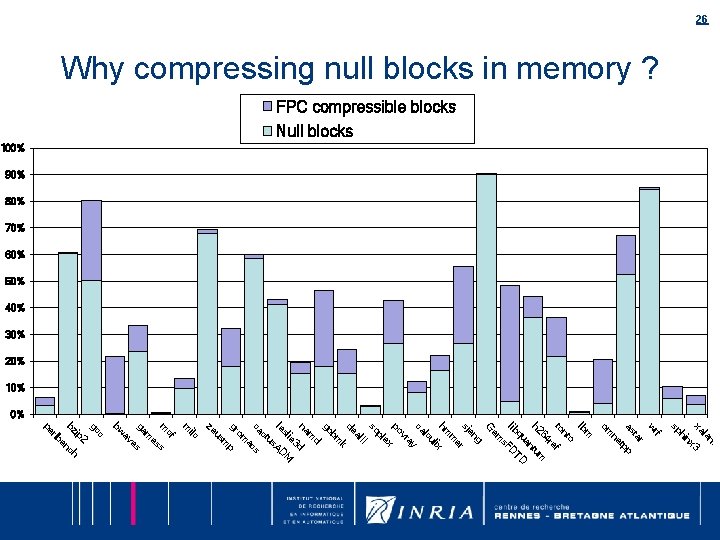

26 Why compress null blocks in memory?
[Figure: per-benchmark fraction of memory blocks that are null and that are FPC-compressible (0–100%).]

27 Why so few compressed memory proposals?
Compression/decompression adds some latency
• Might cause some performance loss on applications that fit in main memory
  – Not a good marketing argument!
Some complexity in the memory system

28 Why compress null blocks in memory? (2)
Combined with ZCA, it may increase performance:
• Access more data in parallel
  – Also saves power
• Transfer more data in parallel
  – Better use of a scarce shared resource: the memory bandwidth
  – Reduce memory latency: prefetch blocks

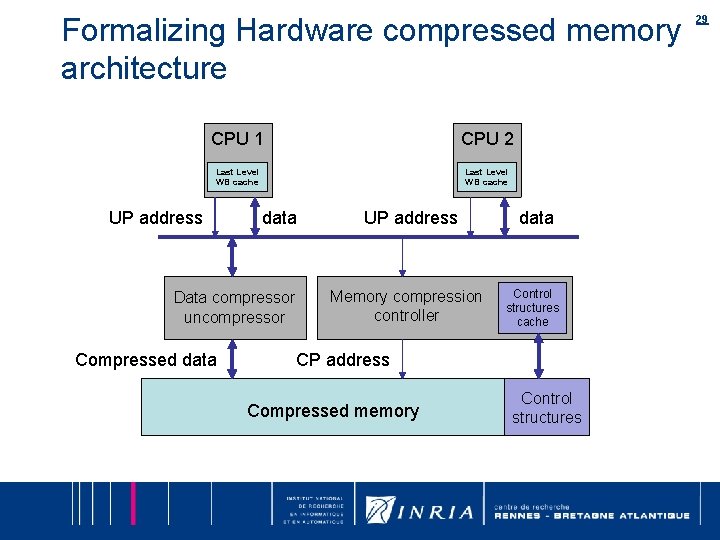

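29 Formalizing hardware compressed memory architecture
[Diagram: CPU 1 and CPU 2 share a last-level write-back cache that exchanges uncompressed physical (UP) addresses and data with a data compressor/uncompressor and a memory compression controller; the controller, with its control-structures cache, issues compressed physical (CP) addresses to the compressed memory, which also holds the control structures.]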

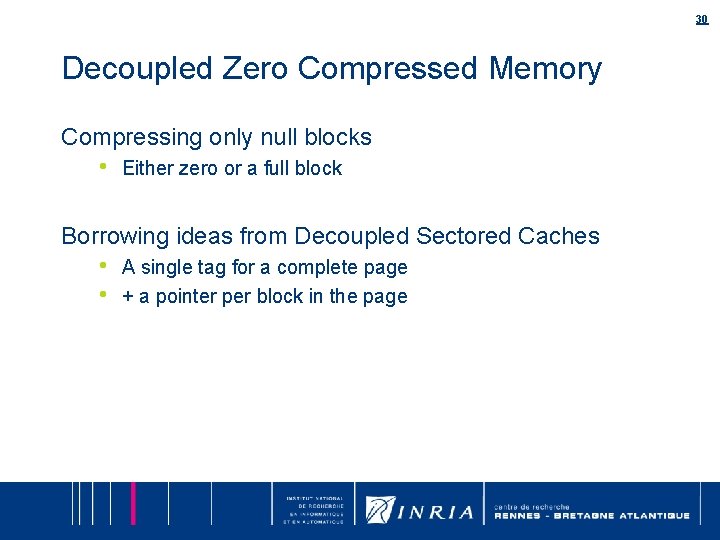

30 Decoupled Zero-Compressed Memory
Compress only null blocks
• A block is either zero or a full block
Borrow ideas from decoupled sectored caches
• A single tag for a complete page
• + a pointer per block in the page

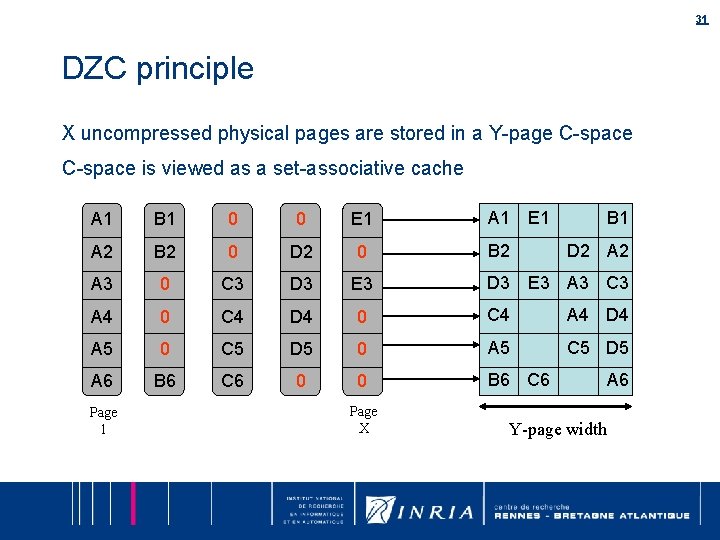

31 DZC principle
X uncompressed physical pages are stored in a Y-page C-space
The C-space is viewed as a set-associative cache
[Diagram: blocks of pages 1 to X (A1, B1, ..., with null blocks shown as 0) packed into a C-space that is Y pages wide; null blocks occupy no space.]

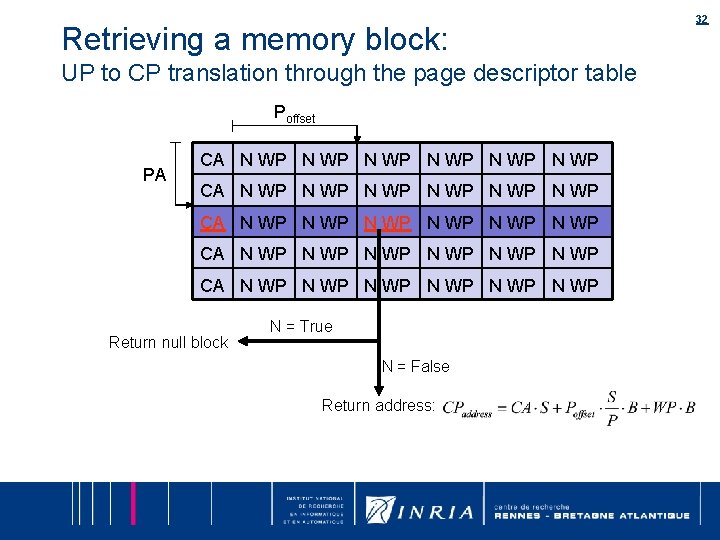

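32 Retrieving a memory block: UP-to-CP translation through the page descriptor table
[Diagram: the page address (PA) selects a page descriptor holding the C-space address (CA) and, per block, a null bit (N) and a way pointer (WP); the page offset (Poffset) selects the block entry. N = true: return a null block. N = false: return the address in the C-space.]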

33 Read access scenarios
Read a null block:
• Read the page descriptor, return a null block
Read a non-null block:
• Read the page descriptor, retrieve the address in the C-space, read the memory
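The translation and the two read scenarios can be sketched as follows, using the parameters from the overhead slide (8 KB pages, 64 B blocks, 4 MB C-space, hence 512 possible ways). The address arithmetic is an assumption consistent with the DZC principle slide: block Poffset of every page lives in row Poffset of the C-space, and the way pointer selects which of the Y page frames holds it. Type and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define BLOCK_BYTES     64u
#define PAGE_BYTES      8192u
#define BLOCKS_PER_PAGE (PAGE_BYTES / BLOCK_BYTES)   /* 128 */
#define CSPACE_BYTES    (4u << 20)                   /* 4 MB C-space */

typedef struct {
    uint64_t cspace_addr;             /* CA: base address of the page's C-space */
    bool     null[BLOCKS_PER_PAGE];   /* N bit per block */
    uint16_t way[BLOCKS_PER_PAGE];    /* WP per block (9 bits suffice here) */
} page_desc_t;

/* Returns false for a null block (nothing is read from memory, the
 * controller answers with zeros); otherwise stores the C-space address. */
static bool dzc_translate(const page_desc_t *pd, unsigned poffset, uint64_t *cp_addr)
{
    assert(poffset < BLOCKS_PER_PAGE);
    if (pd->null[poffset])
        return false;
    assert(pd->way[poffset] < CSPACE_BYTES / PAGE_BYTES);
    *cp_addr = pd->cspace_addr
             + (uint64_t)pd->way[poffset] * PAGE_BYTES    /* chosen page frame */
             + (uint64_t)poffset * BLOCK_BYTES;           /* row = block offset */
    return true;
}
```

Both scenarios read the page descriptor; only the non-null case goes on to access the C-space.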

34 Write access scenarios
Writing a null block over an already null block
• Not an issue
Writing a non-null block over an already non-null block
• Not an issue
A status change is where the problems arise

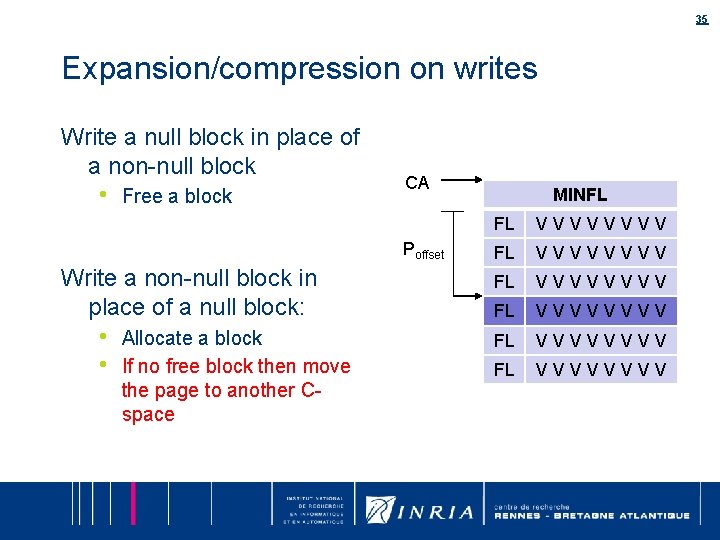

35 Expansion/compression on writes
Writing a null block in place of a non-null block:
• Free a block
Writing a non-null block in place of a null block:
• Allocate a block
• If there is no free block, move the page to another C-space
[Diagram: C-space descriptor addressed by CA and Poffset, with per-block valid bits (V) and labels MINFL and FL.]
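The expansion/compression bookkeeping might look like this, assuming the C-space descriptor is a bitmap with one bit per block (as on the overhead slide) and a simple first-fit search of the row for a free way; the actual allocation policy is not specified here, and all names are hypothetical. `DZC_MOVE_PAGE` signals the case where the page has to be moved to another C-space.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define BLOCKS_PER_PAGE 128u   /* 8 KB page / 64 B blocks */
#define WAYS            512u   /* 4 MB C-space / 8 KB pages */

typedef struct {               /* C-space descriptor: 1 bit per block */
    uint64_t used[BLOCKS_PER_PAGE][WAYS / 64];
} cspace_t;

typedef struct {               /* P-page descriptor (C-space pointer omitted) */
    bool     null[BLOCKS_PER_PAGE];
    uint16_t way[BLOCKS_PER_PAGE];
} page_desc_t;

typedef enum { DZC_OK, DZC_MOVE_PAGE } dzc_status_t;

static void set_used(cspace_t *cs, unsigned row, unsigned way, bool v)
{
    uint64_t mask = UINT64_C(1) << (way % 64);
    if (v)
        cs->used[row][way / 64] |= mask;
    else
        cs->used[row][way / 64] &= ~mask;
}

/* Update the descriptors when block poffset is written with data whose
 * null status is now_null. */
static dzc_status_t dzc_on_write(cspace_t *cs, page_desc_t *pd,
                                 unsigned poffset, bool now_null)
{
    assert(poffset < BLOCKS_PER_PAGE);
    if (pd->null[poffset] == now_null)
        return DZC_OK;                         /* no status change */
    if (now_null) {                            /* compression: free the block */
        set_used(cs, poffset, pd->way[poffset], false);
        pd->null[poffset] = true;
        return DZC_OK;
    }
    for (unsigned w = 0; w < WAYS; w++) {      /* expansion: first free way */
        if (!((cs->used[poffset][w / 64] >> (w % 64)) & 1u)) {
            set_used(cs, poffset, w, true);
            pd->way[poffset] = (uint16_t)w;
            pd->null[poffset] = false;
            return DZC_OK;
        }
    }
    return DZC_MOVE_PAGE;                      /* row full */
}
```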

36 Control structure overhead
P-page descriptor:
• C-space pointer
• A way pointer and a null bit per block
C-space descriptor:
• 1 bit per block
8 KB pages, 64 B blocks, 4 MB C-space
• 3.2% memory overhead

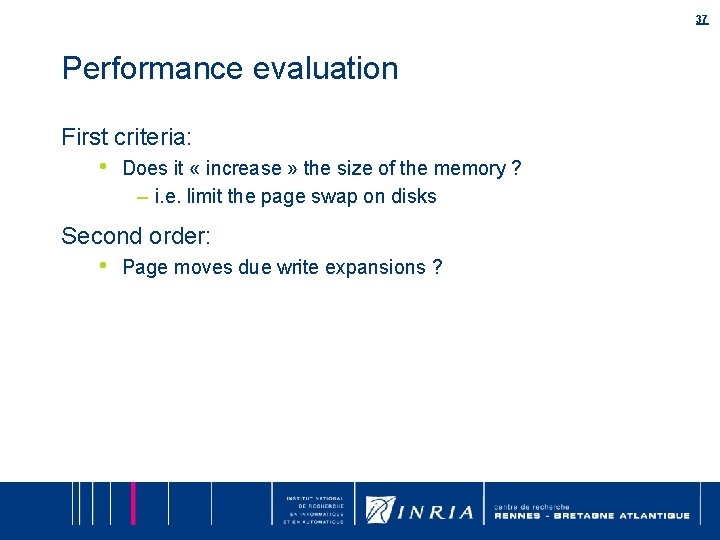

37 Performance evaluation
First criterion:
• Does it "increase" the size of the memory?
  – i.e., does it limit page swapping to disk?
Second order:
• Page moves due to write expansions?

38 Evaluation framework
Full-system simulation with SIMICS
First 50 billion instructions of SPEC 2006
Varying main memory sizes

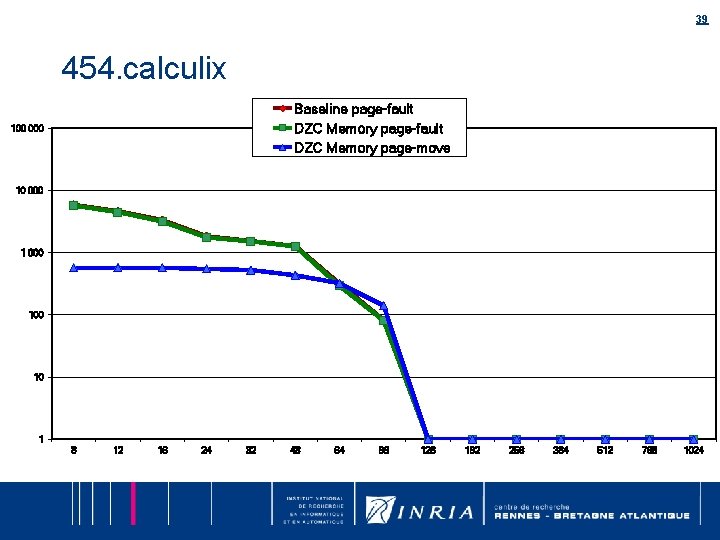

39 454.calculix
[Figure: legend "Baseline page-fault", "DZC Memory page-move"; log-scale y-axis (1 to 100 000) versus main memory size (8 to 1024).]

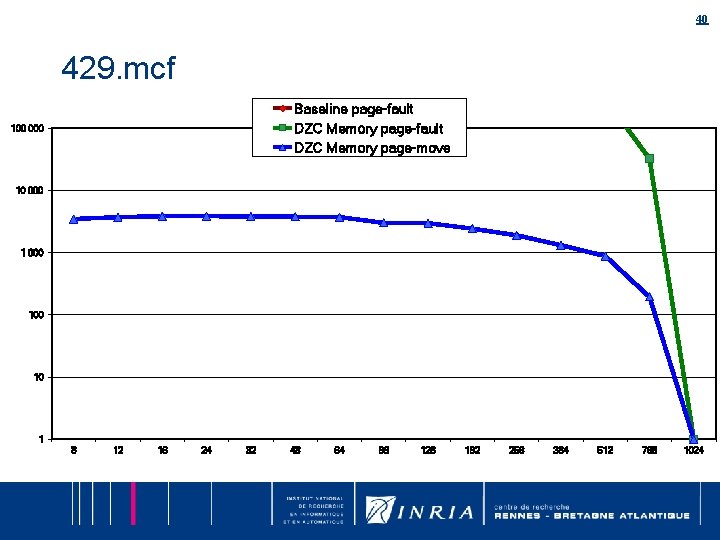

40 429.mcf
[Figure: legend "Baseline page-fault", "DZC Memory page-move"; log-scale y-axis (1 to 100 000) versus main memory size (8 to 1024).]

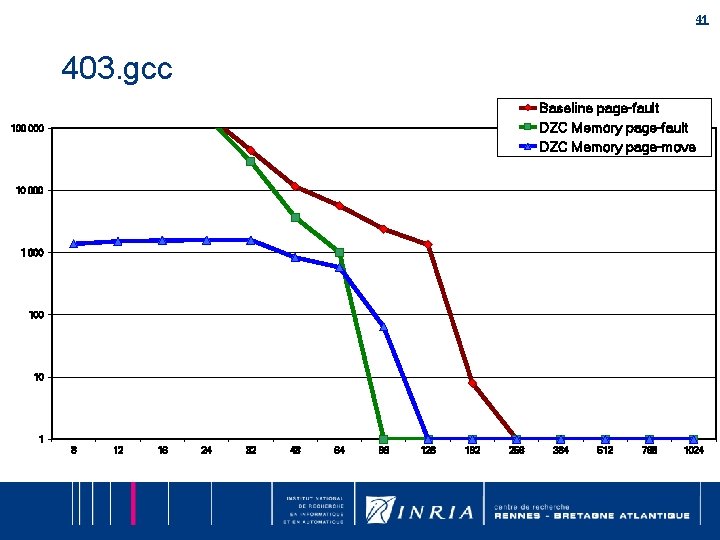

41 403.gcc
[Figure: legend "Baseline page-fault", "DZC Memory page-move"; log-scale y-axis (1 to 100 000) versus main memory size (8 to 1024).]

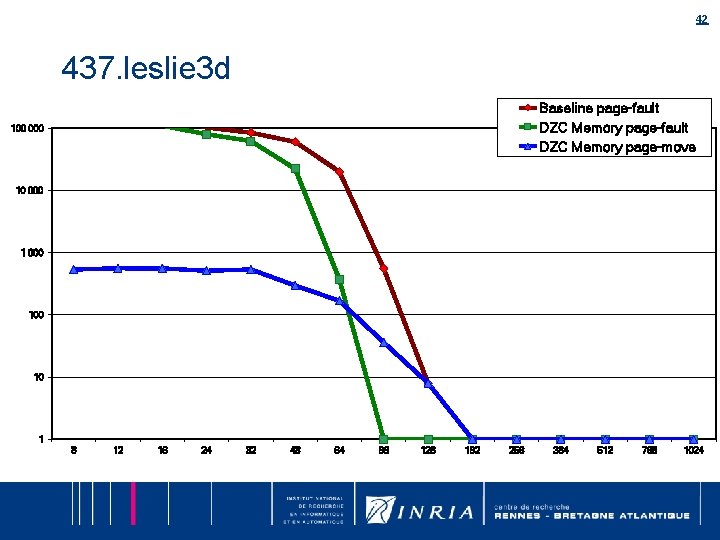

42 437.leslie3d
[Figure: legend "Baseline page-fault", "DZC Memory page-move"; log-scale y-axis (1 to 100 000) versus main memory size (8 to 1024).]

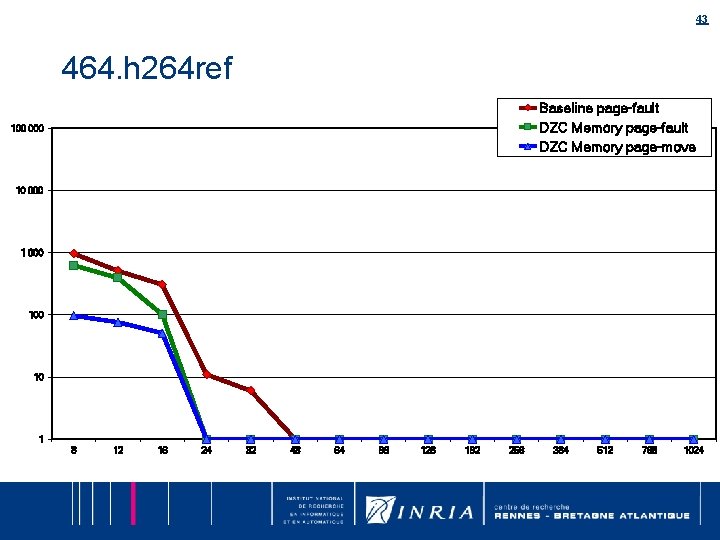

43 464.h264ref
[Figure: legend "Baseline page-fault", "DZC Memory page-move"; log-scale y-axis (1 to 100 000) versus main memory size (8 to 1024).]

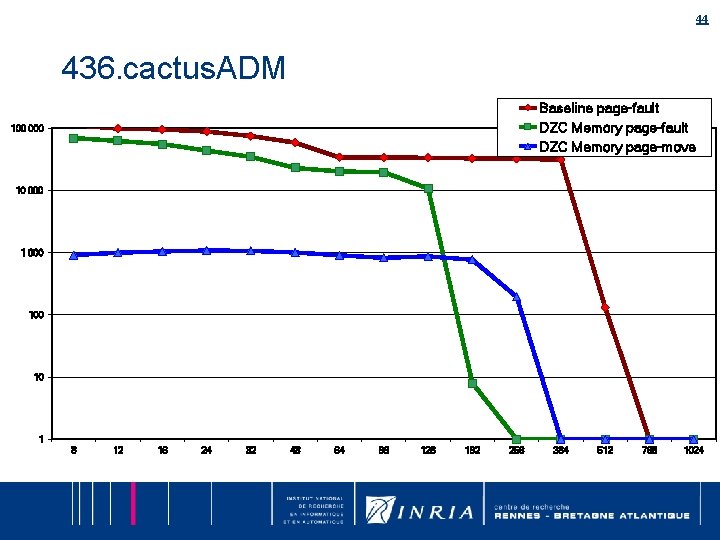

44 436.cactusADM
[Figure: legend "Baseline page-fault", "DZC Memory page-move"; log-scale y-axis (1 to 100 000) versus main memory size (8 to 1024).]

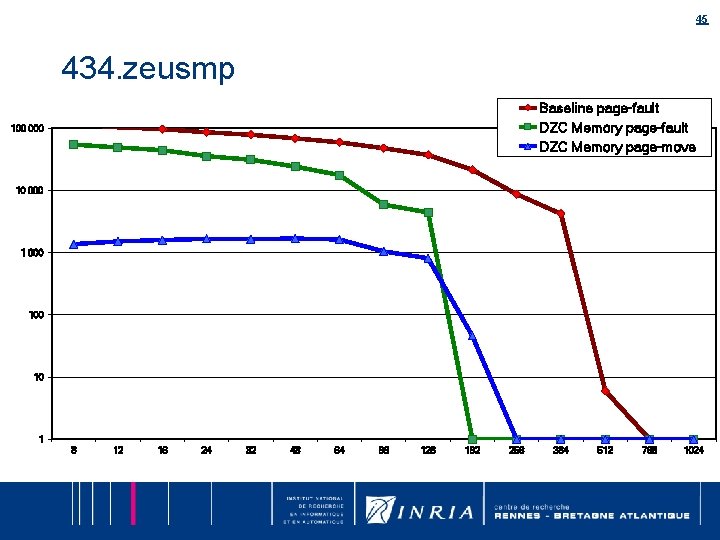

45 434.zeusmp
[Figure: legend "Baseline page-fault", "DZC Memory page-move"; log-scale y-axis (1 to 100 000) versus main memory size (8 to 1024).]

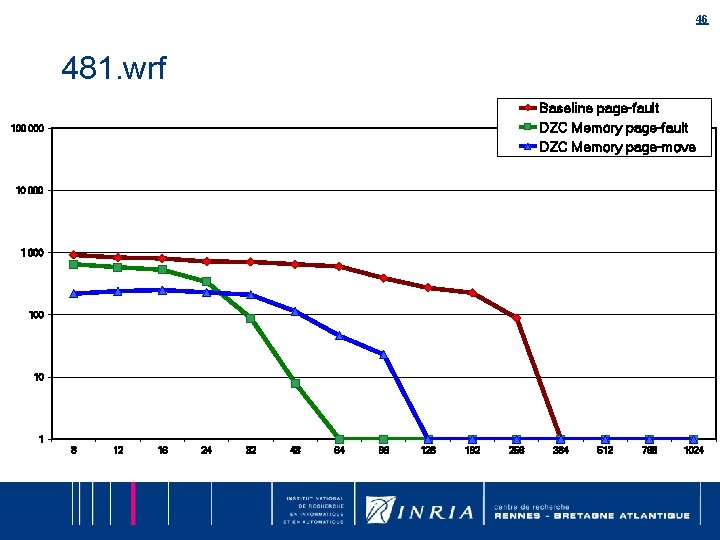

46 481.wrf
[Figure: legend "Baseline page-fault", "DZC Memory page-move"; log-scale y-axis (1 to 100 000) versus main memory size (8 to 1024).]

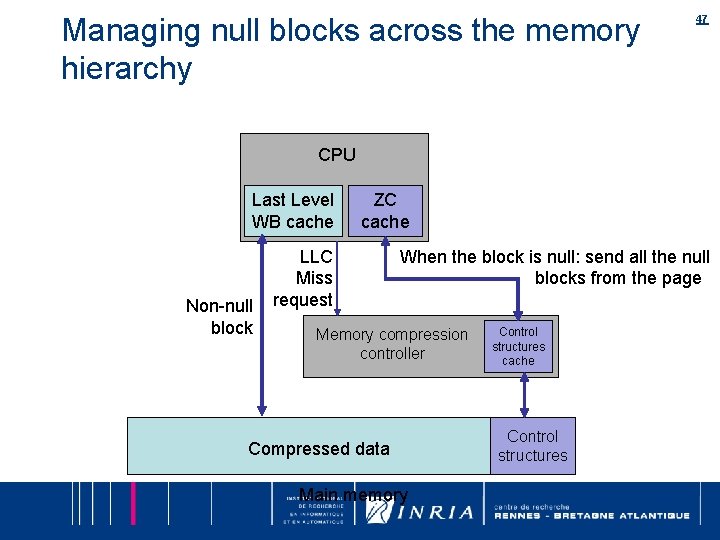

47 Managing null blocks across the memory hierarchy
[Diagram: CPU, last-level write-back cache (LLC) with its ZC cache, memory compression controller with a control-structures cache, and main memory holding compressed data and control structures.]
• On an LLC miss, a non-null block is returned as usual
• When the block is null: send all the null blocks from the page
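A sketch of the reply the memory compression controller could build on an LLC miss, assuming 4 KB pages of 64 B blocks so that the page's null bits fit in one 64-bit mask that can fill a ZC sector at once; the structure and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define BLOCKS_PER_PAGE 64u   /* assumed 4 KB page of 64 B blocks */

typedef struct { bool null[BLOCKS_PER_PAGE]; } page_nulls_t;

/* If the requested block is null, return a mask of every null block in the
 * page (one bit per block, ready to install as a ZC sector); otherwise
 * return 0 and the block's data is fetched normally. */
static uint64_t null_sector_reply(const page_nulls_t *pd, unsigned poffset)
{
    assert(poffset < BLOCKS_PER_PAGE);
    if (!pd->null[poffset])
        return 0;
    uint64_t mask = 0;
    for (unsigned i = 0; i < BLOCKS_PER_PAGE; i++)
        if (pd->null[i])
            mask |= UINT64_C(1) << i;
    return mask;
}
```

Since the DZC page descriptor already holds one null bit per block, the controller gets this mask for free with the translation.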

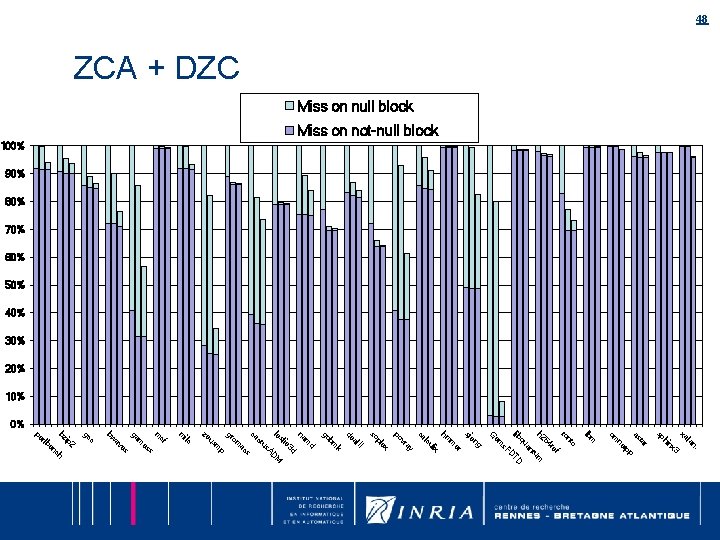

48 ZCA + DZC
[Figure: per-benchmark misses split into "Miss on null block" and "Miss on not-null block" (0–100%).]

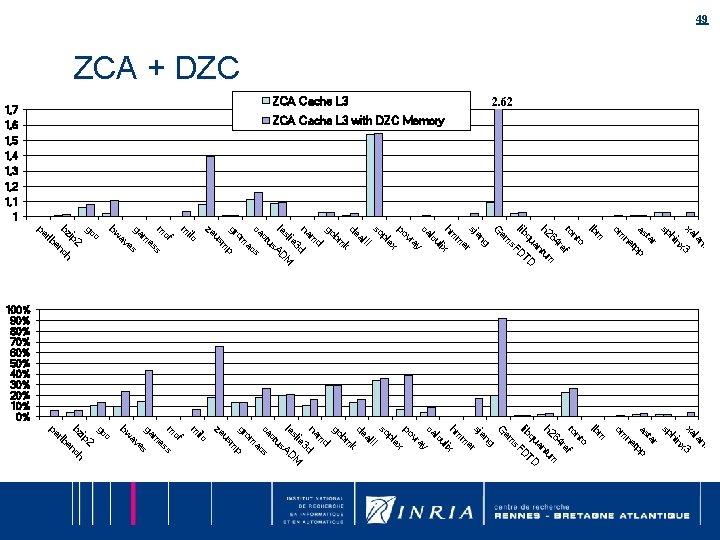

49 ZCA + DZC
[Figure, top panel: per-benchmark results on a 1 to 1.7 scale for "ZCA Cache L3" and "ZCA Cache L3 with DZC Memory", with bars annotated 1.6 and 2.62. Bottom panel: per-benchmark percentages (0–100%).]

50 Conclusion
A significant proportion of blocks are null
• This creates an opportunity to compress:
  – Trivial compression/decompression
This can be leveraged across the whole hierarchy by combining the ZCA cache and DZC memory

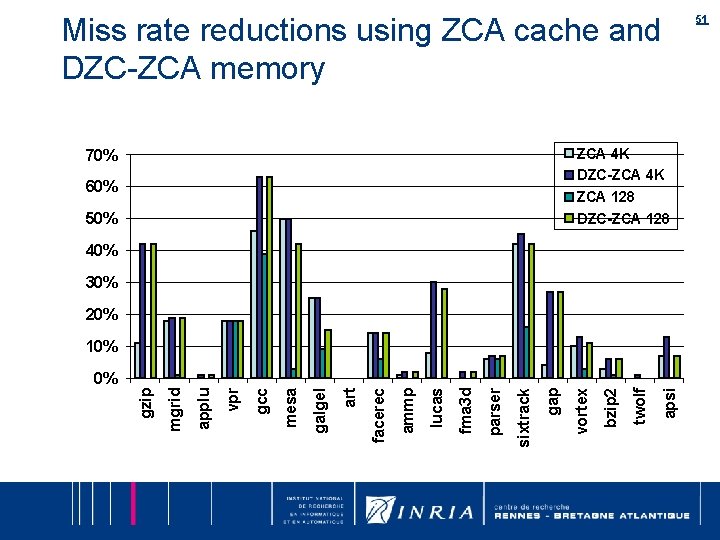

51 Miss rate reductions using ZCA cache and DZC-ZCA memory
[Figure: miss rate reduction (0–70%) for ZCA 4K, DZC-ZCA 4K, ZCA 128 and DZC-ZCA 128 on apsi, twolf, bzip2, vortex, gap, sixtrack, parser, fma3d, lucas, ammp, facerec, art, galgel, mesa, gcc, vpr, applu, mgrid and gzip.]

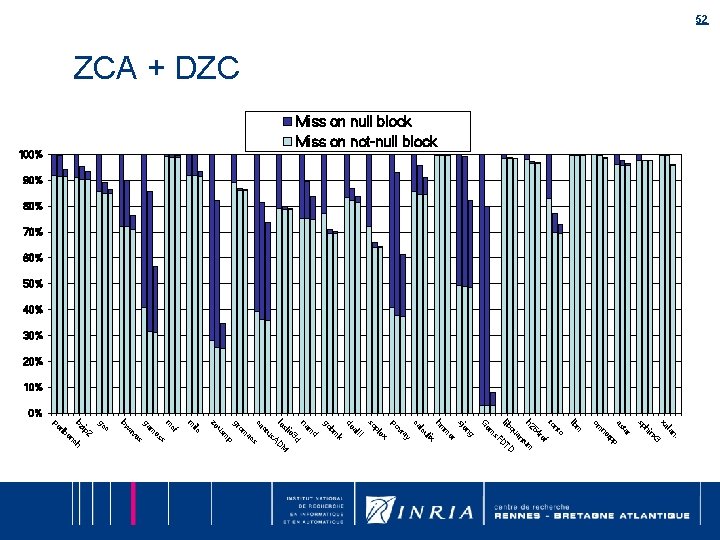

52 ZCA + DZC
[Figure: per-benchmark misses split into "Miss on null block" and "Miss on not-null block" (0–100%).]

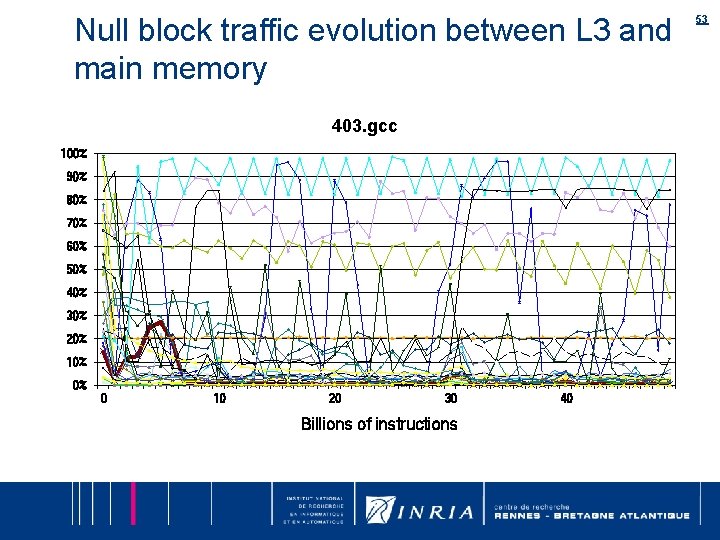

53 Null block traffic evolution between L3 and main memory: 403.gcc
[Figure: null block traffic (0–100%) versus billions of instructions executed (0–40).]

54 Substantial miss rate reduction
[Figure: per-benchmark misses split into "Miss on null block" and "Miss on not-null block" (0–100%).]
