1 Introduction CSEP 545 Transaction Processing Philip A

![To Commit 1. First copy Pt. T[a] and Pt. T[b] to disk D I To Commit 1. First copy Pt. T[a] and Pt. T[b] to disk D I](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-32.jpg)

![Examples of Equivalence • The following histories are equivalent H 1 = r 1[x] Examples of Equivalence • The following histories are equivalent H 1 = r 1[x]](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-45.jpg)

![Another Example • H 6 = r 1[x] r 2[x] w 1[x] r 3[x] Another Example • H 6 = r 1[x] r 2[x] w 1[x] r 3[x]](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-47.jpg)

![Strictness • It’s convenient to undo a write, w[x], by restoring its before image Strictness • It’s convenient to undo a write, w[x], by restoring its before image](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-54.jpg)

![Strictness (cont’d) • More precisely, a system is strict if it only executes ri[x] Strictness (cont’d) • More precisely, a system is strict if it only executes ri[x]](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-55.jpg)

![Basic Locking Isn’t Enough • Basic locking doesn’t guarantee serializability • rl 1[x] ru Basic Locking Isn’t Enough • Basic locking doesn’t guarantee serializability • rl 1[x] ru](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-57.jpg)

- Slides: 61

1. Introduction CSEP 545 Transaction Processing Philip A. Bernstein Copyright © 2003 Philip A. Bernstein 3/27/03 1

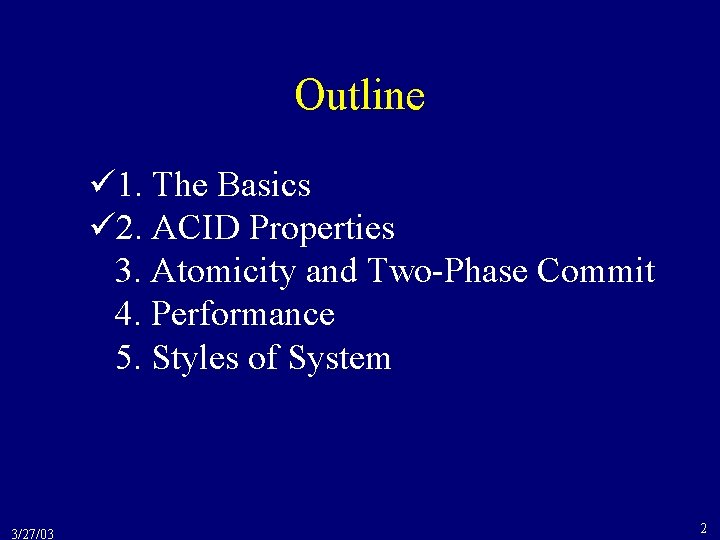

Outline 1. The Basics 2. ACID Properties 3. Atomicity and Two-Phase Commit 4. Performance 5. Styles of System 3/27/03 2

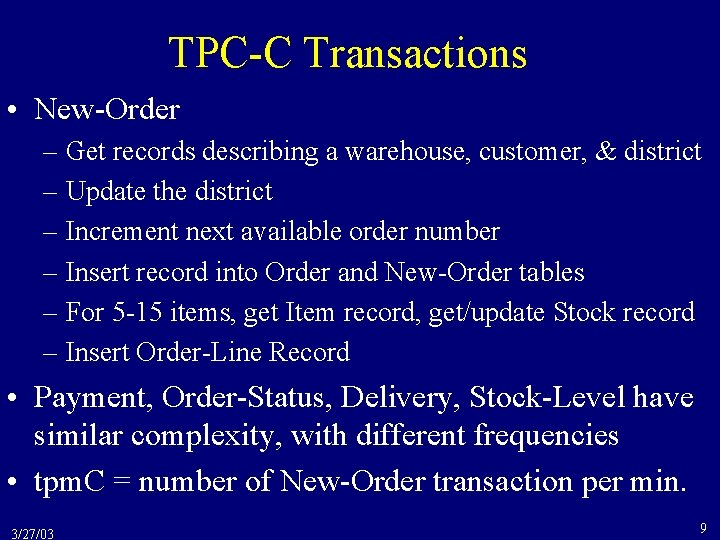

1. 3 Atomicity and Two-Phase Commit • Distributed systems make atomicity harder • Suppose a transaction updates data managed by two DB systems. • One DB system could commit the transaction, but a failure could prevent the other system from committing. • The solution is the two-phase commit protocol. • Abstract “DB system” by resource manager (could be a SQL DBMS, message mgr, queue mgr, OO DBMS, etc. ) 3/27/03 3

Two-Phase Commit • Main idea - all resource managers (RMs) save a durable copy of the transaction’s updates before any of them commit. • If one RM fails after another commits, the failed RM can still commit after it recovers. • The protocol to commit transaction T – Phase 1 - T’s coordinator asks all participant RMs to “prepare the transaction”. Participant RMs replies “prepared” after T’s updates are durable. – Phase 2 - After receiving “prepared” from all participant RMs, the coordinator tells all participant RMs to commit. 3/27/03 4

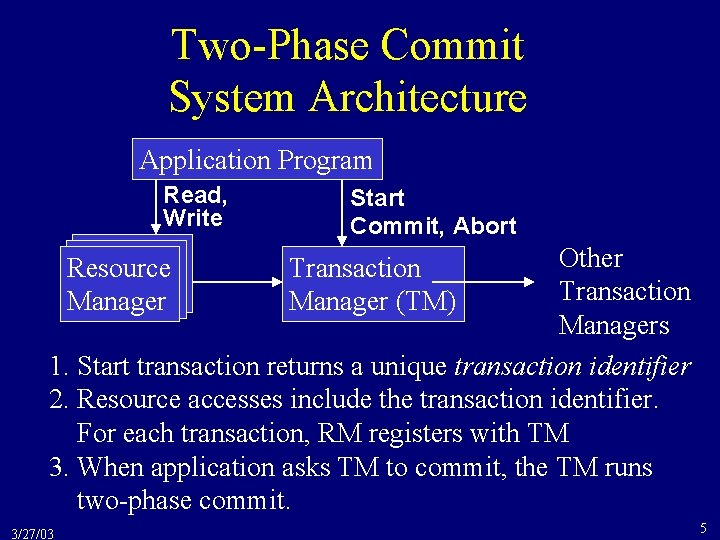

Two-Phase Commit System Architecture Application Program Read, Write Start Commit, Abort Other Transaction Managers 1. Start transaction returns a unique transaction identifier 2. Resource accesses include the transaction identifier. For each transaction, RM registers with TM 3. When application asks TM to commit, the TM runs two-phase commit. Resource Manager 3/27/03 Transaction Manager (TM) 5

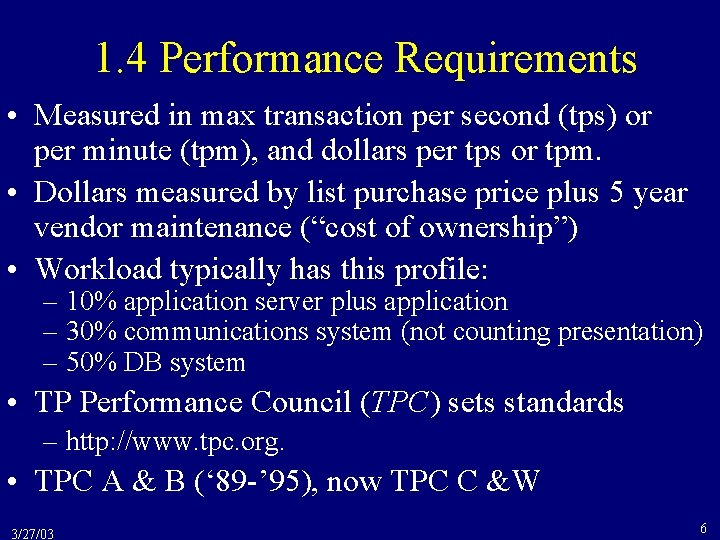

1. 4 Performance Requirements • Measured in max transaction per second (tps) or per minute (tpm), and dollars per tps or tpm. • Dollars measured by list purchase price plus 5 year vendor maintenance (“cost of ownership”) • Workload typically has this profile: – 10% application server plus application – 30% communications system (not counting presentation) – 50% DB system • TP Performance Council (TPC) sets standards – http: //www. tpc. org. • TPC A & B (‘ 89 -’ 95), now TPC C &W 3/27/03 6

TPC-A/B — Bank Tellers • Obsolete (a retired standard), but interesting • Input is 100 byte message requesting deposit/withdrawal • Database tables = {Accounts, Tellers, Branches, History} Start Read message from terminal (100 bytes) Read+write account record (random access) Write history record (sequential access) Read+write teller record (random access) Read+write branch record (random access) Write message to terminal (200 bytes) Commit • End of history and branch records are bottlenecks 3/27/03 7

The TPC-C Order-Entry Benchmark • TPC-C uses heavier weight transactions 3/27/03 8

TPC-C Transactions • New-Order – Get records describing a warehouse, customer, & district – Update the district – Increment next available order number – Insert record into Order and New-Order tables – For 5 -15 items, get Item record, get/update Stock record – Insert Order-Line Record • Payment, Order-Status, Delivery, Stock-Level have similar complexity, with different frequencies • tpm. C = number of New-Order transaction per min. 3/27/03 9

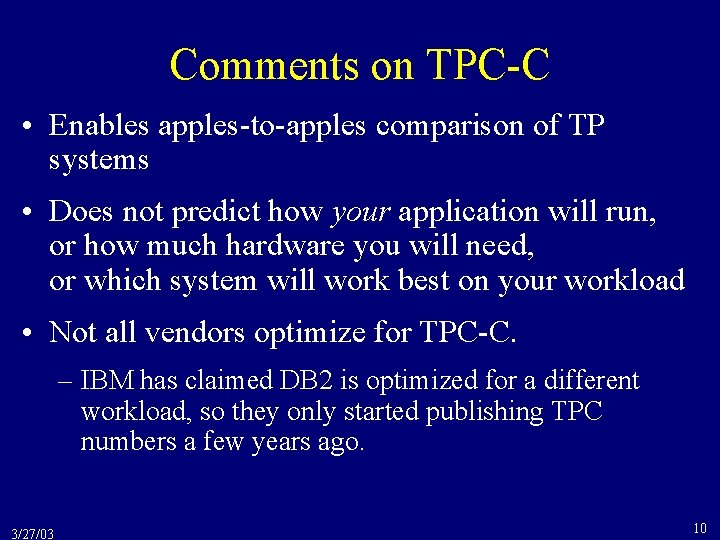

Comments on TPC-C • Enables apples-to-apples comparison of TP systems • Does not predict how your application will run, or how much hardware you will need, or which system will work best on your workload • Not all vendors optimize for TPC-C. – IBM has claimed DB 2 is optimized for a different workload, so they only started publishing TPC numbers a few years ago. 3/27/03 10

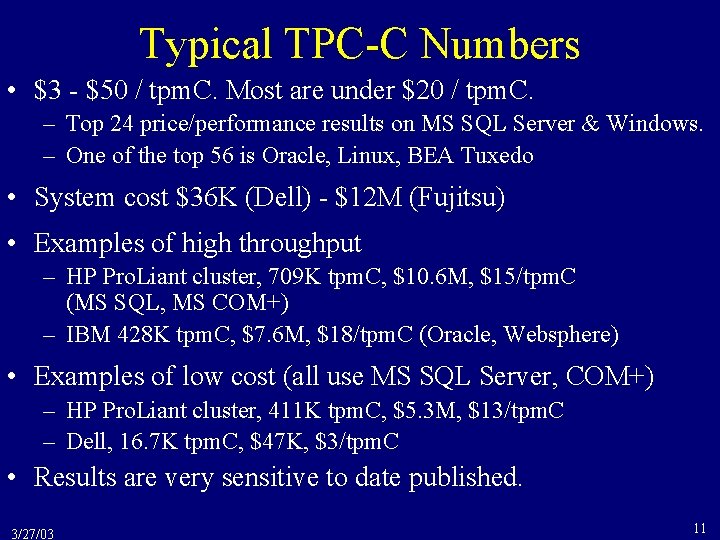

Typical TPC-C Numbers • $3 - $50 / tpm. C. Most are under $20 / tpm. C. – Top 24 price/performance results on MS SQL Server & Windows. – One of the top 56 is Oracle, Linux, BEA Tuxedo • System cost $36 K (Dell) - $12 M (Fujitsu) • Examples of high throughput – HP Pro. Liant cluster, 709 K tpm. C, $10. 6 M, $15/tpm. C (MS SQL, MS COM+) – IBM 428 K tpm. C, $7. 6 M, $18/tpm. C (Oracle, Websphere) • Examples of low cost (all use MS SQL Server, COM+) – HP Pro. Liant cluster, 411 K tpm. C, $5. 3 M, $13/tpm. C – Dell, 16. 7 K tpm. C, $47 K, $3/tpm. C • Results are very sensitive to date published. 3/27/03 11

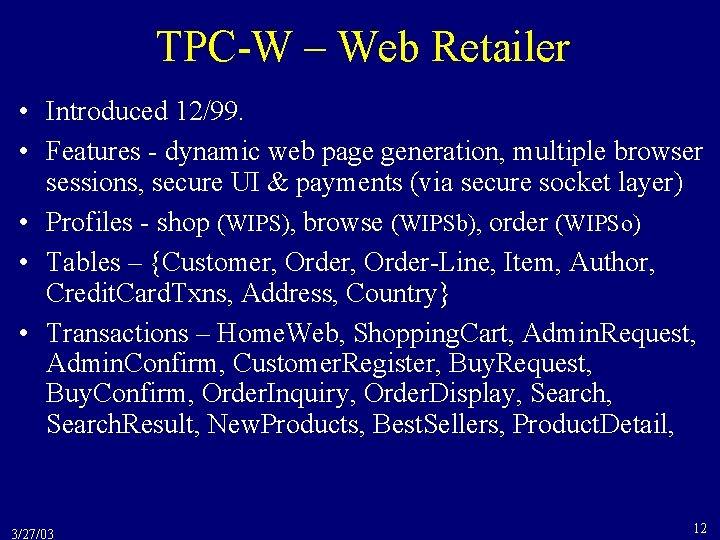

TPC-W – Web Retailer • Introduced 12/99. • Features - dynamic web page generation, multiple browser sessions, secure UI & payments (via secure socket layer) • Profiles - shop (WIPS), browse (WIPSb), order (WIPSo) • Tables – {Customer, Order-Line, Item, Author, Credit. Card. Txns, Address, Country} • Transactions – Home. Web, Shopping. Cart, Admin. Request, Admin. Confirm, Customer. Register, Buy. Request, Buy. Confirm, Order. Inquiry, Order. Display, Search. Result, New. Products, Best. Sellers, Product. Detail, 3/27/03 12

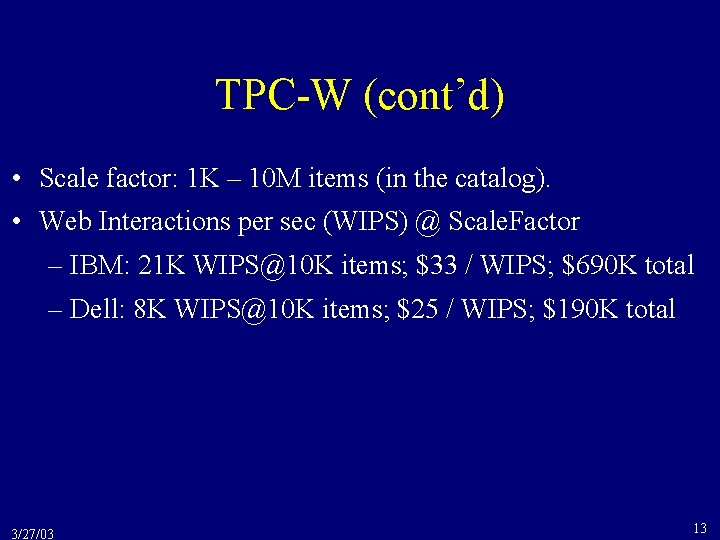

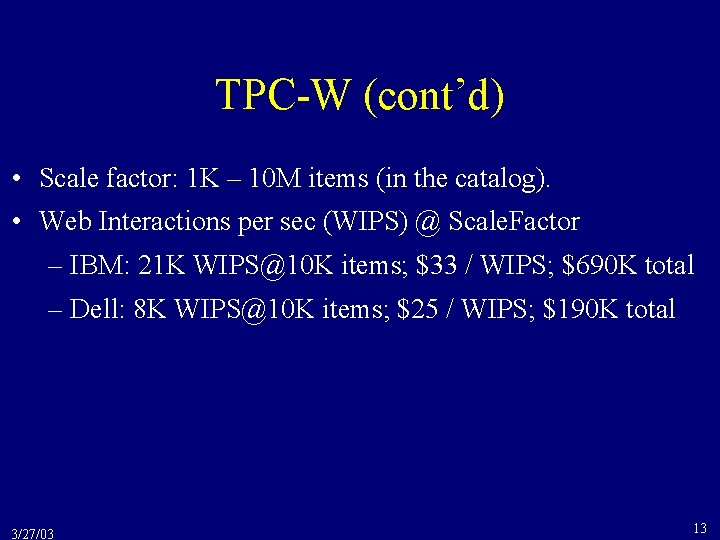

TPC-W (cont’d) • Scale factor: 1 K – 10 M items (in the catalog). • Web Interactions per sec (WIPS) @ Scale. Factor – IBM: 21 K WIPS@10 K items; $33 / WIPS; $690 K total – Dell: 8 K WIPS@10 K items; $25 / WIPS; $190 K total 3/27/03 13

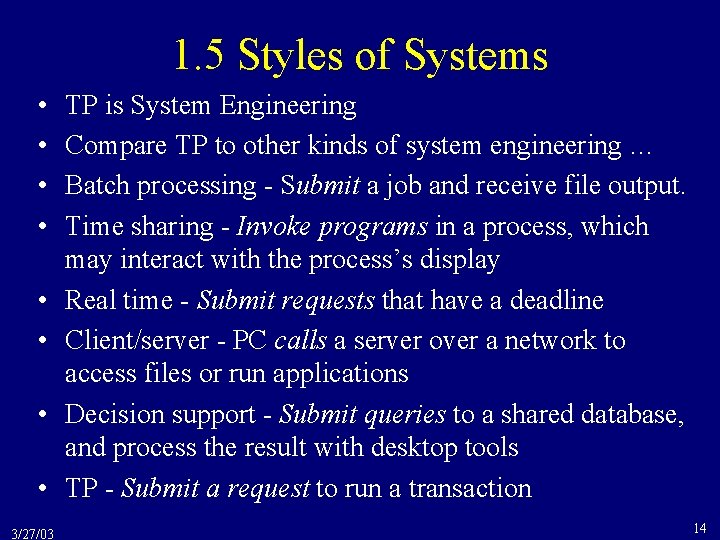

1. 5 Styles of Systems • • 3/27/03 TP is System Engineering Compare TP to other kinds of system engineering … Batch processing - Submit a job and receive file output. Time sharing - Invoke programs in a process, which may interact with the process’s display Real time - Submit requests that have a deadline Client/server - PC calls a server over a network to access files or run applications Decision support - Submit queries to a shared database, and process the result with desktop tools TP - Submit a request to run a transaction 14

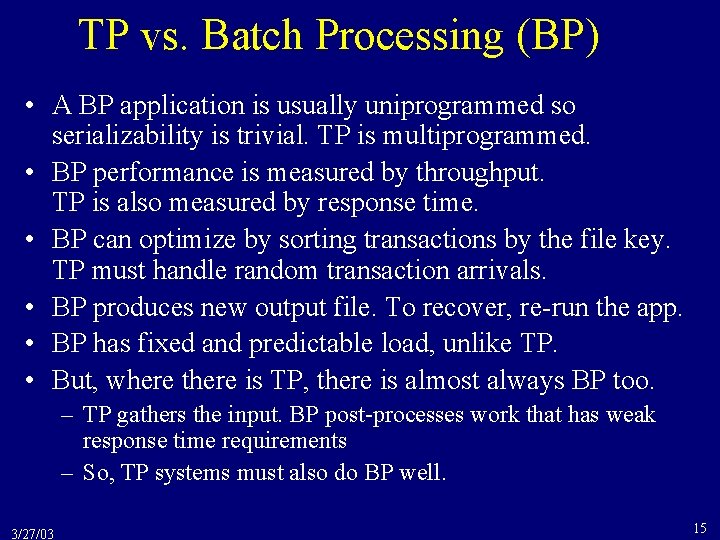

TP vs. Batch Processing (BP) • A BP application is usually uniprogrammed so serializability is trivial. TP is multiprogrammed. • BP performance is measured by throughput. TP is also measured by response time. • BP can optimize by sorting transactions by the file key. TP must handle random transaction arrivals. • BP produces new output file. To recover, re-run the app. • BP has fixed and predictable load, unlike TP. • But, where there is TP, there is almost always BP too. – TP gathers the input. BP post-processes work that has weak response time requirements – So, TP systems must also do BP well. 3/27/03 15

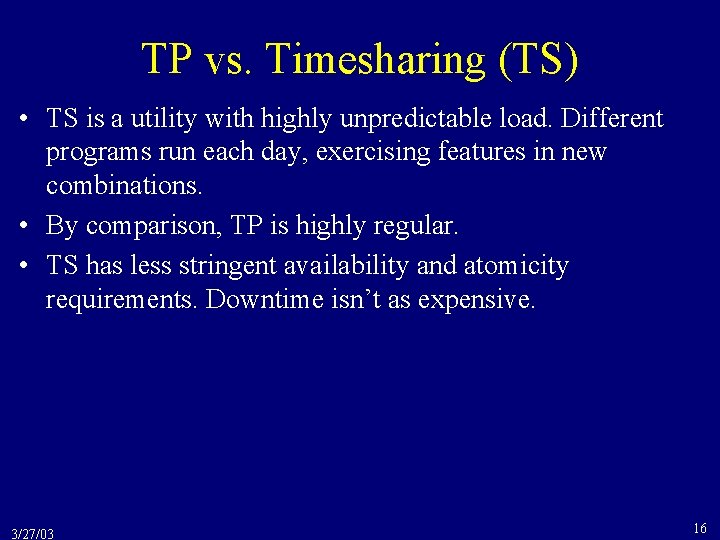

TP vs. Timesharing (TS) • TS is a utility with highly unpredictable load. Different programs run each day, exercising features in new combinations. • By comparison, TP is highly regular. • TS has less stringent availability and atomicity requirements. Downtime isn’t as expensive. 3/27/03 16

TP vs. Real Time (RT) • RT has more stringent response time requirements. It may control a physical process. • RT deals with more specialized devices. • RT doesn’t need or use a transaction abstraction – usually loose about atomicity and serializability • In RT, response time goals are usually more important than completeness or correctness. In TP, correctness is paramount. 3/27/03 17

TP and Client/Server (C/S) • Is commonly used for TP, where client prepares requests and server runs transactions • In a sense, TP systems were the first C/S systems, where the client was a terminal 3/27/03 18

TP and Decision Support Systems (DSSs) • DSSs run long queries, usually with lower data integrity requirements than TP. • A. k. a. data warehouse (DSS is the more generic term. ) • TP systems provide the raw data for DSSs. 3/27/03 19

Outline 1. The Basics 2. ACID Properties 3. Atomicity and Two-Phase Commit 4. Performance 5. Styles of System 3/27/03 20

What’s Next? • This chapter covered TP system structure and properties of transactions and TP systems • The rest of the course drills deeply into each of these areas, one by one. 3/27/03 21

2. Atomicity & Durability Using Shadow Paging CSEP 545 Transaction Processing for E-Commerce Philip A. Bernstein Copyright © 2003 Philip A. Bernstein 3/27/03 22

Introduction • To get started on the Java-C# project, you need to implement atomicity and durability in a centralized resource manager (i. e. a database). • Recommend approach is shadowing. • This section provides a quick introduction. • A more thorough explanation of the overall topic of database recovery will be presented in a couple of weeks. 3/27/03 23

Review of Atomicity & Durability • Atomicity - a transaction is all-or-nothing • Durability - results of a committed transaction will survive failures • Problem – The only hardware operation that is atomic with respect to failure and whose result is durable is “write one disk block” – But the database doesn’t fit on one disk block! 3/27/03 24

Shadowing in a Nutshell • • • The database is a tree whose root is a single disk block There are two copies of the tree, the master and shadow The root points to the master copy Updates are applied to a shadow copy To install the updates, overwrite the root so it points to the shadow, thereby swapping the master and shadow – Before writing the root, none of the transaction’s updates are part of the disk-resident database – After writing the root, all of the transaction’s updates are part of the disk-resident database – Which means the transaction is atomic and durable 3/27/03 25

More Specifically … • The database consists of a set of files • Each file consists of a page table P and a set of pages that P points to. • A master page points to each file’s master page table. • Assume no concurrency. I. e. , one transaction runs at any given time. • Assume the transaction has a private shadow copy of each page table. 3/27/03 26

Initial State of Files a and b D I S K 3/27/03 Initial State Master a b Pt 1[a] 1 2 3. . . P 1 a Pt 1[b] 1 2 3. . . P 1 b P 2 a P 2 b Pt. T[a] 1 2 Main 3 Memory. . . For T Pt. T[b] 1 2 3. . . 27

To Write a Page Pi • Transaction writes a shadow copy of page Pi to disk • Transaction updates its page table to point to the shadow copy of Pi • Transaction marks Pi’s entry in the page table (to remember which pages were updated) 3/27/03 28

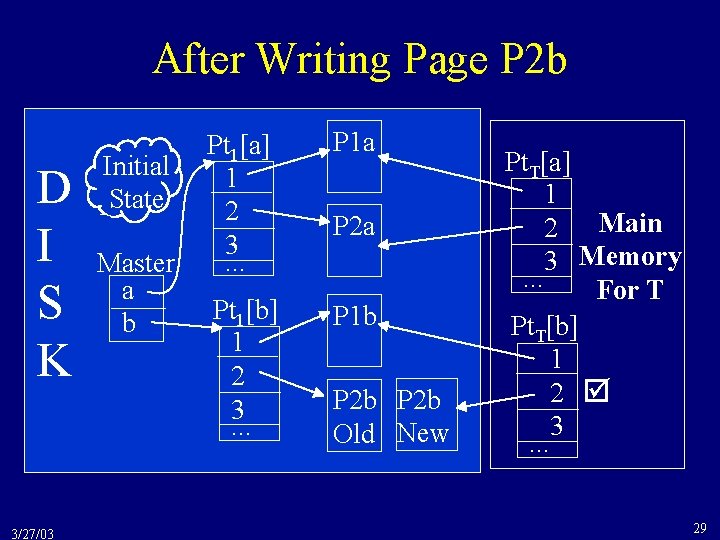

After Writing Page P 2 b D I S K 3/27/03 Initial State Master a b Pt 1[a] 1 2 3. . . P 1 a Pt 1[b] 1 2 3. . . P 1 b P 2 a P 2 b Old New Pt. T[a] 1 2 Main 3 Memory. . . For T Pt. T[b] 1 2 3. . . 29

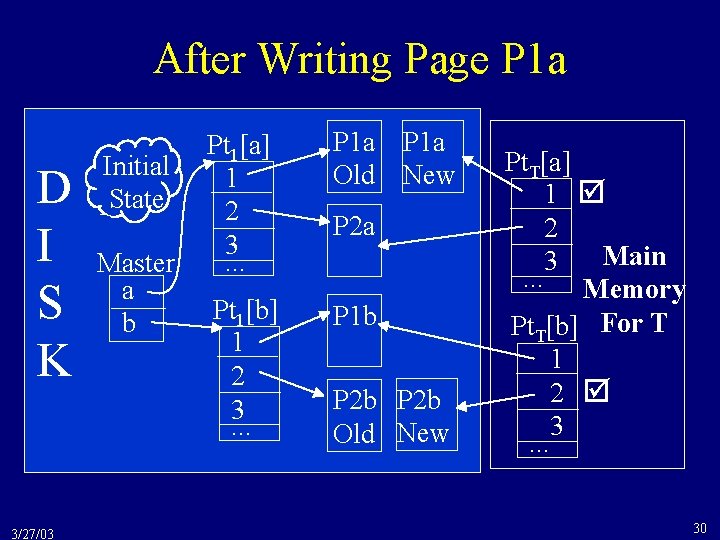

After Writing Page P 1 a D I S K 3/27/03 Initial State Master a b Pt 1[a] 1 2 3. . . P 1 a Old Pt 1[b] 1 2 3. . . P 1 b P 1 a New P 2 a P 2 b Old New Pt. T[a] 1 2 Main 3. . . Memory Pt. T[b] For T 1 2 3. . . 30

What if the System Fails? • Main memory is lost • The current transaction is effectively aborted • But the database is still consistent 3/27/03 31

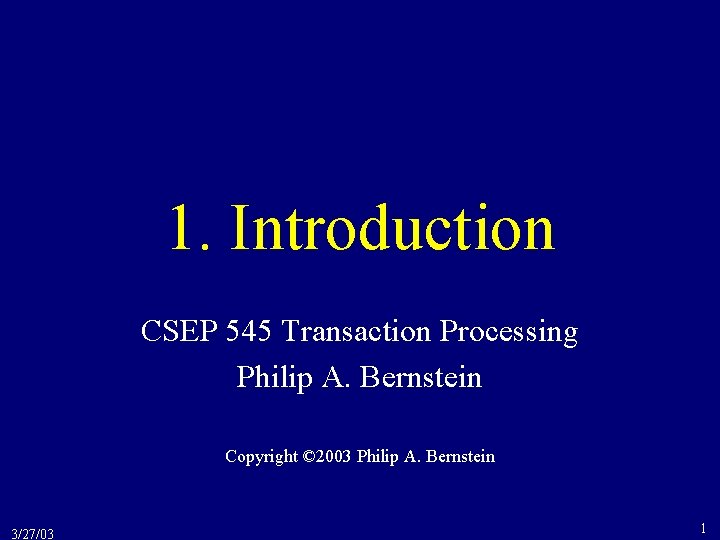

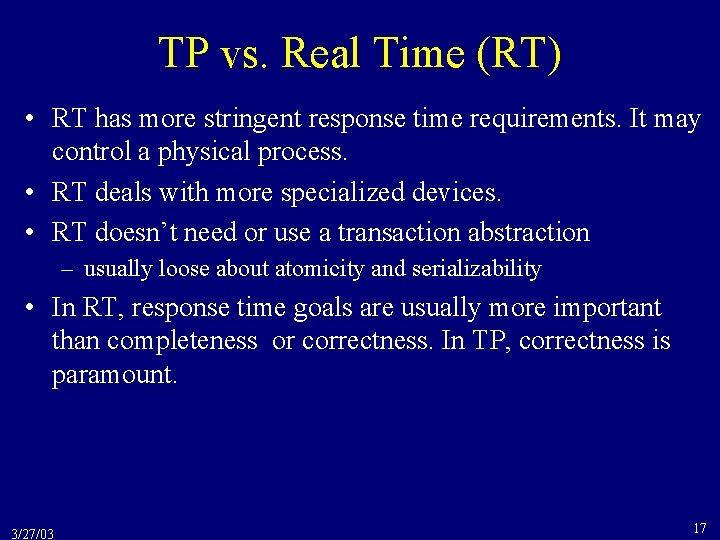

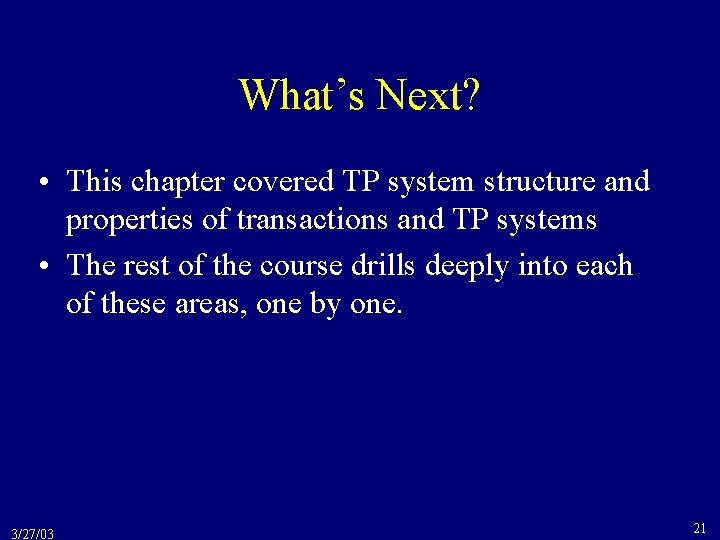

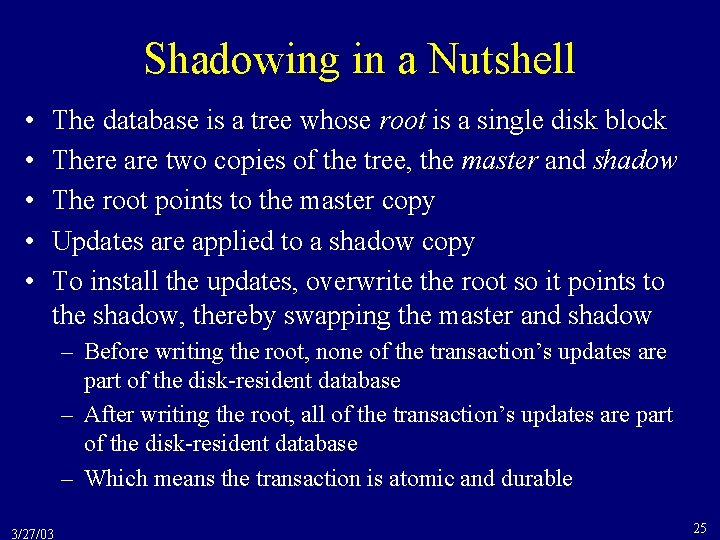

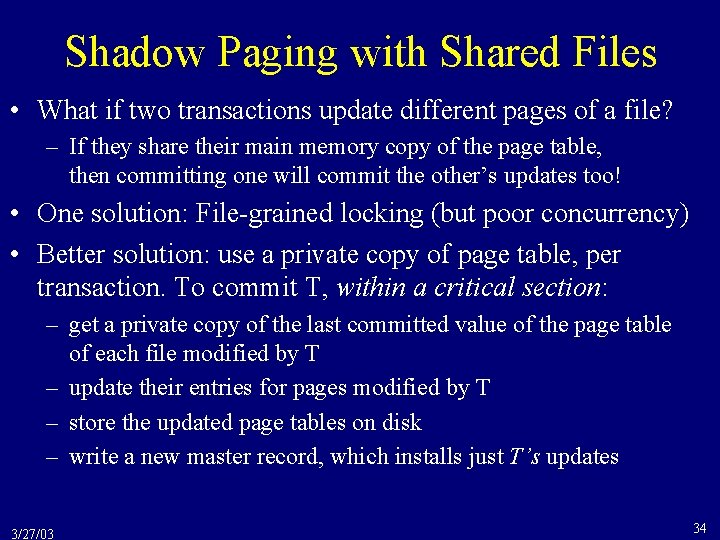

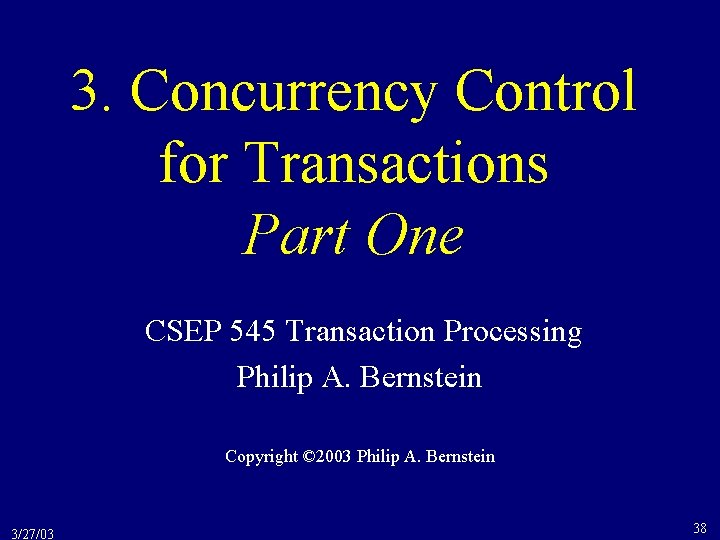

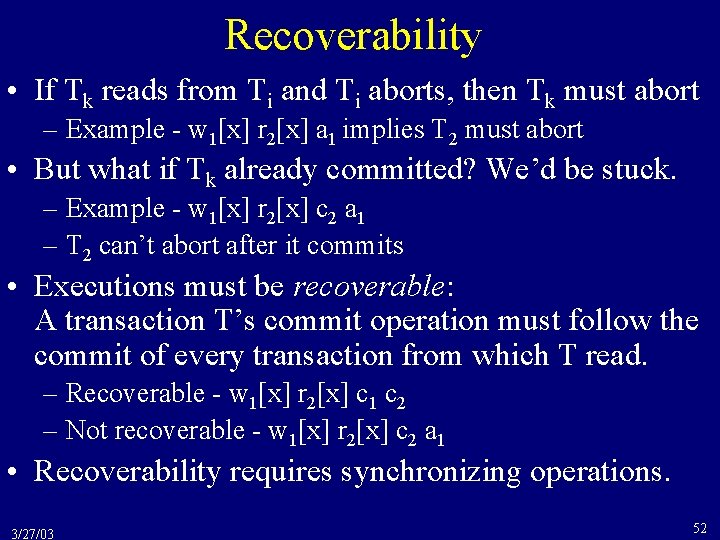

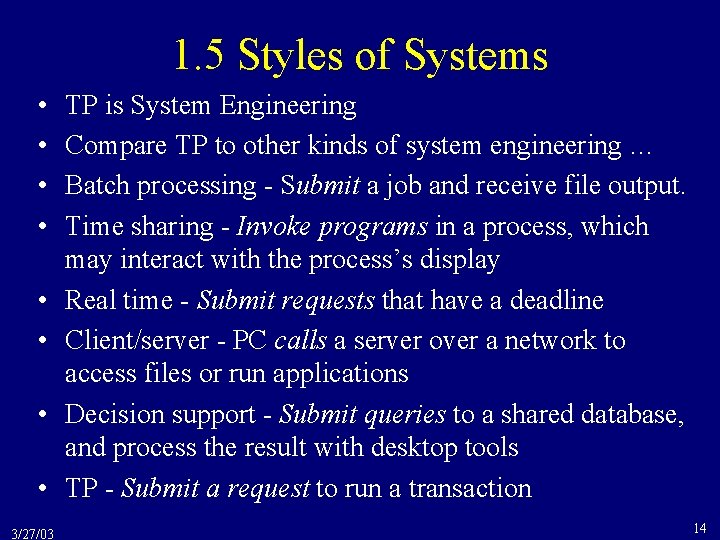

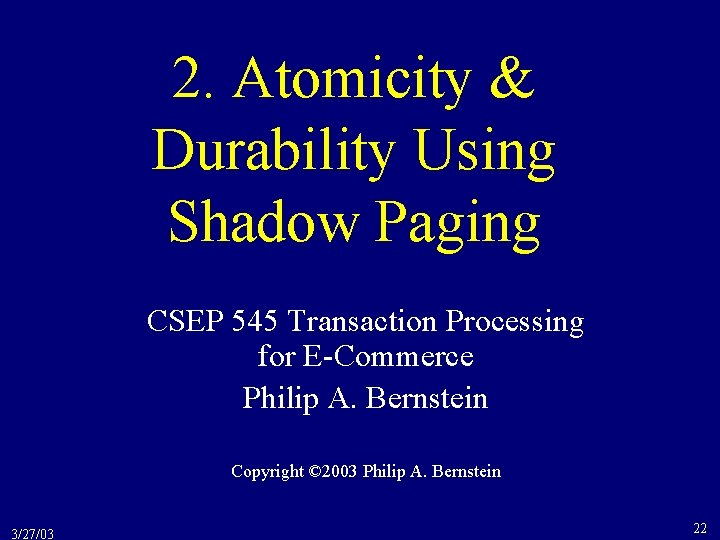

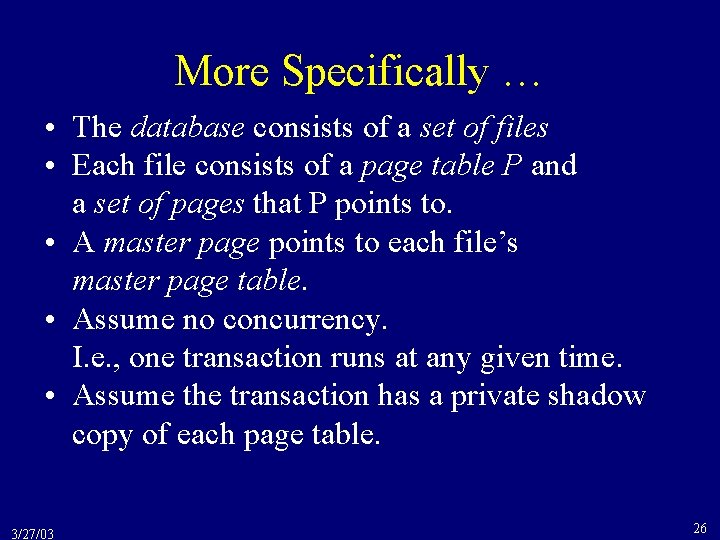

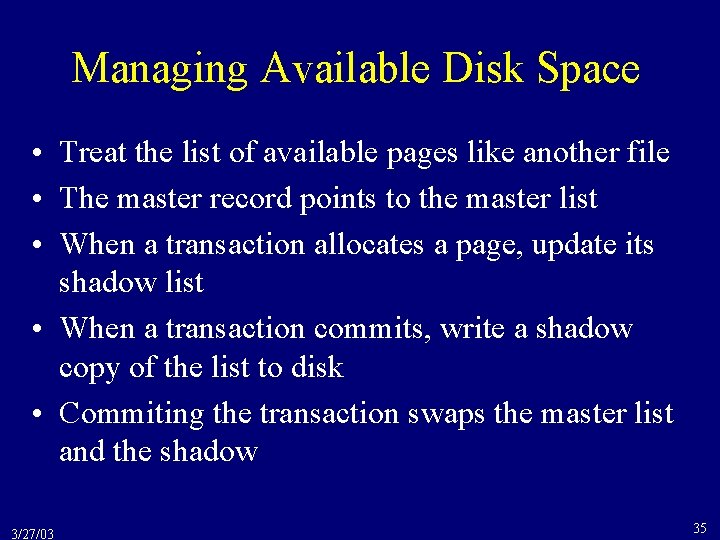

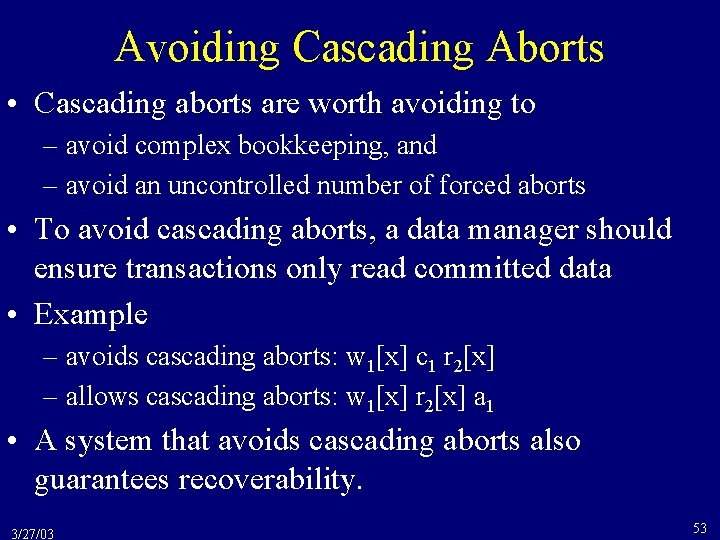

![To Commit 1 First copy Pt Ta and Pt Tb to disk D I To Commit 1. First copy Pt. T[a] and Pt. T[b] to disk D I](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-32.jpg)

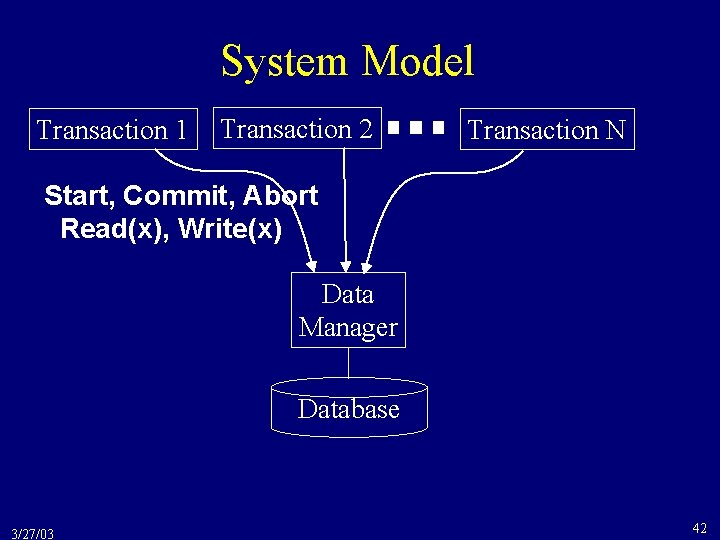

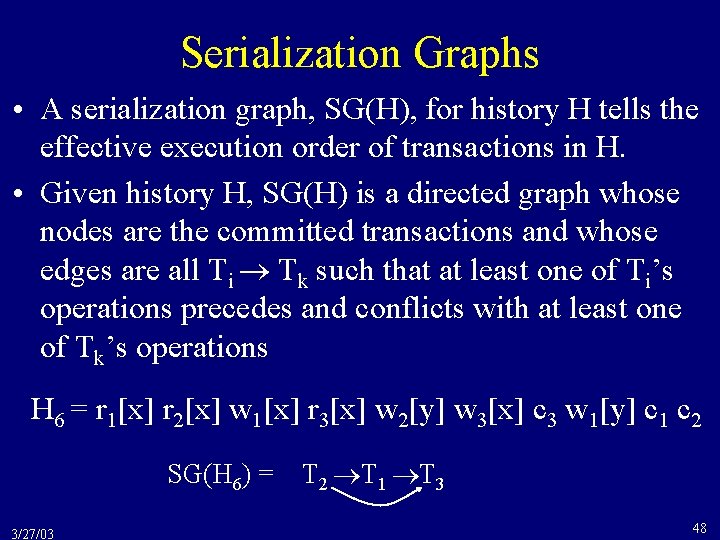

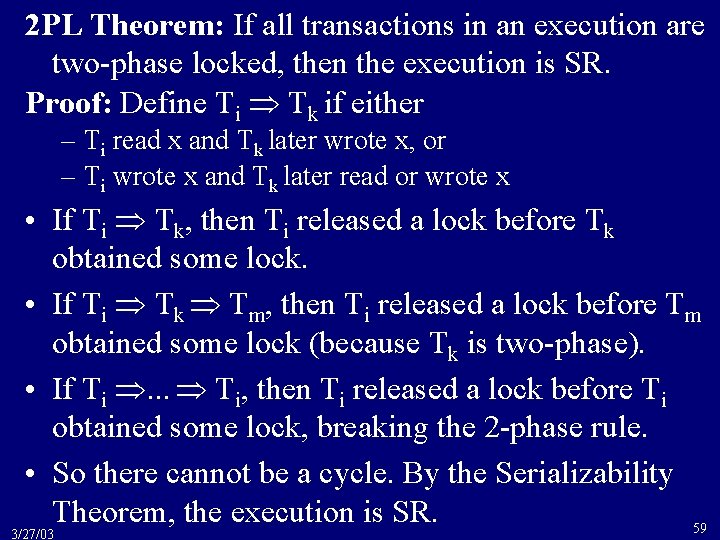

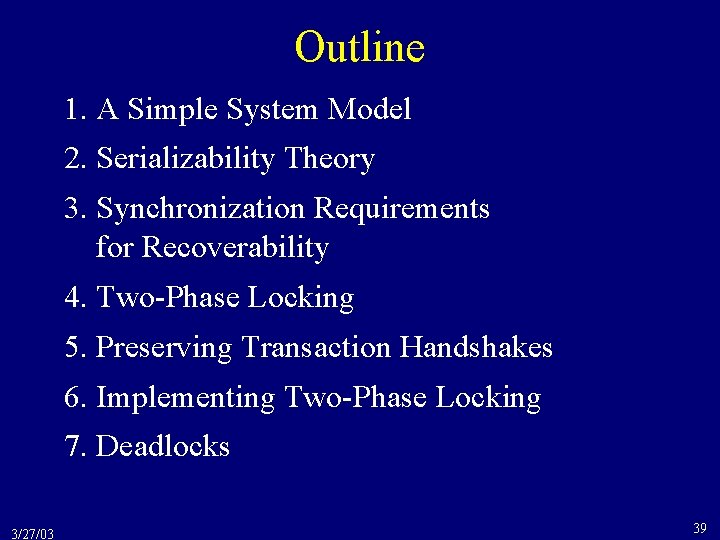

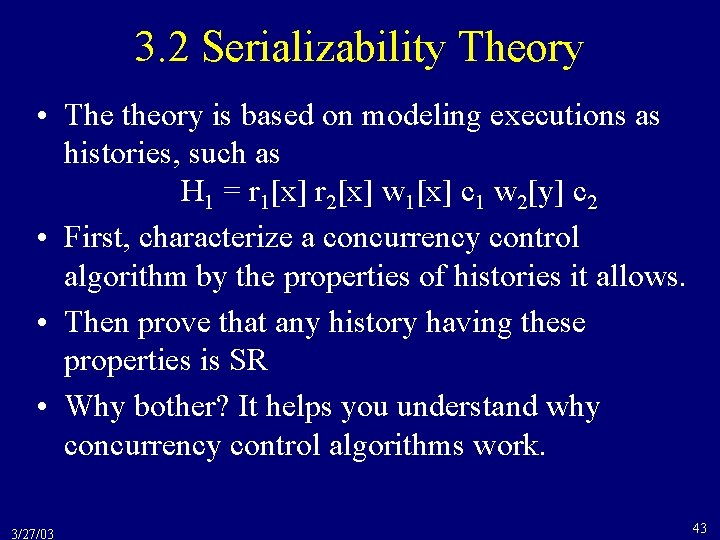

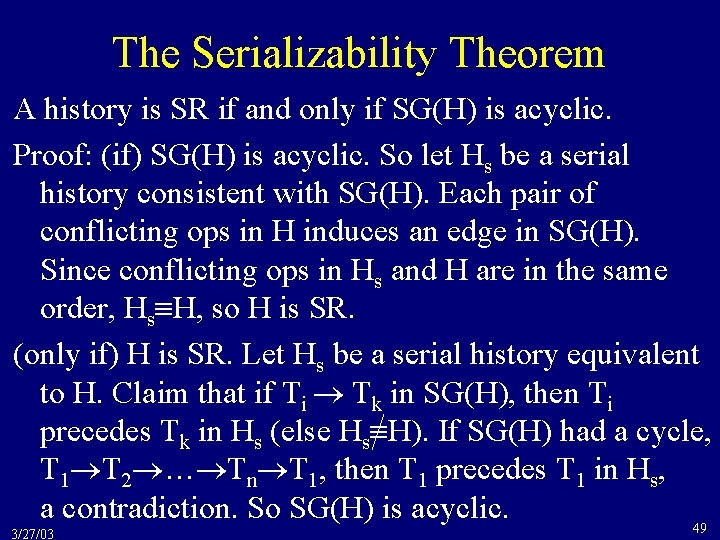

To Commit 1. First copy Pt. T[a] and Pt. T[b] to disk D I S K 3/27/03 Initial State Master a b Pt 1[a] 1 2 3. . . P 1 a Old Pt 1[b] 1 2 3. . . P 1 b P 1 a New P 2 a P 2 b Old New Pt. T[a] 1 2 3. . . Pt. T[b] 1 2 3. . . 32

To Commit (cont’d) 2. Then overwrite Master to point to the new Pt’s. D I S K 3/27/03 Initial State Master a b Pt 1[a] 1 2 3. . . P 1 a Old Pt 1[b] 1 2 3. . . P 1 b P 1 a New P 2 a P 2 b Old New Pt. T[a] 1 2 3. . . Pt. T[b] 1 2 3. . . 33

Shadow Paging with Shared Files • What if two transactions update different pages of a file? – If they share their main memory copy of the page table, then committing one will commit the other’s updates too! • One solution: File-grained locking (but poor concurrency) • Better solution: use a private copy of page table, per transaction. To commit T, within a critical section: – get a private copy of the last committed value of the page table of each file modified by T – update their entries for pages modified by T – store the updated page tables on disk – write a new master record, which installs just T’s updates 3/27/03 34

Managing Available Disk Space • Treat the list of available pages like another file • The master record points to the master list • When a transaction allocates a page, update its shadow list • When a transaction commits, write a shadow copy of the list to disk • Commiting the transaction swaps the master list and the shadow 3/27/03 35

Final Remarks • Don’t need to write shadow pages to disk until the transaction is ready to commit – Saves disk writes if a transaction writes a page multiple times • Main benefit is that doesn’t require much code • Used in the Gemstone OO DBMS. • Not good for TPC benchmarks – count disk updates per transaction – how to do record level locking? 3/27/03 36

References • P. A. Bernstein, V. Hadzilacos, N. Goodman, Concurrency Control and Recovery in Database Systems, Chapter 6, Section 7 (pp. 201 -204) – The book is downloadable from http: //research. microsoft. com/pubs/ccontrol/ • Originally proposed by Raymond Lorie in “Physical Integrity in a Large Segmented Database”ACM Transactions on Database Systems, March 1977. 3/27/03 37

3. Concurrency Control for Transactions Part One CSEP 545 Transaction Processing Philip A. Bernstein Copyright © 2003 Philip A. Bernstein 3/27/03 38

Outline 1. A Simple System Model 2. Serializability Theory 3. Synchronization Requirements for Recoverability 4. Two-Phase Locking 5. Preserving Transaction Handshakes 6. Implementing Two-Phase Locking 7. Deadlocks 3/27/03 39

3. 1 A Simple System Model • Goal - Ensure serializable (SR) executions • Implementation technique - Delay operations that would lead to non-SR results (e. g. set locks on shared data) • For good performance minimize overhead and delay from synchronization operations • First, we’ll study how to get correct (SR) results • Then, we’ll study performance implications (mostly in Part Two) 3/27/03 40

Assumption - Atomic Operations • We will synchronize Reads and Writes. • We must therefore assume they’re atomic – else we’d have to synchronize the finer-grained operations that implement Read and Write • Read(x) - returns the current value of x in the DB • Write(x, val) overwrites all of x (the whole page) • This assumption of atomic operations is what allows us to abstract executions as sequences of reads and writes (without loss of information). – Otherwise, what would wk[x] ri[x] mean? • Also, commit (ci) and abort (ai) are atomic 3/27/03 41

System Model Transaction 1 Transaction 2 Transaction N Start, Commit, Abort Read(x), Write(x) Data Manager Database 3/27/03 42

3. 2 Serializability Theory • The theory is based on modeling executions as histories, such as H 1 = r 1[x] r 2[x] w 1[x] c 1 w 2[y] c 2 • First, characterize a concurrency control algorithm by the properties of histories it allows. • Then prove that any history having these properties is SR • Why bother? It helps you understand why concurrency control algorithms work. 3/27/03 43

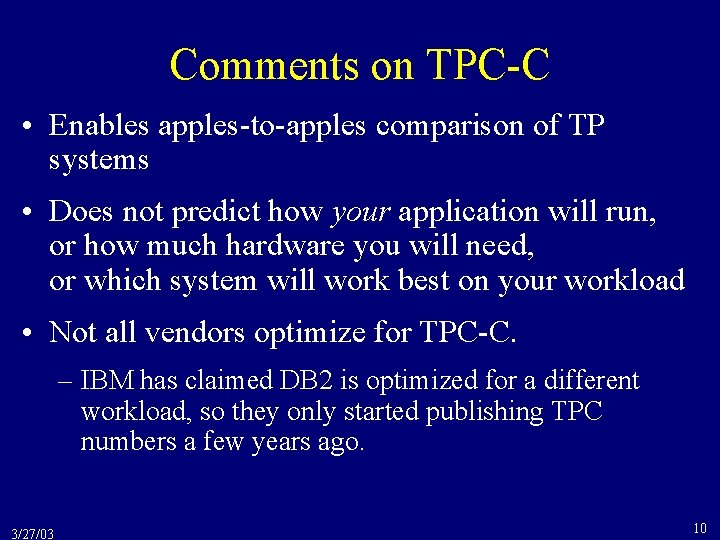

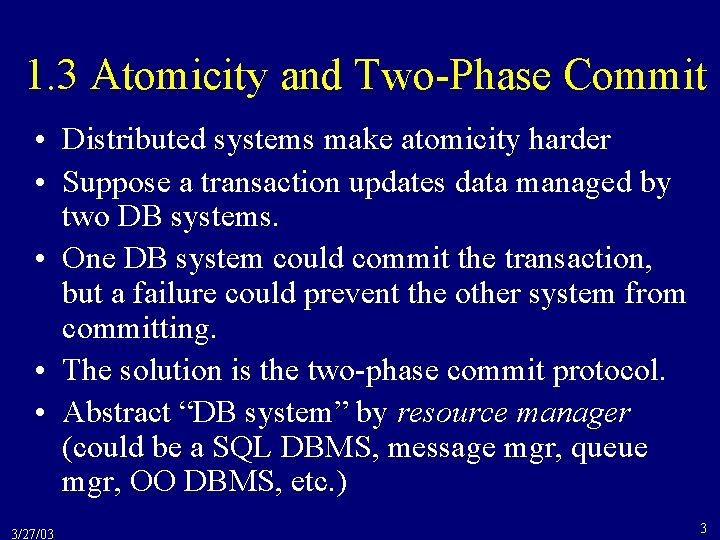

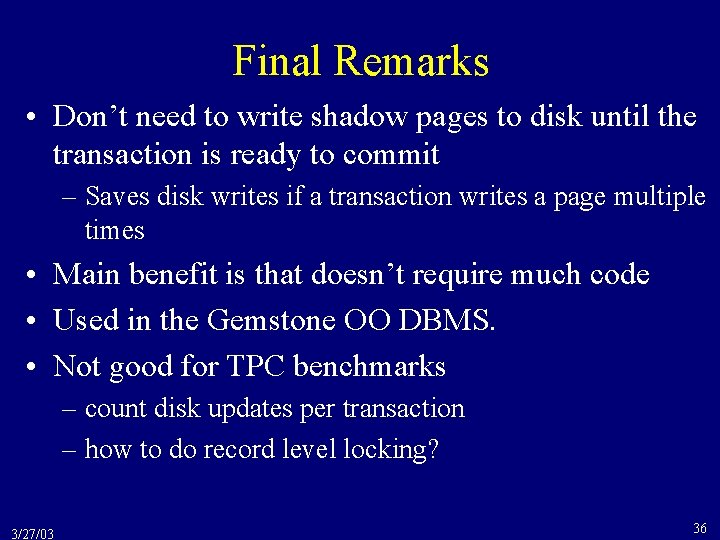

Equivalence of Histories • Two operations conflict if their execution order affects their return values or the DB state. – a read and write on the same data item conflict – two writes on the same data item conflict – two reads (on the same data item) do not conflict • Two histories are equivalent if they have the same operations and conflicting operations are in the same order in both histories – because only the relative order of conflicting operations can affect the result of the histories 3/27/03 44

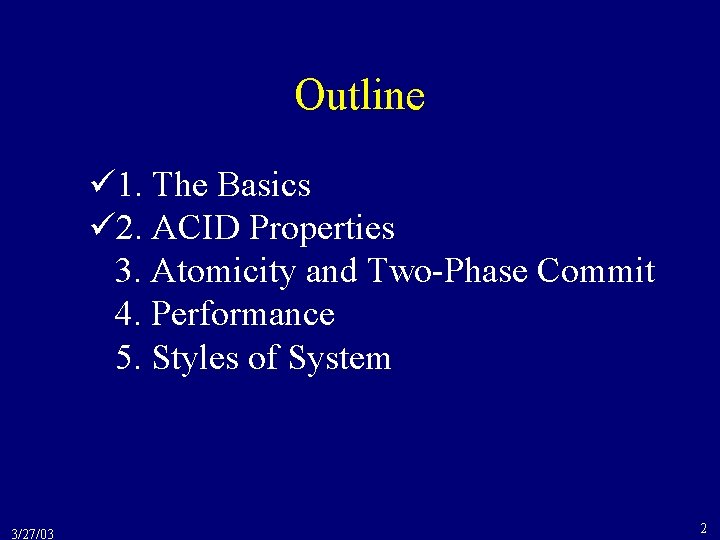

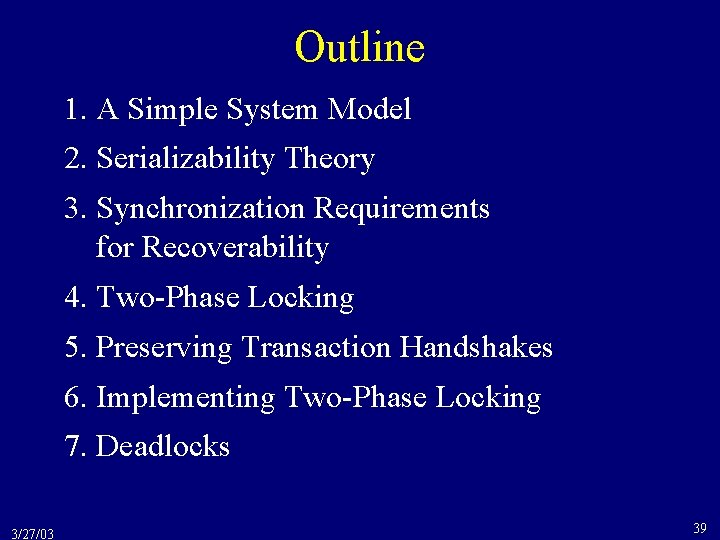

![Examples of Equivalence The following histories are equivalent H 1 r 1x Examples of Equivalence • The following histories are equivalent H 1 = r 1[x]](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-45.jpg)

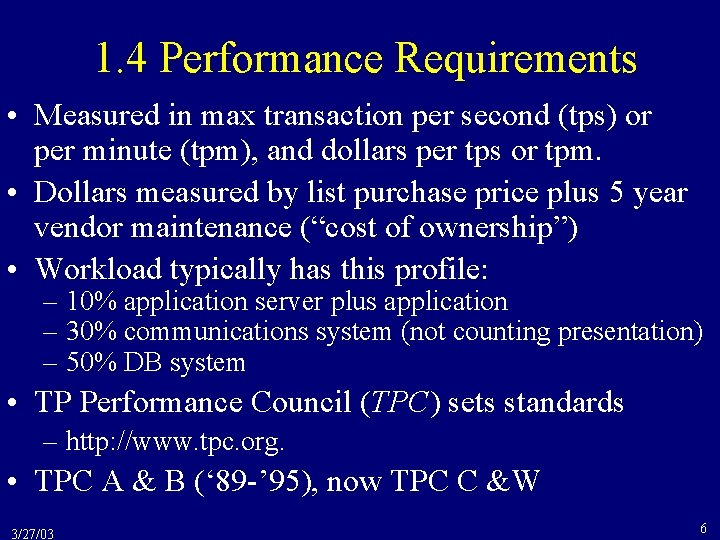

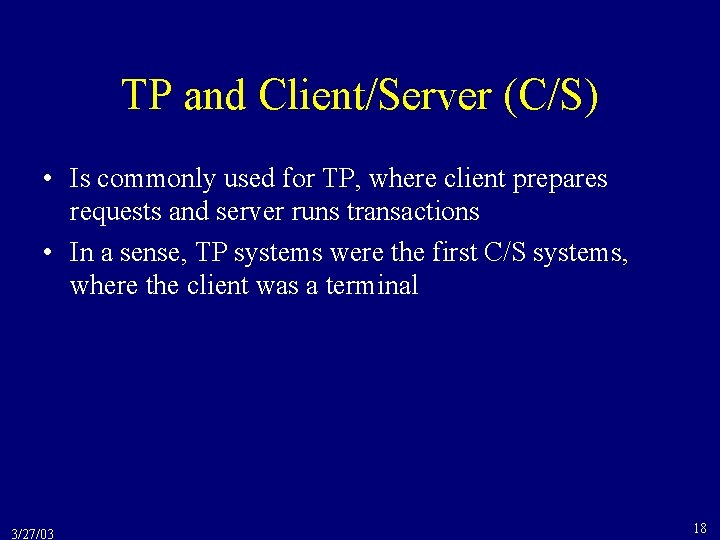

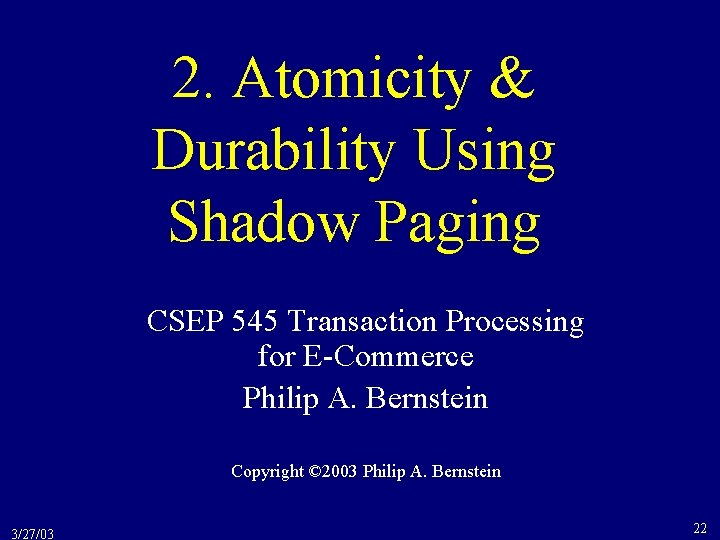

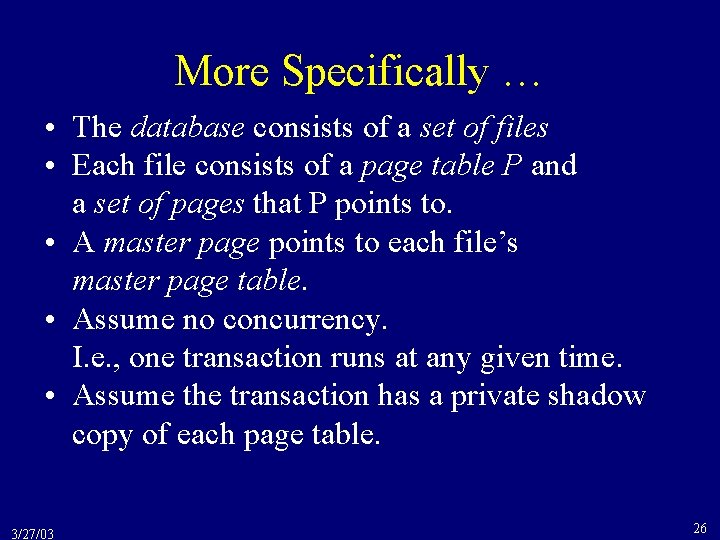

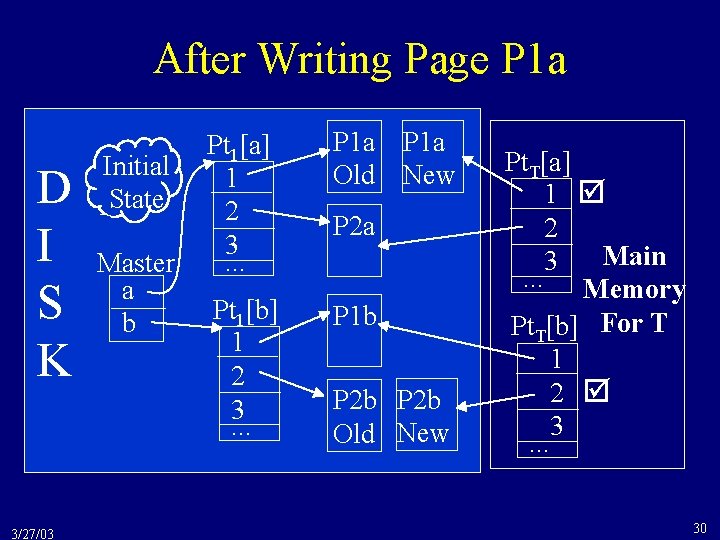

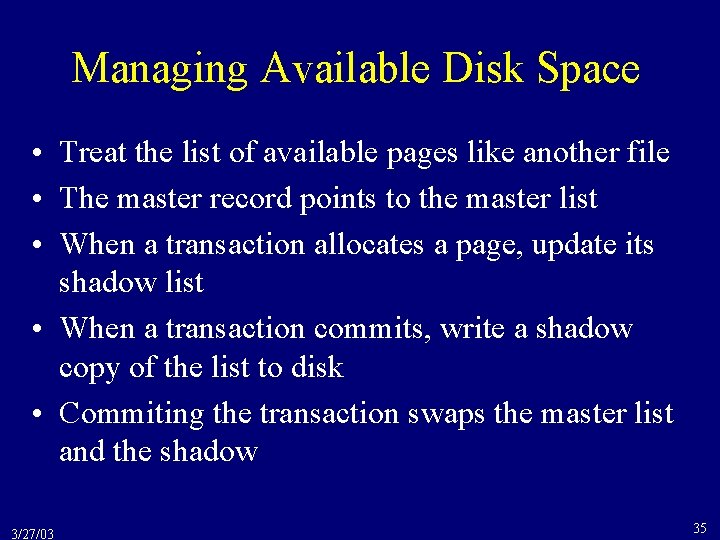

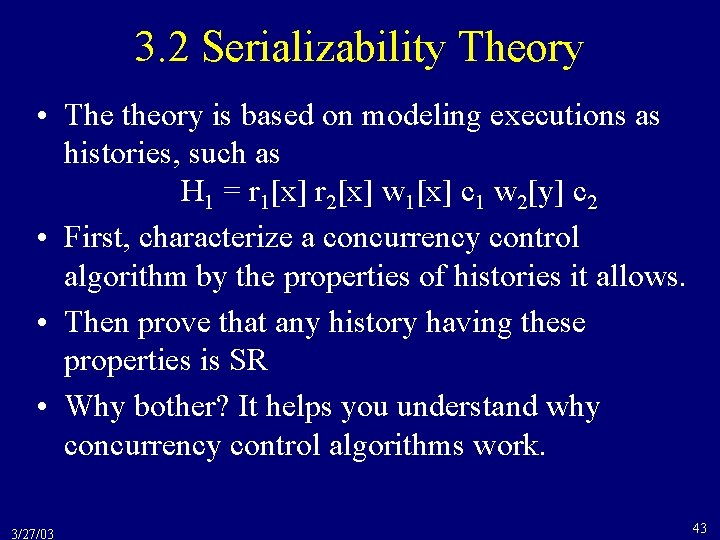

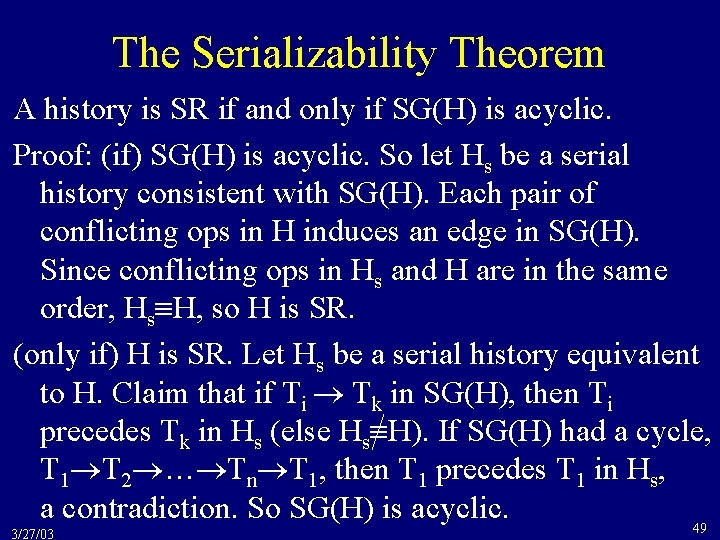

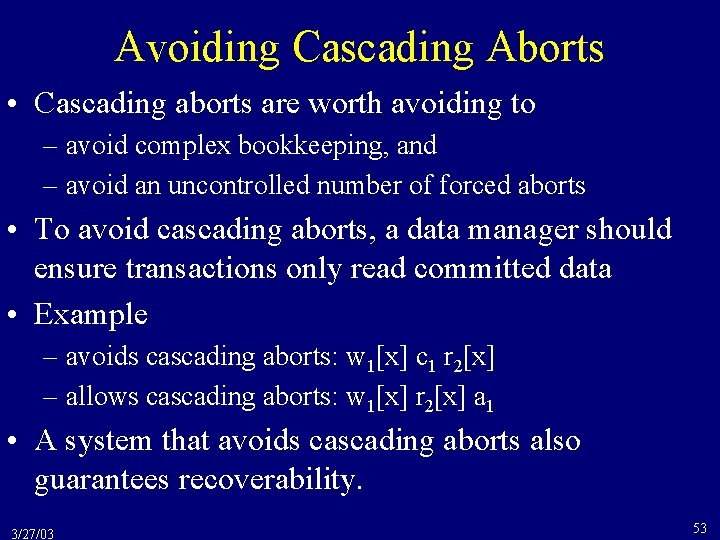

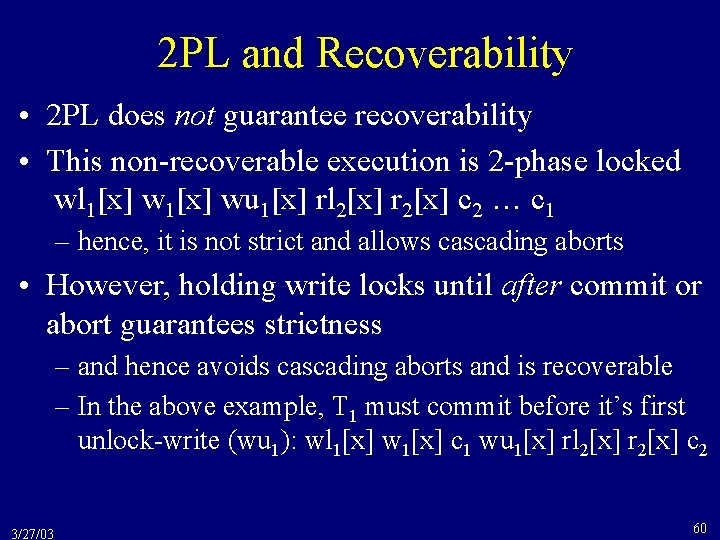

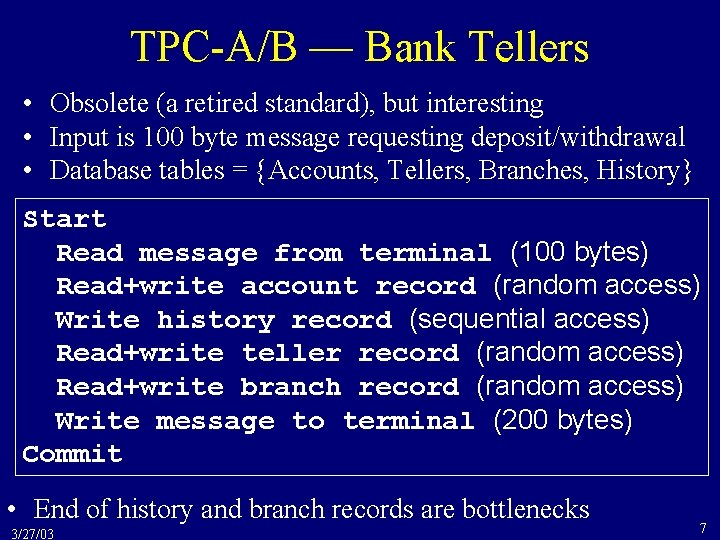

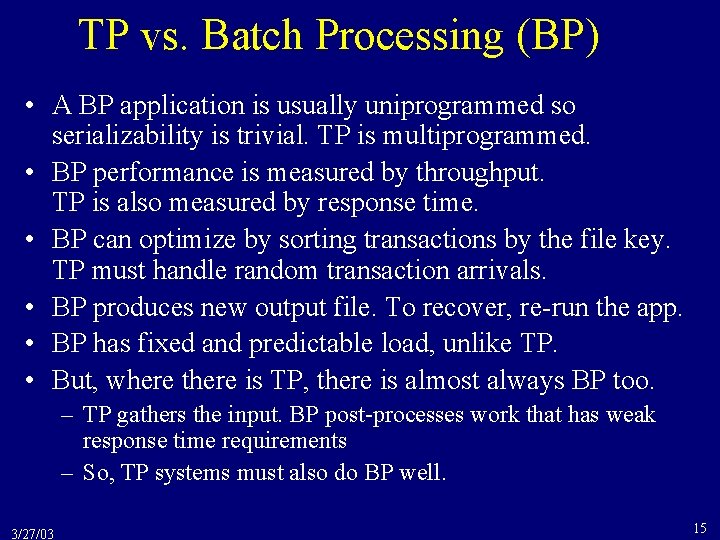

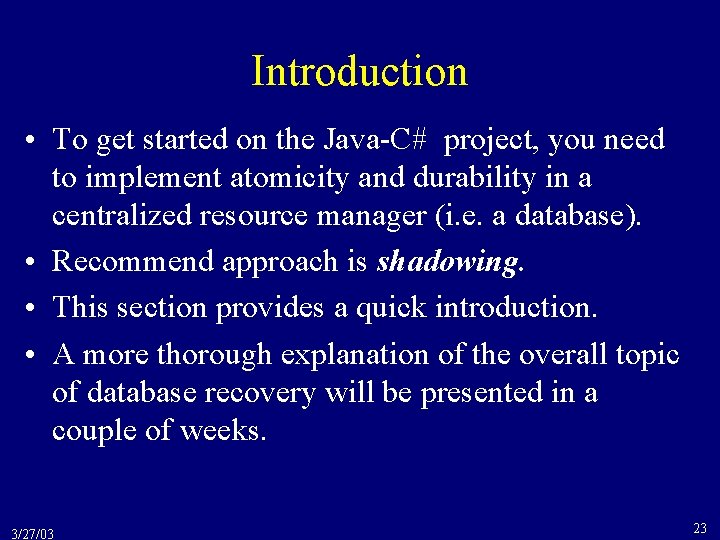

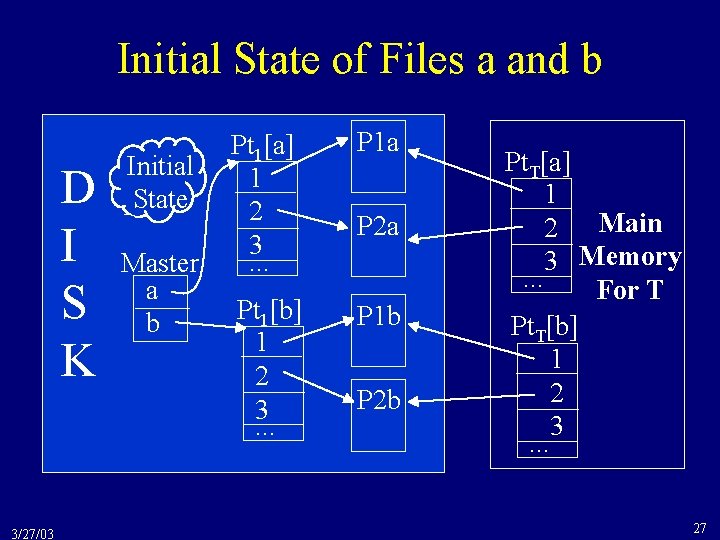

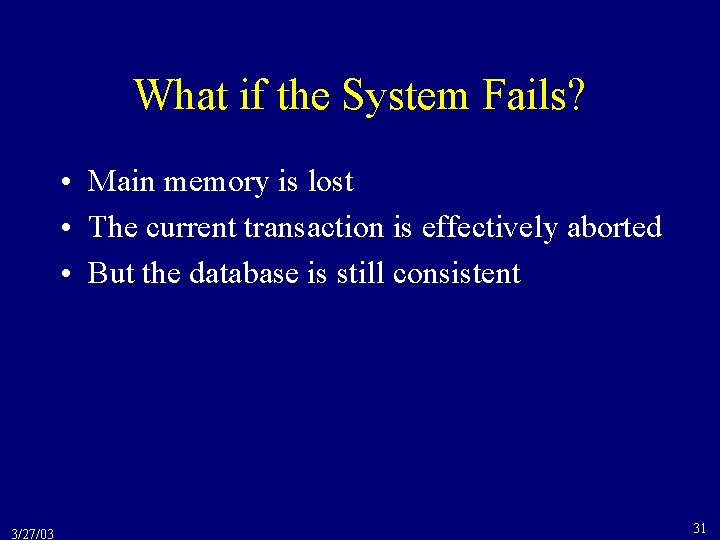

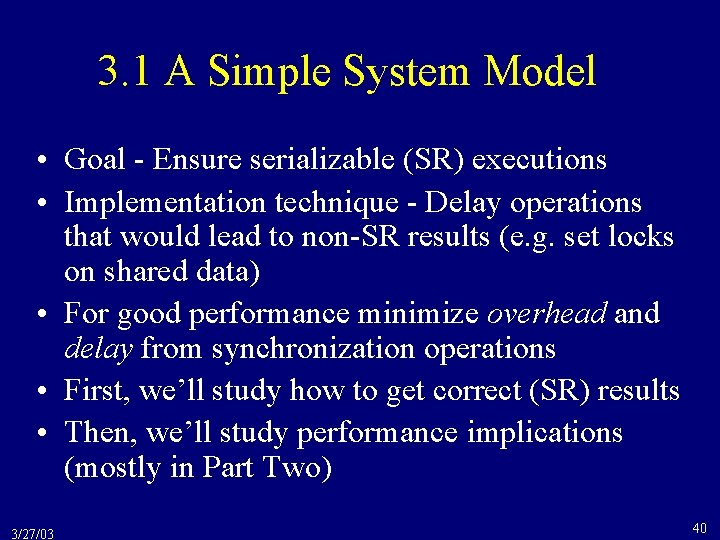

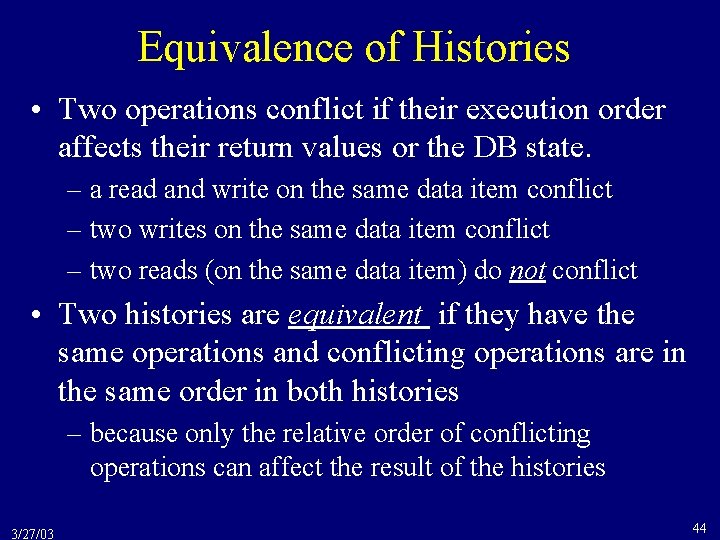

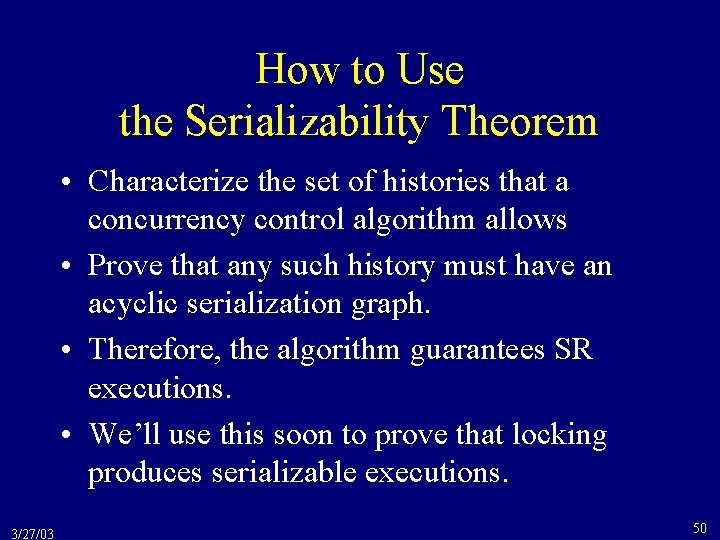

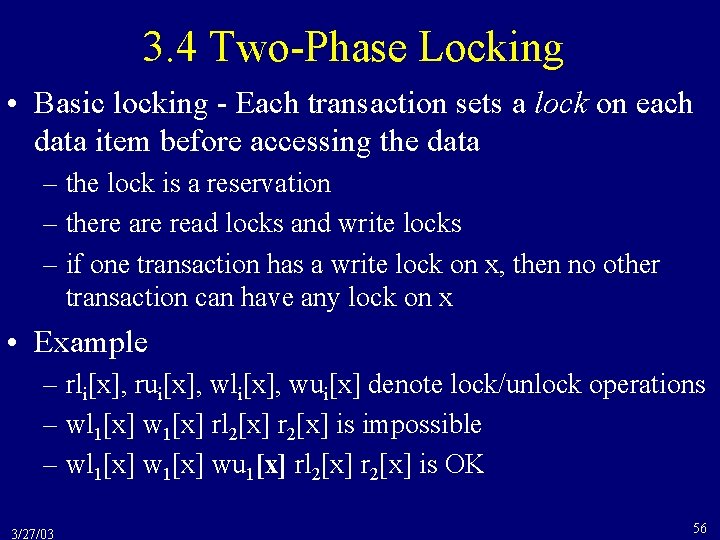

Examples of Equivalence • The following histories are equivalent H 1 = r 1[x] r 2[x] w 1[x] c 1 w 2[y] c 2 H 2 = r 2[x] r 1[x] w 1[x] c 1 w 2[y] c 2 H 3 = r 2[x] r 1[x] w 2[y] c 2 w 1[x] c 1 H 4 = r 2[x] w 2[y] c 2 r 1[x] w 1[x] c 1 • But none of them are equivalent to H 5 = r 1[x] w 1[x] r 2[x] c 1 w 2[y] c 2 because r 2[x] and w 1[x] conflict and r 2[x] precedes w 1[x] in H 1 - H 4, but w 1[x] precedes r 2[x] in H 5. 3/27/03 45

Serializable Histories • A history is serializable if it is equivalent to a serial history • For example, H 1 = r 1[x] r 2[x] w 1[x] c 1 w 2[y] c 2 is equivalent to H 4 = r 2[x] w 2[y] c 2 r 1[x] w 1[x] c 1 (r 2[x] and w 1[x] are in the same order in H 1 and H 4. ) • Therefore, H 1 is serializable. 3/27/03 46

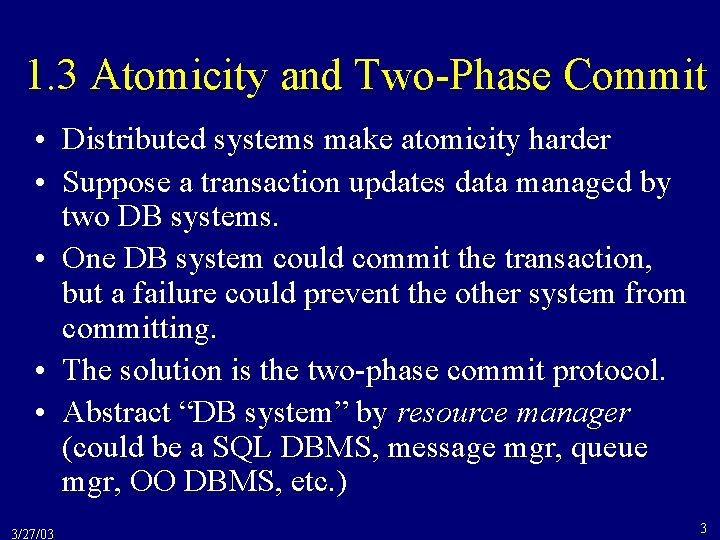

![Another Example H 6 r 1x r 2x w 1x r 3x Another Example • H 6 = r 1[x] r 2[x] w 1[x] r 3[x]](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-47.jpg)

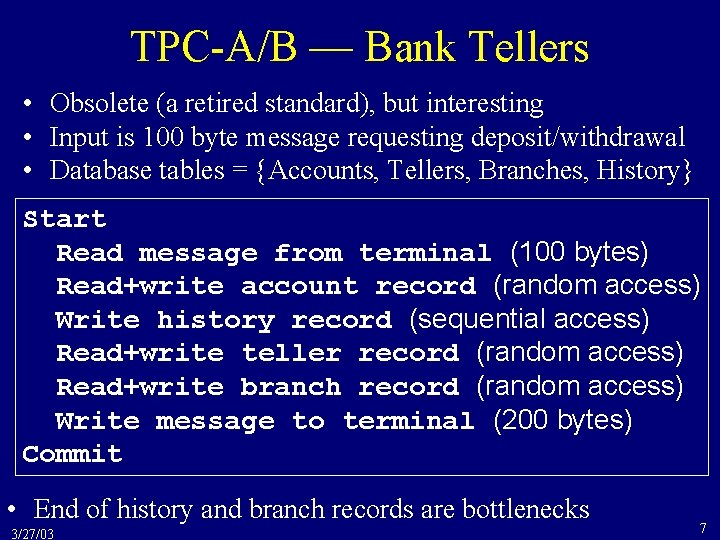

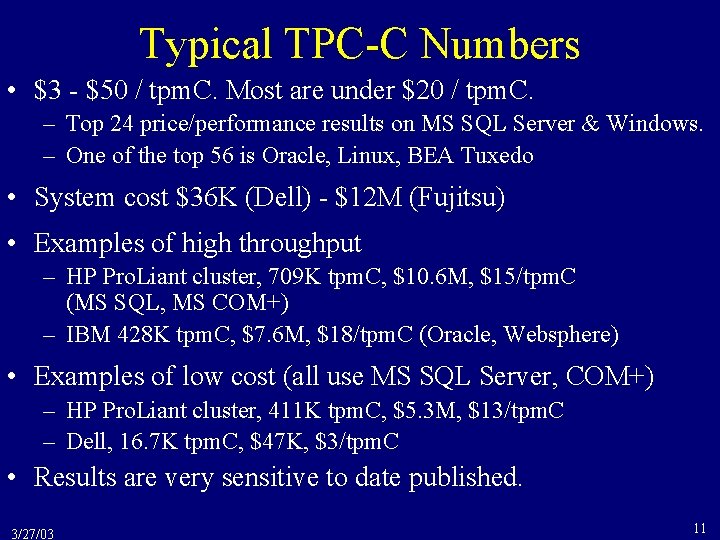

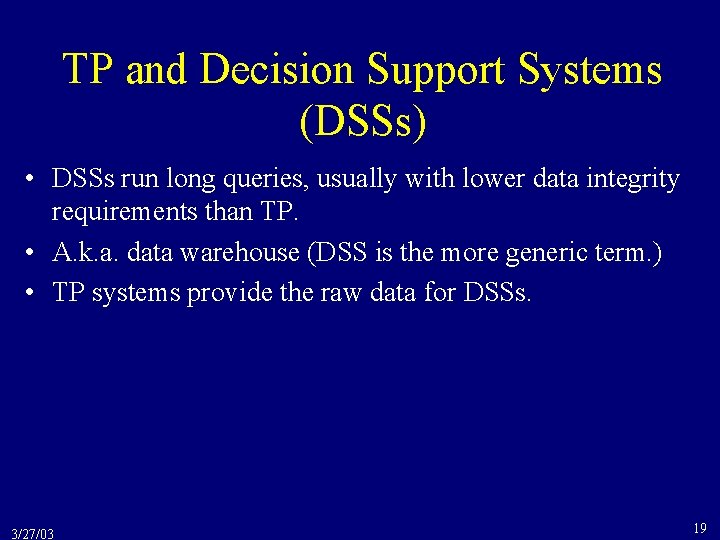

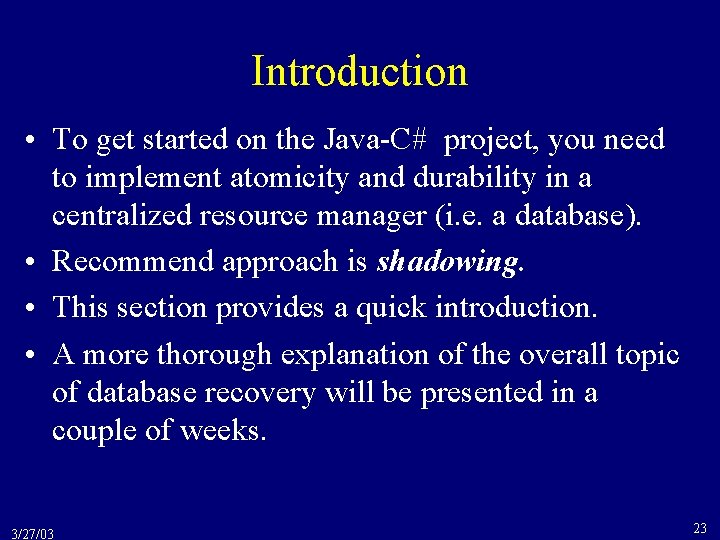

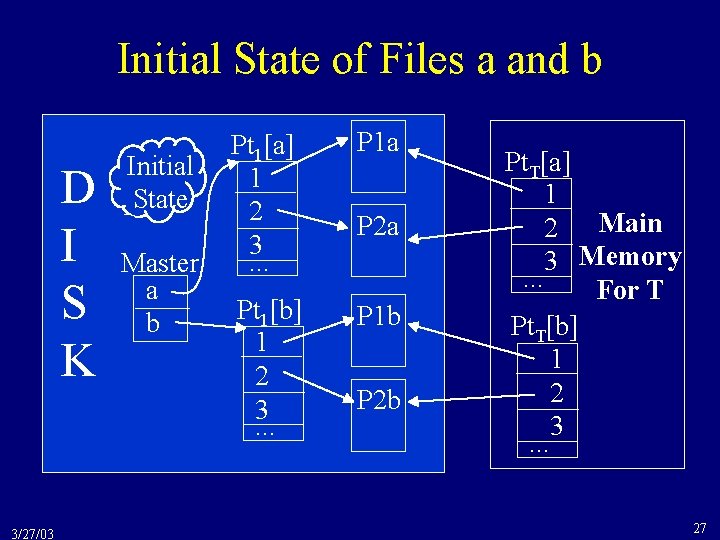

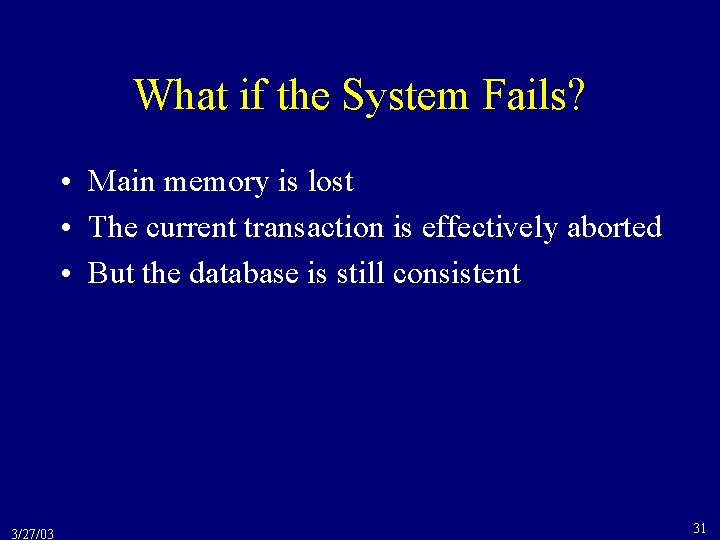

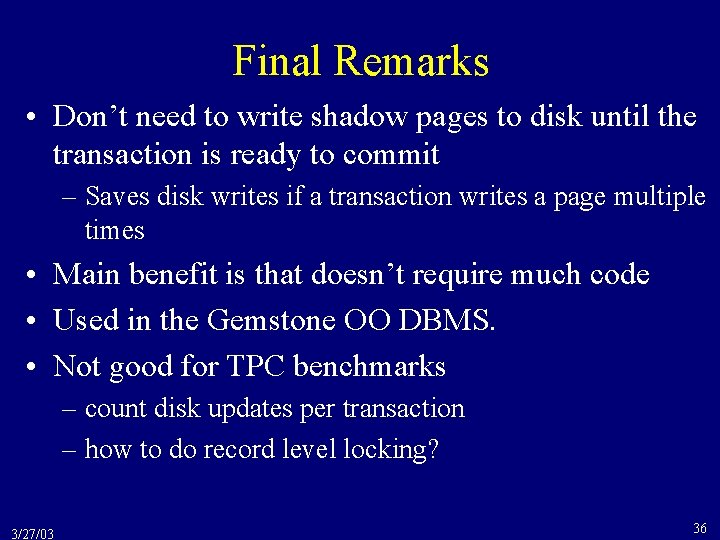

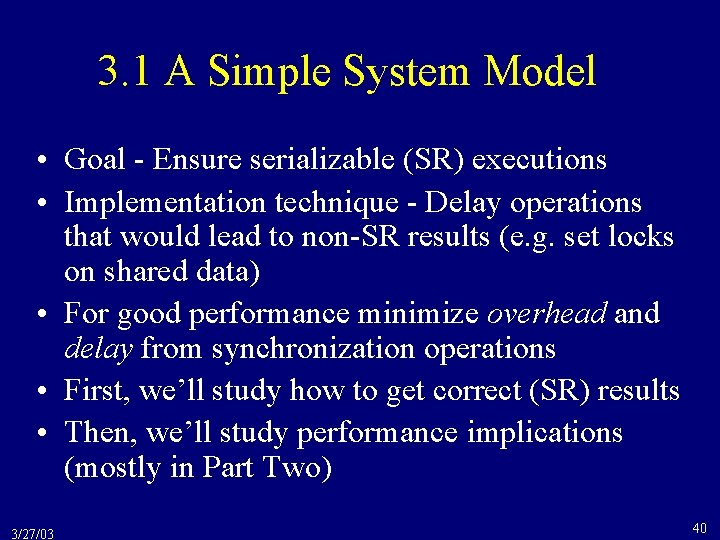

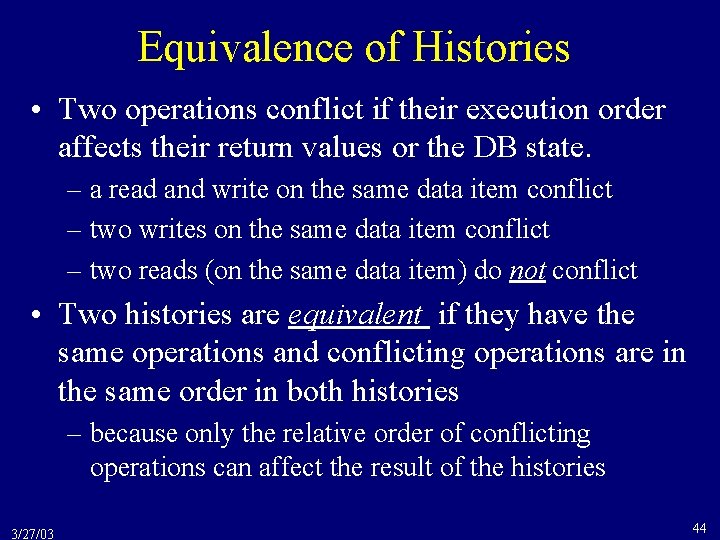

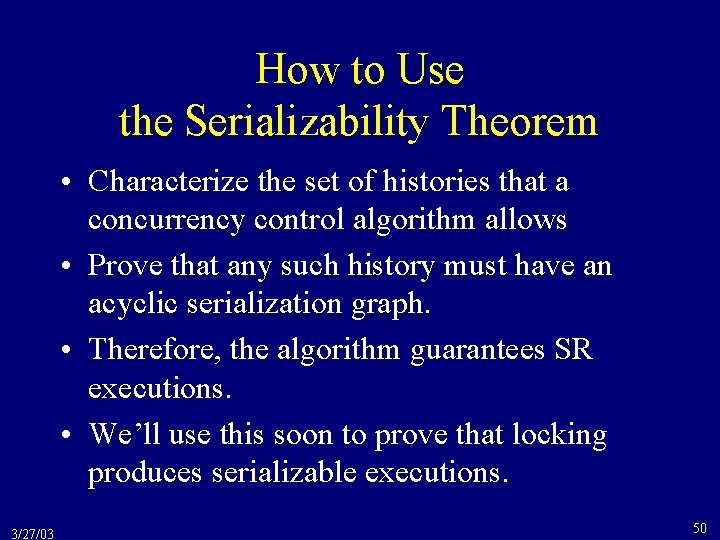

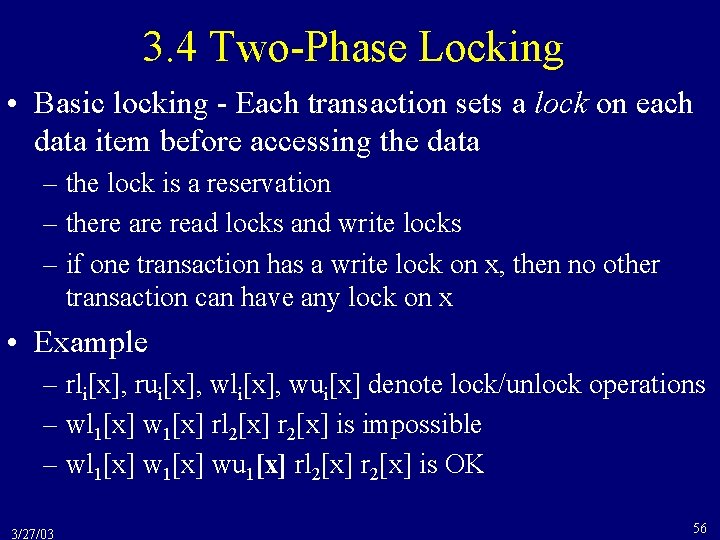

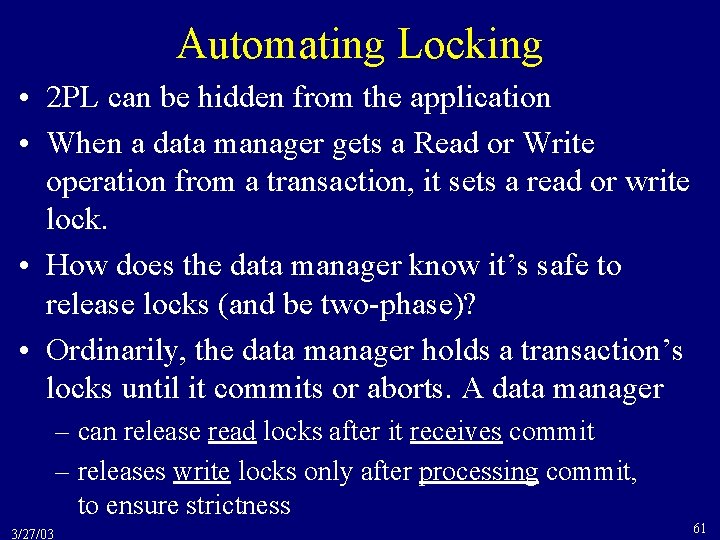

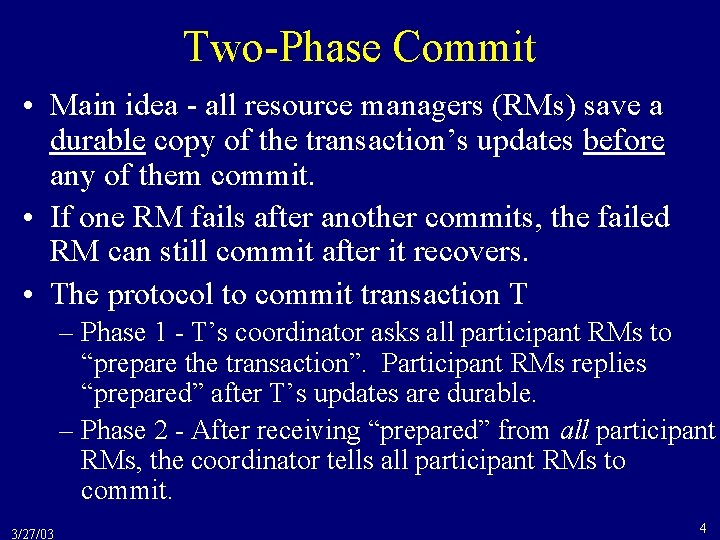

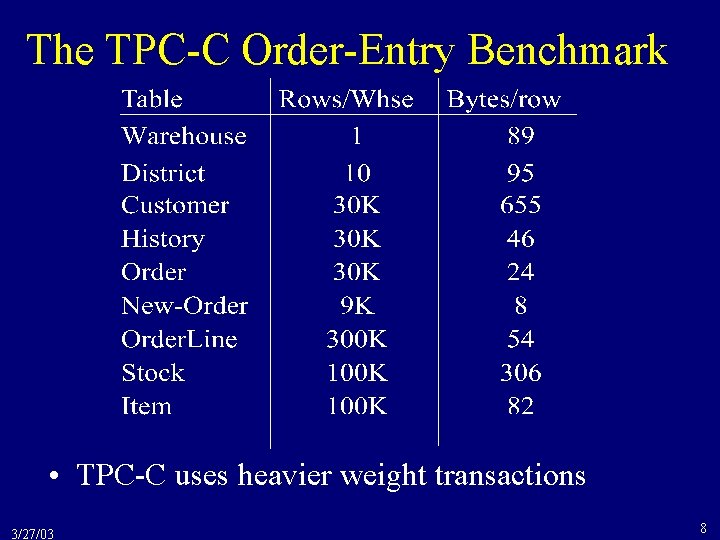

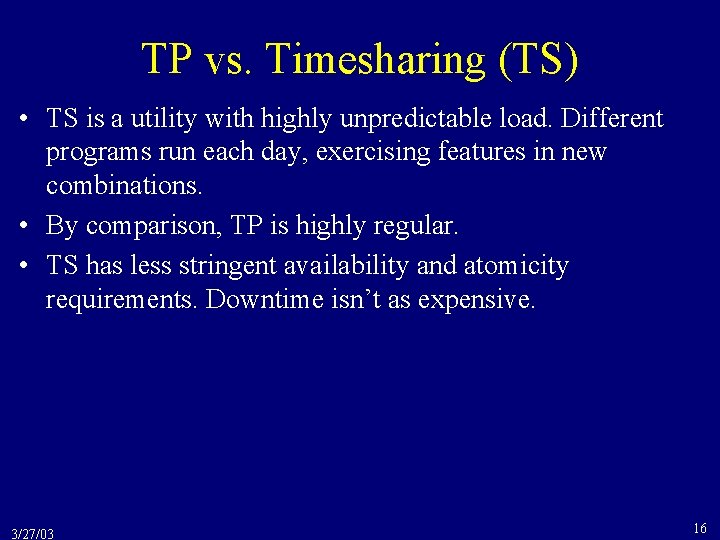

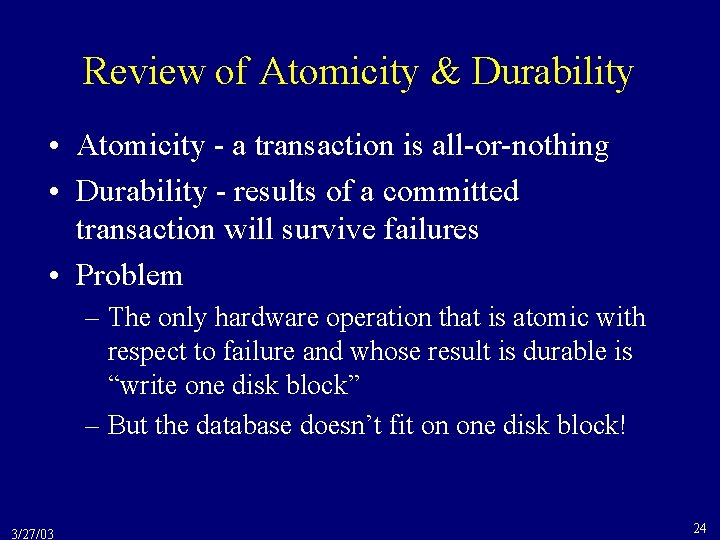

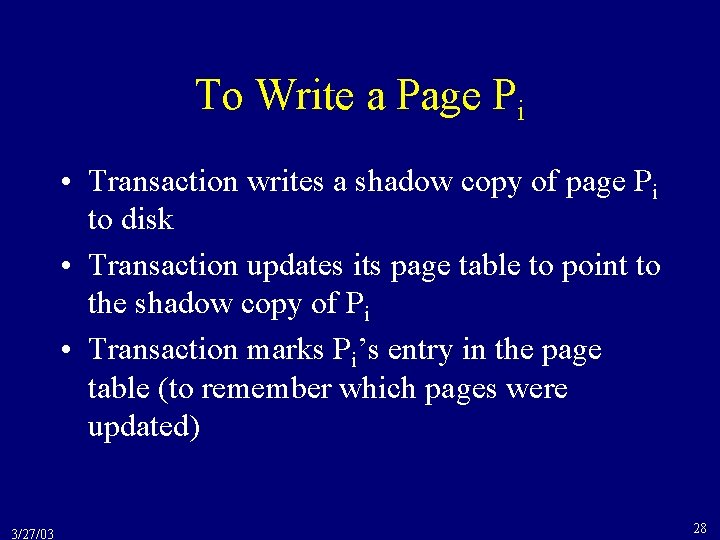

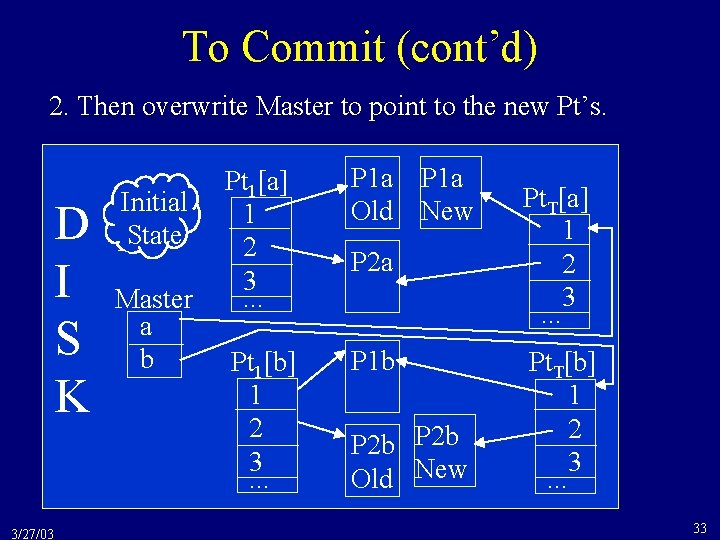

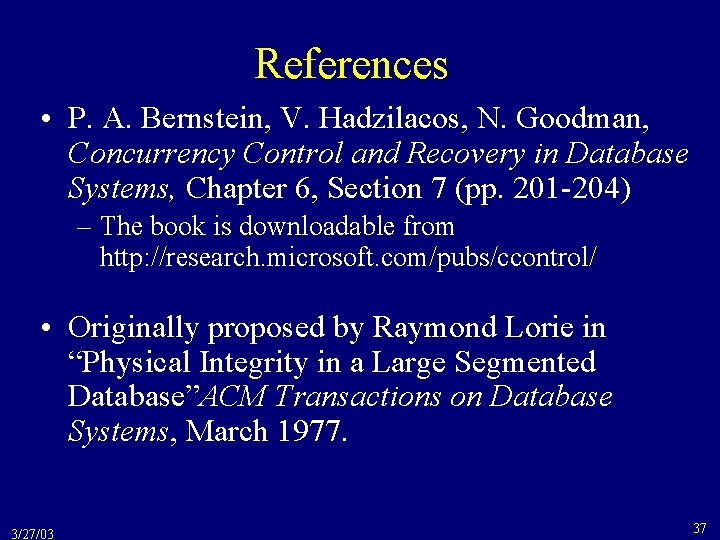

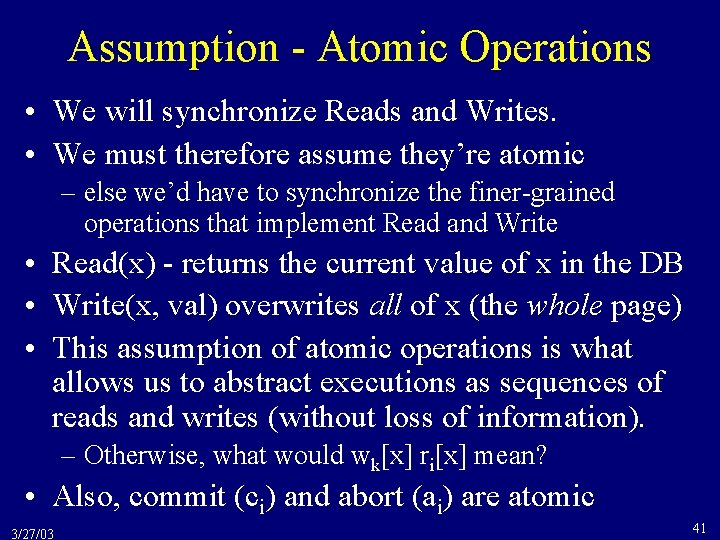

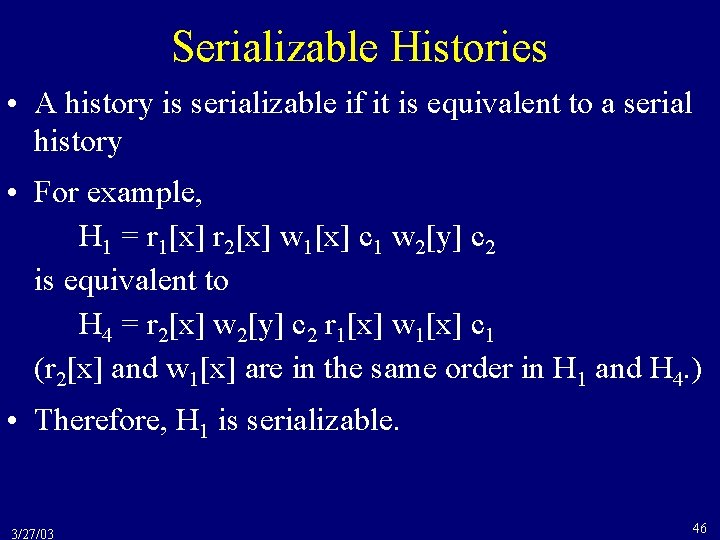

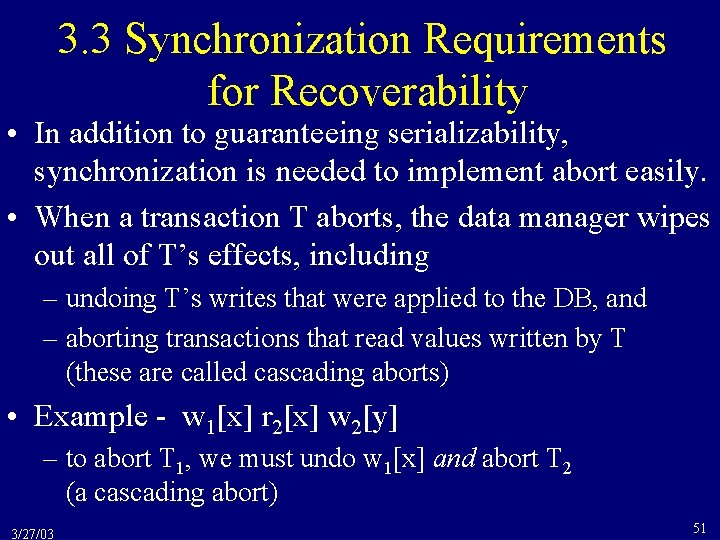

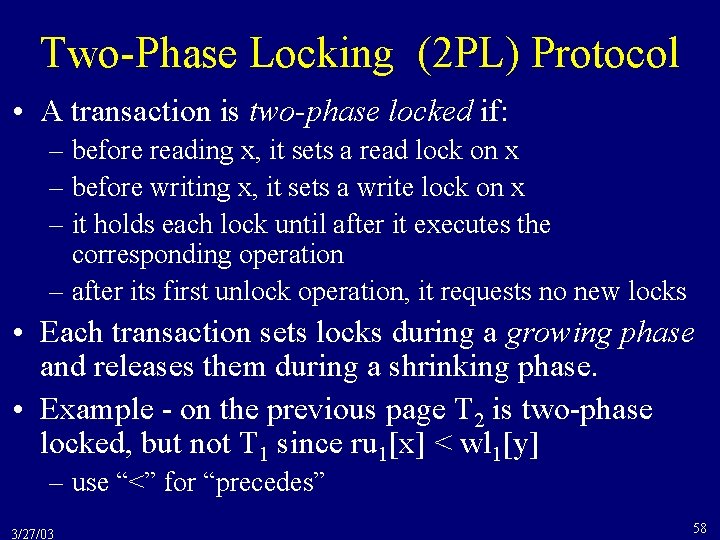

Another Example • H 6 = r 1[x] r 2[x] w 1[x] r 3[x] w 2[y] w 3[x] c 3 w 1[y] c 1 c 2 is equivalent to a serial execution of T 2 T 1 T 3, H 7 = r 2[x] w 2[y] c 2 r 1[x] w 1[y] c 1 r 3[x] w 3[x] c 3 • Each conflict implies a constraint on any equivalent serial history: T 2 T 3 H 6 = r 1[x] r 2[x] w 1[x] r 3[x] w 2[y] w 3[x] c 3 w 1[y] c 1 c 2 T 1 T 3 3/27/03 T 2 T 1 47

Serialization Graphs • A serialization graph, SG(H), for history H tells the effective execution order of transactions in H. • Given history H, SG(H) is a directed graph whose nodes are the committed transactions and whose edges are all Ti Tk such that at least one of Ti’s operations precedes and conflicts with at least one of Tk’s operations H 6 = r 1[x] r 2[x] w 1[x] r 3[x] w 2[y] w 3[x] c 3 w 1[y] c 1 c 2 SG(H 6) = 3/27/03 T 2 T 1 T 3 48

The Serializability Theorem A history is SR if and only if SG(H) is acyclic. Proof: (if) SG(H) is acyclic. So let Hs be a serial history consistent with SG(H). Each pair of conflicting ops in H induces an edge in SG(H). Since conflicting ops in Hs and H are in the same order, Hs H, so H is SR. (only if) H is SR. Let Hs be a serial history equivalent to H. Claim that if Ti Tk in SG(H), then Ti precedes Tk in Hs (else Hs H). If SG(H) had a cycle, T 1 T 2 … Tn T 1, then T 1 precedes T 1 in Hs, a contradiction. So SG(H) is acyclic. 49 3/27/03

How to Use the Serializability Theorem • Characterize the set of histories that a concurrency control algorithm allows • Prove that any such history must have an acyclic serialization graph. • Therefore, the algorithm guarantees SR executions. • We’ll use this soon to prove that locking produces serializable executions. 3/27/03 50

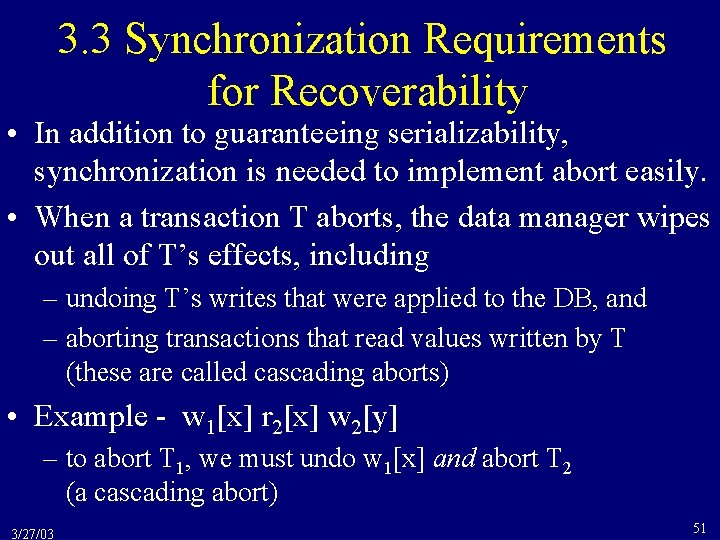

3. 3 Synchronization Requirements for Recoverability • In addition to guaranteeing serializability, synchronization is needed to implement abort easily. • When a transaction T aborts, the data manager wipes out all of T’s effects, including – undoing T’s writes that were applied to the DB, and – aborting transactions that read values written by T (these are called cascading aborts) • Example - w 1[x] r 2[x] w 2[y] – to abort T 1, we must undo w 1[x] and abort T 2 (a cascading abort) 3/27/03 51

Recoverability • If Tk reads from Ti and Ti aborts, then Tk must abort – Example - w 1[x] r 2[x] a 1 implies T 2 must abort • But what if Tk already committed? We’d be stuck. – Example - w 1[x] r 2[x] c 2 a 1 – T 2 can’t abort after it commits • Executions must be recoverable: A transaction T’s commit operation must follow the commit of every transaction from which T read. – Recoverable - w 1[x] r 2[x] c 1 c 2 – Not recoverable - w 1[x] r 2[x] c 2 a 1 • Recoverability requires synchronizing operations. 3/27/03 52

Avoiding Cascading Aborts • Cascading aborts are worth avoiding to – avoid complex bookkeeping, and – avoid an uncontrolled number of forced aborts • To avoid cascading aborts, a data manager should ensure transactions only read committed data • Example – avoids cascading aborts: w 1[x] c 1 r 2[x] – allows cascading aborts: w 1[x] r 2[x] a 1 • A system that avoids cascading aborts also guarantees recoverability. 3/27/03 53

![Strictness Its convenient to undo a write wx by restoring its before image Strictness • It’s convenient to undo a write, w[x], by restoring its before image](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-54.jpg)

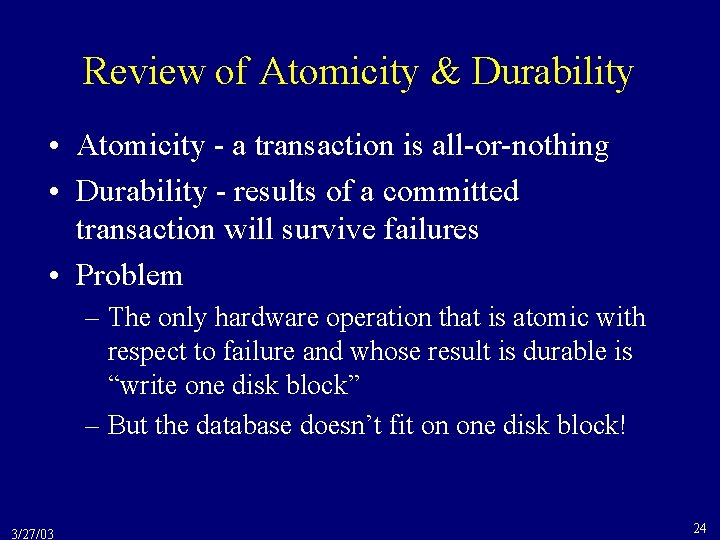

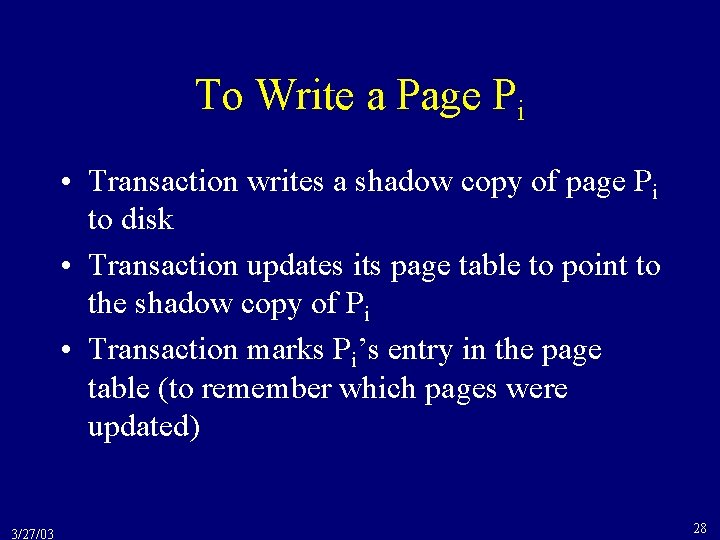

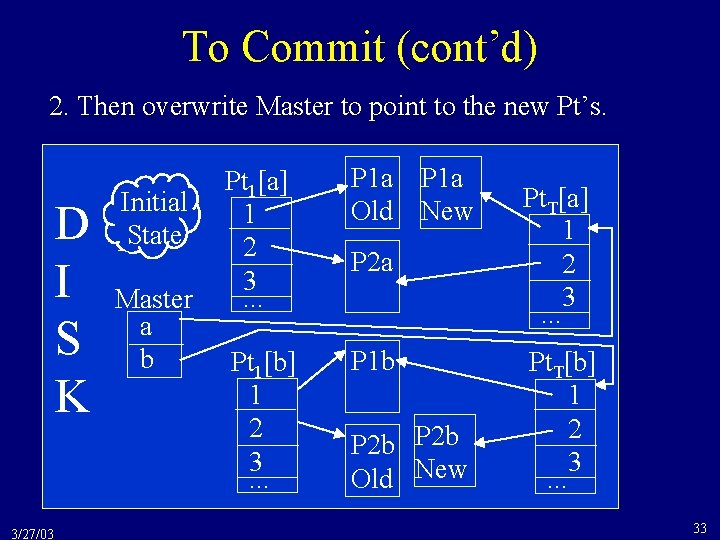

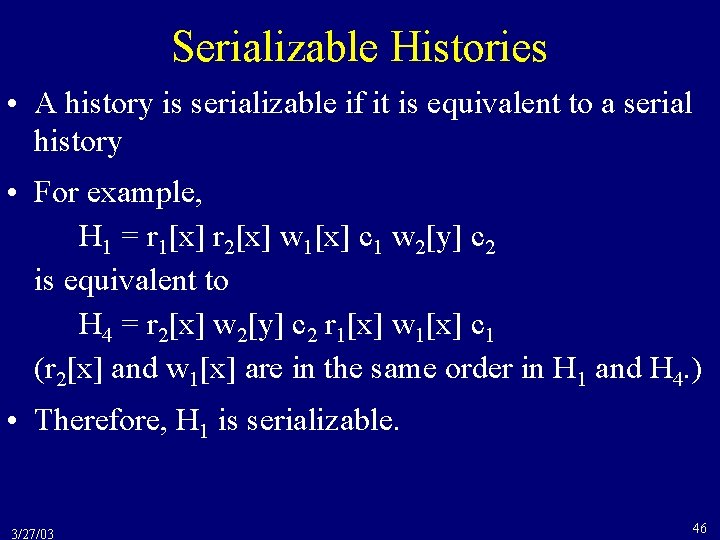

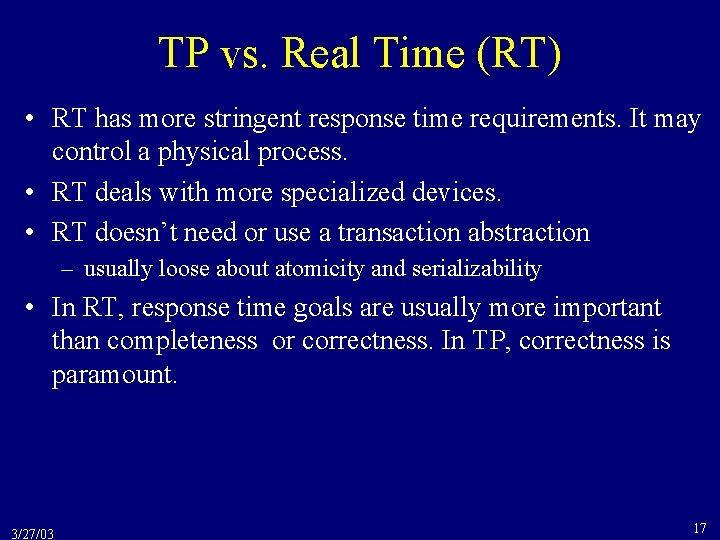

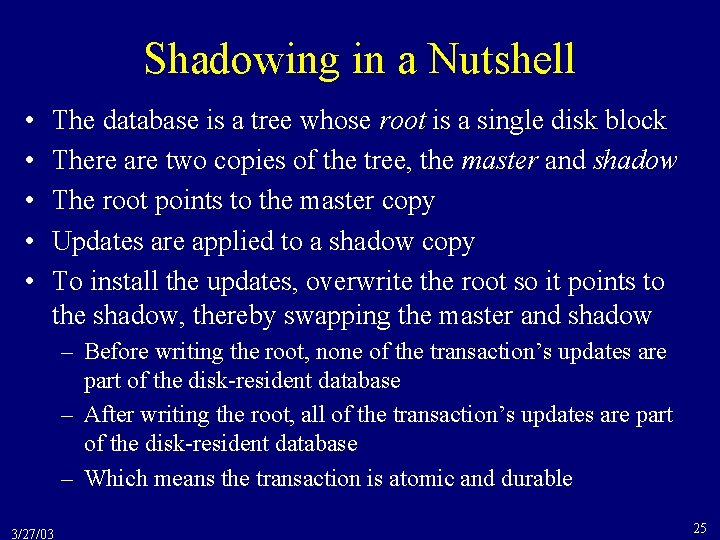

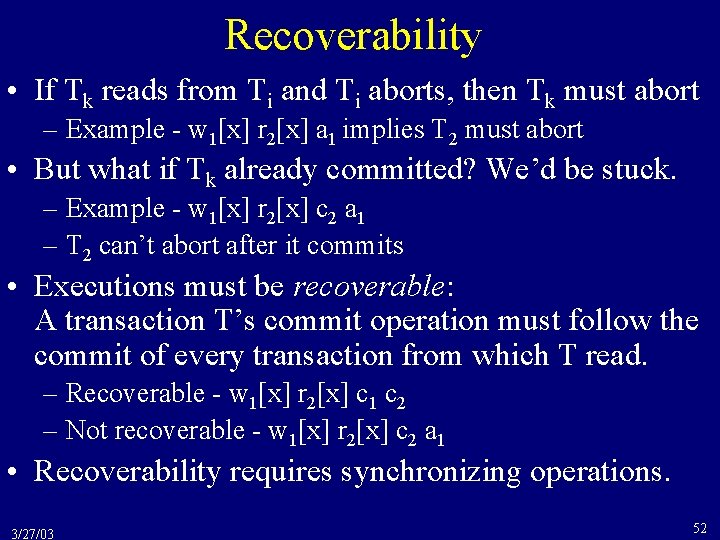

Strictness • It’s convenient to undo a write, w[x], by restoring its before image (=the value of x before w[x] executed) • Example - w 1[x, 1] writes the value “ 1” into x. – w 1[x, 1] w 1[y, 3] c 1 w 2[y, 1] r 2[x] a 2 – abort T 2 by restoring the before image of w 2[y, 1], = 3 • But this isn’t always possible. – For example, consider w 1[x, 2] w 2[x, 3] a 1 a 2 – a 1 & a 2 can’t be implemented by restoring before images – notice that w 1[x, 2] w 2[x, 3] a 2 a 1 would be OK • A system is strict if it only reads or overwrites committed data. 3/27/03 54

![Strictness contd More precisely a system is strict if it only executes rix Strictness (cont’d) • More precisely, a system is strict if it only executes ri[x]](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-55.jpg)

Strictness (cont’d) • More precisely, a system is strict if it only executes ri[x] or wi[x] if all previous transactions that wrote x committed or aborted. • Examples (“…” marks a non-strict prefix) – strict: w 1[x] c 1 w 2[x] a 2 – not strict: w 1[x] w 2[x] … a 1 a 2 – strict: w 1[x] w 1[y] c 1 w 2[y] r 2[x] a 2 – not strict: w 1[x] w 1[y] w 2[y] a 1 r 2[x] a 2 • “Strict” implies “avoids cascading aborts. ” 3/27/03 55

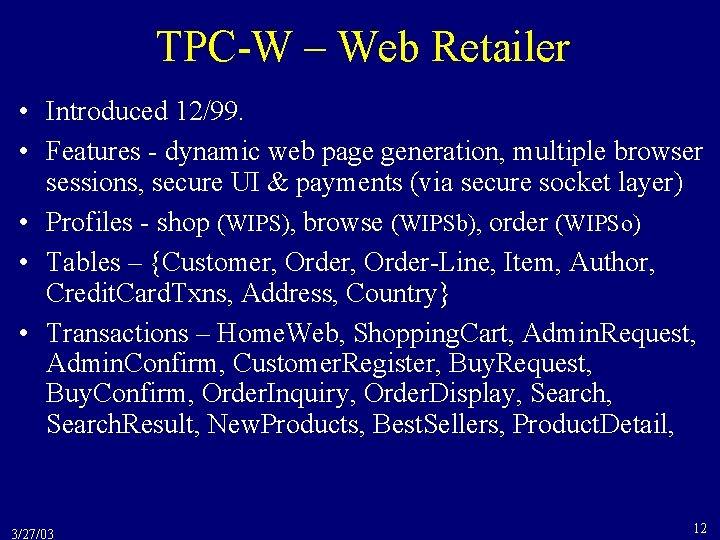

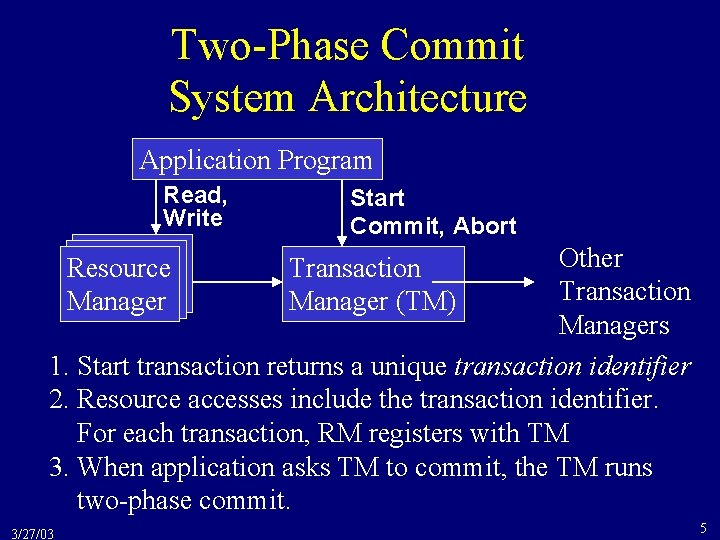

3. 4 Two-Phase Locking • Basic locking - Each transaction sets a lock on each data item before accessing the data – the lock is a reservation – there are read locks and write locks – if one transaction has a write lock on x, then no other transaction can have any lock on x • Example – rli[x], rui[x], wli[x], wui[x] denote lock/unlock operations – wl 1[x] w 1[x] rl 2[x] r 2[x] is impossible – wl 1[x] wu 1[x] rl 2[x] r 2[x] is OK 3/27/03 56

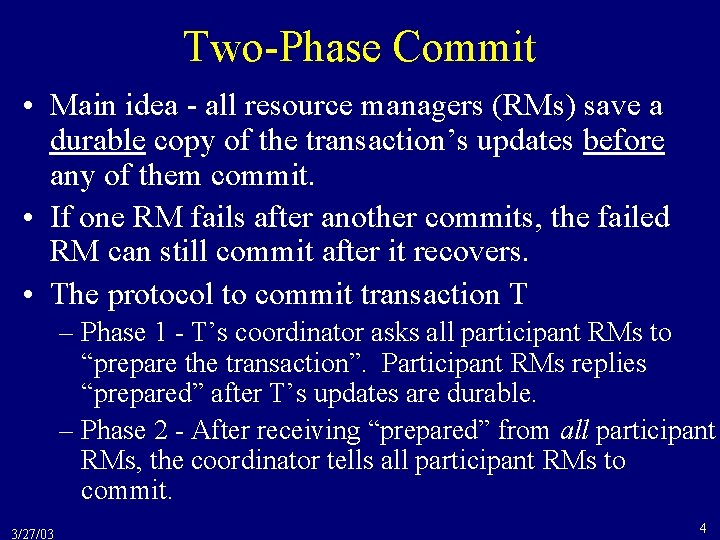

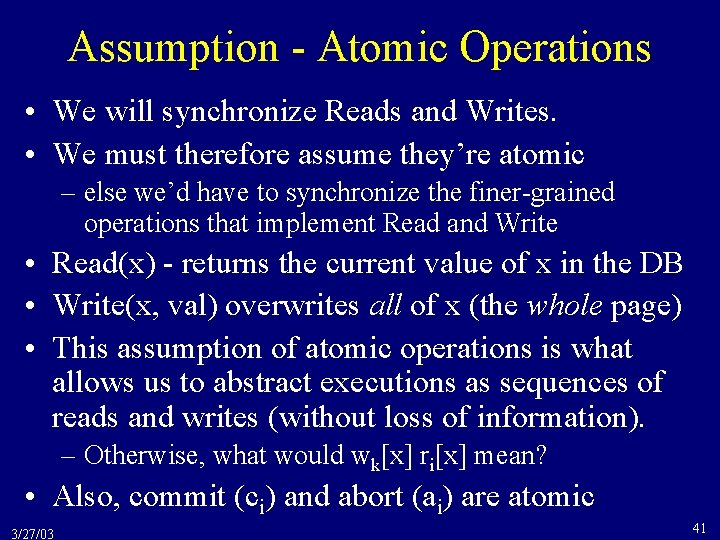

![Basic Locking Isnt Enough Basic locking doesnt guarantee serializability rl 1x ru Basic Locking Isn’t Enough • Basic locking doesn’t guarantee serializability • rl 1[x] ru](https://slidetodoc.com/presentation_image_h2/6936ec3d5aa04b26d2c062e9f8c88892/image-57.jpg)

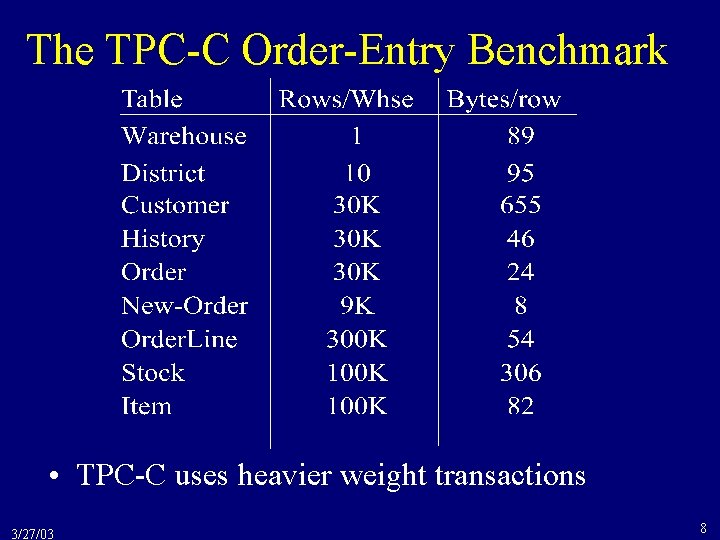

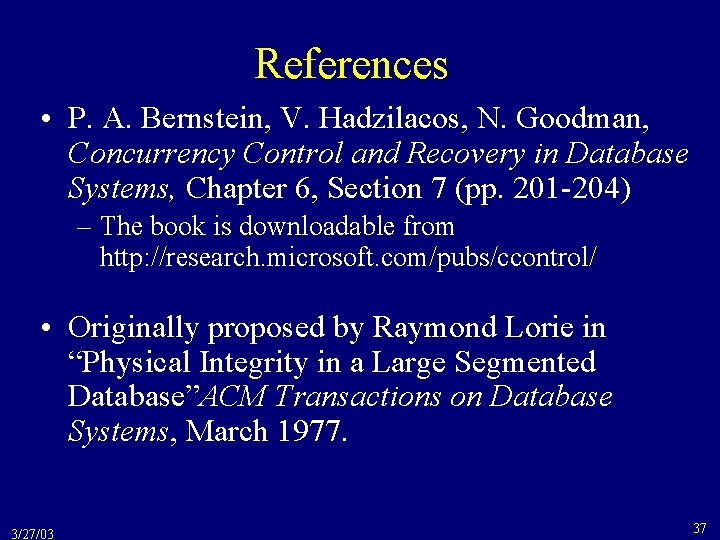

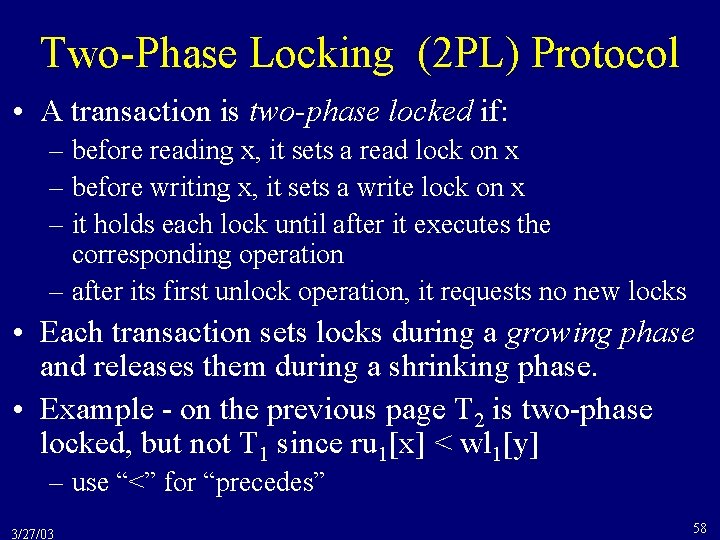

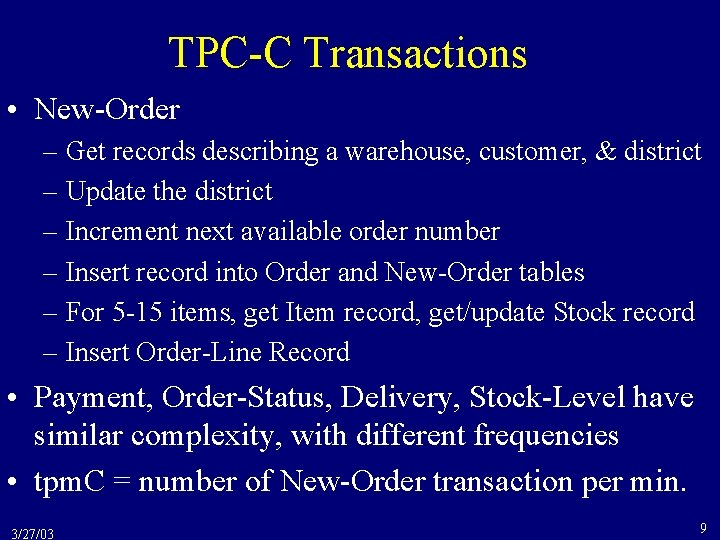

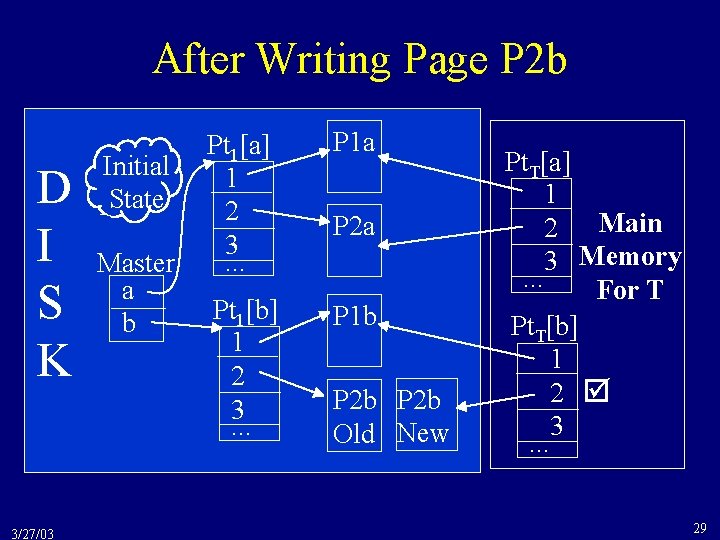

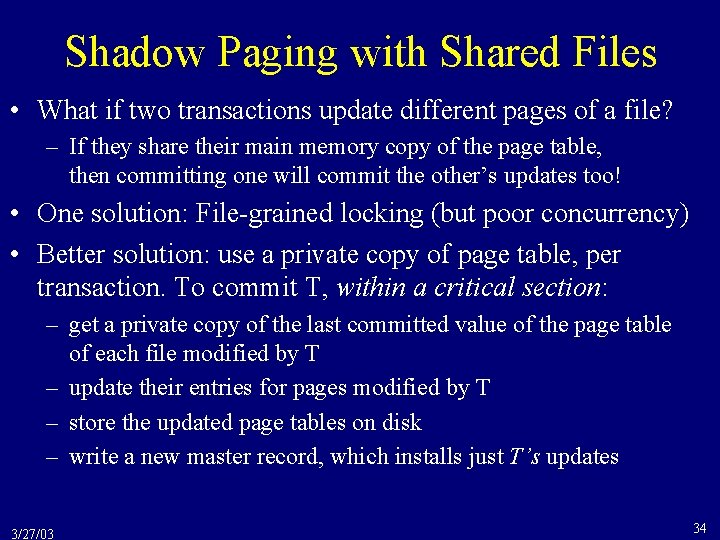

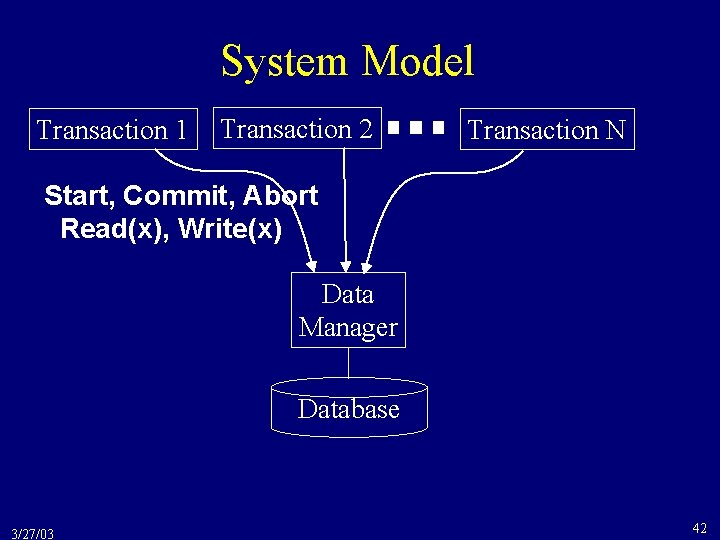

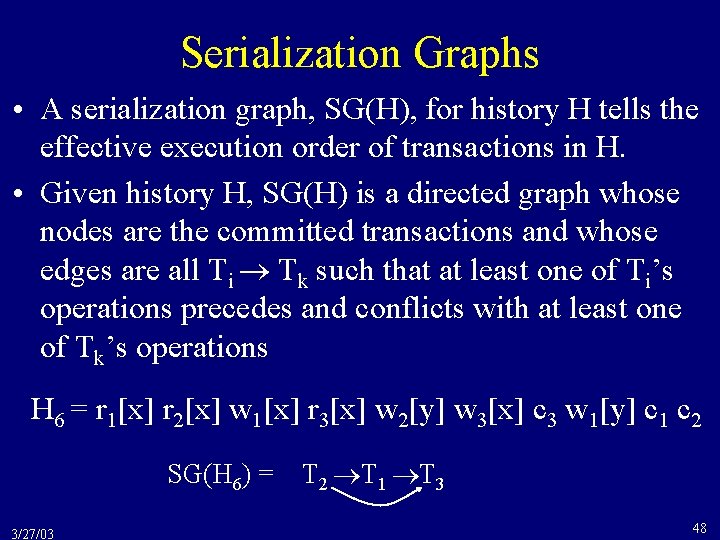

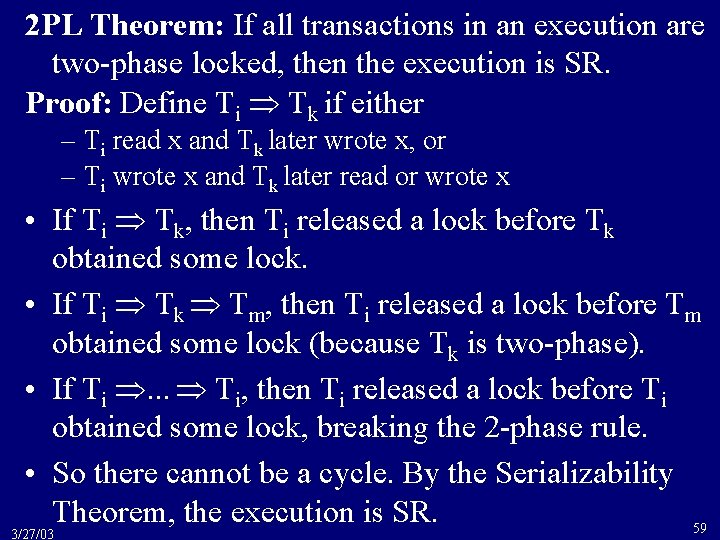

Basic Locking Isn’t Enough • Basic locking doesn’t guarantee serializability • rl 1[x] ru 1[x] wl 1[y] wu 1[y] c 1 rl 2[y] r 2[y] wl 2[x] w 2[x] ru 2[y] wu 2[x] c 2 • Eliminating the lock operations, we have r 1[x] r 2[y] w 2[x] c 2 w 1[y] c 1 which isn’t SR • The problem is that locks aren’t being released properly. 3/27/03 57

Two-Phase Locking (2 PL) Protocol • A transaction is two-phase locked if: – before reading x, it sets a read lock on x – before writing x, it sets a write lock on x – it holds each lock until after it executes the corresponding operation – after its first unlock operation, it requests no new locks • Each transaction sets locks during a growing phase and releases them during a shrinking phase. • Example - on the previous page T 2 is two-phase locked, but not T 1 since ru 1[x] < wl 1[y] – use “<” for “precedes” 3/27/03 58

2 PL Theorem: If all transactions in an execution are two-phase locked, then the execution is SR. Proof: Define Ti Tk if either – Ti read x and Tk later wrote x, or – Ti wrote x and Tk later read or wrote x • If Ti Tk, then Ti released a lock before Tk obtained some lock. • If Ti Tk Tm, then Ti released a lock before Tm obtained some lock (because Tk is two-phase). • If Ti . . . Ti, then Ti released a lock before Ti obtained some lock, breaking the 2 -phase rule. • So there cannot be a cycle. By the Serializability Theorem, the execution is SR. 59 3/27/03

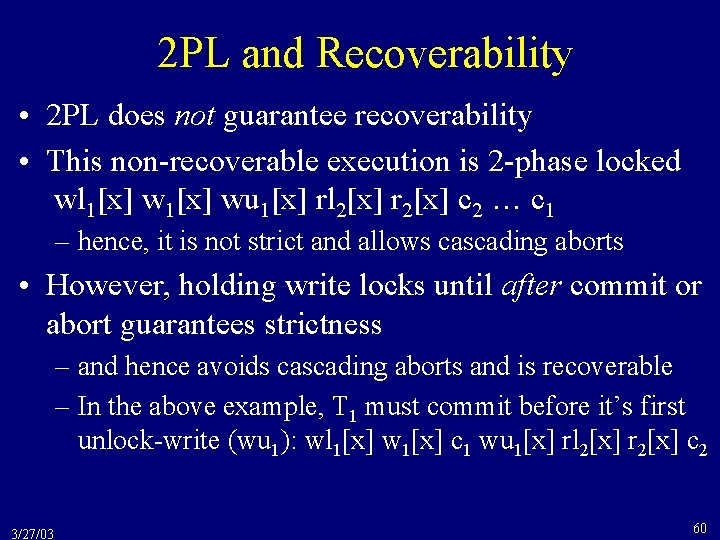

2 PL and Recoverability • 2 PL does not guarantee recoverability • This non-recoverable execution is 2 -phase locked wl 1[x] wu 1[x] rl 2[x] r 2[x] c 2 … c 1 – hence, it is not strict and allows cascading aborts • However, holding write locks until after commit or abort guarantees strictness – and hence avoids cascading aborts and is recoverable – In the above example, T 1 must commit before it’s first unlock-write (wu 1): wl 1[x] w 1[x] c 1 wu 1[x] rl 2[x] r 2[x] c 2 3/27/03 60

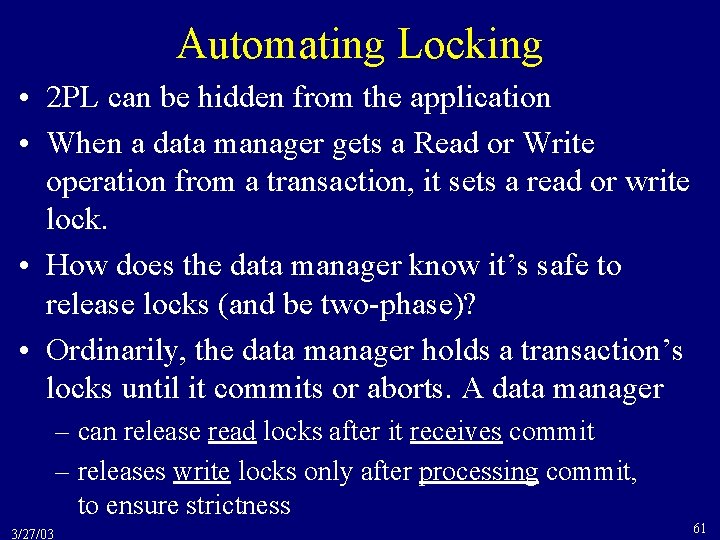

Automating Locking • 2 PL can be hidden from the application • When a data manager gets a Read or Write operation from a transaction, it sets a read or write lock. • How does the data manager know it’s safe to release locks (and be two-phase)? • Ordinarily, the data manager holds a transaction’s locks until it commits or aborts. A data manager – can release read locks after it receives commit – releases write locks only after processing commit, to ensure strictness 3/27/03 61