1 Data Preprocessing in Python. Ahmedul Kabir, TA, CS 548, Spring 2015

2 Preprocessing Techniques Covered

- Standardization and Normalization
- Missing value replacement
- Resampling
- Discretization
- Feature Selection
- Dimensionality Reduction: PCA

3 Python Packages/Tools for Data Mining

- Scikit-learn
- Orange
- Pandas
- MLPy
- MDP
- PyBrain
- … and many more

4 Some Other Basic Packages

- NumPy and SciPy: fundamental packages for scientific computing with Python. They contain powerful n-dimensional array objects and useful linear algebra, random number, and other capabilities.
- Pandas: contains useful data structures and algorithms.
- Matplotlib: contains functions for plotting/visualizing data.

5 Standardization and Normalization

Standardization: transforms the data so that each feature has zero mean and unit variance. Also called scaling. Use the function sklearn.preprocessing.scale(). Parameters:

- X: data to be scaled
- with_mean: Boolean; whether to center the data (make the mean zero)
- with_std: Boolean; whether to scale to unit standard deviation

Normalization: rescales each sample (row) or feature (column) so that it has unit norm. Use the function sklearn.preprocessing.normalize(). Parameters:

- X: data to be normalized
- norm: which norm to use: 'l1' or 'l2'
- axis: whether to normalize by row (axis=1, the default) or by column (axis=0)

Note that normalize() does not map values onto the [0, 1] range; that kind of rescaling is done by sklearn.preprocessing.MinMaxScaler.
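To make the difference between unit-norm normalization and [0, 1] rescaling concrete, here is a minimal sketch. The toy matrix and the variable names are assumptions chosen for illustration; MinMaxScaler is not mentioned on the slide and is shown only for contrast.

```python
import numpy as np
from sklearn import preprocessing

# Toy data (illustrative only): 3 samples, 3 features.
X = np.array([[1., -1., 2.],
              [2., 0., 0.],
              [0., 1., -1.]])

# Row-wise l2 normalization: every sample ends up with Euclidean length 1.
X_l2 = preprocessing.normalize(X, norm='l2', axis=1)
row_norms = np.linalg.norm(X_l2, axis=1)

# Column-wise min-max scaling: every feature is mapped onto [0, 1].
X_minmax = preprocessing.MinMaxScaler().fit_transform(X)

print(row_norms)
print(X_minmax.min(axis=0), X_minmax.max(axis=0))
```

After normalize() the row norms are all 1, while the individual values can still be negative; after MinMaxScaler each column spans exactly 0 to 1.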

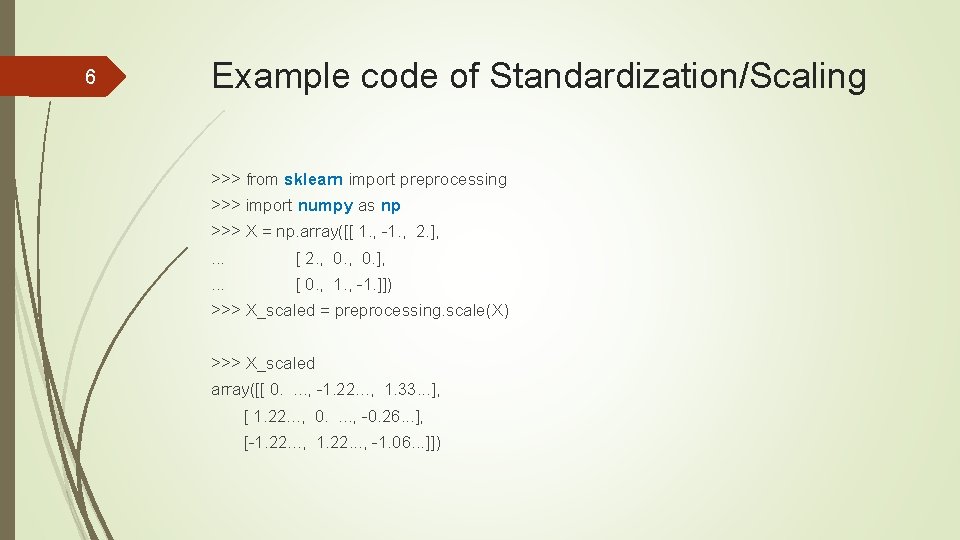

6 Example code of Standardization/Scaling

    >>> from sklearn import preprocessing
    >>> import numpy as np
    >>> X = np.array([[ 1., -1.,  2.],
    ...               [ 2.,  0.,  0.],
    ...               [ 0.,  1., -1.]])
    >>> X_scaled = preprocessing.scale(X)
    >>> X_scaled
    array([[ 0.  ..., -1.22...,  1.33...],
           [ 1.22...,  0.  ..., -0.26...],
           [-1.22...,  1.22..., -1.06...]])
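One way to check the result of scale() is to compute the per-column mean and standard deviation afterwards. The sketch below reuses the matrix from the example above; the variable names col_means, col_stds, and X_centered are illustrative.

```python
import numpy as np
from sklearn import preprocessing

X = np.array([[1., -1., 2.],
              [2., 0., 0.],
              [0., 1., -1.]])

X_scaled = preprocessing.scale(X)

# Each column (feature) should now have mean 0 and standard deviation 1.
col_means = X_scaled.mean(axis=0)
col_stds = X_scaled.std(axis=0)

# with_std=False only centers the data; the spread of each column is unchanged.
X_centered = preprocessing.scale(X, with_std=False)

print(col_means, col_stds)
```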

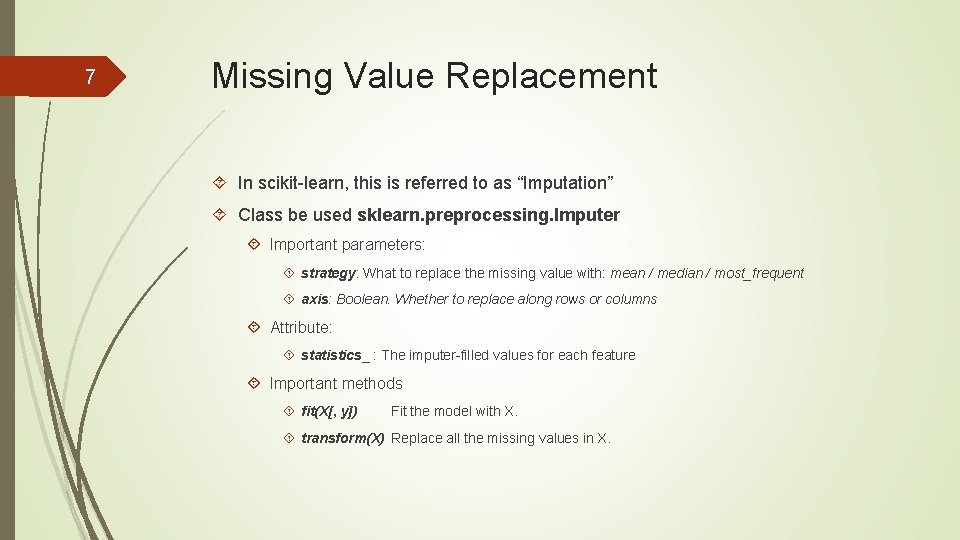

7 Missing Value Replacement

In scikit-learn, this is referred to as "imputation". The class to use is sklearn.preprocessing.Imputer (scikit-learn 0.20 and later provide sklearn.impute.SimpleImputer in its place).

Important parameters:

- strategy: what to replace the missing value with: mean / median / most_frequent
- axis: 0 or 1; whether to impute along columns (axis=0) or along rows (axis=1)

Attribute:

- statistics_: the fill value computed for each feature

Important methods:

- fit(X[, y]): fit the imputer on X
- transform(X): replace all the missing values in X

8 Example code for Replacing Missing Values

    >>> import numpy as np
    >>> from sklearn.preprocessing import Imputer
    >>> imp = Imputer(missing_values='NaN', strategy='mean', axis=0)
    >>> imp.fit([[1, 2], [np.nan, 3], [7, 6]])
    Imputer(axis=0, copy=True, missing_values='NaN', strategy='mean', verbose=0)
    >>> X = [[np.nan, 2], [6, np.nan], [7, 6]]
    >>> print(imp.transform(X))
    [[ 4.          2.        ]
     [ 6.          3.666...]
     [ 7.          6.        ]]
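The Imputer class used above was removed in scikit-learn 0.22. On a recent installation, the same example can be sketched with sklearn.impute.SimpleImputer, which always imputes column-wise and therefore takes no axis argument. This assumes scikit-learn 0.20 or later; the variable name X_filled is illustrative.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Learn the per-column means from the training data, ignoring NaNs.
imp = SimpleImputer(missing_values=np.nan, strategy='mean')
imp.fit([[1, 2], [np.nan, 3], [7, 6]])

# Replace the NaNs in new data with the learned column means.
X = [[np.nan, 2], [6, np.nan], [7, 6]]
X_filled = imp.transform(X)

print(imp.statistics_)  # fill value learned for each column
print(X_filled)
```

The fill values come from the data passed to fit(), not from X: the first column's mean is (1 + 7) / 2 and the second column's is (2 + 3 + 6) / 3.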

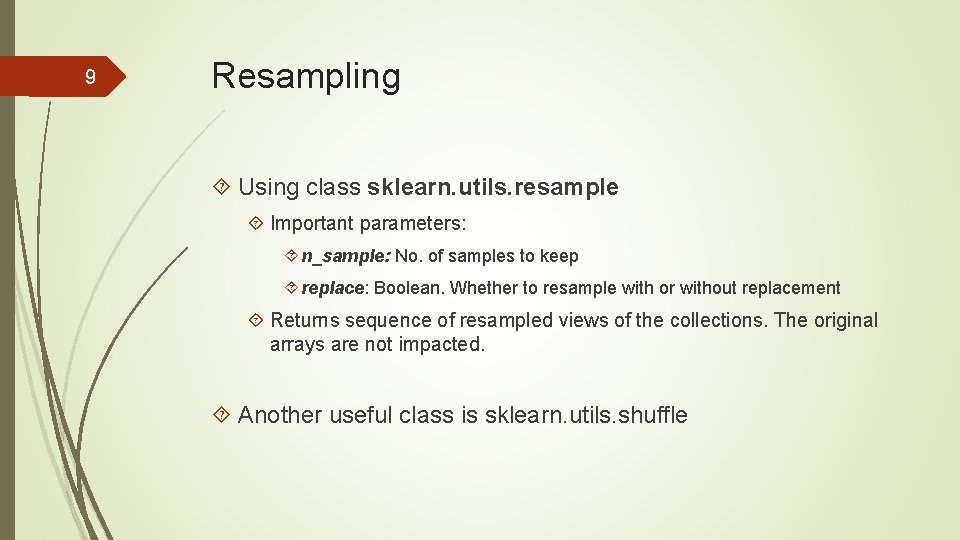

9 Resampling

Use the function sklearn.utils.resample. Important parameters:

- n_samples: number of samples to generate
- replace: Boolean; whether to resample with or without replacement

Returns a sequence of resampled copies of the collections. The original arrays are not modified. Another useful function is sklearn.utils.shuffle.
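A minimal sketch of both resampling modes and of shuffle follows. The toy arrays and the random_state values are assumptions made for reproducibility, not part of the slide.

```python
import numpy as np
from sklearn.utils import resample, shuffle

# Toy data (illustrative): row i of X is [2*i, 2*i + 1], and y[i] = i.
X = np.arange(20).reshape(10, 2)
y = np.arange(10)

# Bootstrap sample: same size as the input, drawn with replacement.
X_boot, y_boot = resample(X, y, replace=True, n_samples=10, random_state=0)

# Subsample: 5 distinct rows, drawn without replacement.
X_sub, y_sub = resample(X, y, replace=False, n_samples=5, random_state=0)

# Shuffle: all rows, in a random order.
X_shuf, y_shuf = shuffle(X, y, random_state=0)

print(y_boot, y_sub, y_shuf)
```

Passing X and y together keeps rows and labels aligned, since the same indices are applied to every array.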

10 Discretization

Scikit-learn did not have a direct discretization class when these slides were written (releases from 0.20 onward add sklearn.preprocessing.KBinsDiscretizer). Discretization can be performed with the cut and qcut functions available in pandas. Orange has discretization functions in Orange.feature.discretization.
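As a rough illustration of the pandas route: cut bins by value ranges (equal-width or user-specified edges), while qcut bins by quantiles so that each bin holds about the same number of points. The ages series, the bin edges, and the labels below are made up for the example.

```python
import pandas as pd

# Toy numeric attribute (illustrative values only).
ages = pd.Series([3, 17, 25, 41, 58, 64, 79, 90])

# Equal-width binning: 4 intervals of the same width over the data range.
equal_width = pd.cut(ages, bins=4)

# Equal-frequency binning: 4 quantile-based intervals.
equal_freq = pd.qcut(ages, q=4)

# Custom bin edges with readable labels: (0, 18], (18, 65], (65, 120].
labeled = pd.cut(ages, bins=[0, 18, 65, 120],
                 labels=['child', 'adult', 'senior'])

print(equal_width.value_counts().sort_index())
print(equal_freq.value_counts().sort_index())
print(labeled.tolist())
```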

11 Feature Selection

The sklearn.feature_selection module implements feature selection algorithms. Some classes in this module are:

- GenericUnivariateSelect: univariate feature selector based on statistical tests.
- SelectKBest: selects features according to the k highest scores.
- RFE: feature ranking with recursive feature elimination.
- VarianceThreshold: feature selector that removes all low-variance features.

Scikit-learn does not have a CFS (correlation-based feature selection) implementation, but RFE works in a somewhat similar fashion.
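The sketch below applies three of these selectors to the Iris dataset. The dataset, the ANOVA F-score function f_classif, the 0.2 variance threshold, and the logistic-regression estimator inside RFE are all assumptions picked for illustration; none of them comes from the slide.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import RFE, SelectKBest, VarianceThreshold, f_classif
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)  # 150 samples, 4 features

# Keep the 2 features with the highest ANOVA F-scores against the class label.
X_kbest = SelectKBest(score_func=f_classif, k=2).fit_transform(X, y)

# Drop features whose variance falls below the (arbitrary) threshold.
vt = VarianceThreshold(threshold=0.2)
X_vt = vt.fit_transform(X)

# Recursively eliminate the weakest feature until 2 remain.
rfe = RFE(estimator=LogisticRegression(max_iter=1000), n_features_to_select=2)
rfe.fit(X, y)

print(X_kbest.shape, X_vt.shape)
print(rfe.support_)   # Boolean mask of the selected features
print(rfe.ranking_)   # 1 marks a selected feature
```

SelectKBest and RFE use the class labels, whereas VarianceThreshold looks only at X and can therefore be used in unsupervised settings.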

12 Dimensionality Reduction: PCA

The sklearn.decomposition module includes matrix decomposition algorithms, including PCA. The class to use is sklearn.decomposition.PCA.

Important parameters:

- n_components: number of components to keep

Important attributes:

- components_: the principal axes, i.e. the directions of maximum variance in the data
- explained_variance_ratio_: fraction of the variance explained by each of the selected components

Important methods:

- fit(X[, y]): fit the model with X
- score_samples(X): return the log-likelihood of each sample
- transform(X): apply the dimensionality reduction to X
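A minimal sketch of the PCA workflow described above, assuming the Iris dataset as input and two retained components (both choices are illustrative):

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X, _ = load_iris(return_X_y=True)  # 150 samples, 4 features

# Fit PCA and project the data onto the first 2 principal components.
pca = PCA(n_components=2)
pca.fit(X)
X_reduced = pca.transform(X)

print(X_reduced.shape)                 # (n_samples, n_components)
print(pca.components_.shape)           # (n_components, n_features)
print(pca.explained_variance_ratio_)   # one fraction per kept component
```

The entries of explained_variance_ratio_ are fractions, so their sum indicates how much of the total variance the reduced representation retains.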

13 Other Useful Information

- Generate a random permutation of the numbers 0, …, n-1: numpy.random.permutation(n)
- You can randomly generate some toy datasets using the sample generators in sklearn.datasets.
- Scikit-learn doesn't directly handle categorical/nominal attributes well. To use them in a dataset, some sort of encoding needs to be performed.
- One good way to encode categorical attributes: if there are n categories, create n dummy binary variables, one for each category. This can be done easily using the sklearn.preprocessing.OneHotEncoder class.
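The permutation and one-hot encoding points can be sketched as follows. The color values are made up, and passing string categories directly to OneHotEncoder assumes scikit-learn 0.20 or later (older releases expected integer-coded input).

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# A random ordering of the integers 0..4.
perm = np.random.permutation(5)

# One categorical attribute with 3 distinct values (illustrative data).
colors = np.array([['red'], ['green'], ['blue'], ['green']])

# fit_transform returns a sparse matrix by default; toarray() makes it dense.
encoder = OneHotEncoder()
encoded = encoder.fit_transform(colors).toarray()

print(perm)
print(encoder.categories_)  # categories are sorted: blue, green, red
print(encoded)              # one binary column per category
```

Each row of the encoded matrix contains exactly one 1, in the column of that row's category.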

