1 A Biterm Topic Model for Short Texts

WWW '13: Proceedings of the 22nd International Conference on World Wide Web, pages 1445-1456. Xiaohui Yan, Jiafeng Guo, Yanyan Lan, Xueqi Cheng (Institute of Computing Technology, CAS, Beijing, China). 2014/10/30, M1 sakusa

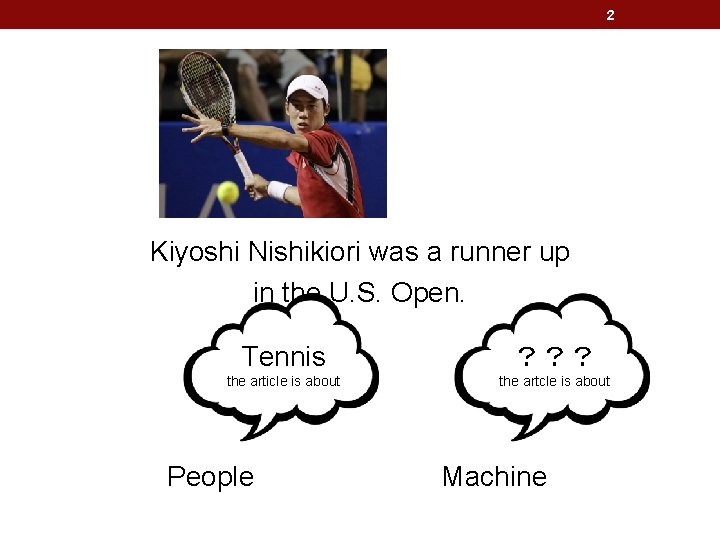

2 Kei Nishikori was a runner-up in the U.S. Open. People: "The article is about tennis." Machine: "The article is about ???"

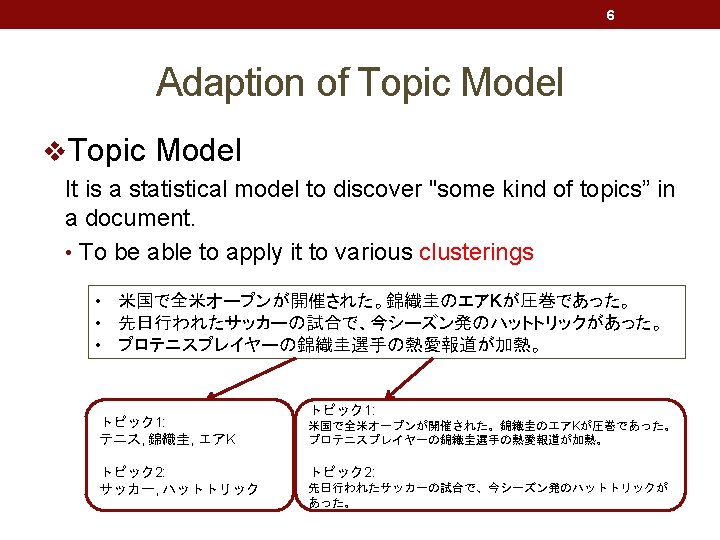

3 Topic Model • A topic model is a statistical model that discovers latent "topics" in documents. • Basic idea – Words with similar meanings are used in similar ways. – Words with similar meanings co-occur in similar contexts. – Just as people do, can a machine learn a word's meaning from its collocations, i.e., its context? Example: "Kei Nishikori was a runner-up in the U.S. Open." • To test this idea, a topic model uses a corpus and learns word meanings from co-occurrence units such as word-sentence or word-document.

4 Topic Model • Basically, a topic model captures the co-occurrence characteristics of words. • In brief, it is a clustering of words.

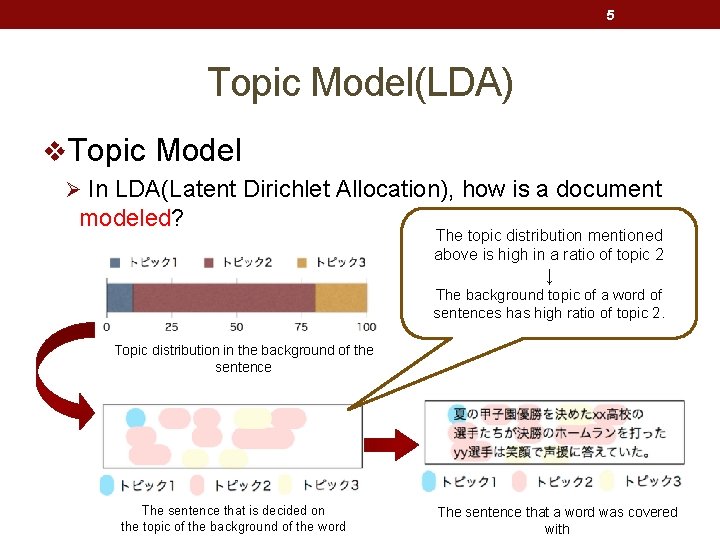
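As a rough illustration of the word co-occurrence statistic that topic models build on, the sketch below counts in how many documents each unordered word pair appears together. The toy documents and the function name `cooccurrence_counts` are hypothetical; a topic model does not store this table directly, but the word clusters it learns reflect such patterns.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(docs):
    """Count, for each unordered word pair, the number of documents containing both words."""
    counts = Counter()
    for doc in docs:
        # Use each distinct word once per document, sorted so that
        # ("open", "tennis") and ("tennis", "open") share one key.
        for pair in combinations(sorted(set(doc)), 2):
            counts[pair] += 1
    return counts


# Hypothetical toy corpus (already tokenized).
docs = [
    ["nishikori", "tennis", "open"],
    ["tennis", "open", "final"],
    ["apple", "store", "iphone"],
]
counts = cooccurrence_counts(docs)
print(counts[("open", "tennis")])   # 2
print(counts[("apple", "tennis")])  # 0
```

Words that keep appearing together ("open", "tennis") end up in the same cluster, while pairs that never co-occur give no evidence for grouping.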

5 Topic Model (LDA) • How does LDA (Latent Dirichlet Allocation) model a document? 1. We assume a "topic mixture rate" (topic distribution) behind each document; in the example, this distribution gives topic 2 a high ratio. 2. We assume one topic behind each word of the document; because topic 2 has a high ratio, many words are assigned topic 2. 3. Each word is generated according to the topic behind it, which yields the document's words. (Figure panels: topic distribution behind the document; document with a topic assigned to each word; document filled with words)

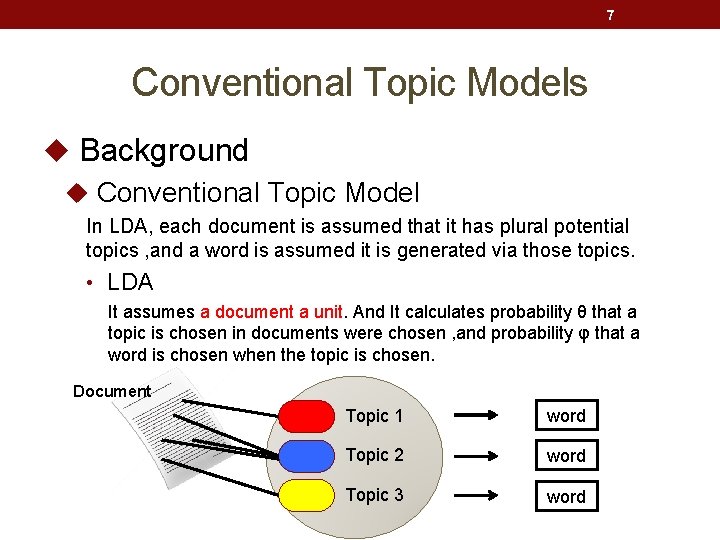

7 Conventional Topic Models • Background • Conventional topic model: LDA assumes that each document contains multiple latent topics and that each word is generated via one of those topics. • LDA treats the document as its unit. It estimates θ, the probability of choosing a topic given a document, and φ, the probability of choosing a word given a topic. (Figure: a document linked to Topic 1, Topic 2 and Topic 3, each generating words)

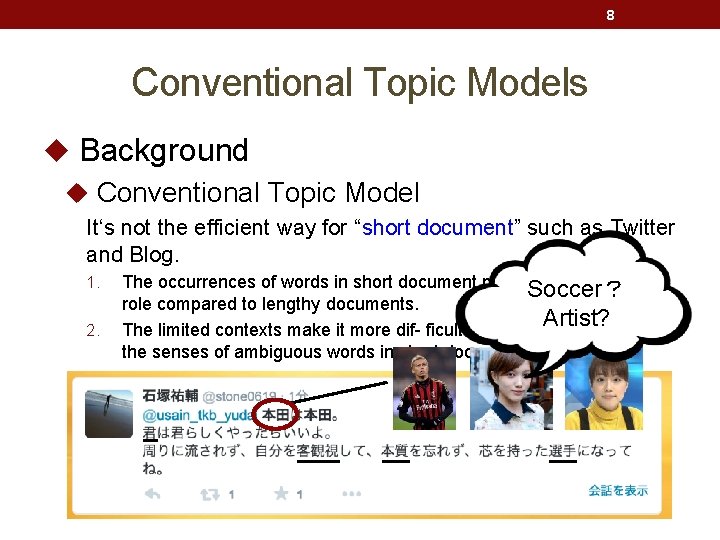
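The two quantities on slide 7 combine into the probability of a word within a document, P(w|d) = Σz θd,z φz,w. The sketch below evaluates this mixture for made-up values; the numbers, the topic interpretation and the name `word_prob_in_doc` are hypothetical.

```python
def word_prob_in_doc(theta_d, phi, word):
    """P(word | d) = sum over topics z of theta_d[z] * phi[z][word]."""
    return sum(theta_d[z] * phi[z][word] for z in range(len(theta_d)))


# Hypothetical values: document d is 70% topic 0 (sports-like), 30% topic 1 (tech-like).
theta_d = [0.7, 0.3]
phi = [
    {"tennis": 0.5, "open": 0.4, "apple": 0.1},  # P(w | z = 0)
    {"tennis": 0.1, "open": 0.2, "apple": 0.7},  # P(w | z = 1)
]
print(round(word_prob_in_doc(theta_d, phi, "tennis"), 2))  # 0.38 (= 0.7*0.5 + 0.3*0.1)
```

Because each row of φ and θd sums to 1, the resulting P(w|d) over the vocabulary also sums to 1.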

8 Conventional Topic Models • Background • Conventional topic models do not work well for short documents such as tweets and blog posts: 1. Word occurrences in short documents are less discriminative than in lengthy documents. 2. The limited context makes it more difficult for topic models to identify the senses of ambiguous words in short documents (illustration: is an ambiguous word about soccer, or about an artist?).

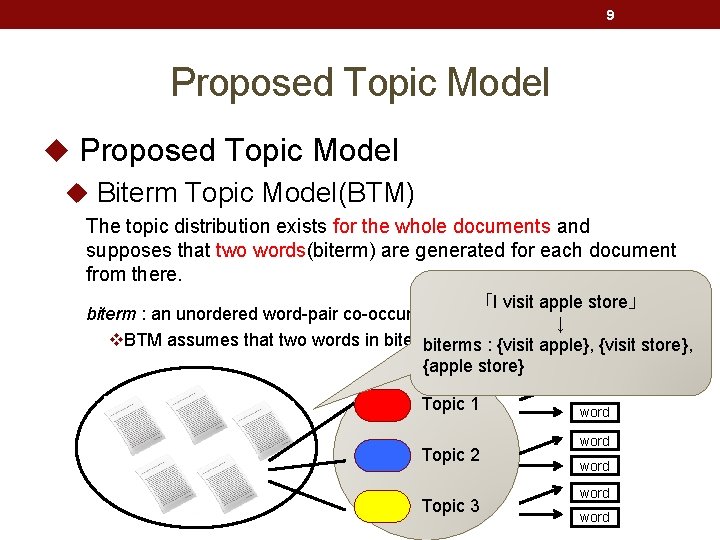

9 Proposed Topic Model • Biterm Topic Model (BTM): a single topic distribution is shared by the whole corpus, and the biterms (word pairs) extracted from each document are generated from it. • Biterm: an unordered word pair co-occurring in a short context. Example: "I visit apple store" → biterms: {visit apple}, {visit store}, {apple store} • BTM assumes that the two words in a biterm share the same topic. (Figure: Topic 1, Topic 2, Topic 3 and words)

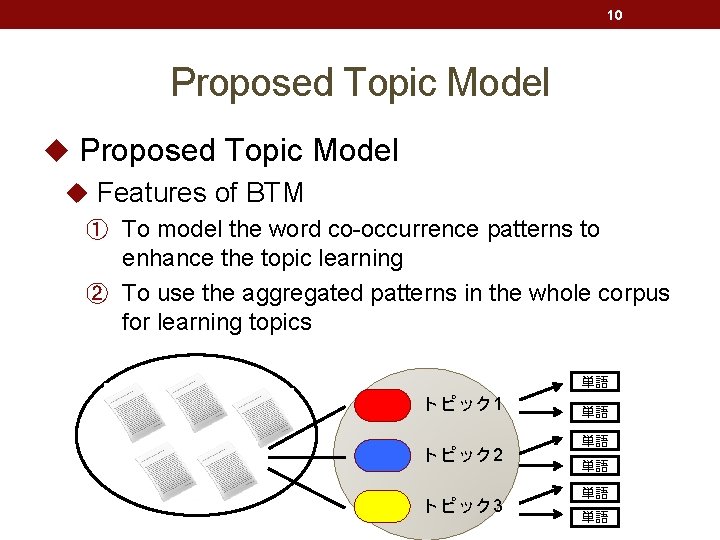
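The biterm example on slide 9 can be reproduced with a short sketch. It assumes "I" was removed as a stop word (which matches the three listed biterms) and that each biterm is stored as a sorted tuple so that word order does not matter. The `window` parameter is a hypothetical addition for longer texts: it keeps only pairs fewer than `window` positions apart.

```python
from itertools import combinations


def extract_biterms(tokens, window=None):
    """Return the biterms (unordered word pairs) of one short text.

    window=None: every pair of words in the text forms a biterm.
    window=r (hypothetical): only pairs fewer than r positions apart.
    """
    biterms = []
    for i, j in combinations(range(len(tokens)), 2):
        if window is not None and j - i >= window:
            continue  # too far apart to count as co-occurring
        # Sort the pair so that the biterm is unordered.
        biterms.append(tuple(sorted((tokens[i], tokens[j]))))
    return biterms


# "I visit apple store", assuming "I" was removed as a stop word.
print(extract_biterms(["visit", "apple", "store"]))
# [('apple', 'visit'), ('store', 'visit'), ('apple', 'store')]
```

A short text of n words yields n(n-1)/2 biterms, so even a 5-word tweet contributes 10 co-occurrence observations to the corpus-level statistics.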

10 Proposed Topic Model • Features of BTM ① It explicitly models word co-occurrence patterns to enhance topic learning. ② It uses the aggregated patterns in the whole corpus to learn topics. (Figure: words linked to Topic 1, Topic 2 and Topic 3)

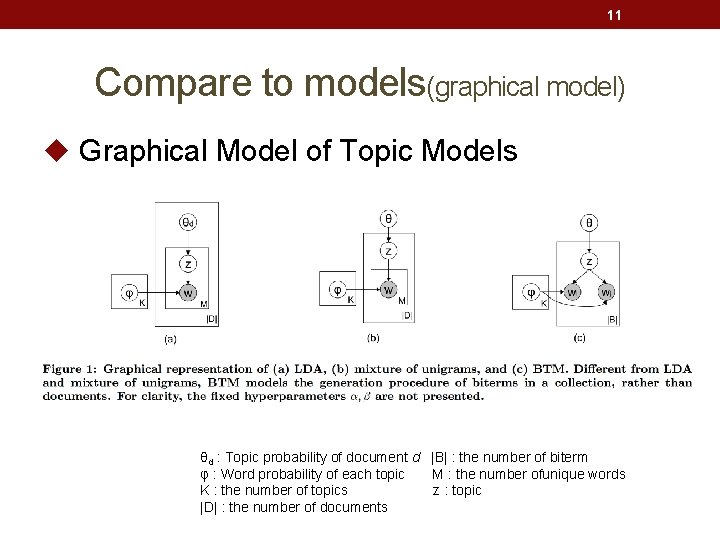

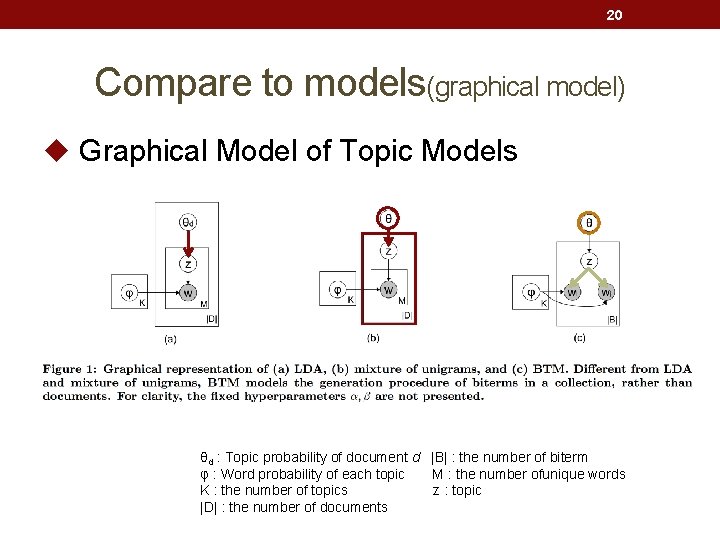

11 Comparison of Models (Graphical Models) • Graphical models of the topic models. θd: topic distribution of document d; φ: word distribution of each topic; z: topic; K: number of topics; M: number of unique words; |D|: number of documents; |B|: number of biterms

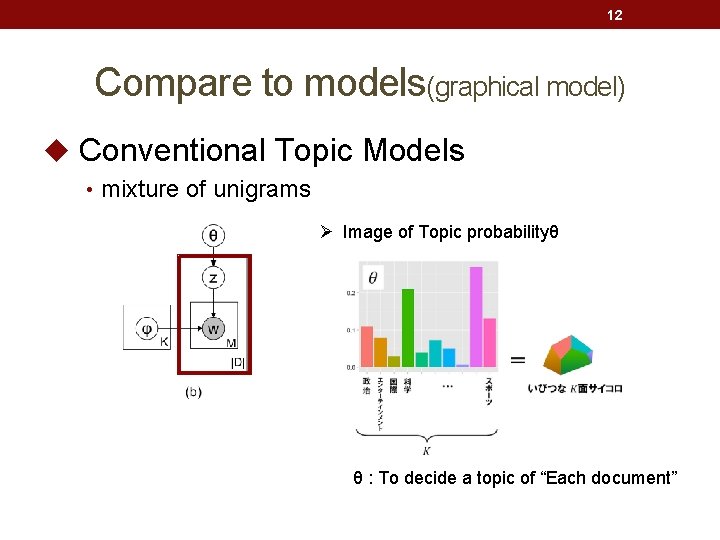

12 Comparison of Models (Graphical Models) • Conventional Topic Models • Mixture of unigrams • Illustration of the topic probability θ: θ decides the topic of each document.

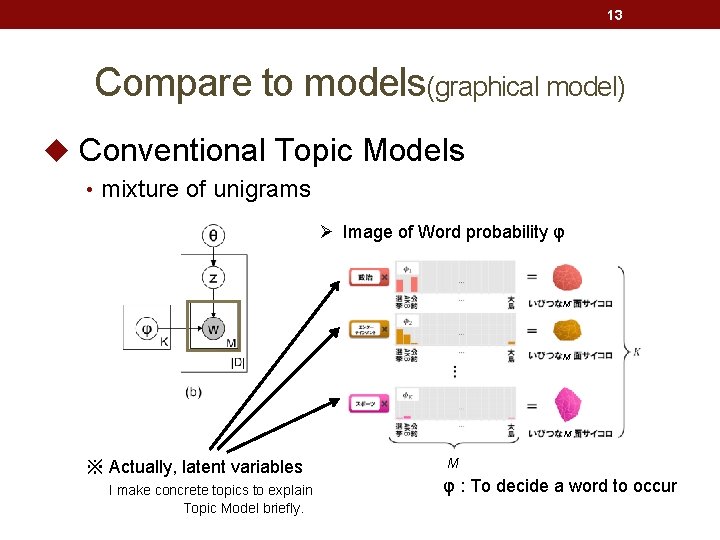

13 Comparison of Models (Graphical Models) • Conventional Topic Models • Mixture of unigrams • Illustration of the word probability φ: φ decides which word occurs (each topic has its own distribution over the M unique words). ※ Topics are actually latent variables; concrete topic labels are used here only to keep the explanation simple.

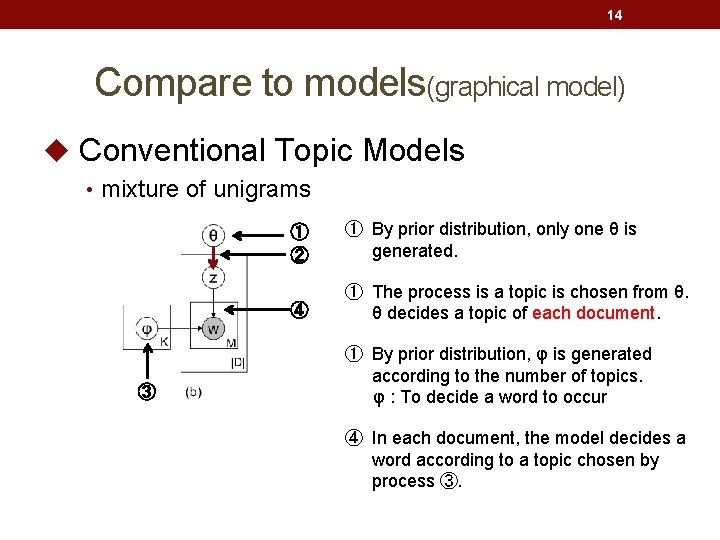

14 Comparison of Models (Graphical Models) • Conventional Topic Models • Mixture of unigrams ① From the prior distribution, a single θ is generated for the whole corpus. ② From the prior distribution, one φ is generated per topic (K in total); φ decides which word occurs. ③ For each document, a topic is chosen from θ, so θ decides the topic of each document. ④ In each document, every word is generated from φ of the topic chosen in step ③.

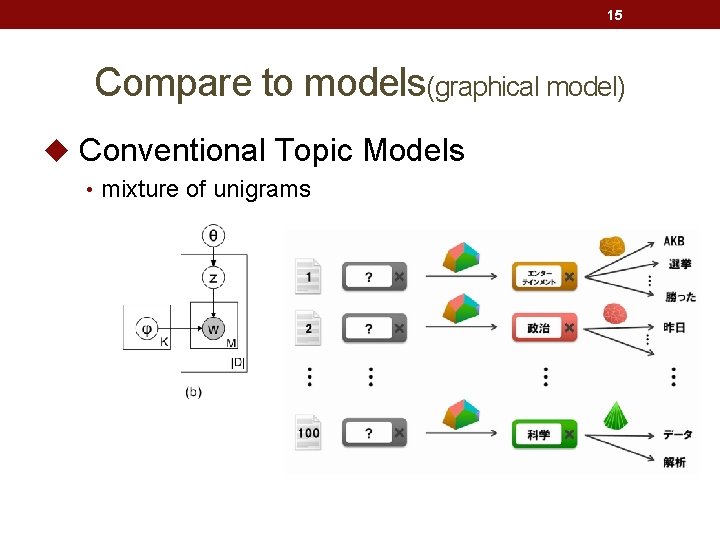
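Steps ① to ④ of the mixture of unigrams can be written as a small sampler. This is a sketch under stated assumptions: symmetric Dirichlet priors with hypothetical hyperparameters `alpha` and `beta`, a toy vocabulary, and hypothetical function names. The key point is that a single topic is drawn per document and all of its words come from that topic.

```python
import random


def sample_dirichlet(alpha, k, rng):
    """Draw from a symmetric Dirichlet(alpha) by normalizing Gamma(alpha, 1) draws."""
    g = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(g)
    return [x / total for x in g]


def generate_mixture_of_unigrams(num_docs, doc_len, vocab, k, alpha, beta, seed=0):
    rng = random.Random(seed)
    theta = sample_dirichlet(alpha, k, rng)                            # (1) one theta for the corpus
    phi = [sample_dirichlet(beta, len(vocab), rng) for _ in range(k)]  # (2) one phi per topic
    docs = []
    for _ in range(num_docs):
        z = rng.choices(range(k), weights=theta)[0]             # (3) ONE topic per document
        words = rng.choices(vocab, weights=phi[z], k=doc_len)   # (4) every word from phi_z
        docs.append((z, words))
    return docs


vocab = ["tennis", "open", "final", "apple", "store", "iphone"]
for z, words in generate_mixture_of_unigrams(3, 4, vocab, k=2, alpha=1.0, beta=0.5):
    print(z, words)
```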

15 Comparison of Models (Graphical Models) • Conventional Topic Models • Mixture of unigrams

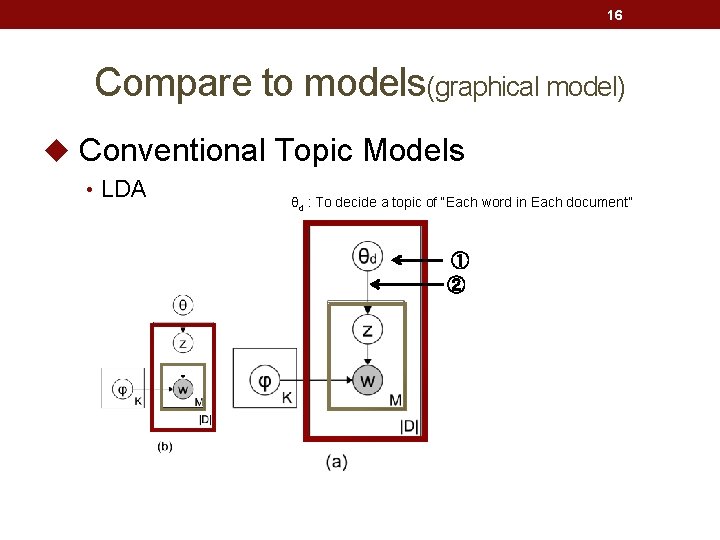

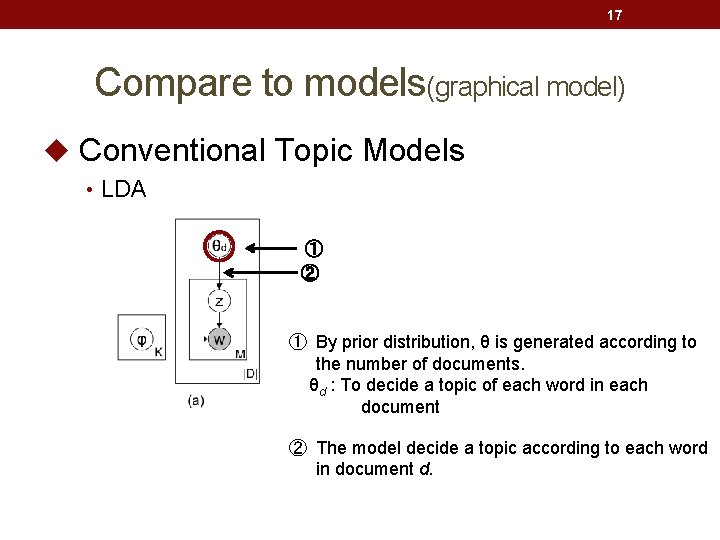

16 Comparison of Models (Graphical Models) • Conventional Topic Models • LDA • θd decides the topic of each word in each document.

17 Comparison of Models (Graphical Models) • Conventional Topic Models • LDA ① From the prior distribution, one θd is generated per document (|D| in total); θd decides the topic of each word in document d. ② For each word in document d, a topic is drawn from θd.

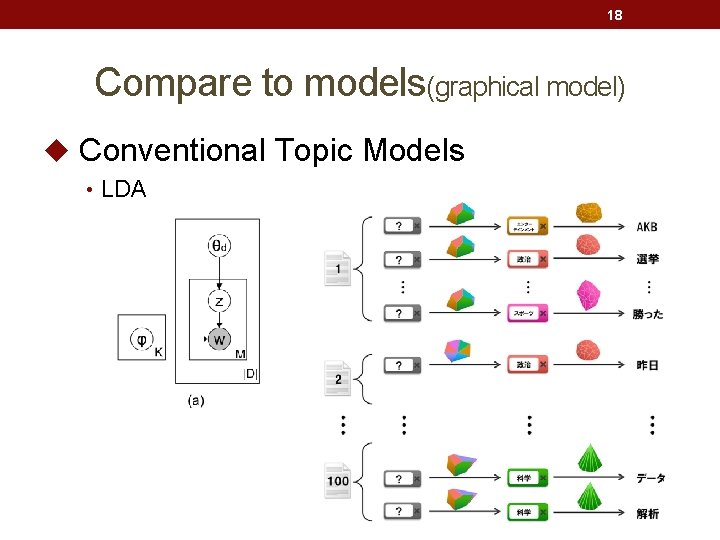
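For contrast with the mixture of unigrams, the LDA process on slide 17 draws a separate θd for every document and a topic for every word. The sketch below assumes symmetric Dirichlet priors with hypothetical values for `alpha` and `beta`; the function names are hypothetical as well.

```python
import random


def sample_dirichlet(alpha, k, rng):
    """Draw from a symmetric Dirichlet(alpha) by normalizing Gamma(alpha, 1) draws."""
    g = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(g)
    return [x / total for x in g]


def generate_lda(num_docs, doc_len, vocab, k, alpha, beta, seed=0):
    rng = random.Random(seed)
    phi = [sample_dirichlet(beta, len(vocab), rng) for _ in range(k)]  # one phi per topic
    docs = []
    for _ in range(num_docs):
        theta_d = sample_dirichlet(alpha, k, rng)  # (1) a separate theta_d per document
        doc = []
        for _ in range(doc_len):
            z = rng.choices(range(k), weights=theta_d)[0]  # (2) a topic for EACH word
            w = rng.choices(vocab, weights=phi[z])[0]      # the word is drawn from phi_z
            doc.append((z, w))
        docs.append(doc)
    return docs


vocab = ["tennis", "open", "final", "apple", "store", "iphone"]
for doc in generate_lda(2, 5, vocab, k=3, alpha=0.5, beta=0.5):
    print(doc)
```

With only a handful of words per document, each θd is estimated from very few draws, which is the sparsity problem slide 8 describes.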

18 Comparison of Models (Graphical Models) • Conventional Topic Models • LDA

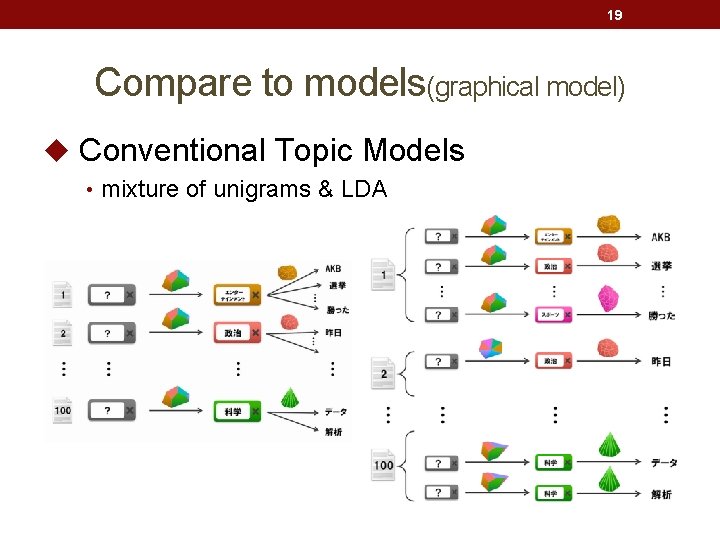

19 Comparison of Models (Graphical Models) • Conventional Topic Models • Mixture of unigrams & LDA

20 Comparison of Models (Graphical Models) • Graphical models of the topic models (same notation as slide 11)

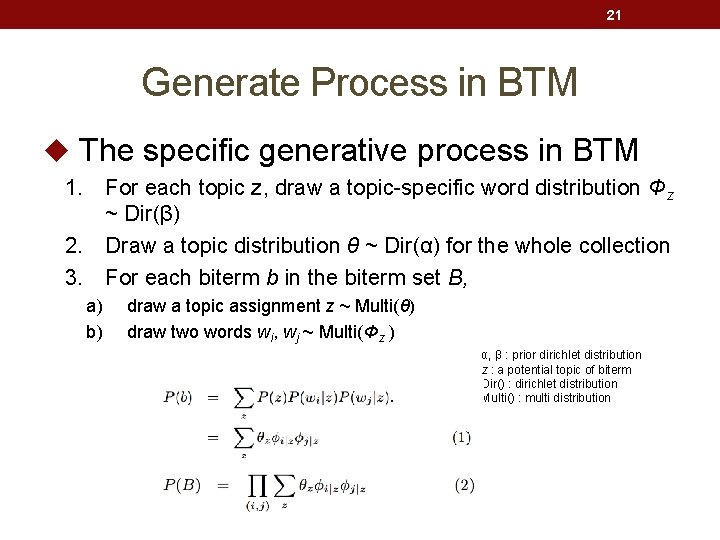

21 Generative Process in BTM • The generative process of BTM: 1. For each topic z, draw a topic-specific word distribution φz ~ Dir(β). 2. Draw a topic distribution θ ~ Dir(α) for the whole collection. 3. For each biterm b in the biterm set B: a) draw a topic assignment z ~ Multi(θ); b) draw two words wi, wj ~ Multi(φz). α, β: Dirichlet prior hyperparameters; z: latent topic of a biterm; Dir(): Dirichlet distribution; Multi(): multinomial distribution.

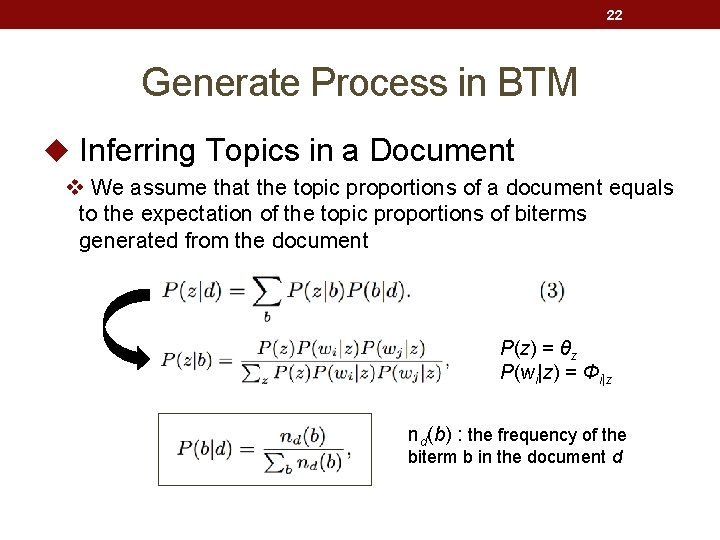
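Steps 1 to 3 of slide 21 translate almost line by line into a sampler. Compared with the LDA sketch, θ is drawn once for the whole collection and the unit being generated is a biterm, not a document. The hyperparameter values, toy vocabulary and function names are hypothetical.

```python
import random


def sample_dirichlet(alpha, k, rng):
    """Draw from a symmetric Dirichlet(alpha) by normalizing Gamma(alpha, 1) draws."""
    g = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(g)
    return [x / total for x in g]


def generate_btm(num_biterms, vocab, k, alpha, beta, seed=0):
    rng = random.Random(seed)
    # Step 1: phi_z ~ Dir(beta) for each topic z.
    phi = [sample_dirichlet(beta, len(vocab), rng) for _ in range(k)]
    # Step 2: ONE theta ~ Dir(alpha) for the whole collection.
    theta = sample_dirichlet(alpha, k, rng)
    biterms = []
    for _ in range(num_biterms):
        z = rng.choices(range(k), weights=theta)[0]       # step 3a: z ~ Multi(theta)
        wi, wj = rng.choices(vocab, weights=phi[z], k=2)  # step 3b: both words from phi_z
        biterms.append((z, (wi, wj)))
    return theta, phi, biterms


vocab = ["tennis", "open", "final", "apple", "store", "iphone"]
theta, phi, biterms = generate_btm(5, vocab, k=3, alpha=1.0, beta=0.5)
for z, pair in biterms:
    print(z, pair)
```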

22 Generative Process in BTM • Inferring topics in a document • We assume that the topic proportions of a document equal the expectation of the topic proportions of the biterms generated from that document: P(z|d) = Σb P(z|b) P(b|d), where P(z|b) = θz φi|z φj|z / Σz' θz' φi|z' φj|z' and P(b|d) = nd(b) / Σb nd(b). Here P(z) = θz, P(wi|z) = φi|z, and nd(b) is the frequency of biterm b in document d.

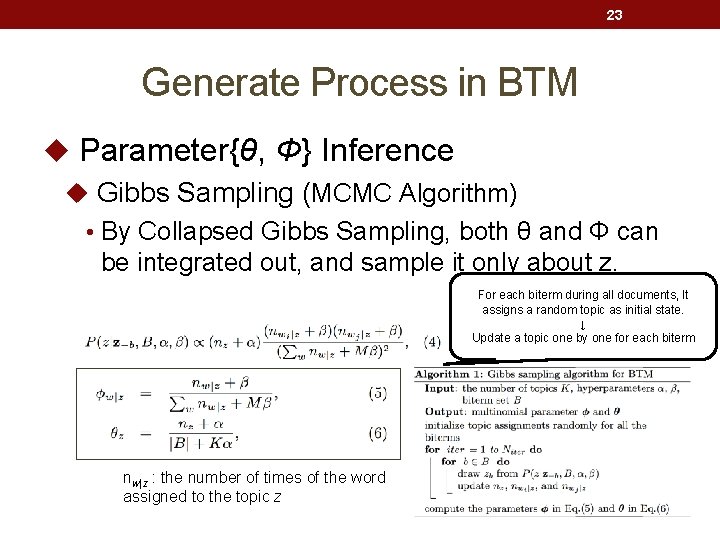
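Given trained θ and φ, the document-topic rule on slide 22 needs only the document's biterm counts. The sketch below assumes φ is smoothed (every vocabulary word has nonzero probability under every topic) and that the document yields at least one biterm; the name `infer_doc_topics` and the toy numbers are hypothetical.

```python
from collections import Counter


def infer_doc_topics(doc_biterms, theta, phi):
    """P(z|d) = sum_b P(z|b) * P(b|d) for one document.

    doc_biterms: list of (wi, wj) pairs extracted from document d.
    theta[z] = P(z); phi[z][w] = P(w|z), assumed strictly positive.
    """
    if not doc_biterms:
        raise ValueError("document has no biterms")
    counts = Counter(doc_biterms)  # n_d(b)
    total = sum(counts.values())
    k = len(theta)
    p_z_d = [0.0] * k
    for (wi, wj), n_db in counts.items():
        # P(z|b) is proportional to theta_z * phi_{wi|z} * phi_{wj|z}.
        scores = [theta[z] * phi[z][wi] * phi[z][wj] for z in range(k)]
        norm = sum(scores)
        p_b_d = n_db / total  # P(b|d) = n_d(b) / sum_b n_d(b)
        for z in range(k):
            p_z_d[z] += scores[z] / norm * p_b_d
    return p_z_d


theta = [0.5, 0.5]
phi = [
    {"tennis": 0.6, "open": 0.3, "apple": 0.1},
    {"tennis": 0.1, "open": 0.2, "apple": 0.7},
]
print([round(p, 3) for p in infer_doc_topics([("open", "tennis")], theta, phi)])  # [0.9, 0.1]
```

Here P(b|d) is the simple empirical frequency used on the slide; the conclusion (slide 29) lists a more sophisticated estimate of P(b|d) as future work.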

23 Generative Process in BTM • Inference of the parameters {θ, φ} • Gibbs sampling (an MCMC algorithm) • With collapsed Gibbs sampling, θ and φ are integrated out, so only the topic assignments z need to be sampled. First, every biterm in the corpus is assigned a random topic as the initial state; then the topic of each biterm is updated one at a time. nw|z: the number of times word w is assigned to topic z.

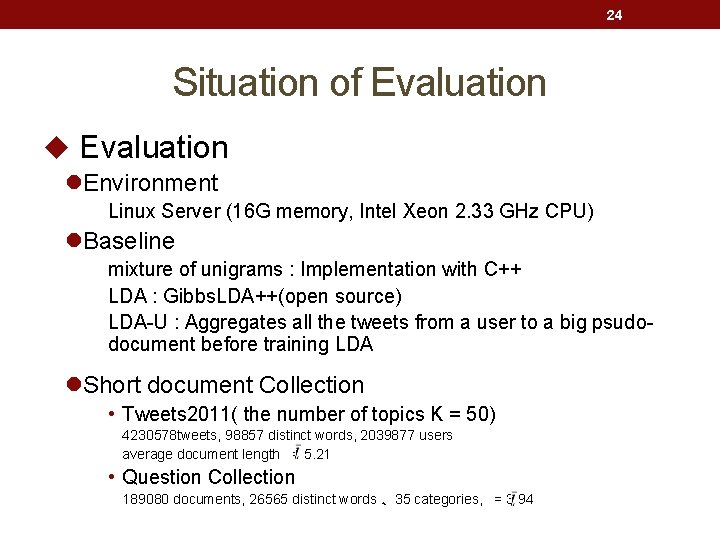
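A minimal collapsed Gibbs sampler for BTM is sketched below. It uses the update rule we understand the BTM paper to give, P(z | z¬b, B) ∝ (nz + α)(nwi|z + β)(nwj|z + β) / (Σw nw|z + Mβ)², where Σw nw|z = 2nz because each biterm contributes two words, and the point estimates φw|z = (nw|z + β) / (2nz + Mβ) and θz = (nz + α) / (|B| + Kα). Treat it as an illustration under those assumptions, not the authors' implementation; the function name, toy corpus and hyperparameter values are hypothetical.

```python
import random


def btm_gibbs(biterms, vocab_size, k, alpha, beta, iters=100, seed=0):
    """Collapsed Gibbs sampling for BTM.

    biterms: list of (wi, wj) word-id pairs pooled from ALL documents.
    Returns (theta, phi, z) where z[b] is the sampled topic of biterm b.
    """
    rng = random.Random(seed)
    n_z = [0] * k                                # n_z: biterms assigned to topic z
    n_wz = [[0] * vocab_size for _ in range(k)]  # n_w|z: times word w is assigned to z
    z_of = []

    # Initial state: a random topic for every biterm.
    for wi, wj in biterms:
        t = rng.randrange(k)
        z_of.append(t)
        n_z[t] += 1
        n_wz[t][wi] += 1
        n_wz[t][wj] += 1

    for _ in range(iters):
        for b, (wi, wj) in enumerate(biterms):
            # Remove biterm b from the counts.
            old = z_of[b]
            n_z[old] -= 1
            n_wz[old][wi] -= 1
            n_wz[old][wj] -= 1
            # Unnormalized conditional for each topic t.
            weights = []
            for t in range(k):
                denom = 2 * n_z[t] + vocab_size * beta
                weights.append((n_z[t] + alpha) * (n_wz[t][wi] + beta)
                               * (n_wz[t][wj] + beta) / denom ** 2)
            # Resample the topic of biterm b and add it back.
            new = rng.choices(range(k), weights=weights)[0]
            z_of[b] = new
            n_z[new] += 1
            n_wz[new][wi] += 1
            n_wz[new][wj] += 1

    theta = [(n_z[t] + alpha) / (len(biterms) + k * alpha) for t in range(k)]
    phi = [[(n_wz[t][w] + beta) / (2 * n_z[t] + vocab_size * beta)
            for w in range(vocab_size)] for t in range(k)]
    return theta, phi, z_of


vocab = ["tennis", "open", "final", "apple", "store", "iphone"]
docs = [[0, 1, 2], [0, 1], [1, 2], [3, 4, 5], [3, 4], [4, 5]]  # word ids
biterms = [(d[i], d[j]) for d in docs
           for i in range(len(d)) for j in range(i + 1, len(d))]
theta, phi, z = btm_gibbs(biterms, len(vocab), k=2, alpha=1.0, beta=0.01, iters=200)
for t in range(2):
    top = sorted(range(len(vocab)), key=lambda w: -phi[t][w])[:3]
    print(t, round(theta[t], 2), [vocab[w] for w in top])
```

Because the biterms of all documents are pooled, the counts nz and nw|z aggregate co-occurrence evidence over the whole corpus instead of inside each short document.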

24 Experimental Setup • Evaluation • Environment: Linux server (16 GB memory, Intel Xeon 2.33 GHz CPU) • Baselines – Mixture of unigrams: implemented in C++ – LDA: GibbsLDA++ (open source) – LDA-U: aggregates all tweets of a user into one large pseudo-document before training LDA • Short-document collections – Tweets2011 (number of topics K = 50): 4,230,578 tweets, 98,857 distinct words, 2,039,877 users, average document length = 5.21 – Question collection: 189,080 documents, 26,565 distinct words, 35 categories, average document length = 3.94

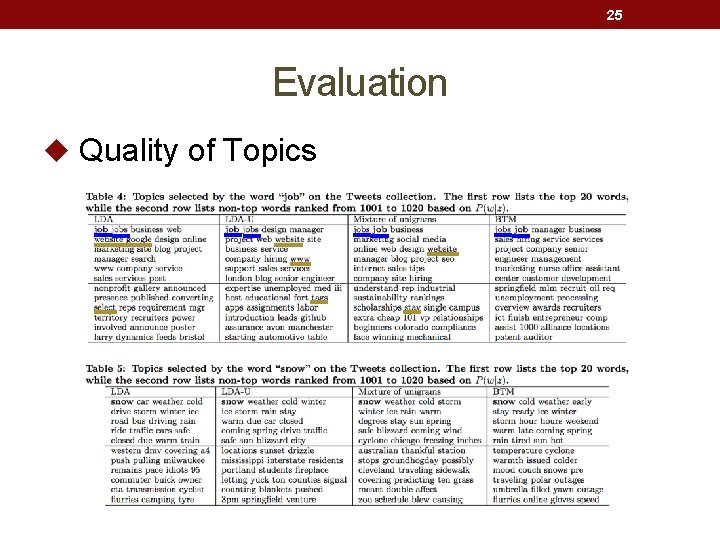

25 Evaluation • Quality of Topics

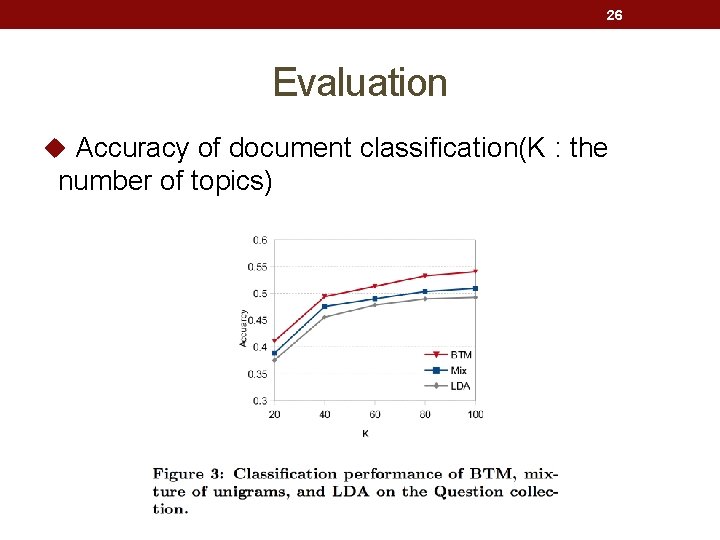

26 Evaluation • Accuracy of document classification (K: number of topics)

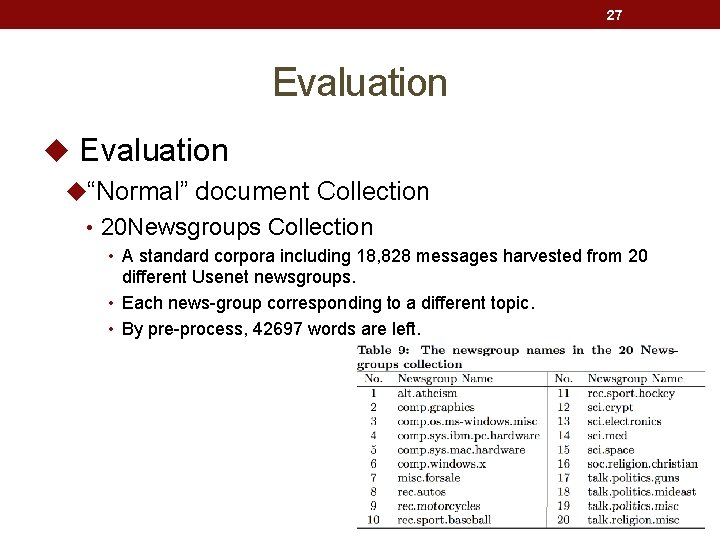

27 Evaluation • "Normal" document collection • 20 Newsgroups collection • A standard corpus of 18,828 messages harvested from 20 different Usenet newsgroups. • Each newsgroup corresponds to a different topic. • After preprocessing, 42,697 words remain.

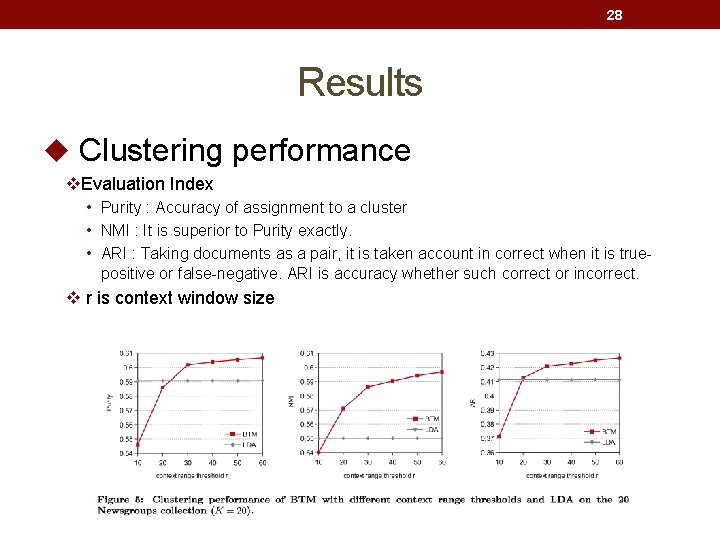

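28 Results • Clustering performance • Evaluation indices – Purity: the accuracy of assigning documents to clusters (the fraction of documents that belong to the majority class of their cluster). – NMI (normalized mutual information): stricter than purity, which can be inflated simply by using many clusters. – ARI (adjusted Rand index): documents are considered in pairs; a pair is judged correct if it is a true positive (same class, same cluster) or a true negative (different classes, different clusters). The Rand index is the fraction of correct pairs, and ARI adjusts it for chance. • r: context window size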
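The three indices on slide 28 can be computed from cluster assignments and gold labels alone. The sketch uses common textbook definitions; in particular, NMI is normalized by the arithmetic mean of the two entropies, which is an assumption since other normalizations exist and the slide does not say which one was used. Function names and example labels are hypothetical.

```python
import math
from collections import Counter


def purity(clusters, labels):
    """Fraction of documents that belong to the majority class of their cluster."""
    per_cluster = {}
    for c, y in zip(clusters, labels):
        per_cluster.setdefault(c, Counter())[y] += 1
    majority = sum(cnt.most_common(1)[0][1] for cnt in per_cluster.values())
    return majority / len(labels)


def nmi(clusters, labels):
    """I(C; Y) / ((H(C) + H(Y)) / 2)."""
    n = len(labels)
    n_c, n_y = Counter(clusters), Counter(labels)
    joint = Counter(zip(clusters, labels))
    mi = sum(nij / n * math.log(n * nij / (n_c[c] * n_y[y]))
             for (c, y), nij in joint.items())

    def entropy(cnt):
        return -sum(v / n * math.log(v / n) for v in cnt.values())

    denom = (entropy(n_c) + entropy(n_y)) / 2
    return mi / denom if denom > 0 else 1.0  # both partitions are a single group


def _same_pairs(counter):
    """Number of item pairs that fall in the same group, given group sizes."""
    return sum(math.comb(v, 2) for v in counter.values())


def ari(clusters, labels):
    """Rand index over document pairs, adjusted for chance (needs >= 2 documents)."""
    n = len(labels)
    index = _same_pairs(Counter(zip(clusters, labels)))
    a = _same_pairs(Counter(clusters))
    b = _same_pairs(Counter(labels))
    expected = a * b / math.comb(n, 2)
    max_index = (a + b) / 2
    if max_index == expected:
        return 1.0  # only when both partitions are identical trivial partitions
    return (index - expected) / (max_index - expected)


clusters = [0, 0, 0, 1, 1, 1]
labels = ["a", "a", "b", "b", "b", "b"]
print(round(purity(clusters, labels), 3))  # 0.833
print(round(nmi(clusters, labels), 3), round(ari(clusters, labels), 3))
```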

29 Conclusion & Future work • In this paper, we propose a novel probabilistic topic model for short texts, namely the biterm topic model (BTM). • BTM captures the topics within short texts well because it explicitly models word co-occurrence patterns and uses the aggregated patterns in the whole corpus. • The results demonstrate that BTM not only learns higher-quality topics but also captures the topics of documents more accurately than previous methods. • In the future, we would like to find a more sophisticated way to estimate the distribution P(b|d). • Moreover, it is also interesting to explore the usage of our model in various real-world applications.
