048866 Packet Switch Architectures: Output-Queued Switches, Deterministic Queueing


048866: Packet Switch Architectures
- Output-Queued Switches
- Deterministic Queueing Analysis
- Fairness and Delay Guarantees

Dr. Isaac Keslassy, Electrical Engineering, Technion, Spring 2005
isaac@ee.technion.ac.il, 044114, http://comnet.technion.ac.il/~isaac/

Outline (Spring 2006, 048866 – Packet Switch Architectures)
1. Output Queued Switches
2. Terminology: Queues and Arrival Processes
3. Deterministic Queueing Analysis
4. Output Link Scheduling

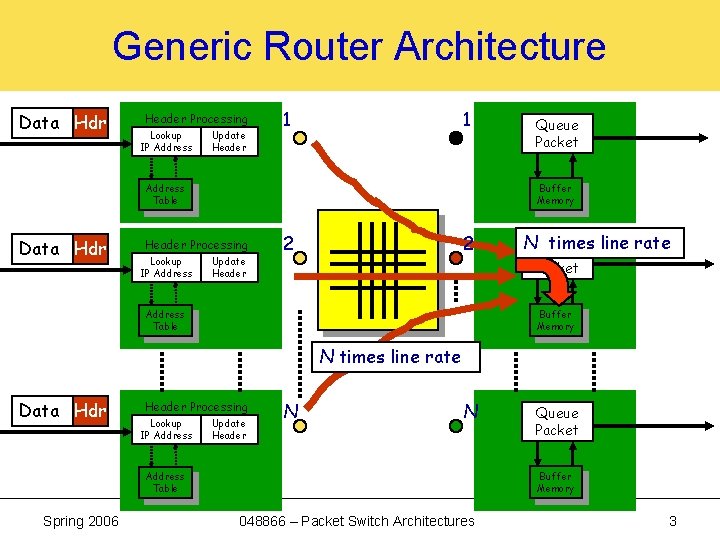

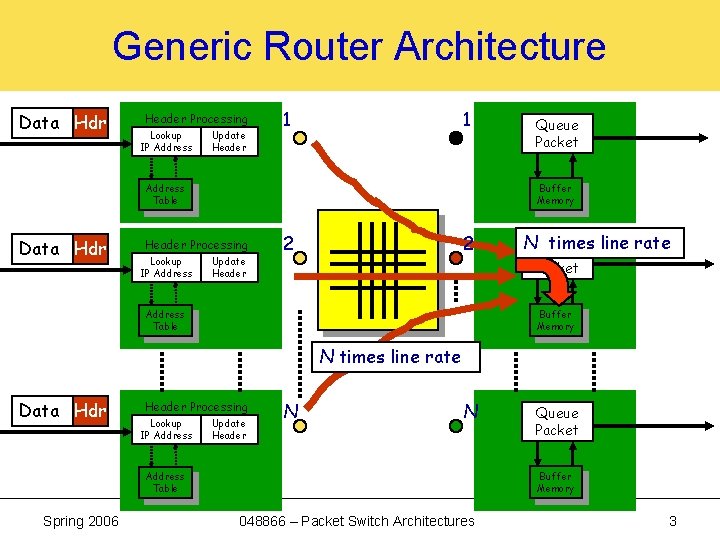

Generic Router Architecture
[Figure: N linecards. On each, the header (Hdr) of an arriving packet is processed: IP address lookup in an address table, then header update. Packets are then queued in buffer memory, which must run at N times the line rate.]

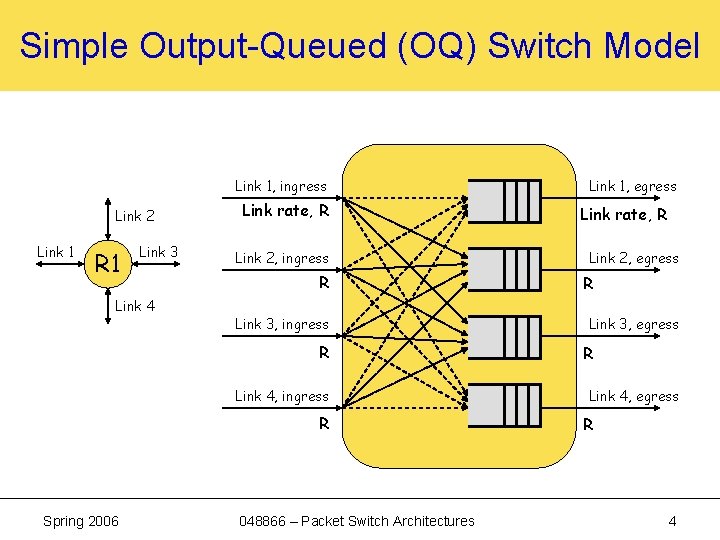

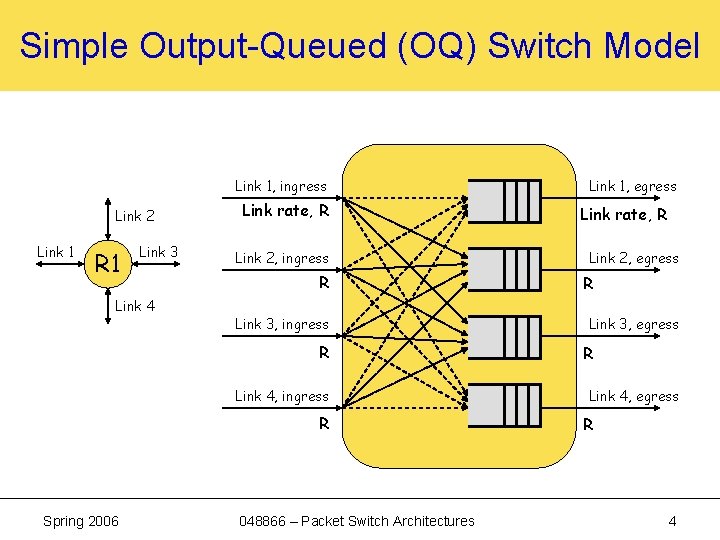

Simple Output-Queued (OQ) Switch Model
[Figure: four links (Link 1 to Link 4), each with an ingress side and an egress side operating at link rate R.]

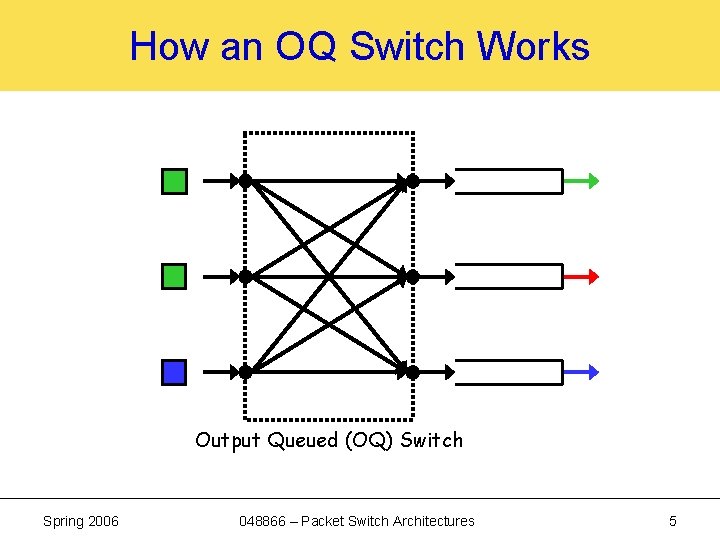

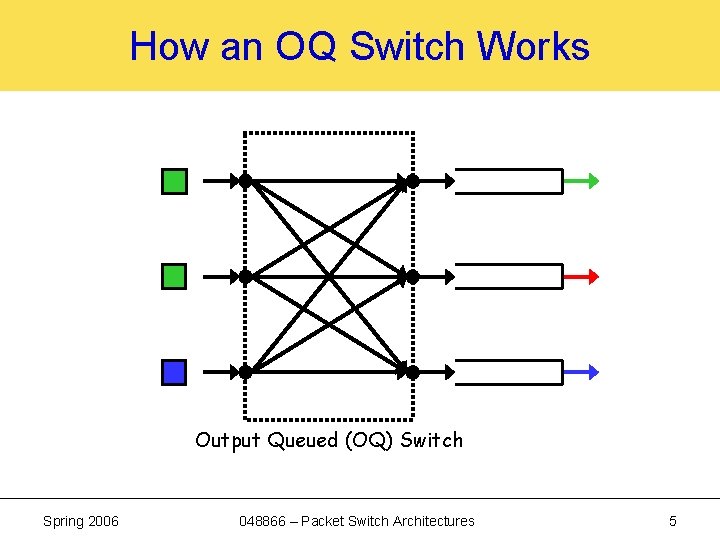

How an OQ Switch Works
[Figure: Output Queued (OQ) Switch.]

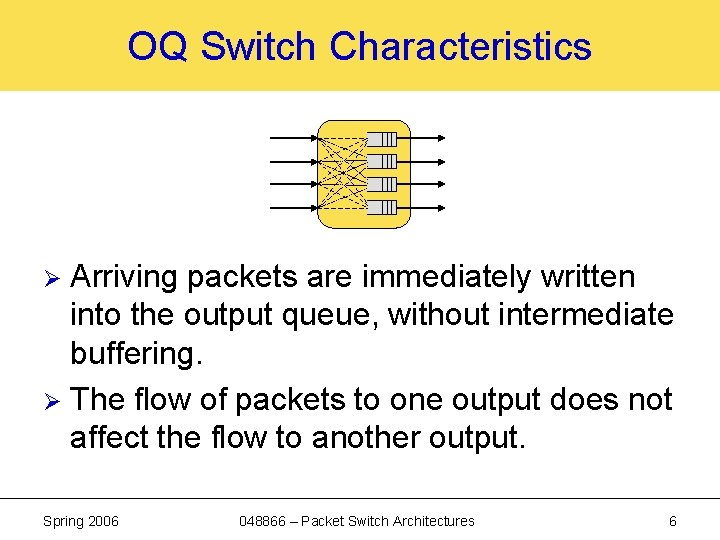

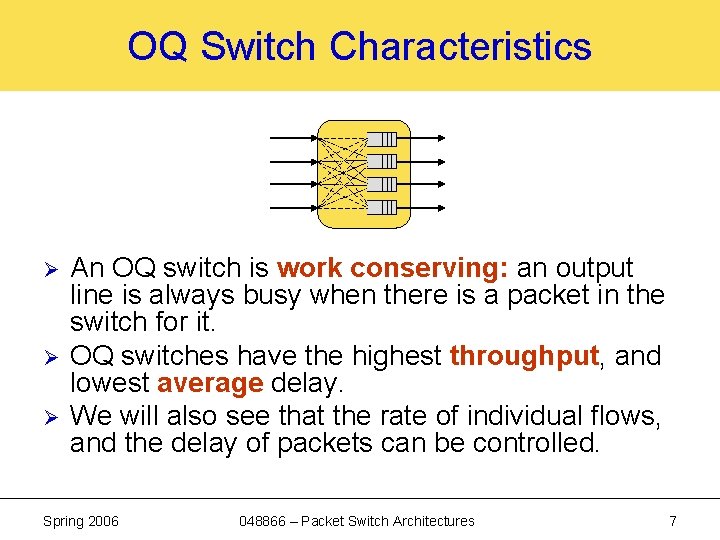

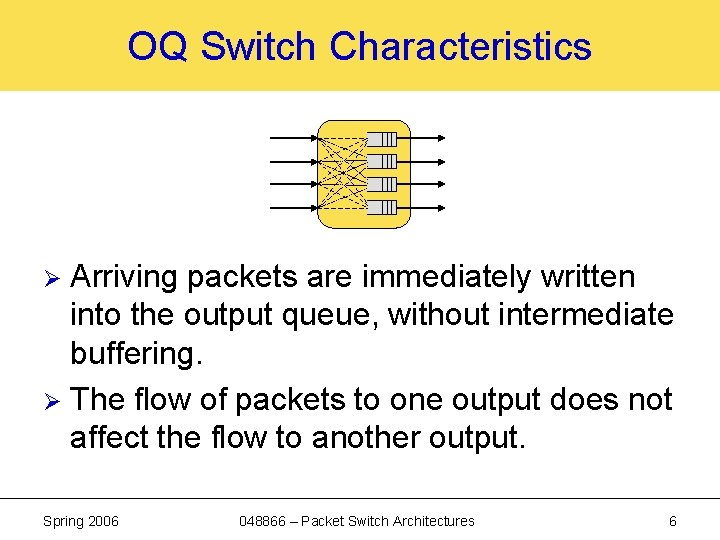

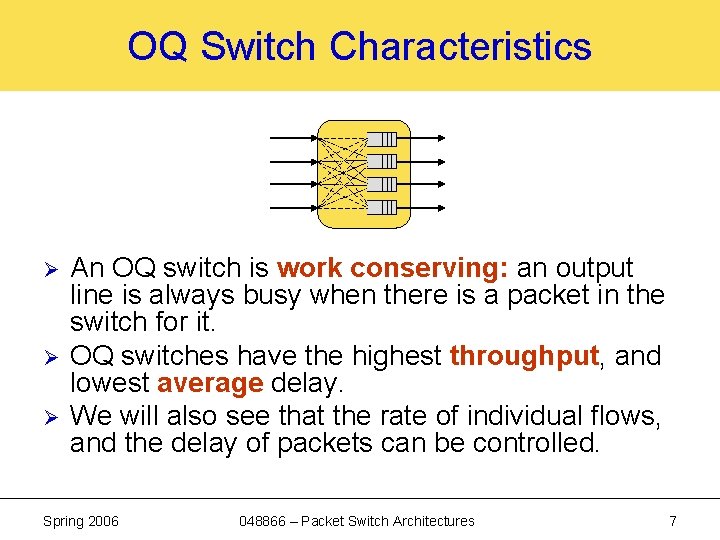

OQ Switch Characteristics
- Arriving packets are immediately written into the output queue, without intermediate buffering.
- The flow of packets to one output does not affect the flow to another output.

OQ Switch Characteristics
- An OQ switch is work-conserving: an output line is always busy when there is a packet in the switch for it.
- OQ switches have the highest throughput and the lowest average delay.
- We will also see that the rate of individual flows and the delay of packets can be controlled.

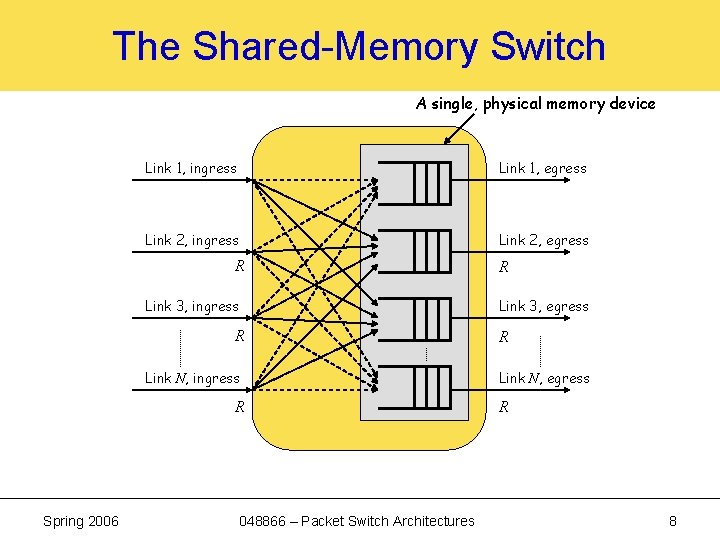

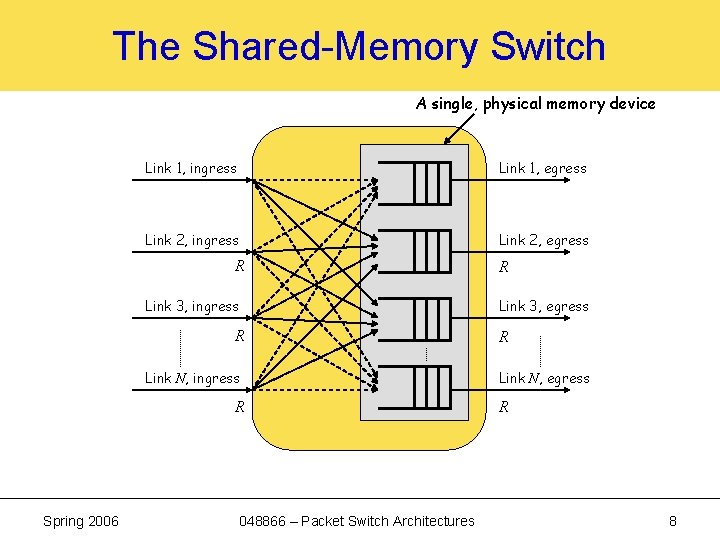

The Shared-Memory Switch
[Figure: a single, physical memory device shared by Links 1 to N; the ingress and egress side of each link runs at rate R.]

OQ vs. Shared-Memory
- Memory Bandwidth
- Buffer Size

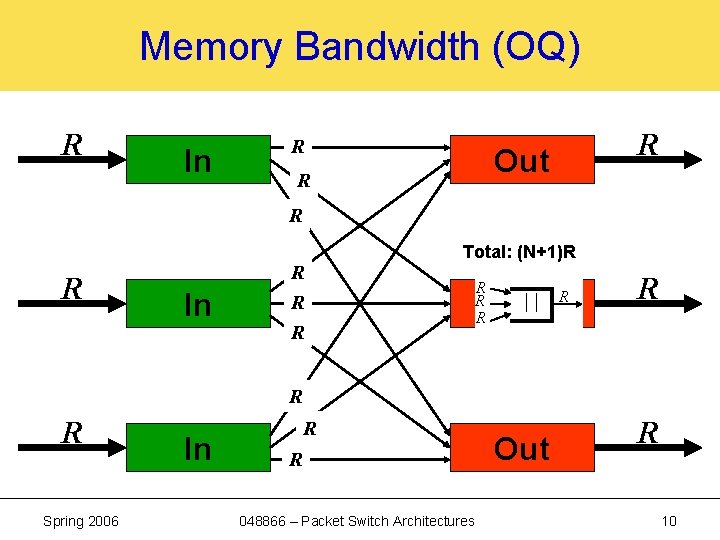

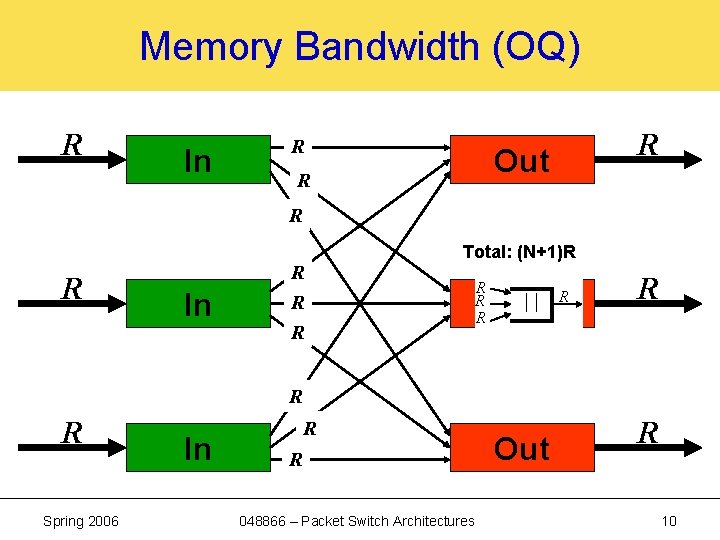

Memory Bandwidth (OQ)
[Figure: N inputs at rate R all writing into the same output queue, which is read by its output at rate R. Total: (N+1)R.]

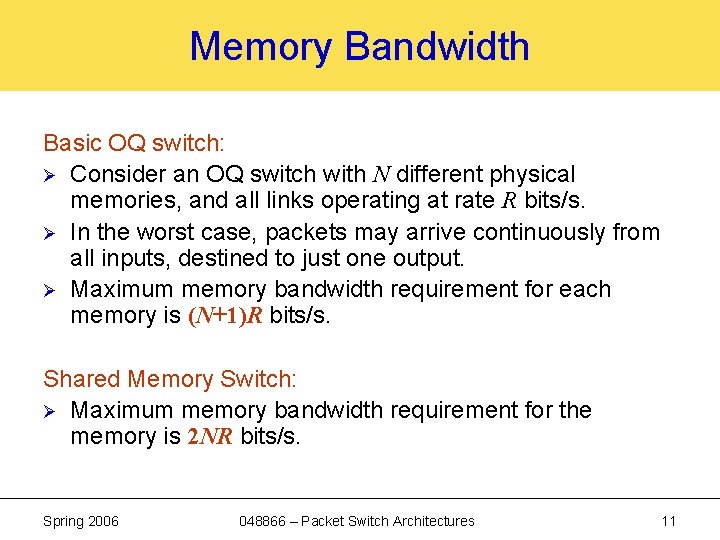

Memory Bandwidth
Basic OQ switch:
- Consider an OQ switch with N different physical memories, and all links operating at rate R bits/s.
- In the worst case, packets may arrive continuously from all inputs, destined to just one output.
- Maximum memory bandwidth requirement for each memory is therefore (N+1)R bits/s: N writes plus one read.

Shared-memory switch:
- Maximum memory bandwidth requirement for the memory is 2NR bits/s: N writes plus N reads.
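A small arithmetic sketch of the two worst-case formulas above. The port count and line rate in the example (32 ports at 10 Gb/s) are hypothetical values chosen only for illustration.

```python
def oq_memory_bandwidth(n_ports: int, line_rate: float) -> float:
    """Worst-case bandwidth of ONE output memory in an OQ switch.

    All N inputs may write to the same output memory at once, while the
    output link reads from it: N writes + 1 read.
    """
    return (n_ports + 1) * line_rate


def shared_memory_bandwidth(n_ports: int, line_rate: float) -> float:
    """Worst-case bandwidth of the single memory in a shared-memory switch.

    Every input writes at rate R and every output reads at rate R.
    """
    return 2 * n_ports * line_rate


N, R = 32, 10e9  # hypothetical: 32 ports at 10 Gb/s
print(f"OQ, per memory: {oq_memory_bandwidth(N, R) / 1e9:.0f} Gb/s")    # 330 Gb/s
print(f"Shared memory:  {shared_memory_bandwidth(N, R) / 1e9:.0f} Gb/s")  # 640 Gb/s
```

Each OQ memory needs roughly half the bandwidth of the shared memory, but the OQ switch needs N such memories.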


Buffer Size
- In an OQ switch, let $Q_i(t)$ be the length of the queue for output i at time t.
- Let M be the total buffer size in the shared-memory switch.
- Is a shared-memory switch more buffer-efficient than an OQ switch?

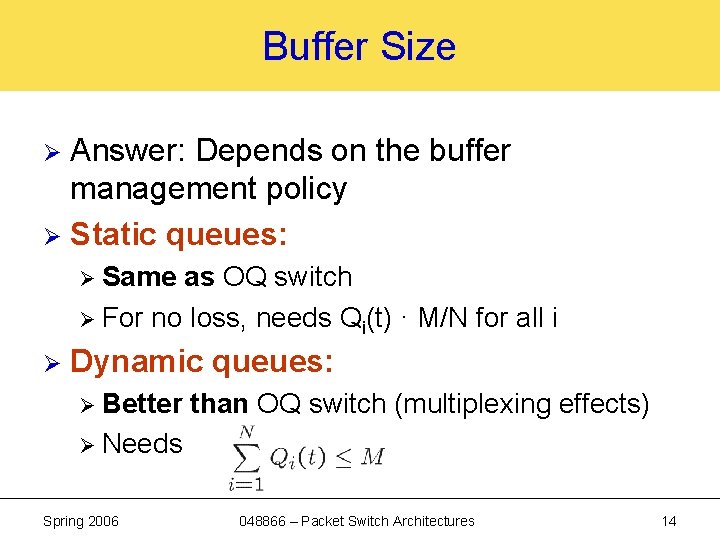

Buffer Size
Answer: it depends on the buffer management policy.
- Static queues:
  - Same as an OQ switch.
  - For no loss, needs $Q_i(t) \le M/N$ for all $i$.
- Dynamic queues:
  - Better than an OQ switch (multiplexing effects).
  - For no loss, needs $\sum_i Q_i(t) \le M$.
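A minimal sketch contrasting the two no-loss conditions, assuming queue lengths and M are measured in the same unit (e.g. packets) and taking the dynamic-sharing condition to be that the total occupancy fits in M. The function names and the snapshot are hypothetical.

```python
from typing import Sequence


def static_no_loss(queues: Sequence[float], total_buffer: float) -> bool:
    """Static partitioning: each of the N outputs owns exactly M/N of the memory."""
    share = total_buffer / len(queues)
    return all(q <= share for q in queues)


def dynamic_no_loss(queues: Sequence[float], total_buffer: float) -> bool:
    """Dynamic sharing: any output may use any free location, so only the total matters."""
    return sum(queues) <= total_buffer


# Hypothetical snapshot: 4 outputs, M = 100 packets, one output is congested.
snapshot = [70, 10, 5, 0]
print(static_no_loss(snapshot, 100))   # False: 70 > 100/4
print(dynamic_no_loss(snapshot, 100))  # True: 85 <= 100
```

The same memory that loses packets under static partitioning absorbs the burst under dynamic sharing, which is the multiplexing gain mentioned above.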

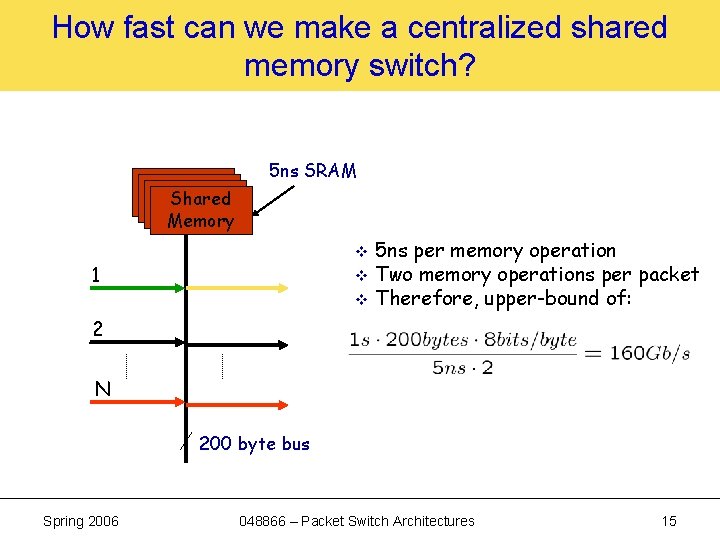

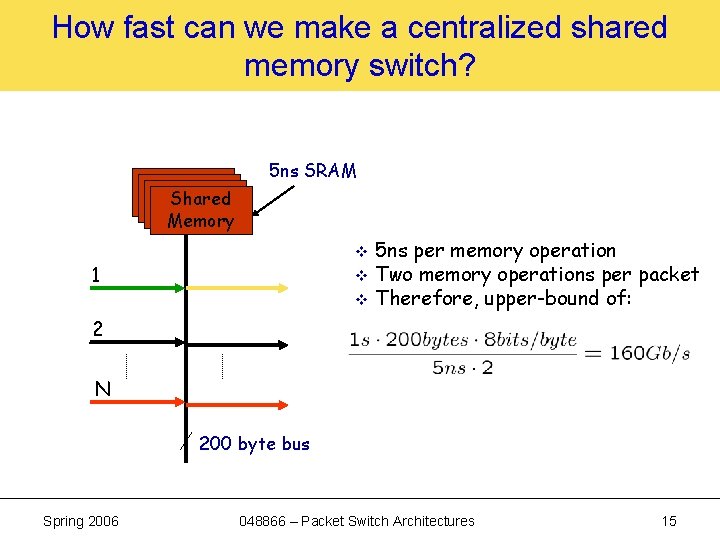

How fast can we make a centralized shared-memory switch?
[Figure: ports 1, 2, …, N connected through a 200-byte bus to a shared memory built from 5 ns SRAM.]
- 5 ns per memory operation.
- Two memory operations per packet (one write, one read).
- Therefore, an upper bound of $\frac{200 \times 8\ \text{bits}}{2 \times 5\ \text{ns}} = 160$ Gb/s.

Outline: 2. Terminology: Queues and Arrival Processes

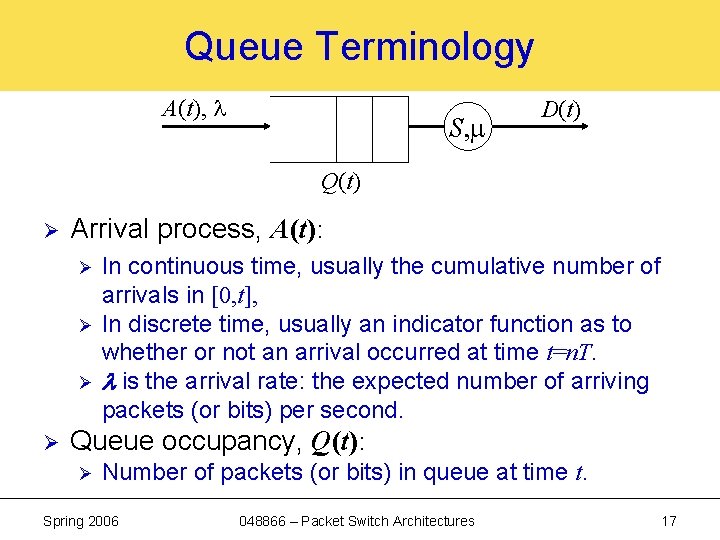

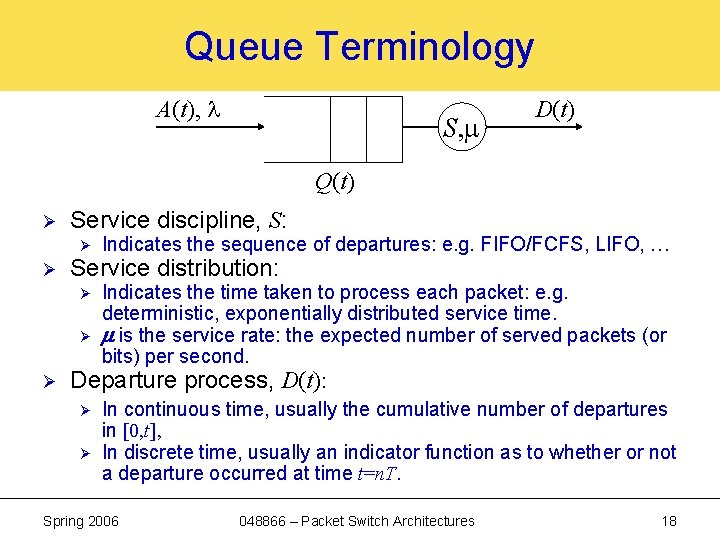

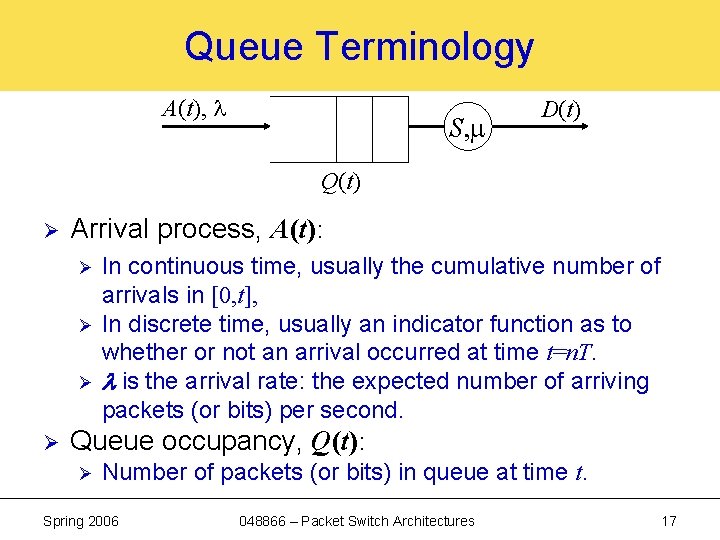

Queue Terminology
[Figure: arrivals A(t) at rate λ enter a queue with occupancy Q(t), served with discipline S at rate μ; departures D(t).]
- Arrival process, A(t):
  - In continuous time, usually the cumulative number of arrivals in [0, t].
  - In discrete time, usually an indicator function as to whether or not an arrival occurred at time t = nT.
  - λ is the arrival rate: the expected number of arriving packets (or bits) per second.
- Queue occupancy, Q(t):
  - Number of packets (or bits) in queue at time t.

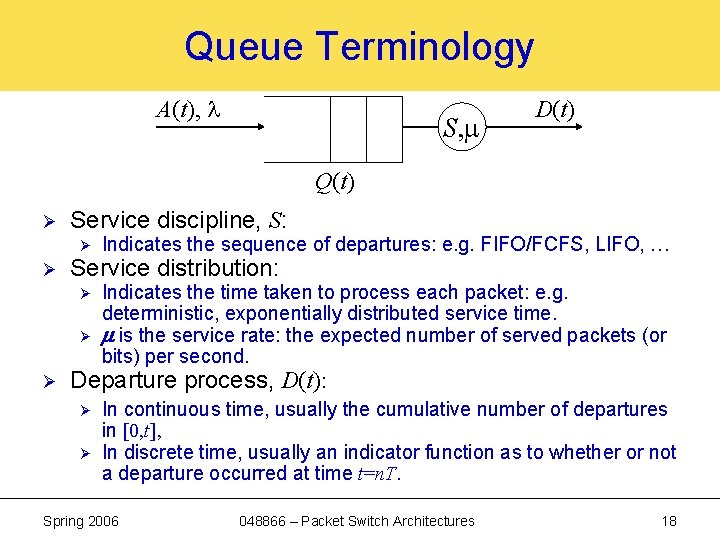

Queue Terminology
- Service discipline, S:
  - Indicates the sequence of departures: e.g. FIFO/FCFS, LIFO, …
- Service distribution:
  - Indicates the time taken to process each packet: e.g. deterministic or exponentially distributed service time.
  - μ is the service rate: the expected number of served packets (or bits) per second.
- Departure process, D(t):
  - In continuous time, usually the cumulative number of departures in [0, t].
  - In discrete time, usually an indicator function as to whether or not a departure occurred at time t = nT.

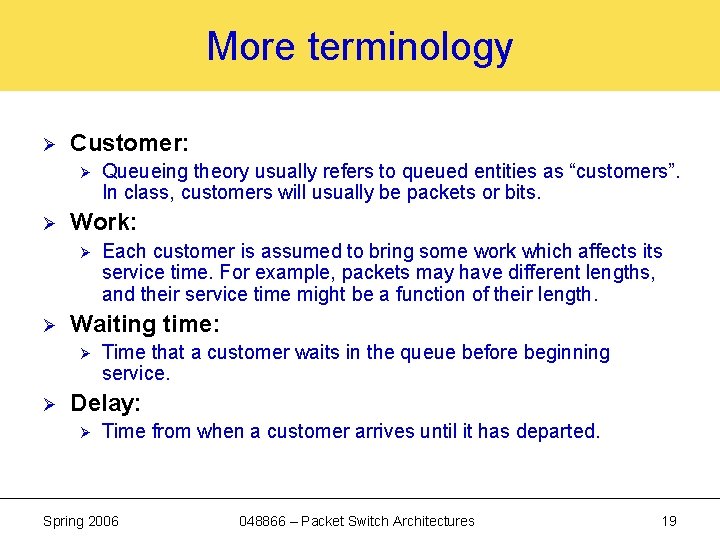

More terminology
- Customer:
  - Queueing theory usually refers to queued entities as "customers". In class, customers will usually be packets or bits.
- Work:
  - Each customer is assumed to bring some work which affects its service time. For example, packets may have different lengths, and their service time might be a function of their length.
- Waiting time:
  - Time that a customer waits in the queue before beginning service.
- Delay:
  - Time from when a customer arrives until it has departed.

Arrival Processes
- Deterministic arrival processes:
  - E.g. 1 arrival every second, or a burst of 4 packets every other second.
  - A deterministic sequence may be designed to be adversarial, to expose some weakness of the system.
- Random arrival processes:
  - (Discrete time) Bernoulli i.i.d. arrival process:
    - Let A(t) = 1 if an arrival occurs at time t, where t = nT, n = 0, 1, …
    - A(t) = 1 with probability p and 0 with probability 1 - p.
    - A series of independent tosses of a p-coin.
  - (Continuous time) Poisson arrival process:
    - Exponentially distributed interarrival times.
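A sketch of both random processes with Python's standard `random` module: Bernoulli slots via `random()`, and Poisson arrivals via exponential interarrival times from `expovariate`. The probability, rate, horizon and seed are arbitrary example values.

```python
import random
from typing import List


def bernoulli_arrivals(p: float, n_slots: int, rng: random.Random) -> List[int]:
    """Discrete time: A(nT) = 1 with probability p, else 0, independently per slot."""
    return [1 if rng.random() < p else 0 for _ in range(n_slots)]


def poisson_arrival_times(rate: float, horizon: float, rng: random.Random) -> List[float]:
    """Continuous time: i.i.d. exponential interarrival times with mean 1/rate."""
    times, t = [], 0.0
    while True:
        t += rng.expovariate(rate)
        if t > horizon:
            return times
        times.append(t)


rng = random.Random(1)  # fixed seed so the run is reproducible
slots = bernoulli_arrivals(0.3, 100_000, rng)
print(sum(slots) / len(slots))                                     # close to p = 0.3
print(len(poisson_arrival_times(5.0, 10_000.0, rng)) / 10_000.0)  # close to rate = 5
```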

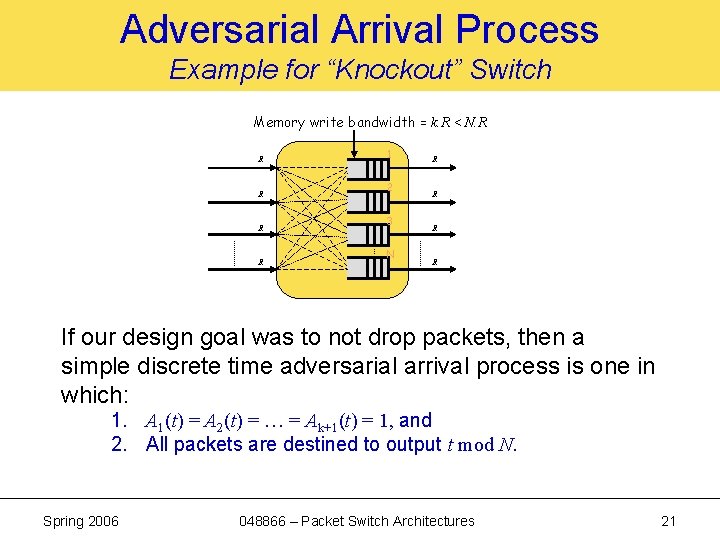

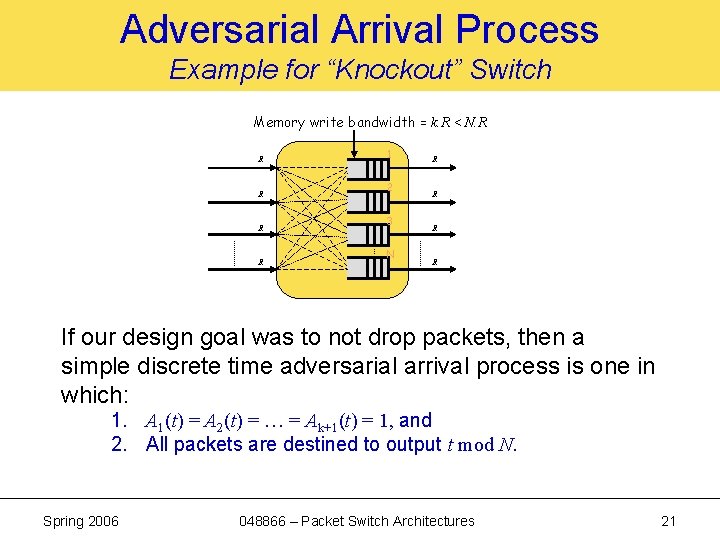

Adversarial Arrival Process Example for a "Knockout" Switch
[Figure: inputs 1 to N and outputs at rate R; memory write bandwidth = kR < NR.]
If our design goal was to not drop packets, then a simple discrete-time adversarial arrival process is one in which:
1. $A_1(t) = A_2(t) = \dots = A_{k+1}(t) = 1$, and
2. All packets are destined to output t mod N.
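A sketch that counts losses under this adversarial pattern, assuming that each output memory accepts at most k writes per slot, that any extra packets for that output are dropped, and that k + 1 ≤ N. Function names are hypothetical.

```python
from collections import Counter
from typing import List


def knockout_drops(arrivals_per_slot: List[List[int]], k: int) -> int:
    """Count packets lost when each output memory accepts at most k writes per slot.

    arrivals_per_slot: for each time slot, the destination output of every
    packet arriving in that slot.
    """
    drops = 0
    for destinations in arrivals_per_slot:
        for count in Counter(destinations).values():
            drops += max(0, count - k)  # packets beyond k are knocked out
    return drops


def adversarial_pattern(n_ports: int, k: int, n_slots: int) -> List[List[int]]:
    """Inputs 1..k+1 all send in every slot, all to output (t mod N)."""
    return [[t % n_ports] * (k + 1) for t in range(n_slots)]


print(knockout_drops(adversarial_pattern(n_ports=8, k=3, n_slots=100), k=3))  # 100
```

One packet is lost in every slot, even though the load on each output averages only (k + 1)/N packets per slot.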

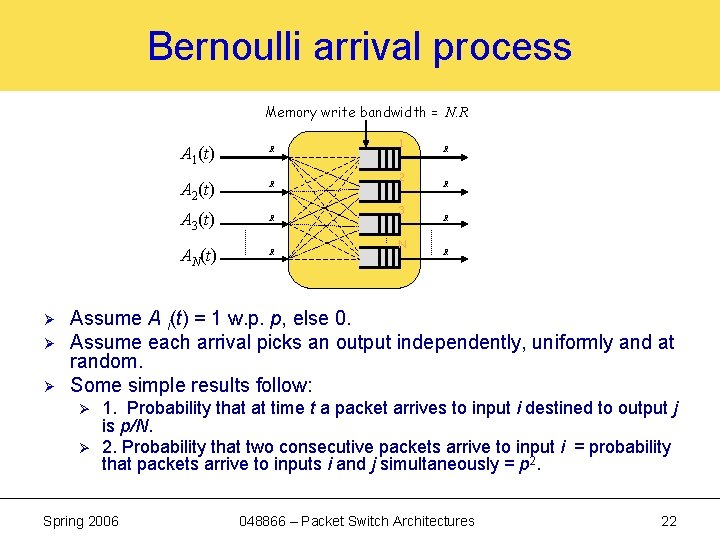

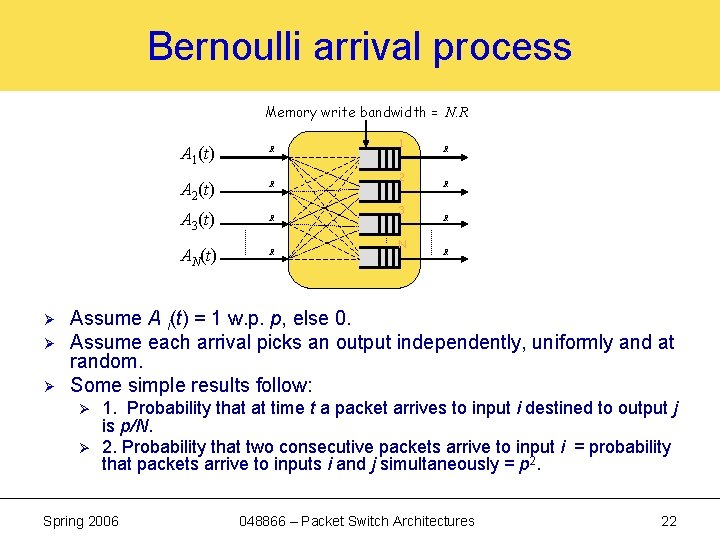

Bernoulli arrival process
[Figure: inputs 1 to N with arrival processes A1(t), …, AN(t) at rate R; memory write bandwidth = NR.]
- Assume Ai(t) = 1 with probability p, else 0.
- Assume each arrival picks an output independently, uniformly and at random.
- Some simple results follow:
  1. The probability that at time t a packet arrives to input i destined to output j is p/N.
  2. The probability that two consecutive packets arrive to input i equals the probability that packets arrive to inputs i and j simultaneously, which is $p^2$.
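A Monte Carlo sanity check of results 1 and 2, taking input i = 0 and output j = 1 for the first estimate and inputs 0 and 1 for the second; p, N, the slot count and the seed are arbitrary choices.

```python
import random
from typing import Tuple


def estimate_probabilities(p: float, n_ports: int, n_slots: int, seed: int = 0) -> Tuple[float, float]:
    """Estimate P(input 0 has a packet for output 1) and
    P(inputs 0 and 1 both receive a packet in the same slot)."""
    rng = random.Random(seed)
    to_output_1 = both_busy = 0
    for _ in range(n_slots):
        # Each input independently receives a packet w.p. p; its output is uniform.
        dest = [rng.randrange(n_ports) if rng.random() < p else None
                for _ in range(n_ports)]
        to_output_1 += dest[0] == 1
        both_busy += dest[0] is not None and dest[1] is not None
    return to_output_1 / n_slots, both_busy / n_slots


print(estimate_probabilities(p=0.5, n_ports=4, n_slots=100_000))  # near (p/N, p**2) = (0.125, 0.25)
```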

Outline: 3. Deterministic Queueing Analysis

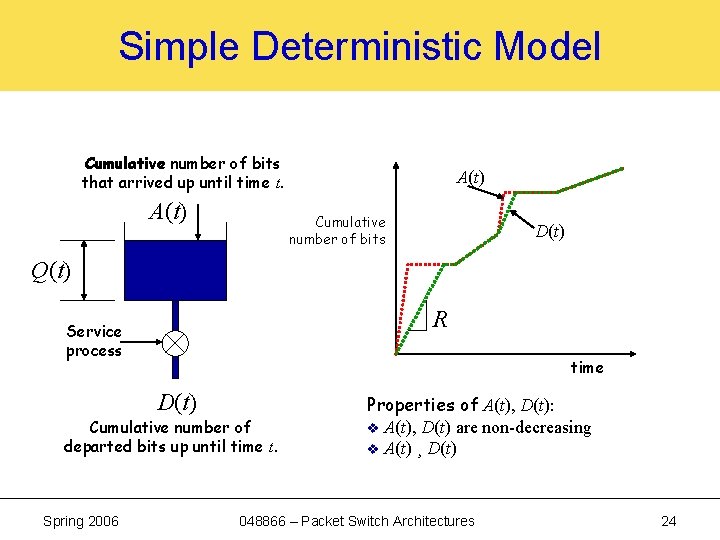

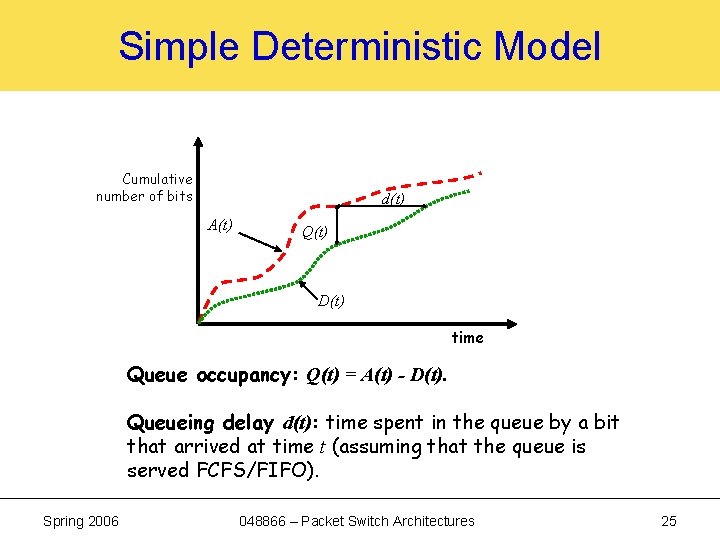

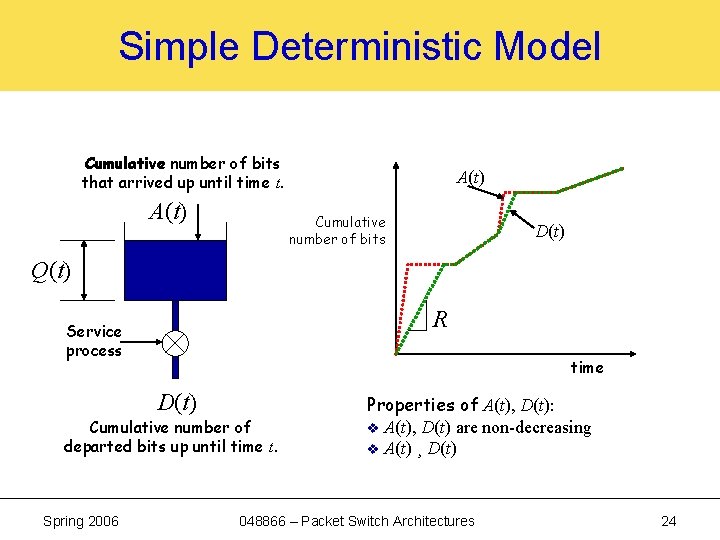

Simple Deterministic Model
[Figure: cumulative number of bits vs. time. A(t) is the cumulative number of bits that arrived up until time t; D(t) is the cumulative number of departed bits up until time t; the service process runs at rate R, and Q(t) lies between the two curves.]
Properties of A(t), D(t):
- A(t), D(t) are non-decreasing.
- A(t) ≥ D(t).

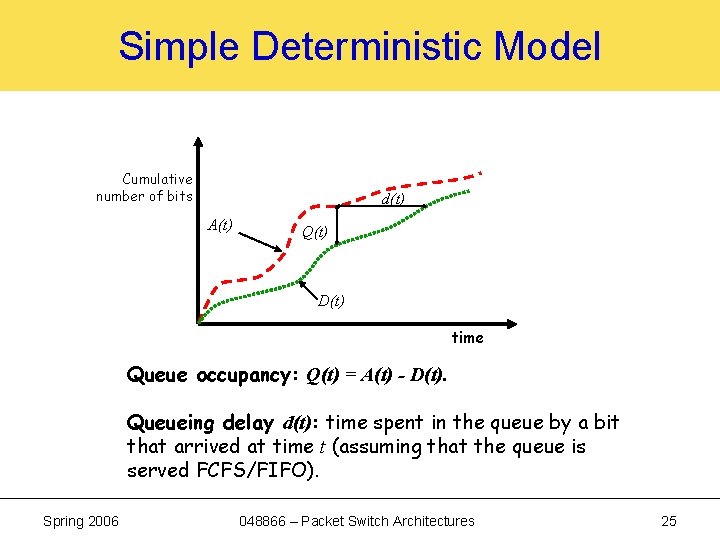

Simple Deterministic Model
[Figure: cumulative number of bits vs. time, showing A(t), D(t), the vertical gap Q(t) and the horizontal gap d(t).]
- Queue occupancy: Q(t) = A(t) - D(t).
- Queueing delay, d(t): time spent in the queue by a bit that arrived at time t (assuming that the queue is served FCFS/FIFO).
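A discrete-time sketch of reading Q(t) and d(t) off the two cumulative curves, assuming integer bit counts sampled at the end of each slot and FIFO service. The curves below are hypothetical (a queue served at 1 bit per slot).

```python
from typing import List, Optional


def occupancy(A: List[int], D: List[int]) -> List[int]:
    """Q(t) = A(t) - D(t): vertical distance between the cumulative curves."""
    return [a - d for a, d in zip(A, D)]


def fifo_delay(A: List[int], D: List[int], t: int) -> Optional[int]:
    """d(t): horizontal distance between the curves at time t (FIFO service).

    The last bit that arrived by time t leaves at the first time s >= t with
    D(s) >= A(t), so its delay is s - t. Returns None if that bit has not
    departed within the observed horizon.
    """
    for s in range(t, len(D)):
        if D[s] >= A[t]:
            return s - t
    return None


A = [3, 3, 5, 5, 5, 5]  # hypothetical cumulative arrivals (bits) at the end of slots 0..5
D = [1, 2, 3, 4, 5, 5]  # departures from a rate-1 work-conserving FIFO queue
print(occupancy(A, D))                          # [2, 1, 2, 1, 0, 0]
print([fifo_delay(A, D, t) for t in range(6)])  # [2, 1, 2, 1, 0, 0]
```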

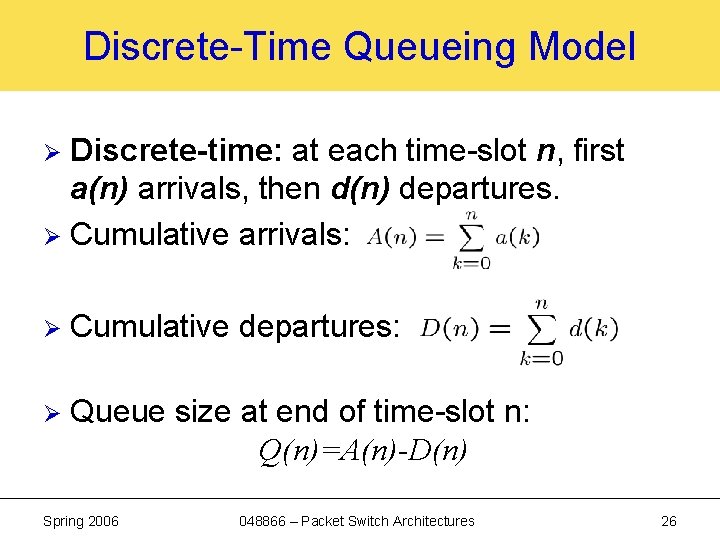

Discrete-Time Queueing Model
- Discrete time: at each time slot n, first a(n) arrivals, then d(n) departures.
- Cumulative arrivals: $A(n) = \sum_{m=1}^{n} a(m)$.
- Cumulative departures: $D(n) = \sum_{m=1}^{n} d(m)$.
- Queue size at the end of time slot n: $Q(n) = A(n) - D(n)$.

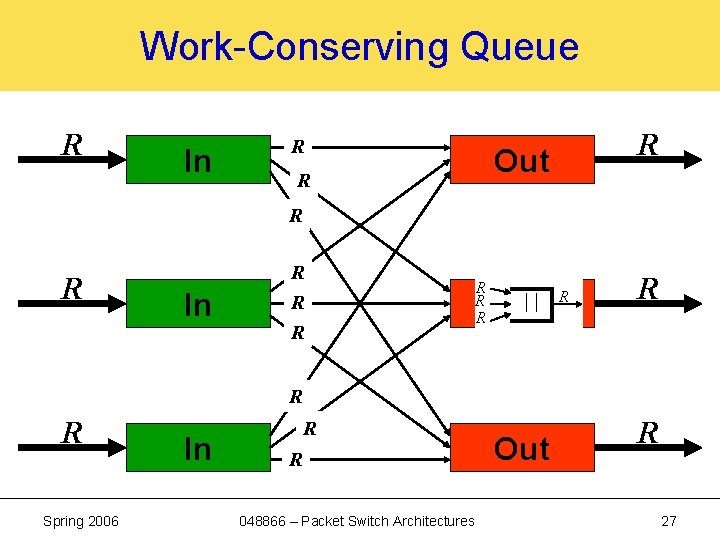

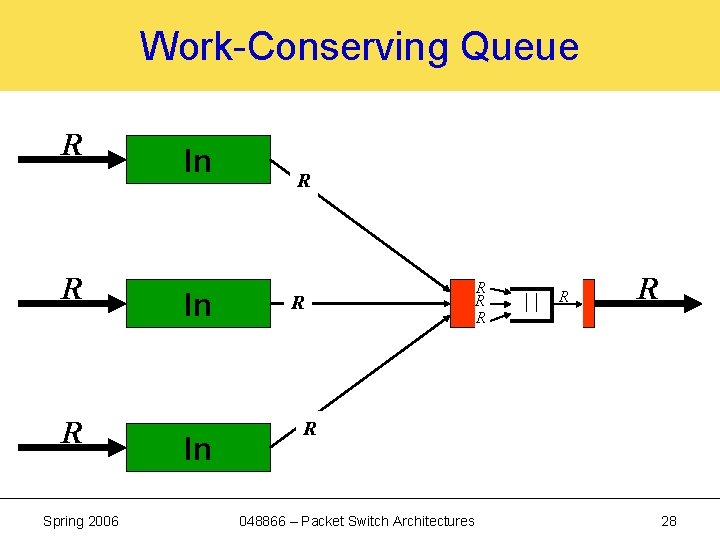

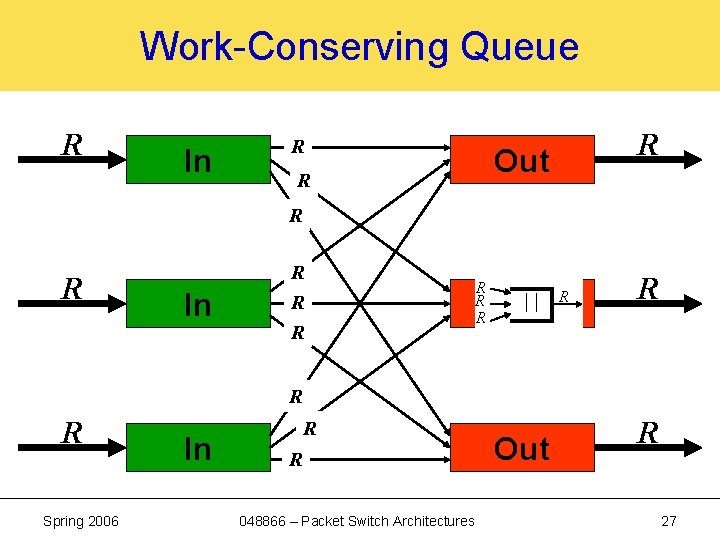

Work-Conserving Queue
[Figure: the inputs of an OQ switch, each at rate R, feeding output queues that are each drained at rate R.]

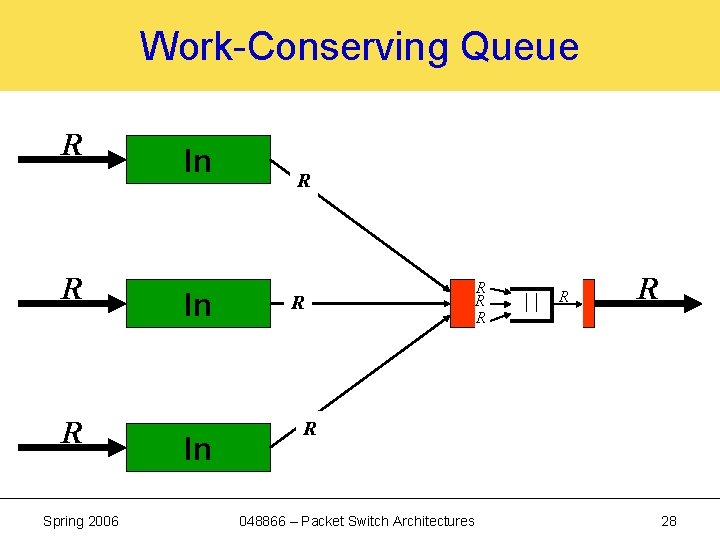

Work-Conserving Queue
[Figure: a single output queue fed by several inputs at rate R and drained by its output at rate R.]

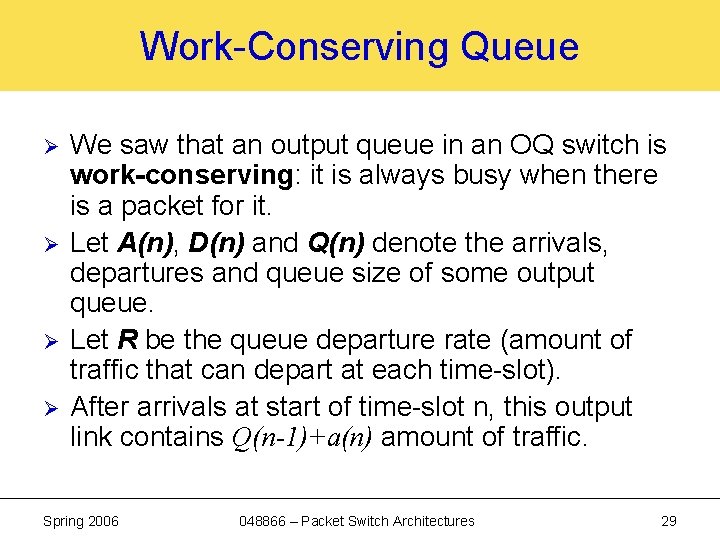

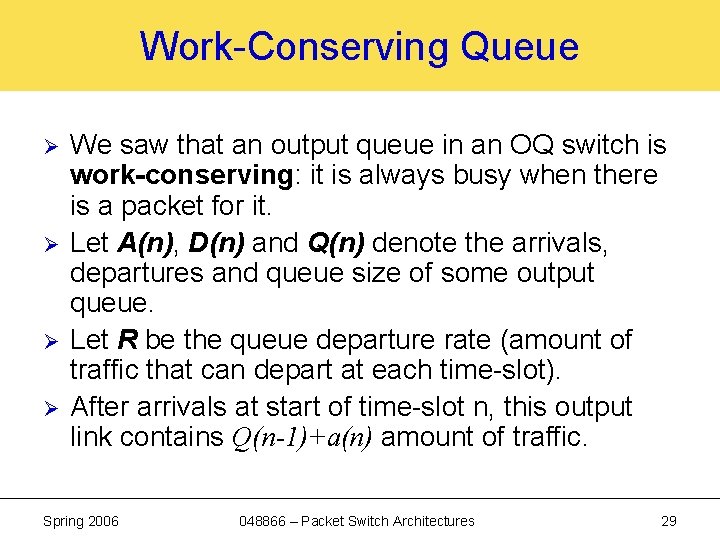

Work-Conserving Queue
- We saw that an output queue in an OQ switch is work-conserving: it is always busy when there is a packet for it.
- Let A(n), D(n) and Q(n) denote the arrivals, departures and queue size of some output queue.
- Let R be the queue departure rate (the amount of traffic that can depart in each time slot).
- After the arrivals at the start of time slot n, this output link contains Q(n-1) + a(n) traffic.

Work-Conserving Output Link
- Case 1: $Q(n-1) + a(n) \le R$ ⇒ everything is serviced; nothing is left in the queue.
- Case 2: $Q(n-1) + a(n) > R$ ⇒ exactly R traffic is serviced, and $Q(n) = Q(n-1) + a(n) - R$.
- Lindley's equation: $Q(n) = \max\big(Q(n-1) + a(n) - R,\ 0\big) = \big(Q(n-1) + a(n) - R\big)^+$
- Note: to find the cumulative departures, use $D(n) = A(n) - Q(n)$.
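A direct transcription of Lindley's equation, with the cumulative departures recovered from D(n) = A(n) - Q(n). The arrival sequence and R = 1 are hypothetical example values, and the queue is assumed to start empty.

```python
from itertools import accumulate
from typing import List


def lindley(a: List[float], R: float, q0: float = 0.0) -> List[float]:
    """Q(n) = max(Q(n-1) + a(n) - R, 0) for a work-conserving queue of rate R."""
    q, out = q0, []
    for arrivals in a:
        q = max(q + arrivals - R, 0.0)
        out.append(q)
    return out


a = [3, 0, 2, 0, 0, 0]              # hypothetical arrivals a(n), R = 1 per slot
Q = lindley(a, R=1)
A = list(accumulate(a))             # cumulative arrivals A(n)
D = [x - q for x, q in zip(A, Q)]   # cumulative departures D(n) = A(n) - Q(n)
print(Q)  # [2.0, 1.0, 2.0, 1.0, 0.0, 0.0]
print(D)  # [1.0, 2.0, 3.0, 4.0, 5.0, 5.0]
```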

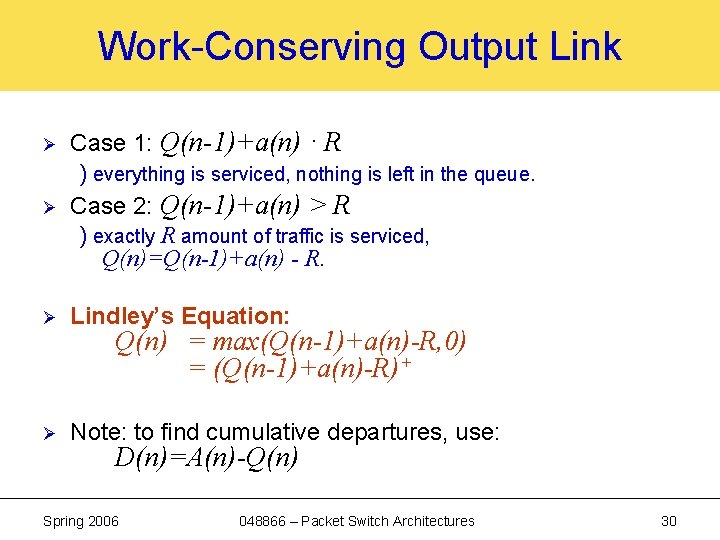

Outline: 4. Output Link Scheduling

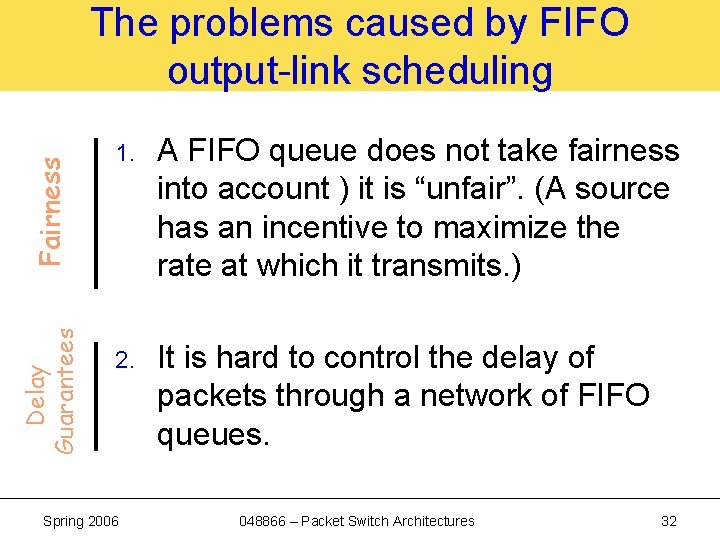

Fairness and Delay Guarantees: the problems caused by FIFO output-link scheduling
1. A FIFO queue does not take fairness into account ⇒ it is "unfair". (A source has an incentive to maximize the rate at which it transmits.)
2. It is hard to control the delay of packets through a network of FIFO queues.

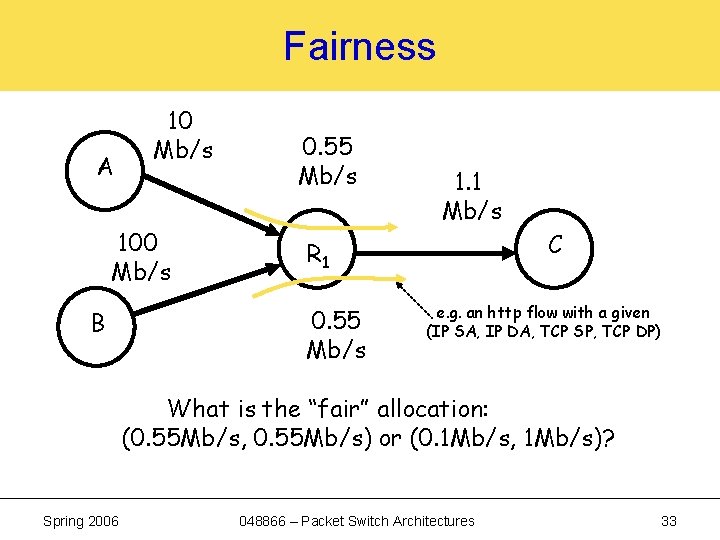

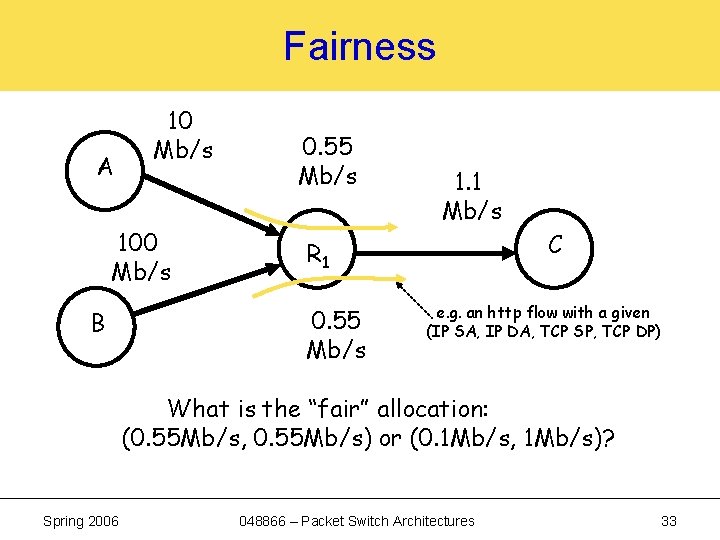

Fairness
[Figure: source A (10 Mb/s link) and source B (100 Mb/s link) send through router R1, whose 1.1 Mb/s output link leads to C; each flow is shown receiving 0.55 Mb/s.]
A flow is, e.g., an http flow with a given (IP SA, IP DA, TCP SP, TCP DP).
What is the "fair" allocation: (0.55 Mb/s, 0.55 Mb/s) or (0.1 Mb/s, 1 Mb/s)?

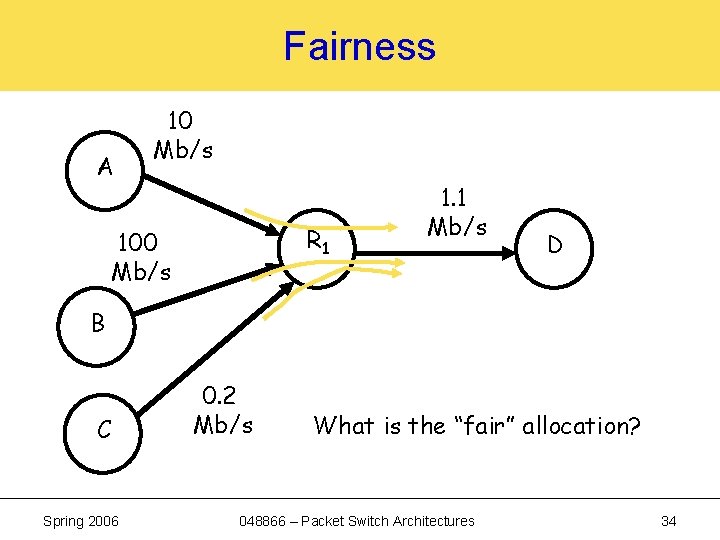

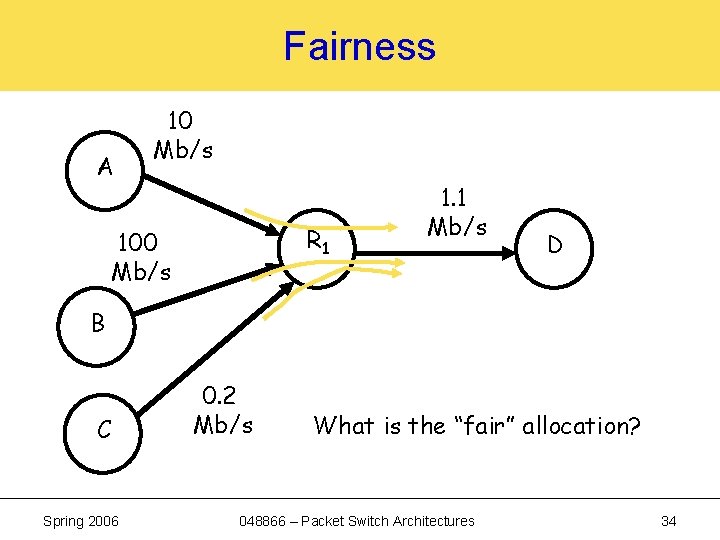

Fairness
[Figure: sources A (10 Mb/s) and B (100 Mb/s) feed router R1; links of 1.1 Mb/s and 0.2 Mb/s lead on toward destinations C and D.]
What is the "fair" allocation?

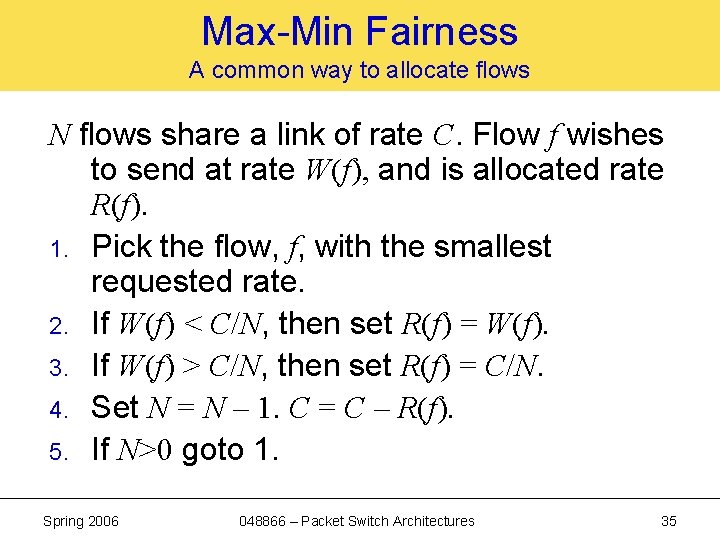

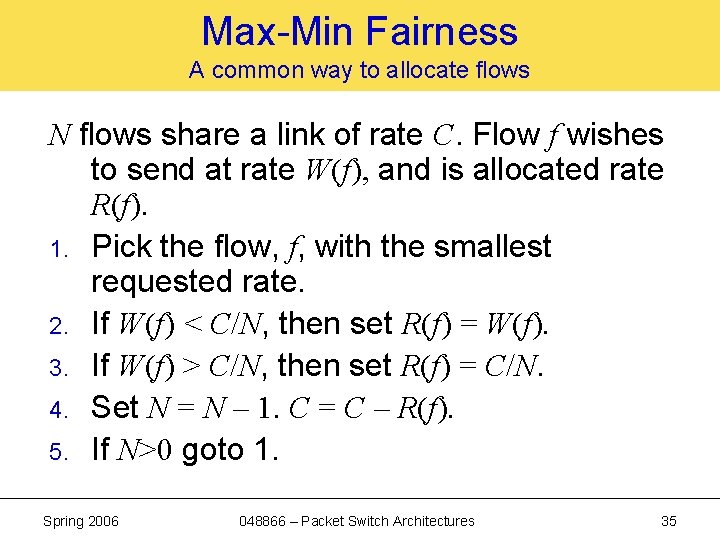

Max-Min Fairness
A common way to allocate flows: N flows share a link of rate C. Flow f wishes to send at rate W(f), and is allocated rate R(f).
1. Among the remaining flows, pick the flow, f, with the smallest requested rate.
2. If W(f) ≤ C/N, then set R(f) = W(f).
3. Otherwise (W(f) > C/N), set R(f) = C/N.
4. Set N = N - 1 and C = C - R(f).
5. If N > 0, go to 1.

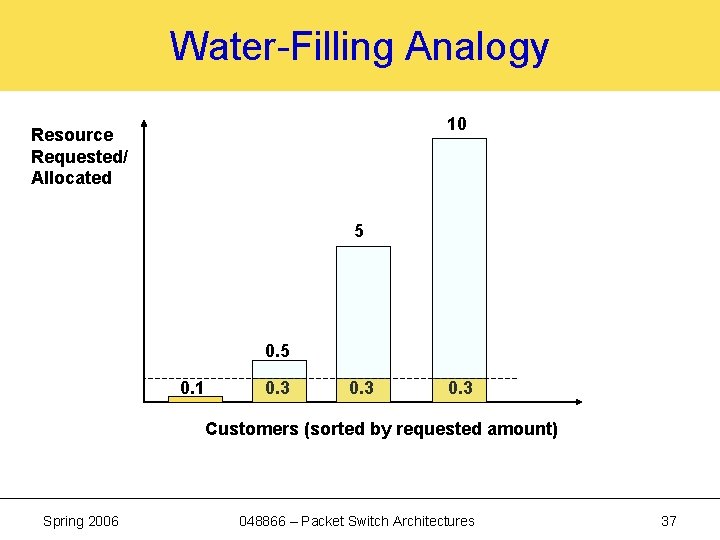

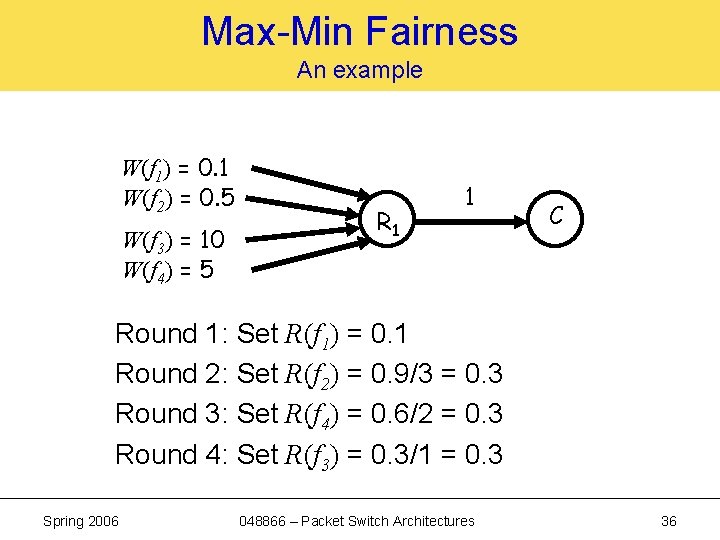

Max-Min Fairness: an example
Four flows share a link of rate C = 1 at router R1, with W(f1) = 0.1, W(f2) = 0.5, W(f3) = 10, W(f4) = 5.
- Round 1: set R(f1) = 0.1.
- Round 2: set R(f2) = 0.9/3 = 0.3.
- Round 3: set R(f4) = 0.6/2 = 0.3.
- Round 4: set R(f3) = 0.3/1 = 0.3.
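A sketch of the five-step max-min procedure as a function, checked against the example above (C = 1). It serves the flows in increasing order of demand and gives each min(W(f), C/N) of what remains; the function name is hypothetical.

```python
from typing import Dict


def max_min_allocation(demands: Dict[str, float], capacity: float) -> Dict[str, float]:
    """Max-min fair rates R(f) for requested rates W(f) on a link of rate C."""
    alloc: Dict[str, float] = {}
    remaining, n = capacity, len(demands)
    for flow, want in sorted(demands.items(), key=lambda kv: kv[1]):  # smallest W(f) first
        fair_share = remaining / n
        alloc[flow] = min(want, fair_share)  # steps 2 and 3
        remaining -= alloc[flow]             # step 4: C = C - R(f)
        n -= 1                               # step 4: N = N - 1
    return alloc


print(max_min_allocation({"f1": 0.1, "f2": 0.5, "f3": 10, "f4": 5}, capacity=1))
```

Up to floating-point rounding, this yields R(f1) = 0.1 and R(f2) = R(f3) = R(f4) = 0.3, matching the rounds above.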

Water-Filling Analogy
[Figure: resource requested and allocated per customer, with customers sorted by requested amount; requests of 0.1, 0.5, 5 and 10 receive 0.1, 0.3, 0.3 and 0.3, like water filling up to a common level.]

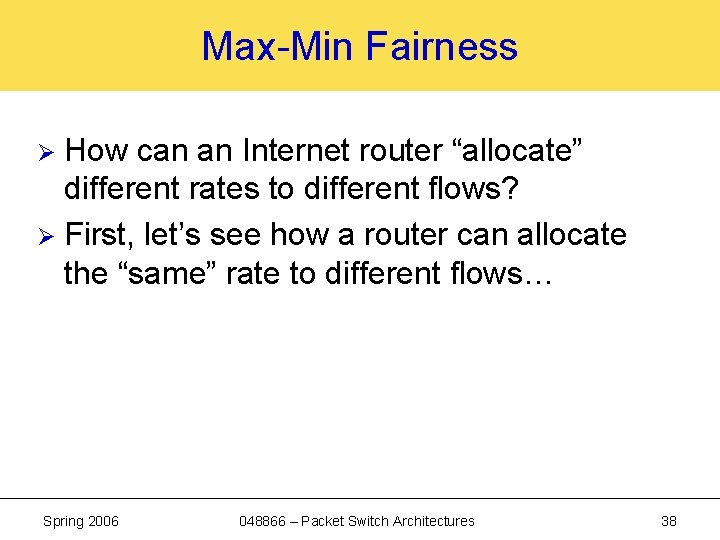

Max-Min Fairness
- How can an Internet router "allocate" different rates to different flows?
- First, let's see how a router can allocate the "same" rate to different flows…

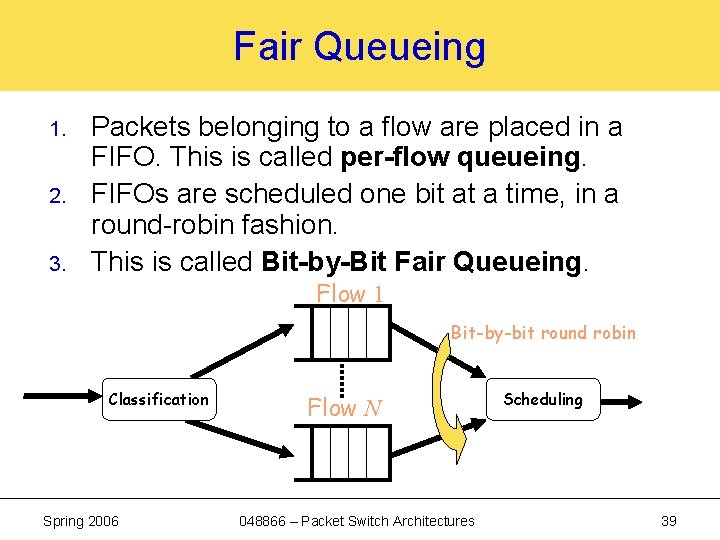

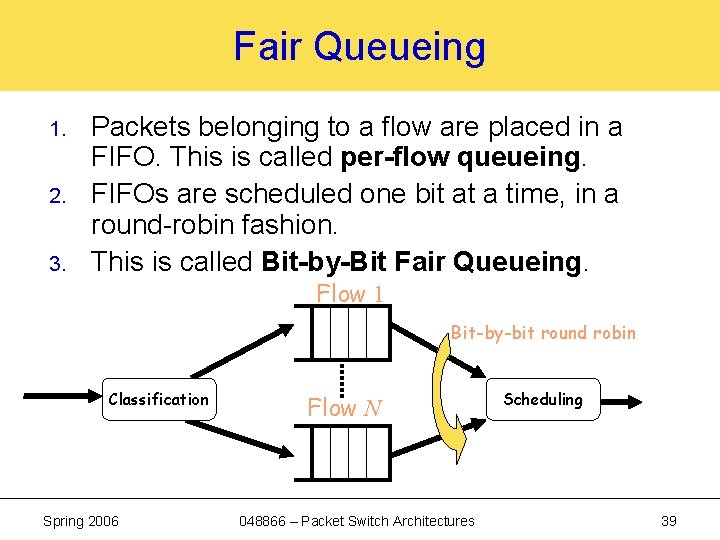

Fair Queueing
1. Packets belonging to a flow are placed in a FIFO. This is called per-flow queueing.
2. FIFOs are scheduled one bit at a time, in a round-robin fashion.
3. This is called Bit-by-Bit Fair Queueing.
[Figure: classification places arriving packets into per-flow queues Flow 1 to Flow N; scheduling serves them in bit-by-bit round robin.]

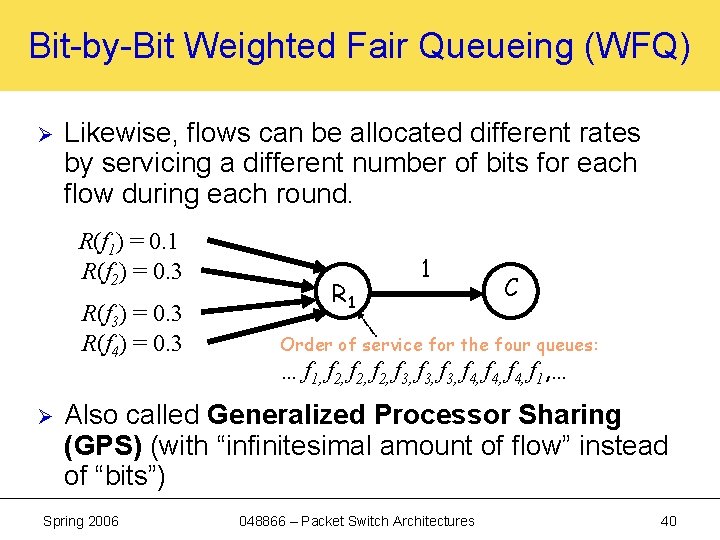

Bit-by-Bit Weighted Fair Queueing (WFQ)
[Figure: four flows through router R1 on a link of rate C = 1, with R(f1) = 0.1, R(f2) = 0.3, R(f3) = 0.3, R(f4) = 0.3.]
- Likewise, flows can be allocated different rates by servicing a different number of bits for each flow during each round.
- Order of service for the four queues: … f1, f2, f3, f4, f1, …
- Also called Generalized Processor Sharing (GPS) (with an "infinitesimal amount of flow" instead of "bits").

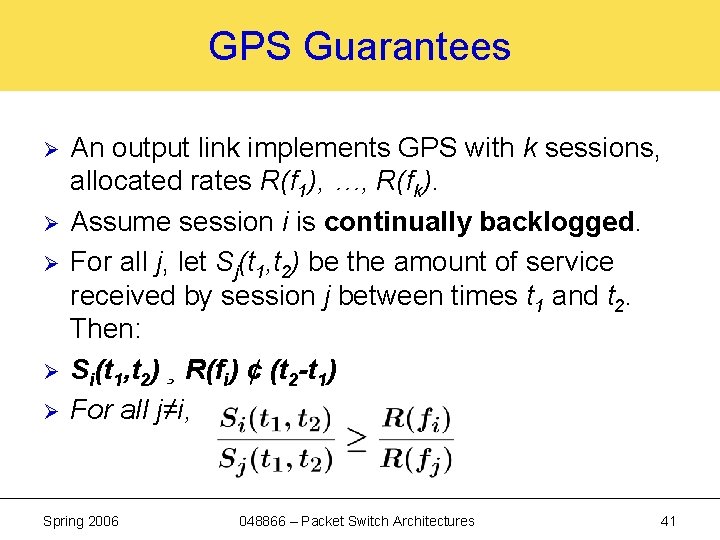

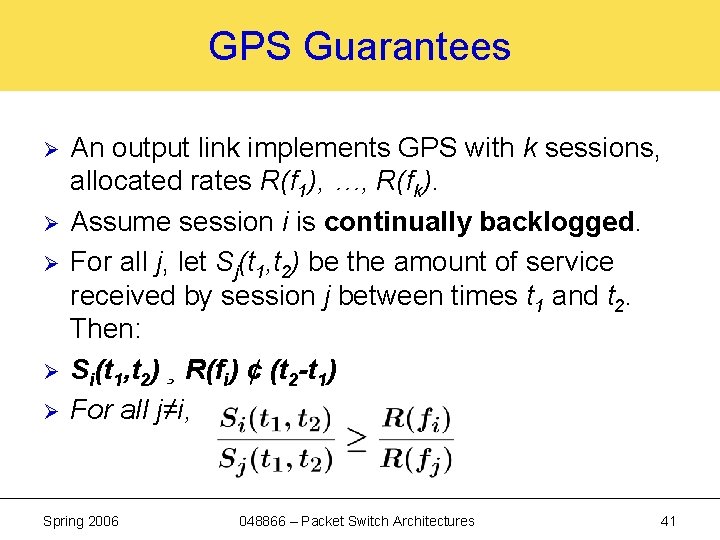

GPS Guarantees
- An output link implements GPS with k sessions, allocated rates R(f1), …, R(fk).
- Assume session i is continually backlogged.
- For all j, let $S_j(t_1, t_2)$ be the amount of service received by session j between times $t_1$ and $t_2$.
- Then $S_i(t_1, t_2) \ge R(f_i)\,(t_2 - t_1)$, and for all $j \ne i$: $\dfrac{S_i(t_1, t_2)}{S_j(t_1, t_2)} \ge \dfrac{R(f_i)}{R(f_j)}$.

Packetized Weighted Fair Queueing (WFQ)
Problem: we need to serve a whole packet at a time.
Solution:
1. Determine at what time a packet p would complete if we served flows bit-by-bit. Call this the packet's finishing time, Fp.
2. Serve packets in the order of increasing finishing time.
Also called Packetized Generalized Processor Sharing (PGPS).
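A minimal sketch of the finishing-time computation, using the standard virtual-finish-time recursion F_p = max(F of the previous packet of the same flow, round number at arrival) + L_p / w_f. As a simplifying assumption, the round number at which each packet arrives is supplied as input rather than computed by tracking the GPS round (virtual time). The packets mirror the bit-by-bit example on the next slides (A1 = 4, B1 = 3, C1 = 1, C2 = 1, D1 = 1, D2 = 2 bits at round 0), and A2 = 2 and C3 = 2 are assumed to arrive at round 1.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Packet:
    name: str
    flow: str
    length: float         # bits
    arrival_round: float  # round number when the packet arrives (assumed given)


def wfq_finish_order(packets: List[Packet], weights: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return (packet name, finishing round) pairs in increasing finishing round.

    F_p = max(F of the previous packet in the same flow, arrival round) + length / weight.
    Packets of the same flow must appear in arrival order.
    """
    last_finish: Dict[str, float] = {}
    result = []
    for p in packets:
        start = max(last_finish.get(p.flow, 0.0), p.arrival_round)
        finish = start + p.length / weights[p.flow]
        last_finish[p.flow] = finish
        result.append((p.name, finish))
    return sorted(result, key=lambda item: item[1])  # stable: ties keep input order


packets = [
    Packet("A1", "A", 4, 0), Packet("A2", "A", 2, 1),
    Packet("B1", "B", 3, 0),
    Packet("C1", "C", 1, 0), Packet("C2", "C", 1, 0), Packet("C3", "C", 2, 1),
    Packet("D1", "D", 1, 0), Packet("D2", "D", 2, 0),
]
equal = wfq_finish_order(packets, {"A": 1, "B": 1, "C": 1, "D": 1})
print([name for name, _ in equal])  # ['C1', 'D1', 'C2', 'B1', 'D2', 'A1', 'C3', 'A2']
```

With equal weights this reproduces the slides' departure rounds (C1 and D1 at 1, C2 at 2, B1 and D2 at 3, A1 and C3 at 4, A2 at 6). Passing weights {"A": 3, "B": 2, "C": 2, "D": 1} gives the 3:2:2:1 case.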

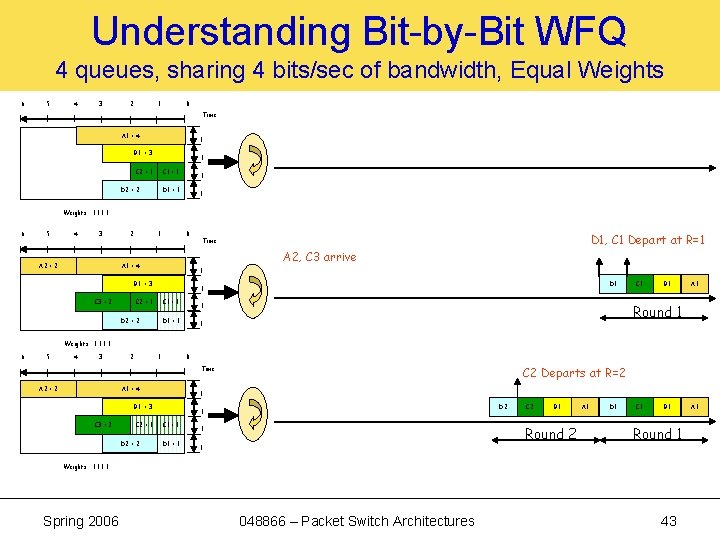

Understanding Bit-by-Bit WFQ: 4 queues, sharing 4 bits/sec of bandwidth, equal weights (1:1:1:1)
[Figure: queue contents (in bits) at the start: A1 = 4, B1 = 3, C1 = 1, C2 = 1, D1 = 1, D2 = 2; then A2 = 2 and C3 = 2 arrive. D1 and C1 depart at round R = 1; C2 departs at R = 2.]

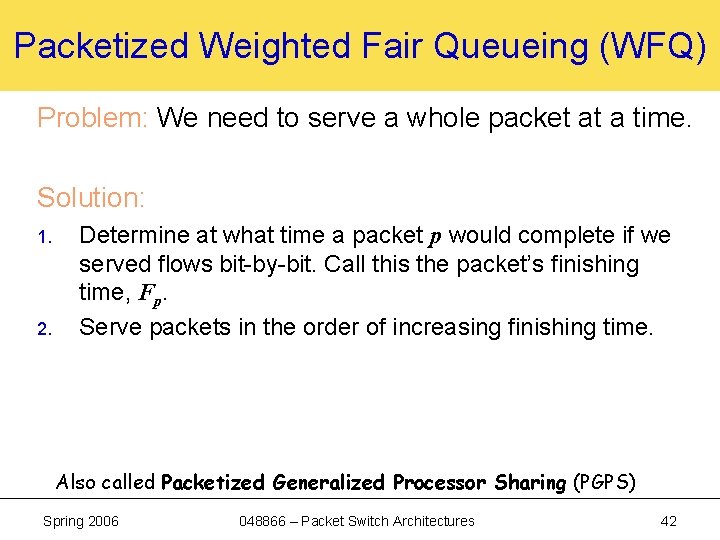

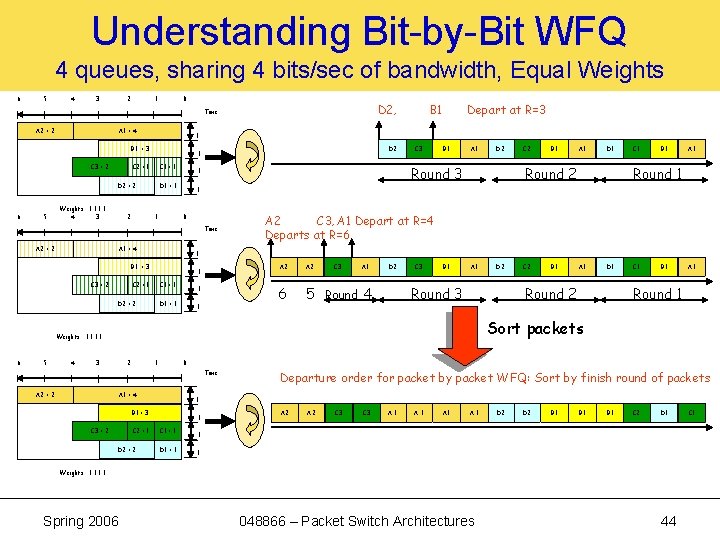

Understanding Bit-by-Bit WFQ (continued): 4 queues, sharing 4 bits/sec of bandwidth, equal weights (1:1:1:1)
[Figure: D2 and B1 depart at round R = 3; C3 and A1 depart at R = 4; A2 departs at R = 6. Departure order for packet-by-packet WFQ: sort packets by finish round.]

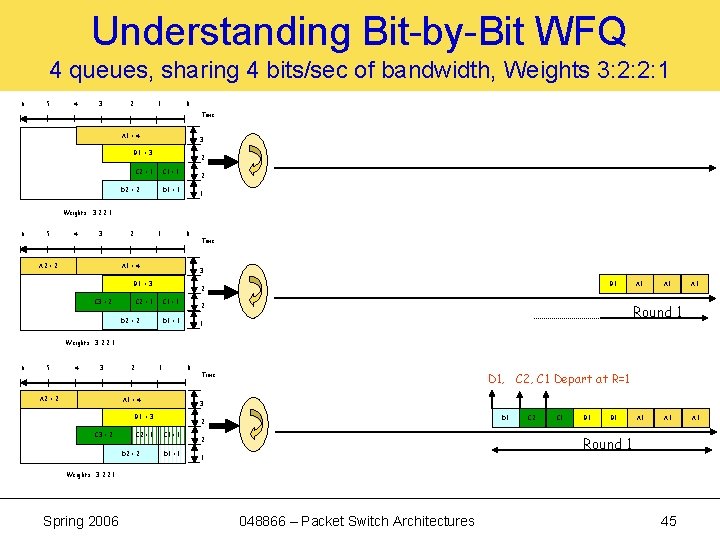

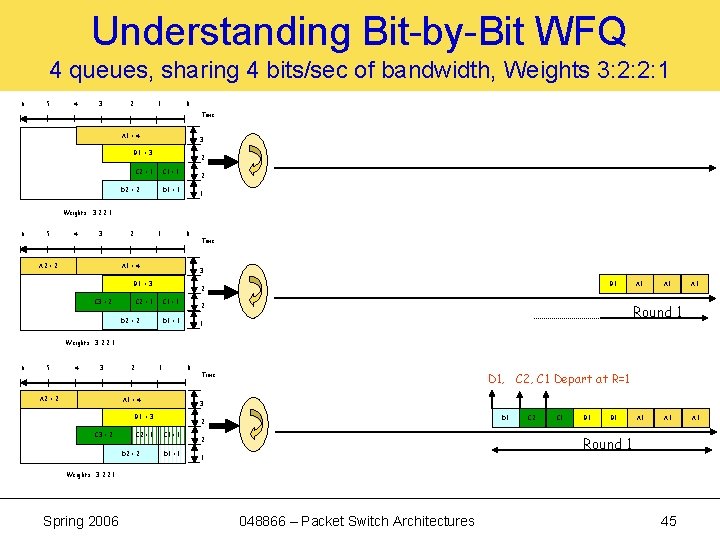

Understanding Bit-by-Bit WFQ: 4 queues, sharing 4 bits/sec of bandwidth, weights 3:2:2:1
[Figure: packets A1 = 4, B1 = 3, C1 = 1, C2 = 1, D1 = 1, D2 = 2, then A2 = 2 and C3 = 2, served with weights 3:2:2:1 for queues A:B:C:D. D1, C2 and C1 depart at round R = 1.]

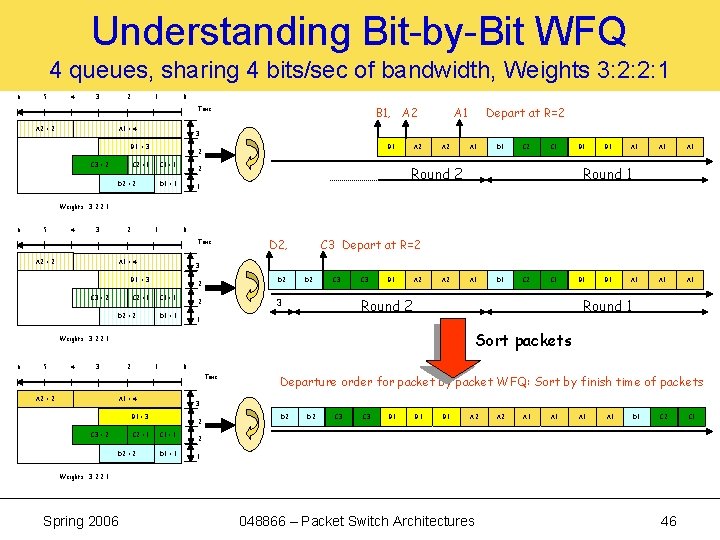

Understanding Bit-by-Bit WFQ (continued): 4 queues, sharing 4 bits/sec of bandwidth, weights 3:2:2:1
[Figure: B1 and A2 depart at round R = 2; C3 departs at R = 2. Departure order for packet-by-packet WFQ: sort packets by finish time.]

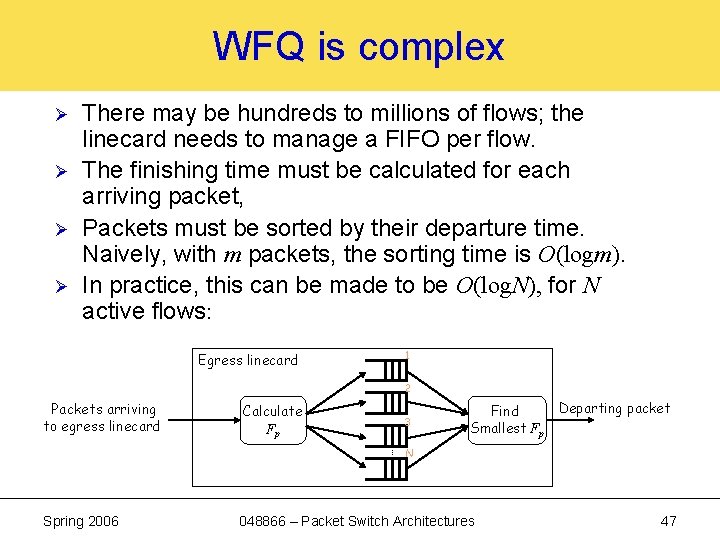

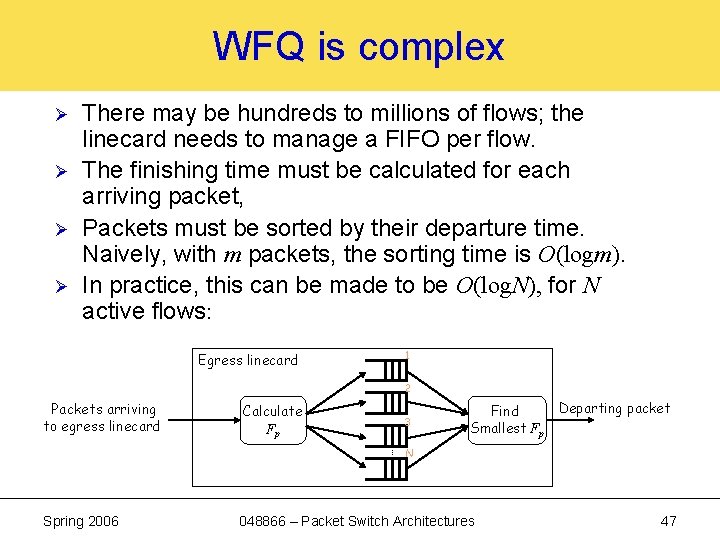

WFQ is complex
- There may be hundreds to millions of flows; the linecard needs to manage a FIFO per flow.
- The finishing time must be calculated for each arriving packet.
- Packets must be sorted by their departure time. Naively, with m packets, the sorting time is O(log m) per packet.
- In practice, this can be made O(log N), for N active flows.
[Figure: egress linecard with per-flow queues 1 to N: calculate Fp for packets arriving to the egress linecard, then find the smallest Fp to select the departing packet.]
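A sketch of one way to reach the O(log N) bound with a binary heap (`heapq`): since finishing times increase within a flow, only each active flow's head-of-line packet can hold the smallest Fp, so the heap holds at most N entries rather than one per queued packet. It assumes finishing times are computed elsewhere and enqueued in non-decreasing order per flow; the class name is hypothetical.

```python
import heapq
from collections import deque
from typing import Deque, Dict, List, Tuple


class WfqSelector:
    """Pick the packet with the smallest finishing time in O(log N) per packet."""

    def __init__(self) -> None:
        self.fifos: Dict[str, Deque[Tuple[float, str]]] = {}
        self.heap: List[Tuple[float, str]] = []  # (head-of-line Fp, flow), one per active flow

    def enqueue(self, flow: str, finish: float, packet: str) -> None:
        fifo = self.fifos.setdefault(flow, deque())
        if not fifo:  # flow becomes active: its head enters the heap
            heapq.heappush(self.heap, (finish, flow))
        fifo.append((finish, packet))

    def dequeue(self) -> str:
        _, flow = heapq.heappop(self.heap)  # smallest head-of-line Fp
        fifo = self.fifos[flow]
        _, packet = fifo.popleft()
        if fifo:  # the flow's next packet becomes its head
            heapq.heappush(self.heap, (fifo[0][0], flow))
        return packet


sel = WfqSelector()
for flow, finish, name in [("A", 4, "A1"), ("A", 6, "A2"), ("B", 3, "B1"), ("C", 1, "C1"),
                           ("C", 2, "C2"), ("C", 4, "C3"), ("D", 1, "D1"), ("D", 3, "D2")]:
    sel.enqueue(flow, finish, name)
print([sel.dequeue() for _ in range(8)])  # ['C1', 'D1', 'C2', 'B1', 'D2', 'A1', 'C3', 'A2']
```

Ties in Fp are broken here by flow name, an arbitrary choice.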

![Deficit Round Robin DRR Shreedhar Varghese 95 An O1 approximation to WFQ Deficit Round Robin (DRR) [Shreedhar & Varghese, ’ 95] An O(1) approximation to WFQ](https://slidetodoc.com/presentation_image_h2/d372061e3fbd28f028990ae371a5a2f7/image-48.jpg)

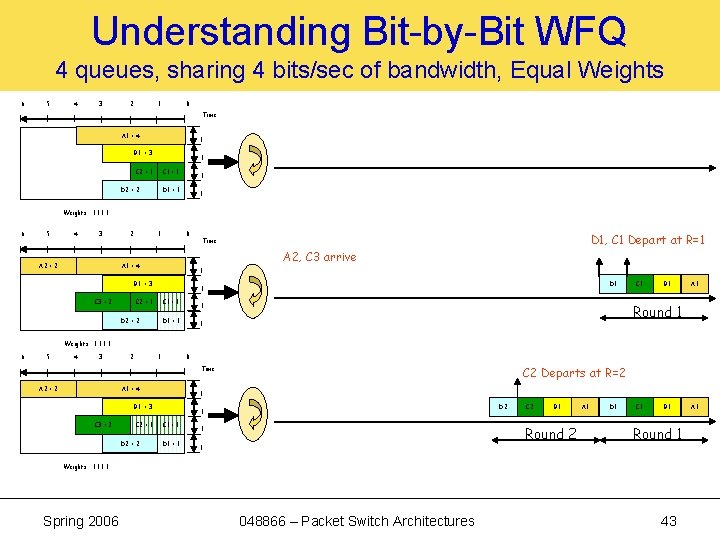

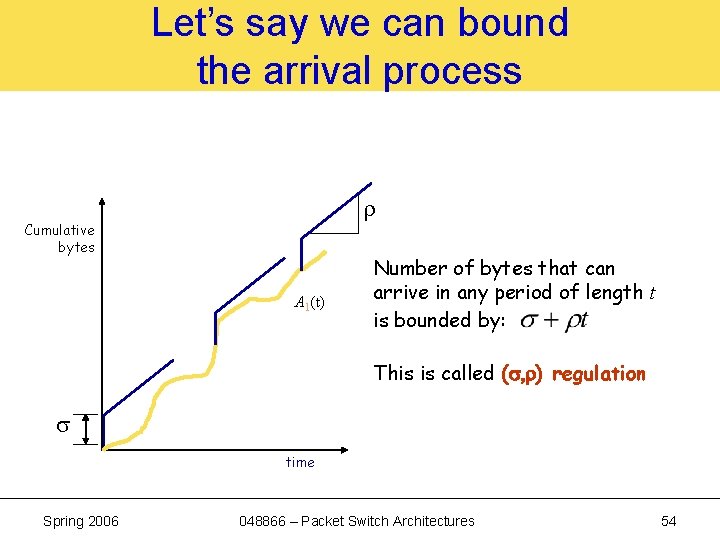

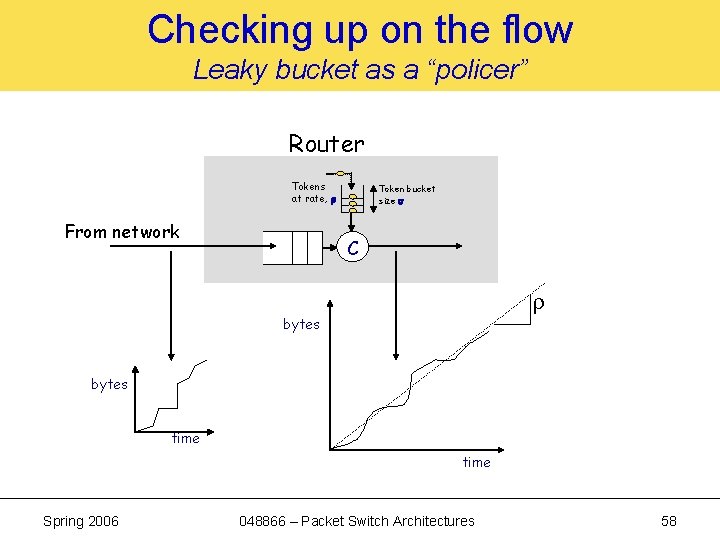

Deficit Round Robin (DRR) [Shreedhar & Varghese, ’95]: An O(1) approximation to WFQ
[Figure: DRR with quantum size 200. Step 1 and steps 2 to 4 show the active packet queues (packet sizes such as 100, 400, 60, 200, 600 and 150) and each queue's remaining credits.]
- It is easy to implement Weighted DRR using a different quantum size for each queue.
- Often-adopted solution in practice.
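A sketch of DRR as described by Shreedhar and Varghese: on each visit, a backlogged queue's deficit counter grows by its quantum, and the queue sends head-of-line packets while they fit in the counter; a queue that empties loses its leftover credit. Giving each queue its own quantum yields weighted DRR. The queue contents below are hypothetical, not a reconstruction of the slide's figure. The work per packet is O(1) when the quantum is at least the maximum packet size.

```python
from collections import deque
from typing import Dict, List, Tuple


def drr(queues: Dict[str, List[int]], quantum: Dict[str, int]) -> List[Tuple[str, int]]:
    """Deficit Round Robin: return the order in which (queue, packet size) are sent.

    queues: packet sizes (e.g. bytes) per queue, head first.
    quantum: credits added to each queue per round; unequal quanta give weighted DRR.
    """
    fifos = {name: deque(pkts) for name, pkts in queues.items()}
    deficit = {name: 0 for name in queues}
    active = deque(name for name, fifo in fifos.items() if fifo)
    sent = []
    while active:
        name = active.popleft()
        fifo = fifos[name]
        deficit[name] += quantum[name]
        while fifo and fifo[0] <= deficit[name]:  # send while the head packet fits
            size = fifo.popleft()
            deficit[name] -= size
            sent.append((name, size))
        if fifo:
            active.append(name)   # still backlogged: keep the remaining credit
        else:
            deficit[name] = 0     # empty queues do not bank credit
    return sent


q = {"q1": [100, 400], "q2": [600], "q3": [150, 60]}  # hypothetical packet sizes
print(drr(q, {"q1": 200, "q2": 200, "q3": 200}))
# [('q1', 100), ('q3', 150), ('q3', 60), ('q1', 400), ('q2', 600)]
```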

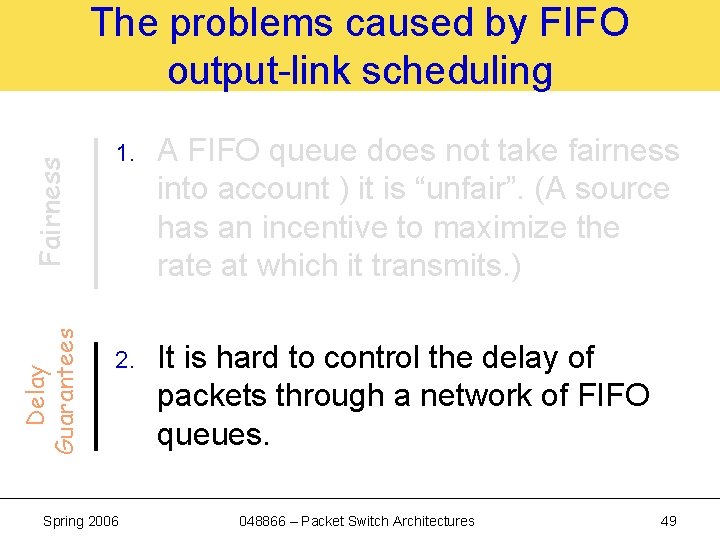

Delay Guarantees Fairness The problems caused by FIFO output-link scheduling 1. A FIFO queue does not take fairness into account ) it is “unfair”. (A source has an incentive to maximize the rate at which it transmits. ) 2. It is hard to control the delay of packets through a network of FIFO queues. Spring 2006 048866 – Packet Switch Architectures 49

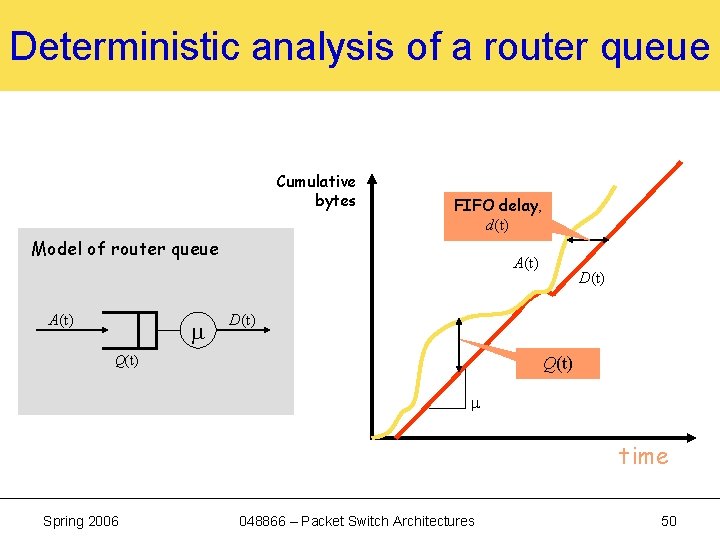

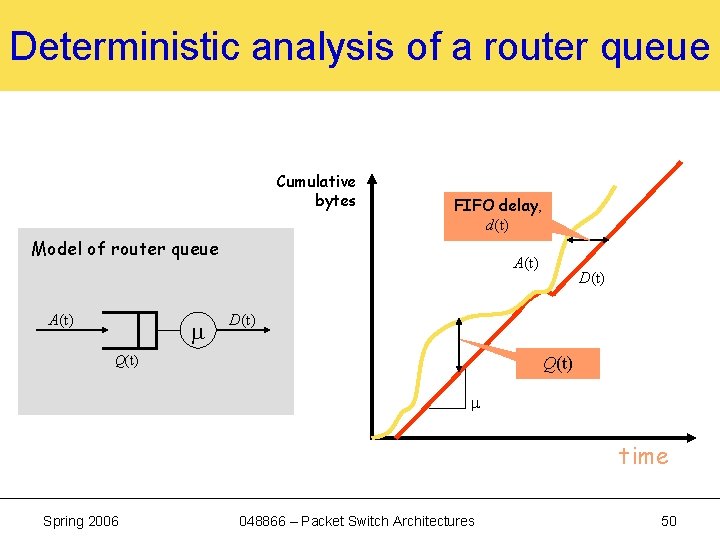

Deterministic analysis of a router queue
[Figure: model of a router queue with arrivals A(t), service rate μ, queue occupancy Q(t) and departures D(t); a plot of cumulative bytes vs. time shows A(t), D(t) and the FIFO delay d(t).]

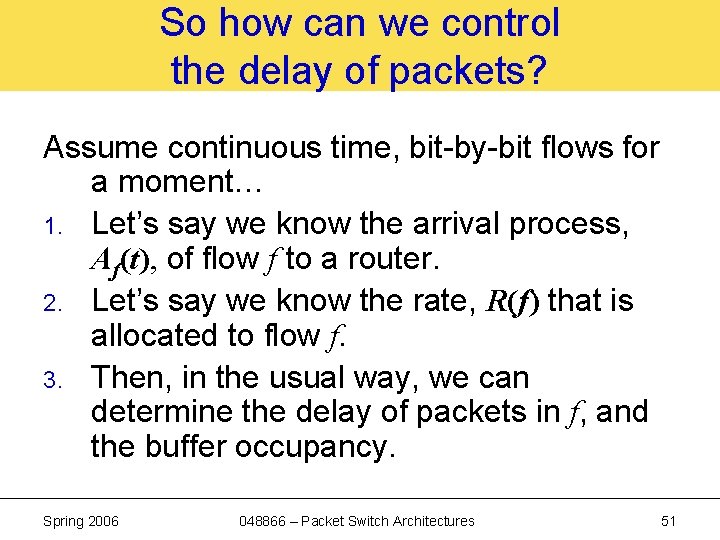

So how can we control the delay of packets?
Assume continuous time and bit-by-bit flows for a moment…
1. Let's say we know the arrival process, Af(t), of flow f to a router.
2. Let's say we know the rate, R(f), that is allocated to flow f.
3. Then, in the usual way, we can determine the delay of packets in f, and the buffer occupancy.

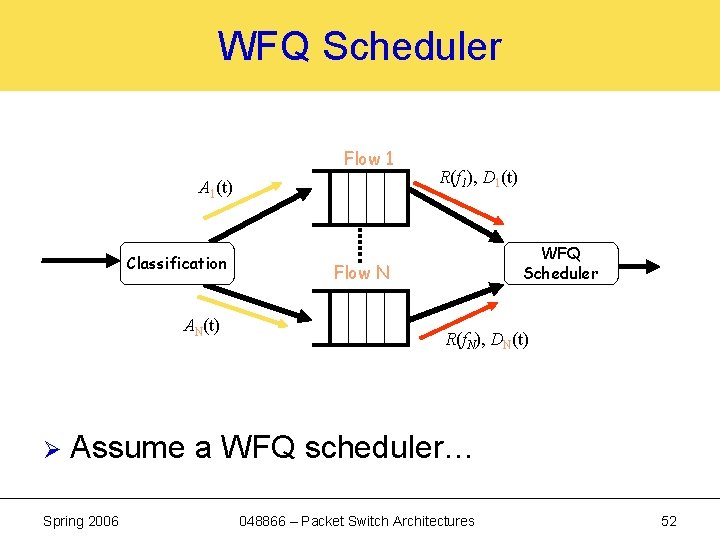

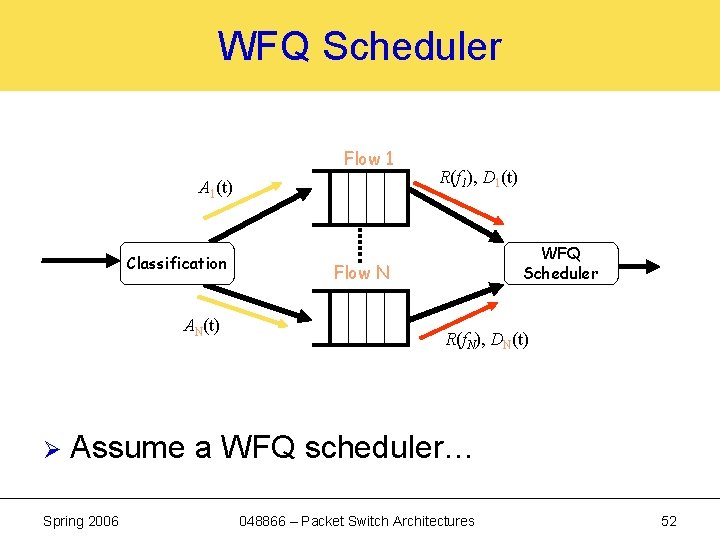

WFQ Scheduler
[Figure: arriving traffic is classified into Flow 1 to Flow N with arrival processes A1(t), …, AN(t); the WFQ scheduler serves flow i at rate R(fi), giving departures Di(t).]
- Assume a WFQ scheduler…

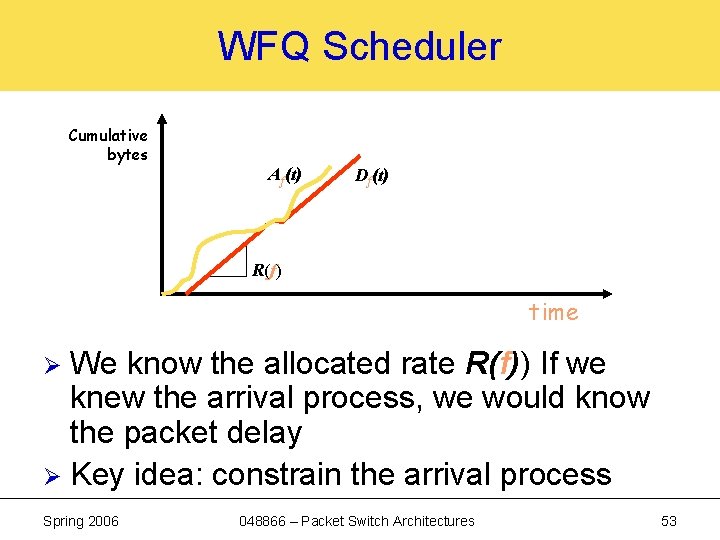

WFQ Scheduler
[Figure: cumulative bytes vs. time for Af(t) and Df(t), with flow f served at rate R(f).]
- We know the allocated rate R(f) ⇒ if we knew the arrival process, we would know the packet delay.
- Key idea: constrain the arrival process.

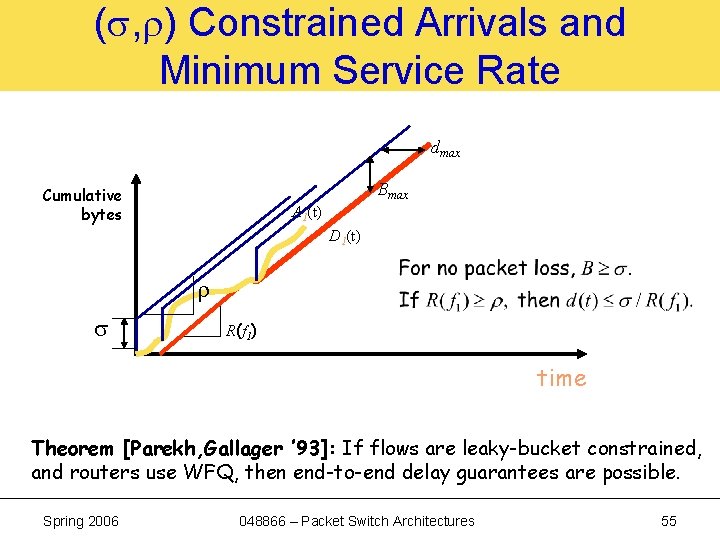

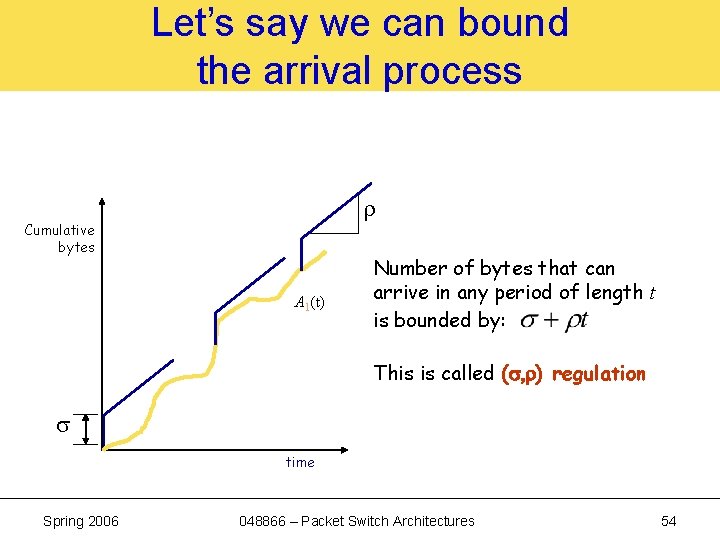

Let's say we can bound the arrival process
[Figure: cumulative bytes A1(t) vs. time.]
The number of bytes that can arrive in any period of length τ is bounded by:
$$A(t+\tau) - A(t) \le \sigma + \rho\,\tau$$
This is called (σ, ρ) regulation.
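A brute-force check of the (σ, ρ) condition in slotted time, assuming slots of length 1 and that a window of τ slots may carry at most σ + ρτ bytes. It is O(n²) and meant only to make the definition concrete; a token bucket performs the same check incrementally. The function name and examples are hypothetical.

```python
from typing import List


def is_sigma_rho_regulated(arrivals: List[float], sigma: float, rho: float) -> bool:
    """Check A(t + tau) - A(t) <= sigma + rho * tau over every window of slots.

    arrivals[n] is the number of bytes arriving in slot n (slot length 1).
    """
    n = len(arrivals)
    for start in range(n):
        total = 0.0
        for end in range(start, n):
            total += arrivals[end]
            tau = end - start + 1  # the window covers slots start..end
            if total > sigma + rho * tau:
                return False
    return True


print(is_sigma_rho_regulated([5, 1, 1, 1], sigma=4, rho=1))  # True: a burst of sigma + rho, then rate rho
print(is_sigma_rho_regulated([5, 3], sigma=4, rho=1))        # False: 8 bytes in 2 slots > 4 + 2
```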

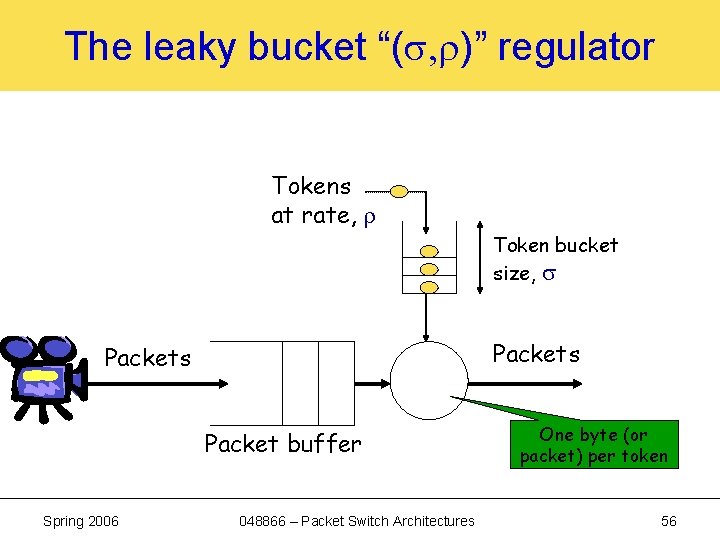

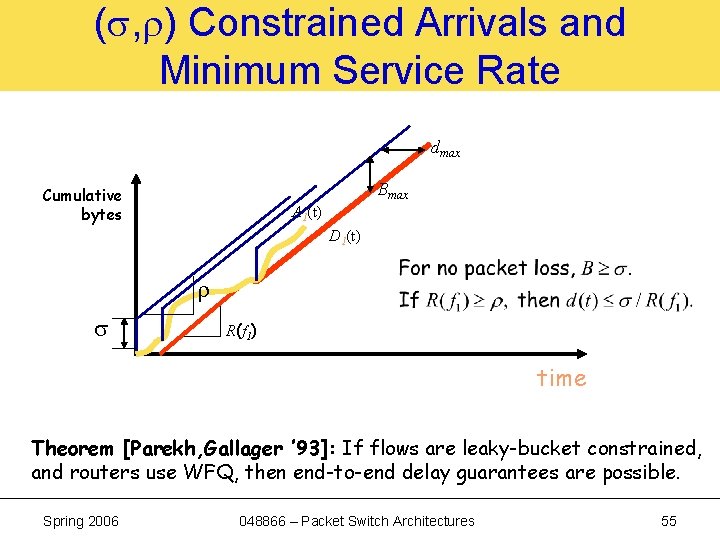

(σ, ρ) Constrained Arrivals and Minimum Service Rate
[Figure: cumulative bytes vs. time for A1(t) and D1(t), with flow 1 served at rate R(f1); the maximum delay dmax and maximum backlog Bmax are marked.]
Theorem [Parekh, Gallager ’93]: If flows are leaky-bucket constrained, and routers use WFQ, then end-to-end delay guarantees are possible.
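As a hedged single-node sketch in the fluid model, assume the flow starts with an empty queue, its arrivals obey $A(t+\tau) - A(t) \le \sigma + \rho\tau$, and it is served at a constant rate $R(f) \ge \rho$. The worst case is a greedy source that sends its full burst σ at time 0 and then sends at rate ρ; the quantities marked Bmax and dmax in the figure then work out as:

$$
B_{\max} = \sup_{\tau \ge 0}\,\bigl(\sigma + \rho\tau - R(f)\,\tau\bigr) = \sigma,
\qquad
d_{\max} = \sup_{\tau \ge 0}\,\Bigl(\frac{\sigma + \rho\tau}{R(f)} - \tau\Bigr) = \frac{\sigma}{R(f)} .
$$

Both suprema are attained at τ = 0, because $R(f) \ge \rho$ makes each expression non-increasing in τ. The theorem extends this kind of per-node bound to a network of WFQ routers; that argument is not reproduced here.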

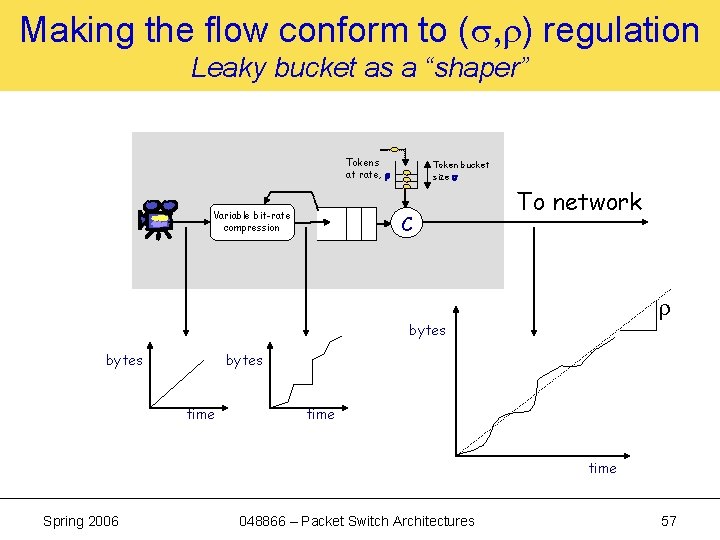

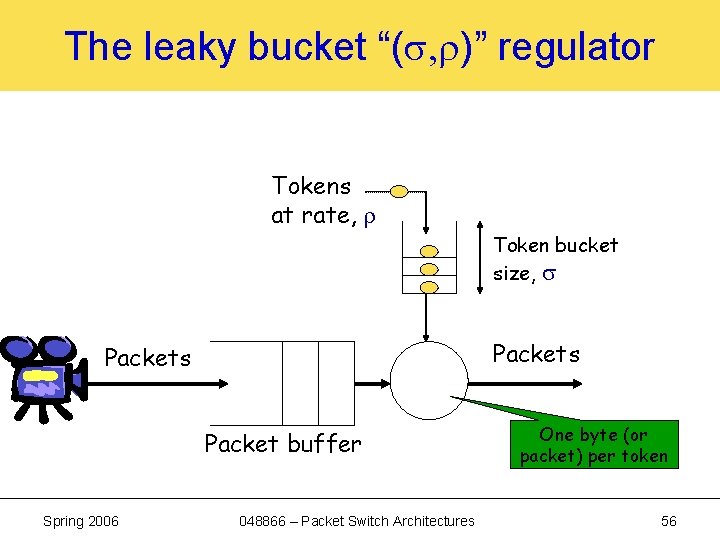

The leaky bucket "(σ, ρ)" regulator
[Figure: tokens arrive at rate ρ into a token bucket of size σ; packets wait in a packet buffer and leave using one token per byte (or per packet).]

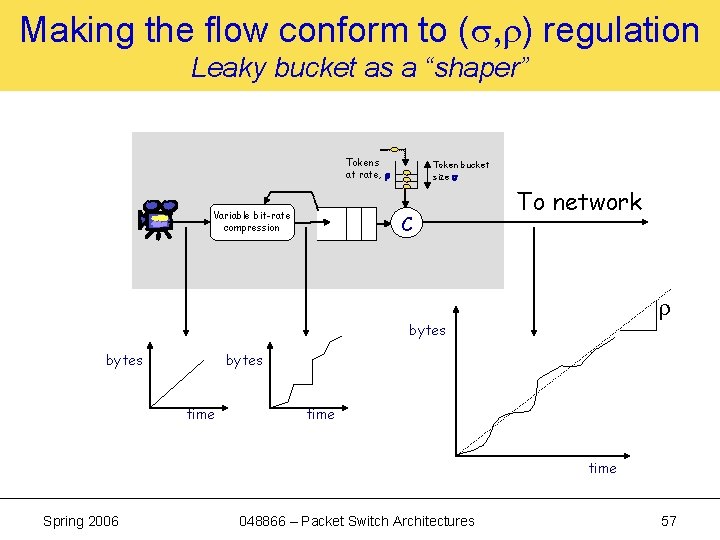

Making the flow conform to (σ, ρ) regulation: leaky bucket as a "shaper"
[Figure: the output of a variable bit-rate compression source passes through a token bucket (tokens at rate ρ, bucket size σ) before entering the network over a link of rate C; a plot of bytes vs. time.]

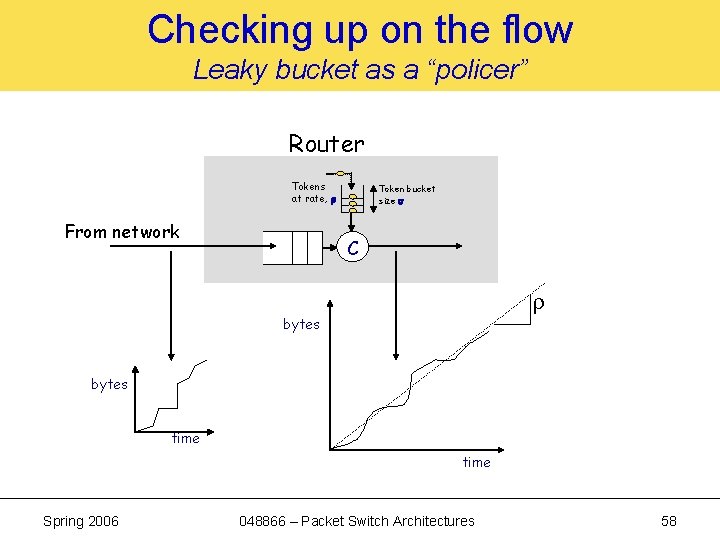

Checking up on the flow: leaky bucket as a "policer"
[Figure: a router receives traffic from the network over a link of rate C and checks it against a token bucket (tokens at rate ρ, bucket size σ); a plot of bytes vs. time.]
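A minimal token-bucket sketch covering both uses on these slides, assuming one token per byte, a bucket that starts full, non-decreasing call times, and packets no larger than σ. `police` drops non-conforming packets; `shape` instead delays each packet (in FIFO order) until enough tokens have accumulated. Class and method names are hypothetical.

```python
class TokenBucket:
    """(sigma, rho) token bucket: holds at most sigma tokens, refilled at rho tokens/s."""

    def __init__(self, sigma: float, rho: float) -> None:
        self.sigma, self.rho = sigma, rho
        self.tokens = sigma  # bucket starts full
        self.last = 0.0      # time of the last token update

    def _refill(self, now: float) -> None:
        self.tokens = min(self.sigma, self.tokens + self.rho * (now - self.last))
        self.last = now

    def police(self, now: float, size: float) -> bool:
        """Policer: accept (True) if enough tokens are present, else drop (False)."""
        self._refill(now)
        if size <= self.tokens:
            self.tokens -= size
            return True
        return False

    def shape(self, now: float, size: float) -> float:
        """Shaper: return the time at which the packet may leave (>= now)."""
        start = max(now, self.last)  # FIFO: cannot leave before the previous packet
        self._refill(start)
        if self.tokens < size:
            start += (size - self.tokens) / self.rho  # wait for the missing tokens
            self.tokens = size
            self.last = start
        self.tokens -= size
        return start


policer = TokenBucket(sigma=1500, rho=1000)  # hypothetical: 1500-byte bucket, 1000 bytes/s
print([policer.police(t, 1500) for t in (0.0, 0.5, 2.0)])  # [True, False, True]
shaper = TokenBucket(sigma=1500, rho=1000)
print([shaper.shape(0.0, s) for s in (1500, 1500, 500)])   # [0.0, 1.5, 2.0]
```

The policer discards the second packet outright, while the shaper holds the same traffic back so that its output stays (σ, ρ)-regulated.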

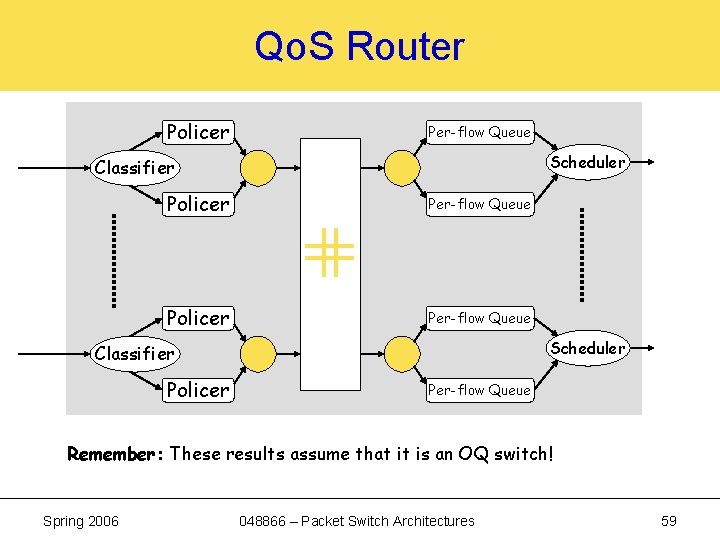

QoS Router
[Figure: classifier → per-flow policer → per-flow queue → scheduler.]
Remember: these results assume that it is an OQ switch!

![References 1 2 GPS A K Parekh and R Gallager A Generalized Processor Sharing References 1. 2. [GPS] A. K. Parekh and R. Gallager “A Generalized Processor Sharing](https://slidetodoc.com/presentation_image_h2/d372061e3fbd28f028990ae371a5a2f7/image-60.jpg)

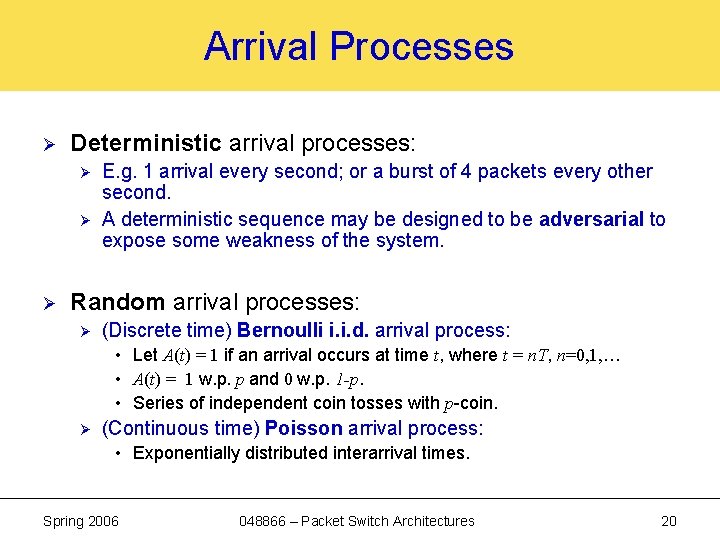

References
1. [GPS] A. K. Parekh and R. Gallager, "A Generalized Processor Sharing Approach to Flow Control in Integrated Services Networks: The Single-Node Case," IEEE/ACM Transactions on Networking, June 1993.
2. [DRR] M. Shreedhar and G. Varghese, "Efficient Fair Queueing using Deficit Round Robin," ACM SIGCOMM, 1995.